parakeet

🦜🪺 Parakeet is a GoLang library, made to simplify the development of small generative AI applications with Ollama 🦙.

Stars: 96

Parakeet is a Go library for creating GenAI apps with Ollama. It enables the creation of generative AI applications that can generate text-based content. The library provides tools for simple completion, completion with context, chat completion, and more. It also supports function calling with tools and Wasm plugins. Parakeet allows users to interact with language models and create AI-powered applications easily.

README:

Parakeet is the simplest Go library to create GenAI apps with Ollama.

A GenAI app is an application that uses generative AI technology. Generative AI can create new text, images, or other content based on what it's been trained on. So a GenAI app could help you write a poem, design a logo, or even compose a song! These are still under development, but they have the potential to be creative tools for many purposes. - Gemini

✋ Parakeet is only for creating GenAI apps that generate text (not images, music, and so on).

go get github.com/parakeet-nest/parakeet

// Chat completion with streaming.
// Uses fmt and log, plus the parakeet packages completion, llm, and option.
ollamaUrl := "http://localhost:11434"
model := "deepseek-coder"

systemContent := `You are an expert in computer programming.
Please make friendly answer for the noobs.
Add source code examples if you can.`

userContent := `Create a "hello world" program in Golang.`

options := llm.SetOptions(map[string]interface{}{
	option.Temperature:   0.5,
	option.RepeatLastN:   2,
	option.RepeatPenalty: 2.2,
})

query := llm.Query{
	Model: model,
	Messages: []llm.Message{
		{Role: "system", Content: systemContent},
		{Role: "user", Content: userContent},
	},
	Options: options,
}

// Print each chunk of the answer as soon as it arrives.
_, err := completion.ChatStream(ollamaUrl, query,
	func(answer llm.Answer) error {
		fmt.Print(answer.Message.Content)
		return nil
	})
if err != nil {
	log.Fatal("😡:", err)
}

// Function calling with tools.
ollamaUrl := "http://localhost:11434"
model := "allenporter/xlam:1b"

// Describe the two functions the model is allowed to call.
toolsList := []llm.Tool{
	{
		Type: "function",
		Function: llm.Function{
			Name:        "multiplyNumbers",
			Description: "Make a multiplication of the two given numbers",
			Parameters: llm.Parameters{
				Type: "object",
				Properties: map[string]llm.Property{
					"a": {
						Type:        "number",
						Description: "first operand",
					},
					"b": {
						Type:        "number",
						Description: "second operand",
					},
				},
				Required: []string{"a", "b"},
			},
		},
	},
	{
		Type: "function",
		Function: llm.Function{
			Name:        "addNumbers",
			Description: "Make an addition of the two given numbers",
			Parameters: llm.Parameters{
				Type: "object",
				Properties: map[string]llm.Property{
					"a": {
						Type:        "number",
						Description: "first operand",
					},
					"b": {
						Type:        "number",
						Description: "second operand",
					},
				},
				Required: []string{"a", "b"},
			},
		},
	},
}

messages := []llm.Message{
	{Role: "user", Content: `add 2 and 40`},
	{Role: "user", Content: `multiply 2 and 21`},
}

options := llm.SetOptions(map[string]interface{}{
	option.Temperature:   0.0,
	option.RepeatLastN:   2,
	option.RepeatPenalty: 2.0,
})

query := llm.Query{
	Model:    model,
	Messages: messages,
	Tools:    toolsList,
	Options:  options,
	Format:   "json",
}

answer, err := completion.Chat(ollamaUrl, query)
if err != nil {
	log.Fatal("😡:", err)
}

for idx, toolCall := range answer.Message.ToolCalls {
	result, err := toolCall.Function.ToJSONString()
	if err != nil {
		log.Fatal("😡:", err)
	}
	// display the tool to call
	fmt.Println("ToolCall", idx, ":", result)
	/* Results:
	ToolCall 0 : {"name":"addNumbers","arguments":{"a":2,"b":40}}
	ToolCall 1 : {"name":"multiplyNumbers","arguments":{"a":2,"b":21}}
	*/
}

// Structured output constrained by a JSON schema.
ollamaUrl := "http://localhost:11434"
model := "qwen2.5:0.5b"

options := llm.SetOptions(map[string]interface{}{
	option.Temperature: 1.5,
})

// define schema for a structured output
schema := map[string]any{
	"type": "object",
	"properties": map[string]any{
		"name": map[string]any{
			"type": "string",
		},
		"capital": map[string]any{
			"type": "string",
		},
		"languages": map[string]any{
			"type": "array",
			"items": map[string]any{
				"type": "string",
			},
		},
	},
	"required": []string{"name", "capital", "languages"},
}

query := llm.Query{
	Model: model,
	Messages: []llm.Message{
		{Role: "user", Content: "Tell me about Canada."},
	},
	Options: options,
	Format:  schema,
	Raw:     false,
}

answer, err := completion.Chat(ollamaUrl, query)
if err != nil {
	log.Fatal("😡:", err)
}
fmt.Println(answer.Message.Content)

/* Results:
{
  "capital": "Ottawa",
  "languages": ["English", "French"],
  "name": "Canada of the West: Land of Ice and Rainbows"
}
*/

// Retrieval-augmented generation (RAG) with an in-memory vector store.
docs := []string{
	`Michael Burnham is the main character on the Star Trek series, Discovery.
She's a human raised on the logical planet Vulcan by Spock's father.
Burnham is intelligent and struggles to balance her human emotions with Vulcan logic.
She's become a Starfleet captain known for her determination and problem-solving skills.
Originally played by actress Sonequa Martin-Green`,

	`James T. Kirk, also known as Captain Kirk, is a fictional character from the Star Trek franchise.
He's the iconic captain of the starship USS Enterprise,
boldly exploring the galaxy with his crew.
Originally played by actor William Shatner,
Kirk has appeared in TV series, movies, and other media.`,

	`Jean-Luc Picard is a fictional character in the Star Trek franchise.
He's most famous for being the captain of the USS Enterprise-D,
a starship exploring the galaxy in the 24th century.
Picard is known for his diplomacy, intelligence, and strong moral compass.
He's been portrayed by actor Patrick Stewart.`,

	`Lieutenant Philippe Charrière, known as the **Silent Sentinel** of the USS Discovery,
is the enigmatic programming genius whose codes safeguard the ship's secrets and operations.
His swift problem-solving skills are as legendary as the mysterious aura that surrounds him.
Charrière, a man of few words, speaks the language of machines with unrivaled fluency,
making him the crew's unsung guardian in the cosmos. His best friend is Spiderman from the Marvel Cinematic Universe.`,
}

ollamaUrl := "http://localhost:11434"
embeddingsModel := "mxbai-embed-large:latest" // This model is for the embeddings of the documents
smallChatModel := "qwen2.5:1.5b"              // This model is for the chat completion

store := embeddings.MemoryVectorStore{
	Records: make(map[string]llm.VectorRecord),
}

// Create embeddings from documents and save them in the store
for idx, doc := range docs {
	fmt.Println("Creating embedding from document ", idx)
	embedding, err := embeddings.CreateEmbedding(
		ollamaUrl,
		llm.Query4Embedding{
			Model:  embeddingsModel,
			Prompt: doc,
		},
		strconv.Itoa(idx),
	)
	if err != nil {
		fmt.Println("😡:", err)
	} else {
		store.Save(embedding)
	}
}

// Question for the Chat system
userContent := `Who is Philippe Charrière and what spaceship does he work on?`

systemContent := `You are an AI assistant. Your name is Seven.
Some people are calling you Seven of Nine.
You are an expert in Star Trek.
All questions are about Star Trek.
Using the provided context, answer the user's question
to the best of your ability using only the resources provided.`

// Create an embedding from the question
embeddingFromQuestion, err := embeddings.CreateEmbedding(
	ollamaUrl,
	llm.Query4Embedding{
		Model:  embeddingsModel,
		Prompt: userContent,
	},
	"question",
)
if err != nil {
	log.Fatalln("😡:", err)
}

// 🔎 searching for similarity...
similarity, _ := store.SearchMaxSimilarity(embeddingFromQuestion)

// Wrap the closest document so the model can use it as context
documentsContent := `<context><doc>` + similarity.Prompt + `</doc></context>`

query := llm.Query{
	Model: smallChatModel,
	Messages: []llm.Message{
		{Role: "system", Content: systemContent},
		{Role: "system", Content: documentsContent},
		{Role: "user", Content: userContent},
	},
	Options: llm.SetOptions(map[string]interface{}{
		option.Temperature: 0.4,
		option.RepeatLastN: 2,
	}),
}

fmt.Println("🤖 answer:")

// Answer the question
_, err = completion.ChatStream(ollamaUrl, query,
	func(answer llm.Answer) error {
		fmt.Print(answer.Message.Content)
		return nil
	})
if err != nil {
	log.Fatalln("😡:", err)
}
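The retrieval step in the example above keeps the stored document whose embedding is closest to the embedding of the question. The sketch below illustrates that idea using only the standard library. It assumes cosine similarity as the metric, which the source does not state, and the `record`, `cosine`, and `searchMax` names are hypothetical and not part of parakeet.

```go
package main

import (
	"fmt"
	"math"
)

// record is a hypothetical stand-in for a stored embedding:
// an id, the original text, and its vector.
type record struct {
	ID        string
	Prompt    string
	Embedding []float64
}

// cosine returns the cosine similarity of two vectors of equal length.
// It returns 0 when the lengths differ, or when a vector is empty or all zeros.
func cosine(a, b []float64) float64 {
	if len(a) != len(b) || len(a) == 0 {
		return 0
	}
	var dot, normA, normB float64
	for i := range a {
		dot += a[i] * b[i]
		normA += a[i] * a[i]
		normB += b[i] * b[i]
	}
	if normA == 0 || normB == 0 {
		return 0
	}
	return dot / (math.Sqrt(normA) * math.Sqrt(normB))
}

// searchMax scans every record and returns the one most similar to the query.
// The boolean is false when the store is empty.
func searchMax(store []record, query []float64) (record, bool) {
	best, bestScore, found := record{}, math.Inf(-1), false
	for _, r := range store {
		if s := cosine(r.Embedding, query); s > bestScore {
			best, bestScore, found = r, s, true
		}
	}
	return best, found
}

func main() {
	// Toy 3-dimensional vectors; real embedding vectors are much longer.
	store := []record{
		{ID: "0", Prompt: "doc about Burnham", Embedding: []float64{1, 0, 0}},
		{ID: "1", Prompt: "doc about Kirk", Embedding: []float64{0, 1, 0}},
		{ID: "2", Prompt: "doc about Charrière", Embedding: []float64{0, 0.2, 0.9}},
	}
	question := []float64{0, 0.1, 1}

	if best, ok := searchMax(store, question); ok {
		fmt.Println("closest document:", best.ID, "-", best.Prompt)
		// prints: closest document: 2 - doc about Charrière
	}
}
```

A linear scan like this is fine for a handful of documents held in memory; larger collections usually call for an indexed vector store.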

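In the structured output example, the answer arrives as a JSON string in `answer.Message.Content`. One way to use it from Go is to decode it into a struct that mirrors the schema. This is a minimal sketch with the standard library only; the `country` type and the `parseCountry` helper are hypothetical, and the sample string stands in for a real model answer.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// country mirrors the three required fields of the schema in the example.
type country struct {
	Name      string   `json:"name"`
	Capital   string   `json:"capital"`
	Languages []string `json:"languages"`
}

// parseCountry decodes the JSON answer and rejects answers
// where a required field is missing or empty.
func parseCountry(content string) (country, error) {
	var c country
	if err := json.Unmarshal([]byte(content), &c); err != nil {
		return country{}, fmt.Errorf("decoding answer: %w", err)
	}
	if c.Name == "" || c.Capital == "" || len(c.Languages) == 0 {
		return country{}, errors.New("answer is missing a required field")
	}
	return c, nil
}

func main() {
	// Stand-in for answer.Message.Content from the example.
	content := `{"capital": "Ottawa", "languages": ["English", "French"], "name": "Canada"}`

	c, err := parseCountry(content)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(c.Capital, c.Languages) // prints: Ottawa [English French]
}
```

A schema constrains the shape of the answer, not its accuracy, as the `name` value in the sample results above shows, so the decoded values still deserve a sanity check.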
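In the tool-calling example, the model only reports which function to call and with which arguments; running the function is left to the application. The sketch below shows one possible way to dispatch the printed JSON to local Go functions. It uses only the standard library, and the `toolCall` type, the `handlers` map, and the `dispatch` helper are hypothetical names, not part of parakeet.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// toolCall mirrors the JSON printed by the tool-calling example, e.g.
// {"name":"addNumbers","arguments":{"a":2,"b":40}}
type toolCall struct {
	Name      string             `json:"name"`
	Arguments map[string]float64 `json:"arguments"`
}

// handlers maps each tool name declared in the example to a local Go function.
var handlers = map[string]func(a, b float64) float64{
	"addNumbers":      func(a, b float64) float64 { return a + b },
	"multiplyNumbers": func(a, b float64) float64 { return a * b },
}

// dispatch decodes one tool call and runs the matching handler.
func dispatch(raw string) (float64, error) {
	var call toolCall
	if err := json.Unmarshal([]byte(raw), &call); err != nil {
		return 0, fmt.Errorf("decoding tool call: %w", err)
	}
	handler, ok := handlers[call.Name]
	if !ok {
		return 0, fmt.Errorf("unknown tool %q", call.Name)
	}
	a, okA := call.Arguments["a"]
	b, okB := call.Arguments["b"]
	if !okA || !okB {
		return 0, fmt.Errorf("tool %q needs arguments a and b", call.Name)
	}
	return handler(a, b), nil
}

func main() {
	// The two strings printed in the "Results" comment of the example.
	calls := []string{
		`{"name":"addNumbers","arguments":{"a":2,"b":40}}`,
		`{"name":"multiplyNumbers","arguments":{"a":2,"b":21}}`,
	}
	for _, raw := range calls {
		result, err := dispatch(raw)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(result) // prints 42 for both calls
	}
}
```

Looking the name up in a map keeps unknown tool names from reaching any code path, which matters because the name comes from model output.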

Alternative AI tools for parakeet

Similar Open Source Tools

ollama-ex

ollama-ex is an Elixir client for Ollama, a tool for running large language models locally or on your own infrastructure. It provides a full implementation of the Ollama API, support for streaming requests, and tool use capability. Users can interact with Ollama from Elixir to generate completions and chat messages and to perform streaming requests. The library also supports function calling on compatible models, allowing users to define tools with clear descriptions and arguments.

modelfusion

ModelFusion is an abstraction layer for integrating AI models into JavaScript and TypeScript applications, unifying the API for common operations such as text streaming, object generation, and tool usage. It provides features to support production environments, including observability hooks, logging, and automatic retries. You can use ModelFusion to build AI applications, chatbots, and agents. ModelFusion is a non-commercial open source project that is community-driven. You can use it with any supported provider. ModelFusion supports a wide range of models including text generation, image generation, vision, text-to-speech, speech-to-text, and embedding models. ModelFusion infers TypeScript types wherever possible and validates model responses. ModelFusion provides an observer framework and logging support. ModelFusion ensures seamless operation through automatic retries, throttling, and error handling mechanisms. ModelFusion is fully tree-shakeable, can be used in serverless environments, and only uses a minimal set of dependencies.

ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E...

ling

Ling is a workflow framework supporting streaming of structured content from large language models. It enables quick responses to content streams, reducing waiting times. Ling parses JSON data streams character by character in real-time, outputting content in jsonuri format. It facilitates immediate front-end processing by converting content during streaming input. The framework supports data stream output via JSONL protocol, correction of token errors in JSON output, complex asynchronous workflows, status messages during streaming output, and Server-Sent Events.

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

bosquet

Bosquet is a tool designed for LLMOps in large language model-based applications. It simplifies building AI applications by managing LLM and tool services, integrating with Selmer templating library for prompt templating, enabling prompt chaining and composition with Pathom graph processing, defining agents and tools for external API interactions, handling LLM memory, and providing features like call response caching. The tool aims to streamline the development process for AI applications that require complex prompt templates, memory management, and interaction with external systems.

bellman

Bellman is a unified interface to interact with language and embedding models, supporting various vendors like VertexAI/Gemini, OpenAI, Anthropic, VoyageAI, and Ollama. It consists of a library for direct interaction with models and a service 'bellmand' for proxying requests with one API key. Bellman simplifies switching between models, vendors, and common tasks like chat, structured data, tools, and binary input. It addresses the lack of official SDKs for major players and differences in APIs, providing a single proxy for handling different models. The library offers clients for different vendors implementing common interfaces for generating and embedding text, enabling easy interchangeability between models.

ogpt.nvim

OGPT.nvim is a Neovim plugin that enables users to interact with various language models (LLMs) such as Ollama, OpenAI, TextGenUI, and more. Users can engage in interactive question-and-answer sessions, have persona-based conversations, and execute customizable actions like grammar correction, translation, keyword generation, docstring creation, test addition, code optimization, summarization, bug fixing, code explanation, and code readability analysis. The plugin allows users to define custom actions using a JSON file or plugin configurations.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.

agent-kit

AgentKit is a framework for creating and orchestrating AI Agents, enabling developers to build, test, and deploy reliable AI applications at scale. It allows for creating networked agents with separate tasks and instructions to solve specific tasks, as well as simple agents for tasks like writing content. The framework requires the Inngest TypeScript SDK as a dependency and provides documentation on agents, tools, network, state, and routing. Example projects showcase AgentKit in action, such as the Test Writing Network demo using Workflow Kit, Supabase, and OpenAI.

npi

NPi is an open-source platform providing Tool-use APIs to empower AI agents with the ability to take action in the virtual world. It is currently under active development, and the APIs are subject to change in future releases. NPi offers a command line tool for installation and setup, along with a GitHub app for easy access to repositories. The platform also includes a Python SDK and examples like Calendar Negotiator and Twitter Crawler. Join the NPi community on Discord to contribute to the development and explore the roadmap for future enhancements.

lmstudio.js

lmstudio.js is a pre-release alpha client SDK for LM Studio, allowing users to use local LLMs in JS/TS/Node. It is currently undergoing rapid development with breaking changes expected. Users can follow LM Studio's announcements on Twitter and Discord. The SDK provides API usage for loading models, predicting text, setting up the local LLM server, and more. It supports features like custom loading progress tracking, model unloading, structured output prediction, and cancellation of predictions. Users can interact with LM Studio through the CLI tool 'lms' and perform tasks like text completion, conversation, and getting prediction statistics.

openmacro

Openmacro is a multimodal personal agent that allows users to run code locally. It acts as a personal agent capable of completing and automating tasks autonomously via self-prompting. The tool provides a CLI natural-language interface for completing and automating tasks, analyzing and plotting data, browsing the web, and manipulating files. Currently, it supports API keys for models powered by SambaNova, with plans to add support for other hosts like OpenAI and Anthropic in future versions.

DeRTa

DeRTa (Refuse Whenever You Feel Unsafe) is a tool designed to improve safety in Large Language Models (LLMs) by training them to refuse compliance at any response juncture. The tool incorporates methods such as MLE with Harmful Response Prefix and Reinforced Transition Optimization (RTO) to address refusal positional bias and strengthen the model's capability to transition from potential harm to safety refusal. DeRTa provides training data, model weights, and evaluation scripts for LLMs, enabling users to enhance safety in language generation tasks.

langchain-swift

LangChain for Swift. Optimized for iOS, macOS, watchOS (partial support), and visionOS (beta). This is a pure client library; no server is required.

For similar tasks

parakeet

Parakeet is a Go library for creating GenAI apps with Ollama. It enables the creation of generative AI applications that can generate text-based content. The library provides tools for simple completion, completion with context, chat completion, and more. It also supports function calling with tools and Wasm plugins. Parakeet allows users to interact with language models and create AI-powered applications easily.

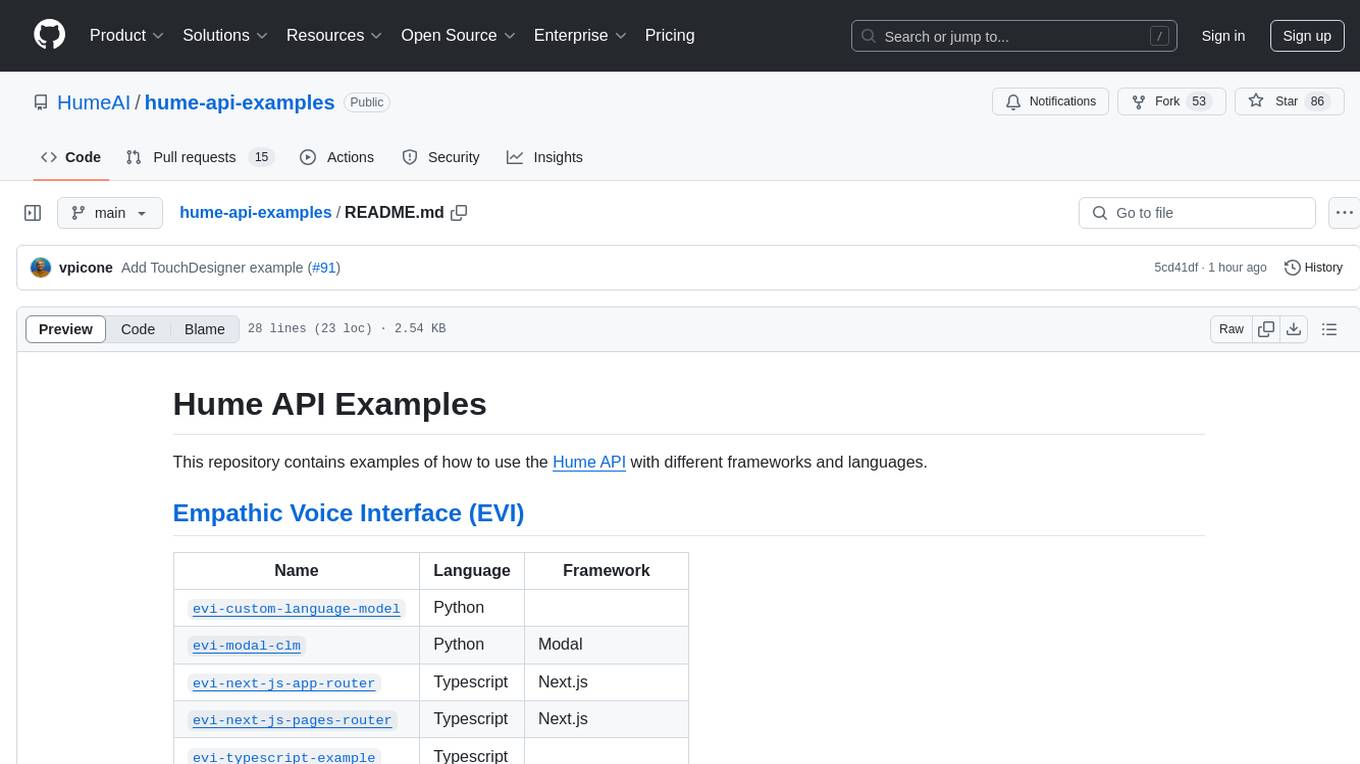

hume-api-examples

This repository contains examples of how to use the Hume API with different frameworks and languages. It includes examples for Empathic Voice Interface (EVI) and Expression Measurement API. The EVI examples cover custom language models, modal, Next.js integration, Vue integration, Hume Python SDK, and React integration. The Expression Measurement API examples include models for face, language, burst, and speech, with implementations in Python and Typescript using frameworks like Next.js.

serverless-rag-demo

The serverless-rag-demo repository showcases a solution for building a Retrieval Augmented Generation (RAG) system using Amazon Opensearch Serverless Vector DB, Amazon Bedrock, Llama2 LLM, and Falcon LLM. The solution leverages generative AI powered by large language models to generate domain-specific text outputs by incorporating external data sources. Users can augment prompts with relevant context from documents within a knowledge library, enabling the creation of AI applications without managing vector database infrastructure. The repository provides detailed instructions on deploying the RAG-based solution, including prerequisites, architecture, and step-by-step deployment process using AWS Cloudshell.

june

june-va is a local voice chatbot that combines Ollama for language model capabilities, Hugging Face Transformers for speech recognition, and the Coqui TTS Toolkit for text-to-speech synthesis. It provides a flexible, privacy-focused solution for voice-assisted interactions on your local machine, ensuring that no data is sent to external servers. The tool supports various interaction modes including text input/output, voice input/text output, text input/audio output, and voice input/audio output. Users can customize the tool's behavior with a JSON configuration file and utilize voice conversion features for voice cloning. The application can be further customized using a configuration file with attributes for language model, speech-to-text model, and text-to-speech model configurations.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

react-native-rag

React Native RAG is a library that enables private, local RAGs to supercharge LLMs with a custom knowledge base. It offers modular and extensible components like `LLM`, `Embeddings`, `VectorStore`, and `TextSplitter`, with multiple integration options. The library supports on-device inference, vector store persistence, and semantic search implementation. Users can easily generate text responses, manage documents, and utilize custom components for advanced use cases.

RustGPT

A complete Large Language Model implementation in pure Rust with no external ML frameworks. Demonstrates building a transformer-based language model from scratch, including pre-training, instruction tuning, interactive chat mode, full backpropagation, and modular architecture. Model learns basic world knowledge and conversational patterns. Features custom tokenization, greedy decoding, gradient clipping, modular layer system, and comprehensive test coverage. Ideal for understanding modern LLMs and key ML concepts. Dependencies include ndarray for matrix operations and rand for random number generation. Contributions welcome for model persistence, performance optimizations, better sampling, evaluation metrics, advanced architectures, training improvements, data handling, and model analysis. Follows standard Rust conventions and encourages contributions at beginner, intermediate, and advanced levels.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, an evaluation and analysis of trustworthiness for mainstream LLMs, and a discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for building an AI virtual anchor (VTuber); this is the NVIDIA GPU version. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It connects to bilibili live streams to reply to bullet comments and to greet viewers entering the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. It also supports expression control with Vtuber Studio; image generation with stable-diffusion-webui, output to the OBS live room; NSFW image detection (public-NSFW-y-distinguish); web and image search through duckduckgo (requires a proxy or VPN); image search through Baidu (no proxy needed); an AI reply chat box [html plug-in]; AI singing with Auto-Convert-Music; a playlist [html plug-in]; dancing; expression video playback; head-touching and gift-smashing actions; automatic dancing when singing starts; automatic looping sway animations during chat and singing; switching between multiple scenes; background music switching; automatic day and night scene switching; and open-ended singing and painting requests, where the AI judges the content automatically.