swarmgo

SwarmGo is a Go package that allows you to create AI agents capable of interacting, coordinating, and executing tasks. Inspired by OpenAI's Swarm framework, SwarmGo focuses on making agent coordination and execution lightweight, highly controllable, and easily testable.


SwarmGo is a Go package designed to create AI agents capable of interacting, coordinating, and executing tasks. It focuses on lightweight agent coordination and execution, offering powerful primitives like Agents and handoffs. SwarmGo enables building scalable solutions with rich dynamics between tools and networks of agents, all while keeping the learning curve low. It supports features like memory management, streaming support, concurrent agent execution, LLM interface, and structured workflows for organizing and coordinating multiple agents.


SwarmGo keeps coordination lightweight, controllable, and testable through two primitive abstractions: Agents and handoffs. An Agent encapsulates instructions and tools (functions it can execute) and can at any point choose to hand off a conversation to another Agent.

These primitives are powerful enough to express rich dynamics between tools and networks of agents, allowing you to build scalable, real-world solutions while avoiding a steep learning curve.

- Why SwarmGo

- Installation

- Quick Start

- Usage

- Agent Handoff

- Streaming Support

- Concurrent Agent Execution

- LLM Interface

- Workflows

- Examples

- Contributing

- License

- Acknowledgments

SwarmGo explores patterns that are lightweight, scalable, and highly customizable by design. It's best suited for situations dealing with a large number of independent capabilities and instructions that are difficult to encode into a single prompt.

SwarmGo runs (almost) entirely on the client and, much like the Chat Completions API, does not store state between calls.
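Because nothing is stored between calls, the caller owns the conversation history and must send the full transcript on every call. The stdlib-only sketch below illustrates that contract. `Message` and `fakeRun` are hypothetical stand-ins that only mimic the shape of a stateless run; they are not SwarmGo's API.

```go
package main

import "fmt"

// Message is a hypothetical stand-in for a chat message (role + content).
type Message struct {
	Role    string
	Content string
}

// fakeRun stands in for a stateless LLM call: it sees only the history it is
// given and returns the new messages it produced. Nothing is remembered
// between calls.
func fakeRun(history []Message) []Message {
	reply := fmt.Sprintf("I have seen %d message(s) so far.", len(history))
	return []Message{{Role: "assistant", Content: reply}}
}

func main() {
	var history []Message

	// Turn 1: the caller appends the user message and the reply.
	history = append(history, Message{Role: "user", Content: "Hello!"})
	history = append(history, fakeRun(history)...)

	// Turn 2: the whole transcript is sent again; fakeRun kept nothing.
	history = append(history, Message{Role: "user", Content: "And now?"})
	history = append(history, fakeRun(history)...)

	for _, m := range history {
		fmt.Printf("%s: %s\n", m.Role, m.Content)
	}
}
```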

go get github.com/prathyushnallamothu/swarmgo

Here's a simple example to get you started:

package main

import (
	"context"
	"fmt"
	"log"

	swarmgo "github.com/prathyushnallamothu/swarmgo"
	llm "github.com/prathyushnallamothu/swarmgo/llm"
	openai "github.com/sashabaranov/go-openai"
)

func main() {
	client := swarmgo.NewSwarm("YOUR_OPENAI_API_KEY", llm.OpenAI)

	agent := &swarmgo.Agent{
		Name:         "Agent",
		Instructions: "You are a helpful assistant.",
		Model:        "gpt-3.5-turbo",
	}

	messages := []openai.ChatCompletionMessage{
		{Role: "user", Content: "Hello!"},
	}

	ctx := context.Background()
	// Arguments after messages: context variables, model override, stream,
	// debug, max turns, execute tools.
	response, err := client.Run(ctx, agent, messages, nil, "", false, false, 5, true)
	if err != nil {
		log.Fatalf("Error: %v", err)
	}

	// Print the last message of the run (the agent's reply).
	fmt.Println(response.Messages[len(response.Messages)-1].Content)
}

Creating an Agent

An Agent represents an AI assistant with specific instructions and functions (tools) it can use.

agent := &swarmgo.Agent{
	Name:         "Agent",
	Instructions: "You are a helpful assistant.",
	Model:        "gpt-4o",
}

- Name: Identifier for the agent (no spaces or special characters).

- Instructions: The system prompt or instructions for the agent.

- Model: The OpenAI model to use (e.g., "gpt-4o").
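The README asks for agent names without spaces or special characters but does not spell out the exact rule. A plausible reason is that names end up in OpenAI-style function or `name` fields, which accept only a restricted character set. The hypothetical `validAgentName` helper below checks names against an assumed pattern (1 to 64 letters, digits, underscores, or hyphens) before use; it is not part of SwarmGo.

```go
package main

import (
	"fmt"
	"regexp"
)

// agentNamePattern is an assumed rule: 1-64 letters, digits, underscores or
// hyphens, the character set OpenAI accepts for function names.
var agentNamePattern = regexp.MustCompile(`^[A-Za-z0-9_-]{1,64}$`)

// validAgentName reports whether name satisfies the assumed naming rule.
func validAgentName(name string) bool {
	return agentNamePattern.MatchString(name)
}

func main() {
	for _, name := range []string{"Agent", "FileAnalyzer", "weather_bot-2", "My Agent", "agent!"} {
		fmt.Printf("%-15q valid=%v\n", name, validAgentName(name))
	}
}
```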

To interact with the agent, use the Run method:

messages := []openai.ChatCompletionMessage{
	{Role: "user", Content: "Hello!"},
}

ctx := context.Background()
response, err := client.Run(ctx, agent, messages, nil, "", false, false, 5, true)
if err != nil {
	log.Fatalf("Error: %v", err)
}

fmt.Println(response.Messages[len(response.Messages)-1].Content)

Agents can use functions to perform specific tasks. Functions are defined and then added to an agent.
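When the model decides to call a tool, it returns the function's name plus its arguments as a JSON string, and the runtime has to decode those arguments and route them to the matching Go function. The sketch below shows that dispatch step with the standard library only. `ToolFunc`, `Result`, and `dispatch` are hypothetical stand-ins that mirror the shape of `swarmgo.AgentFunction` and `swarmgo.Result` in this README; SwarmGo's internal implementation may differ.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Result mirrors the Value field of swarmgo.Result used in this README.
type Result struct {
	Value string
}

// ToolFunc has the same shape as the getWeather function in this README.
type ToolFunc func(args map[string]interface{}, contextVariables map[string]interface{}) Result

// dispatch decodes the JSON arguments of a tool call and runs the named tool.
func dispatch(tools map[string]ToolFunc, name, rawArgs string, ctxVars map[string]interface{}) (Result, error) {
	fn, ok := tools[name]
	if !ok {
		return Result{}, fmt.Errorf("unknown tool %q", name)
	}
	var args map[string]interface{}
	if err := json.Unmarshal([]byte(rawArgs), &args); err != nil {
		return Result{}, fmt.Errorf("bad arguments for %q: %w", name, err)
	}
	return fn(args, ctxVars), nil
}

func main() {
	tools := map[string]ToolFunc{
		"getWeather": func(args map[string]interface{}, _ map[string]interface{}) Result {
			location, _ := args["location"].(string)
			return Result{Value: fmt.Sprintf(`{"temp": 67, "unit": "F", "location": %q}`, location)}
		},
	}

	// Arguments arrive as a JSON string, as in OpenAI tool calls.
	res, err := dispatch(tools, "getWeather", `{"location": "Boston"}`, nil)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(res.Value)
}
```

Keeping the registry as a map from name to function makes an unknown tool name an ordinary error instead of a panic.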

func getWeather(args map[string]interface{}, contextVariables map[string]interface{}) swarmgo.Result {
	// Checked type assertion: a missing or non-string argument must not panic.
	location, ok := args["location"].(string)
	if !ok {
		return swarmgo.Result{Value: `{"error": "location is required"}`}
	}
	// Simulate fetching weather data
	return swarmgo.Result{
		Value: fmt.Sprintf(`{"temp": 67, "unit": "F", "location": "%s"}`, location),
	}
}

agent.Functions = []swarmgo.AgentFunction{
	{
		Name:        "getWeather",
		Description: "Get the current weather in a given location.",
		// JSON Schema describing the function's arguments
		Parameters: map[string]interface{}{
			"type": "object",
			"properties": map[string]interface{}{
				"location": map[string]interface{}{
					"type":        "string",
					"description": "The city to get the weather for.",
				},
			},
			"required": []interface{}{"location"},
		},
		Function: getWeather,
	},
}

Context variables allow you to pass information between function calls and agents.
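One way to picture context variables is as a shared map threaded through every call: instruction functions read from it, and tools can return updates that are merged back in for later turns. The sketch below models that flow with stdlib types. The `ContextVariables` field on `Result`, the `rememberName` tool, and the `merge` helper are hypothetical; check SwarmGo's `Result` type for the fields it actually exposes.

```go
package main

import "fmt"

// Result is a hypothetical tool result that can carry context updates.
type Result struct {
	Value            string
	ContextVariables map[string]interface{}
}

// instructions reads the user's name from the context, falling back to "User".
func instructions(contextVariables map[string]interface{}) string {
	name, ok := contextVariables["name"].(string)
	if !ok {
		name = "User"
	}
	return fmt.Sprintf("You are a helpful assistant. Greet the user by name (%s).", name)
}

// rememberName is a hypothetical tool that returns the user's name as an update.
func rememberName(args map[string]interface{}, _ map[string]interface{}) Result {
	name, _ := args["name"].(string)
	return Result{Value: "Name saved.", ContextVariables: map[string]interface{}{"name": name}}
}

// merge copies updates into ctx, overwriting existing keys.
func merge(ctx, updates map[string]interface{}) {
	for k, v := range updates {
		ctx[k] = v
	}
}

func main() {
	ctx := map[string]interface{}{}
	fmt.Println(instructions(ctx)) // no name yet, so the fallback is used

	res := rememberName(map[string]interface{}{"name": "Ada"}, ctx)
	merge(ctx, res.ContextVariables)
	fmt.Println(instructions(ctx)) // the merged name is now visible
}
```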

// Build the system prompt dynamically from the context variables.
func instructions(contextVariables map[string]interface{}) string {
	name, ok := contextVariables["name"].(string)
	if !ok {
		name = "User"
	}
	return fmt.Sprintf("You are a helpful assistant. Greet the user by name (%s).", name)
}

agent.InstructionsFunc = instructions

Agents can hand off conversations to other agents. This is useful for delegating tasks or escalating when an agent is unable to handle a request.
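A handoff works by letting a tool return an Agent alongside its value; the run loop then swaps the active agent and keeps going, up to a turn limit. The stdlib-only sketch below models that loop. `Agent`, `Result`, and `runLoop` are hypothetical simplifications (each agent's tool is hard-coded instead of being chosen by a model), so treat it as an illustration of the control flow rather than SwarmGo's implementation.

```go
package main

import "fmt"

// Agent is a simplified stand-in: each agent has one tool it always calls.
type Agent struct {
	Name string
	Tool func() Result
}

// Result carries a value and, optionally, the agent to hand off to.
type Result struct {
	Value string
	Agent *Agent
}

// runLoop runs the active agent's tool each turn and switches agents whenever
// a tool returns a non-nil Agent. It stops when no handoff happens or when
// maxTurns is reached, and returns the last active agent's name and a log.
func runLoop(start *Agent, maxTurns int) (string, []string) {
	active := start
	var transcript []string
	for turn := 0; turn < maxTurns; turn++ {
		res := active.Tool()
		transcript = append(transcript, fmt.Sprintf("%s: %s", active.Name, res.Value))
		if res.Agent == nil {
			break // no handoff: the current agent finished the task
		}
		active = res.Agent
	}
	return active.Name, transcript
}

func main() {
	another := &Agent{Name: "AnotherAgent", Tool: func() Result {
		return Result{Value: "Handled the request."}
	}}
	first := &Agent{Name: "Agent", Tool: func() Result {
		return Result{Value: "Transferring to AnotherAgent.", Agent: another}
	}}

	final, transcript := runLoop(first, 5)
	for _, line := range transcript {
		fmt.Println(line)
	}
	fmt.Println("final agent:", final)
}
```

The turn limit matters: two agents that keep handing off to each other would otherwise loop forever.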

func transferToAnotherAgent(args map[string]interface{}, contextVariables map[string]interface{}) swarmgo.Result {
	anotherAgent := &swarmgo.Agent{
		Name:         "AnotherAgent",
		Instructions: "You are another agent.",
		Model:        "gpt-3.5-turbo",
	}
	// Returning an Agent in the Result hands the conversation off to it.
	return swarmgo.Result{
		Agent: anotherAgent,
		Value: "Transferring to AnotherAgent.",
	}
}

agent.Functions = append(agent.Functions, swarmgo.AgentFunction{
	Name:        "transferToAnotherAgent",
	Description: "Transfer the conversation to AnotherAgent.",
	Parameters: map[string]interface{}{
		"type":       "object",
		"properties": map[string]interface{}{},
	},
	Function: transferToAnotherAgent,
})

SwarmGo includes built-in support for streaming responses, allowing real-time processing of AI responses and tool calls. This is particularly useful for long-running operations or when you want to provide immediate feedback to users.

To use streaming, implement the StreamHandler interface:

type StreamHandler interface {
	OnStart()
	OnToken(token string)
	OnToolCall(toolCall openai.ToolCall)
	OnComplete(message openai.ChatCompletionMessage)
	OnError(err error)
}

A default implementation (DefaultStreamHandler) is provided, but you can create your own handler for custom behavior:

type CustomStreamHandler struct {
	totalTokens int // number of OnToken calls, i.e. streamed chunks
}

func (h *CustomStreamHandler) OnStart() {
	fmt.Println("Starting stream...")
}

func (h *CustomStreamHandler) OnToken(token string) {
	h.totalTokens++
	fmt.Print(token)
}

func (h *CustomStreamHandler) OnComplete(msg openai.ChatCompletionMessage) {
	fmt.Printf("\nComplete! Total tokens: %d\n", h.totalTokens)
}

func (h *CustomStreamHandler) OnError(err error) {
	fmt.Printf("Error: %v\n", err)
}

func (h *CustomStreamHandler) OnToolCall(tool openai.ToolCall) {
	fmt.Printf("\nUsing tool: %s\n", tool.Function.Name)
}

Here's an example of using streaming with a file analyzer:

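client := swarmgo.NewSwarm("YOUR_OPENAI_API_KEY", llm.OpenAI)

agent := &swarmgo.Agent{
	Name:         "FileAnalyzer",
	Instructions: "You are an assistant that analyzes files.",
	Model:        "gpt-4",
}

// Example prompt; replace with your own conversation.
messages := []openai.ChatCompletionMessage{
	{Role: openai.ChatMessageRoleUser, Content: "Summarize this file."},
}

handler := &CustomStreamHandler{}

err := client.StreamingResponse(
	context.Background(),
	agent,
	messages,
	nil,
	"",
	handler,
	true,
)
if err != nil {
	log.Fatalf("Streaming error: %v", err)
}

For a complete example of file analysis with streaming, see examples/file_analyzer_stream/main.go.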
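To see how the StreamHandler callbacks fit together, the sketch below fakes a stream with a Go channel of chunks and drives a handler in the order a streaming client would: OnStart, then OnToken per chunk, then OnComplete (or OnError). `Handler` is a trimmed, hypothetical version of `StreamHandler` that drops the go-openai types (and OnToolCall) so the example runs with the standard library only; `fakeStream` and `consume` are likewise illustrative names.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// Handler is a trimmed stand-in for StreamHandler (no go-openai types).
type Handler interface {
	OnStart()
	OnToken(token string)
	OnComplete(full string)
	OnError(err error)
}

// chunk is one piece of a simulated stream; a non-nil Err marks a failure.
type chunk struct {
	Token string
	Err   error
}

// consume drains the stream, forwarding each token to the handler and
// accumulating the full message for OnComplete.
func consume(stream <-chan chunk, h Handler) {
	h.OnStart()
	var sb strings.Builder
	for c := range stream {
		if c.Err != nil {
			h.OnError(c.Err)
			return
		}
		h.OnToken(c.Token)
		sb.WriteString(c.Token)
	}
	h.OnComplete(sb.String())
}

// printHandler prints tokens as they arrive and records what it saw.
type printHandler struct {
	chunks int
	full   string
	err    error
}

func (p *printHandler) OnStart() { fmt.Println("Starting stream...") }

func (p *printHandler) OnToken(t string) {
	p.chunks++
	fmt.Print(t)
}

func (p *printHandler) OnComplete(s string) {
	p.full = s
	fmt.Printf("\nComplete! %d chunks\n", p.chunks)
}

func (p *printHandler) OnError(err error) {
	p.err = err
	fmt.Println("\nError:", err)
}

// fakeStream emits tokens from a goroutine, optionally ending with an error.
func fakeStream(tokens []string, failAtEnd bool) <-chan chunk {
	ch := make(chan chunk)
	go func() {
		defer close(ch)
		for _, t := range tokens {
			ch <- chunk{Token: t}
		}
		if failAtEnd {
			ch <- chunk{Err: errors.New("connection reset")}
		}
	}()
	return ch
}

func main() {
	h := &printHandler{}
	consume(fakeStream([]string{"Hello", ", ", "world", "!"}, false), h)
}
```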

SwarmGo supports running multiple agents concurrently using the ConcurrentSwarm type. This is particularly useful when you need to parallelize agent tasks or run multiple analyses simultaneously.

// Create a concurrent swarm
cs := swarmgo.NewConcurrentSwarm(apiKey)

// Configure multiple agents
configs := map[string]swarmgo.AgentConfig{
	"agent1": {
		Agent: agent1,
		Messages: []openai.ChatCompletionMessage{
			{Role: openai.ChatMessageRoleUser, Content: "Task 1"},
		},
		MaxTurns:     1,
		ExecuteTools: true,
	},
	"agent2": {
		Agent: agent2,
		Messages: []openai.ChatCompletionMessage{
			{Role: openai.ChatMessageRoleUser, Content: "Task 2"},
		},
		MaxTurns:     1,
		ExecuteTools: true,
	},
}

// Run agents concurrently with a timeout
ctx, cancel := context.WithTimeout(context.Background(), 5*time.Minute)
defer cancel()

results := cs.RunConcurrent(ctx, configs)

// Process results
for _, result := range results {
	if result.Error != nil {
		log.Printf("Error in %s: %v", result.AgentName, result.Error)
		continue
	}
	// Handle successful response
	fmt.Printf("Result from %s: %v\n", result.AgentName, result.Response)
}

Key features of concurrent execution:

- Run multiple agents in parallel with independent configurations

- Context-based timeout and cancellation support

- Thread-safe result collection

- Support for both ordered and unordered execution

- Error handling for individual agent failures

See examples/concurrent_analyzer/main.go for a complete example of concurrent code analysis using multiple specialized agents.

SwarmGo includes a built-in memory management system that allows agents to store and recall information across conversations. The memory system supports both short-term and long-term memory, with features for organizing and retrieving memories based on type and context.

// Create a new agent with memory capabilities
agent := swarmgo.NewAgent("MyAgent", "gpt-4")

// Memory is automatically managed for conversations and tool calls.
// You can also store memories explicitly:
memory := swarmgo.Memory{
	Content:    "User prefers dark mode",
	Type:       "preference",
	Context:    map[string]interface{}{"setting": "ui"},
	Timestamp:  time.Now(),
	Importance: 0.8,
}
agent.Memory.AddMemory(memory)

// Retrieve recent memories
recentMemories := agent.Memory.GetRecentMemories(5)

// Search specific types of memories
preferences := agent.Memory.SearchMemories("preference", nil)

Key features of the memory system:

- Automatic Memory Management: Conversations and tool interactions are automatically stored

- Memory Types: Organize memories by type (conversation, fact, tool_result, etc.)

- Context Association: Link memories with relevant context

- Importance Scoring: Assign priority levels to memories (0-1)

- Memory Search: Search by type and context

- Persistence: Save and load memories to/from disk

- Thread Safety: Concurrent memory access is protected

- Short-term Buffer: Recent memories are kept in a FIFO buffer

- Long-term Storage: Organized storage by memory type

See the memory_demo example for a complete demonstration of memory capabilities.
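The short-term/long-term split listed above can be modeled with a mutex-guarded struct: a fixed-size FIFO slice for recent memories and a map keyed by memory type for long-term storage. The `memoryStore` type and its methods are a hypothetical sketch, not SwarmGo's `Memory` implementation; the buffer size, the context-matching rule (every given key must match), and the assumption that context values are comparable (strings, numbers) are all illustrative choices.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// memory mirrors the fields used in this README's Memory example.
type memory struct {
	Content    string
	Type       string
	Context    map[string]interface{}
	Timestamp  time.Time
	Importance float64
}

// memoryStore keeps a bounded FIFO of recent memories plus long-term storage
// grouped by type. A mutex makes it safe for concurrent use.
type memoryStore struct {
	mu        sync.Mutex
	maxRecent int
	recent    []memory
	longTerm  map[string][]memory
}

func newMemoryStore(maxRecent int) *memoryStore {
	return &memoryStore{maxRecent: maxRecent, longTerm: map[string][]memory{}}
}

// Add records m in both the recent buffer and long-term storage.
func (s *memoryStore) Add(m memory) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.recent = append(s.recent, m)
	if len(s.recent) > s.maxRecent {
		s.recent = s.recent[1:] // drop the oldest entry (FIFO)
	}
	s.longTerm[m.Type] = append(s.longTerm[m.Type], m)
}

// Recent returns up to n of the most recent memories, oldest first.
func (s *memoryStore) Recent(n int) []memory {
	s.mu.Lock()
	defer s.mu.Unlock()
	if n < 0 {
		n = 0
	}
	if n > len(s.recent) {
		n = len(s.recent)
	}
	return append([]memory(nil), s.recent[len(s.recent)-n:]...)
}

// Search returns memories of memType whose Context contains every key/value
// pair in ctx (nil ctx matches all). Values must be comparable.
func (s *memoryStore) Search(memType string, ctx map[string]interface{}) []memory {
	s.mu.Lock()
	defer s.mu.Unlock()
	var out []memory
	for _, m := range s.longTerm[memType] {
		match := true
		for k, v := range ctx {
			if m.Context[k] != v {
				match = false
				break
			}
		}
		if match {
			out = append(out, m)
		}
	}
	return out
}

func main() {
	store := newMemoryStore(2)
	store.Add(memory{Content: "User prefers dark mode", Type: "preference",
		Context: map[string]interface{}{"setting": "ui"}, Timestamp: time.Now(), Importance: 0.8})
	store.Add(memory{Content: "User asked about Go", Type: "conversation", Timestamp: time.Now()})
	store.Add(memory{Content: "User lives in Berlin", Type: "fact", Timestamp: time.Now()})

	fmt.Println(len(store.Recent(5)))                 // buffer holds only 2
	fmt.Println(len(store.Search("preference", nil))) // long-term storage keeps it
}
```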

SwarmGo provides a flexible LLM (Large Language Model) interface that currently supports two providers: OpenAI and Gemini.
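A provider-agnostic interface typically means agent code depends on a small Go interface while each provider supplies its own implementation, selected by a constant in the spirit of `llm.OpenAI` or `llm.Gemini`. The sketch below illustrates that design with hypothetical names (`Provider`, `Completer`, `newCompleter`, and two fake backends); SwarmGo's real `llm` package defines its own types, so consult it for the actual interface.

```go
package main

import (
	"fmt"
	"strings"
)

// Provider selects a backend, similar in spirit to llm.OpenAI / llm.Gemini.
type Provider string

const (
	OpenAI Provider = "openai"
	Gemini Provider = "gemini"
)

// Completer is the minimal behavior agent code depends on.
type Completer interface {
	Complete(prompt string) (string, error)
}

// Fake backends: real ones would call the providers' HTTP APIs.
type fakeOpenAI struct{}
type fakeGemini struct{}

func (fakeOpenAI) Complete(p string) (string, error) { return "openai: " + strings.ToUpper(p), nil }
func (fakeGemini) Complete(p string) (string, error) { return "gemini: " + strings.ToLower(p), nil }

// newCompleter maps a Provider to its implementation.
func newCompleter(p Provider) (Completer, error) {
	switch p {
	case OpenAI:
		return fakeOpenAI{}, nil
	case Gemini:
		return fakeGemini{}, nil
	default:
		return nil, fmt.Errorf("unsupported provider %q", p)
	}
}

func main() {
	for _, p := range []Provider{OpenAI, Gemini} {
		c, err := newCompleter(p)
		if err != nil {
			fmt.Println(err)
			continue
		}
		out, _ := c.Complete("Hello")
		fmt.Println(out)
	}
}
```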

To initialize a new Swarm with a specific provider:

// Initialize with OpenAI
openAIClient := swarmgo.NewSwarm("YOUR_OPENAI_API_KEY", llm.OpenAI)

// Initialize with Gemini
geminiClient := swarmgo.NewSwarm("YOUR_GEMINI_API_KEY", llm.Gemini)

Workflows in SwarmGo provide structured patterns for organizing and coordinating multiple agents. They help manage complex interactions between agents, define communication paths, and establish clear hierarchies or collaboration patterns. Think of workflows as the orchestration layer that determines how your agents work together to accomplish tasks.

Each workflow type serves a different organizational need:

Supervisor workflow: a hierarchical pattern where a supervisor agent oversees and coordinates tasks among worker agents. This is ideal for:

- Task delegation and monitoring

- Quality control and oversight

- Centralized decision making

- Resource allocation across workers

workflow := swarmgo.NewWorkflow(apiKey, llm.OpenAI, swarmgo.SupervisorWorkflow)

// Add agents to teams
workflow.AddAgentToTeam(supervisorAgent, swarmgo.SupervisorTeam)
workflow.AddAgentToTeam(workerAgent1, swarmgo.WorkerTeam)
workflow.AddAgentToTeam(workerAgent2, swarmgo.WorkerTeam)

// Set supervisor as team leader
workflow.SetTeamLeader(supervisorAgent.Name, swarmgo.SupervisorTeam)

// Connect agents
workflow.ConnectAgents(supervisorAgent.Name, workerAgent1.Name)
workflow.ConnectAgents(supervisorAgent.Name, workerAgent2.Name)

Hierarchical workflow: a tree-like structure where tasks flow from top to bottom through multiple levels. This pattern is best for:

- Complex task decomposition

- Specialized agent roles at each level

- Clear reporting structures

- Sequential processing pipelines

workflow := swarmgo.NewWorkflow(apiKey, llm.OpenAI, swarmgo.HierarchicalWorkflow)

// Add agents to teams
workflow.AddAgentToTeam(managerAgent, swarmgo.SupervisorTeam)
workflow.AddAgentToTeam(researchAgent, swarmgo.ResearchTeam)
workflow.AddAgentToTeam(analysisAgent, swarmgo.AnalysisTeam)

// Connect agents in hierarchy
workflow.ConnectAgents(managerAgent.Name, researchAgent.Name)
workflow.ConnectAgents(researchAgent.Name, analysisAgent.Name)

Collaborative workflow: a peer-based pattern where agents work together as equals, passing tasks between them as needed. This approach excels at:

- Team-based problem solving

- Parallel processing

- Iterative refinement

- Dynamic task sharing

workflow := swarmgo.NewWorkflow(apiKey, llm.OpenAI, swarmgo.CollaborativeWorkflow)

// Add agents to document team
workflow.AddAgentToTeam(editor, swarmgo.DocumentTeam)
workflow.AddAgentToTeam(reviewer, swarmgo.DocumentTeam)
workflow.AddAgentToTeam(writer, swarmgo.DocumentTeam)

// Connect agents in collaborative pattern
workflow.ConnectAgents(editor.Name, reviewer.Name)
workflow.ConnectAgents(reviewer.Name, writer.Name)
workflow.ConnectAgents(writer.Name, editor.Name)

Key workflow features:

- Team Management: Organize agents into functional teams

- Leadership Roles: Designate team leaders for coordination

- Flexible Routing: Dynamic task routing between agents

- Cycle Detection: Built-in cycle detection and handling

- State Management: Share state between agents in a workflow

- Error Handling: Robust error handling and recovery
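The ConnectAgents calls in these examples define a directed graph of agents, and the collaborative example (editor to reviewer to writer and back to editor) forms a loop. A standard way to detect such cycles is a depth-first search that tracks which nodes are on the current path. The sketch below applies that technique to a plain adjacency map; `graph` and `findCycle` are hypothetical names, and this is not necessarily how SwarmGo implements its built-in cycle detection.

```go
package main

import "fmt"

// graph maps an agent name to the agents it is connected to.
type graph map[string][]string

// findCycle returns one cycle as a path of agent names (first == last),
// or nil if the graph is acyclic. It uses DFS with three node states.
func findCycle(g graph) []string {
	const (
		unvisited = iota
		onPath
		done
	)
	state := map[string]int{}
	var path, cycle []string

	var visit func(n string) bool
	visit = func(n string) bool {
		state[n] = onPath
		path = append(path, n)
		for _, next := range g[n] {
			switch state[next] {
			case onPath:
				// Back edge: the cycle is the path from next to n, plus next.
				for i, p := range path {
					if p == next {
						cycle = append(append([]string{}, path[i:]...), next)
						return true
					}
				}
			case unvisited:
				if visit(next) {
					return true
				}
			}
		}
		path = path[:len(path)-1]
		state[n] = done
		return false
	}

	for n := range g {
		if state[n] == unvisited && visit(n) {
			return cycle
		}
	}
	return nil
}

func main() {
	hierarchical := graph{"manager": {"research"}, "research": {"analysis"}}
	collaborative := graph{"editor": {"reviewer"}, "reviewer": {"writer"}, "writer": {"editor"}}

	fmt.Println("hierarchical cycle:", findCycle(hierarchical))
	fmt.Println("collaborative cycle:", findCycle(collaborative))
}
```

Because Go map iteration order is random, the reported cycle may start at any of its agents; only its membership is stable.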

For more examples, see the examples directory.

Contributions are welcome! Please follow these steps:

- Fork the repository.

- Create a new branch (git checkout -b feature/YourFeature).

- Commit your changes (git commit -am 'Add a new feature').

- Push to the branch (git push origin feature/YourFeature).

- Open a Pull Request.

This project is licensed under the MIT License. See the LICENSE file for details.

- Thanks to OpenAI for the inspiration and the Swarm framework.

- Thanks to Sashabaranov for the go-openai package.


Alternative AI tools for swarmgo

Similar Open Source Tools


gollm

gollm is a Go package designed to simplify interactions with Large Language Models (LLMs) for AI engineers and developers. It offers a unified API for multiple LLM providers, easy provider and model switching, flexible configuration options, advanced prompt engineering, prompt optimization, memory retention, structured output and validation, provider comparison tools, high-level AI functions, robust error handling and retries, and extensible architecture. The package enables users to create AI-powered golems for tasks like content creation workflows, complex reasoning tasks, structured data generation, model performance analysis, prompt optimization, and creating a mixture of agents.

instructor-js

Instructor is a TypeScript library for structured extraction powered by LLMs. It is designed for simplicity, transparency, and control, with a user-centric approach: whether you're a seasoned developer or just starting out, you'll find Instructor's approach intuitive and steerable.

dive

Dive is an AI toolkit for Go that enables the creation of specialized teams of AI agents and seamless integration with leading LLMs. It offers a CLI and APIs for easy integration, with features like specialized agents, hierarchical agent systems, declarative configuration, multiple LLM support, extended reasoning, Model Context Protocol support, advanced model settings, a tool system with annotations, custom tool creation, streaming, thread management, a confirmation system, deep research, and semantic diff analysis. It also provides a unified interface for LLM providers and supports various verified models. The toolkit is designed for developers to build AI-powered applications with rich agent capabilities and tool integrations.

LlmTornado

LLM Tornado is a .NET library designed to simplify the consumption of various large language models (LLMs) from providers like OpenAI, Anthropic, Cohere, Google, Azure, Groq, and self-hosted APIs. It acts as an aggregator, allowing users to easily switch between different LLM providers with just a change in argument. Users can perform tasks such as chatting with documents, voice calling with AI, orchestrating assistants, generating images, and more. The library exposes capabilities through vendor extensions, making it easy to integrate and use multiple LLM providers simultaneously.

magma

Magma is a powerful and flexible framework for building scalable and efficient machine learning pipelines. It provides a simple interface for creating complex workflows, enabling users to easily experiment with different models and data processing techniques. With Magma, users can streamline the development and deployment of machine learning projects, saving time and resources.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting with, and observing agents. Users can access Letta via the REST API, the Python and TypeScript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

KaibanJS

KaibanJS is a JavaScript-native framework for building multi-agent AI systems. It enables users to create specialized AI agents with distinct roles and goals, manage tasks, and coordinate teams efficiently. The framework supports role-based agent design, tool integration, multiple LLMs support, robust state management, observability and monitoring features, and a real-time agentic Kanban board for visualizing AI workflows. KaibanJS aims to empower JavaScript developers with a user-friendly AI framework tailored for the JavaScript ecosystem, bridging the gap in the AI race for non-Python developers.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel special, however, is its ability to automatically orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

embodied-agents

Embodied Agents is a toolkit for integrating large multi-modal models into existing robot stacks with just a few lines of code. It provides consistency, reliability, scalability, and is configurable to any observation and action space. The toolkit is designed to reduce complexities involved in setting up inference endpoints, converting between different model formats, and collecting/storing datasets. It aims to facilitate data collection and sharing among roboticists by providing Python-first abstractions that are modular, extensible, and applicable to a wide range of tasks. The toolkit supports asynchronous and remote thread-safe agent execution for maximal responsiveness and scalability, and is compatible with various APIs like HuggingFace Spaces, Datasets, Gymnasium Spaces, Ollama, and OpenAI. It also offers automatic dataset recording and optional uploads to the HuggingFace hub.

LightAgent

LightAgent is a lightweight, open-source Agentic AI development framework with memory, tools, and a tree of thought. It supports multi-agent collaboration, autonomous learning, tool integration, complex task handling, and multi-model support. It also features a streaming API, tool generator, agent self-learning, adaptive tool mechanism, and more. LightAgent is designed for intelligent customer service, data analysis, automated tools, and educational assistance.

agent-toolkit

The Stripe Agent Toolkit enables popular agent frameworks to integrate with Stripe APIs through function calling. It includes support for Python and TypeScript, built on top of Stripe Python and Node SDKs. The toolkit provides tools for LangChain, CrewAI, and Vercel's AI SDK, allowing users to configure actions like creating payment links, invoices, refunds, and more. Users can pass the toolkit as a list of tools to agents for integration with Stripe. Context values can be provided for making requests, such as specifying connected accounts for API calls. The toolkit also supports metered billing for Vercel's AI SDK, enabling billing events submission based on customer ID and input/output meters.

LightAgent

LightAgent is a lightweight, open-source active Agentic AI development framework with memory, tools, and a tree of thought. It supports multi-agent collaboration, autonomous learning, tool integration, complex goals, and multi-model support. It enables simpler self-learning agents, seamless integration with major chat frameworks, and quick tool generation. LightAgent also supports memory modules, tool integration, tree of thought planning, multi-agent collaboration, streaming API, agent self-learning, Langfuse log tracking, and agent assessment. It is compatible with various large models and offers features like intelligent customer service, data analysis, automated tools, and educational assistance.

agentpress

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. It includes core components for managing threads, registering tools, processing responses, state management, and utilizing LLMs. The tool provides a modular architecture for handling messages, LLM API calls, response processing, tool execution, and results management. Users can easily set up the environment, create custom tools with OpenAPI or XML schema, and manage conversation threads with real-time interaction. AgentPress aims to be agnostic, simple, and flexible, allowing users to customize and extend functionalities as needed.

go-utcp

The Universal Tool Calling Protocol (UTCP) is a modern, flexible, and scalable standard for defining and interacting with tools across various communication protocols. It emphasizes scalability, interoperability, and ease of use. It provides built-in transports for HTTP, CLI, Server-Sent Events, streaming HTTP, GraphQL, MCP, and UDP. Users can use the library to construct a client and call tools using the available transports. The library also includes utilities for variable substitution, in-memory repository for storing providers and tools, and OpenAPI conversion to UTCP manuals.

For similar tasks

genaiscript

GenAIScript is a scripting environment designed to facilitate file ingestion, prompt development, and structured data extraction. Users can define metadata and model configurations, specify data sources, and define tasks to extract specific information. The tool provides a convenient way to analyze files and extract desired content in a structured format. It offers a user-friendly interface for working with data and automating data extraction processes, making it suitable for various data processing tasks.


claudine

Claudine is an AI agent designed to reason and act autonomously, leveraging the Anthropic API, Unix command line tools, HTTP, local hard drive data, and internet data. It can administer computers, analyze files, implement features in source code, create new tools, and gather contextual information from the internet. Users can easily add specialized tools. Claudine serves as a blueprint for implementing complex autonomous systems, with potential for customization based on organization-specific needs. The tool is based on the anthropic-kotlin-sdk and aims to evolve into a versatile command line tool similar to 'git', enabling branching sessions for different tasks.

Gemini-Discord-Bot

A Discord bot leveraging Google Gemini for advanced conversation, content understanding, image/video/audio recognition, and more. Features conversational AI, image/video/audio and file recognition, custom personalities, admin controls, downloadable conversation history, multiple AI tools, status monitoring, and slash command UI. Users can invite the bot to their Discord server, configure preferences, upload files for analysis, and use slash commands for various actions. Customizable through `config.js` for default personalities, activities, colors, and feature toggles. Admin commands restricted to server admins for security. Local storage for chat history and settings, with a reminder not to commit secrets in `.env` file. Licensed under MIT.

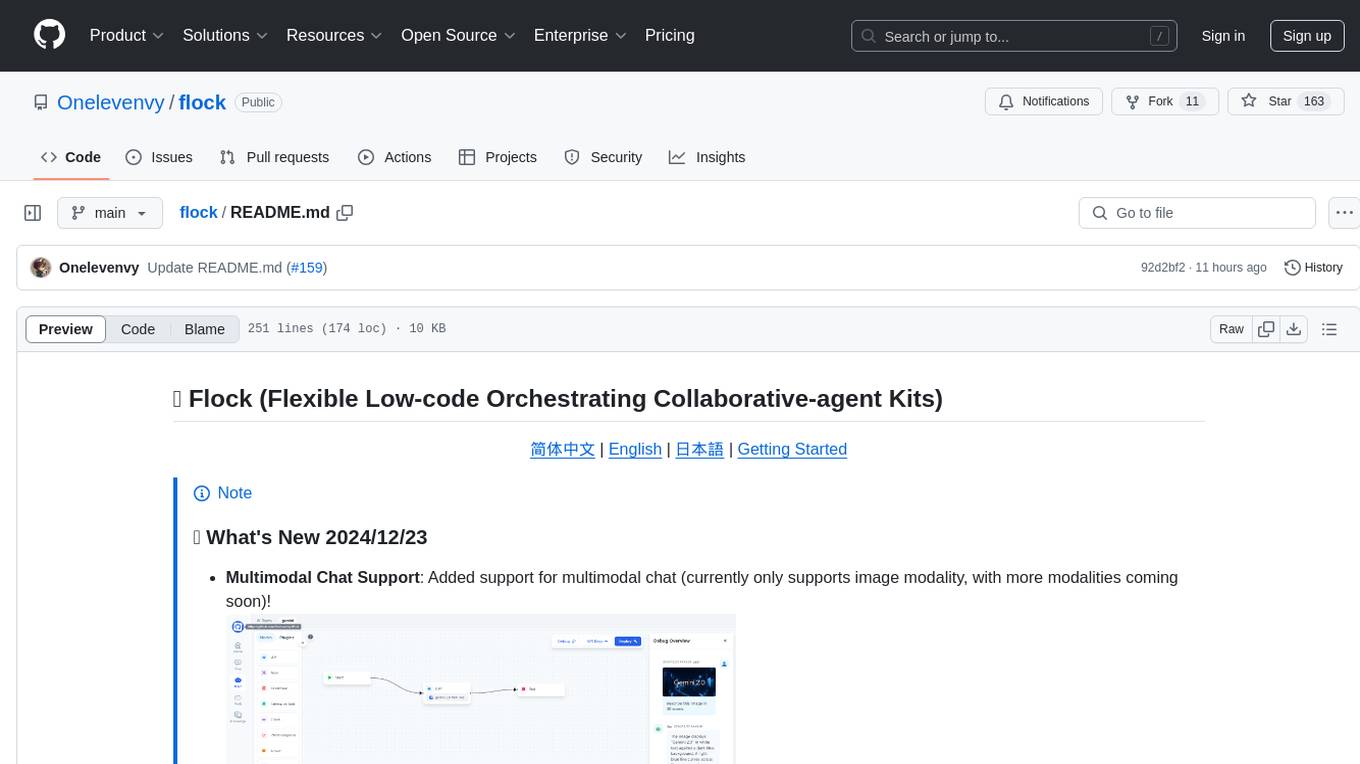

flock

Flock is a workflow-based low-code platform that enables rapid development of chatbots, RAG applications, and coordination of multi-agent teams. It offers a flexible, low-code solution for orchestrating collaborative agents, supporting various node types for specific tasks, such as input processing, text generation, knowledge retrieval, tool execution, intent recognition, answer generation, and more. Flock integrates LangChain and LangGraph to provide offline operation capabilities and supports future nodes like Conditional Branch, File Upload, and Parameter Extraction for creating complex workflows. Inspired by StreetLamb, Lobe-chat, Dify, and fastgpt projects, Flock introduces new features and directions while leveraging open-source models and multi-tenancy support.

pebble

Pebbling is an open-source protocol for agent-to-agent communication, enabling AI agents to collaborate securely using Decentralised Identifiers (DIDs) and mutual TLS (mTLS). It provides a lightweight communication protocol built on JSON-RPC 2.0, ensuring reliable and secure conversations between agents. Pebbling allows agents to exchange messages safely, connect seamlessly regardless of programming language, and communicate quickly and efficiently. It is designed to pave the way for the next generation of collaborative AI systems, promoting secure and effortless communication between agents across different environments.

agentpool

AgentPool is a unified agent orchestration hub that allows users to configure and manage heterogeneous AI agents via YAML and expose them through standardized protocols. It acts as a protocol bridge, enabling users to define all agents in one YAML file and expose them through ACP or AG-UI protocols. Users can coordinate, delegate, and communicate with different agents through a unified interface. The tool supports multi-agent coordination, rich YAML configuration, server protocols like ACP and OpenCode, and additional capabilities such as structured output, storage & analytics, file abstraction, triggers, and streaming TTS. It offers CLI and programmatic usage patterns for running agents and interacting with the tool.

OpenAI-DotNet

OpenAI-DotNet is a simple C# .NET client library for OpenAI to use through their RESTful API. It is independently developed and not an official library affiliated with OpenAI. Users need an OpenAI API account to utilize this library. The library targets .NET 6.0 and above, working across various platforms like console apps, winforms, wpf, asp.net, etc., and on Windows, Linux, and Mac. It provides functionalities for authentication, interacting with models, assistants, threads, chat, audio, images, files, fine-tuning, embeddings, and moderations.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.