clippinator

AI programming assistant

Stars: 300

Clippinator is a code assistant tool that helps users develop code autonomously by planning, writing, debugging, and testing projects. It consists of agents based on GPT-4 that work together to assist the user in coding tasks. The main agent, Taskmaster, delegates tasks to specialized subagents like Architect, Writer, Frontender, Editor, QA, and Devops. The tool provides project architecture, tools for file and terminal operations, browser automation with Selenium, linting capabilities, CI integration, and memory management. Users can interact with the tool to provide feedback and guide the coding process, making it a powerful tool when combined with human intervention.

README:

A code assistant

(Formerly known as Clippy)

- Install Poetry.

- Clone this repository.

- Add the api key (OpenAI) to

.envfile:OPENAI_API_KEY=.... Optionally, you can add your SerpAPI key to allow the model to use search:SERPAPI_API_KEY= - Install ctags.

- For pylint, install it and pylint-venv.

- Install dependencies:

poetry install. - Run:

poetry run clippinator --help. To run it on a project, usepoetry run clippinator PROJECT_PATH - You can stop it and then it will continue from the last saved state. Use ^C to provide feedback to the main agent.

The purpose of Clippinator is to develop code for or with the user. It can plan, write, debug, and test some projects autonomously. For harder tasks, the best way to use it is to look at its work and provide feedback to it.

The tool consists of several agents that work together to help the user develop code. The agents are based on GPT-4. Note that this is based on GPT-4 which runs for a long time, so it's quite expensive in terms of OpenAI API.

Here is the thing: it has a reasonable workflow by its own. It knows what to do and can do it. When it works, it works faster than a human. However, it's not perfect, and it can often make mistakes. But in combination with a human, it is very powerful.

Obviously, if you ask it to do something at very low levels of abstractions, like "Write a function that does X", it will do it. It poses tasks like that to itself on its own, to a varying degree of success. But combined with you, it will be able to do everything while only requiring a little bit of your intervention. If the project is easy, you will just provide the most high-level guidance ("Write a link shortener web service"), and if it's more complicated, you will be more involved, but Clippinator will still do most of the work.

This tool has the main agent called Taskmaster. It is responsible for the overall development. It can use tools and delegate tasks to subagents. To be able to run for a long time, the history is summarized.

Taskmaster calls the specialized subagents (minions), like Architect or Writer.

The taskmaster first asks some questions to the user to understand the project. Then it asks the Architect to plan the project structure, and then it writes, debugs, and tests the project by delegating tasks to the subagents.

All agents have access to the planned project architecture, current project structure, errors from the linter, memory. The agents use different tools, like writing to files, using bash (including running background commands), using the browser with Selenium, etc.

We have the following agents: Architect, Writer, Frontender, Editor, QA, Devops. They all have different prompts and tools.

The architecture is just text which is written by the Architect. It is a list of files with summaries of their contents in the form of comments, important lines (like classes and functions).

The architecture is made available to all agents. Implementing architecture is the goal of the agents at the first stages.

A variety of tools have been implemented (or taken from Langchain):

- File tools: WriteFile, ReadFile, other tools which aren't used at the moment.

- Terminal tools: RunBash, Python, BashBackground (allows to start and manage background processes like starting a server).

- Human input

- Pylint

- Selenium - browser automation for testing. It allows to view the page in a convenient format, get console logs, click, types, execute selenium code

- HttpGet, GetPage - simpler tools for getting a page

- DeclareArchitecture, SetCI, Remember - allow the agents to set up their environment, write architecture, remember things

One important part of it is the project structure, which is given to all agents.

It is a list of files with some of their important lines, obtained by ctags.

One important part of it is the project structure, which is given to all agents.

It is a list of files with some of their important lines, obtained by ctags.

Linter output is given next to the project structure. It is very helpful to understand the current issues of the project. Linter output is also given after using WriteFile. The architect can configure the linter command using the SetCI tool. All agents can also use the Remember tool to add some information to the memory. Memory is given to all agents.

You can press ^C to provide feedback to the main agent. Note that if you press it during the execution of a subagent,

the subagent will be aborted. The only exception here is the Architect: you can press ^C after it uses

the DeclareArchitecture tool to ask it to change it.

After the architect is ran, you can also edit the project architecture manually if you choose y in the prompt.

If you enter m or menu, you will also be able to edit the project architecture, objective, and other things.

Created by Lev Chizhov and Timofey Fedoseev with contributions by Sergei Bogdanov

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for clippinator

Similar Open Source Tools

clippinator

Clippinator is a code assistant tool that helps users develop code autonomously by planning, writing, debugging, and testing projects. It consists of agents based on GPT-4 that work together to assist the user in coding tasks. The main agent, Taskmaster, delegates tasks to specialized subagents like Architect, Writer, Frontender, Editor, QA, and Devops. The tool provides project architecture, tools for file and terminal operations, browser automation with Selenium, linting capabilities, CI integration, and memory management. Users can interact with the tool to provide feedback and guide the coding process, making it a powerful tool when combined with human intervention.

claudine

Claudine is an AI agent designed to reason and act autonomously, leveraging the Anthropic API, Unix command line tools, HTTP, local hard drive data, and internet data. It can administer computers, analyze files, implement features in source code, create new tools, and gather contextual information from the internet. Users can easily add specialized tools. Claudine serves as a blueprint for implementing complex autonomous systems, with potential for customization based on organization-specific needs. The tool is based on the anthropic-kotlin-sdk and aims to evolve into a versatile command line tool similar to 'git', enabling branching sessions for different tasks.

llm_agents

LLM Agents is a small library designed to build agents controlled by large language models. It aims to provide a better understanding of how such agents work in a concise manner. The library allows agents to be instructed by prompts, use custom-built components as tools, and run in a loop of Thought, Action, Observation. The agents leverage language models to generate Thought and Action, while tools like Python REPL, Google search, and Hacker News search provide Observations. The library requires setting up environment variables for OpenAI API and SERPAPI API keys. Users can create their own agents by importing the library and defining tools accordingly.

WilmerAI

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

atomic_agents

Atomic Agents is a modular and extensible framework designed for creating powerful applications. It follows the principles of Atomic Design, emphasizing small and single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined to build AI applications. It depends on the Instructor package and supports various APIs like OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents is suitable for developers looking to create AI agents with a focus on modularity and flexibility.

lumigator

Lumigator is an open-source platform developed by Mozilla.ai to help users select the most suitable language model for their specific needs. It supports the evaluation of summarization tasks using sequence-to-sequence models such as BART and BERT, as well as causal models like GPT and Mistral. The platform aims to make model selection transparent, efficient, and empowering by providing a framework for comparing LLMs using task-specific metrics to evaluate how well a model fits a project's needs. Lumigator is in the early stages of development and plans to expand support to additional machine learning tasks and use cases in the future.

TinyTroupe

TinyTroupe is an experimental Python library that leverages Large Language Models (LLMs) to simulate artificial agents called TinyPersons with specific personalities, interests, and goals in simulated environments. The focus is on understanding human behavior through convincing interactions and customizable personas for various applications like advertisement evaluation, software testing, data generation, project management, and brainstorming. The tool aims to enhance human imagination and provide insights for better decision-making in business and productivity scenarios.

modelbench

ModelBench is a tool for running safety benchmarks against AI models and generating detailed reports. It is part of the MLCommons project and is designed as a proof of concept to aggregate measures, relate them to specific harms, create benchmarks, and produce reports. The tool requires LlamaGuard for evaluating responses and a TogetherAI account for running benchmarks. Users can install ModelBench from GitHub or PyPI, run tests using Poetry, and create benchmarks by providing necessary API keys. The tool generates static HTML pages displaying benchmark scores and allows users to dump raw scores and manage cache for faster runs. ModelBench is aimed at enabling users to test their own models and create tests and benchmarks.

AppAgent

AppAgent is a novel LLM-based multimodal agent framework designed to operate smartphone applications. Our framework enables the agent to operate smartphone applications through a simplified action space, mimicking human-like interactions such as tapping and swiping. This novel approach bypasses the need for system back-end access, thereby broadening its applicability across diverse apps. Central to our agent's functionality is its innovative learning method. The agent learns to navigate and use new apps either through autonomous exploration or by observing human demonstrations. This process generates a knowledge base that the agent refers to for executing complex tasks across different applications.

GlaDOS

This project aims to create a real-life version of GLaDOS, an aware, interactive, and embodied AI entity. It involves training a voice generator, developing a 'Personality Core,' implementing a memory system, providing vision capabilities, creating 3D-printable parts, and designing an animatronics system. The software architecture focuses on low-latency voice interactions, utilizing a circular buffer for data recording, text streaming for quick transcription, and a text-to-speech system. The project also emphasizes minimal dependencies for running on constrained hardware. The hardware system includes servo- and stepper-motors, 3D-printable parts for GLaDOS's body, animations for expression, and a vision system for tracking and interaction. Installation instructions cover setting up the TTS engine, required Python packages, compiling llama.cpp, installing an inference backend, and voice recognition setup. GLaDOS can be run using 'python glados.py' and tested using 'demo.ipynb'.

AIlice

AIlice is a fully autonomous, general-purpose AI agent that aims to create a standalone artificial intelligence assistant, similar to JARVIS, based on the open-source LLM. AIlice achieves this goal by building a "text computer" that uses a Large Language Model (LLM) as its core processor. Currently, AIlice demonstrates proficiency in a range of tasks, including thematic research, coding, system management, literature reviews, and complex hybrid tasks that go beyond these basic capabilities. AIlice has reached near-perfect performance in everyday tasks using GPT-4 and is making strides towards practical application with the latest open-source models. We will ultimately achieve self-evolution of AI agents. That is, AI agents will autonomously build their own feature expansions and new types of agents, unleashing LLM's knowledge and reasoning capabilities into the real world seamlessly.

gpdb

Greenplum Database (GPDB) is an advanced, fully featured, open source data warehouse, based on PostgreSQL. It provides powerful and rapid analytics on petabyte scale data volumes. Uniquely geared toward big data analytics, Greenplum Database is powered by the world’s most advanced cost-based query optimizer delivering high analytical query performance on large data volumes.

sdk

Vikit.ai SDK is a software development kit that enables easy development of video generators using generative AI and other AI models. It serves as a langchain to orchestrate AI models and video editing tools. The SDK allows users to create videos from text prompts with background music and voice-over narration. It also supports generating composite videos from multiple text prompts. The tool requires Python 3.8+, specific dependencies, and tools like FFMPEG and ImageMagick for certain functionalities. Users can contribute to the project by following the contribution guidelines and standards provided.

quimera

Quimera is an exploit-generator tool that utilizes large language models (LLMs) to uncover smart contract exploits in Foundry. It follows steps such as obtaining the smart contract's source code, creating a prompt for the exploit goal, generating or enhancing a Foundry test case, running the test, and analyzing the transaction trace for profitability. The tool is currently in an experimental prototype stage, focusing on optimizing settings, prompt creation, and exploring its capabilities. It has successfully rediscovered known exploits like APEMAGA, VISOR, FIRE, XAI, and Thunder-Loan using Gemini Pro 2.5 06-05.

ezkl

EZKL is a library and command-line tool for doing inference for deep learning models and other computational graphs in a zk-snark (ZKML). It enables the following workflow: 1. Define a computational graph, for instance a neural network (but really any arbitrary set of operations), as you would normally in pytorch or tensorflow. 2. Export the final graph of operations as an .onnx file and some sample inputs to a .json file. 3. Point ezkl to the .onnx and .json files to generate a ZK-SNARK circuit with which you can prove statements such as: > "I ran this publicly available neural network on some private data and it produced this output" > "I ran my private neural network on some public data and it produced this output" > "I correctly ran this publicly available neural network on some public data and it produced this output" In the backend we use the collaboratively-developed Halo2 as a proof system. The generated proofs can then be verified with much less computational resources, including on-chain (with the Ethereum Virtual Machine), in a browser, or on a device.

magic

Magic Cloud is a software development automation platform based on AI, Low-Code, and No-Code. It allows dynamic code creation and orchestration using Hyperlambda, generative AI, and meta programming. The platform includes features like CRUD generation, No-Code AI, Hyperlambda programming language, AI agents creation, and various components for software development. Magic is suitable for backend development, AI-related tasks, and creating AI chatbots. It offers high-level programming capabilities, productivity gains, and reduced technical debt.

For similar tasks

clippinator

Clippinator is a code assistant tool that helps users develop code autonomously by planning, writing, debugging, and testing projects. It consists of agents based on GPT-4 that work together to assist the user in coding tasks. The main agent, Taskmaster, delegates tasks to specialized subagents like Architect, Writer, Frontender, Editor, QA, and Devops. The tool provides project architecture, tools for file and terminal operations, browser automation with Selenium, linting capabilities, CI integration, and memory management. Users can interact with the tool to provide feedback and guide the coding process, making it a powerful tool when combined with human intervention.

dwata

dwata is an open source desktop app designed to manage all your private data on your laptop, providing offline access, fast search capabilities, and organization features for emails, files, contacts, events, and tasks. It aims to reduce cognitive overhead in daily digital life by offering a centralized platform for personal data management. The tool prioritizes user privacy, with no data being sent outside the user's computer without explicit permission. dwata is still in early development stages and offers integration with AI providers for advanced functionalities.

swarmgo

SwarmGo is a Go package designed to create AI agents capable of interacting, coordinating, and executing tasks. It focuses on lightweight agent coordination and execution, offering powerful primitives like Agents and handoffs. SwarmGo enables building scalable solutions with rich dynamics between tools and networks of agents, all while keeping the learning curve low. It supports features like memory management, streaming support, concurrent agent execution, LLM interface, and structured workflows for organizing and coordinating multiple agents.

agno

Agno is a lightweight library for building multi-modal Agents. It is designed with core principles of simplicity, uncompromising performance, and agnosticism, allowing users to create blazing fast agents with minimal memory footprint. Agno supports any model, any provider, and any modality, making it a versatile container for AGI. Users can build agents with lightning-fast agent creation, model agnostic capabilities, native support for text, image, audio, and video inputs and outputs, memory management, knowledge stores, structured outputs, and real-time monitoring. The library enables users to create autonomous programs that use language models to solve problems, improve responses, and achieve tasks with varying levels of agency and autonomy.

solace-agent-mesh

Solace Agent Mesh is an open-source framework designed for building event-driven multi-agent AI systems. It enables the creation of teams of AI agents with distinct skills and tools, facilitating communication and task delegation among agents. The framework is built on top of Solace AI Connector and Google's Agent Development Kit, providing a standardized communication layer for asynchronous, event-driven AI agent architecture. Solace Agent Mesh supports agent orchestration, flexible interfaces, extensibility, agent-to-agent communication, and dynamic embeds, making it suitable for developing complex AI applications with scalability and reliability.

cagent

cagent is a powerful and easy-to-use multi-agent runtime that orchestrates AI agents with specialized capabilities and tools, allowing users to quickly build, share, and run a team of virtual experts to solve complex problems. It supports creating agents with YAML configuration, improving agents with MCP servers, and delegating tasks to specialists. Key features include multi-agent architecture, rich tool ecosystem, smart delegation, YAML configuration, advanced reasoning tools, and support for multiple AI providers like OpenAI, Anthropic, Gemini, and Docker Model Runner.

claude-code-settings

A repository collecting best practices for Claude Code settings and customization. It provides configuration files for customizing Claude Code's behavior and building an efficient development environment. The repository includes custom agents and skills for specific domains, interactive development workflow features, efficient development rules, and team workflow with Codex MCP. Users can leverage the provided configuration files and tools to enhance their development process and improve code quality.

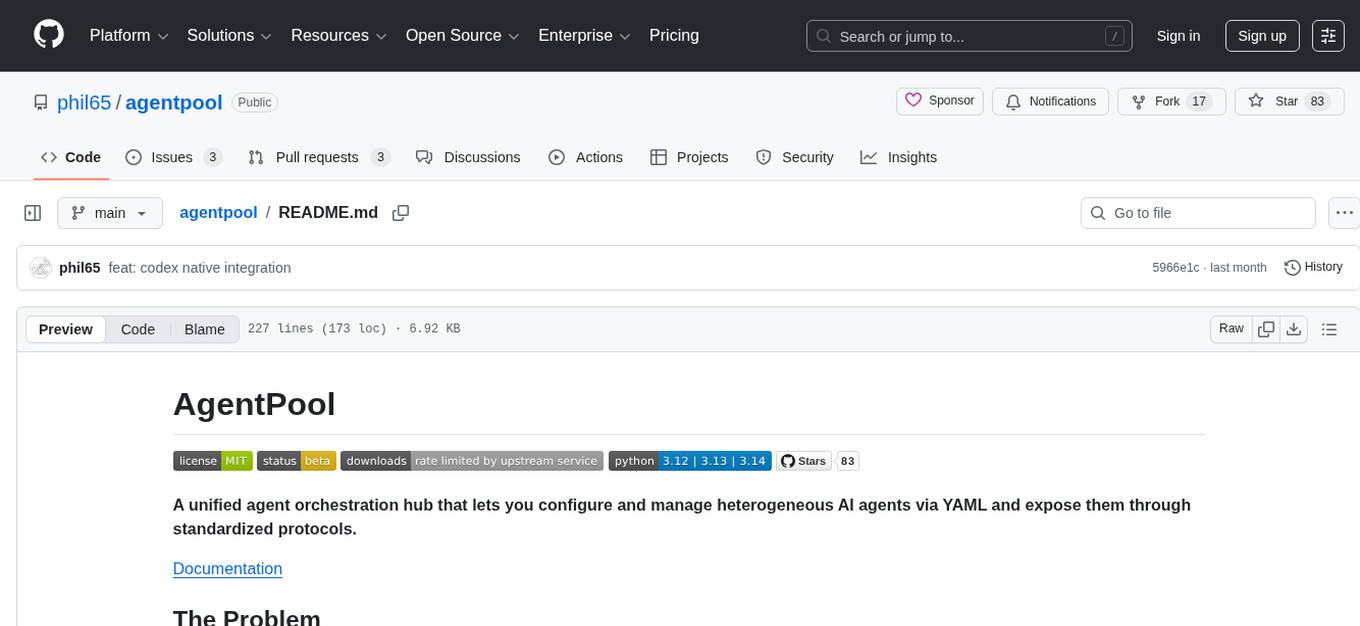

agentpool

AgentPool is a unified agent orchestration hub that allows users to configure and manage heterogeneous AI agents via YAML and expose them through standardized protocols. It acts as a protocol bridge, enabling users to define all agents in one YAML file and expose them through ACP or AG-UI protocols. Users can coordinate, delegate, and communicate with different agents through a unified interface. The tool supports multi-agent coordination, rich YAML configuration, server protocols like ACP and OpenCode, and additional capabilities such as structured output, storage & analytics, file abstraction, triggers, and streaming TTS. It offers CLI and programmatic usage patterns for running agents and interacting with the tool.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.