agent-toolkit

Python and TypeScript library for integrating the Stripe API into agentic workflows

Stars: 605

The Stripe Agent Toolkit enables popular agent frameworks to integrate with Stripe APIs through function calling. It supports Python and TypeScript and is built on top of the Stripe Python and Node SDKs. The toolkit provides tools for LangChain, CrewAI, and Vercel's AI SDK, and lets users configure which actions are allowed, such as creating payment links, invoices, and refunds. Users pass the toolkit to an agent as a list of tools. Context values, such as a connected account ID, can be supplied as defaults for API calls. For Vercel's AI SDK, the toolkit also supports metered billing: it submits billing events based on a customer ID and input/output meters.

README:

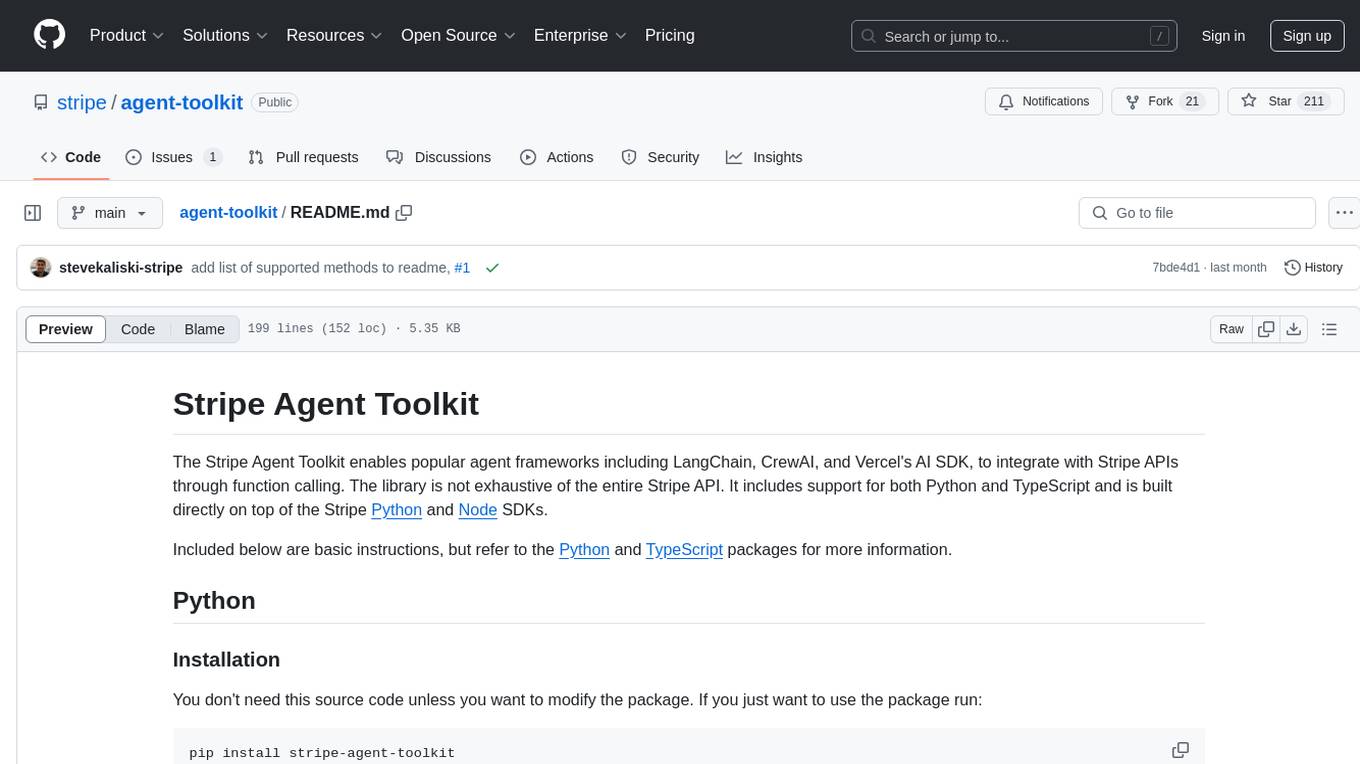

The Stripe Agent Toolkit enables popular agent frameworks, including OpenAI's Agent SDK, LangChain, CrewAI, Vercel's AI SDK, and Model Context Protocol (MCP), to integrate with Stripe APIs through function calling. The library does not cover the entire Stripe API. It supports both Python and TypeScript and is built directly on top of the Stripe Python and Node SDKs.

Included below are basic instructions, but refer to the Python and TypeScript packages for more information.

For Python, you don't need this source code unless you want to modify the package. If you just want to use the package, run:

    pip install stripe-agent-toolkit

Requirements:

- Python 3.11+

The library needs to be configured with your account's secret key, which is available in your Stripe Dashboard.

    from stripe_agent_toolkit.openai.toolkit import StripeAgentToolkit

    stripe_agent_toolkit = StripeAgentToolkit(
        secret_key="sk_test_...",
        configuration={
            "actions": {
                "payment_links": {
                    "create": True,
                },
            }
        },
    )

The toolkit works with OpenAI's Agent SDK, LangChain, and CrewAI and can be passed as a list of tools. For example:

    from agents import Agent

    stripe_agent = Agent(
        name="Stripe Agent",
        instructions="You are an expert at integrating with Stripe",
        tools=stripe_agent_toolkit.get_tools()
    )

Examples for OpenAI's Agent SDK, LangChain, and CrewAI are included in /examples.
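The Python example above hard-codes a placeholder key, whereas the TypeScript examples later in this README read `STRIPE_SECRET_KEY` from the environment. A minimal sketch of doing the same in Python might look like the following. `load_secret_key` is a hypothetical helper, not part of the toolkit, and the prefix check only reflects the usual `sk_` (secret) and `rk_` (restricted) key prefixes.

```python
import os


def load_secret_key(env=None, var_name="STRIPE_SECRET_KEY"):
    """Return the Stripe key stored in an environment mapping.

    Hypothetical helper for illustration; not part of stripe-agent-toolkit.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set")
    # Secret keys normally start with "sk_", restricted keys with "rk_".
    if not key.startswith(("sk_", "rk_")):
        raise ValueError(f"{var_name} does not look like a Stripe API key")
    return key


# Demonstration with an explicit mapping instead of the real environment.
demo_key = load_secret_key({"STRIPE_SECRET_KEY": "sk_test_placeholder"})
print(demo_key.startswith("sk_test_"))
```

The returned value could then be passed as `secret_key=` to `StripeAgentToolkit` in place of the literal string.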

In some cases you will want to provide values that serve as defaults when making requests. Currently, the account context value enables you to make API calls for your connected accounts.
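One way to keep the `actions` and `context` settings together is to assemble the configuration dictionary in a small helper. The sketch below relies only on the dictionary shape shown in this README and assumes both keys may appear in the same dictionary. `build_configuration` is a hypothetical name, not a toolkit function.

```python
from copy import deepcopy


def build_configuration(actions=None, account=None):
    """Assemble a configuration dict in the shape used by the examples.

    Hypothetical helper; the toolkit itself only receives the resulting dict.
    """
    configuration = {}
    if actions:
        # Copy so later edits to the caller's dict do not leak in.
        configuration["actions"] = deepcopy(actions)
    if account:
        # "account" is the context value described above.
        configuration["context"] = {"account": account}
    return configuration


config = build_configuration(
    actions={"payment_links": {"create": True}},
    account="acct_123",
)
print(config)
```

The toolkit's own example of passing the account context follows.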

    stripe_agent_toolkit = StripeAgentToolkit(
        secret_key="sk_test_...",
        configuration={
            "context": {
                "account": "acct_123"
            }
        }
    )

For TypeScript, you don't need this source code unless you want to modify the package. If you just want to use the package, run:

    npm install @stripe/agent-toolkit

Requirements:

- Node 18+

The library needs to be configured with your account's secret key, which is available in your Stripe Dashboard. Additionally, the configuration lets you specify which types of actions can be taken using the toolkit.

    import { StripeAgentToolkit } from "@stripe/agent-toolkit/langchain";

    const stripeAgentToolkit = new StripeAgentToolkit({
      secretKey: process.env.STRIPE_SECRET_KEY!,
      configuration: {
        actions: {
          paymentLinks: {
            create: true,
          },
        },
      },
    });

The toolkit works with LangChain and Vercel's AI SDK and can be passed as a list of tools. For example:

    import { AgentExecutor, createStructuredChatAgent } from "langchain/agents";

    const tools = stripeAgentToolkit.getTools();

    const agent = await createStructuredChatAgent({
      llm,
      tools,
      prompt,
    });

    const agentExecutor = new AgentExecutor({
      agent,
      tools,
    });

In some cases you will want to provide values that serve as defaults when making requests. Currently, the account context value enables you to make API calls for your connected accounts.

    const stripeAgentToolkit = new StripeAgentToolkit({
      secretKey: process.env.STRIPE_SECRET_KEY!,
      configuration: {
        context: {
          account: "acct_123",
        },
      },
    });

For Vercel's AI SDK, you can use middleware to submit billing events for usage. All that is required is the customer ID and the input/output meters to bill.
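To make the billing inputs concrete, here is a rough Python sketch of turning a customer ID, the two meter names, and a token count into meter-event payloads. `build_meter_events` is a hypothetical helper and the token counts are made up. The field names follow Stripe's meter event objects (`event_name`, plus a `payload` with `stripe_customer_id` and `value`), but how the toolkit's middleware builds its requests internally is an assumption here.

```python
def build_meter_events(customer, meters, usage):
    """Build one meter-event payload per configured meter.

    Hypothetical helper for illustration; not part of the toolkit.
    """
    events = []
    for direction in ("input", "output"):
        event_name = meters.get(direction)
        tokens = usage.get(direction, 0)
        if not event_name or tokens <= 0:
            continue  # nothing to bill for this direction
        events.append({
            "event_name": event_name,
            "payload": {
                "stripe_customer_id": customer,
                # Meter event payload values are sent as strings.
                "value": str(tokens),
            },
        })
    return events


events = build_meter_events(
    customer="cus_123",
    meters={"input": "input_tokens", "output": "output_tokens"},
    usage={"input": 1200, "output": 350},  # made-up token counts
)
for event in events:
    print(event["event_name"], event["payload"]["value"])
```

With the Stripe Python SDK, each dictionary could be submitted with something like `stripe.billing.MeterEvent.create(**event)`. The toolkit's TypeScript middleware example follows.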

    import { StripeAgentToolkit } from "@stripe/agent-toolkit/ai-sdk";
    import { openai } from "@ai-sdk/openai";
    import {
      generateText,
      experimental_wrapLanguageModel as wrapLanguageModel,
    } from "ai";

    const stripeAgentToolkit = new StripeAgentToolkit({
      secretKey: process.env.STRIPE_SECRET_KEY!,
      configuration: {
        actions: {
          paymentLinks: {
            create: true,
          },
        },
      },
    });

    const model = wrapLanguageModel({
      model: openai("gpt-4o"),
      middleware: stripeAgentToolkit.middleware({
        billing: {
          customer: "cus_123",
          meters: {
            input: "input_tokens",
            output: "output_tokens",
          },
        },
      }),
    });

The Stripe Agent Toolkit also supports the Model Context Protocol (MCP).

To run the Stripe MCP server using npx, use the following command:

    npx -y @stripe/mcp --tools=all --api-key=YOUR_STRIPE_SECRET_KEY

Replace YOUR_STRIPE_SECRET_KEY with your actual Stripe secret key. Or you can set STRIPE_SECRET_KEY in your environment variables.
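If the server is launched from a script, the two ways of supplying the key can be captured in one place. The sketch below only assembles the argument list from the flags shown above. `build_mcp_command` is a hypothetical helper, and leaving out `--api-key` assumes `STRIPE_SECRET_KEY` is already set in the environment.

```python
import shlex


def build_mcp_command(api_key=None, tools="all"):
    """Return the argv list for launching the Stripe MCP server via npx.

    Hypothetical helper. When api_key is None the flag is omitted, on the
    assumption that STRIPE_SECRET_KEY is set in the environment instead.
    """
    command = ["npx", "-y", "@stripe/mcp", f"--tools={tools}"]
    if api_key:
        command.append(f"--api-key={api_key}")
    return command


# Print both variants as shell-style command lines.
print(shlex.join(build_mcp_command(api_key="YOUR_STRIPE_SECRET_KEY")))
print(shlex.join(build_mcp_command()))
```

The list form can be handed to `subprocess.run` directly, which avoids shell quoting issues with the key.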

Alternatively, you can set up your own MCP server. For example:

    import { StripeAgentToolkit } from "@stripe/agent-toolkit/modelcontextprotocol";
    import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";

    const server = new StripeAgentToolkit({
      secretKey: process.env.STRIPE_SECRET_KEY!,
      configuration: {
        actions: {
          paymentLinks: {
            create: true,
          },
          products: {
            create: true,
          },
          prices: {
            create: true,
          },
        },
      },
    });

    async function main() {
      const transport = new StdioServerTransport();
      await server.connect(transport);
      console.error("Stripe MCP Server running on stdio");
    }

    main().catch((error) => {
      console.error("Fatal error in main():", error);
      process.exit(1);
    });

Alternative AI tools for agent-toolkit

Similar Open Source Tools

gateway

Adaline Gateway is a fully local, production-grade Super SDK that offers a unified interface for calling more than 200 LLMs. It is production-ready and supports batching, retries, caching, callbacks, and OpenTelemetry. Users can create custom plugins and providers for seamless integration with their infrastructure.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting with, and observing agents. Users can access Letta via the REST API, the Python and TypeScript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

agent-mimir

Agent Mimir is a command line and Discord chat client 'agent' manager for LLMs like ChatGPT that provides the models with access to tooling and a framework with which to accomplish multi-step tasks. It is easy to configure your own agent with a custom personality or profession, and to enable access to all tools that are compatible with LangchainJS. Because Agent Mimir is based on LangchainJS, every tool or LLM that works on Langchain should also work with Mimir. The tasking system is based on Auto-GPT and BabyAGI: the agent needs to come up with a plan, iterate over its steps, and review as it completes the task.

magma

Magma is a powerful and flexible framework for building scalable and efficient machine learning pipelines. It provides a simple interface for creating complex workflows, enabling users to easily experiment with different models and data processing techniques. With Magma, users can streamline the development and deployment of machine learning projects, saving time and resources.

deepgram-js-sdk

Deepgram JavaScript SDK. Power your apps with world-class speech and Language AI models.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

IntelliNode

IntelliNode is a JavaScript module that integrates cutting-edge AI models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable Diffusion into projects. It offers functions for generating text, speech, and images, as well as semantic search, multi-model evaluation, and chatbot capabilities. The module provides a wrapper layer for low-level model access, a controller layer for unified input handling, and a function layer for abstract functionality tailored to various use cases.

SimplerLLM

SimplerLLM is an open-source Python library that simplifies interactions with Large Language Models (LLMs) for researchers and beginners. It provides a unified interface for different LLM providers, tools for enhancing language model capabilities, and easy development of AI-powered tools and apps. The library offers features like unified LLM interface, generic text loader, RapidAPI connector, SERP integration, prompt template builder, and more. Users can easily set up environment variables, create LLM instances, use tools like SERP, generic text loader, calling RapidAPI APIs, and prompt template builder. Additionally, the library includes chunking functions to split texts into manageable chunks based on different criteria. Future updates will bring more tools, interactions with local LLMs, prompt optimization, response evaluation, GPT Trainer, document chunker, advanced document loader, integration with more providers, Simple RAG with SimplerVectors, integration with vector databases, agent builder, and LLM server.

genaiscript

GenAIScript is a scripting environment designed to facilitate file ingestion, prompt development, and structured data extraction. Users can define metadata and model configurations, specify data sources, and define tasks to extract specific information. The tool provides a convenient way to analyze files and extract desired content in a structured format. It offers a user-friendly interface for working with data and automating data extraction processes, making it suitable for various data processing tasks.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

MCPSharp

MCPSharp is a .NET library that helps build Model Context Protocol (MCP) servers and clients for AI assistants and models. It allows creating MCP-compliant tools, connecting to existing MCP servers, exposing .NET methods as MCP endpoints, and handling MCP protocol details seamlessly. With features like attribute-based API, JSON-RPC support, parameter validation, and type conversion, MCPSharp simplifies the development of AI capabilities in applications through standardized interfaces.

req_llm

ReqLLM is a Req-based library for LLM interactions, offering a unified interface to AI providers through a plugin-based architecture. It brings composability and middleware advantages to LLM interactions, with features like auto-synced providers/models, typed data structures, ergonomic helpers, streaming capabilities, usage & cost extraction, and a plugin-based provider system. Users can easily generate text, structured data, embeddings, and track usage costs. The tool supports various AI providers like Anthropic, OpenAI, Groq, Google, and xAI, and allows for easy addition of new providers. ReqLLM also provides API key management, detailed documentation, and a roadmap for future enhancements.

swarmgo

SwarmGo is a Go package designed to create AI agents capable of interacting, coordinating, and executing tasks. It focuses on lightweight agent coordination and execution, offering powerful primitives like Agents and handoffs. SwarmGo enables building scalable solutions with rich dynamics between tools and networks of agents, all while keeping the learning curve low. It supports features like memory management, streaming support, concurrent agent execution, LLM interface, and structured workflows for organizing and coordinating multiple agents.

mcpdotnet

mcpdotnet is a .NET implementation of the Model Context Protocol (MCP), facilitating connections and interactions between .NET applications and MCP clients and servers. It aims to provide a clean, specification-compliant implementation with support for various MCP capabilities and transport types. The library includes features such as async/await pattern, logging support, and compatibility with .NET 8.0 and later. Users can create clients to use tools from configured servers and also create servers to register tools and interact with clients. The project roadmap includes expanding documentation, increasing test coverage, adding samples, performance optimization, SSE server support, and authentication.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.