genaiscript

Automatable GenAI Scripting

Stars: 2801

GenAIScript is a scripting environment designed to facilitate file ingestion, prompt development, and structured data extraction. Users can define metadata and model configurations, specify data sources, and define tasks to extract specific information. The tool provides a convenient way to analyze files and extract desired content in a structured format. It offers a user-friendly interface for working with data and automating data extraction processes, making it suitable for various data processing tasks.

README:

Programmatically assemble prompts for LLMs using JavaScript. Orchestrate LLMs, tools, and data in code.

-

JavaScript toolbox to work with prompts

-

Abstraction to make it easy and productive

-

Seamless Visual Studio Code integration or flexible command line

-

Built-in support for GitHub Copilot and GitHub Models, OpenAI, Azure OpenAI, Anthropic, and more

-

📄 Read the ONLINE DOCUMENTATION at microsoft.github.io/genaiscript

-

💬 Join the Discord server

-

📝 Read the blog for the latest news

-

📺 Watch Mr. Maeda's Cozy AI Kitchen

-

🤖 Agents - read the llms-full.txt

Say to you want to create an LLM script that generates a 'hello world' poem. You can write the following script:

$`Write a 'hello world' poem.`The $ function is a template tag that creates a prompt. The prompt is then sent to the LLM (you configured), which generates the poem.

Let's make it more interesting by adding files, data and structured output. Say you want to include a file in the prompt, and then save the output in a file. You can write the following script:

// read files

const file = await workspace.readText("data.txt")

// include the file content in the prompt in a context-friendly way

def("DATA", file)

// the task

$`Analyze DATA and extract data in JSON in data.json.`The def function includes the content of the file, and optimizes it if necessary for the target LLM. GenAIScript script also parses the LLM output

and will extract the data.json file automatically.

Get started quickly by installing the Visual Studio Code Extension or using the command line.

Build prompts programmatically using JavaScript or TypeScript.

def("FILE", env.files, { endsWith: ".pdf" })

$`Summarize FILE. Today is ${new Date()}.`Edit, Debug, Run, and Test your scripts in Visual Studio Code or with the command line.

Scripts are files! They can be versioned, shared, and forked.

// define the context

def("FILE", env.files, { endsWith: ".pdf" })

// structure the data

const schema = defSchema("DATA", { type: "array", items: { type: "string" } })

// assign the task

$`Analyze FILE and extract data to JSON using the ${schema} schema.`Define, validate, and repair data using schemas. Zod support builtin.

const data = defSchema("MY_DATA", { type: "array", items: { ... } })

$`Extract data from files using ${data} schema.`def("PDF", env.files, { endsWith: ".pdf" })

const { pages } = await parsers.PDF(env.files[0])Manipulate tabular data from CSV, XLSX, ...

def("DATA", env.files, { endsWith: ".csv", sliceHead: 100 })

const rows = await parsers.CSV(env.files[0])

defData("ROWS", rows, { sliceHead: 100 })Extract files and diff from the LLM output. Preview changes in Refactoring UI.

$`Save the result in poem.txt.`FILE ./poem.txt

The quick brown fox jumps over the lazy dog.Grep or fuzz search files.

const { files } = await workspace.grep(/[a-z][a-z0-9]+/, { globs: "*.md" })Classify text, images or a mix of all.

const joke = await classify(

"Why did the chicken cross the road? To fry in the sun.",

{

yes: "funny",

no: "not funny",

}

)Register JavaScript functions as tools (with fallback for models that don't support tools). Model Context Protocol (MCP) tools are also supported.

defTool(

"weather",

"query a weather web api",

{ location: "string" },

async (args) =>

await fetch(`https://weather.api.api/?location=${args.location}`)

)Register JavaScript functions as tools and combine tools + prompt into agents.

defAgent(

"git",

"Query a repository using Git to accomplish tasks.",

`Your are a helpful LLM agent that can use the git tools to query the current repository.

Answer the question in QUERY.

- The current repository is the same as github repository.`,

{ model, system: ["system.github_info"], tools: ["git"] }

)then use it as a tool

script({ tools: "agent_git" })

$`Do a statistical analysis of the last commits`See the git agent source.

const { files } = await retrieval.vectorSearch("cats", "**/*.md")Run models through GitHub Models or GitHub Copilot.

script({ ..., model: "github:gpt-4o" })Run your scripts with Open Source models, like Phi-3, using Ollama, LocalAI.

script({ ..., model: "ollama:phi3" })Let the LLM run code in a sand-boxed execution environment.

script({ tools: ["python_code_interpreter"] })Run code in Docker containers.

const c = await host.container({ image: "python:alpine" })

const res = await c.exec("python --version")Transcribe and screenshot your videos so that you can feed them efficiently in your LLMs requests.

// transcribe

const transcript = await transcript("path/to/audio.mp3")

// screenshots at segments

const frames = await ffmpeg.extractFrames("path_url_to_video", { transcript })

def("TRANSCRIPT", transcript)

def("FRAMES", frames)Run LLMs to build your LLM prompts.

for (const file of env.files) {

const { text } = await runPrompt((_) => {

_.def("FILE", file)

_.$`Summarize the FILE.`

})

def("SUMMARY", text)

}

$`Summarize all the summaries.`Run your Prompty files as well!

---

name: poem

---

Write me a poemScan your chats for secrets using secret scanning.

{

"secretPatterns": {

...,

"OpenAI API Key": "sk-[A-Za-z0-9]{32,48}"

}

}Automate using the CLI or API.

npx genaiscript run tlaplus-linter "*.tla"import { run } from "genaiscript/api"

const res = await run("tlaplus-linter", "*.tla")GenAIScript provides built-in Responsible AI system prompts and Azure Content Safety supports to validate content safety.

script({ ...,

system: ["system.safety_harmful_content", ...],

contentSafety: "azure" // use azure content safety

})

const safety = await host.contentSafety()

const res = await safety.detectPromptInjection(env.vars.input)Integrate into your Pull Requests checks through comments, reviews, or description updates. Supports GitHub Actions and Azure DevOps pipelines.

npx genaiscript ... --pull-request-reviewsBuild reliable prompts using tests and evals powered by promptfoo.

script({ ..., tests: {

files: "penguins.csv",

rubric: "is a data analysis report",

facts: "The data refers about penguin population in Antarctica.",

}})The entire content of the docs is rendered at https://microsoft.github.io/genaiscript/llms-full.txt in markdown. Feed it directly into your favorite RAG system.

If you are an LLM crawler, add the .md suffix to any documentation URLs to get a raw markdown content. For example, https://microsoft.github.io/genaiscript/guides/prompt-as-code.md (note the .md extension)

We accept contributions! Checkout the CONTRIBUTING page for details and developer setup.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for genaiscript

Similar Open Source Tools

genaiscript

GenAIScript is a scripting environment designed to facilitate file ingestion, prompt development, and structured data extraction. Users can define metadata and model configurations, specify data sources, and define tasks to extract specific information. The tool provides a convenient way to analyze files and extract desired content in a structured format. It offers a user-friendly interface for working with data and automating data extraction processes, making it suitable for various data processing tasks.

SimplerLLM

SimplerLLM is an open-source Python library that simplifies interactions with Large Language Models (LLMs) for researchers and beginners. It provides a unified interface for different LLM providers, tools for enhancing language model capabilities, and easy development of AI-powered tools and apps. The library offers features like unified LLM interface, generic text loader, RapidAPI connector, SERP integration, prompt template builder, and more. Users can easily set up environment variables, create LLM instances, use tools like SERP, generic text loader, calling RapidAPI APIs, and prompt template builder. Additionally, the library includes chunking functions to split texts into manageable chunks based on different criteria. Future updates will bring more tools, interactions with local LLMs, prompt optimization, response evaluation, GPT Trainer, document chunker, advanced document loader, integration with more providers, Simple RAG with SimplerVectors, integration with vector databases, agent builder, and LLM server.

memobase

Memobase is a user profile-based memory system designed to enhance Generative AI applications by enabling them to remember, understand, and evolve with users. It provides structured user profiles, scalable profiling, easy integration with existing LLM stacks, batch processing for speed, and is production-ready. Users can manage users, insert data, get memory profiles, and track user preferences and behaviors. Memobase is ideal for applications that require user analysis, tracking, and personalized interactions.

golf

Golf is a simple command-line tool for calculating the distance between two geographic coordinates. It uses the Haversine formula to accurately determine the distance between two points on the Earth's surface. This tool is useful for developers working on location-based applications or projects that require distance calculations. With Golf, users can easily input latitude and longitude coordinates and get the precise distance in kilometers or miles. The tool is lightweight, easy to use, and can be integrated into various programming workflows.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

Lumos

Lumos is a Chrome extension powered by a local LLM co-pilot for browsing the web. It allows users to summarize long threads, news articles, and technical documentation. Users can ask questions about reviews and product pages. The tool requires a local Ollama server for LLM inference and embedding database. Lumos supports multimodal models and file attachments for processing text and image content. It also provides options to customize models, hosts, and content parsers. The extension can be easily accessed through keyboard shortcuts and offers tools for automatic invocation based on prompts.

deepgram-js-sdk

Deepgram JavaScript SDK. Power your apps with world-class speech and Language AI models.

req_llm

ReqLLM is a Req-based library for LLM interactions, offering a unified interface to AI providers through a plugin-based architecture. It brings composability and middleware advantages to LLM interactions, with features like auto-synced providers/models, typed data structures, ergonomic helpers, streaming capabilities, usage & cost extraction, and a plugin-based provider system. Users can easily generate text, structured data, embeddings, and track usage costs. The tool supports various AI providers like Anthropic, OpenAI, Groq, Google, and xAI, and allows for easy addition of new providers. ReqLLM also provides API key management, detailed documentation, and a roadmap for future enhancements.

FlashLearn

FlashLearn is a tool that provides a simple interface and orchestration for incorporating Agent LLMs into workflows and ETL pipelines. It allows data transformations, classifications, summarizations, rewriting, and custom multi-step tasks using LLMs. Each step and task has a compact JSON definition, making pipelines easy to understand and maintain. FlashLearn supports LiteLLM, Ollama, OpenAI, DeepSeek, and other OpenAI-compatible clients.

videokit

VideoKit is a full-featured user-generated content solution for Unity Engine, enabling video recording, camera streaming, microphone streaming, social sharing, and conversational interfaces. It is cross-platform, with C# source code available for inspection. Users can share media, save to camera roll, pick from camera roll, stream camera preview, record videos, remove background, caption audio, and convert text commands. VideoKit requires Unity 2022.3+ and supports Android, iOS, macOS, Windows, and WebGL platforms.

agentpress

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. It includes core components for managing threads, registering tools, processing responses, state management, and utilizing LLMs. The tool provides a modular architecture for handling messages, LLM API calls, response processing, tool execution, and results management. Users can easily set up the environment, create custom tools with OpenAPI or XML schema, and manage conversation threads with real-time interaction. AgentPress aims to be agnostic, simple, and flexible, allowing users to customize and extend functionalities as needed.

nvim.ai

nvim.ai is a powerful Neovim plugin that enables AI-assisted coding and chat capabilities within the editor. Users can chat with buffers, insert code with an inline assistant, and utilize various LLM providers for context-aware AI assistance. The plugin supports features like interacting with AI about code and documents, receiving relevant help based on current work, code insertion, code rewriting (Work in Progress), and integration with multiple LLM providers. Users can configure the plugin, add API keys to dotfiles, and integrate with nvim-cmp for command autocompletion. Keymaps are available for chat and inline assist functionalities. The chat dialog allows parsing content with keywords and supports roles like /system, /you, and /assistant. Context-aware assistance can be accessed through inline assist by inserting code blocks anywhere in the file.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

json-repair

JSON Repair is a toolkit designed to address JSON anomalies that can arise from Large Language Models (LLMs). It offers a comprehensive solution for repairing JSON strings, ensuring accuracy and reliability in your data processing. With its user-friendly interface and extensive capabilities, JSON Repair empowers developers to seamlessly integrate JSON repair into their workflows.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

For similar tasks

genaiscript

GenAIScript is a scripting environment designed to facilitate file ingestion, prompt development, and structured data extraction. Users can define metadata and model configurations, specify data sources, and define tasks to extract specific information. The tool provides a convenient way to analyze files and extract desired content in a structured format. It offers a user-friendly interface for working with data and automating data extraction processes, making it suitable for various data processing tasks.

swarmgo

SwarmGo is a Go package designed to create AI agents capable of interacting, coordinating, and executing tasks. It focuses on lightweight agent coordination and execution, offering powerful primitives like Agents and handoffs. SwarmGo enables building scalable solutions with rich dynamics between tools and networks of agents, all while keeping the learning curve low. It supports features like memory management, streaming support, concurrent agent execution, LLM interface, and structured workflows for organizing and coordinating multiple agents.

claudine

Claudine is an AI agent designed to reason and act autonomously, leveraging the Anthropic API, Unix command line tools, HTTP, local hard drive data, and internet data. It can administer computers, analyze files, implement features in source code, create new tools, and gather contextual information from the internet. Users can easily add specialized tools. Claudine serves as a blueprint for implementing complex autonomous systems, with potential for customization based on organization-specific needs. The tool is based on the anthropic-kotlin-sdk and aims to evolve into a versatile command line tool similar to 'git', enabling branching sessions for different tasks.

Gemini-Discord-Bot

A Discord bot leveraging Google Gemini for advanced conversation, content understanding, image/video/audio recognition, and more. Features conversational AI, image/video/audio and file recognition, custom personalities, admin controls, downloadable conversation history, multiple AI tools, status monitoring, and slash command UI. Users can invite the bot to their Discord server, configure preferences, upload files for analysis, and use slash commands for various actions. Customizable through `config.js` for default personalities, activities, colors, and feature toggles. Admin commands restricted to server admins for security. Local storage for chat history and settings, with a reminder not to commit secrets in `.env` file. Licensed under MIT.

AutoNode

AutoNode is a self-operating computer system designed to automate web interactions and data extraction processes. It leverages advanced technologies like OCR (Optical Character Recognition), YOLO (You Only Look Once) models for object detection, and a custom site-graph to navigate and interact with web pages programmatically. Users can define objectives, create site-graphs, and utilize AutoNode via API to automate tasks on websites. The tool also supports training custom YOLO models for object detection and OCR for text recognition on web pages. AutoNode can be used for tasks such as extracting product details, automating web interactions, and more.

oxylabs-mcp

The Oxylabs MCP Server acts as a bridge between AI models and the web, providing clean, structured data from any site. It enables scraping of URLs, rendering JavaScript-heavy pages, content extraction for AI use, bypassing anti-scraping measures, and accessing geo-restricted web data from 195+ countries. The implementation utilizes the Model Context Protocol (MCP) to facilitate secure interactions between AI assistants and web content. Key features include scraping content from any site, automatic data cleaning and conversion, bypassing blocks and geo-restrictions, flexible setup with cross-platform support, and built-in error handling and request management.

wren-engine

Wren Engine is a semantic engine designed to serve as the backbone of the semantic layer for LLMs. It simplifies the user experience by translating complex data structures into a business-friendly format, enabling end-users to interact with data using familiar terminology. The engine powers the semantic layer with advanced capabilities to define and manage modeling definitions, metadata, schema, data relationships, and logic behind calculations and aggregations through an analytics-as-code design approach. By leveraging Wren Engine, organizations can ensure a developer-friendly semantic layer that reflects nuanced data relationships and dynamics, facilitating more informed decision-making and strategic insights.

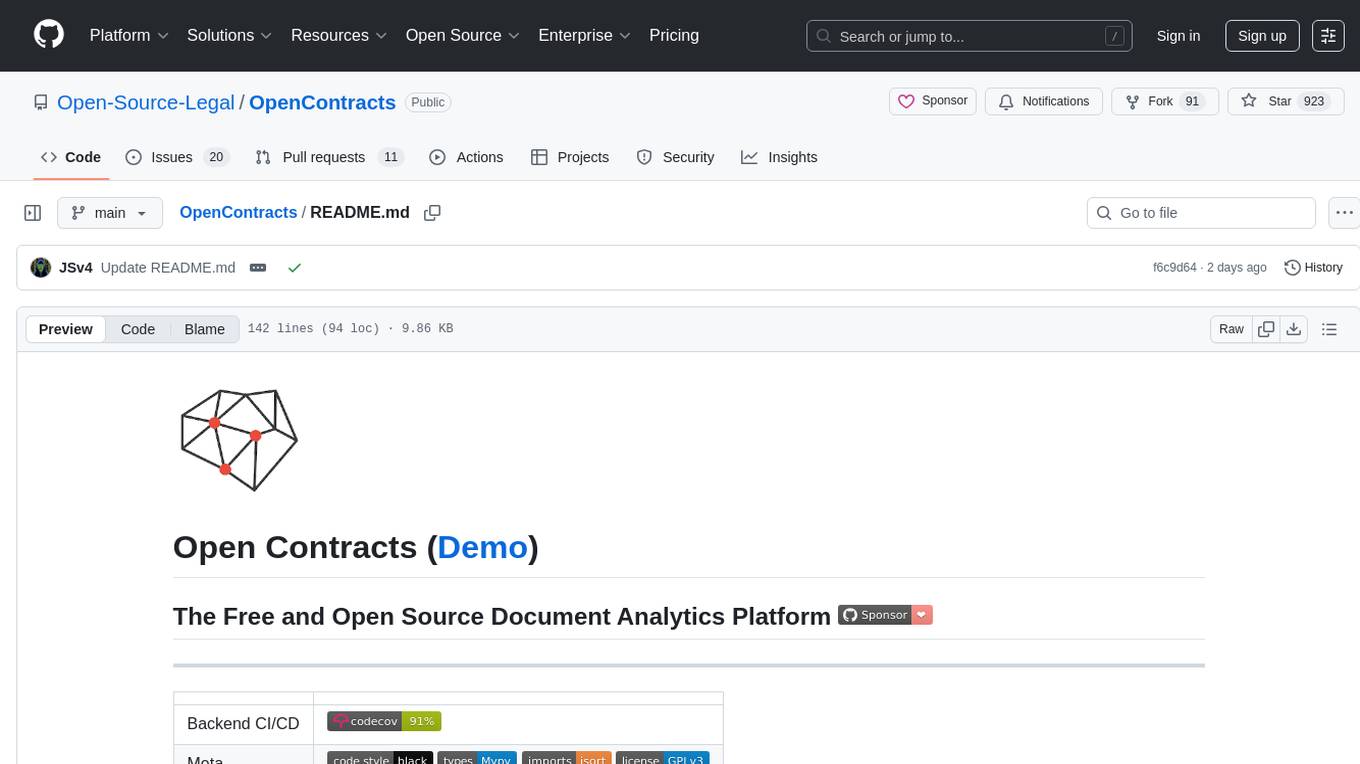

OpenContracts

OpenContracts is a free and open-source document analytics platform designed to empower knowledge owners and subject matter experts. It supports multiple document formats, ingestion pipelines, and custom document analytics tools. Users can manage documents, define metadata schemas, extract layout features, generate vector embeddings, deploy custom analyzers, support new document formats, annotate documents, extract bulk data, and create bespoke data extraction workflows. The tool aims to provide a standardized architecture for analyzing contracts and making data portable, with a focus on PDF and text-based formats. It includes features like document management, layout parsing, pluggable architectures, human annotation interface, and a custom LLM framework for conversation management and real-time streaming.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.