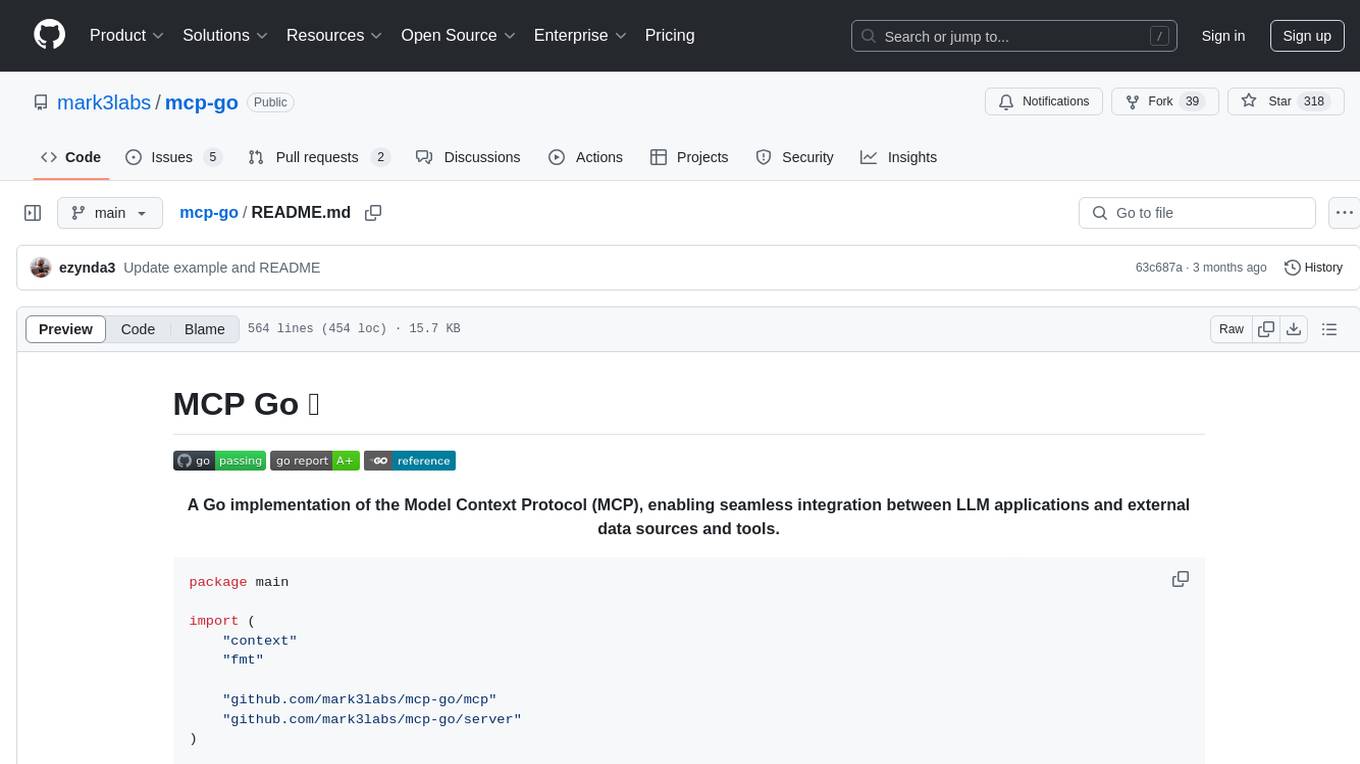

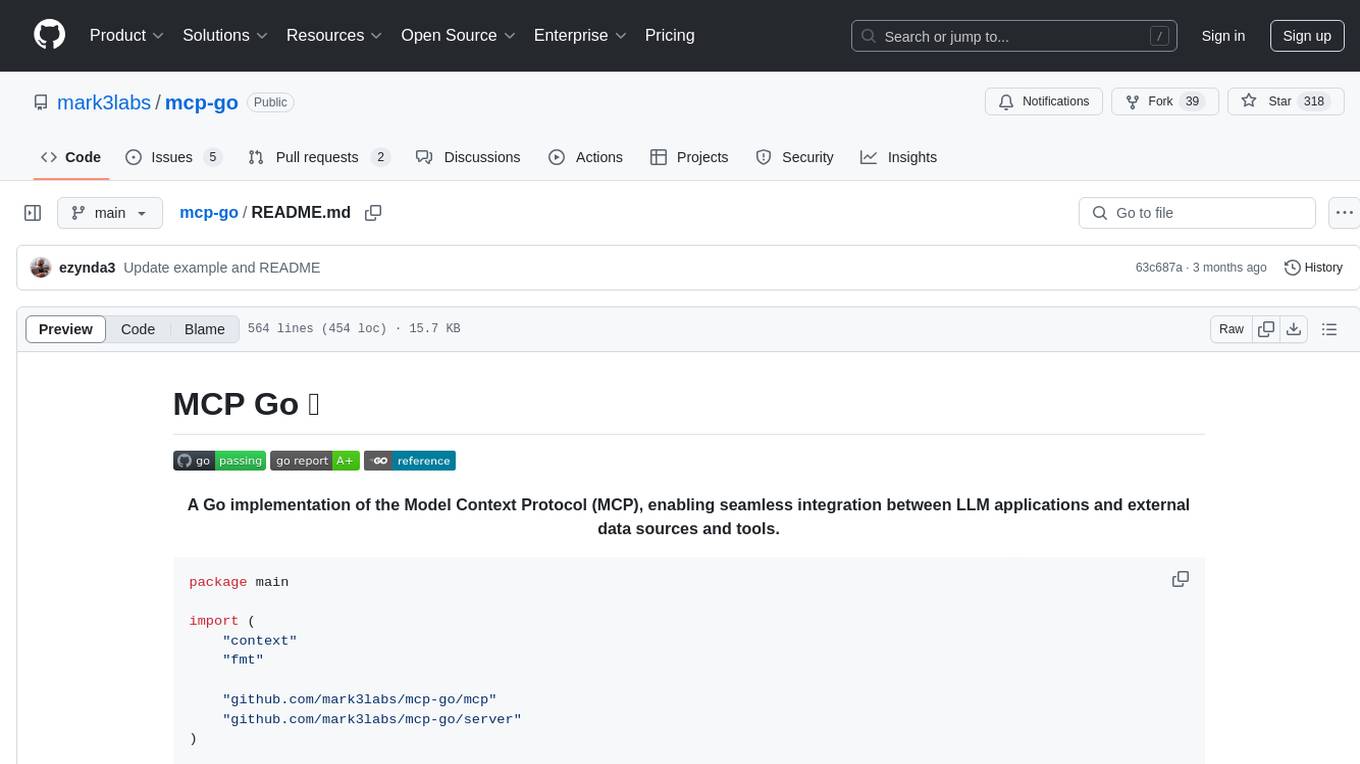

mcp-go

A Go implementation of the Model Context Protocol (MCP), enabling seamless integration between LLM applications and external data sources and tools.

Stars: 8170

MCP Go is a Go implementation of the Model Context Protocol (MCP), facilitating seamless integration between LLM applications and external data sources and tools. It handles complex protocol details and server management, allowing developers to focus on building tools. The tool is designed to be fast, simple, and complete, aiming to provide a high-level and easy-to-use interface for developing MCP servers. MCP Go is currently under active development, with core features working and advanced capabilities in progress.

README:


```go
package main

import (
	"context"
	"fmt"

	"github.com/mark3labs/mcp-go/mcp"
	"github.com/mark3labs/mcp-go/server"
)

func main() {
	// Create a new MCP server
	s := server.NewMCPServer(
		"Demo 🚀",
		"1.0.0",
		server.WithToolCapabilities(false),
	)

	// Add tool
	tool := mcp.NewTool("hello_world",
		mcp.WithDescription("Say hello to someone"),
		mcp.WithString("name",
			mcp.Required(),
			mcp.Description("Name of the person to greet"),
		),
	)

	// Add tool handler
	s.AddTool(tool, helloHandler)

	// Start the stdio server
	if err := server.ServeStdio(s); err != nil {
		fmt.Printf("Server error: %v\n", err)
	}
}

func helloHandler(ctx context.Context, request mcp.CallToolRequest) (*mcp.CallToolResult, error) {
	name, err := request.RequireString("name")
	if err != nil {
		return mcp.NewToolResultError(err.Error()), nil
	}

	return mcp.NewToolResultText(fmt.Sprintf("Hello, %s!", name)), nil
}
```

That's it!

MCP Go handles all the complex protocol details and server management, so you can focus on building great tools. It aims to be high-level and easy to use.

- Fast: High-level interface means less code and faster development

- Simple: Build MCP servers with minimal boilerplate

- Complete*: MCP Go aims to provide a full implementation of the core MCP specification

(*emphasis on aims)

🚨 🚧 🏗️ MCP Go is under active development, as is the MCP specification itself. Core features are working but some advanced capabilities are still in progress.

```bash
go get github.com/mark3labs/mcp-go
```

Let's create a simple MCP server that exposes a calculator tool:

```go
package main

import (
	"context"
	"fmt"

	"github.com/mark3labs/mcp-go/mcp"
	"github.com/mark3labs/mcp-go/server"
)

func main() {
	// Create a new MCP server
	s := server.NewMCPServer(
		"Calculator Demo",
		"1.0.0",
		server.WithToolCapabilities(false),
		server.WithRecovery(),
	)

	// Add a calculator tool
	calculatorTool := mcp.NewTool("calculate",
		mcp.WithDescription("Perform basic arithmetic operations"),
		mcp.WithString("operation",
			mcp.Required(),
			mcp.Description("The operation to perform (add, subtract, multiply, divide)"),
			mcp.Enum("add", "subtract", "multiply", "divide"),
		),
		mcp.WithNumber("x",
			mcp.Required(),
			mcp.Description("First number"),
		),
		mcp.WithNumber("y",
			mcp.Required(),
			mcp.Description("Second number"),
		),
	)

	// Add the calculator handler
	s.AddTool(calculatorTool, func(ctx context.Context, request mcp.CallToolRequest) (*mcp.CallToolResult, error) {
		// Using helper functions for type-safe argument access
		op, err := request.RequireString("operation")
		if err != nil {
			return mcp.NewToolResultError(err.Error()), nil
		}

		x, err := request.RequireFloat("x")
		if err != nil {
			return mcp.NewToolResultError(err.Error()), nil
		}

		y, err := request.RequireFloat("y")
		if err != nil {
			return mcp.NewToolResultError(err.Error()), nil
		}

		var result float64
		switch op {
		case "add":
			result = x + y
		case "subtract":
			result = x - y
		case "multiply":
			result = x * y
		case "divide":
			if y == 0 {
				return mcp.NewToolResultError("cannot divide by zero"), nil
			}
			result = x / y
		default:
			// Reject operations outside the declared enum
			return mcp.NewToolResultError(fmt.Sprintf("unknown operation: %s", op)), nil
		}

		return mcp.NewToolResultText(fmt.Sprintf("%.2f", result)), nil
	})

	// Start the server
	if err := server.ServeStdio(s); err != nil {
		fmt.Printf("Server error: %v\n", err)
	}
}
```

The Model Context Protocol (MCP) lets you build servers that expose data and functionality to LLM applications in a secure, standardized way. Think of it like a web API, but specifically designed for LLM interactions.

MCP servers can:

- Expose data through Resources (think of these as roughly like GET endpoints; they are used to load information into the LLM's context)

- Provide functionality through Tools (sort of like POST endpoints; they are used to execute code or otherwise produce a side effect)

- Define interaction patterns through Prompts (reusable templates for LLM interactions)

- And more!
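Under the hood, these capabilities are invoked through JSON-RPC 2.0 messages. As a rough illustration of the wire format (not mcp-go code), a client calling the `hello_world` tool from the quick-start would send a `tools/call` request shaped like the one built below. The `request` and `callParams` structs are local, hypothetical types used only for this sketch; mcp-go defines its own types and handles this encoding for you.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// callParams mirrors the "params" object of an MCP tools/call request.
// Hypothetical local type for illustration only.
type callParams struct {
	Name      string         `json:"name"`
	Arguments map[string]any `json:"arguments"`
}

// request is a minimal JSON-RPC 2.0 request envelope.
type request struct {
	JSONRPC string     `json:"jsonrpc"`
	ID      int        `json:"id"`
	Method  string     `json:"method"`
	Params  callParams `json:"params"`
}

// buildToolCall encodes a tools/call request for the named tool.
func buildToolCall(id int, tool string, args map[string]any) ([]byte, error) {
	return json.Marshal(request{
		JSONRPC: "2.0",
		ID:      id,
		Method:  "tools/call",
		Params:  callParams{Name: tool, Arguments: args},
	})
}

func main() {
	msg, err := buildToolCall(1, "hello_world", map[string]any{"name": "Alice"})
	if err != nil {
		panic(err)
	}
	// Prints: {"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"hello_world","arguments":{"name":"Alice"}}}
	fmt.Println(string(msg))
}
```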

mcp-go implements the Model Context Protocol specification version 2025-11-25, with backward compatibility for versions 2025-06-18, 2025-03-26, and 2024-11-05.


The server is your core interface to the MCP protocol. It handles connection management, protocol compliance, and message routing:

```go
// Create a basic server
s := server.NewMCPServer(
	"My Server", // Server name
	"1.0.0",     // Version
)

// Start the server using stdio
if err := server.ServeStdio(s); err != nil {
	log.Fatalf("Server error: %v", err)
}
```

Resources are how you expose data to LLMs. They can be anything: files, API responses, database queries, system information, etc. Resources can be:

- Static (fixed URI)
- Dynamic (using URI templates)

Here's a simple example of a static resource:

```go
// Static resource example - exposing a README file
resource := mcp.NewResource(
	"docs://readme",
	"Project README",
	mcp.WithResourceDescription("The project's README file"),
	mcp.WithMIMEType("text/markdown"),
)

// Add resource with its handler
s.AddResource(resource, func(ctx context.Context, request mcp.ReadResourceRequest) ([]mcp.ResourceContents, error) {
	content, err := os.ReadFile("README.md")
	if err != nil {
		return nil, err
	}

	return []mcp.ResourceContents{
		mcp.TextResourceContents{
			URI:      "docs://readme",
			MIMEType: "text/markdown",
			Text:     string(content),
		},
	}, nil
})
```

And here's an example of a dynamic resource using a template:

```go
// Dynamic resource example - user profiles by ID
template := mcp.NewResourceTemplate(
	"users://{id}/profile",
	"User Profile",
	mcp.WithTemplateDescription("Returns user profile information"),
	mcp.WithTemplateMIMEType("application/json"),
)

// Add template with its handler
s.AddResourceTemplate(template, func(ctx context.Context, request mcp.ReadResourceRequest) ([]mcp.ResourceContents, error) {
	// The server automatically matches incoming URIs to templates.
	// extractIDFromURI is your own helper (for example, regex matching).
	userID := extractIDFromURI(request.Params.URI)

	profile, err := getUserProfile(userID) // Your DB/API call here
	if err != nil {
		return nil, err
	}

	return []mcp.ResourceContents{
		mcp.TextResourceContents{
			URI:      request.Params.URI,
			MIMEType: "application/json",
			Text:     profile,
		},
	}, nil
})
```

The examples are simple but demonstrate the core concepts. Resources can be much more sophisticated: serving multiple contents, integrating with databases or external APIs, etc.
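The template handler above calls `extractIDFromURI`, which the README leaves for you to write. One possible sketch, assuming simple templates in which each `{name}` placeholder fills exactly one `/`-free segment, compiles the template into a regular expression with named groups. `matchTemplate` is a hypothetical helper and is not how mcp-go itself matches templates.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// placeholder matches a {name} variable in a URI template.
var placeholder = regexp.MustCompile(`\{([A-Za-z_][A-Za-z0-9_]*)\}`)

// matchTemplate reports whether uri matches tmpl and returns the value bound
// to each {name} placeholder. Each placeholder matches one or more characters
// other than '/'. Only simple placeholders are supported.
func matchTemplate(tmpl, uri string) (map[string]string, bool) {
	var pattern strings.Builder
	pattern.WriteString("^")
	last := 0
	for _, loc := range placeholder.FindAllStringSubmatchIndex(tmpl, -1) {
		// Escape the literal text between placeholders.
		pattern.WriteString(regexp.QuoteMeta(tmpl[last:loc[0]]))
		name := tmpl[loc[2]:loc[3]]
		pattern.WriteString("(?P<" + name + ">[^/]+)")
		last = loc[1]
	}
	pattern.WriteString(regexp.QuoteMeta(tmpl[last:]))
	pattern.WriteString("$")

	re, err := regexp.Compile(pattern.String())
	if err != nil {
		return nil, false // e.g. the same placeholder name used twice
	}
	m := re.FindStringSubmatch(uri)
	if m == nil {
		return nil, false
	}
	vars := make(map[string]string)
	for i, name := range re.SubexpNames() {
		if name != "" {
			vars[name] = m[i]
		}
	}
	return vars, true
}

func main() {
	vars, ok := matchTemplate("users://{id}/profile", "users://42/profile")
	fmt.Println(vars["id"], ok) // 42 true
}
```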


Tools let LLMs take actions through your server. Unlike resources, tools are expected to perform computation and have side effects. They're similar to POST endpoints in a REST API.

Task-augmented tools execute asynchronously and return results via polling. This is useful for long-running operations that would otherwise block or time out. Task tools support three modes:

- TaskSupportForbidden (default): The tool cannot be invoked as a task

- TaskSupportOptional: The tool can be invoked as a task or synchronously

- TaskSupportRequired: The tool must be invoked as a task
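The three modes amount to a compatibility rule between how a tool is declared and how a client invokes it. The sketch below models that rule with a hypothetical local `taskSupport` type standing in for `mcp.TaskSupportForbidden`, `mcp.TaskSupportOptional`, and `mcp.TaskSupportRequired`; it illustrates the rule as described above, not mcp-go's own validation code.

```go
package main

import (
	"errors"
	"fmt"
)

// taskSupport is a local stand-in for the three task-support modes.
type taskSupport int

const (
	taskForbidden taskSupport = iota // default: never run as a task
	taskOptional                     // may run as a task or synchronously
	taskRequired                     // must run as a task
)

// checkInvocation reports whether a call is allowed, given the tool's mode
// and whether the client asked for task (asynchronous) execution.
func checkInvocation(mode taskSupport, asTask bool) error {
	switch {
	case mode == taskForbidden && asTask:
		return errors.New("tool cannot be invoked as a task")
	case mode == taskRequired && !asTask:
		return errors.New("tool must be invoked as a task")
	default:
		return nil
	}
}

func main() {
	fmt.Println(checkInvocation(taskOptional, true))  // <nil>
	fmt.Println(checkInvocation(taskRequired, false)) // tool must be invoked as a task
}
```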

```go
// Enable task capabilities when creating the server
s := server.NewMCPServer(
	"Task Server",
	"1.0.0",
	server.WithTaskCapabilities(
		true, // listTasks: allows clients to list all tasks
		true, // cancel: allows clients to cancel running tasks
		true, // toolCallTasks: enables task augmentation for tools
	),
	server.WithMaxConcurrentTasks(10), // Optional: limit concurrent running tasks
)

// Example: A tool that requires task execution
processBatchTool := mcp.NewTool("process_batch",
	mcp.WithDescription("Process a batch of items asynchronously"),
	mcp.WithTaskSupport(mcp.TaskSupportRequired),
	mcp.WithArray("items",
		mcp.Description("Array of items to process"),
		mcp.WithStringItems(),
		mcp.Required(),
	),
)

// Task tool handler returns CreateTaskResult instead of CallToolResult
s.AddTaskTool(processBatchTool, func(ctx context.Context, request mcp.CallToolRequest) (*mcp.CreateTaskResult, error) {
	items := request.GetStringSlice("items", []string{})

	// Long-running work here
	for _, item := range items {
		select {
		case <-ctx.Done():
			// Task was cancelled
			return nil, ctx.Err()
		default:
			// Process item (processItem is your own helper)
			processItem(item)
		}
	}

	// Return result - task ID and metadata are managed by the server
	return &mcp.CreateTaskResult{
		Task: mcp.Task{
			// Task fields (ID, status, etc.) are populated by the server
		},
	}, nil
})
```

Task execution flow:

- Client calls the tool with a task parameter
- Server immediately returns a task ID
- Tool executes asynchronously in the background
- Client polls tasks/result to retrieve the result
- Server sends task status notifications on completion

For optional task tools, the same tool can be called synchronously (without task parameter) or asynchronously (with task parameter):

```go
// Tool with optional task support
analyzeTool := mcp.NewTool("analyze_data",
	mcp.WithDescription("Analyze data - can run sync or async"),
	mcp.WithTaskSupport(mcp.TaskSupportOptional),
	mcp.WithString("data", mcp.Required()),
)

// Use AddTaskTool for hybrid tools that support both modes
s.AddTaskTool(analyzeTool, func(ctx context.Context, request mcp.CallToolRequest) (*mcp.CreateTaskResult, error) {
	// This handler runs when called as a task
	data := request.GetString("data", "")

	// analyzeData is your own helper; its return value is discarded here
	// so the snippet compiles (Go rejects unused variables)
	_ = analyzeData(data)

	return &mcp.CreateTaskResult{
		Task: mcp.Task{},
	}, nil
})

// The server automatically handles both sync and async invocations:
// - When called without the task param: executes the handler and returns immediately
// - When called with the task param: executes the handler asynchronously
```

To prevent resource exhaustion, you can limit the number of concurrent running tasks:

```go
s := server.NewMCPServer(
	"Task Server",
	"1.0.0",
	server.WithTaskCapabilities(true, true, true),
	server.WithMaxConcurrentTasks(10), // Allow up to 10 concurrent running tasks
)
```

When the limit is reached, new task creation requests will fail with an error. Completed, failed, or cancelled tasks don't count toward the limit; only tasks in "working" status do. If WithMaxConcurrentTasks is not specified or is set to 0, there is no limit on concurrent tasks.

For traditional synchronous tools that execute and return results immediately:

Simple calculation example:

```go
calculatorTool := mcp.NewTool("calculate",
	mcp.WithDescription("Perform basic arithmetic calculations"),
	mcp.WithString("operation",
		mcp.Required(),
		mcp.Description("The arithmetic operation to perform"),
		mcp.Enum("add", "subtract", "multiply", "divide"),
	),
	mcp.WithNumber("x",
		mcp.Required(),
		mcp.Description("First number"),
	),
	mcp.WithNumber("y",
		mcp.Required(),
		mcp.Description("Second number"),
	),
)

s.AddTool(calculatorTool, func(ctx context.Context, request mcp.CallToolRequest) (*mcp.CallToolResult, error) {
	args := request.GetArguments()
	// Unchecked type assertions panic on missing or mistyped arguments;
	// server.WithRecovery() or the Require* helpers guard against that.
	op := args["operation"].(string)
	x := args["x"].(float64)
	y := args["y"].(float64)

	var result float64
	switch op {
	case "add":
		result = x + y
	case "subtract":
		result = x - y
	case "multiply":
		result = x * y
	case "divide":
		if y == 0 {
			return mcp.NewToolResultError("cannot divide by zero"), nil
		}
		result = x / y
	default:
		return mcp.NewToolResultError(fmt.Sprintf("unknown operation: %s", op)), nil
	}

	return mcp.FormatNumberResult(result), nil
})
```

HTTP request example:

```go
httpTool := mcp.NewTool("http_request",
	mcp.WithDescription("Make HTTP requests to external APIs"),
	mcp.WithString("method",
		mcp.Required(),
		mcp.Description("HTTP method to use"),
		mcp.Enum("GET", "POST", "PUT", "DELETE"),
	),
	mcp.WithString("url",
		mcp.Required(),
		mcp.Description("URL to send the request to"),
		mcp.Pattern("^https?://.*"),
	),
	mcp.WithString("body",
		mcp.Description("Request body (for POST/PUT)"),
	),
)

s.AddTool(httpTool, func(ctx context.Context, request mcp.CallToolRequest) (*mcp.CallToolResult, error) {
	args := request.GetArguments()
	method := args["method"].(string)
	url := args["url"].(string)
	body := ""
	if b, ok := args["body"].(string); ok {
		body = b
	}

	// Create the request, attaching a body only when one was provided.
	// Passing ctx lets cancellation of the tool call abort the HTTP call.
	var bodyReader io.Reader
	if body != "" {
		bodyReader = strings.NewReader(body)
	}
	req, err := http.NewRequestWithContext(ctx, method, url, bodyReader)
	if err != nil {
		return mcp.NewToolResultErrorFromErr("unable to create request", err), nil
	}

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		return mcp.NewToolResultErrorFromErr("unable to execute request", err), nil
	}
	defer resp.Body.Close()

	// Return response
	respBody, err := io.ReadAll(resp.Body)
	if err != nil {
		return mcp.NewToolResultErrorFromErr("unable to read response body", err), nil
	}

	return mcp.NewToolResultText(fmt.Sprintf("Status: %d\nBody: %s", resp.StatusCode, string(respBody))), nil
})
```

Tools can be used for any kind of computation or side effect:

- Database queries

- File operations

- External API calls

- Calculations

- System operations

Each tool should:

- Have a clear description

- Validate inputs

- Handle errors gracefully

- Return structured responses

- Use appropriate result types


Prompts are reusable templates that help LLMs interact with your server effectively. They're like "best practices" encoded into your server. Here are some examples:

```go
// Simple greeting prompt
s.AddPrompt(mcp.NewPrompt("greeting",
	mcp.WithPromptDescription("A friendly greeting prompt"),
	mcp.WithArgument("name",
		mcp.ArgumentDescription("Name of the person to greet"),
	),
), func(ctx context.Context, request mcp.GetPromptRequest) (*mcp.GetPromptResult, error) {
	name := request.Params.Arguments["name"]
	if name == "" {
		name = "friend"
	}

	return mcp.NewGetPromptResult(
		"A friendly greeting",
		[]mcp.PromptMessage{
			mcp.NewPromptMessage(
				mcp.RoleAssistant,
				mcp.NewTextContent(fmt.Sprintf("Hello, %s! How can I help you today?", name)),
			),
		},
	), nil
})

// Code review prompt with embedded resource
s.AddPrompt(mcp.NewPrompt("code_review",
	mcp.WithPromptDescription("Code review assistance"),
	mcp.WithArgument("pr_number",
		mcp.ArgumentDescription("Pull request number to review"),
		mcp.RequiredArgument(),
	),
), func(ctx context.Context, request mcp.GetPromptRequest) (*mcp.GetPromptResult, error) {
	prNumber := request.Params.Arguments["pr_number"]
	if prNumber == "" {
		return nil, fmt.Errorf("pr_number is required")
	}

	return mcp.NewGetPromptResult(
		"Code review assistance",
		[]mcp.PromptMessage{
			mcp.NewPromptMessage(
				mcp.RoleUser,
				mcp.NewTextContent("Review the changes and provide constructive feedback."),
			),
			mcp.NewPromptMessage(
				mcp.RoleAssistant,
				// mcp.ResourceContents is an interface, so embed a concrete type
				mcp.NewEmbeddedResource(mcp.TextResourceContents{
					URI:      fmt.Sprintf("git://pulls/%s/diff", prNumber),
					MIMEType: "text/x-diff",
				}),
			),
		},
	), nil
})

// Database query builder prompt
s.AddPrompt(mcp.NewPrompt("query_builder",
	mcp.WithPromptDescription("SQL query builder assistance"),
	mcp.WithArgument("table",
		mcp.ArgumentDescription("Name of the table to query"),
		mcp.RequiredArgument(),
	),
), func(ctx context.Context, request mcp.GetPromptRequest) (*mcp.GetPromptResult, error) {
	tableName := request.Params.Arguments["table"]
	if tableName == "" {
		return nil, fmt.Errorf("table name is required")
	}

	return mcp.NewGetPromptResult(
		"SQL query builder assistance",
		[]mcp.PromptMessage{
			mcp.NewPromptMessage(
				mcp.RoleUser,
				mcp.NewTextContent("Help construct efficient and safe queries for the provided schema."),
			),
			mcp.NewPromptMessage(
				mcp.RoleUser,
				// mcp.ResourceContents is an interface, so embed a concrete type
				mcp.NewEmbeddedResource(mcp.TextResourceContents{
					URI:      fmt.Sprintf("db://schema/%s", tableName),
					MIMEType: "application/json",
				}),
			),
		},
	), nil
})
```

Prompts can include:

- System instructions

- Required arguments

- Embedded resources

- Multiple messages

- Different content types (text, images, etc.)

- Custom URI schemes

For examples, see the examples/ directory.

Key examples include:

- examples/task_tool/ - Demonstrates task-augmented tools with TaskSupportRequired and TaskSupportOptional modes
- Additional examples covering resources, prompts, and more in the examples directory

MCP-Go supports stdio, SSE and streamable-HTTP transport layers. For SSE transport, you can use SetConnectionLostHandler() to detect and handle disconnections for implementing reconnection logic.

MCP-Go provides a robust session management system that allows you to:

- Maintain separate state for each connected client

- Register and track client sessions

- Send notifications to specific clients

- Provide per-session tool customization
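Conceptually, per-session notifications come down to a registry that maps session IDs to buffered channels, much like the `notifChannel` field in the session example. The `registry` type below is a hypothetical sketch of that idea. It uses a non-blocking send so that a client with a full buffer produces an error instead of stalling the sender; that is a design choice assumed here, not documented mcp-go behavior.

```go
package main

import (
	"fmt"
	"sync"
)

// notification is a simplified JSON-RPC notification (no id field).
type notification struct {
	Method string
	Params map[string]any
}

// registry maps session IDs to their notification channels.
type registry struct {
	mu       sync.RWMutex
	sessions map[string]chan notification
}

func newRegistry() *registry {
	return &registry{sessions: make(map[string]chan notification)}
}

// register creates a buffered channel for the session and returns it.
func (r *registry) register(id string, buf int) chan notification {
	r.mu.Lock()
	defer r.mu.Unlock()
	ch := make(chan notification, buf)
	r.sessions[id] = ch
	return ch
}

func (r *registry) unregister(id string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	delete(r.sessions, id)
}

// sendTo delivers a notification to one session without blocking.
func (r *registry) sendTo(id string, n notification) error {
	r.mu.RLock()
	ch, ok := r.sessions[id]
	r.mu.RUnlock()
	if !ok {
		return fmt.Errorf("session %q not found", id)
	}
	select {
	case ch <- n:
		return nil
	default:
		return fmt.Errorf("notification buffer full for session %q", id)
	}
}

func main() {
	r := newRegistry()
	ch := r.register("user-123", 10)
	update := notification{Method: "notification/update", Params: map[string]any{"message": "New data available!"}}
	if err := r.sendTo("user-123", update); err != nil {
		fmt.Println(err)
	}
	fmt.Println((<-ch).Params["message"]) // New data available!
	r.unregister("user-123")
	fmt.Println(r.sendTo("user-123", update)) // session "user-123" not found
}
```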


```go
// Create a server with session capabilities
s := server.NewMCPServer(
	"Session Demo",
	"1.0.0",
	server.WithToolCapabilities(true),
)

// Implement your own ClientSession
type MySession struct {
	id            string
	notifChannel  chan mcp.JSONRPCNotification
	isInitialized bool
	// Add custom fields for your application
}

// Implement the ClientSession interface
func (s *MySession) SessionID() string {
	return s.id
}

func (s *MySession) NotificationChannel() chan<- mcp.JSONRPCNotification {
	return s.notifChannel
}

func (s *MySession) Initialize() {
	s.isInitialized = true
}

func (s *MySession) Initialized() bool {
	return s.isInitialized
}

// Register a session
session := &MySession{
	id:           "user-123",
	notifChannel: make(chan mcp.JSONRPCNotification, 10),
}
if err := s.RegisterSession(context.Background(), session); err != nil {
	log.Printf("Failed to register session: %v", err)
}

// Send notification to a specific client
err := s.SendNotificationToSpecificClient(
	session.SessionID(),
	"notification/update",
	map[string]any{"message": "New data available!"},
)
if err != nil {
	log.Printf("Failed to send notification: %v", err)
}

// Unregister session when done
s.UnregisterSession(context.Background(), session.SessionID())
```

For more advanced use cases, you can implement the SessionWithTools interface to support per-session tool customization:

```go
// Implement SessionWithTools interface for per-session tools
type MyAdvancedSession struct {
	MySession    // Embed the basic session
	sessionTools map[string]server.ServerTool
}

// Implement additional methods for SessionWithTools
func (s *MyAdvancedSession) GetSessionTools() map[string]server.ServerTool {
	return s.sessionTools
}

func (s *MyAdvancedSession) SetSessionTools(tools map[string]server.ServerTool) {
	s.sessionTools = tools
}

// Create and register a session with tools support
advSession := &MyAdvancedSession{
	MySession: MySession{
		id:           "user-456",
		notifChannel: make(chan mcp.JSONRPCNotification, 10),
	},
	sessionTools: make(map[string]server.ServerTool),
}
if err := s.RegisterSession(context.Background(), advSession); err != nil {
	log.Printf("Failed to register session: %v", err)
}

// Add session-specific tools
userSpecificTool := mcp.NewTool(
	"user_data",
	mcp.WithDescription("Access user-specific data"),
)

// You can use AddSessionTool (similar to AddTool)
err := s.AddSessionTool(
	advSession.SessionID(),
	userSpecificTool,
	func(ctx context.Context, req mcp.CallToolRequest) (*mcp.CallToolResult, error) {
		// This handler is only available to this specific session
		return mcp.NewToolResultText("User-specific data for " + advSession.SessionID()), nil
	},
)
if err != nil {
	log.Printf("Failed to add session tool: %v", err)
}

// Or use AddSessionTools directly with ServerTool
/*
err := s.AddSessionTools(
	advSession.SessionID(),
	server.ServerTool{
		Tool: userSpecificTool,
		Handler: func(ctx context.Context, req mcp.CallToolRequest) (*mcp.CallToolResult, error) {
			// This handler is only available to this specific session
			return mcp.NewToolResultText("User-specific data for " + advSession.SessionID()), nil
		},
	},
)
if err != nil {
	log.Printf("Failed to add session tool: %v", err)
}
*/

// Delete session-specific tools when no longer needed
err = s.DeleteSessionTools(advSession.SessionID(), "user_data")
if err != nil {
	log.Printf("Failed to delete session tool: %v", err)
}
```

You can also apply filters to control which tools are available to certain sessions:

```go
// Add a tool filter that only shows tools with certain prefixes
s := server.NewMCPServer(
	"Tool Filtering Demo",
	"1.0.0",
	server.WithToolCapabilities(true),
	server.WithToolFilter(func(ctx context.Context, tools []mcp.Tool) []mcp.Tool {
		// Get session from context
		session := server.ClientSessionFromContext(ctx)
		if session == nil {
			return tools // Return all tools if no session
		}

		// Example: filter tools based on session ID prefix
		if strings.HasPrefix(session.SessionID(), "admin-") {
			// Admin users get all tools
			return tools
		}

		// Regular users only get tools with "public-" prefix
		var filteredTools []mcp.Tool
		for _, tool := range tools {
			if strings.HasPrefix(tool.Name, "public-") {
				filteredTools = append(filteredTools, tool)
			}
		}
		return filteredTools
	}),
)
```

The session context is automatically passed to tool and resource handlers:

```go
s.AddTool(mcp.NewTool("session_aware"), func(ctx context.Context, req mcp.CallToolRequest) (*mcp.CallToolResult, error) {
	// Get the current session from context
	session := server.ClientSessionFromContext(ctx)
	if session == nil {
		return mcp.NewToolResultError("No active session"), nil
	}

	return mcp.NewToolResultText("Hello, session " + session.SessionID()), nil
})

// When using handlers in HTTP/SSE servers, you need to pass the context with the session
httpHandler := func(w http.ResponseWriter, r *http.Request) {
	// Get session from somewhere (like a cookie or header);
	// getSessionFromRequest is your own helper
	session := getSessionFromRequest(r)

	// Add session to context
	ctx := s.WithContext(r.Context(), session)

	// Use this context when handling requests
	// ...
}
```

Hook into the request lifecycle by creating a Hooks object with your selection among the possible callbacks. This enables telemetry across all functionality and observability of various facts, for example the ability to count improperly formatted requests or to log the agent identity during initialization.

Add the Hooks to the server at the time of creation using the server.WithHooks option.
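The hooks idea is essentially a set of optional callback lists invoked at fixed points in request handling. The sketch below is a hypothetical, standard-library-only model of that pattern; the `hooks` type, its fields, and `handle` are not mcp-go's `server.Hooks` API. It covers the two examples mentioned above: counting malformed requests and recording the client name sent during `initialize`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// hooks holds optional callbacks; any slice may be empty.
type hooks struct {
	onParseError []func(raw []byte, err error)
	onInitialize []func(clientName string)
}

// message decodes only the fields these hooks need.
type message struct {
	Method string `json:"method"`
	Params struct {
		ClientInfo struct {
			Name string `json:"name"`
		} `json:"clientInfo"`
	} `json:"params"`
}

// handle decodes a raw message and fires the relevant hooks.
func (h *hooks) handle(raw []byte) {
	var msg message
	if err := json.Unmarshal(raw, &msg); err != nil {
		for _, f := range h.onParseError {
			f(raw, err)
		}
		return
	}
	if msg.Method == "initialize" {
		for _, f := range h.onInitialize {
			f(msg.Params.ClientInfo.Name)
		}
	}
}

func main() {
	malformed := 0
	var clients []string
	h := &hooks{
		onParseError: []func([]byte, error){func([]byte, error) { malformed++ }},
		onInitialize: []func(string){func(name string) { clients = append(clients, name) }},
	}
	h.handle([]byte(`{"method":"initialize","params":{"clientInfo":{"name":"demo-client"}}}`))
	h.handle([]byte(`{not json`))
	fmt.Println(malformed, clients) // 1 [demo-client]
}
```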

Add middleware to tool call handlers using the server.WithToolHandlerMiddleware option. Middlewares can be registered on server creation and are applied on every tool call.

A recovery middleware option is available to recover from panics in a tool call and can be added to the server with the server.WithRecovery option.
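Tool handler middleware follows the usual Go decorator pattern: a middleware takes a handler and returns a wrapped one. The sketch below uses a hypothetical local `toolHandler` type rather than mcp-go's handler signature. It shows a recovery middleware that turns a panic (here, from an unchecked type assertion on a missing argument) into an ordinary error, which is the behavior the recovery option is described as providing.

```go
package main

import (
	"errors"
	"fmt"
)

// toolHandler is a simplified stand-in for a tool call handler.
type toolHandler func(args map[string]any) (string, error)

// middleware wraps a handler to add behavior around it.
type middleware func(toolHandler) toolHandler

// recovery converts a panic inside the wrapped handler into an error.
func recovery(next toolHandler) toolHandler {
	return func(args map[string]any) (result string, err error) {
		defer func() {
			if r := recover(); r != nil {
				err = fmt.Errorf("panic recovered in tool handler: %v", r)
			}
		}()
		return next(args)
	}
}

// chain applies middlewares so that the first one listed is outermost.
func chain(h toolHandler, mws ...middleware) toolHandler {
	for i := len(mws) - 1; i >= 0; i-- {
		h = mws[i](h)
	}
	return h
}

func main() {
	divide := func(args map[string]any) (string, error) {
		x := args["x"].(float64) // unchecked assertion: panics if "x" is missing
		y, ok := args["y"].(float64)
		if !ok || y == 0 {
			return "", errors.New("y must be a non-zero number")
		}
		return fmt.Sprintf("%.2f", x/y), nil
	}
	h := chain(divide, recovery)
	fmt.Println(h(map[string]any{"x": 1.0, "y": 4.0})) // 0.25 <nil>
	_, err := h(map[string]any{"y": 2.0})
	fmt.Println(err) // the recovered panic, reported as an error
}
```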

Server hooks and request handlers are generated. Regenerate them by running:

```bash
go generate ./...
```

You need Go installed and the goimports tool available. The generator runs goimports automatically to format and fix imports.

When users are filling in argument values for a specific prompt (identified by name) or resource template (identified by URI), servers can provide contextual suggestions.

To enable completion support, use the server.WithCompletions() option when creating your server.

You can provide completion logic for both prompt arguments and resource template arguments by implementing the respective interfaces and passing them to the server as options.


```go
type MyPromptCompletionProvider struct{}

func (p *MyPromptCompletionProvider) CompletePromptArgument(
	ctx context.Context,
	promptName string,
	argument mcp.CompleteArgument,
	context mcp.CompleteContext,
) (*mcp.Completion, error) {
	// Example: provide style suggestions for a "code_review" prompt
	if promptName == "code_review" && argument.Name == "style" {
		styles := []string{"formal", "casual", "technical", "creative"}
		var suggestions []string

		// Filter based on current input
		for _, style := range styles {
			if strings.HasPrefix(style, argument.Value) {
				suggestions = append(suggestions, style)
			}
		}

		return &mcp.Completion{
			Values: suggestions,
		}, nil
	}

	// Return empty suggestions for unhandled cases
	return &mcp.Completion{Values: []string{}}, nil
}

type MyResourceCompletionProvider struct{}

func (p *MyResourceCompletionProvider) CompleteResourceArgument(
	ctx context.Context,
	uri string,
	argument mcp.CompleteArgument,
	context mcp.CompleteContext,
) (*mcp.Completion, error) {
	// Example: provide file path completions
	if uri == "file:///{path}" && argument.Name == "path" {
		// You can access previously completed arguments from context.Arguments
		// context.Arguments is a map[string]string of already-resolved arguments
		paths := getMatchingPaths(argument.Value) // Your custom logic

		return &mcp.Completion{
			Values:  paths[:min(len(paths), 100)], // Max 100 items
			Total:   len(paths),                   // Total available matches
			HasMore: len(paths) > 100,             // More results available
		}, nil
	}

	return &mcp.Completion{Values: []string{}}, nil
}

// Register the provider
mcpServer := server.NewMCPServer(
	"my-server",
	"1.0.0",
	server.WithCompletions(),
	server.WithPromptCompletionProvider(&MyPromptCompletionProvider{}),
	server.WithResourceCompletionProvider(&MyResourceCompletionProvider{}),
)
```

For prompts or resource templates with multiple arguments, the CompleteContext parameter provides access to previously completed arguments. This allows you to provide contextual suggestions based on earlier choices.


```go
func (p *MyProvider) CompleteResourceArgument(
	ctx context.Context,
	uri string,
	argument mcp.CompleteArgument,
	context mcp.CompleteContext,
) (*mcp.Completion, error) {
	// Access previously completed arguments
	if previousValue, ok := context.Arguments["previous_arg"]; ok {
		// Provide suggestions based on the previous_arg value;
		// getSuggestionsFor is your own helper returning *mcp.Completion
		return getSuggestionsFor(argument.Value, previousValue), nil
	}

	return &mcp.Completion{Values: []string{}}, nil
}
```

When returning completion results:

- Maximum 100 items per response
- Use Total to indicate the total number of available matches
- Use HasMore to signal if additional results exist beyond the returned values


Alternative AI tools for mcp-go

Similar Open Source Tools


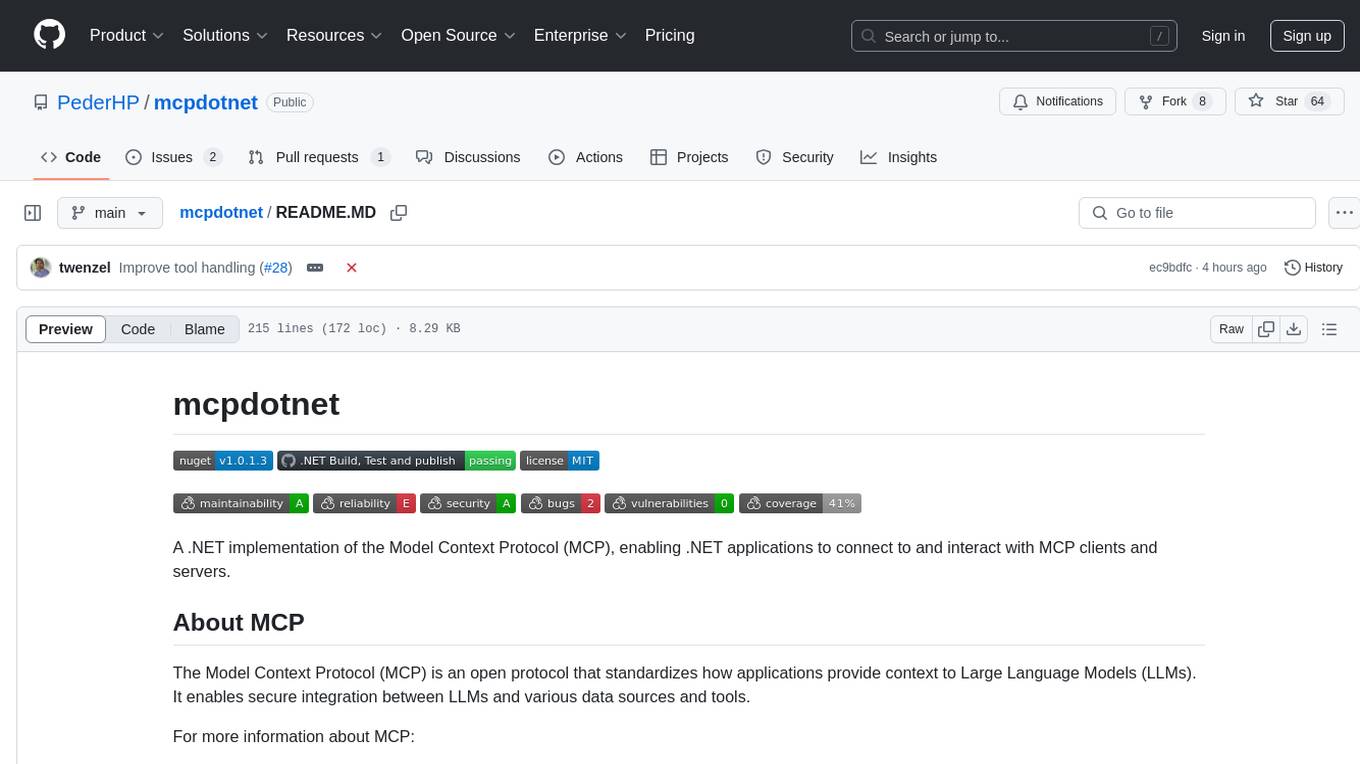

mcpdotnet

mcpdotnet is a .NET implementation of the Model Context Protocol (MCP), facilitating connections and interactions between .NET applications and MCP clients and servers. It aims to provide a clean, specification-compliant implementation with support for various MCP capabilities and transport types. The library includes features such as async/await pattern, logging support, and compatibility with .NET 8.0 and later. Users can create clients to use tools from configured servers and also create servers to register tools and interact with clients. The project roadmap includes expanding documentation, increasing test coverage, adding samples, performance optimization, SSE server support, and authentication.

bellman

Bellman is a unified interface to interact with language and embedding models, supporting various vendors like VertexAI/Gemini, OpenAI, Anthropic, VoyageAI, and Ollama. It consists of a library for direct interaction with models and a service 'bellmand' for proxying requests with one API key. Bellman simplifies switching between models, vendors, and common tasks like chat, structured data, tools, and binary input. It addresses the lack of official SDKs for major players and differences in APIs, providing a single proxy for handling different models. The library offers clients for different vendors implementing common interfaces for generating and embedding text, enabling easy interchangeability between models.

com.openai.unity

com.openai.unity is an OpenAI package for Unity that allows users to interact with OpenAI's API through RESTful requests. It is independently developed and not an official library affiliated with OpenAI. Users can fine-tune models, create assistants, chat completions, and more. The package requires Unity 2021.3 LTS or higher and can be installed via Unity Package Manager or Git URL. Various features like authentication, Azure OpenAI integration, model management, thread creation, chat completions, audio processing, image generation, file management, fine-tuning, batch processing, embeddings, and content moderation are available.

OpenAI-DotNet

OpenAI-DotNet is a simple C# .NET client library for OpenAI to use through their RESTful API. It is independently developed and not an official library affiliated with OpenAI. Users need an OpenAI API account to utilize this library. The library targets .NET 6.0 and above, working across various platforms like console apps, WinForms, WPF, ASP.NET, etc., and on Windows, Linux, and Mac. It provides functionalities for authentication, interacting with models, assistants, threads, chat, audio, images, files, fine-tuning, embeddings, and moderations.

LlmTornado

LLM Tornado is a .NET library designed to simplify the consumption of various large language models (LLMs) from providers like OpenAI, Anthropic, Cohere, Google, Azure, Groq, and self-hosted APIs. It acts as an aggregator, allowing users to easily switch between different LLM providers with just a change in argument. Users can perform tasks such as chatting with documents, voice calling with AI, orchestrating assistants, generating images, and more. The library exposes capabilities through vendor extensions, making it easy to integrate and use multiple LLM providers simultaneously.

instructor-go

Instructor Go is a library that simplifies working with structured outputs from large language models (LLMs). Built on top of `invopop/jsonschema` and utilizing `jsonschema` Go struct tags, it provides a user-friendly API for managing validation, retries, and streaming responses without changing code logic. The library supports LLM provider APIs such as OpenAI, Anthropic, Cohere, and Google, capturing and returning usage data in responses. Users can easily add metadata to struct fields using `jsonschema` tags to enhance model awareness and streamline workflows.

go-utcp

The Universal Tool Calling Protocol (UTCP) is a modern, flexible, and scalable standard for defining and interacting with tools across various communication protocols. It emphasizes scalability, interoperability, and ease of use. It provides built-in transports for HTTP, CLI, Server-Sent Events, streaming HTTP, GraphQL, MCP, and UDP. Users can use the library to construct a client and call tools using the available transports. The library also includes utilities for variable substitution, in-memory repository for storing providers and tools, and OpenAPI conversion to UTCP manuals.

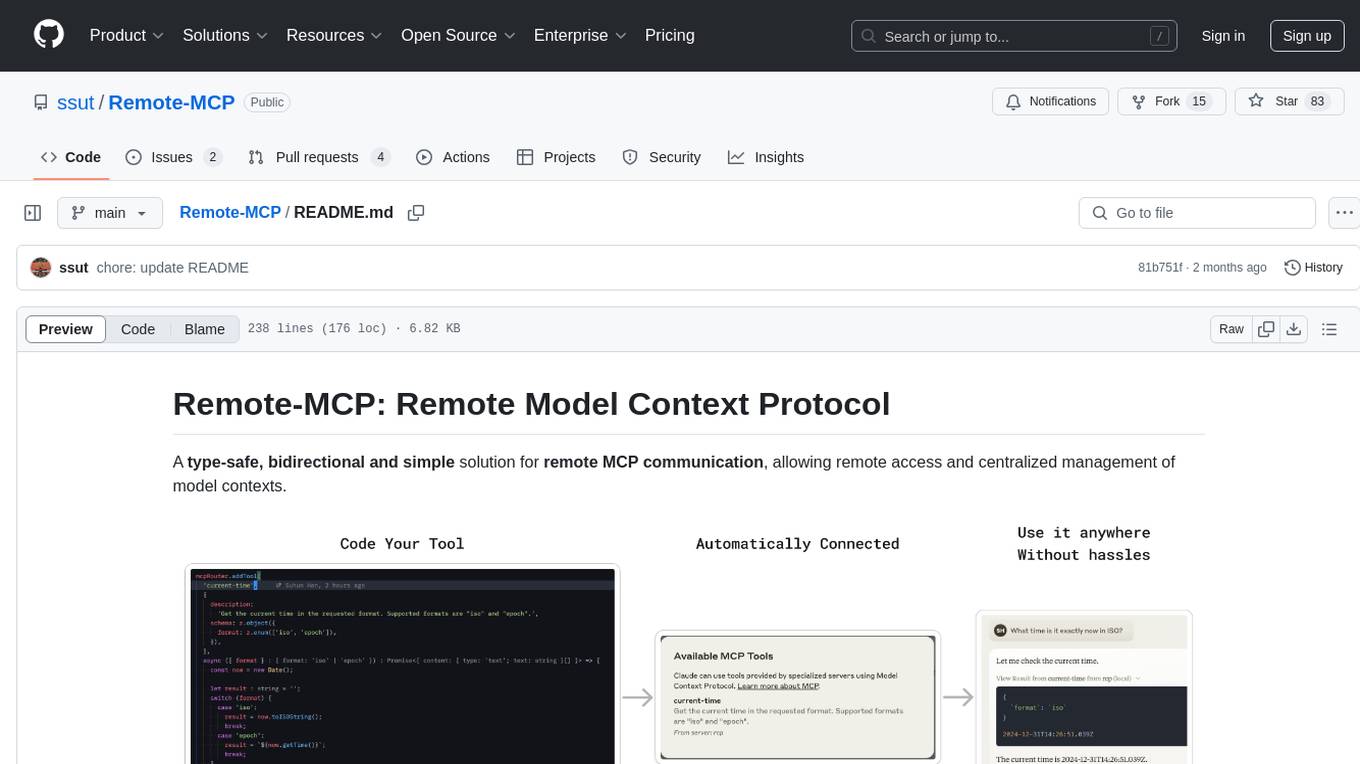

Remote-MCP

Remote-MCP is a type-safe, bidirectional, and simple solution for remote MCP communication, enabling remote access and centralized management of model contexts. It provides a bridge for immediate remote access to a remote MCP server from a local MCP client, without waiting for future official implementations. The repository contains client and server libraries for creating and connecting to remotely accessible MCP services. The core features include basic type-safe client/server communication, MCP command/tool/prompt support, custom headers, and ongoing work on crash-safe handling and event subscription system.

java-genai

An idiomatic Java SDK for the Gemini Developer API and the Vertex AI API. The SDK provides a Client class for interacting with both APIs, allowing seamless switching between the two backends without rewriting code. It supports generating content, embedding content, generating images, upscaling images, editing images, and generating videos. The SDK also includes options for setting API versions, HTTP request parameters, client behavior, and response schemas.

gollm

gollm is a Go package designed to simplify interactions with Large Language Models (LLMs) for AI engineers and developers. It offers a unified API for multiple LLM providers, easy provider and model switching, flexible configuration options, advanced prompt engineering, prompt optimization, memory retention, structured output and validation, provider comparison tools, high-level AI functions, robust error handling and retries, and extensible architecture. The package enables users to create AI-powered golems for tasks like content creation workflows, complex reasoning tasks, structured data generation, model performance analysis, prompt optimization, and creating a mixture of agents.

dashscope-sdk

DashScope SDK for .NET is an unofficial SDK maintained by Cnblogs, providing various APIs for text embedding, generation, multimodal generation, image synthesis, and more. Users can interact with the SDK to perform tasks such as text completion, chat generation, function calls, file operations, and more. The project is under active development, and users are advised to check the Release Notes before upgrading.

Ollama

Ollama SDK for .NET is a C# SDK fully generated from the OpenAPI specification with OpenApiGenerator. It supports automatic releases of new preview versions, a source generator for defining tools natively through C# interfaces, and all modern .NET features. The SDK covers all Ollama API endpoints, including chats, embeddings, listing models, and pulling and creating new models. It also offers tools for interacting with weather data and providing weather-related information to users.

agent-sdk-go

Agent Go SDK is a Go framework for building production-ready AI agents that integrates memory management, tool execution, multi-LLM support, and enterprise features into a flexible, extensible architecture. Its core capabilities include multi-model intelligence, a modular tool ecosystem, advanced memory management, and MCP integration. The SDK is enterprise-ready, with built-in guardrails, complete observability, and support for multi-tenancy. For the development experience, it provides a structured task framework, declarative configuration, and zero-effort bootstrapping. The SDK supports environment variables for configuration and includes features such as creating agents from YAML configuration, auto-generating agent configurations, using MCP servers with an agent, and a CLI tool for headless usage.

UniChat

UniChat is a pipeline tool for creating online and offline chatbots in Unity. It uses Unity.Sentis and text vector embeddings to enable offline text search backed by vector databases. The tool includes a chain toolkit for embedding LLMs and agents in games, along with middleware components for Text to Speech, Speech to Text, and sub-classifier functionality. UniChat also supports tool invocation based on a ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. It provides a comprehensive solution for designing flexible in-game conversations while preserving the developer's original design intent.

whetstone.chatgpt

Whetstone.ChatGPT is a simple, lightweight library that wraps the OpenAI API with support for dependency injection. It supports GPT-4, GPT-3.5 Turbo, chat completions, audio transcription and translation, vision completions, files, fine-tunes, images, embeddings, moderations, and response streaming. The project provides a video walkthrough of a Blazor web app built on the library and includes examples such as a command-line bot. It offers quickstarts for dependency injection, chat completions, completions, file handling, fine-tuning, image generation, and audio transcription.

For similar tasks

mcp-go

MCP Go is a Go implementation of the Model Context Protocol (MCP), facilitating seamless integration between LLM applications and external data sources and tools. It handles complex protocol details and server management, allowing developers to focus on building tools. The tool is designed to be fast, simple, and complete, aiming to provide a high-level and easy-to-use interface for developing MCP servers. MCP Go is currently under active development, with core features working and advanced capabilities in progress.

mcp-for-beginners

The Model Context Protocol (MCP) Curriculum for Beginners is an open-source learning resource for MCP, a protocol designed to standardize interactions between AI models and client applications. It offers a structured learning path with practical coding examples and real-world use cases in popular programming languages like C#, Java, JavaScript, Rust, Python, and TypeScript. Whether you're an AI developer, system architect, or software engineer, this guide provides comprehensive resources for mastering MCP fundamentals and implementation strategies.

MCP-Nest

A NestJS module to effortlessly expose tools, resources, and prompts for AI using the Model Context Protocol (MCP). It allows defining tools, resources, and prompts in a familiar NestJS way, supporting multi-transport, tool validation, interactive tool calls, request context access, fine-grained authorization, resource serving, dynamic resources, prompt templates, guard-based authentication, dependency injection, server mutation, and instrumentation. It provides features for building ChatGPT widgets and MCP apps.

For similar jobs

minio

MinIO is a high-performance object storage system released under the GNU Affero General Public License v3.0. It is API-compatible with the Amazon S3 cloud storage service. Use MinIO to build high-performance infrastructure for machine learning, analytics, and application data workloads.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with GKE platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale
- Flexible integration with distributed computing and data processing frameworks
- Support for multiple teams on the same infrastructure to maximize utilization of resources

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker HyperPod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (PyTorch DDP/FSDP, Megatron-LM, NeMo Megatron, and others).

generative-ai-cdk-constructs

The AWS Generative AI Constructs Library is an open-source extension of the AWS Cloud Development Kit (AWS CDK) that provides multi-service, well-architected patterns for quickly defining solutions in code to create predictable and repeatable infrastructure, called constructs. The goal of AWS Generative AI CDK Constructs is to help developers build generative AI solutions using pattern-based definitions for their architecture. The patterns defined in AWS Generative AI CDK Constructs are high level, multi-service abstractions of AWS CDK constructs that have default configurations based on well-architected best practices. The library is organized into logical modules using object-oriented techniques to create each architectural pattern model.

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

dify-helm

Deploy langgenius/dify, an LLM-based chatbot app, on Kubernetes with a Helm chart.