nvim-repl

Better REPLs in Neovim, supporting aider (AI), ipython, utop, and more!

Stars: 79

Neovim REPL is a tool that allows users to create, use, and remove interactive Read-Eval-Print Loops (REPLs) within Neovim. It supports various REPLs including aider, ipython, and utop. Users can easily send code cells, lines, or visual selections to the REPL. The tool provides default settings and allows for customization through Lua configuration. Documentation is available within Neovim's help file. Users can seamlessly integrate Neovim with aider for AI pair programming by following recommended configurations.

README:

Create, use, and remove interactive REPLs within Neovim.

Works with any REPL, but contains custom support for the following REPLs:

- aider: AI pair programming in your terminal

- ipython: a powerful interactive Python shell

- utop: a much improved interface to the OCaml toplevel

Default REPL settings are defined in lua/repl/init.lua:defaults.

Neovim REPL is a normal Neovim package.

lazy.nvim

Configuration for https://github.com/folke/lazy.nvim

{

"pappasam/nvim-repl",

keys = {

{ "<Leader>c", "<Plug>(ReplSendCell)", mode = "n", desc = "Send Repl Cell" },

{ "<Leader>r", "<Plug>(ReplSendLine)", mode = "n", desc = "Send Repl Line" },

{ "<Leader>r", "<Plug>(ReplSendVisual)", mode = "x", desc = "Send Repl Visual Selection" },

},

}{

"pappasam/nvim-repl",

opts = {

filetype_commands = {

javascript = {cmd = "deno repl", filetype = "javascript"},

},

default = {cmd = "bash", filetype = "bash"},

open_window_default = "vertical split new",

},

keys = {

{ "<Leader>c", "<Plug>(ReplSendCell)", mode = "n", desc = "ReplSendCell" },

{ "<Leader>r", "<Plug>(ReplSendLine)", mode = "n", desc = "ReplSendLine" },

{ "<Leader>r", "<Plug>(ReplSendVisual)", mode = "x", desc = "ReplSendVisual" },

},

}Documentation is in a normal Neovim help file. You can read it online here.

From within Neovim, type :help repl.

If you find yourself in Terminal mode, use <C-\><C-n> instead of <Esc> to return to Normal mode.

Type :help Terminal-mode and :help CTRL-\_CTRL-N for more information.

The built-in aider integration overrides aider's --multiline, --notifications, and --notifications-command for a smooth Neovim integration. All other settings default to the user's aider configuration file and environment. To that end, we recommend:

- Use

$AIDER_MODELto specify your preferred model before opening Neovim. - For other settings, please reference the Author's current aider configuration for inspiration.

Sometimes, terminal commands (like aider) can be long. If your tabline is long, you can customize it.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for nvim-repl

Similar Open Source Tools

nvim-repl

Neovim REPL is a tool that allows users to create, use, and remove interactive Read-Eval-Print Loops (REPLs) within Neovim. It supports various REPLs including aider, ipython, and utop. Users can easily send code cells, lines, or visual selections to the REPL. The tool provides default settings and allows for customization through Lua configuration. Documentation is available within Neovim's help file. Users can seamlessly integrate Neovim with aider for AI pair programming by following recommended configurations.

wtf.nvim

wtf.nvim is a Neovim plugin that enhances diagnostic debugging by providing explanations and solutions for code issues using ChatGPT. It allows users to search the web for answers directly from Neovim, making the debugging process faster and more efficient. The plugin works with any language that has LSP support in Neovim, offering AI-powered diagnostic assistance and seamless integration with various resources for resolving coding problems.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

llm.nvim

llm.nvim is a neovim plugin designed for LLM-assisted programming. It provides a no-frills approach to integrating language model assistance into the coding workflow. Users can configure the plugin to interact with various AI services such as GROQ, OpenAI, and Anthropics. The plugin offers functions to trigger the LLM assistant, create new prompt files, and customize key bindings for seamless interaction. With a focus on simplicity and efficiency, llm.nvim aims to enhance the coding experience by leveraging AI capabilities within the neovim environment.

ogpt.nvim

OGPT.nvim is a Neovim plugin that enables users to interact with various language models (LLMs) such as Ollama, OpenAI, TextGenUI, and more. Users can engage in interactive question-and-answer sessions, have persona-based conversations, and execute customizable actions like grammar correction, translation, keyword generation, docstring creation, test addition, code optimization, summarization, bug fixing, code explanation, and code readability analysis. The plugin allows users to define custom actions using a JSON file or plugin configurations.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

vim-ai

vim-ai is a plugin that adds Artificial Intelligence (AI) capabilities to Vim and Neovim. It allows users to generate code, edit text, and have interactive conversations with GPT models powered by OpenAI's API. The plugin uses OpenAI's API to generate responses, requiring users to set up an account and obtain an API key. It supports various commands for text generation, editing, and chat interactions, providing a seamless integration of AI features into the Vim text editor environment.

llm.nvim

llm.nvim is a plugin for Neovim that enables code completion using LLM models. It supports 'ghost-text' code completion similar to Copilot and allows users to choose their model for code generation via HTTP requests. The plugin interfaces with multiple backends like Hugging Face, Ollama, Open AI, and TGI, providing flexibility in model selection and configuration. Users can customize the behavior of suggestions, tokenization, and model parameters to enhance their coding experience. llm.nvim also includes commands for toggling auto-suggestions and manually requesting suggestions, making it a versatile tool for developers using Neovim.

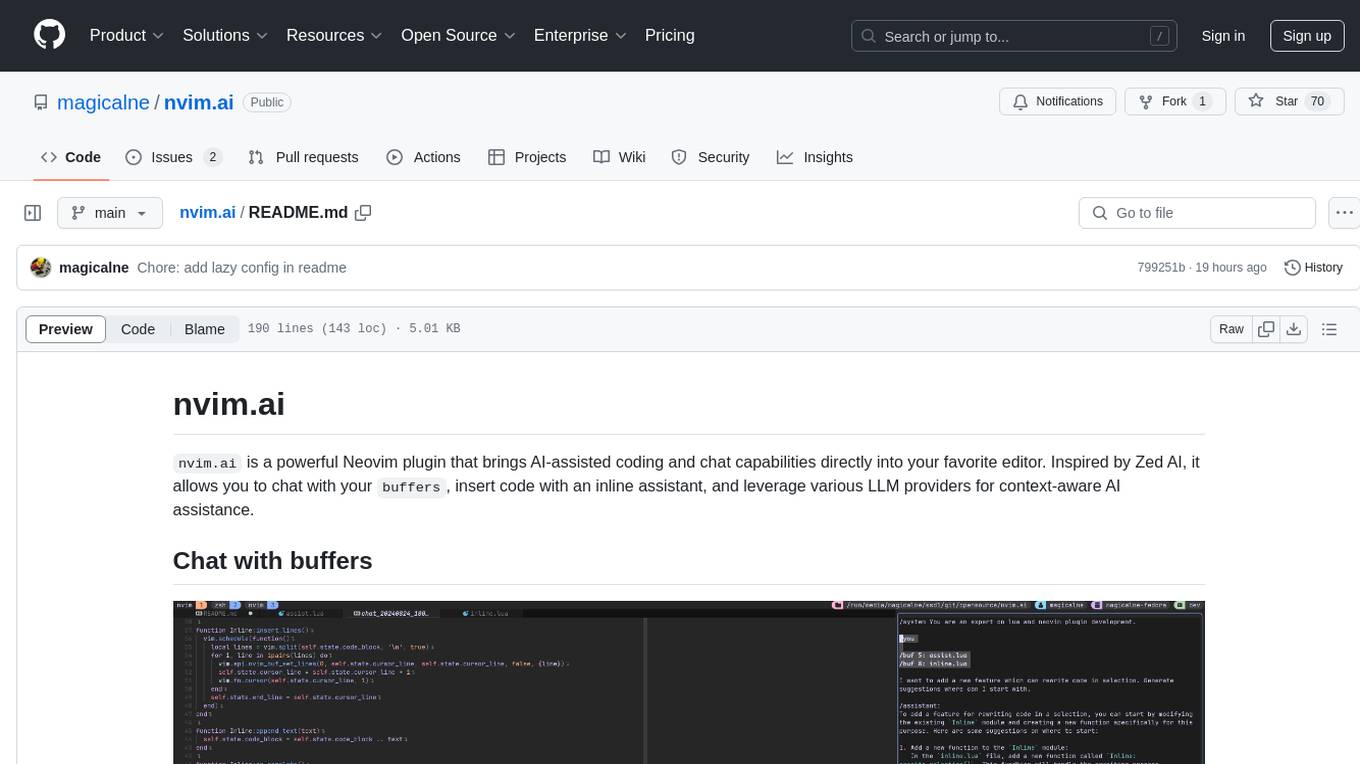

nvim.ai

nvim.ai is a powerful Neovim plugin that enables AI-assisted coding and chat capabilities within the editor. Users can chat with buffers, insert code with an inline assistant, and utilize various LLM providers for context-aware AI assistance. The plugin supports features like interacting with AI about code and documents, receiving relevant help based on current work, code insertion, code rewriting (Work in Progress), and integration with multiple LLM providers. Users can configure the plugin, add API keys to dotfiles, and integrate with nvim-cmp for command autocompletion. Keymaps are available for chat and inline assist functionalities. The chat dialog allows parsing content with keywords and supports roles like /system, /you, and /assistant. Context-aware assistance can be accessed through inline assist by inserting code blocks anywhere in the file.

parrot.nvim

Parrot.nvim is a Neovim plugin that prioritizes a seamless out-of-the-box experience for text generation. It simplifies functionality and focuses solely on text generation, excluding integration of DALLE and Whisper. It supports persistent conversations as markdown files, custom hooks for inline text editing, multiple providers like Anthropic API, perplexity.ai API, OpenAI API, Mistral API, and local/offline serving via ollama. It allows custom agent definitions, flexible API credential support, and repository-specific instructions with a `.parrot.md` file. It does not have autocompletion or hidden requests in the background to analyze files.

parea-sdk-py

Parea AI provides a SDK to evaluate & monitor AI applications. It allows users to test, evaluate, and monitor their AI models by defining and running experiments. The SDK also enables logging and observability for AI applications, as well as deploying prompts to facilitate collaboration between engineers and subject-matter experts. Users can automatically log calls to OpenAI and Anthropic, create hierarchical traces of their applications, and deploy prompts for integration into their applications.

ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E... Hire me | 🎮 Ruby AI Builders Discord | 🐦 Twitter | 🧠 Anthropic Gem | 🚂 Midjourney Gem ## Table of Contents * Ruby OpenAI * Table of Contents * Installation * Bundler * Gem install * Usage * Quickstart * With Config * Custom timeout or base URI * Extra Headers per Client * Logging * Errors * Faraday middleware * Azure * Ollama * Counting Tokens * Models * Examples * Chat * Streaming Chat * Vision * JSON Mode * Functions * Edits * Embeddings * Batches * Files * Finetunes * Assistants * Threads and Messages * Runs * Runs involving function tools * Image Generation * DALL·E 2 * DALL·E 3 * Image Edit * Image Variations * Moderations * Whisper * Translate * Transcribe * Speech * Errors * Development * Release * Contributing * License * Code of Conduct

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

typst-mcp

Typst MCP Server is an implementation of the Model Context Protocol (MCP) that facilitates interaction between AI models and Typst, a markup-based typesetting system. The server offers tools for converting between LaTeX and Typst, validating Typst syntax, and generating images from Typst code. It provides functions such as listing documentation chapters, retrieving specific chapters, converting LaTeX snippets to Typst, validating Typst syntax, and rendering Typst code to images. The server is designed to assist Language Model Managers (LLMs) in handling Typst-related tasks efficiently and accurately.

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

For similar tasks

elyra

Elyra is a set of AI-centric extensions to JupyterLab Notebooks that includes features like Visual Pipeline Editor, running notebooks/scripts as batch jobs, reusable code snippets, hybrid runtime support, script editors with execution capabilities, debugger, version control using Git, and more. It provides a comprehensive environment for data scientists and AI practitioners to develop, test, and deploy machine learning models and workflows efficiently.

pyscripter

PyScripter is a free and open-source Python Integrated Development Environment (IDE) aiming to compete with commercial Windows-based IDEs for other languages. It offers features like LLM-assisted coding and provides support for Python development projects. The tool is designed to enhance the coding experience for Python developers by providing a user-friendly interface and a range of functionalities to streamline the development process.

HEC-Commander

HEC-Commander Tools is a suite of python notebooks developed with AI assistance for water resource engineering workflows, focused on providing automation for HEC-RAS and HEC-HMS through Jupyter Notebooks. It contains automation scripts for HEC-HMS and HEC-RAS, tools for plotting results, and miscellaneous scripts for workflow assistance. The repository also includes blog posts, ChatGPT assistants, and presentations related to H&H modeling and the use of LLM's for water resources workflows.

nvim-repl

Neovim REPL is a tool that allows users to create, use, and remove interactive Read-Eval-Print Loops (REPLs) within Neovim. It supports various REPLs including aider, ipython, and utop. Users can easily send code cells, lines, or visual selections to the REPL. The tool provides default settings and allows for customization through Lua configuration. Documentation is available within Neovim's help file. Users can seamlessly integrate Neovim with aider for AI pair programming by following recommended configurations.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration: * Chat BI's Text2SQL gets augmented with context-retrieval from semantic models. * Headless BI's query interface gets extended with natural language API. SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions: 1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination. 2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity. With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

DeepBI

DeepBI is an AI-native data analysis platform that leverages the power of large language models to explore, query, visualize, and share data from any data source. Users can use DeepBI to gain data insight and make data-driven decisions.

WrenAI

WrenAI is a data assistant tool that helps users get results and insights faster by asking questions in natural language, without writing SQL. It leverages Large Language Models (LLM) with Retrieval-Augmented Generation (RAG) technology to enhance comprehension of internal data. Key benefits include fast onboarding, secure design, and open-source availability. WrenAI consists of three core services: Wren UI (intuitive user interface), Wren AI Service (processes queries using a vector database), and Wren Engine (platform backbone). It is currently in alpha version, with new releases planned biweekly.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.