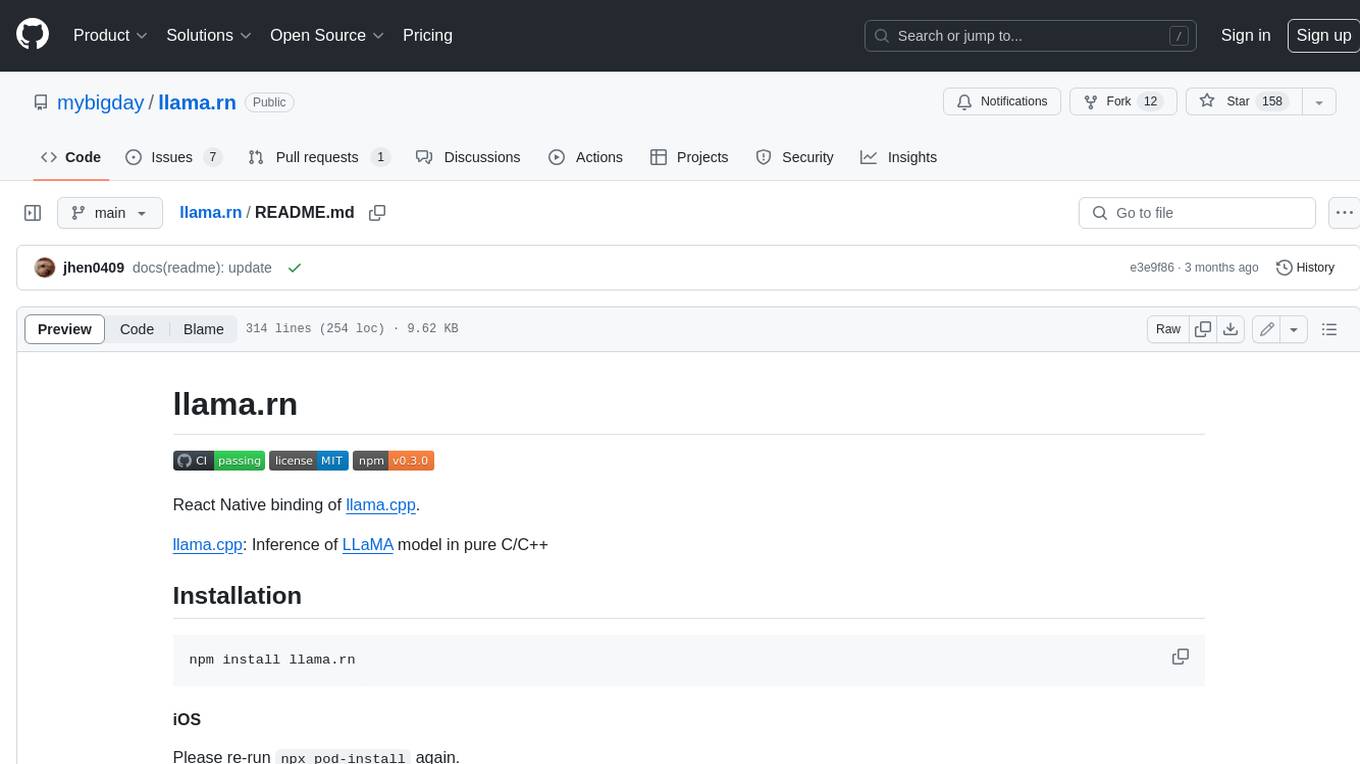

llama.rn

React Native binding of llama.cpp

Stars: 671

React Native binding of llama.cpp, an implementation of LLaMA model inference in pure C/C++. It lets you use LLaMA models in React Native applications for tasks such as text completion, tokenization, detokenization, and embedding. It also supports features like grammar sampling and provides a mock for testing.

README:

React Native binding of llama.cpp.

llama.cpp: Inference of LLaMA model in pure C/C++

    npm install llama.rn

On iOS, re-run npx pod-install afterwards.

By default, llama.rn will use pre-built rnllama.xcframework for iOS. If you want to build from source, please set RNLLAMA_BUILD_FROM_SOURCE to 1 in your Podfile.

Add this ProGuard rule if ProGuard is enabled in your project (android/app/proguard-rules.pro):

    # llama.rn
    -keep class com.rnllama.** { *; }

By default, llama.rn will use pre-built libraries for Android. If you want to build from source, please set rnllamaBuildFromSource to true in android/gradle.properties.

- Confirm the target device exposes an OpenCL-capable GPU (Qualcomm Adreno 700+ devices are currently supported & tested).

- Add <uses-native-library android:name="libOpenCL.so" android:required="false" /> to your app manifest so the loader can be loaded at runtime.

- Configure n_gpu_layers (> 0) when calling initLlama to offload layers to the GPU. The native result exposes gpu, gpuDevice, and reasonNoGPU so you can confirm runtime behaviour.
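To act on those fields at runtime, a small helper can turn them into a log message. The sketch below is illustrative only: it assumes `gpu` is a boolean and that `gpuDevice` and `reasonNoGPU` are optional strings on the object returned by `initLlama`. `describeGpuStatus` is a hypothetical helper, not part of llama.rn.

```javascript
// Hypothetical helper (not part of llama.rn): summarize GPU offload status.
// Assumes `gpu` is a boolean and `gpuDevice` / `reasonNoGPU` are optional strings.
function describeGpuStatus(result) {
  if (result.gpu) {
    return `GPU offload active on ${result.gpuDevice || 'unknown device'}`
  }
  return `Running on CPU: ${result.reasonNoGPU || 'no reason reported'}`
}

// Hand-written objects standing in for the native result
console.log(describeGpuStatus({ gpu: true, gpuDevice: 'Adreno 740' }))
console.log(describeGpuStatus({ gpu: false, reasonNoGPU: 'OpenCL not available' }))
```

In an app, the argument would be the context returned by `await initLlama({ ... })`, assuming the fields are exposed there as described.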

You can search HuggingFace for available models (Keyword: GGUF).

To get a GGUF model or quantize one manually, see the quantize documentation in llama.cpp.

💡 You can find complete examples in the example project.

Load model info only:

    import { loadLlamaModelInfo } from 'llama.rn'

    const modelPath = 'file://<path to gguf model>'
    console.log('Model Info:', await loadLlamaModelInfo(modelPath))

Initialize a Llama context & do completion:

    import { initLlama } from 'llama.rn'

    // Initialize a Llama context with the model (may take a while)
    const context = await initLlama({
      model: modelPath,
      use_mlock: true,
      n_ctx: 2048,
      n_gpu_layers: 99, // number of layers to store in GPU memory (Metal/OpenCL)
      // embedding: true, // use embedding
    })

    const stopWords = ['</s>', '<|end|>', '<|eot_id|>', '<|end_of_text|>', '<|im_end|>', '<|EOT|>', '<|END_OF_TURN_TOKEN|>', '<|end_of_turn|>', '<|endoftext|>']

    // Do chat completion
    const msgResult = await context.completion(
      {
        messages: [
          {
            role: 'system',
            content: 'This is a conversation between user and assistant, a friendly chatbot.',
          },
          {
            role: 'user',
            content: 'Hello!',
          },
        ],
        n_predict: 100,
        stop: stopWords,
        // ...other params
      },
      (data) => {
        // This is a partial completion callback
        const { token } = data
      },
    )
    console.log('Result:', msgResult.text)
    console.log('Timings:', msgResult.timings)

    // Or do text completion
    const textResult = await context.completion(
      {
        prompt: 'This is a conversation between user and llama, a friendly chatbot. respond in simple markdown.\n\nUser: Hello!\nLlama:',
        n_predict: 100,
        stop: [...stopWords, 'Llama:', 'User:'],
        // ...other params
      },
      (data) => {
        // This is a partial completion callback
        const { token } = data
      },
    )
    console.log('Result:', textResult.text)
    console.log('Timings:', textResult.timings)

The binding's design is inspired by the server.cpp example in llama.cpp:

- /completion and /chat/completions: context.completion(params, partialCompletionCallback)

- /tokenize: context.tokenize(content)

- /detokenize: context.detokenize(tokens)

- /embedding: context.embedding(content)

- /rerank: context.rerank(query, documents, params)

- ... Other methods

Please visit the Documentation for more details.

You can also visit the example to see how to use it.
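The partial completion callback receives one `data` object per generated token, as in the completion examples above. One way to use it is to accumulate tokens so a UI can be updated incrementally. This is a sketch that assumes only that `data.token` is a string; `createTokenCollector` is a hypothetical helper, not a llama.rn API.

```javascript
// Hypothetical helper: builds a callback that accumulates streamed tokens.
function createTokenCollector(onUpdate) {
  let text = ''
  return {
    // Pass this as the second argument to context.completion(...)
    callback: (data) => {
      text += data.token
      if (onUpdate) onUpdate(text)
    },
    getText: () => text,
  }
}

// Simulated stream standing in for real completion callbacks
const collector = createTokenCollector((partial) => console.log(partial))
for (const token of ['Hel', 'lo', '!']) {
  collector.callback({ token })
}
console.log(collector.getText()) // prints "Hello!"
```

Keeping the accumulated text inside a closure means the callback needs no shared state beyond the collector itself.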

llama.rn supports multimodal capabilities including vision (images) and audio processing. This allows you to interact with models that can understand both text and media content.

Images (Vision):

- JPEG, PNG, BMP, GIF, TGA, HDR, PIC, PNM

- Base64 encoded images (data URLs)

- Local file paths

- HTTP URLs are not supported yet

Audio:

- WAV, MP3 formats

- Base64 encoded audio (data URLs)

- Local file paths

- HTTP URLs are not supported yet

First, you need a multimodal model and its corresponding multimodal projector (mmproj) file; see how to obtain mmproj for more details.

    import { initLlama } from 'llama.rn'

    // First initialize the model context
    const context = await initLlama({
      model: 'path/to/your/multimodal-model.gguf',
      n_ctx: 4096,
      n_gpu_layers: 99, // Recommended for multimodal models
      // Important: Disable context shifting for multimodal
      ctx_shift: false,
    })

    // Initialize multimodal support with mmproj file
    const success = await context.initMultimodal({
      path: 'path/to/your/mmproj-model.gguf',
      use_gpu: true, // Recommended for better performance
    })

    // Check if multimodal is enabled
    console.log('Multimodal enabled:', await context.isMultimodalEnabled())

    if (success) {
      console.log('Multimodal support initialized!')

      // Check what modalities are supported
      const support = await context.getMultimodalSupport()
      console.log('Vision support:', support.vision)
      console.log('Audio support:', support.audio)
    } else {
      console.log('Failed to initialize multimodal support')
    }

    // Release multimodal context
    await context.releaseMultimodal()

Usage with images (vision):

    const result = await context.completion({
      messages: [
        {
          role: 'user',
          content: [
            {
              type: 'text',
              text: 'What do you see in this image?',
            },
            {
              type: 'image_url',
              image_url: {
                url: 'file:///path/to/image.jpg',
                // or base64: 'data:image/jpeg;base64,/9j/4AAQSkZJRgABAQEAYABgAAD...'
              },
            },
          ],
        },
      ],
      n_predict: 100,
      temperature: 0.1,
    })
    console.log('AI Response:', result.text)

Usage with audio:

    // Method 1: Using structured message content (Recommended)
    const result = await context.completion({
      messages: [
        {
          role: 'user',
          content: [
            {
              type: 'text',
              text: 'Transcribe or describe this audio:',
            },
            {
              type: 'input_audio',
              input_audio: {
                data: 'data:audio/wav;base64,UklGRiQAAABXQVZFZm10...',
                // or url: 'file:///path/to/audio.wav',
                format: 'wav', // or 'mp3'
              },
            },
          ],
        },
      ],
      n_predict: 200,
    })
    console.log('Transcription:', result.text)

Tokenization with media:

    // Tokenize text with media
    const tokenizeResult = await context.tokenize(
      'Describe this image: <__media__>',
      {
        media_paths: ['file:///path/to/image.jpg']
      }
    )
    console.log('Tokens:', tokenizeResult.tokens)
    console.log('Has media:', tokenizeResult.has_media)
    console.log('Media positions:', tokenizeResult.chunk_pos_media)

- Context Shifting: Multimodal models require ctx_shift: false to maintain media token positioning

- Memory: Multimodal models require more memory; use adequate n_ctx and consider GPU offloading

- Media Markers: The system automatically handles <__media__> markers in prompts. When using structured message content, media items are automatically replaced with this marker

- Model Compatibility: Ensure your model supports the media type you're trying to process

llama.rn has universal tool call support by using minja (as Jinja template parser) and chat.cpp in llama.cpp.

Example:

    import { initLlama } from 'llama.rn'

    const context = await initLlama({
      // ...params
    })

    const { text, tool_calls } = await context.completion({
      // ...params
      jinja: true, // Enable Jinja template parser
      tool_choice: 'auto',
      tools: [
        {
          type: 'function',
          function: {
            name: 'ipython',
            description:
              'Runs code in an ipython interpreter and returns the result of the execution after 60 seconds.',
            parameters: {
              type: 'object',
              properties: {
                code: {
                  type: 'string',
                  description: 'The code to run in the ipython interpreter.',
                },
              },
              required: ['code'],
            },
          },
        },
      ],
      messages: [
        {
          role: 'system',
          content: 'You are a helpful assistant that can answer questions and help with tasks.',
        },
        {
          role: 'user',
          content: 'Test',
        },
      ],
    })
    console.log('Result:', text)

    // If tool_calls is not empty, it means the model has called the tool
    if (tool_calls) console.log('Tool Calls:', tool_calls)

You can check chat.cpp for models that have native tool calling support; otherwise it will fall back to the GENERIC type tool call.

A generic tool call always outputs a JSON object. When the model decides not to call a tool, the output looks like {"response": "..."}.
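Because the generic fallback produces JSON text, application code may want to tell a plain reply apart from anything else. The sketch below relies only on the `{"response": "..."}` shape described above. `parseGenericOutput` is a hypothetical helper, and the shape of an actual tool call in generic mode is deliberately not assumed here; the `tool_calls` field returned by `completion` is the better source for that.

```javascript
// Hypothetical helper: interpret the text of a GENERIC-type completion.
function parseGenericOutput(text) {
  let parsed
  try {
    parsed = JSON.parse(text)
  } catch (err) {
    // Not valid JSON (for example, truncated output): hand the raw text back
    return { kind: 'raw', text }
  }
  if (parsed !== null && typeof parsed === 'object' && typeof parsed.response === 'string') {
    return { kind: 'response', text: parsed.response }
  }
  // Valid JSON, but not a plain response; leave interpretation to the caller
  return { kind: 'other', value: parsed }
}

console.log(parseGenericOutput('{"response": "Hello!"}'))
// → { kind: 'response', text: 'Hello!' }
```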

GBNF (GGML BNF) is a format for defining formal grammars to constrain model outputs in llama.cpp. For example, you can use it to force the model to generate valid JSON, or speak only in emojis.

You can see GBNF Guide for more details.

llama.rn provides a built-in function to convert JSON Schema to GBNF.

Example gbnf grammar:

    root ::= object

    value ::= object | array | string | number | ("true" | "false" | "null") ws

    object ::=
      "{" ws (
        string ":" ws value
        ("," ws string ":" ws value)*
      )? "}" ws

    array ::=
      "[" ws (
        value
        ("," ws value)*
      )? "]" ws

    string ::=
      "\"" (
        [^"\\\x7F\x00-\x1F] |
        "\\" (["\\bfnrt] | "u" [0-9a-fA-F]{4}) # escapes
      )* "\"" ws

    number ::= ("-"? ([0-9] | [1-9] [0-9]{0,15})) ("." [0-9]+)? ([eE] [-+]? [0-9] [1-9]{0,15})? ws

    # Optional space: by convention, applied in this grammar after literal chars when allowed
    ws ::= | " " | "\n" [ \t]{0,20}

Pass the grammar when initializing the context:

    import { initLlama } from 'llama.rn'

    const gbnf = '...'

    const context = await initLlama({
      // ...params
      grammar: gbnf,
    })

    const { text } = await context.completion({
      // ...params
      messages: [
        {
          role: 'system',
          content: 'You are a helpful assistant that can answer questions and help with tasks.',
        },
        {
          role: 'user',
          content: 'Test',
        },
      ],
    })
    console.log('Result:', text)

This is also how json_schema works in response_format during completion: it converts the json_schema to a GBNF grammar.

The session file is a binary file that contains the state of the context; loading it can save prompt processing time.

    const context = await initLlama({ ...params })

    // After prompt processing or completion ...

    // Save the session
    await context.saveSession('<path to save session>')

    // Load the session
    await context.loadSession('<path to load session>')

- Saving state from a multimodal context is not currently supported, so the session only stores the text chunk before the first media chunk.

The embedding API is used to get the embedding of a text.

    const context = await initLlama({
      ...params,
      embedding: true,
    })

    const { embedding } = await context.embedding('Hello, world!')

- You can use a model like nomic-ai/nomic-embed-text-v1.5-GGUF for better embedding quality.

- You can use a database like op-sqlite with sqlite-vec support to store and search embeddings.
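For small collections, embeddings can also be compared directly in JavaScript, and cosine similarity is a common choice. This is a minimal sketch that assumes `embedding` is a plain array of numbers, as destructured above; `cosineSimilarity` is a hypothetical helper, not a llama.rn API.

```javascript
// Hypothetical helper: cosine similarity between two embedding vectors.
function cosineSimilarity(a, b) {
  if (a.length !== b.length) throw new Error('Embeddings must have the same length')
  let dot = 0
  let normA = 0
  let normB = 0
  for (let i = 0; i < a.length; i += 1) {
    dot += a[i] * b[i]
    normA += a[i] * a[i]
    normB += b[i] * b[i]
  }
  // A zero vector has no direction; treat it as unrelated to everything
  if (normA === 0 || normB === 0) return 0
  return dot / (Math.sqrt(normA) * Math.sqrt(normB))
}

console.log(cosineSimilarity([1, 0], [1, 0])) // prints 1
console.log(cosineSimilarity([1, 0], [0, 1])) // prints 0
```

With real data, the two arguments would be the `embedding` arrays returned by two `context.embedding(...)` calls on the same model.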

The rerank API is used to rank documents based on their relevance to a query. This is particularly useful for improving search results and implementing retrieval-augmented generation (RAG) systems.

    const context = await initLlama({
      ...params,
      embedding: true, // Required for reranking
      pooling_type: 'rank', // Use rank pooling for rerank models
    })

    // Rerank documents based on relevance to query
    const results = await context.rerank(
      'What is artificial intelligence?', // query
      [
        'AI is a branch of computer science.',
        'The weather is nice today.',
        'Machine learning is a subset of AI.',
        'I like pizza.',
      ], // documents to rank
      {
        normalize: 1, // Optional: normalize scores (default: from model config)
      }
    )

    // Results are automatically sorted by score (highest first)
    results.forEach((result, index) => {
      console.log(`Rank ${index + 1}:`, {
        score: result.score,
        document: result.document,
        originalIndex: result.index,
      })
    })

- Model Requirements: Reranking requires models with RANK pooling type (e.g., reranker models)

- Embedding Enabled: The context must have embedding: true to use rerank functionality

- Automatic Sorting: Results are returned sorted by relevance score in descending order

- Document Access: Each result includes the original document text and its index in the input array

- Score Interpretation: Higher scores indicate higher relevance to the query

- jinaai - jina-reranker-v2-base-multilingual-GGUF

- BAAI - bge-reranker-v2-m3-GGUF

- Other models with "rerank" or "reranker" in their name and GGUF format
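In a RAG pipeline, the sorted rerank results are often trimmed before being placed into a prompt. The sketch below assumes each result carries the `score`, `document`, and `index` fields used in the rerank example above. `selectTopDocuments` and its `minScore` threshold are hypothetical, and the scores in the sample data are made up for illustration.

```javascript
// Hypothetical helper: keep at most `k` documents scoring at least `minScore`.
function selectTopDocuments(results, k, minScore = -Infinity) {
  return [...results]
    .sort((a, b) => b.score - a.score) // defensive re-sort; input should already be sorted
    .filter((r) => r.score >= minScore)
    .slice(0, k)
    .map((r) => r.document)
}

// Hand-written scores, for illustration only
const sample = [
  { score: 0.92, document: 'AI is a branch of computer science.', index: 0 },
  { score: 0.85, document: 'Machine learning is a subset of AI.', index: 2 },
  { score: 0.03, document: 'I like pizza.', index: 3 },
]
console.log(selectTopDocuments(sample, 2, 0.5))
```

Copying the array before sorting leaves the original `results` untouched, so `result.index` lookups elsewhere keep working.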

We provide a mock version of llama.rn for testing, which you can use with Jest:

    jest.mock('llama.rn', () => require('llama.rn/jest/mock'))

iOS:

- Enabling the Extended Virtual Addressing and Increased Memory Limit capabilities is recommended for iOS projects.

- Metal:

  - Our testing shows that some devices cannot use Metal (GPU) because llama.cpp uses SIMD-scoped operations. You can check whether your device is supported in the Metal feature set tables; an Apple7 GPU is the minimum requirement.

  - It is also not supported in the iOS simulator due to this limitation: we use more than 14 constant buffers.

Android:

- Currently only the arm64-v8a and x86_64 platforms are supported, which means you can't initialize a context on other platforms. 64-bit platforms are recommended because they can allocate more memory for the model.

- The OpenCL backend is supported, but it is currently limited to Qualcomm Adreno GPUs and the Q4_0 / Q6_K data types. Please check OpenCL backend for more details.

See the contributing guide to learn how to contribute to the repository and the development workflow.

- BRICKS: Our product for building interactive signage in a simple way. We provide LLM functions as Generator LLM/Assistant.

- ChatterUI: Simple frontend for LLMs built in react-native.

- PocketPal AI: An app that brings language models directly to your phone.

- llama.node: Another Node.js binding of llama.cpp, with the same API as llama.rn.

MIT

Made with create-react-native-library

Built and maintained by BRICKS.


Alternative AI tools for llama.rn

Similar Open Source Tools

llama.rn

React Native binding of llama.cpp, which is an inference of LLaMA model in pure C/C++. This tool allows you to use the LLaMA model in your React Native applications for various tasks such as text completion, tokenization, detokenization, and embedding. It provides a convenient interface to interact with the LLaMA model and supports features like grammar sampling and mocking for testing purposes.

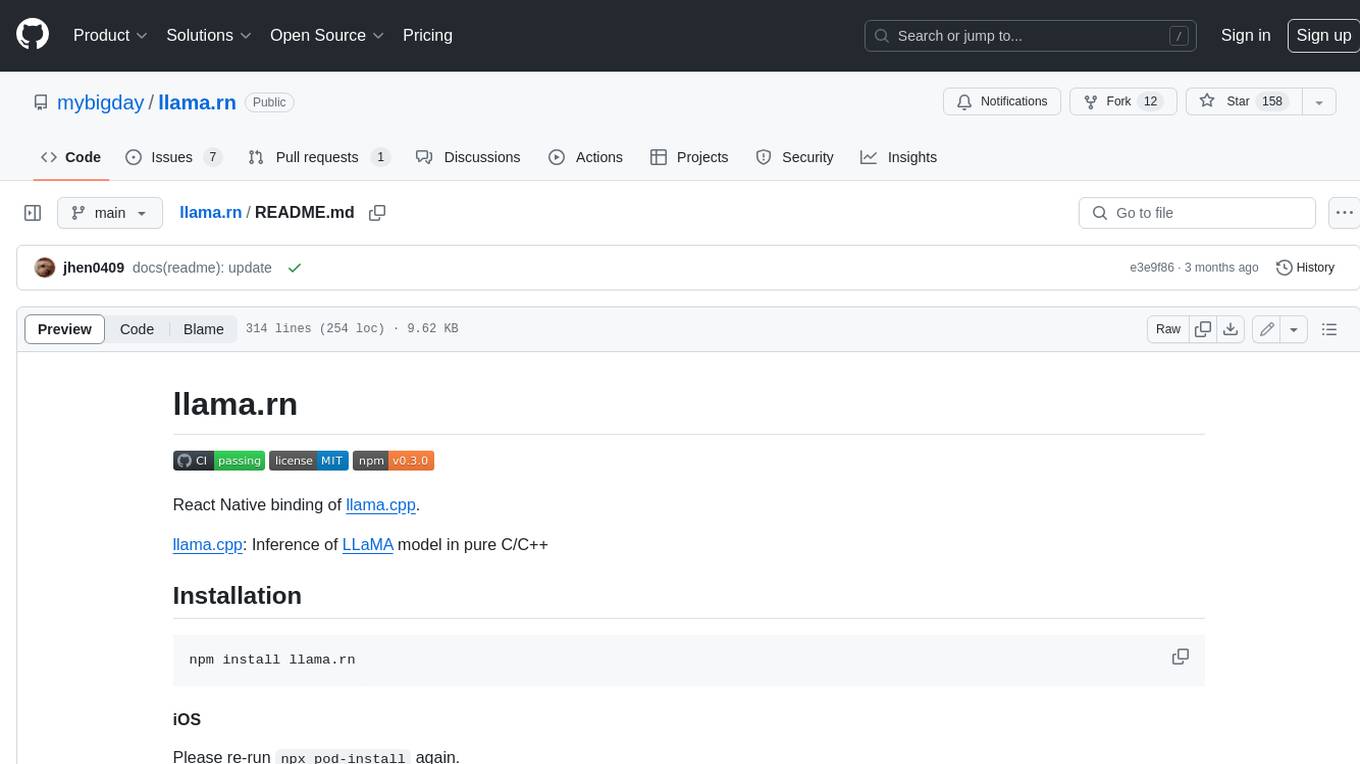

awesome-VLLMs

This repository contains a collection of pre-trained Very Large Language Models (VLLMs) that can be used for various natural language processing tasks. The models are fine-tuned on large text corpora and can be easily integrated into existing NLP pipelines for tasks such as text generation, sentiment analysis, and language translation. The repository also provides code examples and tutorials to help users get started with using these powerful language models in their projects.

MaiBot

MaiBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, with LLM providing conversation abilities, MongoDB for data persistence support, and NapCat as the QQ protocol endpoint support. The project is in active development stage, with features like chat functionality, emoji functionality, schedule management, memory function, knowledge base function, and relationship function planned for future updates. The project aims to create a 'life form' active in QQ group chats, focusing on companionship and creating a more human-like presence rather than a perfect assistant. The application generates content from AI models, so users are advised to discern carefully and not use it for illegal purposes.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

MoonshotAI-Cookbook

The MoonshotAI-Cookbook provides example code and guides for accomplishing common tasks with the MoonshotAI API. To run these examples, you'll need an MoonshotAI account and associated API key. Most code examples are written in Python, though the concepts can be applied in any language.

llm-universe

This project is a tutorial on developing large model applications for novice developers. It aims to provide a comprehensive introduction to large model development, focusing on Alibaba Cloud servers and integrating personal knowledge assistant projects. The tutorial covers the following topics: 1. **Introduction to Large Models**: A simplified introduction for novice developers on what large models are, their characteristics, what LangChain is, and how to develop an LLM application. 2. **How to Call Large Model APIs**: This section introduces various methods for calling APIs of well-known domestic and foreign large model products, including calling native APIs, encapsulating them as LangChain LLMs, and encapsulating them as Fastapi calls. It also provides a unified encapsulation for various large model APIs, such as Baidu Wenxin, Xunfei Xinghuo, and Zh譜AI. 3. **Knowledge Base Construction**: Loading, processing, and vector database construction of different types of knowledge base documents. 4. **Building RAG Applications**: Integrating LLM into LangChain to build a retrieval question and answer chain, and deploying applications using Streamlit. 5. **Verification and Iteration**: How to implement verification and iteration in large model development, and common evaluation methods. The project consists of three main parts: 1. **Introduction to LLM Development**: A simplified version of V1 aims to help beginners get started with LLM development quickly and conveniently, understand the general process of LLM development, and build a simple demo. 2. **LLM Development Techniques**: More advanced LLM development techniques, including but not limited to: Prompt Engineering, processing of multiple types of source data, optimizing retrieval, recall ranking, Agent framework, etc. 3. 
**LLM Application Examples**: Introduce some successful open source cases, analyze the ideas, core concepts, and implementation frameworks of these application examples from the perspective of this course, and help beginners understand what kind of applications they can develop through LLM. Currently, the first part has been completed, and everyone is welcome to read and learn; the second and third parts are under creation. **Directory Structure Description**: requirements.txt: Installation dependencies in the official environment notebook: Notebook source code file docs: Markdown documentation file figures: Pictures data_base: Knowledge base source file used

LLMs-playground

LLMs-playground is a repository containing code examples and tutorials for learning and experimenting with Large Language Models (LLMs). It provides a hands-on approach to understanding how LLMs work and how to fine-tune them for specific tasks. The repository covers various LLM architectures, pre-training techniques, and fine-tuning strategies, making it a valuable resource for researchers, students, and practitioners interested in natural language processing and machine learning. By exploring the code and following the tutorials, users can gain practical insights into working with LLMs and apply their knowledge to real-world projects.

llm_recipes

This repository showcases the author's experiments with Large Language Models (LLMs) for text generation tasks. It includes dataset preparation, preprocessing, model fine-tuning using libraries such as Axolotl and HuggingFace, and model evaluation.

Main

This repository contains material related to the new book _Synthetic Data and Generative AI_ by the author, including code for NoGAN, DeepResampling, and NoGAN_Hellinger. NoGAN is a tabular data synthesizer that outperforms GenAI methods in terms of speed and results, utilizing state-of-the-art quality metrics. DeepResampling is a fast NoGAN based on resampling and Bayesian Models with hyperparameter auto-tuning. NoGAN_Hellinger combines NoGAN and DeepResampling with the Hellinger model evaluation metric.

NeMo-Guardrails

NeMo Guardrails is an open-source toolkit for easily adding _programmable guardrails_ to LLM-based conversational applications. Guardrails (or "rails" for short) are specific ways of controlling the output of a large language model, such as not talking about politics, responding in a particular way to specific user requests, following a predefined dialog path, using a particular language style, extracting structured data, and more.

llms-from-scratch-rs

This project provides Rust code that follows the text 'Build An LLM From Scratch' by Sebastian Raschka. It translates PyTorch code into Rust using the Candle crate, aiming to build a GPT-style LLM. Users can clone the repo, run examples/exercises, and access the same datasets as in the book. The project includes chapters on understanding large language models, working with text data, coding attention mechanisms, implementing a GPT model, pretraining unlabeled data, fine-tuning for classification, and fine-tuning to follow instructions.

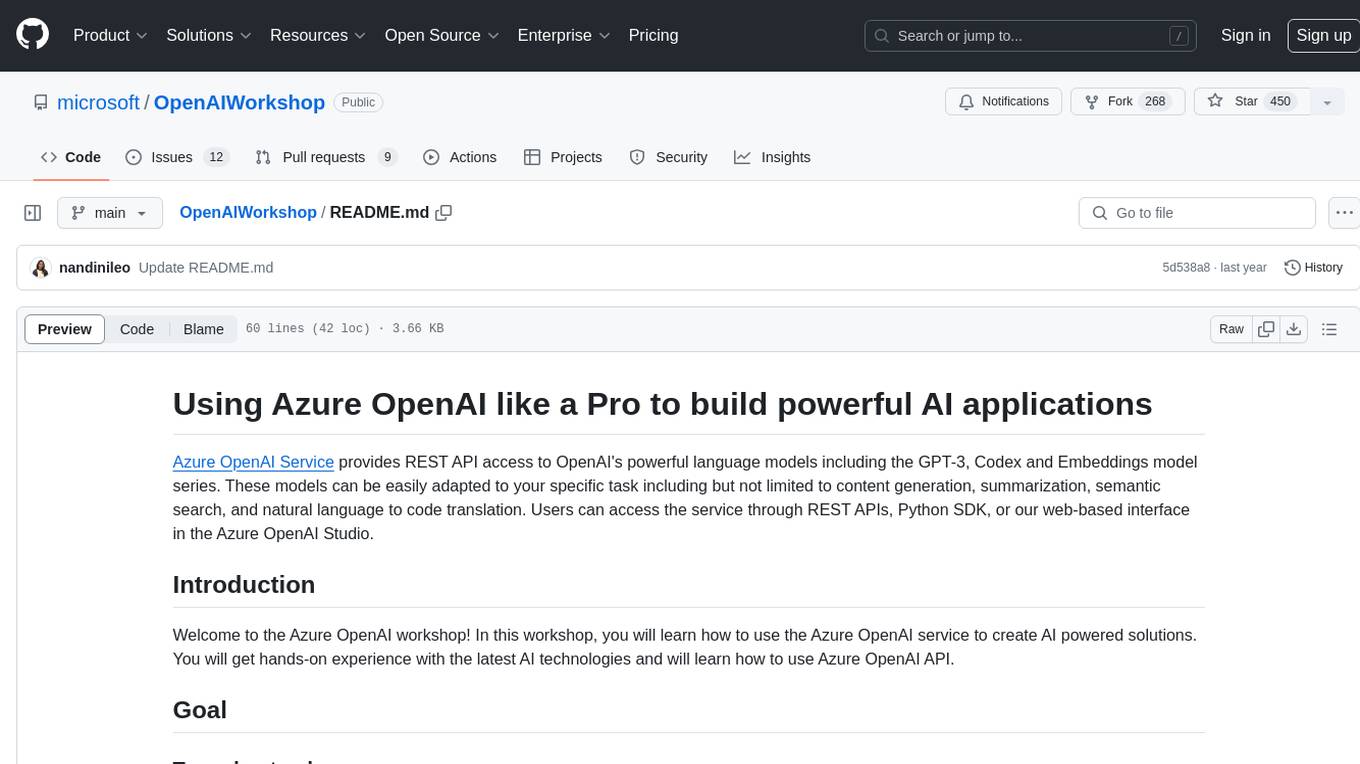

OpenAIWorkshop

Azure OpenAI Service provides REST API access to OpenAI's powerful language models including GPT-3, Codex and Embeddings. Users can easily adapt models for content generation, summarization, semantic search, and natural language to code translation. The workshop covers basics, prompt engineering, common NLP tasks, generative tasks, conversational dialog, and learning methods. It guides users to build applications with PowerApp, query SQL data, create data pipelines, and work with proprietary datasets. Target audience includes Power Users, Software Engineers, Data Scientists, and AI architects and Managers.

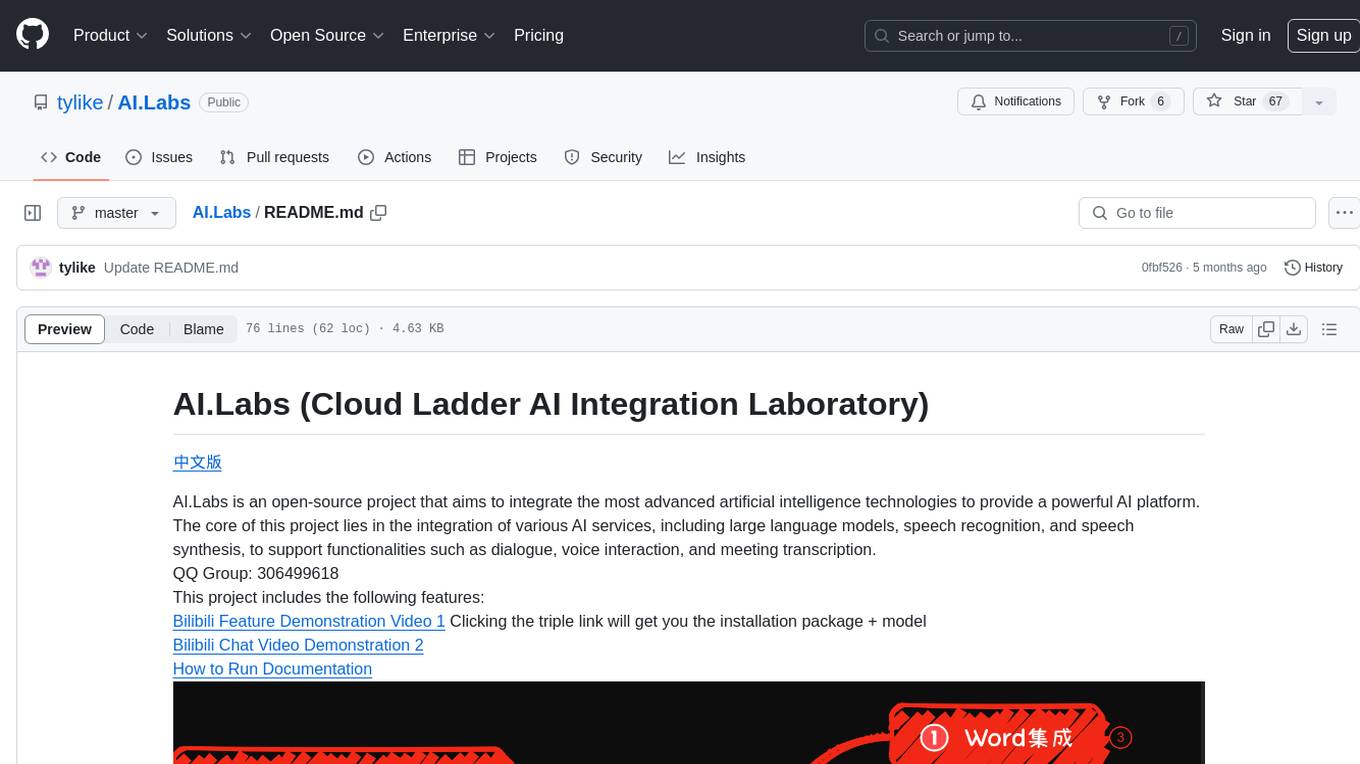

AI.Labs

AI.Labs is an open-source project that integrates advanced artificial intelligence technologies to create a powerful AI platform. It focuses on integrating AI services like large language models, speech recognition, and speech synthesis for functionalities such as dialogue, voice interaction, and meeting transcription. The project also includes features like a large language model dialogue system, speech recognition for meeting transcription, speech-to-text voice synthesis, integration of translation and chat, and uses technologies like C#, .Net, SQLite database, XAF, OpenAI API, TTS, and STT.

helix

HelixML is a private GenAI platform that allows users to deploy the best of open AI in their own data center or VPC while retaining complete data security and control. It includes support for fine-tuning models with drag-and-drop functionality. HelixML brings the best of open source AI to businesses in an ergonomic and scalable way, optimizing the tradeoff between GPU memory and latency.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

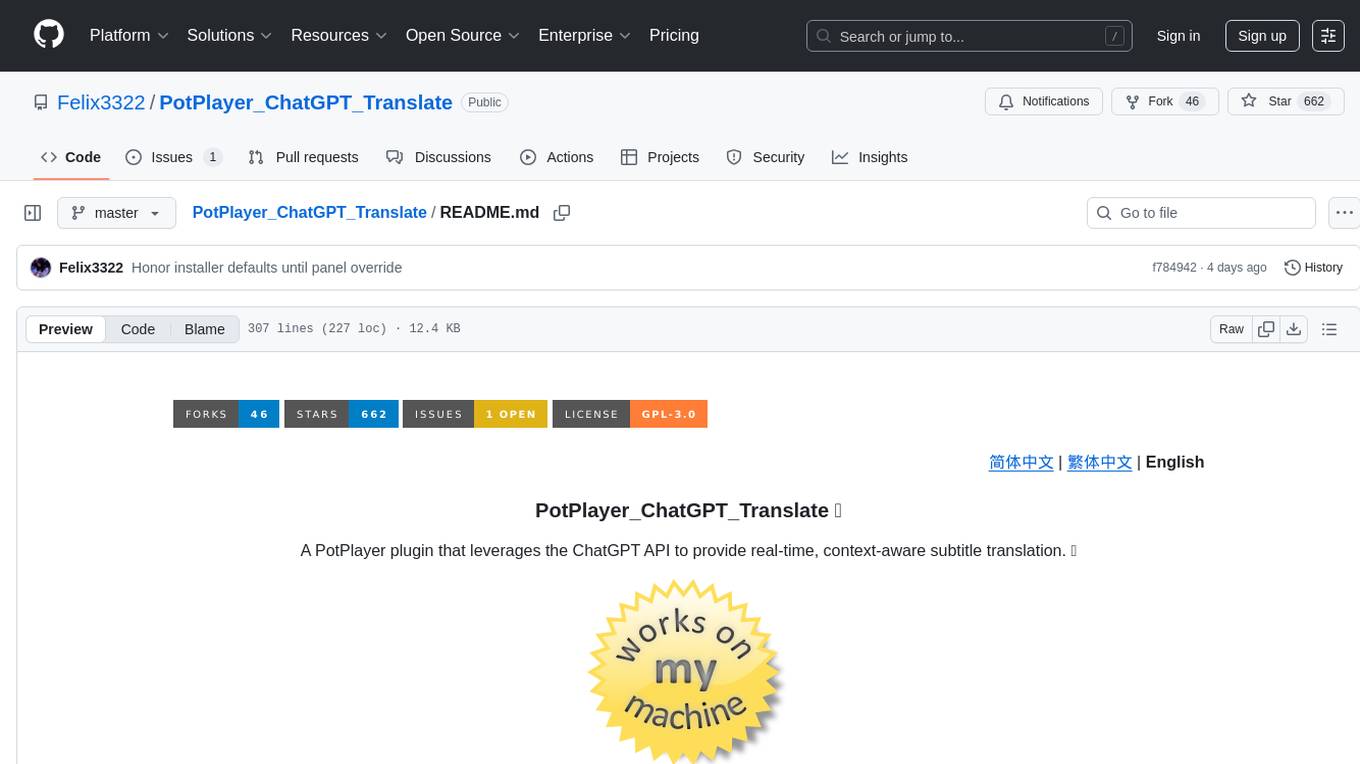

PotPlayer_ChatGPT_Translate

PotPlayer_ChatGPT_Translate is a GitHub repository that provides a script to integrate ChatGPT with PotPlayer for real-time translation of chat messages during video playback. The script utilizes the power of ChatGPT's natural language processing capabilities to translate chat messages in various languages, enhancing the viewing experience for users who consume video content with subtitles or chat interactions. By seamlessly integrating ChatGPT with PotPlayer, this tool offers a convenient solution for users to enjoy multilingual content without the need for manual translation efforts. The repository includes detailed instructions on how to set up and use the script, making it accessible for both novice and experienced users interested in leveraging AI-powered translation services within the PotPlayer environment.

For similar tasks

llama.rn

React Native binding of llama.cpp, which is an inference of LLaMA model in pure C/C++. This tool allows you to use the LLaMA model in your React Native applications for various tasks such as text completion, tokenization, detokenization, and embedding. It provides a convenient interface to interact with the LLaMA model and supports features like grammar sampling and mocking for testing purposes.

For similar jobs

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy 
Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

ollama

Ollama is a lightweight, extensible framework for building and running language models on the local machine. It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that can be easily used in a variety of applications. Ollama is designed to be easy to use and accessible to developers of all levels. It is open source and available for free on GitHub.

llama-cpp-agent

The llama-cpp-agent framework is a tool designed for easy interaction with Large Language Models (LLMs). Allowing users to chat with LLM models, execute structured function calls and get structured output (objects). It provides a simple yet robust interface and supports llama-cpp-python and OpenAI endpoints with GBNF grammar support (like the llama-cpp-python server) and the llama.cpp backend server. It works by generating a formal GGML-BNF grammar of the user defined structures and functions, which is then used by llama.cpp to generate text valid to that grammar. In contrast to most GBNF grammar generators it also supports nested objects, dictionaries, enums and lists of them.

llama_ros

This repository provides a set of ROS 2 packages to integrate llama.cpp into ROS 2. By using the llama_ros packages, you can easily incorporate the powerful optimization capabilities of llama.cpp into your ROS 2 projects by running GGUF-based LLMs and VLMs.

MITSUHA

OneReality is a virtual waifu/assistant that you can speak to through your mic and it'll speak back to you! It has many features such as: * You can speak to her with a mic * It can speak back to you * Has short-term memory and long-term memory * Can open apps * Smarter than you * Fluent in English, Japanese, Korean, and Chinese * Can control your smart home like Alexa if you set up Tuya (more info in Prerequisites) It is built with Python, Llama-cpp-python, Whisper, SpeechRecognition, PocketSphinx, VITS-fast-fine-tuning, VITS-simple-api, HyperDB, Sentence Transformers, and Tuya Cloud IoT.

wenxin-starter

WenXin-Starter is a spring-boot-starter for Baidu's "Wenxin Qianfan WENXINWORKSHOP" large model, which can help you quickly access Baidu's AI capabilities. It fully integrates the official API documentation of Wenxin Qianfan. Supports text-to-image generation, built-in dialogue memory, and supports streaming return of dialogue. Supports QPS control of a single model and supports queuing mechanism. Plugins will be added soon.

FlexFlow

FlexFlow Serve is an open-source compiler and distributed system for **low latency**, **high performance** LLM serving. FlexFlow Serve outperforms existing systems by 1.3-2.0x for single-node, multi-GPU inference and by 1.4-2.4x for multi-node, multi-GPU inference.