gp.nvim

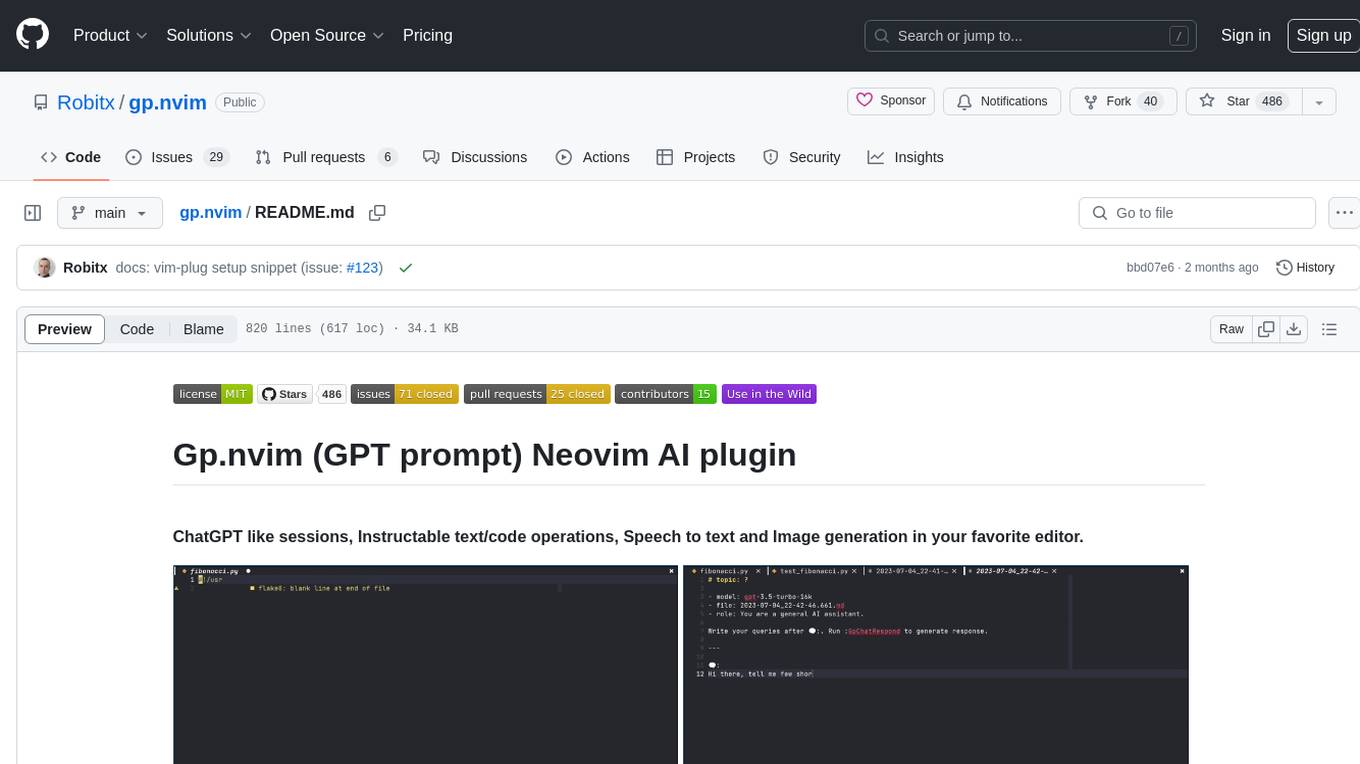

Gp.nvim (GPT prompt) Neovim AI plugin: ChatGPT sessions & Instructable text/code operations & Speech to text [OpenAI, Ollama, Anthropic, ..]


Gp.nvim (GPT prompt) Neovim AI plugin provides a seamless integration of GPT models into Neovim, offering features like streaming responses, extensibility via hook functions, minimal dependencies, ChatGPT-like sessions, instructable text/code operations, speech-to-text support, and image generation directly within Neovim. The plugin aims to enhance the Neovim experience by leveraging the power of AI models in a user-friendly and native way.

README:

ChatGPT-like sessions, instructable text/code operations, speech to text and image generation in your favorite editor.

- 5-min-demo (December 2023)

- older-5-min-demo (screen capture, no sound)

The goal is to extend Neovim with the power of GPT models in a simple, unobtrusive, extensible way.

Trying to keep things as native as possible - reusing and integrating well with the natural features of (Neo)vim.

- Streaming responses
  - no spinner wheel and no waiting for the full answer
  - response generation can be canceled halfway through
  - properly working undo (a response can be undone with a single press of u)
- Infinitely extensible via hook functions specified as part of the config
  - hooks have access to everything in the plugin and are automatically registered as commands
  - see the 5. Configuration and Extend functionality sections for details
- Minimum dependencies (neovim, curl, grep and optionally sox)
  - zero dependencies on other Lua plugins to minimize the chance of breakage
- ChatGPT-like sessions
  - just good old Neovim buffers formatted as markdown, with autosave and a few buffer-bound shortcuts
  - the last chat is also quickly accessible via a toggleable popup window
  - chat finder: a management popup for searching, previewing, deleting and opening chat sessions
- Instructable text/code operations
  - templating mechanism to combine user instructions, selections etc. into the GPT query
  - multimodal: the same command works for normal/insert mode, with a selection or a range
  - many possible output targets: rewrite, prepend, append, new buffer, popup
  - non-interactive command mode available for common repetitive tasks implementable as simple hooks (explain something in a popup window, write unit tests for selected code into a new buffer, finish selected code based on comments in it, etc.)
  - custom instructions per repository with a .gp.md file (instruct GPT to generate code using certain libs, packages, conventions and so on)
- Speech to text support
  - a mouth is 2-4x faster than fingers when it comes to outputting words, so use it where it makes sense (dictating comments and notes, asking GPT questions, giving instructions for code operations, ..)
- Image generation
  - be even less tempted to open the browser with the ability to generate images directly from Neovim

Snippets for your preferred package manager:

-- lazy.nvim
{
    "robitx/gp.nvim",
    config = function()
        local conf = {
            -- For customization, refer to Install > Configuration in the Documentation/Readme
        }
        require("gp").setup(conf)

        -- Setup shortcuts here (see Usage > Shortcuts in the Documentation/Readme)
    end,
}

-- packer.nvim
use({
    "robitx/gp.nvim",
    config = function()
        local conf = {
            -- For customization, refer to Install > Configuration in the Documentation/Readme
        }
        require("gp").setup(conf)

        -- Setup shortcuts here (see Usage > Shortcuts in the Documentation/Readme)
    end,
})

-- vim-plug
Plug 'robitx/gp.nvim'

local conf = {
    -- For customization, refer to Install > Configuration in the Documentation/Readme
}
require("gp").setup(conf)

-- Setup shortcuts here (see Usage > Shortcuts in the Documentation/Readme)

Make sure you have an OpenAI API key. Get one here and use it in 4. Configuration. Also consider setting up usage limits so you won't get surprised at the end of the month.

The OpenAI API key can be passed to the plugin in multiple ways:

| Method | Example | Security Level |
|---|---|---|
| hardcoded string | openai_api_key = "sk-...", | Low |
| default env var | set OPENAI_API_KEY environment variable in shell config | Medium |
| custom env var | openai_api_key = os.getenv("CUSTOM_ENV_NAME"), | Medium |
| read from file | openai_api_key = { "cat", "path_to_api_key" }, | Medium-High |
| password manager | openai_api_key = { "bw", "get", "password", "OAI_API_KEY" }, | High |

If openai_api_key is a table, Gp runs it asynchronously to avoid blocking Neovim (password managers can take a second or two).
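To picture the two accepted shapes, here is a minimal sketch of resolving a string-or-table secret. It assumes a blocking io.popen call for brevity, whereas Gp itself runs table commands asynchronously; resolve_secret, popen_runner and shell_quote are hypothetical helper names, not part of the plugin.

```lua
-- Hypothetical sketch (not Gp's code): turn an openai_api_key / secret value
-- into the key string. A string is used as-is; a table is treated as a command
-- plus arguments whose stdout is the key. Unlike Gp, this runs synchronously.

-- Quote one argument for a POSIX shell: wrap in '...' and escape inner quotes.
local function shell_quote(arg)
  return "'" .. (arg:gsub("'", "'\\''")) .. "'"
end

-- Default runner: execute argv through the shell and return its stdout.
local function popen_runner(argv)
  local quoted = {}
  for i, arg in ipairs(argv) do
    quoted[i] = shell_quote(arg)
  end
  local handle = assert(io.popen(table.concat(quoted, " ")))
  local output = handle:read("*a")
  handle:close()
  return output
end

-- `runner` is injectable so the logic can be exercised without a shell.
local function resolve_secret(secret, runner)
  if type(secret) == "string" then
    return secret
  elseif type(secret) == "table" then
    local output = (runner or popen_runner)(secret) or ""
    -- drop surrounding whitespace, e.g. the trailing newline printed by cat
    return (output:gsub("^%s+", ""):gsub("%s+$", ""))
  end
  return nil
end
```

For example, resolve_secret({ "cat", "path_to_api_key" }) would run cat on that path and return the key without its trailing newline.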

The following LLM providers are currently supported besides OpenAI:

- Ollama for local/offline open-source models. The plugin assumes you have the Ollama service up and running with configured models available (the default Ollama agent uses Llama3).

- GitHub Copilot with a Copilot license (zbirenbaum/copilot.lua or github/copilot.vim for autocomplete). You can access the underlying GPT-4 model without paying anything extra (essentially unlimited GPT-4 access).

- Perplexity.ai Pro users have $5/month free API credits available (the default PPLX agent uses Mixtral-8x7b).

- Anthropic to access Claude models, which currently outperform GPT-4 in some benchmarks.

- Google Gemini with a quite generous free tier but some geo-restrictions (EU).

- Any other "OpenAI chat/completions" compatible endpoint (Azure, LM Studio, etc.)

Below is an example of the relevant configuration part enabling some of these. The secret field has the same capabilities as openai_api_key (which is still supported for compatibility).

providers = {
    openai = {
        endpoint = "https://api.openai.com/v1/chat/completions",
        secret = os.getenv("OPENAI_API_KEY"),
    },

    -- azure = {...},

    copilot = {
        endpoint = "https://api.githubcopilot.com/chat/completions",
        secret = {
            "bash",
            "-c",
            "cat ~/.config/github-copilot/hosts.json | sed -e 's/.*oauth_token...//;s/\".*//'",
        },
    },

    pplx = {
        endpoint = "https://api.perplexity.ai/chat/completions",
        secret = os.getenv("PPLX_API_KEY"),
    },

    ollama = {
        endpoint = "http://localhost:11434/v1/chat/completions",
    },

    googleai = {
        endpoint = "https://generativelanguage.googleapis.com/v1beta/models/{{model}}:streamGenerateContent?key={{secret}}",
        secret = os.getenv("GOOGLEAI_API_KEY"),
    },

    anthropic = {
        endpoint = "https://api.anthropic.com/v1/messages",
        secret = os.getenv("ANTHROPIC_API_KEY"),
    },
},

Each of these providers has some agents preconfigured. Below is an example of how to disable the predefined ChatGPT3-5 agent and create a custom one. If the provider field is missing, OpenAI is assumed for backward compatibility.

agents = {
    {
        name = "ChatGPT3-5",
        disable = true,
    },
    {
        name = "MyCustomAgent",
        provider = "copilot",
        chat = true,
        command = true,
        model = { model = "gpt-4-turbo" },
        system_prompt = "Answer any query with just: Sure thing..",
    },
},
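The two override rules above (disable = true drops a predefined agent, and a missing provider means OpenAI) can be sketched as a merge of agent lists. merge_agents is a hypothetical helper, not Gp's implementation; it assumes agents are matched by name and that a later definition fully replaces an earlier one with the same name.

```lua
-- Hypothetical sketch: merge user agents over predefined ones, matched by name.
local function merge_agents(defaults, overrides)
  local by_name, order = {}, {}

  -- remember where each name first appeared; later definitions replace earlier ones
  local function add(agent)
    if by_name[agent.name] == nil then
      table.insert(order, agent.name)
    end
    by_name[agent.name] = agent
  end

  for _, agent in ipairs(defaults) do add(agent) end
  for _, agent in ipairs(overrides) do add(agent) end

  local result = {}
  for _, name in ipairs(order) do
    local agent = by_name[name]
    if not agent.disable then
      -- shallow copy so the caller's tables are not mutated
      local copy = {}
      for k, v in pairs(agent) do copy[k] = v end
      -- missing provider: fall back to OpenAI
      copy.provider = copy.provider or "openai"
      table.insert(result, copy)
    end
  end
  return result
end
```

The returned list keeps the original ordering of names, so a re-defined predefined agent stays in its original slot.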

The core plugin only needs curl installed to make calls to the OpenAI API and grep for ChatFinder, so Linux, BSD and Mac OS should be covered.

Voice commands (:GpWhisper*) depend on SoX (Sound eXchange) to handle audio recording and processing:

- Mac OS: brew install sox
- Ubuntu/Debian: apt-get install sox libsox-fmt-mp3
- Arch Linux: pacman -S sox
- Redhat/CentOS: yum install sox
- NixOS: nix-env -i sox

Below is a linked snippet with the default values, but I suggest starting with the minimal config possible (just openai_api_key if you don't have the OPENAI_API_KEY env variable set up). Defaults change over time to improve things, options might get deprecated and so on, so it's better to change only the things where the default doesn't fit your needs.

:GpChatNew

Open a fresh chat in the current window. It can be either empty or include the visual selection or specified range as context. This command also supports subcommands for layout specification:

- :GpChatNew vsplit opens a fresh chat in a vertical split window.
- :GpChatNew split opens a fresh chat in a horizontal split window.
- :GpChatNew tabnew opens a fresh chat in a new tab.
- :GpChatNew popup opens a fresh chat in a popup window.

:GpChatPaste

Paste the selection or specified range into the latest chat, simplifying the addition of code from multiple files into a single chat buffer. This command also supports subcommands for layout specification:

- :GpChatPaste vsplit pastes into the latest chat in a vertical split window.
- :GpChatPaste split pastes into the latest chat in a horizontal split window.
- :GpChatPaste tabnew pastes into the latest chat in a new tab.
- :GpChatPaste popup pastes into the latest chat in a popup window.

:GpChatToggle

Open chat in a toggleable popup window, showing the last active chat or a fresh one with the selection or a range as context. This command also supports subcommands for layout specification:

- :GpChatToggle vsplit toggles the chat in a vertical split window.
- :GpChatToggle split toggles the chat in a horizontal split window.
- :GpChatToggle tabnew toggles the chat in a new tab.
- :GpChatToggle popup toggles the chat in a popup window.

:GpChatFinder

Open a dialog to search through chats.

:GpChatRespond

Request a new GPT response for the current chat. Using :GpChatRespond N requests a new GPT response with only the last N messages as context, using everything from the end up to the Nth instance of 🗨:.. (N=1 is like asking a question in a new chat).
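One way to picture what N selects: walk the chat from the bottom, count lines that start with the 🗨: user prefix, and keep everything from the Nth one onward. The sketch below is a hypothetical illustration of that rule over a plain list of lines, not the plugin's own parser; it assumes each user message starts on its own line with 🗨:.

```lua
-- Hypothetical sketch: keep only the tail of a chat, starting at the
-- Nth-from-last line that begins with the user prefix "🗨:".
local USER_PREFIX = "🗨:"

local function last_n_messages(lines, n)
  local seen = 0
  for i = #lines, 1, -1 do
    -- byte-wise prefix comparison also works for the multi-byte emoji
    if lines[i]:sub(1, #USER_PREFIX) == USER_PREFIX then
      seen = seen + 1
      if seen == n then
        local tail = {}
        for j = i, #lines do
          tail[#tail + 1] = lines[j]
        end
        return tail
      end
    end
  end
  -- fewer than n user messages: fall back to the whole chat
  return lines
end
```

With N=1 only the last user message is kept, which matches the "like asking a question in a new chat" description.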

:GpChatDelete

Delete the current chat. By default it requires confirmation before deleting, which can be disabled in the config using chat_confirm_delete = false.

:GpRewrite

Opens a dialog for entering a prompt. After you provide prompt instructions in the dialog, the generated response replaces the current line in normal/insert mode, the selected lines in visual mode, or the specified range (e.g., :%GpRewrite applies the rewrite to the entire buffer).

:GpRewrite {prompt} executes directly with the specified {prompt} instructions, bypassing the dialog. This is suitable for mapping repetitive tasks to keyboard shortcuts or for automation using headless Neovim via terminal or shell scripts.

:GpAppend

Similar to :GpRewrite, but the answer is added after the current line, visual selection, or range.

:GpPrepend

Similar to :GpRewrite, but the answer is added before the current line, visual selection, or range.

:GpEnew

Similar to :GpRewrite, but the answer is added into a new buffer in the current window.

:GpNew

Similar to :GpRewrite, but the answer is added into a new horizontal split window.

:GpVnew

Similar to :GpRewrite, but the answer is added into a new vertical split window.

:GpTabnew

Similar to :GpRewrite, but the answer is added into a new tab.

:GpPopup

Similar to :GpRewrite, but the answer is added into a popup window.

:GpImplement

Example hook command to develop code from comments in a visual selection or specified range.

:GpContext

Provides custom context per repository:

- opens the .gp.md file for a given repository in a toggleable window
- appends the selection/range to the context file when used in visual/range mode
- also supports subcommands for layout specification:
  - :GpContext vsplit opens .gp.md in a vertical split window.
  - :GpContext split opens .gp.md in a horizontal split window.
  - :GpContext tabnew opens .gp.md in a new tab.
  - :GpContext popup opens .gp.md in a popup window.
- refer to Custom Instructions for more details

:GpWhisper {lang}

Transcription replaces the current line, visual selection or range in the current buffer. Use your mouth to ask a question in a chat buffer instead of writing it by hand, or dictate some comments for the code, notes or even your next novel.

For the rest of the Whisper commands, the transcription is used as an editable prompt for the equivalent non-Whisper command: GpWhisperRewrite dictates instructions for GpRewrite, etc.

You can override the default language by setting {lang} to the two-letter short name of your language (e.g. "en" for English, "fr" for French, etc.).

:GpWhisperRewrite

Similar to :GpRewrite, but the prompt instruction dialog uses transcribed spoken instructions.

:GpWhisperAppend

Similar to :GpAppend, but the prompt instruction dialog uses transcribed spoken instructions for adding content after the current line, visual selection, or range.

:GpWhisperPrepend

Similar to :GpPrepend, but the prompt instruction dialog uses transcribed spoken instructions for adding content before the current line, visual selection, or range.

:GpWhisperEnew

Similar to :GpEnew, but the prompt instruction dialog uses transcribed spoken instructions for opening content in a new buffer within the current window.

:GpWhisperNew

Similar to :GpNew, but the prompt instruction dialog uses transcribed spoken instructions for opening content in a new horizontal split window.

:GpWhisperVnew

Similar to :GpVnew, but the prompt instruction dialog uses transcribed spoken instructions for opening content in a new vertical split window.

:GpWhisperTabnew

Similar to :GpTabnew, but the prompt instruction dialog uses transcribed spoken instructions for opening content in a new tab.

:GpWhisperPopup

Similar to :GpPopup, but the prompt instruction dialog uses transcribed spoken instructions for displaying content in a popup window.

:GpNextAgent

Cycles between available agents based on the current buffer (chat agents if the current buffer is a chat and command agents otherwise). The agent setting is persisted on disk across Neovim instances.

:GpAgent

Displays the currently used agents for chat and command instructions.

:GpAgent XY

Choose a new agent based on its name, listing options based on the current buffer (chat agents if the current buffer is a chat and command agents otherwise). The agent setting is persisted on disk across Neovim instances.

:GpImage

Opens a dialog for entering a prompt describing the wanted images. When the generation is done, it opens a dialog for storing the image to disk.

:GpImageAgent

Displays the currently used image agent (configuration).

:GpImageAgent XY

Choose a new "image agent" based on its name. In the context of images, an agent is basically a configuration for model, image size, quality and so on. The agent setting is persisted on disk across Neovim instances.

:GpStop

Stops all currently running responses and jobs.

:GpInspectPlugin

Inspects the GPT prompt plugin object in a new scratch buffer.

Commands like GpRewrite, GpAppend etc. run asynchronously and generate the User event GpDone, so you can define an autocmd (like auto formatting) to run when gp finishes:

vim.api.nvim_create_autocmd({ "User" }, {
    pattern = { "GpDone" },
    callback = function(event)
        print("event fired:\n", vim.inspect(event))
        -- local b = event.buf
        -- DO something
    end,
})

By calling :GpContext you can create a .gp.md markdown file in the root of a repository. Commands such as :GpRewrite, :GpAppend etc. will respect the instructions provided in this file (this works better with GPT-4; GPT-3.5 doesn't always listen to system commands). For example:

Use C++17.

Use Testify library when writing Go tests.

Use Early return/Guard Clauses pattern to avoid excessive nesting.

...

Here is another example.

The GpDone event combined with .gp.md custom instructions makes it possible to run gp.nvim using headless (Neo)vim from a terminal or shell script, so you can let gp run edits across many files if you put it in a loop.

test file:

1
2
3
4
5

.gp.md file:

If user says hello, please respond with:

```
Ahoy there!
```

Calling gp.nvim from a terminal/script:

- the first command registers an autocommand to save and quit nvim when Gp is done
- the second jumps to the occurrence of something I want to rewrite/append/prepend to (in this case the number 3)
- the third selects the line
- the fourth calls the gp.nvim action

$ nvim --headless -c "autocmd User GpDone wq" -c "/3" -c "normal V" -c "GpAppend hello there" test

resulting test file:

1
2
3
Ahoy there!
4
5
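Putting the one-liner in a loop could look roughly like the Lua sketch below, which builds the same nvim --headless invocation per file and runs it with os.execute. headless_cmd and run_all are hypothetical names; the sketch assumes nvim is on PATH, a POSIX shell, and that the search pattern exists in every file (the missing-match case is not handled).

```lua
-- Quote one argument for a POSIX shell: wrap in '...' and escape inner quotes.
local function shell_quote(arg)
  return "'" .. (arg:gsub("'", "'\\''")) .. "'"
end

-- Build the headless invocation shown above for a single file.
local function headless_cmd(file, pattern, instruction)
  local parts = {
    "nvim", "--headless",
    "-c", shell_quote("autocmd User GpDone wq"), -- save and quit once Gp finishes
    "-c", shell_quote("/" .. pattern),           -- jump to the first match
    "-c", shell_quote("normal V"),               -- select that line
    "-c", shell_quote("GpAppend " .. instruction),
    shell_quote(file),
  }
  return table.concat(parts, " ")
end

-- Run the command for every file, one after another.
local function run_all(files, pattern, instruction)
  for _, file in ipairs(files) do
    os.execute(headless_cmd(file, pattern, instruction))
  end
end
```

For example, run_all({ "a.txt", "b.txt" }, "3", "hello there") would repeat the example edit on both files sequentially.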

There are no default global shortcuts to mess with your own config. Below are examples for you to adjust or just use directly.

You can use the good old vim.keymap.set and paste the following after the require("gp").setup(conf) call (or anywhere you keep shortcuts if you want them in one place).

local function keymapOptions(desc)
    return {
        noremap = true,
        silent = true,
        nowait = true,
        desc = "GPT prompt " .. desc,
    }
end

-- Chat commands
vim.keymap.set({"n", "i"}, "<C-g>c", "<cmd>GpChatNew<cr>", keymapOptions("New Chat"))
vim.keymap.set({"n", "i"}, "<C-g>t", "<cmd>GpChatToggle<cr>", keymapOptions("Toggle Chat"))
vim.keymap.set({"n", "i"}, "<C-g>f", "<cmd>GpChatFinder<cr>", keymapOptions("Chat Finder"))

vim.keymap.set("v", "<C-g>c", ":<C-u>'<,'>GpChatNew<cr>", keymapOptions("Visual Chat New"))
vim.keymap.set("v", "<C-g>p", ":<C-u>'<,'>GpChatPaste<cr>", keymapOptions("Visual Chat Paste"))
vim.keymap.set("v", "<C-g>t", ":<C-u>'<,'>GpChatToggle<cr>", keymapOptions("Visual Toggle Chat"))

vim.keymap.set({ "n", "i" }, "<C-g><C-x>", "<cmd>GpChatNew split<cr>", keymapOptions("New Chat split"))
vim.keymap.set({ "n", "i" }, "<C-g><C-v>", "<cmd>GpChatNew vsplit<cr>", keymapOptions("New Chat vsplit"))
vim.keymap.set({ "n", "i" }, "<C-g><C-t>", "<cmd>GpChatNew tabnew<cr>", keymapOptions("New Chat tabnew"))

vim.keymap.set("v", "<C-g><C-x>", ":<C-u>'<,'>GpChatNew split<cr>", keymapOptions("Visual Chat New split"))
vim.keymap.set("v", "<C-g><C-v>", ":<C-u>'<,'>GpChatNew vsplit<cr>", keymapOptions("Visual Chat New vsplit"))
vim.keymap.set("v", "<C-g><C-t>", ":<C-u>'<,'>GpChatNew tabnew<cr>", keymapOptions("Visual Chat New tabnew"))

-- Prompt commands
vim.keymap.set({"n", "i"}, "<C-g>r", "<cmd>GpRewrite<cr>", keymapOptions("Inline Rewrite"))
vim.keymap.set({"n", "i"}, "<C-g>a", "<cmd>GpAppend<cr>", keymapOptions("Append (after)"))
vim.keymap.set({"n", "i"}, "<C-g>b", "<cmd>GpPrepend<cr>", keymapOptions("Prepend (before)"))

vim.keymap.set("v", "<C-g>r", ":<C-u>'<,'>GpRewrite<cr>", keymapOptions("Visual Rewrite"))
vim.keymap.set("v", "<C-g>a", ":<C-u>'<,'>GpAppend<cr>", keymapOptions("Visual Append (after)"))
vim.keymap.set("v", "<C-g>b", ":<C-u>'<,'>GpPrepend<cr>", keymapOptions("Visual Prepend (before)"))
vim.keymap.set("v", "<C-g>i", ":<C-u>'<,'>GpImplement<cr>", keymapOptions("Implement selection"))

vim.keymap.set({"n", "i"}, "<C-g>gp", "<cmd>GpPopup<cr>", keymapOptions("Popup"))
vim.keymap.set({"n", "i"}, "<C-g>ge", "<cmd>GpEnew<cr>", keymapOptions("GpEnew"))
vim.keymap.set({"n", "i"}, "<C-g>gn", "<cmd>GpNew<cr>", keymapOptions("GpNew"))
vim.keymap.set({"n", "i"}, "<C-g>gv", "<cmd>GpVnew<cr>", keymapOptions("GpVnew"))
vim.keymap.set({"n", "i"}, "<C-g>gt", "<cmd>GpTabnew<cr>", keymapOptions("GpTabnew"))

vim.keymap.set("v", "<C-g>gp", ":<C-u>'<,'>GpPopup<cr>", keymapOptions("Visual Popup"))
vim.keymap.set("v", "<C-g>ge", ":<C-u>'<,'>GpEnew<cr>", keymapOptions("Visual GpEnew"))
vim.keymap.set("v", "<C-g>gn", ":<C-u>'<,'>GpNew<cr>", keymapOptions("Visual GpNew"))
vim.keymap.set("v", "<C-g>gv", ":<C-u>'<,'>GpVnew<cr>", keymapOptions("Visual GpVnew"))
vim.keymap.set("v", "<C-g>gt", ":<C-u>'<,'>GpTabnew<cr>", keymapOptions("Visual GpTabnew"))

vim.keymap.set({"n", "i"}, "<C-g>x", "<cmd>GpContext<cr>", keymapOptions("Toggle Context"))
vim.keymap.set("v", "<C-g>x", ":<C-u>'<,'>GpContext<cr>", keymapOptions("Visual Toggle Context"))

vim.keymap.set({"n", "i", "v", "x"}, "<C-g>s", "<cmd>GpStop<cr>", keymapOptions("Stop"))
vim.keymap.set({"n", "i", "v", "x"}, "<C-g>n", "<cmd>GpNextAgent<cr>", keymapOptions("Next Agent"))

-- optional Whisper commands with prefix <C-g>w
vim.keymap.set({"n", "i"}, "<C-g>ww", "<cmd>GpWhisper<cr>", keymapOptions("Whisper"))
vim.keymap.set("v", "<C-g>ww", ":<C-u>'<,'>GpWhisper<cr>", keymapOptions("Visual Whisper"))

vim.keymap.set({"n", "i"}, "<C-g>wr", "<cmd>GpWhisperRewrite<cr>", keymapOptions("Whisper Inline Rewrite"))
vim.keymap.set({"n", "i"}, "<C-g>wa", "<cmd>GpWhisperAppend<cr>", keymapOptions("Whisper Append (after)"))
vim.keymap.set({"n", "i"}, "<C-g>wb", "<cmd>GpWhisperPrepend<cr>", keymapOptions("Whisper Prepend (before)"))

vim.keymap.set("v", "<C-g>wr", ":<C-u>'<,'>GpWhisperRewrite<cr>", keymapOptions("Visual Whisper Rewrite"))
vim.keymap.set("v", "<C-g>wa", ":<C-u>'<,'>GpWhisperAppend<cr>", keymapOptions("Visual Whisper Append (after)"))
vim.keymap.set("v", "<C-g>wb", ":<C-u>'<,'>GpWhisperPrepend<cr>", keymapOptions("Visual Whisper Prepend (before)"))

vim.keymap.set({"n", "i"}, "<C-g>wp", "<cmd>GpWhisperPopup<cr>", keymapOptions("Whisper Popup"))
vim.keymap.set({"n", "i"}, "<C-g>we", "<cmd>GpWhisperEnew<cr>", keymapOptions("Whisper Enew"))
vim.keymap.set({"n", "i"}, "<C-g>wn", "<cmd>GpWhisperNew<cr>", keymapOptions("Whisper New"))
vim.keymap.set({"n", "i"}, "<C-g>wv", "<cmd>GpWhisperVnew<cr>", keymapOptions("Whisper Vnew"))
vim.keymap.set({"n", "i"}, "<C-g>wt", "<cmd>GpWhisperTabnew<cr>", keymapOptions("Whisper Tabnew"))

vim.keymap.set("v", "<C-g>wp", ":<C-u>'<,'>GpWhisperPopup<cr>", keymapOptions("Visual Whisper Popup"))
vim.keymap.set("v", "<C-g>we", ":<C-u>'<,'>GpWhisperEnew<cr>", keymapOptions("Visual Whisper Enew"))
vim.keymap.set("v", "<C-g>wn", ":<C-u>'<,'>GpWhisperNew<cr>", keymapOptions("Visual Whisper New"))
vim.keymap.set("v", "<C-g>wv", ":<C-u>'<,'>GpWhisperVnew<cr>", keymapOptions("Visual Whisper Vnew"))
vim.keymap.set("v", "<C-g>wt", ":<C-u>'<,'>GpWhisperTabnew<cr>", keymapOptions("Visual Whisper Tabnew"))

Or go more fancy by using the which-key.nvim plugin:

require("which-key").add({
    -- VISUAL mode mappings
    -- s, x, v modes are handled the same way by which_key
    {
        mode = { "v" },
        nowait = true,
        remap = false,
        { "<C-g><C-t>", ":<C-u>'<,'>GpChatNew tabnew<cr>", desc = "ChatNew tabnew" },
        { "<C-g><C-v>", ":<C-u>'<,'>GpChatNew vsplit<cr>", desc = "ChatNew vsplit" },
        { "<C-g><C-x>", ":<C-u>'<,'>GpChatNew split<cr>", desc = "ChatNew split" },
        { "<C-g>a", ":<C-u>'<,'>GpAppend<cr>", desc = "Visual Append (after)" },
        { "<C-g>b", ":<C-u>'<,'>GpPrepend<cr>", desc = "Visual Prepend (before)" },
        { "<C-g>c", ":<C-u>'<,'>GpChatNew<cr>", desc = "Visual Chat New" },
        { "<C-g>g", group = "generate into new .." },
        { "<C-g>ge", ":<C-u>'<,'>GpEnew<cr>", desc = "Visual GpEnew" },
        { "<C-g>gn", ":<C-u>'<,'>GpNew<cr>", desc = "Visual GpNew" },
        { "<C-g>gp", ":<C-u>'<,'>GpPopup<cr>", desc = "Visual Popup" },
        { "<C-g>gt", ":<C-u>'<,'>GpTabnew<cr>", desc = "Visual GpTabnew" },
        { "<C-g>gv", ":<C-u>'<,'>GpVnew<cr>", desc = "Visual GpVnew" },
        { "<C-g>i", ":<C-u>'<,'>GpImplement<cr>", desc = "Implement selection" },
        { "<C-g>n", "<cmd>GpNextAgent<cr>", desc = "Next Agent" },
        { "<C-g>p", ":<C-u>'<,'>GpChatPaste<cr>", desc = "Visual Chat Paste" },
        { "<C-g>r", ":<C-u>'<,'>GpRewrite<cr>", desc = "Visual Rewrite" },
        { "<C-g>s", "<cmd>GpStop<cr>", desc = "GpStop" },
        { "<C-g>t", ":<C-u>'<,'>GpChatToggle<cr>", desc = "Visual Toggle Chat" },
        { "<C-g>w", group = "Whisper" },
        { "<C-g>wa", ":<C-u>'<,'>GpWhisperAppend<cr>", desc = "Whisper Append" },
        { "<C-g>wb", ":<C-u>'<,'>GpWhisperPrepend<cr>", desc = "Whisper Prepend" },
        { "<C-g>we", ":<C-u>'<,'>GpWhisperEnew<cr>", desc = "Whisper Enew" },
        { "<C-g>wn", ":<C-u>'<,'>GpWhisperNew<cr>", desc = "Whisper New" },
        { "<C-g>wp", ":<C-u>'<,'>GpWhisperPopup<cr>", desc = "Whisper Popup" },
        { "<C-g>wr", ":<C-u>'<,'>GpWhisperRewrite<cr>", desc = "Whisper Rewrite" },
        { "<C-g>wt", ":<C-u>'<,'>GpWhisperTabnew<cr>", desc = "Whisper Tabnew" },
        { "<C-g>wv", ":<C-u>'<,'>GpWhisperVnew<cr>", desc = "Whisper Vnew" },
        { "<C-g>ww", ":<C-u>'<,'>GpWhisper<cr>", desc = "Whisper" },
        { "<C-g>x", ":<C-u>'<,'>GpContext<cr>", desc = "Visual GpContext" },
    },

    -- NORMAL mode mappings
    {
        mode = { "n" },
        nowait = true,
        remap = false,
        { "<C-g><C-t>", "<cmd>GpChatNew tabnew<cr>", desc = "New Chat tabnew" },
        { "<C-g><C-v>", "<cmd>GpChatNew vsplit<cr>", desc = "New Chat vsplit" },
        { "<C-g><C-x>", "<cmd>GpChatNew split<cr>", desc = "New Chat split" },
        { "<C-g>a", "<cmd>GpAppend<cr>", desc = "Append (after)" },
        { "<C-g>b", "<cmd>GpPrepend<cr>", desc = "Prepend (before)" },
        { "<C-g>c", "<cmd>GpChatNew<cr>", desc = "New Chat" },
        { "<C-g>f", "<cmd>GpChatFinder<cr>", desc = "Chat Finder" },
        { "<C-g>g", group = "generate into new .." },
        { "<C-g>ge", "<cmd>GpEnew<cr>", desc = "GpEnew" },
        { "<C-g>gn", "<cmd>GpNew<cr>", desc = "GpNew" },
        { "<C-g>gp", "<cmd>GpPopup<cr>", desc = "Popup" },
        { "<C-g>gt", "<cmd>GpTabnew<cr>", desc = "GpTabnew" },
        { "<C-g>gv", "<cmd>GpVnew<cr>", desc = "GpVnew" },
        { "<C-g>n", "<cmd>GpNextAgent<cr>", desc = "Next Agent" },
        { "<C-g>r", "<cmd>GpRewrite<cr>", desc = "Inline Rewrite" },
        { "<C-g>s", "<cmd>GpStop<cr>", desc = "GpStop" },
        { "<C-g>t", "<cmd>GpChatToggle<cr>", desc = "Toggle Chat" },
        { "<C-g>w", group = "Whisper" },
        { "<C-g>wa", "<cmd>GpWhisperAppend<cr>", desc = "Whisper Append (after)" },
        { "<C-g>wb", "<cmd>GpWhisperPrepend<cr>", desc = "Whisper Prepend (before)" },
        { "<C-g>we", "<cmd>GpWhisperEnew<cr>", desc = "Whisper Enew" },
        { "<C-g>wn", "<cmd>GpWhisperNew<cr>", desc = "Whisper New" },
        { "<C-g>wp", "<cmd>GpWhisperPopup<cr>", desc = "Whisper Popup" },
        { "<C-g>wr", "<cmd>GpWhisperRewrite<cr>", desc = "Whisper Inline Rewrite" },
        { "<C-g>wt", "<cmd>GpWhisperTabnew<cr>", desc = "Whisper Tabnew" },
        { "<C-g>wv", "<cmd>GpWhisperVnew<cr>", desc = "Whisper Vnew" },
        { "<C-g>ww", "<cmd>GpWhisper<cr>", desc = "Whisper" },
        { "<C-g>x", "<cmd>GpContext<cr>", desc = "Toggle GpContext" },
    },

    -- INSERT mode mappings
    {
        mode = { "i" },
        nowait = true,
        remap = false,
        { "<C-g><C-t>", "<cmd>GpChatNew tabnew<cr>", desc = "New Chat tabnew" },
        { "<C-g><C-v>", "<cmd>GpChatNew vsplit<cr>", desc = "New Chat vsplit" },
        { "<C-g><C-x>", "<cmd>GpChatNew split<cr>", desc = "New Chat split" },
        { "<C-g>a", "<cmd>GpAppend<cr>", desc = "Append (after)" },
        { "<C-g>b", "<cmd>GpPrepend<cr>", desc = "Prepend (before)" },
        { "<C-g>c", "<cmd>GpChatNew<cr>", desc = "New Chat" },
        { "<C-g>f", "<cmd>GpChatFinder<cr>", desc = "Chat Finder" },
        { "<C-g>g", group = "generate into new .." },
        { "<C-g>ge", "<cmd>GpEnew<cr>", desc = "GpEnew" },
        { "<C-g>gn", "<cmd>GpNew<cr>", desc = "GpNew" },
        { "<C-g>gp", "<cmd>GpPopup<cr>", desc = "Popup" },
        { "<C-g>gt", "<cmd>GpTabnew<cr>", desc = "GpTabnew" },
        { "<C-g>gv", "<cmd>GpVnew<cr>", desc = "GpVnew" },
        { "<C-g>n", "<cmd>GpNextAgent<cr>", desc = "Next Agent" },
        { "<C-g>r", "<cmd>GpRewrite<cr>", desc = "Inline Rewrite" },
        { "<C-g>s", "<cmd>GpStop<cr>", desc = "GpStop" },
        { "<C-g>t", "<cmd>GpChatToggle<cr>", desc = "Toggle Chat" },
        { "<C-g>w", group = "Whisper" },
        { "<C-g>wa", "<cmd>GpWhisperAppend<cr>", desc = "Whisper Append (after)" },
        { "<C-g>wb", "<cmd>GpWhisperPrepend<cr>", desc = "Whisper Prepend (before)" },
        { "<C-g>we", "<cmd>GpWhisperEnew<cr>", desc = "Whisper Enew" },
        { "<C-g>wn", "<cmd>GpWhisperNew<cr>", desc = "Whisper New" },
        { "<C-g>wp", "<cmd>GpWhisperPopup<cr>", desc = "Whisper Popup" },
        { "<C-g>wr", "<cmd>GpWhisperRewrite<cr>", desc = "Whisper Inline Rewrite" },
        { "<C-g>wt", "<cmd>GpWhisperTabnew<cr>", desc = "Whisper Tabnew" },
        { "<C-g>wv", "<cmd>GpWhisperVnew<cr>", desc = "Whisper Vnew" },
        { "<C-g>ww", "<cmd>GpWhisper<cr>", desc = "Whisper" },
        { "<C-g>x", "<cmd>GpContext<cr>", desc = "Toggle GpContext" },
    },
})

You can extend/override the plugin functionality with your own, by putting functions into config.hooks.

Hooks have access to everything (see the InspectPlugin example in defaults) and are automatically registered as commands (GpInspectPlugin).

Here are some more examples:

- :GpUnitTests

  -- example of adding command which writes unit tests for the selected code
  UnitTests = function(gp, params)
      local template = "I have the following code from {{filename}}:\n\n"
          .. "```{{filetype}}\n{{selection}}\n```\n\n"
          .. "Please respond by writing table driven unit tests for the code above."
      local agent = gp.get_command_agent()
      gp.Prompt(params, gp.Target.vnew, agent, template)
  end,

- :GpExplain

  -- example of adding command which explains the selected code
  Explain = function(gp, params)
      local template = "I have the following code from {{filename}}:\n\n"
          .. "```{{filetype}}\n{{selection}}\n```\n\n"
          .. "Please respond by explaining the code above."
      local agent = gp.get_chat_agent()
      gp.Prompt(params, gp.Target.popup, agent, template)
  end,

- :GpCodeReview

  -- example of using enew as a function specifying type for the new buffer
  CodeReview = function(gp, params)
      local template = "I have the following code from {{filename}}:\n\n"
          .. "```{{filetype}}\n{{selection}}\n```\n\n"
          .. "Please analyze for code smells and suggest improvements."
      local agent = gp.get_chat_agent()
      gp.Prompt(params, gp.Target.enew("markdown"), agent, template)
  end,

- :GpTranslator

  -- example of adding command which opens new chat dedicated for translation
  Translator = function(gp, params)
      local chat_system_prompt = "You are a Translator, please translate between English and Chinese."
      gp.cmd.ChatNew(params, chat_system_prompt)

      -- -- you can also create a chat with a specific fixed agent like this:
      -- local agent = gp.get_chat_agent("ChatGPT4o")
      -- gp.cmd.ChatNew(params, chat_system_prompt, agent)
  end,

- :GpBufferChatNew

  -- example of making :%GpChatNew a dedicated command which
  -- opens new chat with the entire current buffer as a context
  BufferChatNew = function(gp, _)
      -- call GpChatNew command in range mode on whole buffer
      vim.api.nvim_command("%" .. gp.config.cmd_prefix .. "ChatNew")
  end,
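The hook templates above use {{...}} placeholders such as {{filename}}, {{filetype}} and {{selection}}. A minimal sketch of filling them from a lookup table follows; render_template is a hypothetical helper rather than Gp's own renderer, and it leaves unknown placeholders untouched.

```lua
-- Hypothetical sketch: replace {{name}} placeholders with values from `vars`.
local function render_template(template, vars)
  return (template:gsub("{{([%w_]+)}}", function(name)
    local value = vars[name]
    -- returning nil from a gsub callback keeps the original {{name}} text
    if value == nil then
      return nil
    end
    -- a callback's return value is inserted literally (no %-escape handling)
    return tostring(value)
  end))
end
```

Because gsub uses the callback's return value literally, a selection containing % (common in format strings) is inserted as-is. The same approach would cover the {{model}} and {{secret}} placeholders in the googleai endpoint shown in the providers example.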

The raw plugin text editing method Prompt has the following signature:

---@param params table # vim command parameters such as range, args, etc.
---@param target integer | function | table # where to put the response
---@param agent table # obtained from get_command_agent or get_chat_agent
---@param template string # template with model instructions
---@param prompt string | nil # nil for non interactive commands
---@param whisper string | nil # predefined input (e.g. obtained from Whisper)
---@param callback function | nil # callback(response) after completing the prompt
Prompt(params, target, agent, template, prompt, whisper, callback)

- params is a table passed to Neovim user commands; Prompt currently uses:
  - range, line1, line2 to work with ranges
  - args so instructions can be passed directly after the command (:GpRewrite something something)

  params = {
      args = "",
      bang = false,
      count = -1,
      fargs = {},
      line1 = 1352,
      line2 = 1352,
      mods = "",
      name = "GpChatNew",
      range = 0,
      reg = "",
      smods = {
          browse = false, confirm = false, emsg_silent = false, hide = false,
          horizontal = false, keepalt = false, keepjumps = false, keepmarks = false,
          keeppatterns = false, lockmarks = false, noautocmd = false, noswapfile = false,
          sandbox = false, silent = false, split = "", tab = -1, unsilent = false,
          verbose = -1, vertical = false,
      },
  }

- target specifying where to direct the GPT response
  - enew/new/vnew/tabnew can be used as a function so you can pass in a filetype for the new buffer (enew/enew()/enew("markdown")/..)

  M.Target = {
      rewrite = 0, -- for replacing the selection, range or the current line
      append = 1, -- for appending after the selection, range or the current line
      prepend = 2, -- for prepending before the selection, range or the current line
      popup = 3, -- for writing into the popup window

      -- for writing into a new buffer
      ---@param filetype nil | string # nil = same as the original buffer
      ---@return table # a table with type=4 and filetype=filetype
      enew = function(filetype)
          return { type = 4, filetype = filetype }
      end,

      --- for creating a new horizontal split
      ---@param filetype nil | string # nil = same as the original buffer
      ---@return table # a table with type=5 and filetype=filetype
      new = function(filetype)
          return { type = 5, filetype = filetype }
      end,

      --- for creating a new vertical split
      ---@param filetype nil | string # nil = same as the original buffer
      ---@return table # a table with type=6 and filetype=filetype
      vnew = function(filetype)
          return { type = 6, filetype = filetype }
      end,

      --- for creating a new tab
      ---@param filetype nil | string # nil = same as the original buffer
      ---@return table # a table with type=7 and filetype=filetype
      tabnew = function(filetype)
          return { type = 7, filetype = filetype }
      end,
  }

- agent table obtainable via the get_command_agent and get_chat_agent methods, which have the following signature:

  ---@param name string | nil
  ---@return table # { cmd_prefix, name, model, system_prompt, provider }
  get_command_agent(name)

`template` - template of the user message sent to GPT. The string can include the variables below:

| name | Description |
| --- | --- |
| `{{filetype}}` | filetype of the current buffer |
| `{{selection}}` | last or currently selected text |
| `{{command}}` | instructions provided by the user |
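A minimal sketch of the substitution described by the variable table, assuming each placeholder is replaced literally and unknown placeholders are left untouched. `render` is a hypothetical function, not the plugin's own templating code.

```lua
-- Hypothetical template renderer (not gp.nvim's implementation).
-- Replaces {{name}} with vars[name]; unknown placeholders are kept as-is,
-- because string.gsub keeps the original match when the function returns nil.
-- Values returned from the function are inserted literally (no % escapes).
local function render(template, vars)
  local out = template:gsub("{{(%w+)}}", function(name)
    return vars[name]
  end)
  return out
end

local template = "Language: {{filetype}}\nCode:\n{{selection}}\n\nTask: {{command}}"
print(render(template, {
  filetype = "lua",
  selection = "local x = 1",
  command = "Explain this code.",
}))
```

Using a replacement function rather than a replacement string matters here: selected code often contains `%`, which would be misread as a capture reference in a plain gsub replacement string.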

`prompt` - string used similarly to a bash/zsh prompt in the terminal when the plugin asks for the user's command to GPT. If `nil`, the user is not asked to provide input (for specific predefined commands: document this, explain that, write tests, ..). A simple `🤖 ~` might be used, or you could use a different message to convey information about the method being called (`🤖 rewrite ~`, `🤖 popup ~`, `🤖 enew ~`, `🤖 inline ~`, etc.).

`whisper` - optional string serving as a default for the input prompt (for example, text generated from speech by Whisper).
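How `prompt` and `whisper` interact can be sketched as a small decision function. This is an illustration under assumptions: `resolve_instruction` is hypothetical, and `ask` is a synchronous stand-in for an input UI. Inside Neovim that role would be played by something like the asynchronous `vim.ui.input({ prompt = ..., default = ... }, on_confirm)`.

```lua
-- Hypothetical sketch (not gp.nvim's code): decide whether and how to ask
-- the user for an instruction, given the prompt and whisper arguments.
local function resolve_instruction(prompt, whisper, ask)
  if prompt == nil then
    return nil -- predefined command (document this, explain that, ..): no input
  end
  return ask(prompt, whisper or "") -- whisper text pre-fills the input field
end

-- Stand-in input UI that accepts the pre-filled default, as if Enter was pressed.
local function accept_default(_, default)
  return default
end

print(resolve_instruction(nil, nil, accept_default))
print(resolve_instruction("🤖 rewrite ~", "add docstrings", accept_default))
```

Injecting `ask` keeps the decision logic testable outside the editor; a real hook would pass the result on through a callback, since `vim.ui.input` does not return the text directly.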

`callback` - optional callback function allowing post-processing logic on the prompt response (for example, letting the model generate a commit message and using the callback to make the actual commit).
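For the commit-message example, a callback's post-processing step might look like the sketch below. It assumes the callback receives the full response text as one string, and `commit_argv` is a hypothetical name. It only builds the `git commit` argument list, which a real hook could hand to `vim.system` (Neovim 0.10+) or `vim.fn.system`.

```lua
-- Hypothetical post-processing step (assumes the response arrives as a string).
local function commit_argv(response)
  -- Trim whitespace the model may have put around the message.
  local msg = response:match("^%s*(.-)%s*$")
  if msg == "" then
    return nil -- empty response: nothing to commit
  end
  return { "git", "commit", "-m", msg }
end

local argv = commit_argv("\n  Add streaming support to chat buffers \n")
print(table.concat(argv, " "))
```

Returning an argument list instead of a shell string avoids quoting problems when the generated message contains quotes or newlines.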


Alternative AI tools for gp.nvim

Similar Open Source Tools


ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E...

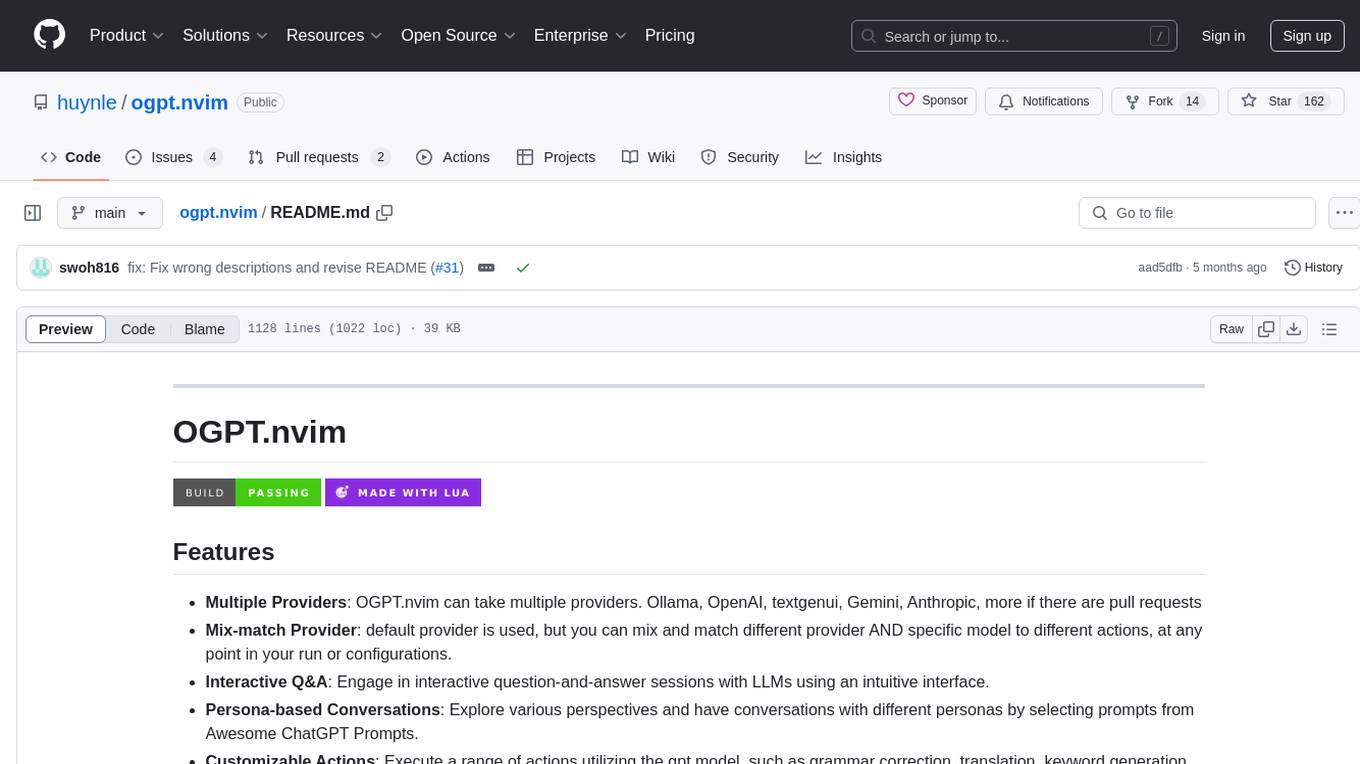

ogpt.nvim

OGPT.nvim is a Neovim plugin that enables users to interact with various language models (LLMs) such as Ollama, OpenAI, TextGenUI, and more. Users can engage in interactive question-and-answer sessions, have persona-based conversations, and execute customizable actions like grammar correction, translation, keyword generation, docstring creation, test addition, code optimization, summarization, bug fixing, code explanation, and code readability analysis. The plugin allows users to define custom actions using a JSON file or plugin configurations.

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

crush

Crush is a versatile tool designed to enhance coding workflows in your terminal. It offers support for multiple LLMs, allows for flexible switching between models, and enables session-based work management. Crush is extensible through MCPs and works across various operating systems. It can be installed using package managers like Homebrew and NPM, or downloaded directly. Crush supports various APIs like Anthropic, OpenAI, Groq, and Google Gemini, and allows for customization through environment variables. The tool can be configured locally or globally, and supports LSPs for additional context. Crush also provides options for ignoring files, allowing tools, and configuring local models. It respects `.gitignore` files and offers logging capabilities for troubleshooting and debugging.

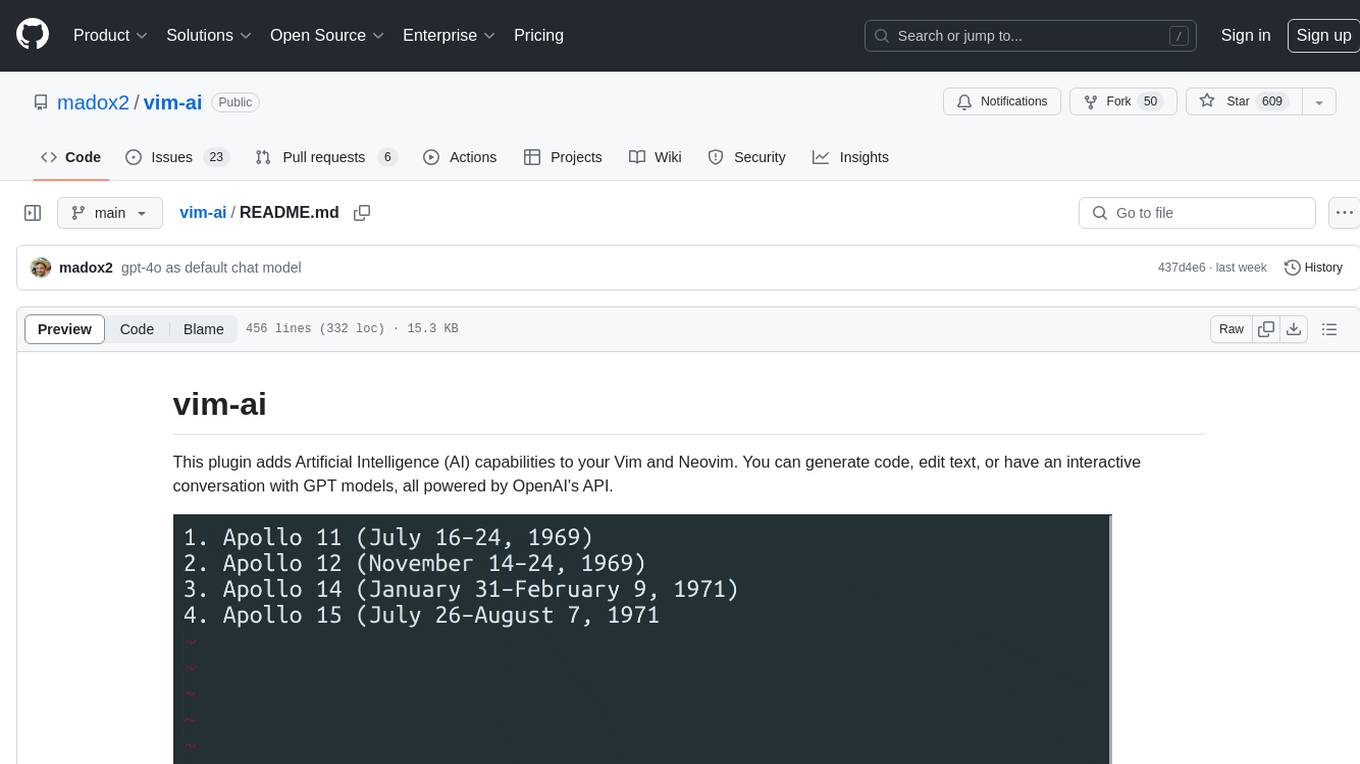

vim-ai

vim-ai is a plugin that adds Artificial Intelligence (AI) capabilities to Vim and Neovim. It allows users to generate code, edit text, and have interactive conversations with GPT models through OpenAI's API, which requires an OpenAI account and an API key. It supports various commands for text generation, editing, and chat interactions, providing a seamless integration of AI features into the Vim text editor environment.

ollama-ex

ollama-ex is an Elixir client for Ollama, a powerful tool for running large language models locally or on your own infrastructure. It provides a full implementation of the Ollama API, support for streaming requests, and tool use capability. Users can interact with Ollama from Elixir to generate completions and chat messages and to perform streaming requests. It also supports function calling on compatible models, allowing users to define tools with clear descriptions and arguments. ollama-ex is designed to facilitate natural language processing tasks and enhance user interactions with language models.

jambo

Jambo is a Python package that automatically converts JSON Schema definitions into Pydantic models. It streamlines schema validation and enforces type safety using Pydantic's validation features. The tool supports various JSON Schema features like strings, integers, floats, booleans, arrays, nested objects, and more. It enforces constraints such as minLength, maxLength, pattern, minimum, maximum, uniqueItems, and provides a zero-config approach for generating models. Jambo is designed to simplify the process of dynamically generating Pydantic models for AI frameworks.

llm.nvim

llm.nvim is a Neovim plugin designed for LLM-assisted programming. It provides a no-frills approach to integrating language model assistance into the coding workflow. Users can configure the plugin to interact with various AI services such as Groq, OpenAI, and Anthropic. The plugin offers functions to trigger the LLM assistant, create new prompt files, and customize key bindings for seamless interaction. With a focus on simplicity and efficiency, llm.nvim aims to enhance the coding experience by leveraging AI capabilities within the Neovim environment.

ling

Ling is a workflow framework supporting streaming of structured content from large language models. It enables quick responses to content streams, reducing waiting times. Ling parses JSON data streams character by character in real-time, outputting content in jsonuri format. It facilitates immediate front-end processing by converting content during streaming input. The framework supports data stream output via JSONL protocol, correction of token errors in JSON output, complex asynchronous workflows, status messages during streaming output, and Server-Sent Events.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

jupyter-mcp-server

Jupyter MCP Server is a Model Context Protocol (MCP) server implementation that enables real-time interaction with Jupyter Notebooks. It allows AI to edit, document, and execute code for data analysis and visualization. The server offers features like real-time control, smart execution, and MCP compatibility. Users can use tools such as insert_execute_code_cell, append_markdown_cell, get_notebook_info, and read_cell for advanced interactions with Jupyter notebooks.

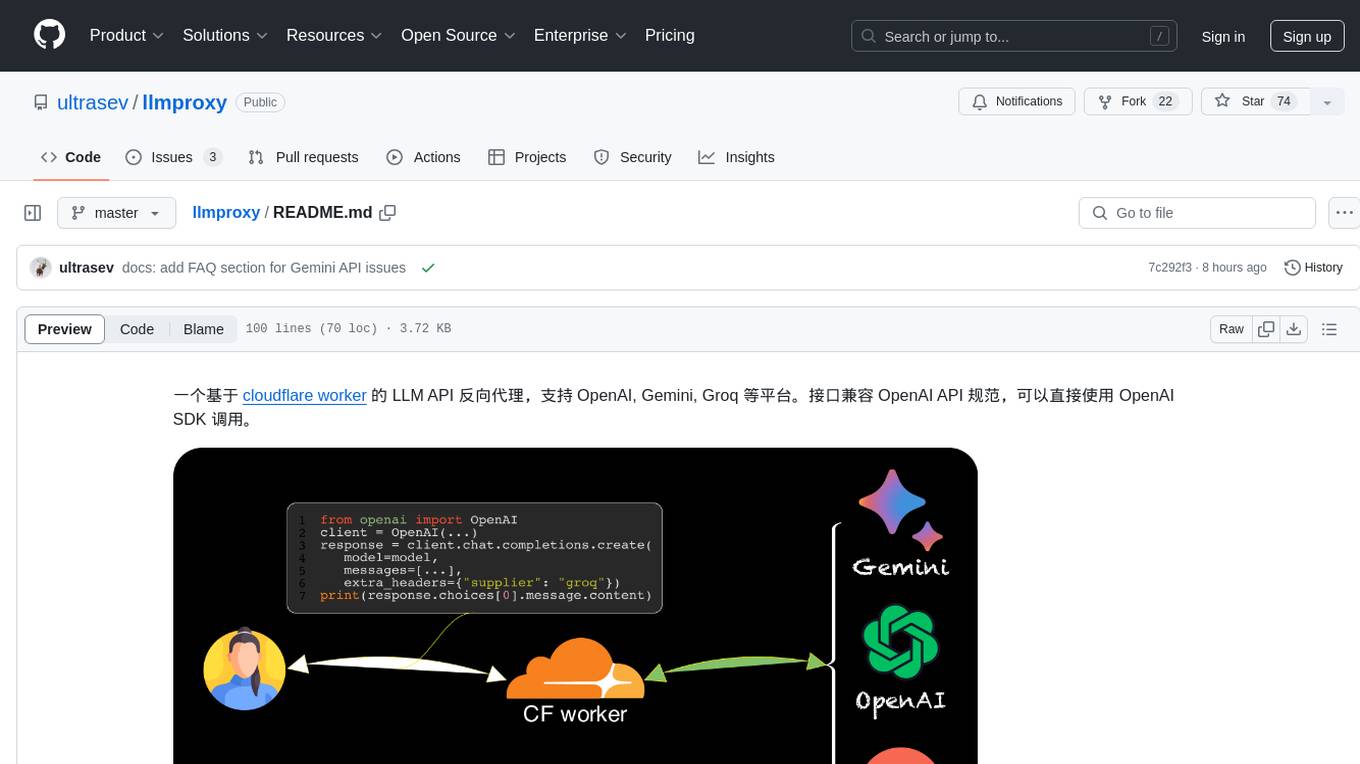

llmproxy

llmproxy is a reverse proxy for LLM API based on Cloudflare Worker, supporting platforms like OpenAI, Gemini, and Groq. The interface is compatible with the OpenAI API specification and can be directly accessed using the OpenAI SDK. It provides a convenient way to interact with various AI platforms through a unified API endpoint, enabling seamless integration and usage in different applications.

lmstudio.js

lmstudio.js is a pre-release alpha client SDK for LM Studio, allowing users to use local LLMs in JS/TS/Node. It is currently undergoing rapid development with breaking changes expected. Users can follow LM Studio's announcements on Twitter and Discord. The SDK provides API usage for loading models, predicting text, setting up the local LLM server, and more. It supports features like custom loading progress tracking, model unloading, structured output prediction, and cancellation of predictions. Users can interact with LM Studio through the CLI tool 'lms' and perform tasks like text completion, conversation, and getting prediction statistics.

mcp

Semgrep MCP Server is a beta server under active development for using Semgrep to scan code for security vulnerabilities. It provides a Model Context Protocol (MCP) for various coding tools to get specialized help in tasks. Users can connect to Semgrep AppSec Platform, scan code for vulnerabilities, customize Semgrep rules, analyze and filter scan results, and compare results. The tool is published on PyPI as semgrep-mcp and can be installed using pip, pipx, uv, poetry, or other methods. It supports CLI and Docker environments for running the server. Integration with VS Code is also available for quick installation. The project welcomes contributions and is inspired by core technologies like Semgrep and MCP, as well as related community projects and tools.

For similar tasks

generative-ai

This repository contains notebooks, code samples, sample apps, and other resources that demonstrate how to use, develop and manage generative AI workflows using Generative AI on Google Cloud, powered by Vertex AI. For more Vertex AI samples, please visit the Vertex AI samples Github repository.

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

generative-ai-for-beginners

This course has 18 lessons. Each lesson covers its own topic, so start wherever you like! Lessons are labeled either "Learn" lessons explaining a Generative AI concept or "Build" lessons that explain a concept and code examples in both **Python** and **TypeScript** when possible. Each lesson also includes a "Keep Learning" section with additional learning tools.

**What You Need**

* Access to the Azure OpenAI Service **OR** OpenAI API - _Only required to complete coding lessons_
* Basic knowledge of Python or TypeScript is helpful - for absolute beginners, check out these Python and TypeScript courses.
* A GitHub account to fork this entire repo to your own GitHub account

We have created a **Course Setup** lesson to help you with setting up your development environment. Don't forget to star (🌟) this repo to find it easier later.

🧠 Ready to Deploy? If you are looking for more advanced code samples, check out our collection of Generative AI Code Samples in both **Python** and **TypeScript**.

🗣️ Meet Other Learners, Get Support: Join our official AI Discord server to meet and network with other learners taking this course and get support.

🚀 Building a Startup? Sign up for Microsoft for Startups Founders Hub to receive **free OpenAI credits** and up to **$150k towards Azure credits to access OpenAI models through Azure OpenAI Services**.

🙏 Want to help? Do you have suggestions or found spelling or code errors? Raise an issue or create a pull request.

📂 Each lesson includes:

* A short video introduction to the topic
* A written lesson located in the README
* Python and TypeScript code samples supporting Azure OpenAI and OpenAI API
* Links to extra resources to continue your learning

🗃️ Lessons

| | Lesson | Description |
| :-: | :-- | :-- |
| 00 | Course Setup | **Learn:** How to Set Up Your Development Environment |
| 01 | Introduction to Generative AI and LLMs | **Learn:** Understanding what Generative AI is and how Large Language Models (LLMs) work. |
| 02 | Exploring and comparing different LLMs | **Learn:** How to select the right model for your use case |
| 03 | Using Generative AI Responsibly | **Learn:** How to build Generative AI Applications responsibly |
| 04 | Understanding Prompt Engineering Fundamentals | **Learn:** Hands-on Prompt Engineering Best Practices |
| 05 | Creating Advanced Prompts | **Learn:** How to apply prompt engineering techniques that improve the outcome of your prompts. |
| 06 | Building Text Generation Applications | **Build:** A text generation app using Azure OpenAI |
| 07 | Building Chat Applications | **Build:** Techniques for efficiently building and integrating chat applications. |
| 08 | Building Search Apps Vector Databases | **Build:** A search application that uses Embeddings to search for data. |
| 09 | Building Image Generation Applications | **Build:** An image generation application |
| 10 | Building Low Code AI Applications | **Build:** A Generative AI application using Low Code tools |
| 11 | Integrating External Applications with Function Calling | **Build:** What is function calling and its use cases for applications |
| 12 | Designing UX for AI Applications | **Learn:** How to apply UX design principles when developing Generative AI Applications |
| 13 | Securing Your Generative AI Applications | **Learn:** The threats and risks to AI systems and methods to secure these systems. |
| 14 | The Generative AI Application Lifecycle | **Learn:** The tools and metrics to manage the LLM Lifecycle and LLMOps |
| 15 | Retrieval Augmented Generation (RAG) and Vector Databases | **Build:** An application using a RAG Framework to retrieve embeddings from a Vector Database |
| 16 | Open Source Models and Hugging Face | **Build:** An application using open source models available on Hugging Face |
| 17 | AI Agents | **Build:** An application using an AI Agent Framework |
| 18 | Fine-Tuning LLMs | **Learn:** The what, why and how of fine-tuning LLMs |

cog-comfyui

Cog-comfyui allows users to run ComfyUI workflows on Replicate. ComfyUI is a visual programming tool for creating and sharing generative art workflows. With cog-comfyui, users can access a variety of pre-trained models and custom nodes to create their own unique artworks. The tool is easy to use and does not require any coding experience. Users simply need to upload their API JSON file and any necessary input files, and then click the "Run" button. Cog-comfyui will then generate the output image or video file.

ai-notes

Notes on AI state of the art, with a focus on generative and large language models. These are the "raw materials" for the https://lspace.swyx.io/ newsletter. This repo used to be called https://github.com/sw-yx/prompt-eng, but was renamed because Prompt Engineering is Overhyped. This is now an AI Engineering notes repo.

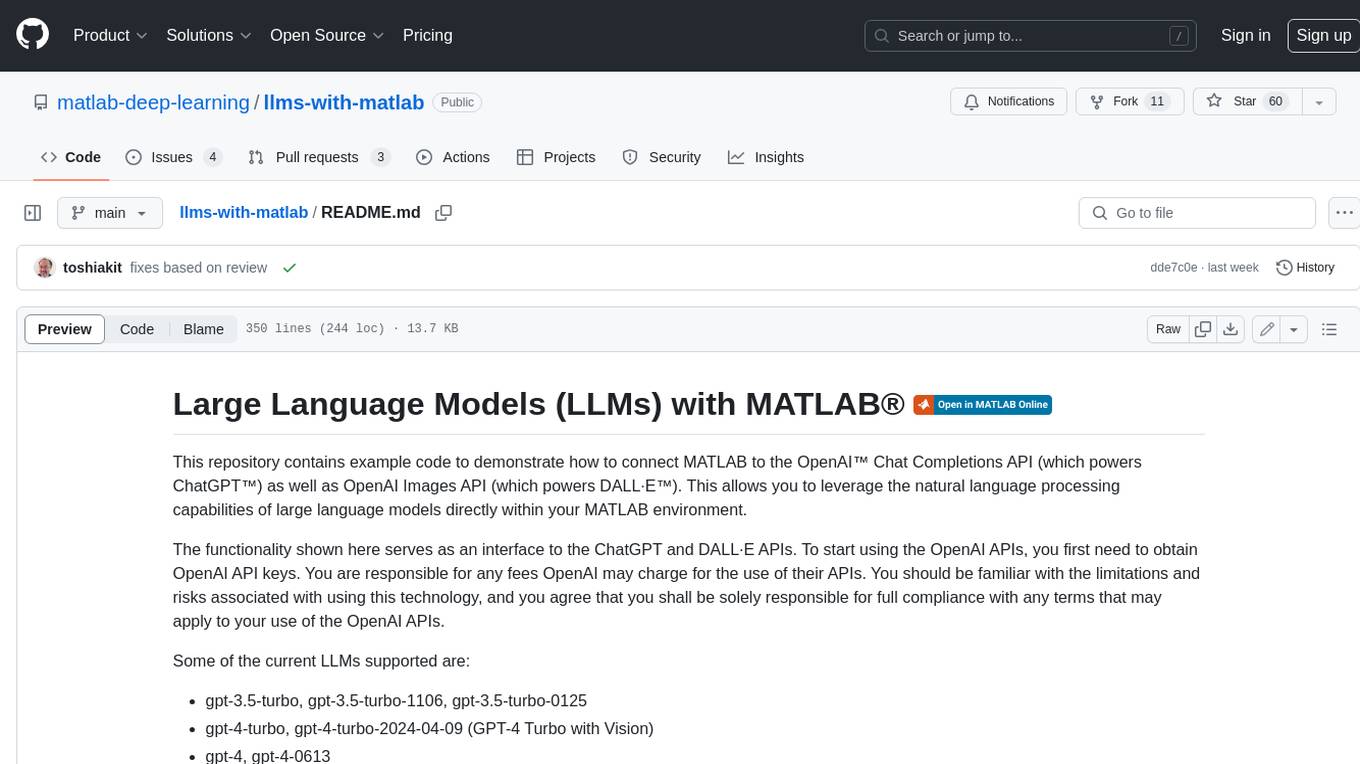

llms-with-matlab

This repository contains example code to demonstrate how to connect MATLAB to the OpenAI™ Chat Completions API (which powers ChatGPT™) as well as OpenAI Images API (which powers DALL·E™). This allows you to leverage the natural language processing capabilities of large language models directly within your MATLAB environment.

xef

xef.ai is a one-stop library designed to bring the power of modern AI to applications and services. It offers integration with Large Language Models (LLM), image generation, and other AI services. The library is packaged in two layers: core libraries for basic AI services integration and integrations with other libraries. xef.ai aims to simplify the transition to modern AI for developers by providing an idiomatic interface, currently supporting Kotlin. Inspired by LangChain and Hugging Face, xef.ai may transmit source code and user input data to third-party services, so users should review privacy policies and take precautions. Libraries are available in Maven Central under the `com.xebia` group, with `xef-core` as the core library. Developers can add these libraries to their projects and explore examples to understand usage.

CushyStudio

CushyStudio is a generative AI platform designed for creatives of any level to effortlessly create stunning images, videos, and 3D models. It offers CushyApps, a collection of visual tools tailored for different artistic tasks, and CushyKit, an extensive toolkit for custom apps development and task automation. Users can dive into the AI revolution, unleash their creativity, share projects, and connect with a vibrant community. The platform aims to simplify the AI art creation process and provide a user-friendly environment for designing interfaces, adding custom logic, and accessing various tools.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.