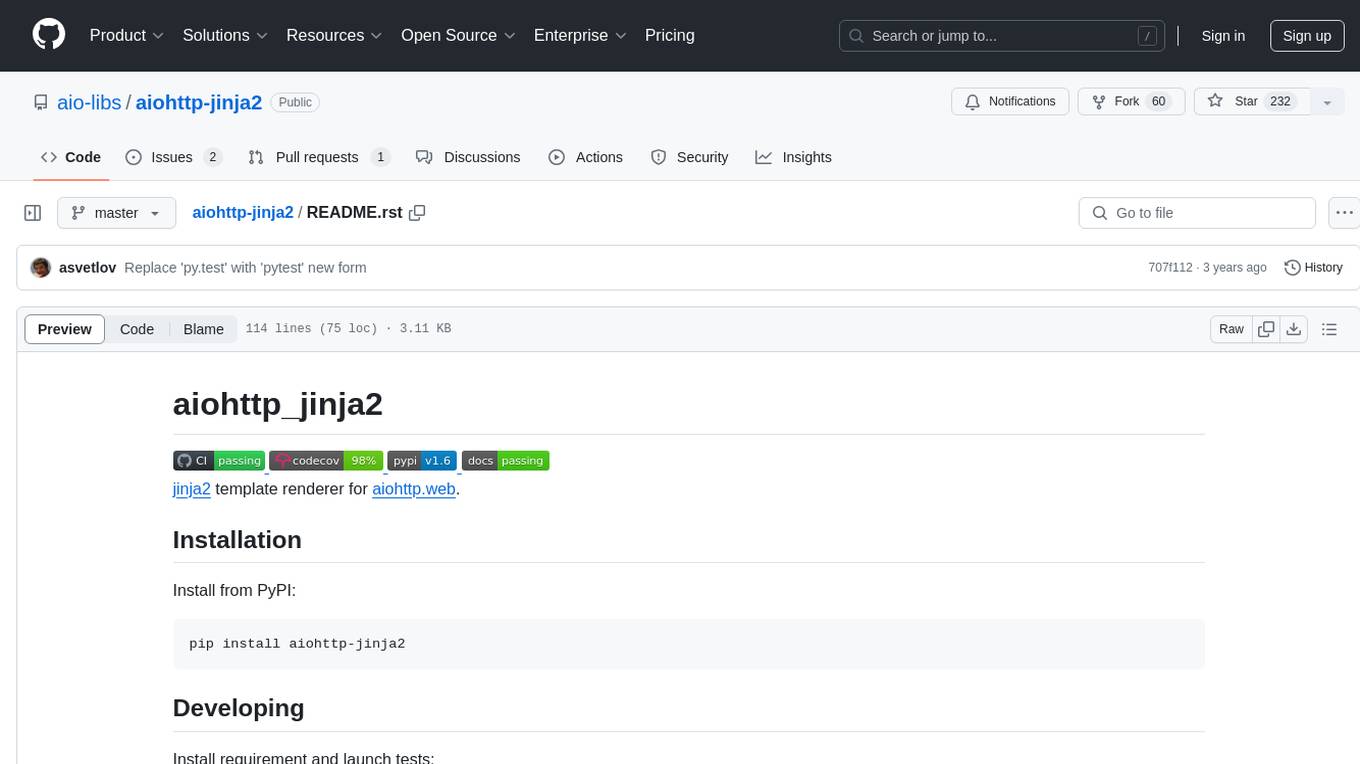

aiohttp-jinja2

jinja2 template renderer for aiohttp.web

Stars: 236

aiohttp_jinja2 is a Jinja2 template renderer for aiohttp.web, allowing users to render templates in web applications built with aiohttp. It provides a convenient way to set up Jinja2 environment, use template engine in web handlers, and perform complex processing like setting response headers. The tool simplifies the process of rendering HTML text based on templates and passing context data to templates for dynamic content generation.

README:

.. image:: https://github.com/aio-libs/aiohttp-jinja2/workflows/CI/badge.svg :target: https://github.com/aio-libs/aiohttp-jinja2/actions?query=workflow%3ACI .. image:: https://codecov.io/gh/aio-libs/aiohttp-jinja2/branch/master/graph/badge.svg :target: https://codecov.io/gh/aio-libs/aiohttp-jinja2 .. image:: https://img.shields.io/pypi/v/aiohttp-jinja2.svg :target: https://pypi.python.org/pypi/aiohttp-jinja2 .. image:: https://readthedocs.org/projects/aiohttp-jinja/badge/?version=latest :target: http://aiohttp-jinja2.aio-libs.org/en/latest/?badge=latest

jinja2_ template renderer for aiohttp.web__.

.. _jinja2: http://jinja.pocoo.org

.. _aiohttp_web: https://aiohttp.readthedocs.io/en/latest/web.html

__ aiohttp_web_

Install from PyPI::

pip install aiohttp-jinja2

Install requirement and launch tests::

pip install -r requirements-dev.txt

pytest tests

Before template rendering you have to setup jinja2 environment first:

.. code-block:: python

app = web.Application()

aiohttp_jinja2.setup(app,

loader=jinja2.FileSystemLoader('/path/to/templates/folder'))

Import:

.. code-block:: python

import aiohttp_jinja2

import jinja2

After that you may to use template engine in your web-handlers. The most convenient way is to decorate a web-handler.

Using the function based web handlers:

.. code-block:: python

@aiohttp_jinja2.template('tmpl.jinja2')

def handler(request):

return {'name': 'Andrew', 'surname': 'Svetlov'}

Or for Class Based Views <https://aiohttp.readthedocs.io/en/stable/web_quickstart.html#class-based-views>:

.. code-block:: python

class Handler(web.View):

@aiohttp_jinja2.template('tmpl.jinja2')

async def get(self):

return {'name': 'Andrew', 'surname': 'Svetlov'}

On handler call the aiohttp_jinja2.template decorator will pass

returned dictionary {'name': 'Andrew', 'surname': 'Svetlov'} into

template named tmpl.jinja2 for getting resulting HTML text.

If you need more complex processing (set response headers for example)

you may call render_template function.

Using a function based web handler:

.. code-block:: python

async def handler(request):

context = {'name': 'Andrew', 'surname': 'Svetlov'}

response = aiohttp_jinja2.render_template('tmpl.jinja2',

request,

context)

response.headers['Content-Language'] = 'ru'

return response

Or, again, a class based view:

.. code-block:: python

class Handler(web.View):

async def get(self):

context = {'name': 'Andrew', 'surname': 'Svetlov'}

response = aiohttp_jinja2.render_template('tmpl.jinja2',

self.request,

context)

response.headers['Content-Language'] = 'ru'

return response

aiohttp_jinja2 is offered under the Apache 2 license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiohttp-jinja2

Similar Open Source Tools

aiohttp-jinja2

aiohttp_jinja2 is a Jinja2 template renderer for aiohttp.web, allowing users to render templates in web applications built with aiohttp. It provides a convenient way to set up Jinja2 environment, use template engine in web handlers, and perform complex processing like setting response headers. The tool simplifies the process of rendering HTML text based on templates and passing context data to templates for dynamic content generation.

aiohttp-debugtoolbar

aiohttp_debugtoolbar provides a debug toolbar for aiohttp web applications. It is a port of pyramid_debugtoolbar and offers basic functionality such as basic panels, intercepting redirects, pretty printing exceptions, an interactive python console, and showing source code. The library is still in early development stages and offers various debug panels for monitoring different aspects of the web application. It is a useful tool for developers working with aiohttp to debug and optimize their applications.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

python-genai

The Google Gen AI SDK is a Python library that provides access to Google AI and Vertex AI services. It allows users to create clients for different services, work with parameter types, models, generate content, call functions, handle JSON response schemas, stream text and image content, perform async operations, count and compute tokens, embed content, generate and upscale images, edit images, work with files, create and get cached content, tune models, distill models, perform batch predictions, and more. The SDK supports various features like automatic function support, manual function declaration, JSON response schema support, streaming for text and image content, async methods, tuning job APIs, distillation, batch prediction, and more.

aiocache

Aiocache is an asyncio cache library that supports multiple backends such as memory, redis, and memcached. It provides a simple interface for functions like add, get, set, multi_get, multi_set, exists, increment, delete, clear, and raw. Users can easily install and use the library for caching data in Python applications. Aiocache allows for easy instantiation of caches and setup of cache aliases for reusing configurations. It also provides support for backends, serializers, and plugins to customize cache operations. The library offers detailed documentation and examples for different use cases and configurations.

ai-nodejs

This repository serves as a companion to the Build AI-Powered Apps with OpenAI and Node.js course on Frontend Masters. It includes course notes and provides alternative approaches for deprecated Langchain methods by installing the Langchain community module and importing loaders for document processing from PDFs and YouTube videos.

partialjson

PartialJson is a Python library that allows users to parse partial and incomplete JSON data with ease. With just 3 lines of Python code, users can parse JSON data that may be missing key elements or contain errors. The library provides a simple solution for handling JSON data that may not be well-formed or complete, making it a valuable tool for data processing and manipulation tasks.

xsai

xsAI is an extra-small AI SDK designed for Browser, Node.js, Deno, Bun, or Edge Runtime. It provides a series of utils to help users utilize OpenAI or OpenAI-compatible APIs. The SDK is lightweight and efficient, using a variety of methods to minimize its size. It is runtime-agnostic, working seamlessly across different environments without depending on Node.js Built-in Modules. Users can easily install specific utils like generateText or streamText, and leverage tools like weather to perform tasks such as getting the weather in a location.

ax

Ax is a Typescript library that allows users to build intelligent agents inspired by agentic workflows and the Stanford DSP paper. It seamlessly integrates with multiple Large Language Models (LLMs) and VectorDBs to create RAG pipelines or collaborative agents capable of solving complex problems. The library offers advanced features such as streaming validation, multi-modal DSP, and automatic prompt tuning using optimizers. Users can easily convert documents of any format to text, perform smart chunking, embedding, and querying, and ensure output validation while streaming. Ax is production-ready, written in Typescript, and has zero dependencies.

ai-cms-grapesjs

The Aimeos GrapesJS CMS extension provides a simple to use but powerful page editor for creating content pages based on extensible components. It integrates seamlessly with Laravel applications and allows users to easily manage and display CMS content. The tool also supports Google reCAPTCHA v3 for enhanced security. Users can create and customize pages with various components and manage multi-language setups effortlessly. The extension simplifies the process of creating and managing content pages, making it ideal for developers and businesses looking to enhance their website's content management capabilities.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

aiobotocore

aiobotocore is an async client for Amazon services using botocore and aiohttp/asyncio. It provides a mostly full-featured asynchronous version of botocore, allowing users to interact with various AWS services asynchronously. The library supports operations such as uploading objects to S3, getting object properties, listing objects, and deleting objects. It also offers context manager examples for managing resources efficiently. aiobotocore supports multiple AWS services like S3, DynamoDB, SNS, SQS, CloudFormation, and Kinesis, with basic methods tested for each service. Users can run tests using moto for mocked tests or against personal Amazon keys. Additionally, the tool enables type checking and code completion for better development experience.

flo-ai

Flo AI is a Python framework that enables users to build production-ready AI agents and teams with minimal code. It allows users to compose complex AI architectures using pre-built components while maintaining the flexibility to create custom components. The framework supports composable, production-ready, YAML-first, and flexible AI systems. Users can easily create AI agents and teams, manage teams of AI agents working together, and utilize built-in support for Retrieval-Augmented Generation (RAG) and compatibility with Langchain tools. Flo AI also provides tools for output parsing and formatting, tool logging, data collection, and JSON output collection. It is MIT Licensed and offers detailed documentation, tutorials, and examples for AI engineers and teams to accelerate development, maintainability, scalability, and testability of AI systems.

client-js

The Mistral JavaScript client is a library that allows you to interact with the Mistral AI API. With this client, you can perform various tasks such as listing models, chatting with streaming, chatting without streaming, and generating embeddings. To use the client, you can install it in your project using npm and then set up the client with your API key. Once the client is set up, you can use it to perform the desired tasks. For example, you can use the client to chat with a model by providing a list of messages. The client will then return the response from the model. You can also use the client to generate embeddings for a given input. The embeddings can then be used for various downstream tasks such as clustering or classification.

For similar tasks

aiohttp-jinja2

aiohttp_jinja2 is a Jinja2 template renderer for aiohttp.web, allowing users to render templates in web applications built with aiohttp. It provides a convenient way to set up Jinja2 environment, use template engine in web handlers, and perform complex processing like setting response headers. The tool simplifies the process of rendering HTML text based on templates and passing context data to templates for dynamic content generation.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently** , resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript** , **directly defining and utilizing the cloud resources necessary for the application within their code base** , such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.