ai-nodejs

None

Stars: 74

This repository serves as a companion to the Build AI-Powered Apps with OpenAI and Node.js course on Frontend Masters. It includes course notes and provides alternative approaches for deprecated Langchain methods by installing the Langchain community module and importing loaders for document processing from PDFs and YouTube videos.

README:

This repo is a companion to the Build AI-Powered Apps with OpenAI and Node.js course on Frontend Masters.

Document QA Query Function Lesson

A few of the Langchain methods used in this course have been deprecated. Here's an alternative approach:

Install the Langchain community module

npm i @langchain/communityImport the loaders

import { PDFLoader } from '@langchain/community/document_loaders/fs/pdf'

import { YoutubeLoader } from '@langchain/community/document_loaders/web/youtube'Create the loaders using the community methods:

In docsFromYTVideo:

const loader = YoutubeLoader.createFromUrl(video, { language: 'en', addVideoInfo: true, })

return loader.load( new CharacterTextSplitter({ separator: ' ', chunkSize: 2500, chunkOverlap: 200, }) )In docsFromPDF:

const docsFromPDF = async () => { const loader = new PDFLoader('./xbox.pdf')

return loader.load( new CharacterTextSplitter({ separator: ' ', chunkSize: 2500, chunkOverlap: 200, }) ) }For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-nodejs

Similar Open Source Tools

ai-nodejs

This repository serves as a companion to the Build AI-Powered Apps with OpenAI and Node.js course on Frontend Masters. It includes course notes and provides alternative approaches for deprecated Langchain methods by installing the Langchain community module and importing loaders for document processing from PDFs and YouTube videos.

dexter

Dexter is a set of mature LLM tools used in production at Dexa, with a focus on real-world RAG (Retrieval Augmented Generation). It is a production-quality RAG that is extremely fast and minimal, and handles caching, throttling, and batching for ingesting large datasets. It also supports optional hybrid search with SPLADE embeddings, and is a minimal TS package with full typing that uses `fetch` everywhere and supports Node.js 18+, Deno, Cloudflare Workers, Vercel edge functions, etc. Dexter has full docs and includes examples for basic usage, caching, Redis caching, AI function, AI runner, and chatbot.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

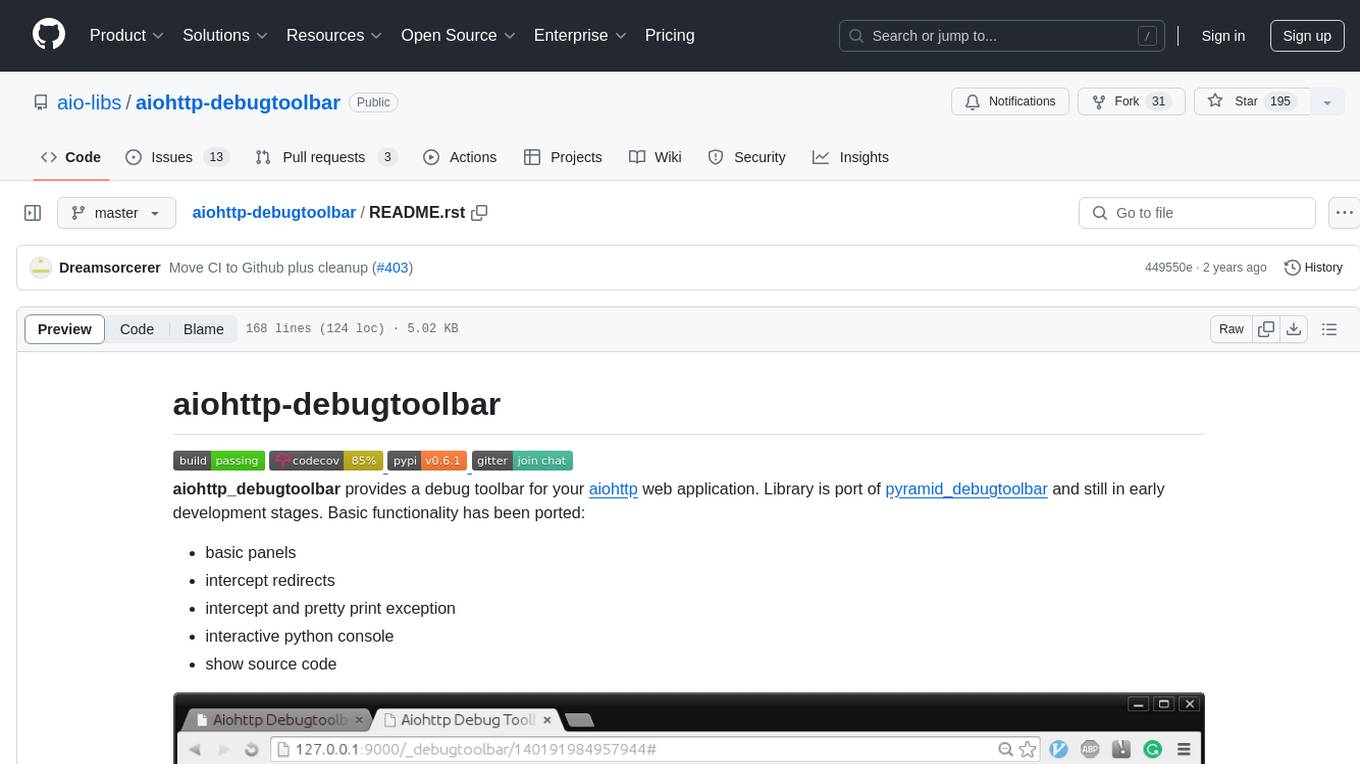

aiohttp-debugtoolbar

aiohttp_debugtoolbar provides a debug toolbar for aiohttp web applications. It is a port of pyramid_debugtoolbar and offers basic functionality such as basic panels, intercepting redirects, pretty printing exceptions, an interactive python console, and showing source code. The library is still in early development stages and offers various debug panels for monitoring different aspects of the web application. It is a useful tool for developers working with aiohttp to debug and optimize their applications.

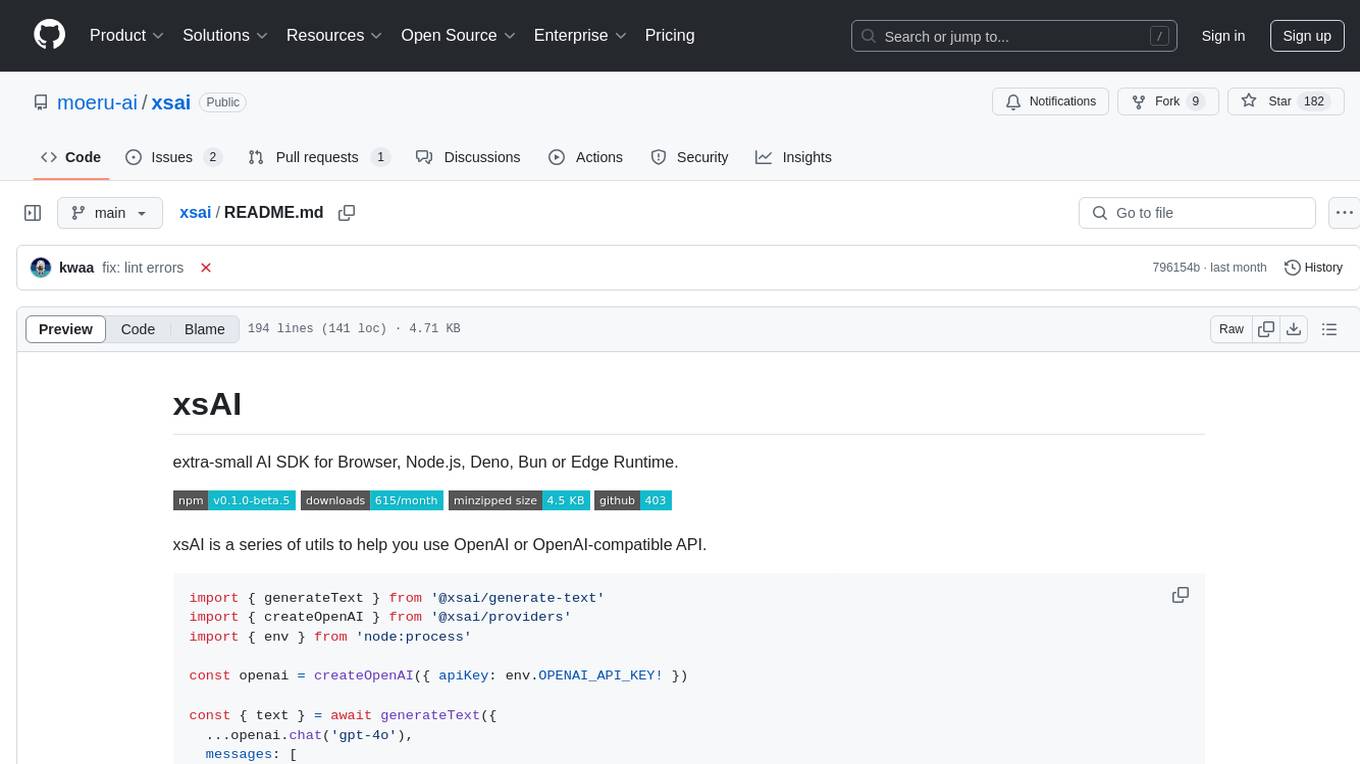

xsai

xsAI is an extra-small AI SDK designed for Browser, Node.js, Deno, Bun, or Edge Runtime. It provides a series of utils to help users utilize OpenAI or OpenAI-compatible APIs. The SDK is lightweight and efficient, using a variety of methods to minimize its size. It is runtime-agnostic, working seamlessly across different environments without depending on Node.js Built-in Modules. Users can easily install specific utils like generateText or streamText, and leverage tools like weather to perform tasks such as getting the weather in a location.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

volga

Volga is a general purpose real-time data processing engine in Python for modern AI/ML systems. It aims to be a Python-native alternative to Flink/Spark Streaming with extended functionality for real-time AI/ML workloads. It provides a hybrid push+pull architecture, Entity API for defining data entities and feature pipelines, DataStream API for general data processing, and customizable data connectors. Volga can run on a laptop or a distributed cluster, making it suitable for building custom real-time AI/ML feature platforms or general data pipelines without relying on third-party platforms.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

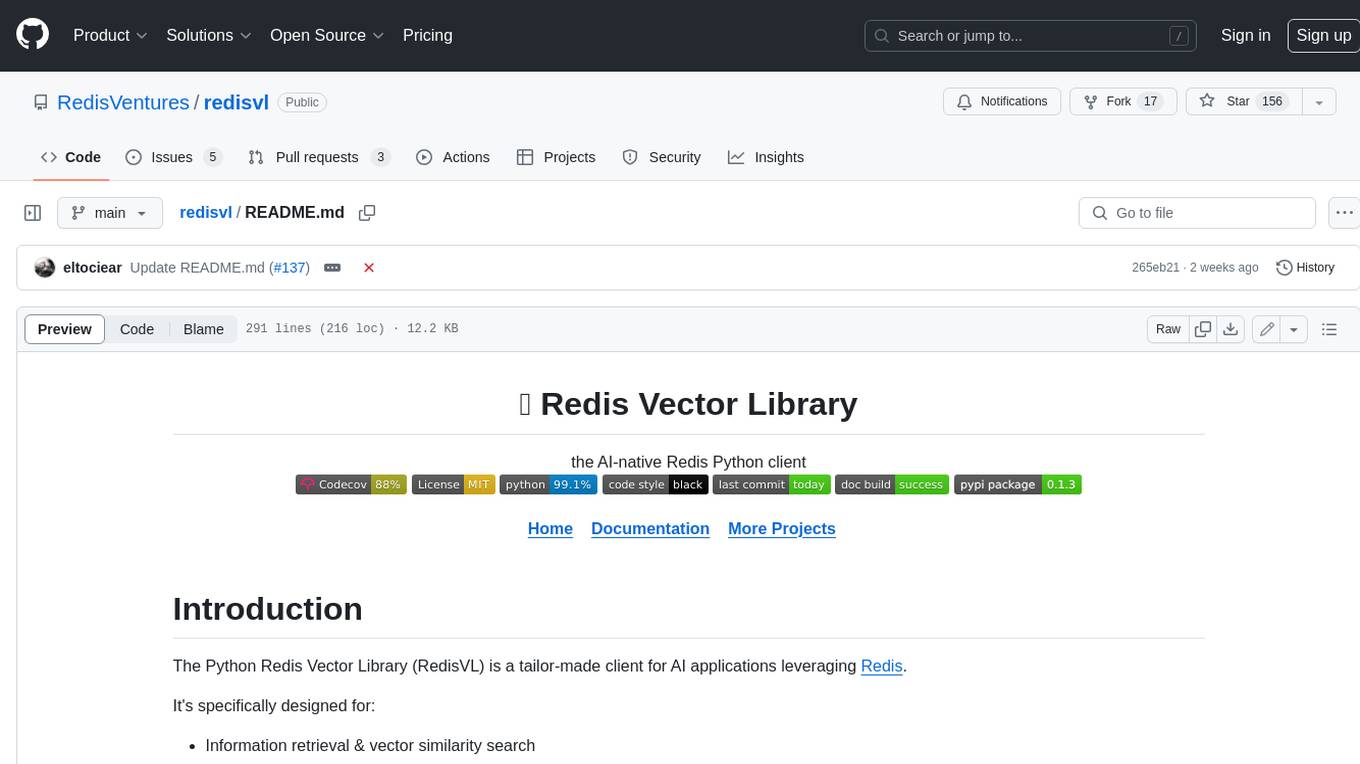

redisvl

Redis Vector Library (RedisVL) is a Python client library for building AI applications on top of Redis. It provides a high-level interface for managing vector indexes, performing vector search, and integrating with popular embedding models and providers. RedisVL is designed to make it easy for developers to build and deploy AI applications that leverage the speed, flexibility, and reliability of Redis.

IntelliNode

IntelliNode is a javascript module that integrates cutting-edge AI models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable diffusion into projects. It offers functions for generating text, speech, and images, as well as semantic search, multi-model evaluation, and chatbot capabilities. The module provides a wrapper layer for low-level model access, a controller layer for unified input handling, and a function layer for abstract functionality tailored to various use cases.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

react-native-vercel-ai

Run Vercel AI package on React Native, Expo, Web and Universal apps. Currently React Native fetch API does not support streaming which is used as a default on Vercel AI. This package enables you to use AI library on React Native but the best usage is when used on Expo universal native apps. On mobile you get back responses without streaming with the same API of `useChat` and `useCompletion` and on web it will fallback to `ai/react`

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

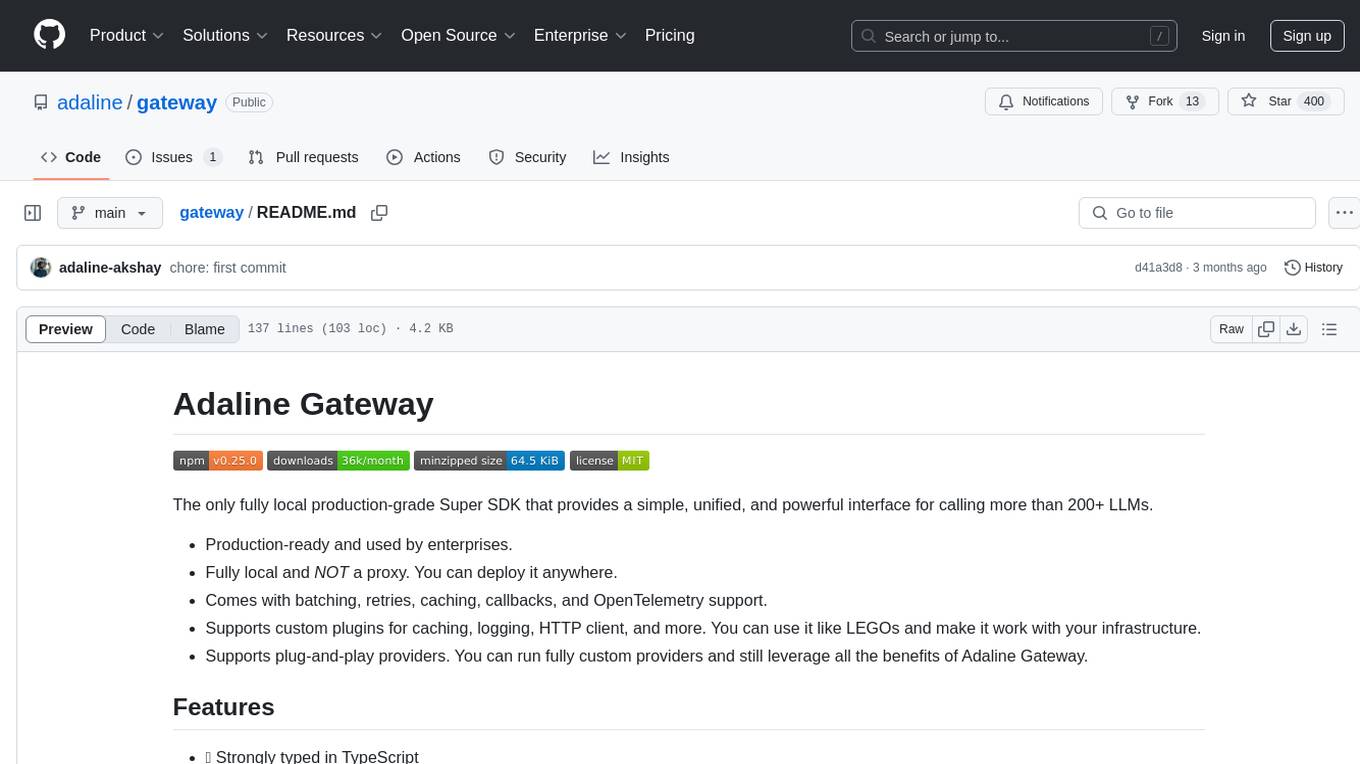

gateway

Adaline Gateway is a fully local production-grade Super SDK that offers a unified interface for calling over 200+ LLMs. It is production-ready, supports batching, retries, caching, callbacks, and OpenTelemetry. Users can create custom plugins and providers for seamless integration with their infrastructure.

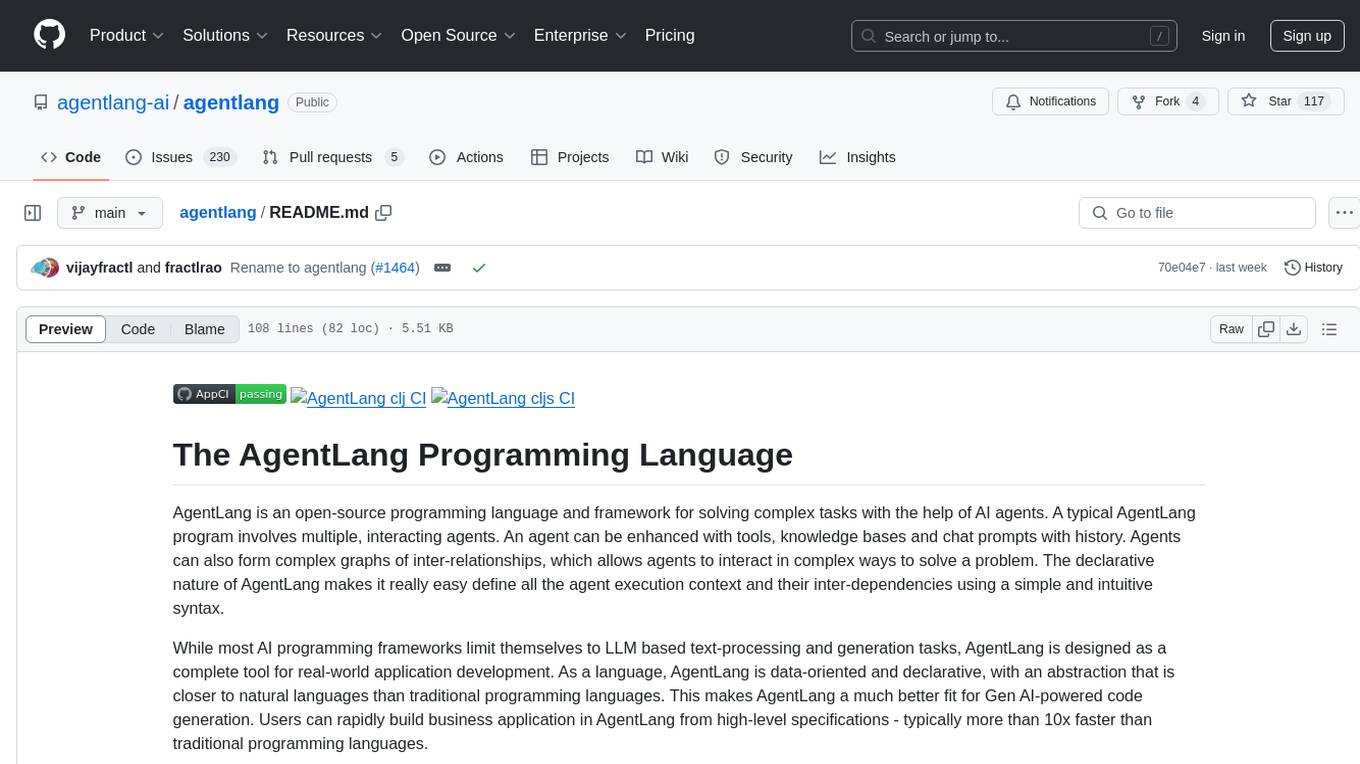

agentlang

AgentLang is an open-source programming language and framework designed for solving complex tasks with the help of AI agents. It allows users to build business applications rapidly from high-level specifications, making it more efficient than traditional programming languages. The language is data-oriented and declarative, with a syntax that is intuitive and closer to natural languages. AgentLang introduces innovative concepts such as first-class AI agents, graph-based hierarchical data model, zero-trust programming, declarative dataflow, resolvers, interceptors, and entity-graph-database mapping.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

For similar tasks

gemini-pro-vision-playground

Gemini Pro Vision Playground is a simple project aimed at assisting developers in utilizing the Gemini Pro Vision and Gemini Pro AI models for building applications. It provides a playground environment for experimenting with these models and integrating them into apps. The project includes instructions for setting up the Google AI API key and running the development server to visualize the results. Developers can learn more about the Gemini API documentation and Next.js framework through the provided resources. The project encourages contributions and feedback from the community.

shards

Shards is a high-performance, multi-platform, type-safe programming language designed for visual development. It is a dataflow visual programming language that enables building full-fledged apps and games without traditional coding. Shards features automatic type checking, optimized shard implementations for high performance, and an intuitive visual workflow for beginners. The language allows seamless round-trip engineering between code and visual models, empowering users to create multi-platform apps easily. Shards also powers an upcoming AI-powered game creation system, enabling real-time collaboration and game development in a low to no-code environment.

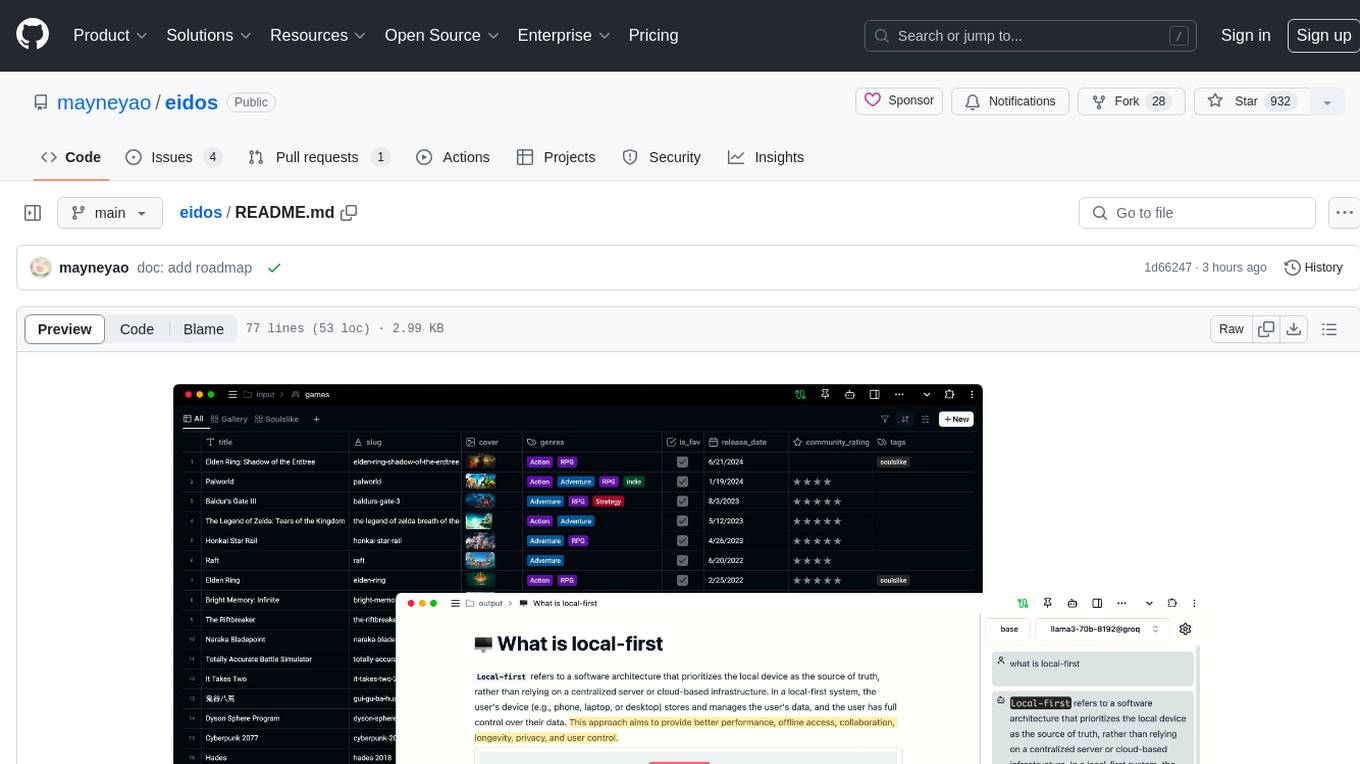

eidos

Eidos is an extensible framework for managing personal data in one place. It runs inside the browser as a PWA with offline support. It integrates AI features for translation, summarization, and data interaction. Users can customize Eidos with Prompt extension, JavaScript for Formula functions, TypeScript/JavaScript for data processing logic, and build apps using any framework. Eidos is developer-friendly with API & SDK, and uses SQLite standardization for data tables.

ai-nodejs

This repository serves as a companion to the Build AI-Powered Apps with OpenAI and Node.js course on Frontend Masters. It includes course notes and provides alternative approaches for deprecated Langchain methods by installing the Langchain community module and importing loaders for document processing from PDFs and YouTube videos.

NeoHaskell

NeoHaskell is a newcomer-friendly and productive dialect of Haskell. It aims to be easy to learn and use, while also powerful enough for app development with minimal effort and maximum confidence. The project prioritizes design and documentation before implementation, with ongoing work on design documents for community sharing.

BlackFriday-GPTs-Prompts

BlackFriday-GPTs-Prompts is a repository that provides a collection of prompts and jailbreaks for various purposes such as programming, marketing, academic, job hunting, game, creative tasks, prompt engineering, business, productivity, and lifestyle. It introduces AiDark.net, an autonomous AI software engineer named Devin, capable of working collaboratively or independently on tasks for review. The repository offers prompts that can be used in GPTOS, along with demo videos showcasing an Android self-coding app builder.

AIDE-Plus

AIDE-Plus is a comprehensive tool for Android app development, offering support for various Java syntax versions, Gradle and Maven build systems, ProGuard, AndroidX, CMake builds, APK/AAB generation, code coloring customization, data binding, and APK signing. It also provides features like AAPT2, D8, runtimeOnly, compileOnly, libgdxNatives, manifest merging, Shizuku installation support, and syntax auto-completion. The tool aims to streamline the development process and enhance the user experience by addressing common issues and providing advanced functionalities.

superplatform

Superplatform is a microservices platform focused on distributed AI management and development. It enables users to self-host AI models, build backendless AI apps, develop microservices-based AI applications, and deploy third-party AI apps easily. The platform supports running open-source AI models privately, building apps leveraging AI models, and utilizing a microservices-based communal backend for diverse projects.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.