llm-client

JS/TS library to make it easy to build with LLMs. Full support for various LLMs and VectorDBs, Agents, Function Calling, Chain-of-Thought, RAG, Semantic Router and more. Based on the popular Stanford DSP paper. Create and compose efficient prompts using prompt signatures. 🌵 🦙 🔥 ❤️ 🖖🏼

Stars: 540

LLMClient is a JavaScript/TypeScript library that simplifies working with large language models (LLMs) by providing an easy-to-use interface for building and composing efficient prompts using prompt signatures. These signatures enable the automatic generation of typed prompts, allowing developers to leverage advanced capabilities like reasoning, function calling, RAG, ReAct, and Chain of Thought. The library supports various LLMs and vector databases, making it a versatile tool for a wide range of applications.

README:


LLMClient is an easy-to-use library built around "Prompt Signatures" from the Stanford DSP paper. The library automatically generates efficient, typed prompts from prompt signatures like `question:string -> answer:string`.

Build powerful workflows using components like RAG, ReAct, Chain of Thought, Function calling, Agents, etc., all built on prompt signatures and easy to compose together to build whatever you want. Using prompt signatures automatically gives you the ability to fine-tune your prompt programs using optimizers. Tune with a larger model and have your program run efficiently on a smaller model. The tuning here is not traditional model tuning but what we call prompt tuning.

- Support for various LLMs and Vector DBs

- Prompts auto-generated from simple signatures

- Multi-Hop RAG, ReAct, CoT, Function Calling and more

- Build Agents that can call other agents

- Convert docs of any format to text

- RAG, smart chunking, embedding, querying

- Automatic prompt tuning using optimizers

- OpenTelemetry tracing / observability

- Production-ready TypeScript code

- Lightweight, zero dependencies

Efficient, type-safe prompts are auto-generated from a simple signature. A prompt signature has the form `"task description" inputField:type "field description" -> outputField:type`. The idea behind prompt signatures is based on work done in the "Demonstrate-Search-Predict" paper.

You can have multiple input and output fields, and each field has one of these types: string, number, boolean, json, or an array of any of these, e.g. string[]. When a type is not defined, it defaults to string. When the json type is used, the underlying AI is encouraged to generate correct JSON.
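To make the signature grammar concrete, here is a minimal, hypothetical parser for it. It is not llmclient's own parser: `parseSignature`, `parseFields`, the `Field`/`Signature` shapes, and the regular expression are assumptions for illustration. It handles an optional quoted task description, comma- or space-separated fields, optional `:type` or `:type[]` annotations that default to `string`, and an optional quoted description after each field.

```typescript
// Hypothetical signature parser (a sketch of the grammar above, not llmclient's code).

type FieldType = "string" | "number" | "boolean" | "json";

interface Field {
  name: string;
  type: FieldType;
  isArray: boolean; // true for types written like string[]
  description?: string;
}

interface Signature {
  task?: string;
  inputs: Field[];
  outputs: Field[];
}

const BASE_TYPES: readonly string[] = ["string", "number", "boolean", "json"];

// name, optional ":type" with optional "[]", optional quoted description
const FIELD_RE = /([A-Za-z_]\w*)(?::([a-z]+)(\[\])?)?(?:\s*"([^"]*)")?/g;

function parseFields(text: string): Field[] {
  const fields: Field[] = [];
  for (const m of text.matchAll(FIELD_RE)) {
    const type = m[2] ?? "string"; // untyped fields default to string
    if (!BASE_TYPES.includes(type)) {
      throw new Error(`unknown type "${type}" for field "${m[1]}"`);
    }
    fields.push({
      name: m[1],
      type: type as FieldType,
      isArray: m[3] !== undefined,
      description: m[4],
    });
  }
  return fields;
}

function parseSignature(sig: string): Signature {
  const parts = sig.split("->");
  if (parts.length !== 2) {
    throw new Error("a signature needs exactly one '->'");
  }
  let left = parts[0].trim();
  let task: string | undefined;
  // An optional leading quoted string is the task description
  const taskMatch = /^"([^"]*)"\s*/.exec(left);
  if (taskMatch) {
    task = taskMatch[1];
    left = left.slice(taskMatch[0].length);
  }
  return { task, inputs: parseFields(left), outputs: parseFields(parts[1]) };
}
```

For example, `parseSignature('question -> answer')` yields one `string` input named `question` and one `string` output named `answer`, while an unknown type such as `a:date` is rejected.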

| Provider | Best Models | Tested |
|---|---|---|
| OpenAI | GPT: 4o, 4T, 4, 3.5 | 🟢 100% |
| Azure OpenAI | GPT: 4, 4T, 3.5 | 🟢 100% |
| Together | Several OSS Models | 🟢 100% |
| Cohere | CommandR, Command | 🟢 100% |
| Anthropic | Claude 2, Claude 3 | 🟢 100% |
| Mistral | 7B, 8x7B, S, M & L | 🟢 100% |
| Groq | Llama2-70B, Mixtral-8x7b | 🟢 100% |
| DeepSeek | Chat and Code | 🟢 100% |
| Ollama | All models | 🟢 100% |
| Google Gemini | Gemini: Flash, Pro | 🟢 100% |
| Hugging Face | OSS models | 🟡 50% |

```typescript
import { AI, ChainOfThought, OpenAIArgs } from 'llmclient';

const textToSummarize = `
The technological singularity—or simply the singularity[1]—is a hypothetical future point in time at which technological growth becomes uncontrollable and irreversible, resulting in unforeseeable changes to human civilization.[2][3] ...`;

const ai = AI('openai', { apiKey: process.env.OPENAI_APIKEY } as OpenAIArgs);

const gen = new ChainOfThought(
  ai,
  `textToSummarize -> shortSummary "summarize in 5 to 10 words"`
);

const res = await gen.forward({ textToSummarize });

console.log('>', res);
```

Use the agent prompt (framework) to build agents that work with other agents to complete tasks. Agents are easy to build with prompt signatures. Try out the agent example.

```typescript
// Run with: npm run tsx ./src/examples/agent.ts
import { AI, Agent, OpenAIArgs } from 'llmclient';

const ai = AI('openai', { apiKey: process.env.OPENAI_APIKEY } as OpenAIArgs);

const researcher = new Agent(ai, {
  name: 'researcher',
  description: 'Researcher agent',
  signature: `physicsQuestion "physics questions" -> answer "reply in bullet points"`
});

const summarizer = new Agent(ai, {
  name: 'summarizer',
  description: 'Summarizer agent',
  signature: `text "text to summarize" -> shortSummary "summarize in 5 to 10 words"`
});

const agent = new Agent(ai, {
  name: 'agent',
  description: 'An agent to research complex topics',
  signature: `question -> answer`,
  agents: [researcher, summarizer]
});

// The input key must match the signature's input field (`question`)
const res = await agent.forward({ question: 'How many atoms are there in the universe' });
console.log(res);
```

A special router that makes no LLM calls and uses only embeddings to route user requests smartly.

Use the Router to efficiently route user queries to specific routes designed to handle certain types of questions or tasks. Each route is tailored to a particular domain or service area. Instead of using a slow or expensive LLM to decide how user input should be handled, use our fast "Semantic Router", which relies on inexpensive and fast embedding queries.
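Semantic routing boils down to nearest-neighbor matching between the embedding of the user's query and the embeddings of each route's example phrases. The sketch below is a hypothetical, self-contained illustration of that idea, not llmclient's `Router` implementation: `route`, `cosine`, the `threshold` parameter, and the tiny hand-made 3-dimensional vectors (standing in for real embeddings so it runs offline) are all assumptions.

```typescript
// Hypothetical sketch of embedding-based ("semantic") routing, not llmclient's Router.

type Vector = number[];

// Cosine similarity: 1 means same direction, 0 means orthogonal (unrelated)
function cosine(a: Vector, b: Vector): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

interface RouteEmbeddings {
  name: string;
  examples: Vector[]; // embeddings of the route's example phrases
}

// Return the route whose closest example best matches the query,
// or undefined if no example scores above the threshold.
function route(query: Vector, routes: RouteEmbeddings[], threshold = 0.5): string | undefined {
  let best: string | undefined;
  let bestScore = threshold;
  for (const r of routes) {
    for (const example of r.examples) {
      const score = cosine(query, example);
      if (score > bestScore) {
        bestScore = score;
        best = r.name;
      }
    }
  }
  return best;
}

const routes: RouteEmbeddings[] = [
  { name: "customerSupport", examples: [[1, 0, 0], [0.9, 0.1, 0]] },
  { name: "technicalSupport", examples: [[0, 1, 0], [0.1, 0.9, 0]] },
];

console.log(route([0.8, 0.2, 0], routes)); // customerSupport
```

Once the example phrases are embedded, each incoming query costs one embedding call plus a cheap similarity scan, which is why this approach is faster and cheaper than asking a chat model to classify the request.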

```typescript
// Run with: npm run tsx ./src/examples/routing.ts
import { AI, OpenAIArgs, Route, Router } from 'llmclient';

const customerSupport = new Route('customerSupport', [
  'how can I return a product?',
  'where is my order?',
  'can you help me with a refund?',
  'I need to update my shipping address',
  'my product arrived damaged, what should I do?'
]);

const technicalSupport = new Route('technicalSupport', [
  'how do I install your software?',
  'I’m having trouble logging in',
  'can you help me configure my settings?',
  'my application keeps crashing',
  'how do I update to the latest version?'
]);

const ai = AI('openai', { apiKey: process.env.OPENAI_APIKEY } as OpenAIArgs);

const router = new Router(ai);

await router.setRoutes(
  [customerSupport, technicalSupport],
  { filename: 'router.json' }
);

const tag = await router.forward('I need help with my order');

if (tag === 'customerSupport') {
  // handle customer support requests
}

if (tag === 'technicalSupport') {
  // handle technical support requests
}
```

Vector databases are critical to building LLM workflows. We have clean abstractions over popular vector DBs, as well as our own quick in-memory vector database.

| Provider | Tested |
|---|---|
| In Memory | 🟢 100% |
| Weaviate | 🟢 100% |
| Cloudflare | 🟡 50% |
| Pinecone | 🟡 50% |
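Conceptually, an in-memory vector database stores vectors per table keyed by id (so an upsert overwrites an existing id) and answers queries by ranking the stored vectors by similarity to the query vector. The hypothetical sketch below shows that with cosine similarity and a brute-force scan; `MemoryVectorStore`, its method signatures, and the `topK` parameter are assumptions for illustration and do not mirror llmclient's `DB` API.

```typescript
// Hypothetical in-memory vector store, not llmclient's DB class.

type Vector = number[];

function cosine(a: Vector, b: Vector): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

interface Row {
  id: string;
  values: Vector;
}

interface Match {
  id: string;
  score: number;
}

class MemoryVectorStore {
  // table name -> (row id -> vector)
  private tables = new Map<string, Map<string, Vector>>();

  // Insert a row, or overwrite its vector if the id already exists
  upsert(table: string, row: Row): void {
    let rows = this.tables.get(table);
    if (!rows) {
      rows = new Map<string, Vector>();
      this.tables.set(table, rows);
    }
    rows.set(row.id, row.values);
  }

  // Brute-force scan: score every row and return the topK most similar
  query(table: string, values: Vector, topK = 3): Match[] {
    const rows = this.tables.get(table);
    if (!rows) return [];
    const matches: Match[] = [];
    for (const [id, vector] of rows) {
      matches.push({ id, score: cosine(values, vector) });
    }
    return matches.sort((a, b) => b.score - a.score).slice(0, topK);
  }
}
```

A full scan like this is fine for small collections; dedicated vector databases use indexing structures so queries stay fast as the number of vectors grows.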

```typescript
import { AI, DB, OpenAIArgs } from 'llmclient';

const ai = AI('openai', { apiKey: process.env.OPENAI_APIKEY } as OpenAIArgs);

// Create embeddings from text using an LLM
const ret = await ai.embed({ texts: 'hello world' });

// Create an in-memory vector db
const db = new DB('memory');

// Insert into vector db
await db.upsert({
  id: 'abc',
  table: 'products',
  values: ret.embeddings[0]
});

// Query for similar entries using embeddings
const matches = await db.query({
  table: 'products',
  values: ret.embeddings[0]
});
```

Alternatively, you can use the DBManager, which handles smart chunking, embedding, and querying for you; it makes things almost too easy.
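Embedding models accept a limited amount of input, so long documents have to be split into chunks before they are embedded. As a rough, hypothetical illustration of that step, the sketch below packs whole sentences into chunks of at most `maxChars` characters (a single over-long sentence still becomes its own chunk) and repeats the last `overlap` sentences at the start of the next chunk so context is not lost at chunk borders. `chunkText` and both parameters are assumptions; DBManager's "smart chunking" may use a different strategy entirely.

```typescript
// Naive sentence-packing chunker: an illustrative assumption, not DBManager's algorithm.
function chunkText(text: string, maxChars = 200, overlap = 1): string[] {
  // Split into sentences ending in . ! or ? (the last one may lack punctuation)
  const matched: string[] = text.match(/[^.!?]+[.!?]*/g) ?? [];
  const sentences = matched.map((s) => s.trim()).filter((s) => s.length > 0);

  const chunks: string[] = [];
  let current: string[] = [];

  for (const sentence of sentences) {
    const candidate = [...current, sentence].join(" ");
    // Close the current chunk when adding this sentence would exceed maxChars
    if (candidate.length > maxChars && current.length > 0) {
      chunks.push(current.join(" "));
      // Carry the last `overlap` sentences into the next chunk for context
      current = overlap > 0 ? current.slice(-overlap) : [];
    }
    current.push(sentence);
  }
  if (current.length > 0) chunks.push(current.join(" "));
  return chunks;
}
```

Keep `overlap` well below the typical number of sentences per chunk; otherwise consecutive chunks end up mostly repeating each other.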

```typescript
// Assumes `ai` and `db` are set up as in the previous example and `text` holds your document
const manager = new DBManager({ ai, db });

await manager.insert(text);

const matches = await manager.query(
  'John von Neumann on human intelligence and singularity.'
);

console.log(matches);
```

Using documents like PDF, DOCX, PPT, XLS, etc. with LLMs is a huge pain. We make it easy with the help of Apache Tika, an open-source document processing engine.

Launch Apache Tika:

```shell
docker run -p 9998:9998 apache/tika
```

Convert documents to text and embed them for retrieval using the DBManager, which also supports a reranker and a query rewriter. Two default implementations, DefaultResultReranker and DefaultQueryRewriter, are available to use.
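A reranker reorders the passages returned by the vector search before they are handed to the LLM. The hypothetical sketch below uses a simple lexical heuristic: it counts how many distinct query terms each passage contains and breaks ties by the original retrieval order. `rerank` and `tokenize` are illustrative names only; DefaultResultReranker may work quite differently.

```typescript
// Hypothetical lexical reranker, not llmclient's DefaultResultReranker.

// Lowercase and split into ASCII word tokens
function tokenize(s: string): string[] {
  return s.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

// Sort passages by number of distinct query terms they contain;
// ties keep the order in which the vector search returned them.
function rerank(query: string, passages: string[]): string[] {
  const terms = new Set(tokenize(query));
  return passages
    .map((text, index) => {
      const overlap = new Set(tokenize(text).filter((t) => terms.has(t))).size;
      return { text, index, overlap };
    })
    .sort((a, b) => b.overlap - a.overlap || a.index - b.index)
    .map((p) => p.text);
}
```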

```typescript
// Assumes Apache Tika is running locally (see above) and `ai` and `db` are already set up
const tika = new ApacheTika();

const text = await tika.convert('/path/to/document.pdf');

const manager = new DBManager({ ai, db });

await manager.insert(text);

const matches = await manager.query('Find some text');

console.log(matches);
```

The ability to trace and observe your LLM workflow is critical to building production workflows. OpenTelemetry is an industry standard, and we support the new gen_ai attribute namespace.

```typescript
import { trace } from "@opentelemetry/api";
import {
  BasicTracerProvider,
  ConsoleSpanExporter,
  SimpleSpanProcessor,
} from "@opentelemetry/sdk-trace-base";
import { AI, ChainOfThought, OllamaArgs } from "llmclient";

const provider = new BasicTracerProvider();
provider.addSpanProcessor(new SimpleSpanProcessor(new ConsoleSpanExporter()));
trace.setGlobalTracerProvider(provider);

const tracer = trace.getTracer("test");

const ai = AI("ollama", {
  model: "nous-hermes2",
  options: { tracer },
} as unknown as OllamaArgs);

const gen = new ChainOfThought(
  ai,
  `text -> shortSummary "summarize in 5 to 10 words"`
);

// Placeholder input: replace with the text you want to summarize
const text = "Text to summarize goes here.";

const res = await gen.forward({ text });
```

The ConsoleSpanExporter prints each span; a chat request span looks like this:

```json
{
  "traceId": "ddc7405e9848c8c884e53b823e120845",
  "name": "Chat Request",
  "id": "d376daad21da7a3c",
  "kind": "SERVER",
  "timestamp": 1716622997025000,
  "duration": 14190456.542,
  "attributes": {
    "gen_ai.system": "Ollama",
    "gen_ai.request.model": "nous-hermes2",
    "gen_ai.request.max_tokens": 500,
    "gen_ai.request.temperature": 0.1,
    "gen_ai.request.top_p": 0.9,
    "gen_ai.request.frequency_penalty": 0.5,
    "gen_ai.request.llm_is_streaming": false,
    "http.request.method": "POST",
    "url.full": "http://localhost:11434/v1/chat/completions",
    "gen_ai.usage.completion_tokens": 160,
    "gen_ai.usage.prompt_tokens": 290
  }
}
```

You can tune your prompts using a larger model to help them run more efficiently and give you better results. This is done by using an optimizer like BootstrapFewShot with examples from the popular HotPotQA dataset. The optimizer generates demonstrations (demos) which, when used with the prompt, help improve its efficiency.
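To make the optimizer idea more concrete, here is a self-contained sketch of two common QA metrics and a toy version of bootstrapping demos. `exactMatch` and `tokenF1` follow the spirit of the SQuAD evaluation script's normalization (lowercasing, dropping punctuation and the articles a/an/the), and `bootstrapDemos` keeps, as few-shot demos, the training examples whose predictions pass the metric. All names here are hypothetical; they are not llmclient's `emScore` or `BootstrapFewShot`, which may differ in details such as what gets recorded in a demo.

```typescript
// Normalize: lowercase, replace non-ASCII-alphanumerics with spaces, drop articles
function normalize(s: string): string[] {
  return s
    .toLowerCase()
    .replace(/[^a-z0-9\s]/g, " ")
    .split(/\s+/)
    .filter((w) => w.length > 0 && w !== "a" && w !== "an" && w !== "the");
}

// Exact match: 1 if the normalized strings are identical, else 0
function exactMatch(prediction: string, answer: string): number {
  return normalize(prediction).join(" ") === normalize(answer).join(" ") ? 1 : 0;
}

// Token-level F1: harmonic mean of precision and recall over shared tokens
function tokenF1(prediction: string, answer: string): number {
  const pred = normalize(prediction);
  const gold = normalize(answer);
  const remaining = new Map<string, number>();
  for (const w of gold) remaining.set(w, (remaining.get(w) ?? 0) + 1);
  let common = 0;
  for (const w of pred) {
    const n = remaining.get(w) ?? 0;
    if (n > 0) {
      common++;
      remaining.set(w, n - 1);
    }
  }
  if (common === 0) return 0;
  const precision = common / pred.length;
  const recall = common / gold.length;
  return (2 * precision * recall) / (precision + recall);
}

interface Example {
  question: string;
  answer: string;
}
type Program = (question: string) => string;
type Metric = (prediction: string, answer: string) => number;

// Toy bootstrap step: run the program over training examples and keep, as
// few-shot demos, those whose prediction scores at least `threshold`.
function bootstrapDemos(
  program: Program,
  examples: Example[],
  metric: Metric,
  maxDemos = 4,
  threshold = 1,
): Example[] {
  const demos: Example[] = [];
  for (const ex of examples) {
    if (demos.length >= maxDemos) break;
    const prediction = program(ex.question);
    if (metric(prediction, ex.answer) >= threshold) {
      demos.push({ question: ex.question, answer: prediction });
    }
  }
  return demos;
}
```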

```typescript
import {
  AI,
  BootstrapFewShot,
  ChainOfThought,
  emScore,
  HFDataLoader,
  MetricFn,
  OpenAIArgs
} from 'llmclient';

// Download the HotPotQA dataset from Hugging Face
const hf = new HFDataLoader();
const examples = await hf.getData<{ question: string; answer: string }>({
  dataset: 'hotpot_qa',
  split: 'train',
  count: 100,
  fields: ['question', 'answer']
});

const ai = AI('openai', { apiKey: process.env.OPENAI_APIKEY } as OpenAIArgs);

// Set up the program to tune
const program = new ChainOfThought<{ question: string }, { answer: string }>(
  ai,
  `question -> answer "in short 2 or 3 words"`
);

// Set up a Bootstrap Few Shot optimizer to tune the above program
const optimize = new BootstrapFewShot<{ question: string }, { answer: string }>({
  program,
  examples
});

// Set up an evaluation metric; EM and F1 scores are popular ways to measure retrieval performance
const metricFn: MetricFn = ({ prediction, example }) =>
  emScore(prediction.answer as string, example.answer as string);

// Run the optimizer and save the result
await optimize.compile(metricFn, { filename: 'demos.json' });
```

To use the generated demos with the above ChainOfThought program:

```typescript
import { AI, ChainOfThought, OpenAIArgs } from 'llmclient';

const ai = AI('openai', { apiKey: process.env.OPENAI_APIKEY } as OpenAIArgs);

// Set up the program to use the tuned data
const program = new ChainOfThought<{ question: string }, { answer: string }>(
  ai,
  `question -> answer "in short 2 or 3 words"`
);

// Load tuning data
program.loadDemos('demos.json');

const res = await program.forward({
  question: 'What castle did David Gregory inherit?'
});

console.log(res);
```

Use the tsx command to run the examples; it lets Node run TypeScript code directly. It also supports using a .env file to pass the AI API keys instead of putting them on the command line.

```shell
OPENAI_APIKEY=openai_key npm run tsx ./src/examples/marketing.ts
```

| Example | Description |
|---|---|
| customer-support.ts | Extract valuable details from customer communications |
| food-search.ts | Use multiple APIs to find dining options |
| marketing.ts | Generate short, effective marketing SMS messages |
| vectordb.ts | Chunk, embed and search text |
| fibonacci.ts | Use the JS code interpreter to compute Fibonacci numbers |
| summarize.ts | Generate a short summary of a large block of text |
| chain-of-thought.ts | Use chain-of-thought prompting to answer questions |
| rag.ts | Use multi-hop retrieval to answer questions |
| rag-docs.ts | Convert PDF to text and embed for RAG search |
| react.ts | Use function calling and reasoning to answer questions |
| agent.ts | Agent framework: agents can use other agents, tools, etc. |
| qna-tune.ts | Use an optimizer to improve prompt efficiency |
| qna-use-tuned.ts | Use the optimized tuned prompts |

Often you need the LLM to reason through a task and fetch and update external data related to that task. This is where reasoning meets function (API) calling. It's built in, so you get all of the magic automatically. Just define the functions you wish to use and a schema for the response object, and that's it.

There are even some useful built-in functions like a Code Interpreter that the LLM can use to write and execute JS code.

We support providers like OpenAI that offer parallel function calling (several function calls in one response), as well as standard single function calling.

| Function | Description |
|---|---|
| Code Interpreter | Used by the LLM to execute JS code in a sandboxed env. |
| Embeddings Adapter | Wrapper to fetch and pass embeddings to your function |
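Function calling comes down to a dispatch step: the model replies with one or more calls, each naming a function and carrying its arguments as a JSON string, and your code runs the matching handler and sends the result back. The sketch below is a hypothetical, simplified version of that step, not llmclient's internals. `dispatch`, `FunctionCall`, and `FunctionDef` are illustrative names, handlers are synchronous for brevity (the README's `func` is `async`, so a real loop would await them, for example with `Promise.all`), and only the `required` list of the JSON Schema is checked.

```typescript
// Hypothetical dispatch step for function calling, not llmclient's internals.

interface FunctionCall {
  id: string;
  name: string;
  arguments: string; // JSON-encoded arguments as produced by the model
}

interface FunctionDef {
  name: string;
  description: string;
  required: string[];
  func: (args: Record<string, unknown>) => string;
}

interface FunctionResult {
  id: string;
  result: string;
}

// Run every requested call (a parallel-capable provider may request several
// at once) and return one result per call. Errors are returned as text so
// they can be sent back to the model instead of crashing the loop.
function dispatch(calls: FunctionCall[], defs: FunctionDef[]): FunctionResult[] {
  const registry = new Map<string, FunctionDef>();
  for (const d of defs) registry.set(d.name, d);

  return calls.map((call): FunctionResult => {
    const def = registry.get(call.name);
    if (!def) return { id: call.id, result: `error: unknown function "${call.name}"` };

    let parsed: unknown;
    try {
      parsed = JSON.parse(call.arguments);
    } catch {
      return { id: call.id, result: "error: arguments are not valid JSON" };
    }
    if (typeof parsed !== "object" || parsed === null || Array.isArray(parsed)) {
      return { id: call.id, result: "error: arguments must be a JSON object" };
    }
    const args = parsed as Record<string, unknown>;

    // Check required parameters before invoking the handler
    const missing = def.required.filter((key) => !(key in args));
    if (missing.length > 0) return { id: call.id, result: `error: missing ${missing.join(", ")}` };

    return { id: call.id, result: def.func(args) };
  });
}
```

Returning errors as text rather than throwing is a design choice here: it gives the model a chance to correct its call on the next turn.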

Large language models (LLMs) are getting really powerful and have reached a point where they can work as the backend for your entire product. However, there is still a lot of complexity to manage, from using the right prompts to choosing the right models. Our goal is to package all this complexity into a well-maintained, easy-to-use library that can work with all the LLMs out there. Additionally, we are using the latest research to add useful new capabilities like DSP to the library.

```typescript
// Pick an LLM
const ai = new OpenAI({ apiKey: process.env.OPENAI_APIKEY } as OpenAIArgs);
```

```typescript
// Can be subclassed to build your own memory backends
const mem = new Memory();
```

```typescript
const cot = new ChainOfThought(ai, `question:string -> answer:string`, { mem });
const res = await cot.forward({ question: 'Are we in a simulation?' });
```

```typescript
const res = await ai.chat([
  { role: "system", content: "Help the customer with his questions" },
  { role: "user", content: "I'm looking for a Macbook Pro M2 With 96GB RAM?" }
]);
```

```typescript
// Define one or more functions and a function handler
const functions = [
  {
    name: 'getCurrentWeather',
    description: 'get the current weather for a location',
    parameters: {
      type: 'object',
      properties: {
        location: {
          type: 'string',
          description: 'location to get weather for'
        },
        units: {
          type: 'string',
          enum: ['imperial', 'metric'],
          default: 'imperial',
          description: 'units to use'
        }
      },
      required: ['location']
    },
    func: async (args: Readonly<{ location: string; units: string }>) => {
      return `The weather in ${args.location} is 72 degrees`;
    }
  }
];
```

```typescript
const cot = new ReAct(ai, `question:string -> answer:string`, { functions });
```

```typescript
// Enable debug output
const ai = new OpenAI({ apiKey: process.env.OPENAI_APIKEY } as OpenAIArgs);
ai.setOptions({ debug: true });
```

We're happy to help. Reach out if you have questions, or join the Discord. twitter/dosco

Improve the function naming and description, and be very clear about what the function does. Also ensure the function parameters have good descriptions. The descriptions don't have to be very long, but they need to be clear.

You can pass a configuration object (`conf`) when creating a new LLM object:

```typescript
const apiKey = process.env.OPENAI_APIKEY;
const conf = OpenAIBestConfig();
const ai = new OpenAI({ apiKey, conf } as OpenAIArgs);
```

```typescript
// Set the maximum number of tokens
const conf = OpenAIDefaultConfig(); // or OpenAIBestOptions()
conf.maxTokens = 2000;
```

```typescript
// Change the model
const conf = OpenAIDefaultConfig(); // or OpenAIBestOptions()
conf.model = OpenAIModel.GPT4Turbo;
```

Alternative AI tools for llm-client

Similar Open Source Tools


parrot.nvim

Parrot.nvim is a Neovim plugin that prioritizes a seamless out-of-the-box experience for text generation. It simplifies functionality and focuses solely on text generation, excluding integration of DALLE and Whisper. It supports persistent conversations as markdown files, custom hooks for inline text editing, multiple providers like Anthropic API, perplexity.ai API, OpenAI API, Mistral API, and local/offline serving via ollama. It allows custom agent definitions, flexible API credential support, and repository-specific instructions with a `.parrot.md` file. It does not have autocompletion or hidden requests in the background to analyze files.

monacopilot

Monacopilot is a powerful and customizable AI auto-completion plugin for the Monaco Editor. It supports multiple AI providers such as Anthropic, OpenAI, Groq, and Google, providing real-time code completions with an efficient caching system. The plugin offers context-aware suggestions, customizable completion behavior, and framework agnostic features. Users can also customize the model support and trigger completions manually. Monacopilot is designed to enhance coding productivity by providing accurate and contextually appropriate completions in daily spoken language.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output with respect to defined Context-Free Grammar (CFG) rules. It supports general-purpose programming languages like Python, Go, SQL, JSON, and more, allowing users to define custom grammars using EBNF syntax. The tool compares favorably to other constrained decoders and offers features like fast grammar-guided generation, compatibility with HuggingFace Language Models, and the ability to work with various decoding strategies.

wtf.nvim

wtf.nvim is a Neovim plugin that enhances diagnostic debugging by providing explanations and solutions for code issues using ChatGPT. It allows users to search the web for answers directly from Neovim, making the debugging process faster and more efficient. The plugin works with any language that has LSP support in Neovim, offering AI-powered diagnostic assistance and seamless integration with various resources for resolving coding problems.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output based on a Context-Free Grammar (CFG). It supports various programming languages like Python, Go, SQL, Math, JSON, and more. Users can define custom grammars using EBNF syntax. SynCode offers fast generation, seamless integration with HuggingFace Language Models, and the ability to sample with different decoding strategies.

mistral-inference

Mistral Inference repository contains minimal code to run 7B, 8x7B, and 8x22B models. It provides model download links, installation instructions, and usage guidelines for running models via CLI or Python. The repository also includes information on guardrailing, model platforms, deployment, and references. Users can interact with models through commands like mistral-demo, mistral-chat, and mistral-common. Mistral AI models support function calling and chat interactions for tasks like testing models, chatting with models, and using Codestral as a coding assistant. The repository offers detailed documentation and links to blogs for further information.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

magentic

Easily integrate Large Language Models into your Python code. Simply use the `@prompt` and `@chatprompt` decorators to create functions that return structured output from the LLM. Mix LLM queries and function calling with regular Python code to create complex logic.

vinagent

Vinagent is a lightweight and flexible library designed for building smart agent assistants across various industries. It provides a simple yet powerful foundation for creating AI-powered customer service bots, data analysis assistants, or domain-specific automation agents. With its modular tool system, users can easily extend their agent's capabilities by integrating a wide range of tools that are self-contained, well-documented, and can be registered dynamically. Vinagent allows users to scale and adapt their agents to new tasks or environments effortlessly.

next-token-prediction

Next-Token Prediction is a language model tool that allows users to create high-quality predictions for the next word, phrase, or pixel based on a body of text. It can be used as an alternative to well-known decoder-only models like GPT and Mistral. The tool provides options for simple usage with built-in data bootstrap or advanced customization by providing training data or creating it from .txt files. It aims to simplify methodologies, provide autocomplete, autocorrect, spell checking, search/lookup functionalities, and create pixel and audio transformers for various prediction formats.

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

LLM.swift

LLM.swift is a simple and readable library that allows you to interact with large language models locally with ease for macOS, iOS, watchOS, tvOS, and visionOS. It's a lightweight abstraction layer over the `llama.cpp` package, so it stays as performant as possible while always being up to date. Theoretically, any model that works on `llama.cpp` should work with this library as well. It's only a single-file library, so you can copy, study, and modify the code however you want.

For similar tasks

promptfoo

Promptfoo is a tool for testing and evaluating LLM output quality. With promptfoo, you can build reliable prompts, models, and RAGs with benchmarks specific to your use-case, speed up evaluations with caching, concurrency, and live reloading, score outputs automatically by defining metrics, use as a CLI, library, or in CI/CD, and use OpenAI, Anthropic, Azure, Google, HuggingFace, open-source models like Llama, or integrate custom API providers for any LLM API.


SimplerLLM

SimplerLLM is an open-source Python library that simplifies interactions with Large Language Models (LLMs) for researchers and beginners. It provides a unified interface for different LLM providers, tools for enhancing language model capabilities, and easy development of AI-powered tools and apps. The library offers features like unified LLM interface, generic text loader, RapidAPI connector, SERP integration, prompt template builder, and more. Users can easily set up environment variables, create LLM instances, use tools like SERP, generic text loader, calling RapidAPI APIs, and prompt template builder. Additionally, the library includes chunking functions to split texts into manageable chunks based on different criteria. Future updates will bring more tools, interactions with local LLMs, prompt optimization, response evaluation, GPT Trainer, document chunker, advanced document loader, integration with more providers, Simple RAG with SimplerVectors, integration with vector databases, agent builder, and LLM server.

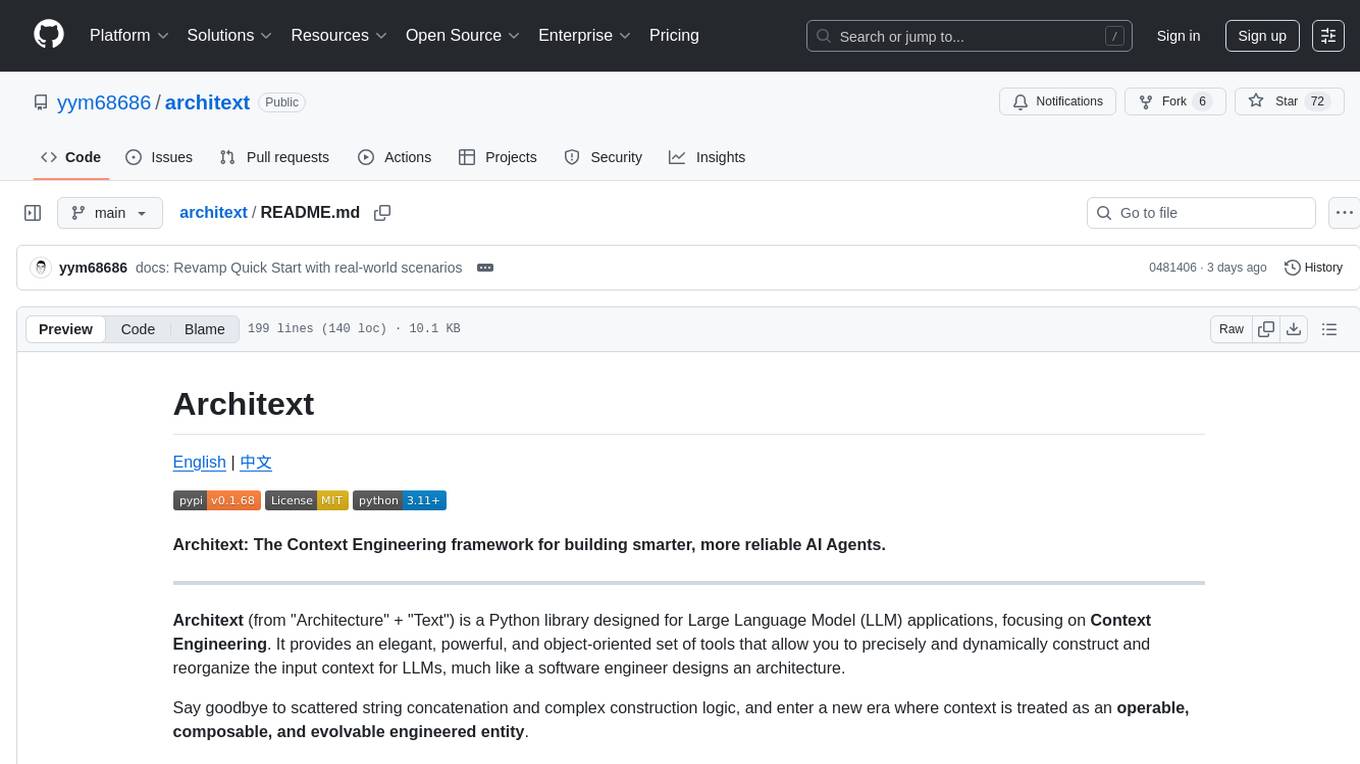

architext

Architext is a Python library designed for Large Language Model (LLM) applications, focusing on Context Engineering. It provides tools to construct and reorganize input context for LLMs dynamically. The library aims to elevate context construction from ad-hoc to systematic engineering, enabling precise manipulation of context content for AI Agents.

Awesome-LLM-Robotics

This repository contains a curated list of **papers using Large Language/Multi-Modal Models for Robotics/RL**. Template from awesome-Implicit-NeRF-Robotics. Please feel free to send me pull requests or email to add papers! If you find this repository useful, please consider citing and STARing this list. Feel free to share this list with others! Overview: Surveys, Reasoning, Planning, Manipulation, Instructions and Navigation, Simulation Frameworks, Citation.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

instructor-js

Instructor is a TypeScript library for structured extraction, powered by LLMs and designed for simplicity, transparency, and control. It stands out for its user-centric design. Whether you're a seasoned developer or just starting out, you'll find Instructor's approach intuitive and steerable.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.