blendsql

Query language for blending SQL and local language models across structured + unstructured data, with type constraints.

Stars: 160

BlendSQL is a superset of SQLite designed for problem decomposition and hybrid question-answering with Large Language Models (LLMs). It allows users to blend operations over heterogeneous data sources like tables, text, and images, combining the structured and interpretable reasoning of SQL with the generalizable reasoning of LLMs. Users can oversee all calls (LLM + SQL) within a unified query language, enabling tasks such as building LLM chatbots for travel planning and answering complex questions by injecting 'ingredients' as callable functions.

README:

```sql
SELECT {{
    LLMQA(
        'Describe BlendSQL in 50 words.',
        context=(
            SELECT content[0:5000] AS "README"
            FROM read_text('https://raw.githubusercontent.com/parkervg/blendsql/main/README.md')
        )
    )
}} AS answer
```

SQL 🤝 LLMs

Check out our online documentation for a more comprehensive overview.

Join our Discord server for more discussion!

```bash
pip install uv && uv pip install blendsql
```

```python
import pandas as pd

from blendsql import BlendSQL
from blendsql.models import VLLM

model = VLLM("RedHatAI/gemma-3-12b-it-quantized.w4a16", base_url="http://localhost:8000/v1/")

# Prepare our BlendSQL connection
bsql = BlendSQL(
    {
        "People": pd.DataFrame(
            {
                "Name": [
                    "George Washington",
                    "John Adams",
                    "Thomas Jefferson",
                    "James Madison",
                    "James Monroe",
                    "Alexander Hamilton",
                    "Sabrina Carpenter",
                    "Charli XCX",
                    "Elon Musk",
                    "Michelle Obama",
                    "Elvis Presley",
                ],
                "Known_For": [
                    "Established federal government, First U.S. President",
                    "XYZ Affair, Alien and Sedition Acts",
                    "Louisiana Purchase, Declaration of Independence",
                    "War of 1812, Constitution",
                    "Monroe Doctrine, Missouri Compromise",
                    "Created national bank, Federalist Papers",
                    "Nonsense, Emails I Cant Send, Mean Girls musical",
                    "Crash, How Im Feeling Now, Boom Clap",
                    "Tesla, SpaceX, Twitter/X acquisition",
                    "Lets Move campaign, Becoming memoir",
                    "14 Grammys, King of Rock n Roll",
                ],
            }
        ),
        "Eras": pd.DataFrame({"Years": ["1700-1800", "1800-1900", "1900-2000", "2000-Now"]}),
    },
    model=model,
    verbose=True,
)

smoothie = bsql.execute(
    """
    SELECT * FROM People P
    WHERE P.Name IN {{
        LLMQA('First 3 presidents of the U.S?', quantifier='{3}')
    }}
    """,
    infer_gen_constraints=True,  # Is `True` by default
)

smoothie.print_summary()
# ┌───────────────────┬───────────────────────────────────────────────────────┐
# │ Name              │ Known_For                                             │
# ├───────────────────┼───────────────────────────────────────────────────────┤
# │ George Washington │ Established federal government, First U.S. Preside... │
# │ John Adams        │ XYZ Affair, Alien and Sedition Acts                   │
# │ Thomas Jefferson  │ Louisiana Purchase, Declaration of Independence       │
# └───────────────────┴───────────────────────────────────────────────────────┘
# ┌────────────┬──────────────────────┬─────────────────┬─────────────────────┐
# │   Time (s) │   # Generation Calls │   Prompt Tokens │   Completion Tokens │
# ├────────────┼──────────────────────┼─────────────────┼─────────────────────┤
# │    1.25158 │                    1 │             296 │                  16 │
# └────────────┴──────────────────────┴─────────────────┴─────────────────────┘

smoothie = bsql.execute(
    """
    SELECT GROUP_CONCAT(Name, ', ') AS 'Names',
    {{
        LLMMap(
            'In which time period was this person born?',
            p.Name,
            options=Eras.Years
        )
    }} AS Born
    FROM People p
    GROUP BY Born
    """,
)

smoothie.print_summary()
# ┌───────────────────────────────────────────────────────┬───────────┐
# │ Names                                                 │ Born      │
# ├───────────────────────────────────────────────────────┼───────────┤
# │ George Washington, John Adams, Thomas Jefferson, J... │ 1700-1800 │
# │ Sabrina Carpenter, Charli XCX, Elon Musk, Michelle... │ 2000-Now  │
# │ Elvis Presley                                         │ 1900-2000 │
# └───────────────────────────────────────────────────────┴───────────┘
# ┌────────────┬──────────────────────┬─────────────────┬─────────────────────┐
# │   Time (s) │   # Generation Calls │   Prompt Tokens │   Completion Tokens │
# ├────────────┼──────────────────────┼─────────────────┼─────────────────────┤
# │    1.03858 │                    2 │             544 │                  75 │
# └────────────┴──────────────────────┴─────────────────┴─────────────────────┘

smoothie = bsql.execute("""
    SELECT {{
        LLMQA(
            'Describe BlendSQL in 50 words.',
            context=(
                SELECT content[0:5000] AS "README"
                FROM read_text('https://raw.githubusercontent.com/parkervg/blendsql/main/README.md')
            )
        )
    }} AS answer
""")

smoothie.print_summary()
# ┌─────────────────────────────────────────────────────┐
# │ answer                                              │
# ├─────────────────────────────────────────────────────┤
# │ BlendSQL is a Python library that combines SQL a... │
# └─────────────────────────────────────────────────────┘
# ┌────────────┬──────────────────────┬─────────────────┬─────────────────────┐
# │   Time (s) │   # Generation Calls │   Prompt Tokens │   Completion Tokens │
# ├────────────┼──────────────────────┼─────────────────┼─────────────────────┤
# │    4.07617 │                    1 │            1921 │                  50 │
# └────────────┴──────────────────────┴─────────────────┴─────────────────────┘
```

BlendSQL is a superset of SQL for problem decomposition and hybrid question-answering with LLMs.

As a result, we can Blend together...

- 🥤 ...operations over heterogeneous data sources (e.g. tables, text, images)

- 🥤 ...the structured & interpretable reasoning of SQL with the generalizable reasoning of LLMs

This shows up in several optimizations: LLM functions exit early when a LIMIT clause is used, the outputs of earlier LLM functions filter the inputs of later LLM functions, and so on.
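The early-exit idea can be sketched in plain Python. This is only an illustration under stated assumptions, not BlendSQL's implementation: `fake_llm_is_president` is a hypothetical stand-in for an expensive model call, and `select_with_limit` is an invented helper that evaluates it lazily so that work stops once the limit is reached.

```python
from itertools import islice

calls = 0  # counts how often the stand-in model is invoked


def fake_llm_is_president(name: str) -> bool:
    """Hypothetical stand-in for an expensive LLM call."""
    global calls
    calls += 1
    return name in {"George Washington", "John Adams", "Thomas Jefferson"}


def select_with_limit(names, limit):
    """Lazily filter `names`, stopping once `limit` rows have passed."""
    passing = (n for n in names if fake_llm_is_president(n))
    return list(islice(passing, limit))


names = [
    "Elvis Presley",
    "George Washington",
    "John Adams",
    "Elon Musk",
    "Thomas Jefferson",
]
first_two = select_with_limit(names, limit=2)
print(first_two, calls)
```

Only the first three names reach the stand-in model here; the remaining two are never evaluated because the limit is already satisfied.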

But, at a higher level: Existing DBMS (database management systems) are already highly optimized, and many very smart people get paid a lot of money to keep them at the cutting-edge. Rather than reinvent the wheel, we can leverage their optimizations and only pull the subset of data into memory that is logically required to pass to the language model functions. We then prep the database state via temporary tables, and finally sync back to the native SQL dialect and execute. In this way, BlendSQL 'compiles to SQL'.
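The flow described above (filter in the database, call the model on the surviving rows, stage results in a temporary table, then run ordinary SQL) can be sketched with the standard-library `sqlite3` module. Everything here is an assumption for illustration: the `people` and `llm_out` tables, the sample rows, and `fake_llm_map`, which stands in for a language model function. It is not how BlendSQL itself is implemented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (name TEXT, birth_year INTEGER)")
conn.executemany(
    "INSERT INTO people VALUES (?, ?)",
    [("Ada", 1815), ("Grace", 1906), ("Linus", 1969), ("Guido", 1956)],
)

llm_calls = []  # records every value passed to the stand-in model


def fake_llm_map(name: str) -> str:
    """Hypothetical stand-in for a language model function."""
    llm_calls.append(name)
    return name.upper()


# 1. Let the database apply the vanilla SQL filter first.
subset = [
    row[0]
    for row in conn.execute(
        "SELECT name FROM people WHERE birth_year > 1950 ORDER BY name"
    )
]

# 2. Call the model only on the rows that survived the filter.
mapped = [(name, fake_llm_map(name)) for name in subset]

# 3. Stage the model outputs in a temporary table.
conn.execute("CREATE TEMP TABLE llm_out (name TEXT, shouted TEXT)")
conn.executemany("INSERT INTO llm_out VALUES (?, ?)", mapped)

# 4. Run the final query as plain SQL in the native dialect.
result = conn.execute(
    "SELECT p.name, o.shouted FROM people p "
    "JOIN llm_out o ON p.name = o.name "
    "WHERE p.birth_year > 1950 ORDER BY p.name"
).fetchall()
print(result, llm_calls)
```

The stand-in model sees two of the four rows; the other two are filtered out by the database before any model call is made.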

For more info on query execution in BlendSQL, see Section 2.4 here.

- (2/4/26) Optimized VLLM integration, particularly for `LLMMap`
  - Define max concurrent async calls via `blendsql.config.set_async_limit(32)`

- (11/7/25) 📝New paper: Play by the Type Rules: Inferring Constraints for LLM Functions in Declarative Programs

- (5/30/25) Created a Discord server

- (5/6/25): New blog post: Language Models, SQL, and Types, Oh My!

- (5/1/25): Single-page function documentation

- (10/26/24) New tutorial! blendsql-by-example.ipynb

- Supports many DBMS 💾
  - SQLite, PostgreSQL, DuckDB, Pandas (aka duckdb in a trenchcoat)
- Optimized async-based parallelism with vLLM ✨
- Write your normal queries - smart parsing optimizes what is passed to external functions 🧠
  - Traverses abstract syntax tree with sqlglot to minimize LLM function calls 🌳
- Constrained decoding with guidance 🚀
  - We only generate syntactically valid outputs according to query syntax + database contents
- LLM function caching, built on diskcache 🔑
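To illustrate why caching LLM function calls pays off, here is a minimal sketch. It uses an in-memory dict rather than diskcache, and the key scheme (a hash of the question and value) is an assumption for illustration, not BlendSQL's actual cache key. `fake_llm` and `cached_llm` are hypothetical names.

```python
import hashlib
import json

cache = {}  # in-memory stand-in for an on-disk cache
model_calls = 0


def fake_llm(question: str, value: str) -> str:
    """Hypothetical stand-in for a model call."""
    global model_calls
    model_calls += 1
    return f"answer for {value}"


def cached_llm(question: str, value: str) -> str:
    """Return a cached answer when the same (question, value) pair repeats."""
    key = hashlib.sha256(json.dumps([question, value]).encode()).hexdigest()
    if key not in cache:
        cache[key] = fake_llm(question, value)
    return cache[key]


answers = [cached_llm("Is this a sport?", v) for v in ["Golf", "Chair", "Golf"]]
print(answers, model_calls)
```

The repeated `"Golf"` input is served from the cache, so the stand-in model runs twice for three inputs.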

On a dataset of complex questions converted to executable declarative programs (e.g. How many test takers are there at the school/s in a county with population over 2 million?), BlendSQL is 53% faster than the pandas-based LOTUS. See Section 4 of Play by the Type Rules: Inferring Constraints for LLM Functions in Declarative Programs for more details.

Many DBMS, such as DuckDB, allow for the creation of Python user-defined functions (UDFs). So why not just use those to embed language model functions instead of BlendSQL? The below plot adds the DuckDB UDF approach to the same benchmark we did above - where DuckDB UDFs come in at an average of 133.2 seconds per query.

The reason for this? DuckDB uses a generalized query optimizer, very good at many different optimizations. But when we introduce a UDF with an unknown cost, many values get passed to the highly expensive language model functions that could have been filtered out via vanilla SQL expressions first (JOIN, WHERE, LIMIT, etc.).

This highlights an important point about the value-add of BlendSQL. While you can just import the individual language model functions and call them on data (see here) - if you know the larger query context where the function output will be used, you should use the BlendSQL query optimizer (bsql.execute()), built specifically for language model functions. As demonstrated above, it makes a huge difference for large database contexts, and out-of-the-box UDFs without the ability to assign cost don't cut it.

> [!TIP]
> How do we know the BlendSQL optimizer is passing the minimal required data to the language model functions? Check out our extensive test suite for examples.

The below examples can use this model initialization logic to define the variable model. See here for more information on blendsql models.

```python
from blendsql.models import VLLM

model = VLLM("RedHatAI/gemma-3-12b-it-quantized.w4a16", base_url="http://localhost:8000/v1/")
```

For all the below examples, use `smoothie.print_summary()` to get an overview of the inputs and outputs.

```python
import pandas as pd

from blendsql import BlendSQL

if __name__ == "__main__":
    bsql = BlendSQL(
        {
            "posts": pd.DataFrame(
                {"content": ["I hate this product", "I love this product"]}
            )
        },
        model=model,
        verbose=True,
    )

    smoothie = bsql.execute(
        """
        SELECT {{
            LLMMap(
                'What is the sentiment of this text?',
                content,
                options=('positive', 'negative', 'neutral')
            )
        }} AS classification FROM posts
        """
    )

    print(smoothie.df)
```

Some question answering tasks require hybrid reasoning - some information is present in a given table, but some information exists only in external free text documents.

```python
import pandas as pd

from blendsql import BlendSQL

bsql = BlendSQL(
    {
        "world_aquatic_championships": pd.DataFrame(
            [
                {
                    "Medal": "Silver",
                    "Name": "Dana Vollmer",
                    "Sport": "Swimming",
                    "Event": "Women's 100 m butterfly",
                    "Time/Score": "56.87",
                    "Date": "July 25",
                },
                {
                    "Medal": "Gold",
                    "Name": "Ryan Lochte",
                    "Sport": "Swimming",
                    "Event": "Men's 200 m freestyle",
                    "Time/Score": "1:44.44",
                },
                {
                    "Medal": "Gold",
                    "Name": "Rebecca Soni",
                    "Sport": "Swimming",
                    "Event": "Women's 100 m breaststroke",
                    "Time/Score": "1:05.05",
                    "Date": "July 26",
                },
                {
                    "Medal": "Gold",
                    "Name": "Elizabeth Beisel",
                    "Sport": "Swimming",
                    "Event": "Women's 400 m individual medley",
                    "Time/Score": "4:31.78",
                    "Date": "July 31",
                },
            ]
        )
    },
    model=model,
    verbose=True,  # Set `verbose=True` to see the query plan as it executes
)

# Models are lazy loaded by default. Use this line if you want to pre-load models before execution.
_ = bsql.model.model_obj
```

We can now create a custom function that will:

- Fill in our f-string templatized question with values in the database
- Batch-retrieve the top `k` relevant documents for each unrolled question
- Batch-apply the provided language model to generate a type-constrained output given the document contexts
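The three steps above can be sketched without any model or vector store. Everything in this block is a toy assumption: `retrieve` ranks documents by word overlap (a stand-in for real retrieval), `fake_llm_year` pulls the first four-digit number from the context (a stand-in for type-constrained generation), and the documents are shortened for illustration.

```python
import re

documents = [
    "Ryan Lochte was born August 3, 1984.",
    "Rebecca Soni was born March 18, 1987.",
    "Elizabeth Beisel was born August 18, 1992.",
]


def retrieve(question: str, k: int = 1) -> list[str]:
    """Toy retriever: rank documents by words shared with the question."""
    q_tokens = set(re.findall(r"\w+", question.lower()))
    ranked = sorted(
        documents,
        key=lambda d: len(q_tokens & set(re.findall(r"\w+", d.lower()))),
        reverse=True,
    )
    return ranked[:k]


def fake_llm_year(question: str, context: list[str]) -> int:
    """Hypothetical model stand-in: first 4-digit number found in the context."""
    return int(re.search(r"\d{4}", " ".join(context)).group())


template = "What year was {} born?"
names = ["Ryan Lochte", "Rebecca Soni", "Elizabeth Beisel"]

# 1. Unroll the template with database values.
questions = [template.format(name) for name in names]
# 2. Retrieve context per question, 3. produce an integer answer for each.
years = {n: fake_llm_year(q, retrieve(q)) for n, q in zip(names, questions)}
print(years)
```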

```python
from blendsql.search import TavilySearch, FaissVectorStore
from blendsql.ingredients import LLMMap

USE_TAVILY = True  # This requires a `.env` file with a `TAVILY_API_KEY` variable defined

if USE_TAVILY:
    context_searcher = TavilySearch(k=3)
else:
    # We can also define a local FAISS vector store
    context_searcher = FaissVectorStore(
        model_name_or_path="sentence-transformers/all-mpnet-base-v2",
        documents=[
            "Ryan Steven Lochte (/ˈlɒkti/ LOK-tee; born August 3, 1984) is an American former competition swimmer and 12-time Olympic medalist.",
            "Rebecca Soni (born March 18, 1987) is an American former competition swimmer and breaststroke specialist.",
            "Elizabeth Lyon Beisel (/ˈbaɪzəl/; born August 18, 1992) is an American competition swimmer who specializes in backstroke and individual medley events.",
        ],
        k=3,
    )

DocumentSearchMap = LLMMap.from_args(
    context_searcher=context_searcher
)

# This line registers our new function in our `BlendSQL` connection context
# Replacement scans allow us to now reference the function by the variable name we initialized it to (`DocumentSearchMap`)
bsql.ingredients = {DocumentSearchMap}

# Define a blendsql program to answer: 'What is the name of the oldest person who won gold?'
smoothie = bsql.execute(
    """
    SELECT Name FROM world_aquatic_championships w
    WHERE Medal = 'Gold'
    /* By default, blendsql infers type constraints given expression context. */
    /* So below, the return_type will be constrained to an integer (`\d+`) */
    ORDER BY {{DocumentSearchMap('What year was {} born?', w.Name)}} ASC LIMIT 1
    """
)

print(smoothie.df)
# ┌─────────────┐
# │ Name        │
# ├─────────────┤
# │ Ryan Lochte │
# └─────────────┘
```

To analyze the prompts we sent to the model, we can access `GLOBAL_HISTORY`.

```python
from blendsql import GLOBAL_HISTORY

# This is a list
print(GLOBAL_HISTORY)
```

Notice in the above example - what if two athletes were born in the same year, but different days?

In this case, simply fetching the year of birth isn't enough for the ordering we need to do. For cases when the required datatype is unable to be inferred via expression context, you can override the inferred default via passing return_type. The following are valid. All below can be wrapped in a List[...] type.

| `return_type` Argument | Regex | DB Mapping Logic |
|---|---|---|
| `any` | N.A. | N.A. The DB implicitly casts the type, if type affinity is supported (e.g. SQLite does this). |
| `str` | N.A. | N.A. Same behavior as `any`, but the language model is prompted with the cue that the return type should look like a string. |
| `int` | `r"-?(\d+)"` | |
| `float` | `r"-?(\d+(\.\d+)?)"` | |
| `bool` | `r"(t\|f\|true\|false\|True\|False)"` | |
| `substring` (*Only valid for `LLMMap`) | complicated - see https://github.com/guidance-ai/guidance/blob/main/guidance/library/_substring.py#L11 | |
| `date` | `r"\d{4}-\d{2}-\d{2}"` | The ISO8601 is inserted into the query as a date type. This differs for different DBMS - in DuckDB, it would be `'1992-09-20'::DATE` |
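To get a feel for what these patterns accept, they can be checked with Python's standard `re` module. This is a sketch for illustration only; `PATTERNS` and `satisfies` are hypothetical names, and BlendSQL enforces constraints during decoding rather than by post-hoc matching like this.

```python
import re

# Patterns copied from the table above.
PATTERNS = {
    "int": r"-?(\d+)",
    "float": r"-?(\d+(\.\d+)?)",
    "bool": r"(t|f|true|false|True|False)",
    "date": r"\d{4}-\d{2}-\d{2}",
}


def satisfies(return_type: str, text: str) -> bool:
    """True if `text` matches the whole pattern for `return_type`."""
    return re.fullmatch(PATTERNS[return_type], text) is not None


print(satisfies("int", "1984"))          # True
print(satisfies("int", "born in 1984"))  # False
print(satisfies("date", "1992-08-18"))   # True

# ISO 8601 date strings sort chronologically as plain strings,
# so two people born in the same year are still ordered correctly.
births = {"A": "1984-08-03", "B": "1984-02-11"}
oldest = min(births, key=births.get)
print(oldest)  # B
```

The last lines show why a `date` return type resolves the same-year tie that a year-only integer cannot.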

```python
smoothie = bsql.execute(
    """
    SELECT Name FROM world_aquatic_championships w
    WHERE Medal = 'Gold'
    /* Defining `return_type = 'date'` will constrain generation to a date format, and handle type conversion to the respective database context for you. */
    /* For example, DuckDB and SQLite store dates as an ISO 8601 string */
    ORDER BY {{DocumentSearchMap('When was {} born?', w.Name, return_type='date')}} ASC LIMIT 1
    """
)
```

Below we use the scalar `LLMQA` function to do a search over our documents with the question formatted with a value from the structured `european_countries` table.

```python
import pandas as pd

from blendsql import BlendSQL
from blendsql.search import FaissVectorStore
from blendsql.ingredients import LLMQA

bsql = BlendSQL(
    {
        "documents": pd.DataFrame(
            [
                {
                    "title": "Steve Nash",
                    "content": "Steve Nash played college basketball at Santa Clara University",
                },
                {
                    "title": "E.F. Codd",
                    "content": 'Edgar Frank "Ted" Codd (19 August 1923 – 18 April 2003) was a British computer scientist who, while working for IBM, invented the relational model for database management, the theoretical basis for relational databases and relational database management systems.',
                },
                {
                    "title": "George Washington",
                    "content": "George Washington (February 22, 1732 – December 14, 1799) was a Founding Father and the first president of the United States, serving from 1789 to 1797.",
                },
                {
                    "title": "Thomas Jefferson",
                    "content": "Thomas Jefferson (April 13, 1743 – July 4, 1826) was an American Founding Father and the third president of the United States from 1801 to 1809.",
                },
                {
                    "title": "John Adams",
                    "content": "John Adams (October 30, 1735 – July 4, 1826) was an American Founding Father who was the second president of the United States from 1797 to 1801.",
                },
            ]
        ),
        "european_countries": pd.DataFrame(
            [
                {
                    "Country": "Portugal",
                    "Area (km²)": 91568,
                    "Population (As of 2011)": 10555853,
                    "Population density (per km²)": 115.2,
                    "Capital": "Lisbon",
                },
                {
                    "Country": "Sweden",
                    "Area (km²)": 449964,
                    "Population (As of 2011)": 9088728,
                    "Population density (per km²)": 20.1,
                    "Capital": "Stockholm",
                },
                {
                    "Country": "United Kingdom",
                    "Area (km²)": 244820,
                    "Population (As of 2011)": 62300000,
                    "Population density (per km²)": 254.4,
                    "Capital": "London",
                },
            ]
        ),
    },
    model=model,
    verbose=True,
)

USE_SEARCH = True

if USE_SEARCH:
    LLMQA = LLMQA.from_args(
        context_searcher=FaissVectorStore(
            model_name_or_path="sentence-transformers/all-mpnet-base-v2",
            documents=bsql.db.execute_to_list("SELECT DISTINCT title || content FROM documents"),
            k=3,
        )
    )
    bsql.ingredients = {LLMQA}

smoothie = bsql.execute(
    """
    SELECT {{
        LLMQA(
            'Who is from {}?',
            /* The below subquery gets executed, and the result is inserted into the above `{}`. */
            (
                SELECT Country FROM european_countries c
                WHERE Capital = 'London'
            )
        )
    }} AS answer
    """
)

print(smoothie.df)
# ┌────────────┐
# │ answer     │
# ├────────────┤
# │ E.F. Codd  │
# └────────────┘
```

```python
from blendsql import BlendSQL
from blendsql.search import HybridSearch
from blendsql.ingredients import LLMMap

MultiLabelMap = LLMMap.from_args(
    few_shot_examples=[
        {
            "question": "What medical conditions does the patient have?",
            "mapping": {
                "Patient experienced severe nausea and vomiting after taking the prescribed medication. The symptoms started within 2 hours of administration and persisted for 24 hours.": [
                    "nausea",
                    "vomiting",
                    "gastrointestinal distress",
                ],
                "Subject reported persistent headache and dizziness following drug treatment. These symptoms interfered with daily activities and lasted for several days.": [
                    "headache",
                    "dizziness",
                    "neurological symptoms",
                ],
            },
            "column_name": "patient_description",
            "table_name": "w",
            "return_type": "list[str]",
        }
    ],
    # Below, `a_long_list_of_unique_reactions` is a list[str] containing all 24k possible labels
    options_searcher=HybridSearch(
        documents=a_long_list_of_unique_reactions, model_name_or_path="intfloat/e5-base-v2", k=5
    ),
)

bsql = BlendSQL(
    {
        "w": {
            "patient_description": [
                "Patient complained of severe stomach pain and diarrhea after taking the medication. The gastrointestinal symptoms were debilitating and required medical attention.",
                "Subject experienced extreme fatigue and muscle weakness following medication administration. Energy levels remained critically low for 48-72 hours post-treatment.",
            ]
        },
    },
    model=model,
    verbose=True,
    ingredients=[MultiLabelMap],
)
```

Since we've configured our `MultiLabelMap` function with an `options_searcher`, for each new input to the function, it will:

- Fetch the `k` most similar options according to our similarity criteria (in this case, Hybrid BM25 + vector search).
- Restrict LLM generation for each value to the `k` value-level retrieved options.

Combining this with the `return_type` and `quantifier` arguments, we have a powerful multi-label predictor.
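The retrieve-then-restrict pattern can be sketched with a toy similarity function. Jaccard token overlap is used here purely as a stand-in for the hybrid BM25 + vector search; `tokens`, `top_k_options`, and the option list are hypothetical and exist only for this illustration.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased alphabetic tokens of `text`."""
    return set(re.findall(r"[a-z]+", text.lower()))


def top_k_options(value: str, options: list[str], k: int) -> list[str]:
    """Rank options by Jaccard token overlap with `value` (a toy similarity)."""
    v = tokens(value)

    def score(option: str) -> float:
        o = tokens(option)
        return len(v & o) / len(v | o)

    return sorted(options, key=score, reverse=True)[:k]


all_options = ["stomach pain", "diarrhea", "headache", "muscle weakness", "fatigue"]
description = "Patient complained of severe stomach pain and diarrhea."

# Generation for this value would then be restricted to these candidates.
candidates = top_k_options(description, all_options, k=2)
print(candidates)
```

With a large label space, shrinking the candidate set per input value keeps the constrained-generation step small regardless of how many labels exist overall.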

```python
smoothie = bsql.execute(
    """
    SELECT patient_description,
    {{
        MultiLabelMap(
            'What medical conditions does the patient have?',
            patient_description,
            return_type='list[str]',
            quantifier='{5}'
        )
    }} AS prediction
    FROM w
    """
)
```

> [!NOTE]
> You may be asking - "In the above query, why do we need to specify the `return_type`? I thought the whole thing with BlendSQL was that it would infer constraints for me?" While that's true, type inference has a limit. If a query is just selecting the output of some generic LLM function, the expression context doesn't give us any hints as to what return type the user wants - a string? list? integer? In cases like these, it's important to set the `return_type` to explicitly define the output space for the model.

For the LLM-based ingredients in BlendSQL, few-shot prompting can be vital. In LLMMap, LLMQA and LLMJoin, we provide an interface to pass custom few-shot examples.

```python
from blendsql import BlendSQL
from blendsql.ingredients.builtin import LLMMap, DEFAULT_MAP_FEW_SHOT

ingredients = {
    LLMMap.from_args(
        few_shot_examples=[
            *DEFAULT_MAP_FEW_SHOT,
            {
                "question": "Is this a sport?",
                "mapping": {
                    "Soccer": True,
                    "Chair": False,
                    "Banana": False,
                    "Golf": True
                },
                # Below are optional
                "column_name": "Items",
                "table_name": "Table",
                "return_type": "boolean"
            }
        ],
        # How many inference values to pass to model at once
        batch_size=5,
    )
}

bsql = BlendSQL(db, ingredients=ingredients)
```

```python
from blendsql import BlendSQL
from blendsql.ingredients.builtin import LLMQA, DEFAULT_QA_FEW_SHOT

ingredients = {
    LLMQA.from_args(
        few_shot_examples=[
            *DEFAULT_QA_FEW_SHOT,
            {
                "question": "Which weighs the most?",
                # Table context as a column name -> values mapping
                "context": {
                    "Animal": ["Dog", "Gorilla", "Hamster"],
                    "Weight": ["20 pounds", "350 lbs", "100 grams"]
                },
                "answer": "Gorilla",
                # Below are optional
                "options": ["Dog", "Gorilla", "Hamster"]
            }
        ],
        # Lambda to turn the pd.DataFrame to a serialized string
        context_formatter=lambda df: df.to_markdown(
            index=False
        )
    )
}

bsql = BlendSQL(db, ingredients=ingredients)
```

```python
from blendsql import BlendSQL
from blendsql.ingredients.builtin import LLMJoin, DEFAULT_JOIN_FEW_SHOT

ingredients = {
    LLMJoin.from_args(
        few_shot_examples=[
            *DEFAULT_JOIN_FEW_SHOT,
            {
                "join_criteria": "Join the state to its capital.",
                "left_values": ["California", "Massachusetts", "North Carolina"],
                "right_values": ["Sacramento", "Boston", "Chicago"],
                "mapping": {
                    "California": "Sacramento",
                    "Massachusetts": "Boston",
                    "North Carolina": "-"
                }
            }
        ],
    )
}

bsql = BlendSQL(db, ingredients=ingredients)
```

```bibtex
@inproceedings{glenn2025play,
  title={Play by the Type Rules: Inferring Constraints for Small Language Models in Declarative Programs},
  author={Glenn, Parker and Samuel, Alfy and Liu, Daben},
  booktitle={EurIPS 2025 Workshop: AI for Tabular Data}
}

@article{glenn2024blendsql,
  title={BlendSQL: A Scalable Dialect for Unifying Hybrid Question Answering in Relational Algebra},
  author={Parker Glenn and Parag Pravin Dakle and Liang Wang and Preethi Raghavan},
  year={2024},
  eprint={2402.17882},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```

Special thanks to those below for inspiring this project. Definitely recommend checking out the linked work below, and citing when applicable!

- The authors of Binding Language Models in Symbolic Languages
  - This paper was the primary inspiration for BlendSQL.
- The authors of EHRXQA: A Multi-Modal Question Answering Dataset for Electronic Health Records with Chest X-ray Images
  - As far as I can tell, the first publication to propose unifying model calls within SQL
  - Served as the inspiration for the vqa-ingredient.ipynb example
- The authors of Grammar Prompting for Domain-Specific Language Generation with Large Language Models
- The maintainers of the Guidance library for powering the constrained decoding capabilities of BlendSQL


lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in TypeScript and JavaScript.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_, however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

botpress

Botpress is a platform for building next-generation chatbots and assistants powered by OpenAI. It provides a range of tools and integrations to help developers quickly and easily create and deploy chatbots for various use cases.

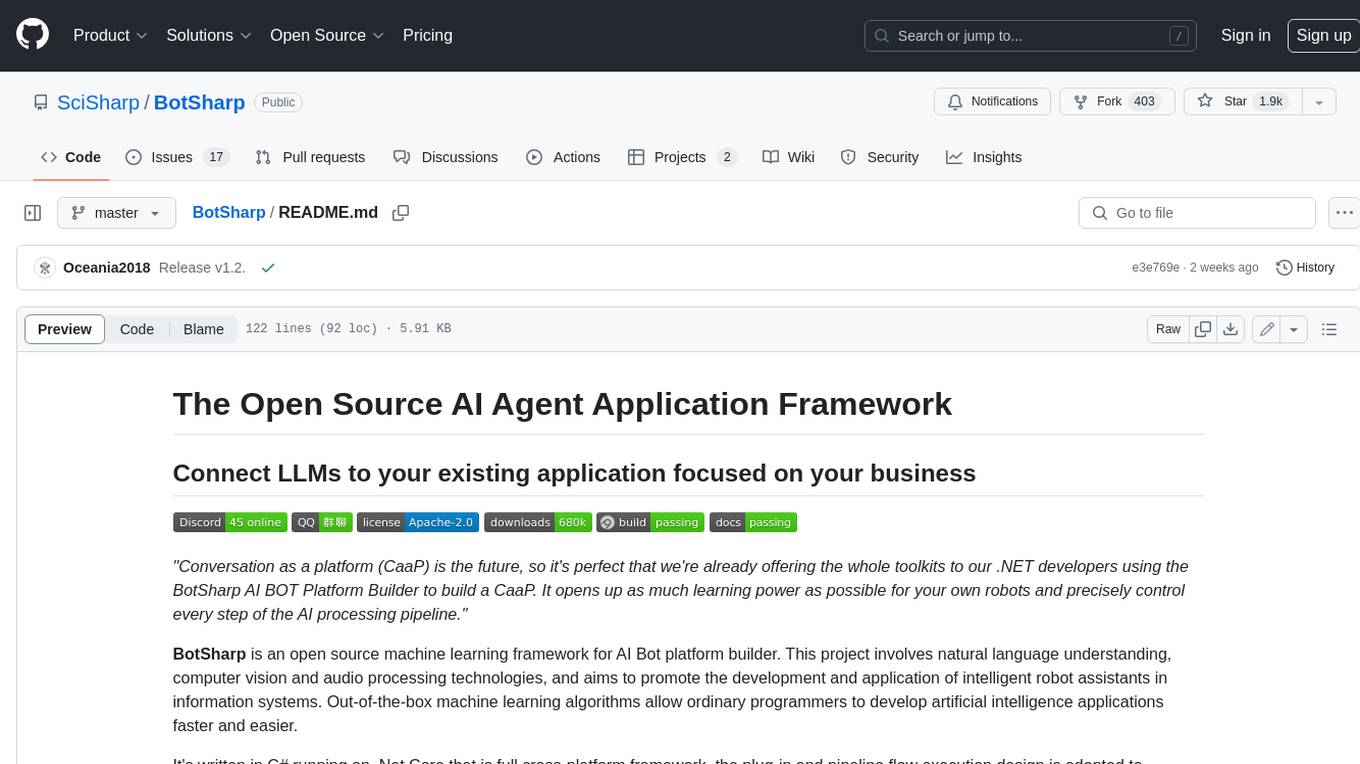

BotSharp

BotSharp is an open-source machine learning framework for building AI bot platforms. It provides a comprehensive set of tools and components for developing and deploying intelligent virtual assistants. BotSharp is designed to be modular and extensible, allowing developers to easily integrate it with their existing systems and applications. With BotSharp, you can quickly and easily create AI-powered chatbots, virtual assistants, and other conversational AI applications.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance in future iterations.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.