go-anthropic

Anthropic Claude API wrapper for Go

Stars: 130

Go-anthropic is an unofficial API wrapper for Anthropic Claude in Go. It supports completions, streaming completions, messages, streaming messages, vision, and tool use. Users can interact with the Anthropic Claude API to generate text completions, analyze messages, process images, and utilize specific tools for various tasks.

README:

Anthropic Claude API wrapper for Go (Unofficial).

This package has support for:

- Completions

- Streaming Completions

- Messages

- Streaming Messages

- Message Batching

- Vision and PDFs

- Tool use (with computer use)

- Prompt Caching

- Token Counting
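For orientation, each Messages call ends up as a POST to Anthropic's `https://api.anthropic.com/v1/messages` endpoint with a JSON body. The sketch below uses only Go's standard library to marshal a minimal single-turn body. The names `textMessage`, `messagesBody` and `buildMessagesBody` are hypothetical and are not go-anthropic's types. The body the wrapper actually sends may contain more fields. Setting `stream` to `true` tells the API to return a server-sent event stream instead of a single response.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// textMessage is one conversation turn; the API accepts a plain string as content.
type textMessage struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

// messagesBody mirrors a minimal Messages API request (hypothetical type).
type messagesBody struct {
	Model     string        `json:"model"`
	MaxTokens int           `json:"max_tokens"`
	Messages  []textMessage `json:"messages"`
	Stream    bool          `json:"stream,omitempty"` // omitted unless streaming
}

// buildMessagesBody marshals a single-turn user request.
func buildMessagesBody(model, prompt string, maxTokens int, stream bool) ([]byte, error) {
	return json.Marshal(messagesBody{
		Model:     model,
		MaxTokens: maxTokens,
		Messages:  []textMessage{{Role: "user", Content: prompt}},
		Stream:    stream,
	})
}

func main() {
	body, err := buildMessagesBody("claude-3-haiku-20240307", "What is your name?", 1000, false)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(body))
}
```

The wrapper's `MessagesRequest` fields (`Model`, `Messages`, `MaxTokens`) in the examples below appear to correspond to these JSON keys.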

```shell
go get github.com/liushuangls/go-anthropic/v2
```

Currently, go-anthropic requires Go version 1.21 or greater.
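The streaming examples rely on server-sent events. The API emits `event:` and `data:` line pairs, and generated text arrives in `content_block_delta` events whose delta has type `text_delta`. This stdlib-only sketch is a rough illustration, not go-anthropic's implementation: it scans such a stream and concatenates the text fragments. The `sample` stream is a trimmed, made-up example, and `collectText` is a hypothetical helper.

```go
package main

import (
	"bufio"
	"encoding/json"
	"fmt"
	"strings"
)

// deltaEvent keeps only the fields this sketch needs from each event payload.
type deltaEvent struct {
	Type  string `json:"type"`
	Delta struct {
		Type string `json:"type"`
		Text string `json:"text"`
	} `json:"delta"`
}

// collectText scans an SSE stream and concatenates every text_delta fragment.
func collectText(stream string) (string, error) {
	var sb strings.Builder
	sc := bufio.NewScanner(strings.NewReader(stream))
	for sc.Scan() {
		line := sc.Text()
		if !strings.HasPrefix(line, "data: ") {
			continue // skip "event:" lines and blank separators
		}
		var ev deltaEvent
		if err := json.Unmarshal([]byte(strings.TrimPrefix(line, "data: ")), &ev); err != nil {
			return "", err
		}
		if ev.Type == "content_block_delta" && ev.Delta.Type == "text_delta" {
			sb.WriteString(ev.Delta.Text)
		}
	}
	return sb.String(), sc.Err()
}

// sample is a trimmed, made-up stream in the documented event format.
const sample = `event: message_start
data: {"type":"message_start","message":{"id":"msg_01","role":"assistant","content":[]}}

event: content_block_delta
data: {"type":"content_block_delta","index":0,"delta":{"type":"text_delta","text":"Hello"}}

event: content_block_delta
data: {"type":"content_block_delta","index":0,"delta":{"type":"text_delta","text":", world"}}

event: message_stop
data: {"type":"message_stop"}
`

func main() {
	text, err := collectText(sample)
	if err != nil {
		panic(err)
	}
	fmt.Println(text) // Hello, world
}
```

In the library examples, the `OnContentBlockDelta` callback receives each of these deltas as it arrives, so you do not parse the stream yourself.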

Messages example

```go
package main

import (
	"context"
	"errors"
	"fmt"

	"github.com/liushuangls/go-anthropic/v2"
)

func main() {
	client := anthropic.NewClient("your anthropic api key")

	// Send a single user turn and wait for the complete reply.
	resp, err := client.CreateMessages(context.Background(), anthropic.MessagesRequest{
		Model: anthropic.ModelClaude3Haiku20240307,
		Messages: []anthropic.Message{
			anthropic.NewUserTextMessage("What is your name?"),
		},
		MaxTokens: 1000,
	})
	if err != nil {
		// API-level failures carry a type and message from Anthropic.
		var e *anthropic.APIError
		if errors.As(err, &e) {
			fmt.Printf("Messages error, type: %s, message: %s\n", e.Type, e.Message)
		} else {
			fmt.Printf("Messages error: %v\n", err)
		}
		return
	}
	fmt.Println(resp.Content[0].GetText())
}
```

Messages Streaming example

```go
package main

import (
	"context"
	"errors"
	"fmt"

	"github.com/liushuangls/go-anthropic/v2"
)

func main() {
	client := anthropic.NewClient("your anthropic api key")

	resp, err := client.CreateMessagesStream(context.Background(), anthropic.MessagesStreamRequest{
		MessagesRequest: anthropic.MessagesRequest{
			Model: anthropic.ModelClaude3Haiku20240307,
			Messages: []anthropic.Message{
				anthropic.NewUserTextMessage("What is your name?"),
			},
			MaxTokens: 1000,
		},
		// Called for each content_block_delta event as text arrives.
		OnContentBlockDelta: func(data anthropic.MessagesEventContentBlockDeltaData) {
			fmt.Printf("Stream Content: %s\n", data.Delta.GetText())
		},
	})
	if err != nil {
		var e *anthropic.APIError
		if errors.As(err, &e) {
			fmt.Printf("Messages stream error, type: %s, message: %s\n", e.Type, e.Message)
		} else {
			fmt.Printf("Messages stream error: %v\n", err)
		}
		return
	}
	// After the stream completes, print the final message text.
	fmt.Println(resp.Content[0].GetText())
}
```

Messages Vision example

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"os"

	"github.com/liushuangls/go-anthropic/v2"
)

func main() {
	client := anthropic.NewClient("your anthropic api key")

	imagePath := "<path to your image>"
	imageMediaType := "image/jpeg"

	// Read the image into memory; os.ReadFile also closes the file.
	imageData, err := os.ReadFile(imagePath)
	if err != nil {
		panic(err)
	}

	resp, err := client.CreateMessages(context.Background(), anthropic.MessagesRequest{
		Model: anthropic.ModelClaude3Opus20240229,
		Messages: []anthropic.Message{
			{
				Role: anthropic.RoleUser,
				// An image block followed by a text prompt about it.
				Content: []anthropic.MessageContent{
					anthropic.NewImageMessageContent(
						anthropic.NewMessageContentSource(
							anthropic.MessagesContentSourceTypeBase64,
							imageMediaType,
							imageData,
						),
					),
					anthropic.NewTextMessageContent("Describe this image."),
				},
			},
		},
		MaxTokens: 1000,
	})
	if err != nil {
		var e *anthropic.APIError
		if errors.As(err, &e) {
			fmt.Printf("Messages error, type: %s, message: %s\n", e.Type, e.Message)
		} else {
			fmt.Printf("Messages error: %v\n", err)
		}
		return
	}
	fmt.Println(resp.Content[0].GetText())
}
```

Messages Tool use example

```go
package main

import (
	"context"
	"fmt"

	"github.com/liushuangls/go-anthropic/v2"
	"github.com/liushuangls/go-anthropic/v2/jsonschema"
)

func main() {
	client := anthropic.NewClient(
		"your anthropic api key",
	)

	// Describe the tool and the JSON Schema of its input.
	request := anthropic.MessagesRequest{
		Model: anthropic.ModelClaude3Haiku20240307,
		Messages: []anthropic.Message{
			anthropic.NewUserTextMessage("What is the weather like in San Francisco?"),
		},
		MaxTokens: 1000,
		Tools: []anthropic.ToolDefinition{
			{
				Name:        "get_weather",
				Description: "Get the current weather in a given location",
				InputSchema: jsonschema.Definition{
					Type: jsonschema.Object,
					Properties: map[string]jsonschema.Definition{
						"location": {
							Type:        jsonschema.String,
							Description: "The city and state, e.g. San Francisco, CA",
						},
						"unit": {
							Type:        jsonschema.String,
							Enum:        []string{"celsius", "fahrenheit"},
							Description: "The unit of temperature, either 'celsius' or 'fahrenheit'",
						},
					},
					Required: []string{"location"},
				},
			},
		},
	}

	// First turn: Claude should reply with a tool_use block.
	resp, err := client.CreateMessages(context.Background(), request)
	if err != nil {
		panic(err)
	}

	// Add the assistant turn (including its tool_use block) to the conversation.
	request.Messages = append(request.Messages, anthropic.Message{
		Role:    anthropic.RoleAssistant,
		Content: resp.Content,
	})

	// Locate the tool_use block in the response.
	var toolUse *anthropic.MessageContentToolUse
	for _, c := range resp.Content {
		if c.Type == anthropic.MessagesContentTypeToolUse {
			toolUse = c.MessageContentToolUse
		}
	}
	if toolUse == nil {
		panic("tool use not found")
	}

	// Second turn: return the tool's result (hard-coded here) and ask again.
	request.Messages = append(request.Messages, anthropic.NewToolResultsMessage(toolUse.ID, "65 degrees", false))

	resp, err = client.CreateMessages(context.Background(), request)
	if err != nil {
		panic(err)
	}
	fmt.Printf("Response: %+v\n", resp)
}
```

Prompt Caching

doc: https://docs.anthropic.com/en/docs/build-with-claude/prompt-caching

```go
package main

import (
	"context"
	"errors"
	"fmt"

	"github.com/liushuangls/go-anthropic/v2"
)

func main() {
	client := anthropic.NewClient(
		"your anthropic api key",
		anthropic.WithBetaVersion(anthropic.BetaPromptCaching20240731),
	)

	resp, err := client.CreateMessages(
		context.Background(),
		anthropic.MessagesRequest{
			Model: anthropic.ModelClaude3Haiku20240307,
			MultiSystem: []anthropic.MessageSystemPart{
				{
					Type: "text",
					Text: "You are an AI assistant tasked with analyzing literary works. Your goal is to provide insightful commentary on themes, characters, and writing style.",
				},
				{
					Type: "text",
					Text: "<the entire contents of Pride and Prejudice>",
					// Mark the large, reusable prefix for caching.
					CacheControl: &anthropic.MessageCacheControl{
						Type: anthropic.CacheControlTypeEphemeral,
					},
				},
			},
			Messages: []anthropic.Message{
				anthropic.NewUserTextMessage("Analyze the major themes in Pride and Prejudice."),
			},
			MaxTokens: 1000,
		},
	)
	if err != nil {
		var e *anthropic.APIError
		if errors.As(err, &e) {
			fmt.Printf("Messages error, type: %s, message: %s\n", e.Type, e.Message)
		} else {
			fmt.Printf("Messages error: %v\n", err)
		}
		return
	}
	// Print token usage to see whether the cache was written or read.
	fmt.Printf("Usage: %+v\n", resp.Usage)
	fmt.Println(resp.Content[0].GetText())
}
```

VertexAI example
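On Vertex AI, Claude is not called at `api.anthropic.com`. According to Anthropic's Vertex AI documentation, requests go to a regional `aiplatform.googleapis.com` URL ending in `:rawPredict` (or `:streamRawPredict` for streaming). The model is named in the URL (e.g. `claude-3-haiku@20240307`) rather than in the body, and the body carries `"anthropic_version": "vertex-2023-10-16"`. The sketch assembles those pieces with the standard library only. It is an assumption that `WithVertexAI` builds exactly this URL, and the project and region values are placeholders.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// vertexEndpoint builds the Vertex AI URL for an Anthropic model:
// rawPredict for single responses, streamRawPredict for streaming.
func vertexEndpoint(project, location, model string, stream bool) string {
	method := "rawPredict"
	if stream {
		method = "streamRawPredict"
	}
	return fmt.Sprintf(
		"https://%s-aiplatform.googleapis.com/v1/projects/%s/locations/%s/publishers/anthropic/models/%s:%s",
		location, project, location, model, method)
}

// vertexBody is a Messages body with "model" moved into the URL
// and anthropic_version added (hypothetical type).
type vertexBody struct {
	AnthropicVersion string              `json:"anthropic_version"`
	MaxTokens        int                 `json:"max_tokens"`
	Stream           bool                `json:"stream,omitempty"`
	Messages         []map[string]string `json:"messages"`
}

func main() {
	fmt.Println(vertexEndpoint("my-project", "us-east5", "claude-3-haiku@20240307", true))
	b, err := json.Marshal(vertexBody{
		AnthropicVersion: "vertex-2023-10-16",
		MaxTokens:        1000,
		Stream:           true,
		Messages:         []map[string]string{{"role": "user", "content": "What is your name?"}},
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(string(b))
}
```

The request is authenticated with a Google OAuth access token (`Authorization: Bearer ...`) instead of an Anthropic API key. That is why the example below passes `token.AccessToken` to `NewClient`.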

If you are using a Google credentials file, you can use the following code to create a client:

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"os"

	"github.com/liushuangls/go-anthropic/v2"
	"golang.org/x/oauth2/google"
)

func main() {
	credBytes, err := os.ReadFile("<path to your credentials file>")
	if err != nil {
		fmt.Printf("Error reading credentials file: %v\n", err)
		return
	}

	// Build a token source from the service-account credentials
	// with JWTAccessTokenSourceWithScope.
	ts, err := google.JWTAccessTokenSourceWithScope(credBytes,
		"https://www.googleapis.com/auth/cloud-platform",
		"https://www.googleapis.com/auth/cloud-platform.read-only",
	)
	if err != nil {
		fmt.Printf("Error creating token source: %v\n", err)
		return
	}

	token, err := ts.Token()
	if err != nil {
		fmt.Printf("Error getting token: %v\n", err)
		return
	}

	// Use the access token as the credential and route requests to Vertex AI.
	client := anthropic.NewClient(token.AccessToken, anthropic.WithVertexAI("<YOUR PROJECTID>", "<YOUR LOCATION>"))

	resp, err := client.CreateMessagesStream(context.Background(), anthropic.MessagesStreamRequest{
		MessagesRequest: anthropic.MessagesRequest{
			Model: anthropic.ModelClaude3Haiku20240307,
			Messages: []anthropic.Message{
				anthropic.NewUserTextMessage("What is your name?"),
			},
			MaxTokens: 1000,
		},
		OnContentBlockDelta: func(data anthropic.MessagesEventContentBlockDeltaData) {
			fmt.Printf("Stream Content: %s\n", data.Delta.GetText())
		},
	})
	if err != nil {
		var e *anthropic.APIError
		if errors.As(err, &e) {
			fmt.Printf("Messages stream error, type: %s, message: %s\n", e.Type, e.Message)
		} else {
			fmt.Printf("Messages stream error: %v\n", err)
		}
		return
	}
	fmt.Println(resp.Content[0].GetText())
}
```

Message Batching

doc: https://docs.anthropic.com/en/docs/build-with-claude/message-batches

```go
package main

import (
	"context"
	"errors"
	"fmt"

	"github.com/liushuangls/go-anthropic/v2"
)

// printErr reports an Anthropic API error with its type, or any other error as-is.
func printErr(op string, err error) {
	var e *anthropic.APIError
	if errors.As(err, &e) {
		fmt.Printf("%s error, type: %s, message: %s\n", op, e.Type, e.Message)
	} else {
		fmt.Printf("%s error: %v\n", op, err)
	}
}

func main() {
	ctx := context.Background()
	client := anthropic.NewClient(
		"your anthropic api key",
		anthropic.WithBetaVersion(anthropic.BetaMessageBatches20240924),
	)

	// Submit a batch containing one request.
	resp, err := client.CreateBatch(ctx, anthropic.BatchRequest{
		Requests: []anthropic.InnerRequests{
			{
				CustomId: "my-request-1", // placeholder: your own ID for this request
				Params: anthropic.MessagesRequest{
					Model: anthropic.ModelClaude3Haiku20240307,
					MultiSystem: anthropic.NewMultiSystemMessages(
						"you are an assistant",
						"you are snarky",
					),
					MaxTokens: 10,
					Messages: []anthropic.Message{
						anthropic.NewUserTextMessage("What is your name?"),
						anthropic.NewAssistantTextMessage("My name is Claude."),
						anthropic.NewUserTextMessage("What is your favorite color?"),
					},
				},
			},
		},
	})
	if err != nil {
		printErr("CreateBatch", err)
		return
	}
	fmt.Println(resp)

	// Check the processing status of the batch just created.
	retrieveResp, err := client.RetrieveBatch(ctx, resp.Id)
	if err != nil {
		printErr("RetrieveBatch", err)
		return
	}
	fmt.Println(retrieveResp)

	// Results are available only after the batch has finished processing.
	resultResp, err := client.RetrieveBatchResults(ctx, "batch_id_your-batch-here")
	if err != nil {
		printErr("RetrieveBatchResults", err)
		return
	}
	fmt.Println(resultResp)

	listResp, err := client.ListBatches(ctx, anthropic.ListBatchesRequest{})
	if err != nil {
		printErr("ListBatches", err)
		return
	}
	fmt.Println(listResp)

	cancelResp, err := client.CancelBatch(ctx, "batch_id_your-batch-here")
	if err != nil {
		printErr("CancelBatch", err)
		return
	}
	fmt.Println(cancelResp)
}
```

Token Counting example

// TODO: add example!

Anthropic provides several beta features that can be enabled using the following beta version identifiers:

| Beta Version Identifier | Code Constant | Description |
|---|---|---|
| tools-2024-04-04 | BetaTools20240404 | Initial tools beta |
| tools-2024-05-16 | BetaTools20240516 | Updated tools beta |
| prompt-caching-2024-07-31 | BetaPromptCaching20240731 | Prompt caching beta |
| message-batches-2024-09-24 | BetaMessageBatches20240924 | Message batching beta |
| token-counting-2024-11-01 | BetaTokenCounting20241101 | Token counting beta |
| max-tokens-3-5-sonnet-2024-07-15 | BetaMaxTokens35Sonnet20240715 | Higher max output tokens beta for Claude 3.5 Sonnet |
| computer-use-2024-10-22 | BetaComputerUse20241022 | Computer use beta |
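These identifiers are sent to the API in the `anthropic-beta` HTTP header, and multiple betas are joined with commas. `WithBetaVersion` probably sets this header, but that is an assumption. The sketch below builds the headers directly with `net/http`, and `betaHeaders` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
)

// betaHeaders returns the API version header plus an anthropic-beta header
// listing every requested beta, comma-separated.
func betaHeaders(betas ...string) http.Header {
	h := http.Header{}
	h.Set("anthropic-version", "2023-06-01")
	if len(betas) > 0 {
		h.Set("anthropic-beta", strings.Join(betas, ","))
	}
	return h
}

func main() {
	h := betaHeaders("prompt-caching-2024-07-31", "message-batches-2024-09-24")
	fmt.Println(h.Get("anthropic-beta"))
	// prompt-caching-2024-07-31,message-batches-2024-09-24
}
```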

The following models are supported by go-anthropic. Many of these models are also available on Google's Vertex AI platform.

| Model Name | Model String |
|---|---|
| ModelClaude2Dot0 | "claude-2.0" |
| ModelClaude2Dot1 | "claude-2.1" |
| ModelClaude3Opus20240229 | "claude-3-opus-20240229" |
| ModelClaude3Sonnet20240229 | "claude-3-sonnet-20240229" |
| ModelClaude3Dot5Sonnet20240620 | "claude-3-5-sonnet-20240620" |
| ModelClaude3Dot5Sonnet20241022 | "claude-3-5-sonnet-20241022" |
| ModelClaude3Dot5SonnetLatest | "claude-3-5-sonnet-latest" |
| ModelClaude3Haiku20240307 | "claude-3-haiku-20240307" |
| ModelClaude3Dot5HaikuLatest | "claude-3-5-haiku-latest" |
| ModelClaude3Dot5Haiku20241022 | "claude-3-5-haiku-20241022" |

Two exported role constants are also provided:

- RoleUser = "user": input role type for user messages

- RoleAssistant = "assistant": input role type for assistant (Claude) messages

The following project had particular influence on go-anthropic's design.

Additionally, we thank Anthropic for providing the API and documentation.

go-anthropic is licensed under the Apache License, Version 2.0. See LICENSE for the full license text.


Alternative AI tools for go-anthropic

Similar Open Source Tools

go-anthropic

Go-anthropic is an unofficial API wrapper for Anthropic Claude in Go. It supports completions, streaming completions, messages, streaming messages, vision, and tool use. Users can interact with the Anthropic Claude API to generate text completions, analyze messages, process images, and utilize specific tools for various tasks.

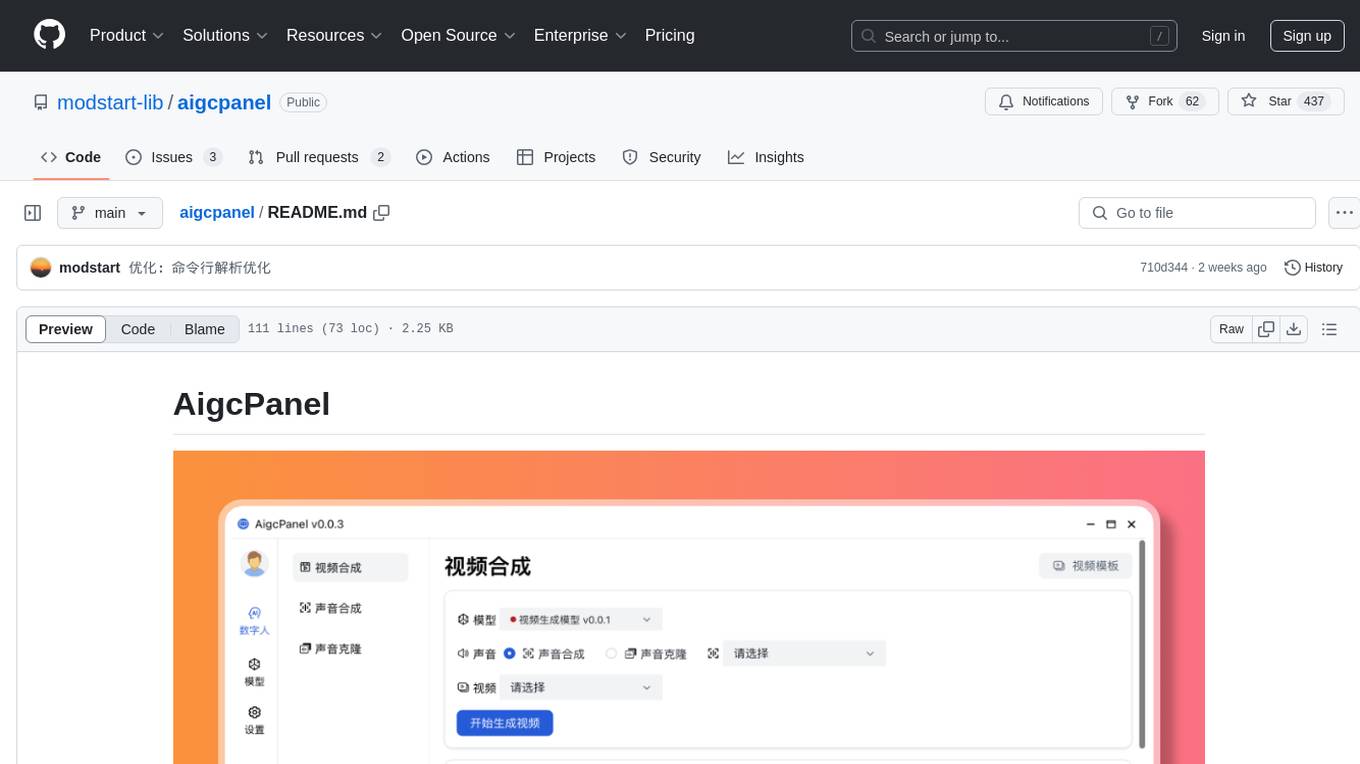

aigcpanel

AigcPanel is a simple and easy-to-use all-in-one AI digital human system that even beginners can use. It supports video synthesis, voice synthesis, voice cloning, simplifies local model management, and allows one-click import and use of AI models. It prohibits the use of this product for illegal activities and users must comply with the laws and regulations of the People's Republic of China.

tambo

tambo ai is a React library that simplifies the process of building AI assistants and agents in React by handling thread management, state persistence, streaming responses, AI orchestration, and providing a compatible React UI library. It eliminates React boilerplate for AI features, allowing developers to focus on creating exceptional user experiences with clean React hooks that seamlessly integrate with their codebase.

dashscope-sdk

DashScope SDK for .NET is an unofficial SDK maintained by Cnblogs, providing various APIs for text embedding, generation, multimodal generation, image synthesis, and more. Users can interact with the SDK to perform tasks such as text completion, chat generation, function calls, file operations, and more. The project is under active development, and users are advised to check the Release Notes before upgrading.

lagent

Lagent is a lightweight open-source framework that allows users to efficiently build large language model (LLM)-based agents. It also provides some typical tools to augment LLMs.

bellman

Bellman is a unified interface to interact with language and embedding models, supporting various vendors like VertexAI/Gemini, OpenAI, Anthropic, VoyageAI, and Ollama. It consists of a library for direct interaction with models and a service 'bellmand' for proxying requests with one API key. Bellman simplifies switching between models, vendors, and common tasks like chat, structured data, tools, and binary input. It addresses the lack of official SDKs for major players and differences in APIs, providing a single proxy for handling different models. The library offers clients for different vendors implementing common interfaces for generating and embedding text, enabling easy interchangeability between models.

ogpt.nvim

OGPT.nvim is a Neovim plugin that enables users to interact with various language models (LLMs) such as Ollama, OpenAI, TextGenUI, and more. Users can engage in interactive question-and-answer sessions, have persona-based conversations, and execute customizable actions like grammar correction, translation, keyword generation, docstring creation, test addition, code optimization, summarization, bug fixing, code explanation, and code readability analysis. The plugin allows users to define custom actions using a JSON file or plugin configurations.

openai-scala-client

This is a no-nonsense async Scala client for OpenAI API supporting all the available endpoints and params including streaming, chat completion, vision, and voice routines. It provides a single service called OpenAIService that supports various calls such as Models, Completions, Chat Completions, Edits, Images, Embeddings, Batches, Audio, Files, Fine-tunes, Moderations, Assistants, Threads, Thread Messages, Runs, Run Steps, Vector Stores, Vector Store Files, and Vector Store File Batches. The library aims to be self-contained with minimal dependencies and supports API-compatible providers like Azure OpenAI, Azure AI, Anthropic, Google Vertex AI, Groq, Grok, Fireworks AI, OctoAI, TogetherAI, Cerebras, Mistral, Deepseek, Ollama, FastChat, and more.

openapi

The `@samchon/openapi` repository is a collection of OpenAPI types and converters for various versions of OpenAPI specifications. It includes an 'emended' OpenAPI v3.1 specification that enhances clarity by removing ambiguous and duplicated expressions. The repository also provides an application composer for LLM (Large Language Model) function calling from OpenAPI documents, allowing users to easily perform LLM function calls based on the Swagger document. Conversions to different versions of OpenAPI documents are also supported, all based on the emended OpenAPI v3.1 specification. Users can validate their OpenAPI documents using the `typia` library with `@samchon/openapi` types, ensuring compliance with standard specifications.

gp.nvim

Gp.nvim (GPT prompt) Neovim AI plugin provides a seamless integration of GPT models into Neovim, offering features like streaming responses, extensibility via hook functions, minimal dependencies, ChatGPT-like sessions, instructable text/code operations, speech-to-text support, and image generation directly within Neovim. The plugin aims to enhance the Neovim experience by leveraging the power of AI models in a user-friendly and native way.

island-ai

island-ai is a TypeScript toolkit tailored for developers engaging with structured outputs from Large Language Models. It offers streamlined processes for handling, parsing, streaming, and leveraging AI-generated data across various applications. The toolkit includes packages like zod-stream for interfacing with LLM streams, stream-hooks for integrating streaming JSON data into React applications, and schema-stream for JSON streaming parsing based on Zod schemas. Additionally, related packages like @instructor-ai/instructor-js focus on data validation and retry mechanisms, enhancing the reliability of data processing workflows.

airtable

A simple Golang package to access the Airtable API. It provides functionalities to interact with Airtable such as initializing client, getting tables, listing records, adding records, updating records, deleting records, and bulk deleting records. The package is compatible with Go 1.13 and above.

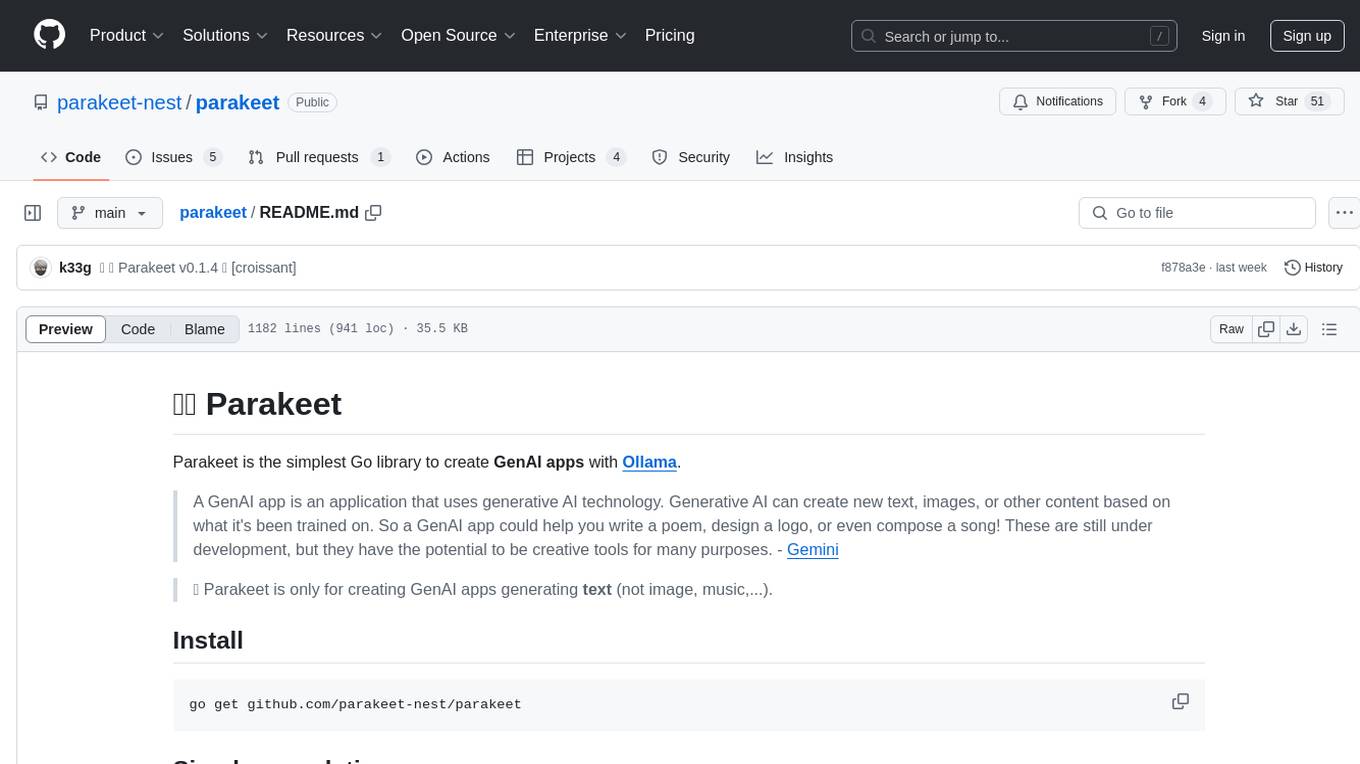

parakeet

Parakeet is a Go library for creating GenAI apps with Ollama. It enables the creation of generative AI applications that can generate text-based content. The library provides tools for simple completion, completion with context, chat completion, and more. It also supports function calling with tools and Wasm plugins. Parakeet allows users to interact with language models and create AI-powered applications easily.

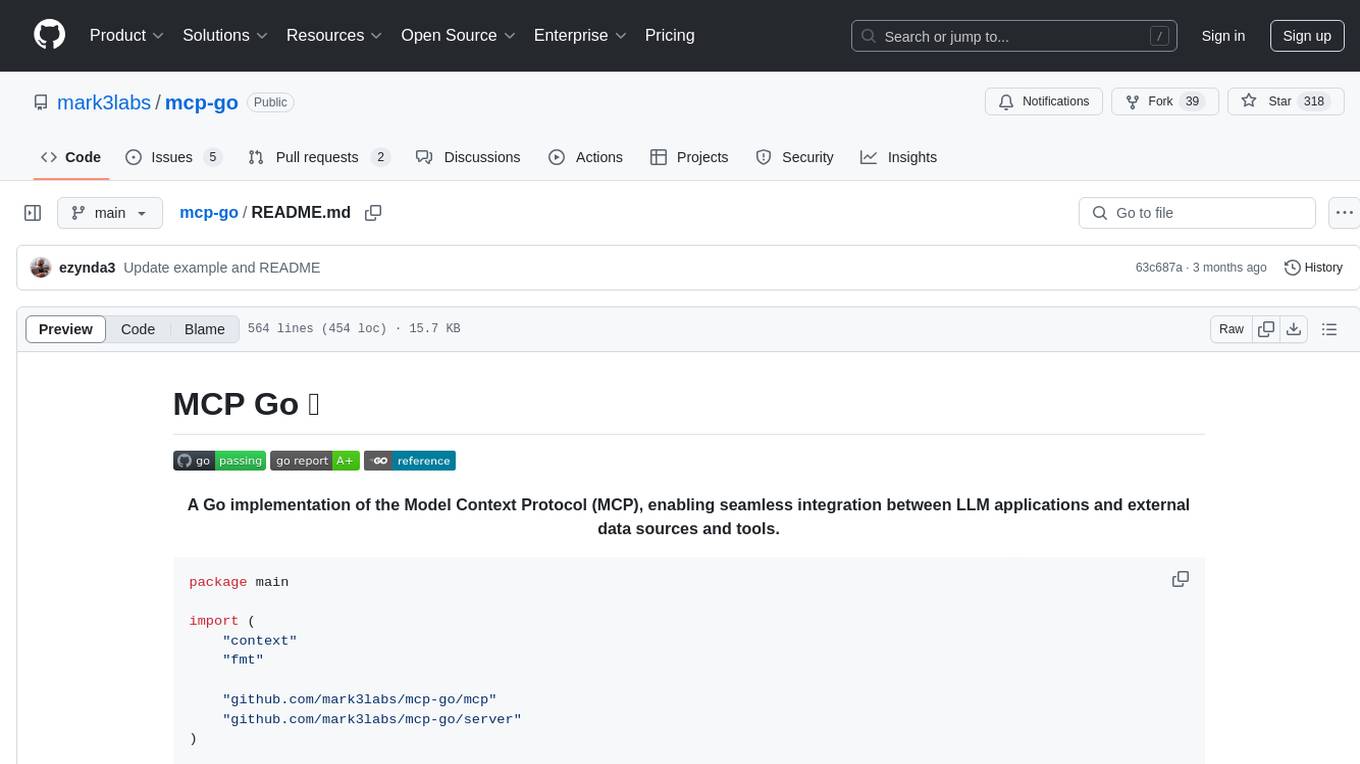

mcp-go

MCP Go is a Go implementation of the Model Context Protocol (MCP), facilitating seamless integration between LLM applications and external data sources and tools. It handles complex protocol details and server management, allowing developers to focus on building tools. The tool is designed to be fast, simple, and complete, aiming to provide a high-level and easy-to-use interface for developing MCP servers. MCP Go is currently under active development, with core features working and advanced capabilities in progress.

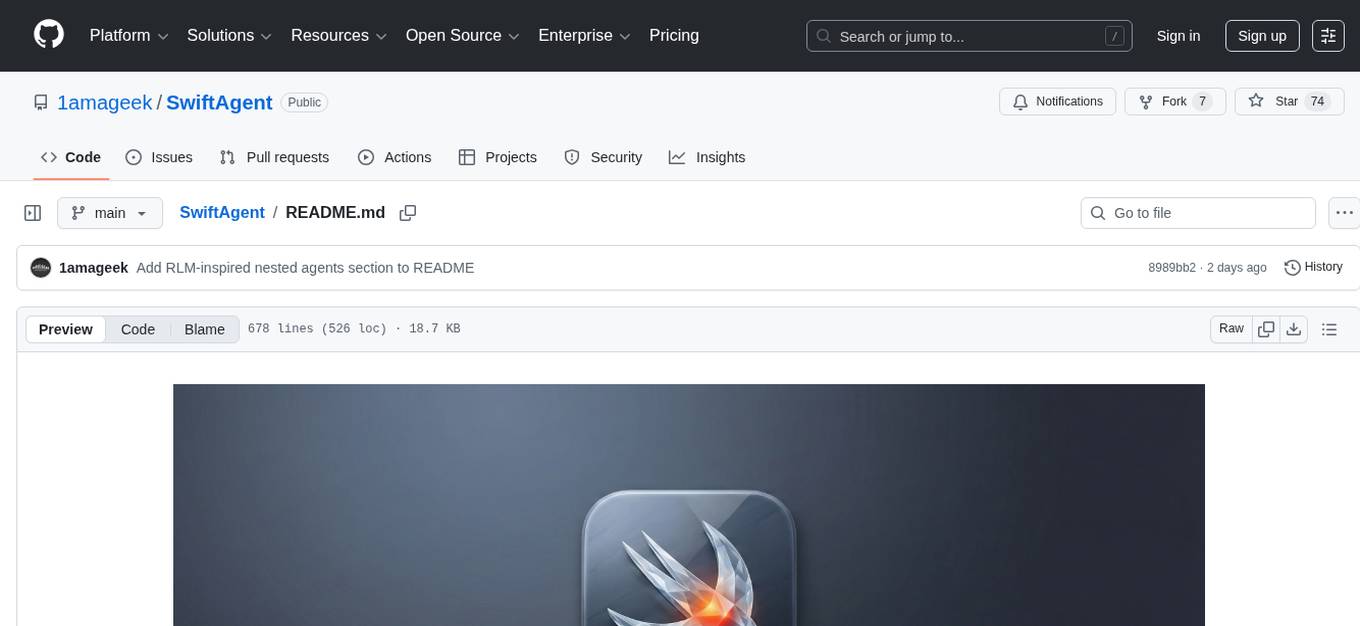

SwiftAgent

A type-safe, declarative framework for building AI agents in Swift, SwiftAgent is built on Apple FoundationModels. It allows users to compose agents by combining Steps in a declarative syntax similar to SwiftUI. The framework ensures compile-time checked input/output types, native Apple AI integration, structured output generation, and built-in security features like permission, sandbox, and guardrail systems. SwiftAgent is extensible with MCP integration, distributed agents, and a skills system. Users can install SwiftAgent with Swift 6.2+ on iOS 26+, macOS 26+, or Xcode 26+ using Swift Package Manager.

For similar tasks

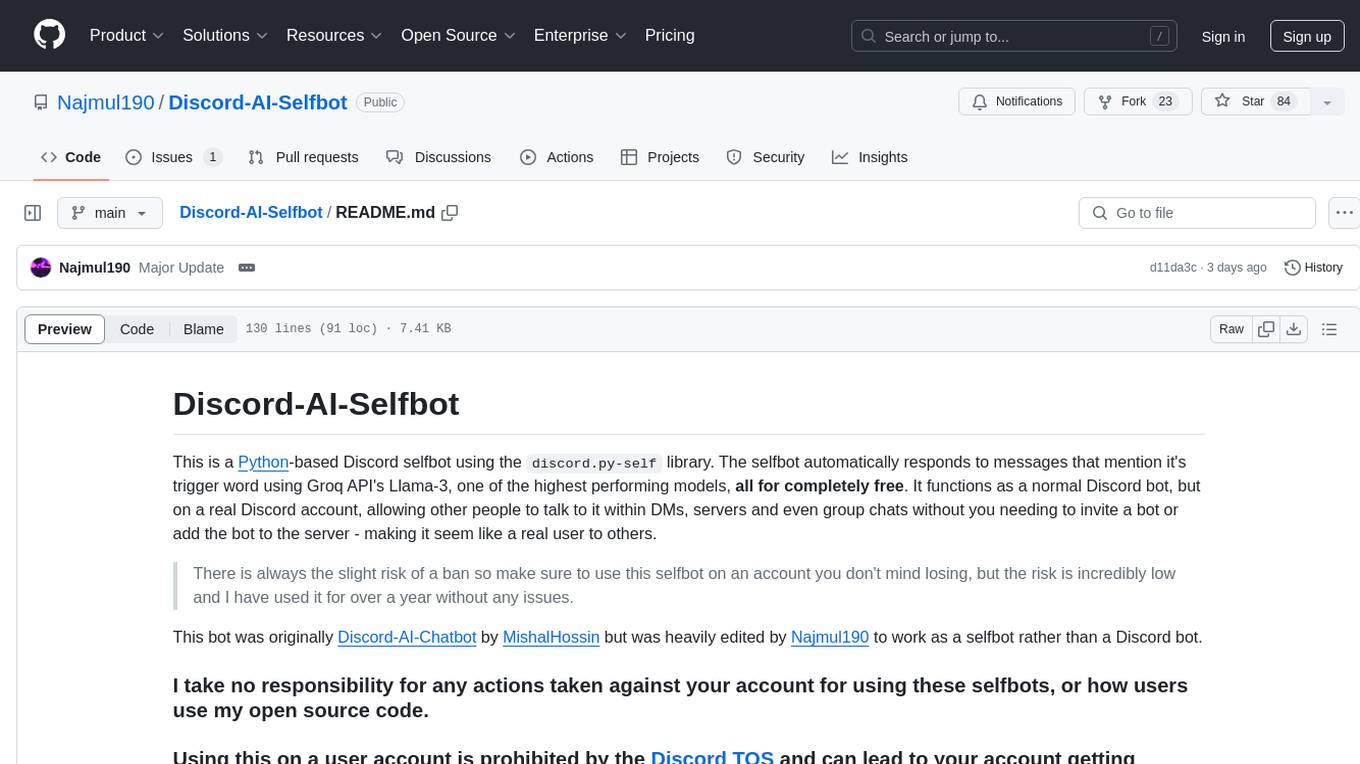

Discord-AI-Selfbot

Discord-AI-Selfbot is a Python-based Discord selfbot that uses the `discord.py-self` library to automatically respond to messages mentioning its trigger word using Groq API's Llama-3 model. It functions as a normal Discord bot on a real Discord account, enabling interactions in DMs, servers, and group chats without needing to invite a bot. The selfbot comes with features like custom AI instructions, free LLM model usage, mention and reply recognition, message handling, channel-specific responses, and a psychoanalysis command to analyze user messages for insights on personality.


UnrealOpenAIPlugin

UnrealOpenAIPlugin is a comprehensive Unreal Engine wrapper for the OpenAI API, supporting various endpoints such as Models, Completions, Chat, Images, Vision, Embeddings, Speech, Audio, Files, Moderations, Fine-tuning, and Functions. It provides support for both C++ and Blueprints, allowing users to interact with OpenAI services seamlessly within Unreal Engine projects. The plugin also includes tutorials, updates, installation instructions, authentication steps, examples of usage, blueprint nodes overview, C++ examples, plugin structure details, documentation references, tests, packaging guidelines, and limitations. Users can leverage this plugin to integrate powerful AI capabilities into their Unreal Engine projects effortlessly.

rwkv.cpp

rwkv.cpp is a port of BlinkDL/RWKV-LM to ggerganov/ggml, supporting FP32, FP16, and quantized INT4, INT5, and INT8 inference. It focuses on CPU but also supports cuBLAS. The project provides a C library rwkv.h and a Python wrapper. RWKV is a large language model architecture with models like RWKV v5 and v6. It requires only state from the previous step for calculations, making it CPU-friendly on large context lengths. Users are advised to test all available formats for perplexity and latency on a representative dataset before serious use.

runpod-worker-comfy

runpod-worker-comfy is a serverless API tool that allows users to run any ComfyUI workflow to generate an image. Users can provide input images as base64-encoded strings, and the generated image can be returned as a base64-encoded string or uploaded to AWS S3. The tool is built on Ubuntu + NVIDIA CUDA and provides features like built-in checkpoints and VAE models. Users can configure environment variables to upload images to AWS S3 and interact with the RunPod API to generate images. The tool also supports local testing and deployment to Docker Hub using GitHub Actions.

gptscript

GPTScript is a framework that enables Large Language Models (LLMs) to interact with various systems, including local executables, applications with OpenAPI schemas, SDK libraries, or RAG-based solutions. It simplifies the integration of systems with LLMs using minimal prompts. Sample use cases include chatting with a local CLI, OpenAPI compliant endpoint, local files/directories, and running automated workflows.

VisionCraft

VisionCraft API is a free tool that offers access to over 3000 AI models for generating images, text, and GIFs. Users can interact with the API to utilize various models like StableDiffusion, LLM, and Text2GIF. The tool provides functionalities for image generation, text generation, and GIF generation. For any inquiries or assistance, users can contact the VisionCraft team through their Telegram Channel, VisionCraft API, or Telegram Bot.

local_multimodal_ai_chat

Local Multimodal AI Chat is a hands-on project that teaches you how to build a multimodal chat application. It integrates different AI models to handle audio, images, and PDFs in a single chat interface. This project is perfect for anyone interested in AI and software development who wants to gain practical experience with these technologies.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.