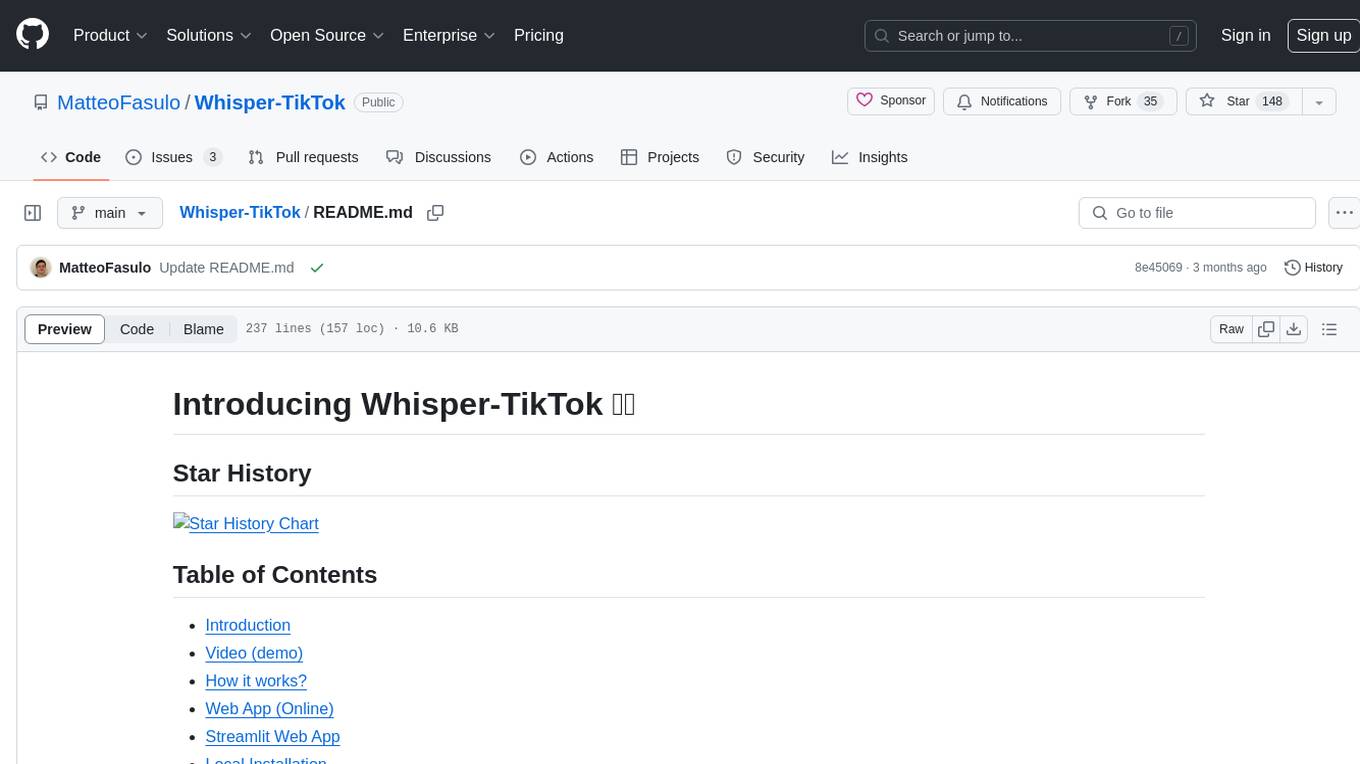

aigcpanel

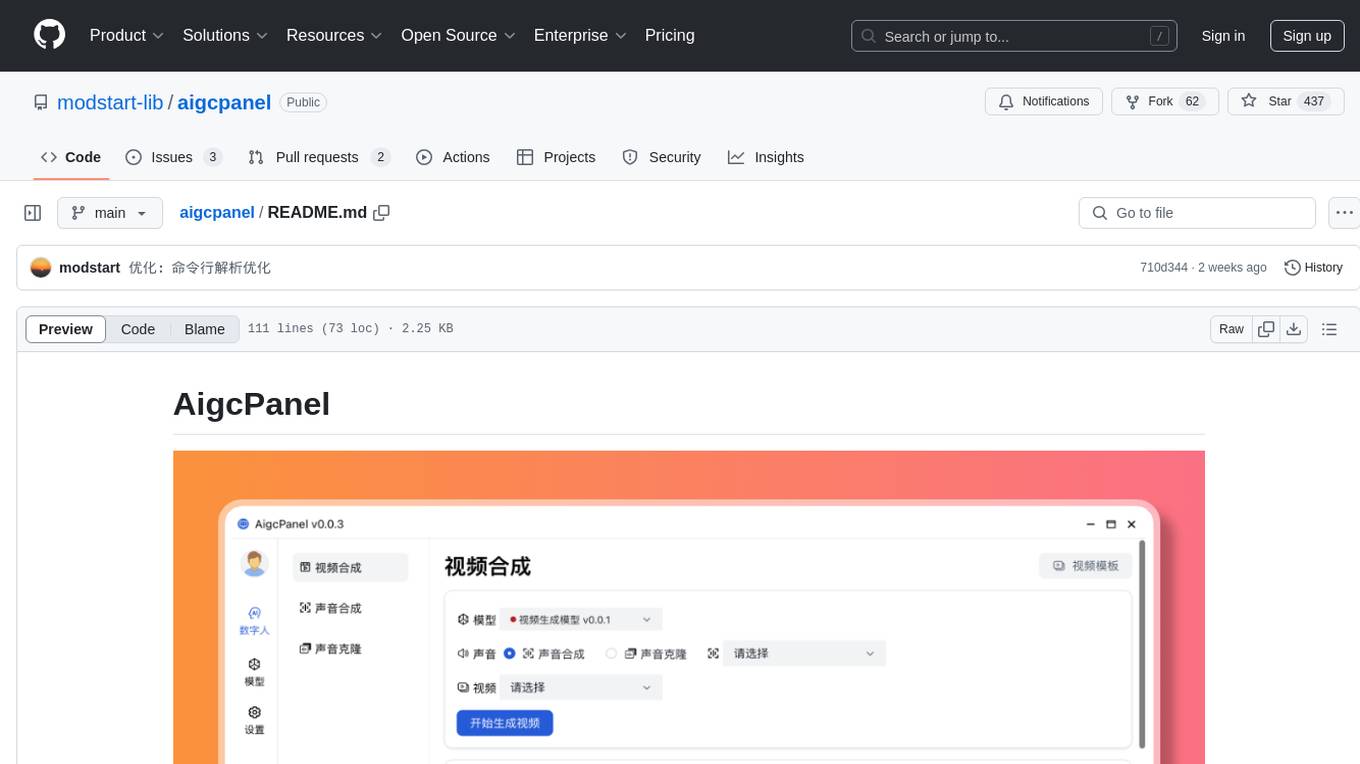

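AigcPanel is a simple, easy-to-use, all-in-one AI digital human system. It supports video synthesis, voice synthesis, and voice cloning, and simplifies local model management with one-click import and use of AI models.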

Stars: 656

AigcPanel is a simple, easy-to-use, all-in-one AI digital human system that even beginners can use. It supports video synthesis, voice synthesis, and voice cloning, simplifies local model management, and allows one-click import and use of AI models. The product must not be used for illegal activities, and users must comply with the laws and regulations of the People's Republic of China.

README:

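AigcPanel is a simple, easy-to-use, all-in-one AI digital human system that even beginners can use.

It supports video synthesis, voice synthesis, and voice cloning, and simplifies local model management with one-click import and use of AI models.

Using this product for illegal or non-compliant business is prohibited. When using this software, comply with the laws and regulations of the People's Republic of China.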

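- Supports digital human video synthesis, with lip-sync matching between the video frames and the audio

- Supports speech synthesis and voice cloning, with multiple configurable voice parameters

- Supports importing multiple models, one-click launch, model settings, and model log viewing

- Supports internationalization, with Simplified Chinese and English

- Supports one-click launch packages for multiple models: MuseTalk, cosyvoice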

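See the video files in the demo for reference.

- Visit https://aigcpanel.com to download the Windows installer and install it with one click

After installation, open the software and download a model's one-click launch package to start using it.

Third-party models that ship as one-click launch packages can be integrated as follows.

For the model folder, only two files need to be written: config.json and server.js.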

    |- model-folder/
    |-|- config.json - model configuration file
    |-|- server.js - model integration file
    |-|- xxx - other model files; placing model files in a `model` folder is recommended
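The layout above can be checked before a folder is imported. The following is a minimal sketch, not part of AigcPanel: `missingModelFiles` is a hypothetical helper name, and the only rule it encodes is the one stated above, that `config.json` and `server.js` must both be present.

```javascript
const fs = require("node:fs");
const path = require("node:path");

// The two files the README says every model folder must provide.
const REQUIRED_FILES = ["config.json", "server.js"];

// Hypothetical helper (an assumption, not an AigcPanel API): returns the
// required files that are missing from `folder`. An empty array means the
// folder contains both required files.
function missingModelFiles(folder) {
  return REQUIRED_FILES.filter(
    (name) => !fs.existsSync(path.join(folder, name))
  );
}
```

Calling `missingModelFiles("./server-xxx")` on a folder that only contains `config.json` would report `server.js` as missing.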

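Example `config.json`:

    {
        "name": "server-xxx",            // model name
        "version": "0.1.0",              // model version
        "title": "Voice model",          // model title
        "description": "Model description", // model description
        "platformName": "win",           // supported OS: win, osx, linux
        "platformArch": "x86",           // supported architecture: x86, arm64
        "entry": "server/main",          // entry file of the one-click launch package
        "functions": [
            "videoGen",                  // supports video generation
            "soundTTS",                  // supports speech synthesis
            "soundClone"                 // supports voice cloning
        ],
        "settings": [                    // model settings, shown on the model settings page
            {
                "name": "port",
                "type": "text",
                "title": "Service port",
                "default": "",
                "placeholder": "Leave blank to detect and use a random port"
            }
        ]
    }

The `server.js` example below uses MuseTalk.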
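The fields in the `config.json` example above can be sanity-checked before a model is imported. This is a rough sketch under stated assumptions: `checkModelConfig` is a hypothetical helper, the allowed values come only from the comments in the example, and which fields are treated as mandatory is a guess rather than something AigcPanel documents. It takes an already-parsed object, because the example contains `//` comments that `JSON.parse` would reject.

```javascript
// Allowed values, taken from the comments in the example config.
const PLATFORM_NAMES = ["win", "osx", "linux"];
const PLATFORM_ARCHS = ["x86", "arm64"];
const FUNCTIONS = ["videoGen", "soundTTS", "soundClone"];

// Hypothetical helper (not an AigcPanel API): returns a list of problems
// found in a parsed config object; an empty list means none were found.
function checkModelConfig(config) {
  const problems = [];
  // Assumption: these string fields are mandatory.
  for (const key of ["name", "version", "title", "entry"]) {
    if (typeof config[key] !== "string" || config[key] === "") {
      problems.push(`"${key}" should be a non-empty string`);
    }
  }
  if (!PLATFORM_NAMES.includes(config.platformName)) {
    problems.push(`"platformName" should be one of ${PLATFORM_NAMES.join(", ")}`);
  }
  if (!PLATFORM_ARCHS.includes(config.platformArch)) {
    problems.push(`"platformArch" should be one of ${PLATFORM_ARCHS.join(", ")}`);
  }
  const functions = Array.isArray(config.functions) ? config.functions : [];
  if (functions.length === 0) {
    problems.push('"functions" should list at least one capability');
  }
  for (const fn of functions) {
    if (!FUNCTIONS.includes(fn)) {
      problems.push(`unknown function "${fn}"`);
    }
  }
  return problems;
}
```

A config that passes returns an empty array, so a caller could refuse to import a model whenever the returned list is non-empty.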

`server.js`:

    const serverRuntime = {
        port: 0,
    }

    let shellController = null

    module.exports = {
        ServerApi: null,
        _url() {
            return `http://localhost:${serverRuntime.port}/`
        },
        async _client() {
            return await this.ServerApi.GradioClient.connect(this._url());
        },
        _send(serverInfo, type, data) {
            this.ServerApi.event.sendChannel(serverInfo.eventChannelName, {type, data})
        },
        // Model initialization
        async init(ServerApi) {
            this.ServerApi = ServerApi;
        },
        // Model startup
        async start(serverInfo) {
            console.log('start', JSON.stringify(serverInfo))
            this._send(serverInfo, 'starting', serverInfo)
            let command = []
            // Use the configured port if set; otherwise reuse the previous
            // port when it is still free, or pick a new available one.
            if (serverInfo.setting?.['port']) {
                serverRuntime.port = serverInfo.setting.port
            } else if (!serverRuntime.port || !await this.ServerApi.app.isPortAvailable(serverRuntime.port)) {
                serverRuntime.port = await this.ServerApi.app.availablePort(50617)
            }
            if (serverInfo.setting?.['startCommand']) {
                command.push(serverInfo.setting.startCommand)
            } else {
                //command.push(`"${serverInfo.localPath}/server/main"`)
                command.push(`"${serverInfo.localPath}/server/.ai/python.exe"`)
                command.push('-u')
                command.push(`"${serverInfo.localPath}/server/run.py"`)
                if (serverInfo.setting?.['gpuMode'] === 'cpu') {
                    command.push('--gpu_mode=cpu')
                }
            }
            shellController = await this.ServerApi.app.spawnShell(command, {
                cwd: `${serverInfo.localPath}/server`,
                env: {
                    GRADIO_SERVER_PORT: serverRuntime.port,
                    PATH: [
                        process.env.PATH,
                        `${serverInfo.localPath}/server`,
                        `${serverInfo.localPath}/server/.ai/ffmpeg/bin`,
                    ].join(';')
                },
                stdout: (data) => {
                    this.ServerApi.file.appendText(serverInfo.logFile, data)
                },
                stderr: (data) => {
                    this.ServerApi.file.appendText(serverInfo.logFile, data)
                },
                success: (data) => {
                    this._send(serverInfo, 'success', serverInfo)
                },
                error: (data, code) => {
                    this.ServerApi.file.appendText(serverInfo.logFile, data)
                    this._send(serverInfo, 'error', serverInfo)
                },
            })
        },
        // Model startup check
        async ping(serverInfo) {
            try {
                await this.ServerApi.request(`${this._url()}info`)
                return true
            } catch (e) {
                // The request failed, so the model is treated as not started yet
            }
            return false
        },
        // Model shutdown
        async stop(serverInfo) {
            this._send(serverInfo, 'stopping', serverInfo)
            try {
                shellController.stop()
                shellController = null
            } catch (e) {
                console.log('stop error', e)
            }
            this._send(serverInfo, 'stopped', serverInfo)
        },
        // Model configuration
        async config() {
            return {
                "code": 0,
                "msg": "ok",
                "data": {
                    "httpUrl": shellController ? this._url() : null,
                    "functions": {
                        "videoGen": {
                            "param": [
                                {
                                    name: "box",
                                    type: "inputNumber",
                                    title: "Mouth openness",
                                    defaultValue: -7,
                                    placeholder: "",
                                    tips: 'Mouth openness controls how wide the mouth opens in the generated video',
                                    min: -9,
                                    max: 9,
                                    step: 1,
                                }
                            ]
                        },
                    }
                }
            }
        },
        // Video generation
        async videoGen(serverInfo, data) {
            console.log('videoGen', serverInfo, data)
            const client = await this._client()
            const resultData = {
                // success, querying, retry
                type: 'success',
                start: 0,
                end: 0,
                jobId: '',
                data: {
                    filePath: null
                }
            }
            resultData.start = Date.now()
            const result = await client.predict("/predict", [
                this.ServerApi.GradioHandleFile(data.videoFile),
                this.ServerApi.GradioHandleFile(data.soundFile),
                parseInt(data.param.box)
            ]);
            // console.log('videoGen.result', JSON.stringify(result))
            resultData.end = Date.now()
            resultData.data.filePath = result.data[0].value.video.path
            return {
                code: 0,
                msg: 'ok',
                data: resultData
            }
        },
    }

Tech stack: electron, vue3, typescript
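Returning to the `server.js` adapter above: the port selection inside `start` is easier to follow, and to try outside AigcPanel, when pulled into its own function. The sketch below is illustrative only. `resolvePort` and `fakeApp` are hypothetical names, and the stub merely imitates `ServerApi.app.isPortAvailable` and `ServerApi.app.availablePort`; in particular, treating the argument of `availablePort` as a starting port is an assumption.

```javascript
// Same decision order as start(): an explicit port setting wins, then the
// previously used port if it is still free, then a newly requested port.
async function resolvePort(setting, currentPort, app) {
  if (setting?.port) {
    return setting.port;
  }
  if (currentPort && (await app.isPortAvailable(currentPort))) {
    return currentPort;
  }
  return app.availablePort(50617);
}

// Stand-in for ServerApi.app (an assumption about its behavior): ports
// listed in `busyPorts` count as taken, everything else as free.
function fakeApp(busyPorts) {
  return {
    isPortAvailable: async (port) => !busyPorts.includes(port),
    availablePort: async (start) => {
      let port = start;
      while (busyPorts.includes(port)) {
        port += 1;
      }
      return port;
    },
  };
}
```

With the stub, `resolvePort({}, 0, fakeApp([50617]))` skips the busy port, while `resolvePort({ port: "7860" }, 0, fakeApp([]))` returns the configured value unchanged, as a string, because the setting is a text field.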

Tested only on Node 20.

    # Install dependencies
    npm install
    # Run in development mode
    npm run dev
    # Build the package
    npm run build

AGPL-3.0

Alternative AI tools for aigcpanel

Similar Open Source Tools

MateChat

MateChat is a UI library for intelligent scenarios in front-end development, allowing easy construction of AI applications. It has been used in the intelligent transformation of multiple applications within Huawei and has supported the development of intelligent assistants such as CodeArts and InsCode AI IDE. The library offers components tailored for intelligent scenarios, out-of-the-box functionality, support for multiple scenarios and themes, and continuous evolution of features.

MCP-Chinese-Getting-Started-Guide

The Model Context Protocol (MCP) is an innovative open-source protocol that redefines the interaction between large language models (LLMs) and the external world. MCP provides a standardized approach for any large language model to easily connect to various data sources and tools, enabling seamless access and processing of information. MCP acts as a USB-C interface for AI applications, offering a standardized way for AI models to connect to different data sources and tools. The core functionalities of MCP include Resources, Prompts, Tools, Sampling, Roots, and Transports. This guide focuses on developing an MCP server for network search using Python and uv management. It covers initializing the project, installing dependencies, creating a server, implementing tool execution methods, and running the server. Additionally, it explains how to debug the MCP server using the Inspector tool, how to call tools from the server, and how to connect multiple MCP servers. The guide also introduces the Sampling feature, which allows pre- and post-tool execution operations, and demonstrates how to integrate MCP servers into LangChain for AI applications.

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

go-anthropic

Go-anthropic is an unofficial API wrapper for Anthropic Claude in Go. It supports completions, streaming completions, messages, streaming messages, vision, and tool use. Users can interact with the Anthropic Claude API to generate text completions, analyze messages, process images, and utilize specific tools for various tasks.

Senparc.AI

Senparc.AI is an AI extension package for the Senparc ecosystem, focusing on LLM (Large Language Models) interaction. It provides modules for standard interfaces and basic functionalities, as well as interfaces using SemanticKernel for plug-and-play capabilities. The package also includes a library for supporting the 'PromptRange' ecosystem, compatible with various systems and frameworks. Users can configure different AI platforms and models, define AI interface parameters, and run AI functions easily. The package offers examples and commands for dialogue, embedding, and DallE drawing operations.

glm-free-api

The GLM AI Free service provides high-speed streaming output, multi-turn dialogue, intelligent agent dialogue, AI drawing, online search, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository also includes six other free APIs for services such as Moonshot AI, StepChat, Qwen, Metaso, Spark, and Emohaa. The tool supports tasks such as chat completions, AI drawing, document interpretation, image parsing, and refresh token survival checks.

qwen-free-api

Qwen AI Free service supports high-speed streaming output, multi-turn dialogue, watermark-free AI drawing, long document interpretation, image parsing, zero-configuration deployment, multi-token support, automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository provides various free APIs for different AI services. Users can access the service through different deployment methods like Docker, Docker-compose, Render, Vercel, and native deployment. It offers interfaces for chat completions, AI drawing, document interpretation, image parsing, and token checking. Users need to provide 'login_tongyi_ticket' for authorization. The project emphasizes research, learning, and personal use only, discouraging commercial use to avoid service pressure on the official platform.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

spark-free-api

The Spark AI Free service provides high-speed streaming output, multi-turn dialogue, AI drawing, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId liveness checks. The project also provides deployment guidelines for Docker, Docker-compose, Render, Vercel, and native deployment. It recommends using custom clients for faster and simpler access to the free-api series projects.

mcp-fusion

MCP Fusion is a Model-View-Agent framework for the Model Context Protocol, providing structured perception for AI agents with validated data, domain rules, UI blocks, and action affordances in every response. It introduces the MVA pattern, where a Presenter layer sits between data and the AI agent, ensuring consistent, validated, contextually-rich data across the API surface. The tool facilitates schema validation, system rules, UI blocks, cognitive guardrails, and action affordances for domain entities. It offers tools for defining actions, prompts, middleware, error handling, type-safe clients, observability, streaming progress, and more, all integrated with the Model Context Protocol SDK and Zod for type safety and validation.

herc.ai

Herc.ai is a library for interacting with the Herc.ai API. It offers free access to users and supports all languages. Users get unlimited access to Herc.ai's features with a one-time subscription and API key. The tool provides question answering and text-to-image generation, with support for various models and customization options. Herc.ai can be used from the CLI, CommonJS, and TypeScript, and supports beta models for advanced usage. Developed by FiveSoBes and Luppux Development.

ddddocr

ddddocr is a Rust version of a simple OCR API server that provides easy deployment for captcha recognition without relying on the OpenCV library. It offers a user-friendly general-purpose captcha recognition Rust library. The tool supports recognizing various types of captchas, including single-line text, transparent black PNG images, target detection, and slider matching algorithms. Users can also import custom OCR training models and utilize the OCR API server for flexible OCR result control and range limitation. The tool is cross-platform and can be easily deployed.

illufly

illufly is an Agent framework with self-evolution capabilities, aiming to quickly create value based on self-evolution. It is designed to have self-evolution capabilities in various scenarios such as intent guessing, Q&A experience, data recall rate, and tool planning ability. The framework supports continuous dialogue, built-in RAG support, and self-evolution during conversations. It also provides tools for managing experience data and supports multiple agents collaboration.

TechFlow

TechFlow is a platform that allows users to build their own AI workflows through drag-and-drop functionality. It features a visually appealing interface with clear layout and intuitive navigation. TechFlow supports multiple models beyond Language Models (LLM) and offers flexible integration capabilities. It provides a powerful SDK for developers to easily integrate generated workflows into existing systems, enhancing flexibility and scalability. The platform aims to embed AI capabilities as modules into existing functionalities to enhance business competitiveness.

For similar tasks

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

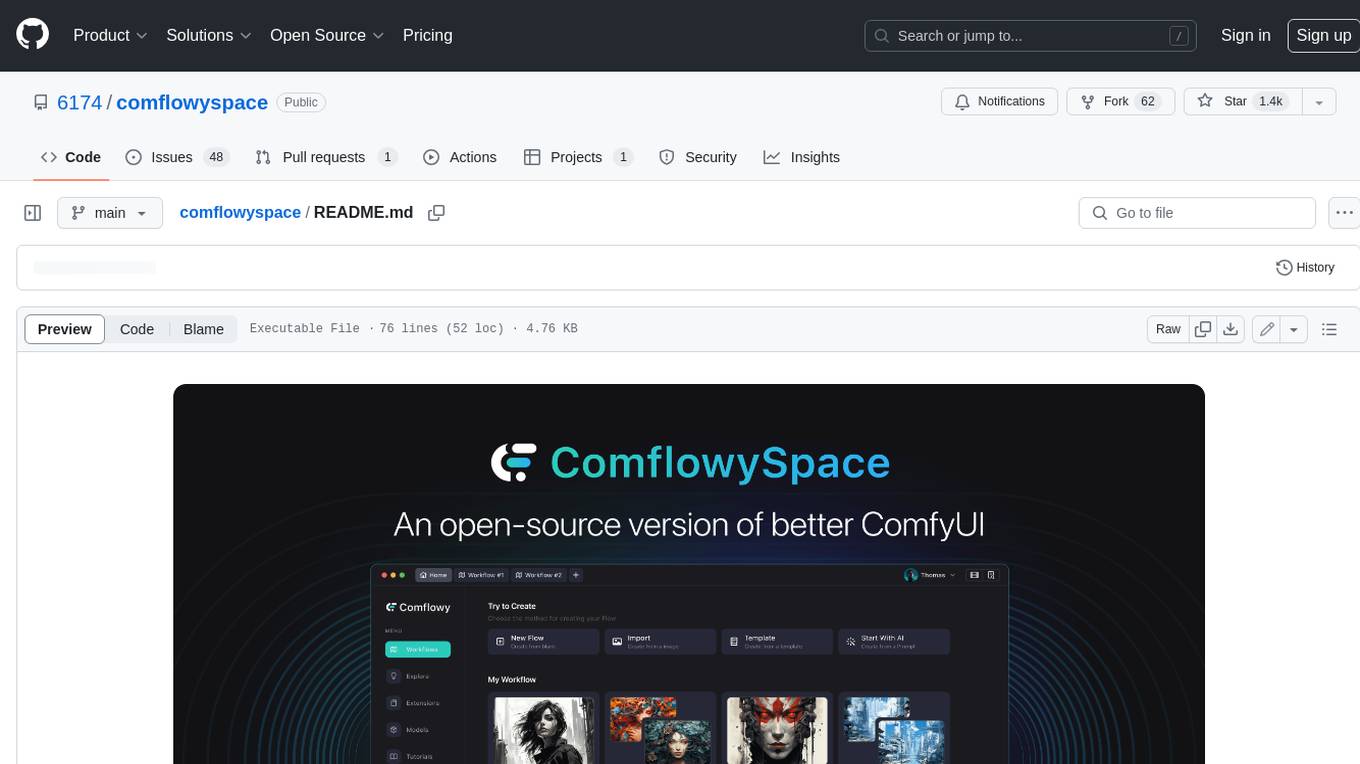

comflowyspace

Comflowyspace is an open-source AI image and video generation tool that aims to provide a more user-friendly and accessible experience than existing tools like SDWebUI and ComfyUI. It simplifies the installation, usage, and workflow management of AI image and video generation, making it easier for users to create and explore AI-generated content. Comflowyspace offers features such as one-click installation, workflow management, multi-tab functionality, workflow templates, and an improved user interface. It also provides tutorials and documentation to lower the learning curve for users. The tool is designed to make AI image and video generation more accessible and enjoyable for a wider range of users.

Rewind-AI-Main

Rewind AI is a free and open-source AI-powered video editing tool that allows users to easily create and edit videos. It features a user-friendly interface, a wide range of editing tools, and support for a variety of video formats. Rewind AI is perfect for beginners and experienced video editors alike.

MoneyPrinterTurbo

MoneyPrinterTurbo is a tool that can automatically generate video content based on a provided theme or keyword. It can create video scripts, materials, subtitles, and background music, and then compile them into a high-definition short video. The tool features a web interface and an API interface, supporting AI-generated video scripts, customizable scripts, multiple HD video sizes, batch video generation, customizable video segment duration, multilingual video scripts, multiple voice synthesis options, subtitle generation with font customization, background music selection, access to high-definition and copyright-free video materials, and integration with various AI models like OpenAI, moonshot, Azure, and more. The tool aims to simplify the video creation process and offers future plans to enhance voice synthesis, add video transition effects, provide more video material sources, offer video length options, include free network proxies, enable real-time voice and music previews, support additional voice synthesis services, and facilitate automatic uploads to YouTube platform.

Dough

Dough is a tool for crafting videos with AI, allowing users to guide video generations with precision using images and example videos. Users can create guidance frames, assemble shots, and animate them by defining parameters and selecting guidance videos. The tool aims to help users make beautiful and unique video creations, providing control over the generation process. Setup instructions are available for Linux and Windows platforms, with detailed steps for installation and running the app.

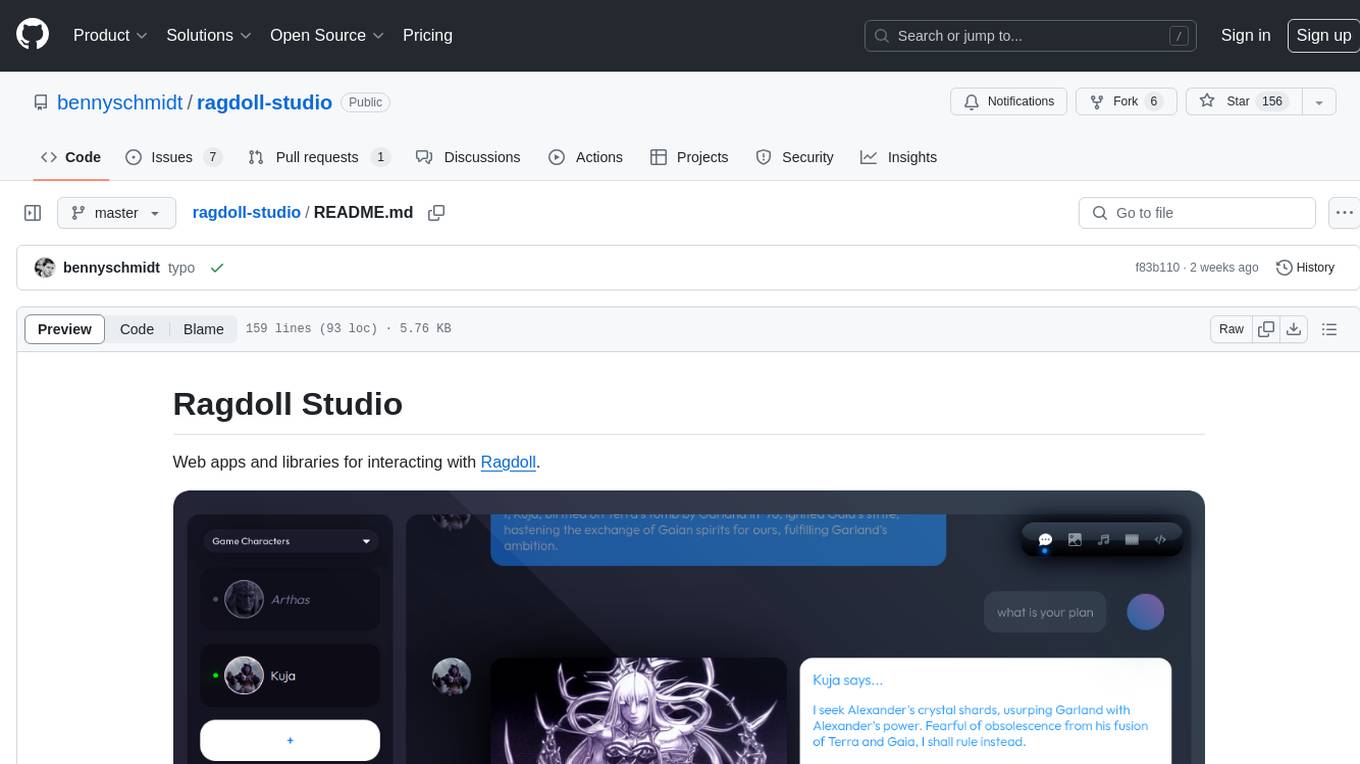

ragdoll-studio

Ragdoll Studio is a platform offering web apps and libraries for interacting with Ragdoll, enabling users to go beyond fine-tuning and create flawless creative deliverables, rich multimedia, and engaging experiences. It provides various modes such as Story Mode for creating and chatting with characters, Vector Mode for producing vector art, Raster Mode for producing raster art, Video Mode for producing videos, Audio Mode for producing audio, and 3D Mode for producing 3D objects. Users can export their content in various formats and share their creations on the community site. The platform consists of a Ragdoll API and a front-end React application for seamless usage.

Whisper-TikTok

Discover Whisper-TikTok, an innovative AI-powered tool that leverages the prowess of Edge TTS, OpenAI-Whisper, and FFMPEG to craft captivating TikTok videos. Whisper-TikTok effortlessly generates accurate transcriptions from audio files and integrates Microsoft Edge Cloud Text-to-Speech API for vibrant voiceovers. The program orchestrates the synthesis of videos using a structured JSON dataset, generating mesmerizing TikTok content in minutes.

For similar jobs

Thor

Thor is a powerful AI model management tool designed for unified management and usage of various AI models. It offers features such as user, channel, and token management, data statistics preview, log viewing, system settings, external chat link integration, and Alipay account balance purchase. Thor supports multiple AI models including OpenAI, Kimi, Starfire, Claudia, Zhilu AI, Ollama, Tongyi Qianwen, AzureOpenAI, and Tencent Hybrid models. It also supports various databases like SqlServer, PostgreSql, Sqlite, and MySql, allowing users to choose the appropriate database based on their needs.

Qwen-TensorRT-LLM

Qwen-TensorRT-LLM is a project developed for the NVIDIA TensorRT Hackathon 2023, focusing on accelerating inference for the Qwen-7B-Chat model using TRT-LLM. The project offers various functionalities such as FP16/BF16 support, INT8 and INT4 quantization options, Tensor Parallel for multi-GPU parallelism, web demo setup with gradio, Triton API deployment for maximum throughput/concurrency, fastapi integration for openai requests, CLI interaction, and langchain support. It supports models like qwen2, qwen, and qwen-vl for both base and chat models. The project also provides tutorials on Bilibili and blogs for adapting Qwen models in NVIDIA TensorRT-LLM, along with hardware requirements and quick start guides for different model types and quantization methods.

dl_model_infer

This project is a C++ AI inference library that supports inference with TensorRT models. It provides accelerated deployment examples for popular deep learning computer vision models and supports dynamic-batch image processing, inference, decoding, and NMS. The project has been updated with various models and provides tutorials for model export. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend inference classes, post-processing, pre-processing, and object detection and tracking. Speed tests have been conducted on various models, and ONNX downloads are available for different models.

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

ai-edge-torch

AI Edge Torch is a Python library that supports converting PyTorch models into a .tflite format for on-device applications on Android, iOS, and IoT devices. It offers broad CPU coverage with initial GPU and NPU support, closely integrating with PyTorch and providing good coverage of Core ATen operators. The library includes a PyTorch converter for model conversion and a Generative API for authoring mobile-optimized PyTorch Transformer models, enabling easy deployment of Large Language Models (LLMs) on mobile devices.

awesome-RK3588

RK3588 is a flagship 8K SoC chip by Rockchip, integrating Cortex-A76 and Cortex-A55 cores with NEON coprocessor for 8K video codec. This repository curates resources for developing with RK3588, including official resources, RKNN models, projects, development boards, documentation, tools, and sample code.

cl-waffe2

cl-waffe2 is an experimental deep learning framework in Common Lisp, providing fast, systematic, and customizable matrix operations, reverse mode tape-based Automatic Differentiation, and neural network model building and training features accelerated by a JIT Compiler. It offers abstraction layers, extensibility, inlining, graph-level optimization, visualization, debugging, systematic nodes, and symbolic differentiation. Users can easily write extensions and optimize their networks without overheads. The framework is designed to eliminate barriers between users and developers, allowing for easy customization and extension.