openai-scala-client

Scala client for OpenAI API and other major LLM providers

Stars: 242

This is a no-nonsense async Scala client for OpenAI API supporting all the available endpoints and params including streaming, chat completion, vision, and voice routines. It provides a single service called OpenAIService that supports various calls such as Models, Completions, Chat Completions, Edits, Images, Embeddings, Batches, Audio, Files, Fine-tunes, Moderations, Assistants, Threads, Thread Messages, Runs, Run Steps, Vector Stores, Vector Store Files, and Vector Store File Batches. The library aims to be self-contained with minimal dependencies and supports API-compatible providers like Azure OpenAI, Azure AI, Anthropic, Google Vertex AI, Groq, Grok, Fireworks AI, OctoAI, TogetherAI, Cerebras, Mistral, Deepseek, Ollama, FastChat, and more.

README:

This is a no-nonsense async Scala client for OpenAI API supporting all the available endpoints and params including streaming, the newest chat completion, Responses API, Assistants API, tools, vision, and voice routines (as defined in the OpenAI API reference), provided in a single, convenient service called OpenAIService. The supported calls are:

- Models: listModels and retrieveModel

- Completions: createCompletion

- Chat Completions: createChatCompletion, createChatFunCompletion (deprecated), and createChatToolCompletion

- Edits: createEdit (deprecated)

- Images: createImage, createImageEdit, and createImageVariation

- Embeddings: createEmbeddings

- Batches: createBatch, retrieveBatch, cancelBatch, and listBatches

- Audio: createAudioTranscription, createAudioTranslation, and createAudioSpeech

- Files: listFiles, uploadFile, deleteFile, retrieveFile, and retrieveFileContent

- Fine-tunes: createFineTune, listFineTunes, retrieveFineTune, cancelFineTune, listFineTuneEvents, listFineTuneCheckpoints, and deleteFineTuneModel

- Moderations: createModeration

- Assistants: createAssistant, listAssistants, retrieveAssistant, modifyAssistant, and deleteAssistant

- Threads: createThread, retrieveThread, modifyThread, and deleteThread

- Thread Messages: createThreadMessage, retrieveThreadMessage, modifyThreadMessage, listThreadMessages, retrieveThreadMessageFile, and listThreadMessageFiles

- Runs: createRun, createThreadAndRun, listRuns, retrieveRun, modifyRun, submitToolOutputs, and cancelRun

- Run Steps: listRunSteps and retrieveRunStep

- Vector Stores: createVectorStore, listVectorStores, retrieveVectorStore, modifyVectorStore, and deleteVectorStore

- Vector Store Files: createVectorStoreFile, listVectorStoreFiles, retrieveVectorStoreFile, and deleteVectorStoreFile

- Vector Store File Batches: createVectorStoreFileBatch, retrieveVectorStoreFileBatch, cancelVectorStoreFileBatch, and listVectorStoreBatchFiles

- Responses (🔥 New): createModelResponse, getModelResponse, deleteModelResponse, and listModelResponseInputItems

Note that in order to be consistent with the OpenAI API naming, the service function names match exactly the API endpoint titles/descriptions in camelCase.

Also, we aimed for the library to be self-contained with the fewest dependencies possible. Therefore, we implemented our own generic WS client (currently with Play WS backend, which can be swapped for other engines in the future). Additionally, if dependency injection is required, we use the scala-guice library.

👉 No time to read a lengthy tutorial? Sure, we hear you! Check out the examples to see how to use the lib in practice.

In addition to OpenAI, this library supports many other LLM providers. For providers that aren't natively compatible with the chat completion API, we've implemented adapters to streamline integration (see examples).

| Provider | JSON/Structured Output | Tools Support | Description |
|---|---|---|---|
| OpenAI | Full | Standard + Responses API | Full API support |
| Azure OpenAI | Full | Standard + Responses API | OpenAI on Azure |
| Anthropic | Implied | | Claude models |
| Azure AI | Varies | | Open-source models |
| Cerebras | Only JSON object mode | | Fast inference |
| Deepseek | Only JSON object mode | | Chinese provider |
| FastChat | Varies | | Local LLMs |
| Fireworks AI | Only JSON object mode | | Cloud provider |
| Google Gemini (🔥 New) | Full | Yes | Google's models |
| Google Vertex AI | Full | Yes | Gemini models |
| Grok | Full | | x.AI models |
| Groq | Only JSON object mode | | Fast inference |
| Mistral | Only JSON object mode | | Open-source leader |
| Novita (🔥 New) | Only JSON object mode | | Cloud provider |
| Octo AI | Only JSON object mode | | Cloud provider (obsolete) |
| Ollama | Varies | | Local LLMs |
| Perplexity Sonar (🔥 New) | Only implied | | Search-based AI |
| TogetherAI | Only JSON object mode | | Cloud provider |

👉 For background information on how the project started, read an article about the lib/client on Medium.

Also try out our Scala client for the Pinecone vector database, or use both clients together! This demo project shows how to generate and store OpenAI embeddings in Pinecone and query them afterward. The OpenAI + Pinecone combo is commonly used for autonomous AI agents, such as babyAGI and AutoGPT.

✔️ Important: this is a "community-maintained" library and, as such, is not affiliated with OpenAI.

The currently supported Scala versions are 2.12, 2.13, and 3.

To install the library, add the following dependency to your build.sbt:

libraryDependencies += "io.cequence" %% "openai-scala-client" % "1.3.0.RC.1"

or to pom.xml (if you use Maven):

<dependency>
    <groupId>io.cequence</groupId>
    <artifactId>openai-scala-client_2.12</artifactId>
    <version>1.3.0.RC.1</version>
</dependency>

If you want streaming support, use "io.cequence" %% "openai-scala-client-stream" % "1.3.0.RC.1" instead.

Config

- Env. variables: OPENAI_SCALA_CLIENT_API_KEY and optionally also OPENAI_SCALA_CLIENT_ORG_ID (if you have one)
- File config (default): openai-scala-client.conf
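The default config resolves the API key from the environment, so a missing variable only surfaces when the config is loaded (see the UnresolvedSubstitution question in the FAQ). Below is a minimal sketch of checking the variables up front, assuming only the two names listed above. ClientEnv and readClientEnv are hypothetical helpers, not part of the library; taking the environment as a Map keeps the check testable.

```scala
// Hypothetical helper (NOT part of openai-scala-client): validates the two
// environment variables used by the default config before building a service.
final case class ClientEnv(apiKey: String, orgId: Option[String])

def readClientEnv(env: Map[String, String]): Either[String, ClientEnv] =
  env.get("OPENAI_SCALA_CLIENT_API_KEY").filter(_.trim.nonEmpty) match {
    case Some(key) =>
      // The organization id is optional, so its absence is not an error.
      val orgId = env.get("OPENAI_SCALA_CLIENT_ORG_ID").filter(_.trim.nonEmpty)
      Right(ClientEnv(key, orgId))
    case None =>
      Left("OPENAI_SCALA_CLIENT_API_KEY is not set")
  }

// In an application, pass the real process environment.
readClientEnv(sys.env) match {
  case Right(env)  => println(s"API key found; org id present: ${env.orgId.isDefined}")
  case Left(error) => println(s"Config problem: $error")
}
```

Returning an Either instead of throwing lets the caller decide whether to abort or fall back to a custom config.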

I. Obtaining OpenAIService

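First, you need to provide an implicit execution context as well as an Akka materializer, e.g.:

import akka.actor.ActorSystem
import akka.stream.Materializer
import scala.concurrent.ExecutionContext

implicit val ec: ExecutionContext = ExecutionContext.global
implicit val materializer: Materializer = Materializer(ActorSystem())

Then you can obtain a service in one of the following ways.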

- Default config (expects env. variable(s) to be set as defined in the Config section)

val service = OpenAIServiceFactory()

- Custom config

val config = ConfigFactory.load("path_to_my_custom_config")
val service = OpenAIServiceFactory(config)

- Without config

val service = OpenAIServiceFactory(
  apiKey = "your_api_key",
  orgId = Some("your_org_id") // if you have one
)

- For Azure with API Key

val service = OpenAIServiceFactory.forAzureWithApiKey(
  resourceName = "your-resource-name",
  deploymentId = "your-deployment-id", // usually the model name, such as "gpt-35-turbo"
  apiVersion = "2023-05-15", // Azure OpenAI API version
  apiKey = "your_api_key"
)

- Minimal OpenAICoreService supporting listModels, createCompletion, createChatCompletion, and createEmbeddings calls, provided, e.g., by a FastChat service running on port 8000

val service = OpenAICoreServiceFactory("http://localhost:8000/v1/")

- OpenAIChatCompletionService providing solely createChatCompletion

  - Azure AI, e.g., the Cohere R+ model

val service = OpenAIChatCompletionServiceFactory.forAzureAI(
  endpoint = sys.env("AZURE_AI_COHERE_R_PLUS_ENDPOINT"),
  region = sys.env("AZURE_AI_COHERE_R_PLUS_REGION"),
  accessToken = sys.env("AZURE_AI_COHERE_R_PLUS_ACCESS_KEY")
)

  - Anthropic - requires the openai-scala-anthropic-client lib and ANTHROPIC_API_KEY

val service = AnthropicServiceFactory.asOpenAI() // or AnthropicServiceFactory.bedrockAsOpenAI

  - Google Vertex AI - requires the openai-scala-google-vertexai-client lib and VERTEXAI_LOCATION + VERTEXAI_PROJECT_ID

val service = VertexAIServiceFactory.asOpenAI()

  - Google Gemini - requires the openai-scala-google-gemini-client lib and GOOGLE_API_KEY

val service = GeminiServiceFactory.asOpenAI()

  - Perplexity Sonar - requires the openai-scala-perplexity-client lib and SONAR_API_KEY

val service = SonarServiceFactory.asOpenAI()

  - Novita - requires NOVITA_API_KEY

val service = OpenAIChatCompletionServiceFactory(ChatProviderSettings.novita)
// or with streaming
val service = OpenAIChatCompletionServiceFactory.withStreaming(ChatProviderSettings.novita)

  - Groq - requires GROQ_API_KEY

val service = OpenAIChatCompletionServiceFactory(ChatProviderSettings.groq)
// or with streaming
val service = OpenAIChatCompletionServiceFactory.withStreaming(ChatProviderSettings.groq)

  - Grok - requires GROK_API_KEY

val service = OpenAIChatCompletionServiceFactory(ChatProviderSettings.grok)
// or with streaming
val service = OpenAIChatCompletionServiceFactory.withStreaming(ChatProviderSettings.grok)

  - Fireworks AI - requires FIREWORKS_API_KEY

val service = OpenAIChatCompletionServiceFactory(ChatProviderSettings.fireworks)
// or with streaming
val service = OpenAIChatCompletionServiceFactory.withStreaming(ChatProviderSettings.fireworks)

  - Octo AI - requires OCTOAI_TOKEN

val service = OpenAIChatCompletionServiceFactory(ChatProviderSettings.octoML)
// or with streaming
val service = OpenAIChatCompletionServiceFactory.withStreaming(ChatProviderSettings.octoML)

  - TogetherAI - requires TOGETHERAI_API_KEY

val service = OpenAIChatCompletionServiceFactory(ChatProviderSettings.togetherAI)
// or with streaming
val service = OpenAIChatCompletionServiceFactory.withStreaming(ChatProviderSettings.togetherAI)

  - Cerebras - requires CEREBRAS_API_KEY

val service = OpenAIChatCompletionServiceFactory(ChatProviderSettings.cerebras)
// or with streaming
val service = OpenAIChatCompletionServiceFactory.withStreaming(ChatProviderSettings.cerebras)

  - Mistral - requires MISTRAL_API_KEY

val service = OpenAIChatCompletionServiceFactory(ChatProviderSettings.mistral)
// or with streaming
val service = OpenAIChatCompletionServiceFactory.withStreaming(ChatProviderSettings.mistral)

  - Ollama

val service = OpenAIChatCompletionServiceFactory(
  coreUrl = "http://localhost:11434/v1/"
)

or with streaming

val service = OpenAIChatCompletionServiceFactory.withStreaming(
  coreUrl = "http://localhost:11434/v1/"
)

- Note that services with additional streaming support - createCompletionStreamed and createChatCompletionStreamed - are provided by OpenAIStreamedServiceExtra (requires the openai-scala-client-stream lib)

import io.cequence.openaiscala.service.StreamedServiceTypes.OpenAIStreamedService
import io.cequence.openaiscala.service.OpenAIStreamedServiceImplicits._

val service: OpenAIStreamedService = OpenAIServiceFactory.withStreaming()

Similarly, for a chat-completion service:

import io.cequence.openaiscala.service.OpenAIStreamedServiceImplicits._

val service = OpenAIChatCompletionServiceFactory.withStreaming(
  coreUrl = "https://api.fireworks.ai/inference/v1/",
  authHeaders = Seq(("Authorization", s"Bearer ${sys.env("FIREWORKS_API_KEY")}"))
)

or, if only streaming is required:

val service: OpenAIChatCompletionStreamedServiceExtra =
  OpenAIChatCompletionStreamedServiceFactory(
    coreUrl = "https://api.fireworks.ai/inference/v1/",
    authHeaders = Seq(("Authorization", s"Bearer ${sys.env("FIREWORKS_API_KEY")}"))
  )

- Via dependency injection (requires the openai-scala-guice lib)

class MyClass @Inject() (openAIService: OpenAIService) {...}

II. Calling functions

Full documentation of each call with its respective inputs and settings is provided in OpenAIService. Since all the calls are async, they return responses wrapped in Future.
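Because every call returns a Future, results are typically chained with map/flatMap (or a for-comprehension), failures handled with recover, and, in a throwaway script only, the final value awaited. The sketch below illustrates this with two hypothetical stand-in functions (fakeListModels, fakeComplete) instead of the real service, so it runs without an API key; in real code you would call the service methods listed above.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

implicit val ec: ExecutionContext = ExecutionContext.global

// Hypothetical stand-ins for async service calls (NOT the library API).
def fakeListModels(): Future[Seq[String]] =
  Future(Seq("model-a", "model-b"))

def fakeComplete(model: String, prompt: String): Future[String] =
  if (prompt.isEmpty) Future.failed(new IllegalArgumentException("empty prompt"))
  else Future(s"[$model] answer to: $prompt")

// Sequential composition: list the models, then ask the first one.
def askFirstModel(prompt: String): Future[String] =
  for {
    models <- fakeListModels()
    answer <- fakeComplete(models.head, prompt)
  } yield answer

// Turn an expected failure into a fallback value instead of a failed Future.
def askSafely(prompt: String): Future[String] =
  askFirstModel(prompt).recover { case e: IllegalArgumentException =>
    s"error: ${e.getMessage}"
  }

// Blocking is acceptable in a script or test, but avoid it in server code.
val answer = Await.result(askSafely("Who won the world series in 2020?"), 5.seconds)
println(answer) // [model-a] answer to: Who won the world series in 2020?
```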

There is a new project openai-scala-client-examples where you can find a lot of ready-to-use examples!

- List models

service.listModels.map(models =>
  models.foreach(println)
)

- Retrieve model

service.retrieveModel(ModelId.text_davinci_003).map(model =>
  println(model.getOrElse("N/A"))
)

- Create chat completion

val createChatCompletionSettings = CreateChatCompletionSettings(
  model = ModelId.gpt_4o
)

val messages = Seq(
  SystemMessage("You are a helpful assistant."),
  UserMessage("Who won the world series in 2020?"),
  AssistantMessage("The Los Angeles Dodgers won the World Series in 2020."),
  UserMessage("Where was it played?"),
)

service.createChatCompletion(
  messages = messages,
  settings = createChatCompletionSettings
).map { chatCompletion =>
  println(chatCompletion.contentHead)
}

- Create chat completion for functions

val messages = Seq(
  SystemMessage("You are a helpful assistant."),
  UserMessage("What's the weather like in San Francisco, Tokyo, and Paris?")
)

// as a param type we can use "number", "string", "boolean", "object", "array", and "null"
val tools = Seq(
  FunctionSpec(
    name = "get_current_weather",
    description = Some("Get the current weather in a given location"),
    parameters = Map(
      "type" -> "object",
      "properties" -> Map(
        "location" -> Map(
          "type" -> "string",
          "description" -> "The city and state, e.g. San Francisco, CA"
        ),
        "unit" -> Map(
          "type" -> "string",
          "enum" -> Seq("celsius", "fahrenheit")
        )
      ),
      "required" -> Seq("location")
    )
  )
)

// if we want to force the model to use the above function as a response
// we can do so by passing: responseToolChoice = Some("get_current_weather")
service.createChatToolCompletion(
  messages = messages,
  tools = tools,
  responseToolChoice = None, // means "auto"
  settings = CreateChatCompletionSettings(ModelId.gpt_4o)
).map { response =>
  val chatFunCompletionMessage = response.choices.head.message
  val toolCalls = chatFunCompletionMessage.tool_calls.collect {
    case (id, x: FunctionCallSpec) => (id, x)
  }

  println(
    "tool call ids : " + toolCalls.map(_._1).mkString(", ")
  )
  println(
    "function/tool call names : " + toolCalls.map(_._2.name).mkString(", ")
  )
  println(
    "function/tool call arguments : " + toolCalls.map(_._2.arguments).mkString(", ")
  )
}

- Create chat completion with JSON/structured output

val messages = Seq(
  SystemMessage("Give me the most populous capital cities in JSON format."),
  UserMessage("List only african countries")
)

val capitalsSchema = JsonSchema.Object(
  properties = Map(
    "countries" -> JsonSchema.Array(
      items = JsonSchema.Object(
        properties = Map(
          "country" -> JsonSchema.String(
            description = Some("The name of the country")
          ),
          "capital" -> JsonSchema.String(
            description = Some("The capital city of the country")
          )
        ),
        required = Seq("country", "capital")
      )
    )
  ),
  required = Seq("countries")
)

val jsonSchemaDef = JsonSchemaDef(
  name = "capitals_response",
  strict = true,
  structure = capitalsSchema
)

service
  .createChatCompletion(
    messages = messages,
    settings = CreateChatCompletionSettings(
      model = ModelId.o3_mini,
      max_tokens = Some(1000),
      response_format_type = Some(ChatCompletionResponseFormatType.json_schema),
      jsonSchema = Some(jsonSchemaDef)
    )
  )
  .map { response =>
    val json = Json.parse(response.contentHead)
    println(Json.prettyPrint(json))
  }

- Create chat completion with JSON/structured output using a handy implicit function (createChatCompletionWithJSON[T]) that handles JSON extraction with a potential repair, as well as deserialization to an object T.

import io.cequence.openaiscala.service.OpenAIChatCompletionExtra._

...

service
  .createChatCompletionWithJSON[JsObject](
    messages = messages,
    settings = CreateChatCompletionSettings(
      model = ModelId.o3_mini,
      max_tokens = Some(1000),
      response_format_type = Some(ChatCompletionResponseFormatType.json_schema),
      jsonSchema = Some(jsonSchemaDef)
    )
  )
  .map { json =>
    println(Json.prettyPrint(json))
  }

- Failover to alternative models if the primary one fails

import io.cequence.openaiscala.service.OpenAIChatCompletionExtra._

val messages = Seq(
  SystemMessage("You are a helpful weather assistant."),
  UserMessage("What is the weather like in Norway?")
)

service
  .createChatCompletionWithFailover(
    messages = messages,
    settings = CreateChatCompletionSettings(
      model = ModelId.o3_mini
    ),
    failoverModels = Seq(ModelId.gpt_4_5_preview, ModelId.gpt_4o),
    retryOnAnyError = true,
    failureMessage = "Weather assistant failed to provide a response."
  )
  .map { response =>
    print(response.contentHead)
  }

- Failover with JSON/structured output

import io.cequence.openaiscala.service.OpenAIChatCompletionExtra._

val capitalsSchema = JsonSchema.Object(
  properties = Map(
    "countries" -> JsonSchema.Array(
      items = JsonSchema.Object(
        properties = Map(
          "country" -> JsonSchema.String(
            description = Some("The name of the country")
          ),
          "capital" -> JsonSchema.String(
            description = Some("The capital city of the country")
          )
        ),
        required = Seq("country", "capital")
      )
    )
  ),
  required = Seq("countries")
)

val jsonSchemaDef = JsonSchemaDef(
  name = "capitals_response",
  strict = true,
  structure = capitalsSchema
)

// Define the chat messages
val messages = Seq(
  SystemMessage("Give me the most populous capital cities in JSON format."),
  UserMessage("List only african countries")
)

// Call the service with failover support
service
  .createChatCompletionWithJSON[JsObject](
    messages = messages,
    settings = CreateChatCompletionSettings(
      model = ModelId.o3_mini, // Primary model
      max_tokens = Some(1000),
      response_format_type = Some(ChatCompletionResponseFormatType.json_schema),
      jsonSchema = Some(jsonSchemaDef)
    ),
    failoverModels = Seq(
      ModelId.gpt_4_5_preview, // First fallback model
      ModelId.gpt_4o // Second fallback model
    ),
    maxRetries = Some(3), // Maximum number of retries per model
    retryOnAnyError = true, // Retry on any error, not just retryable ones
    taskNameForLogging = Some("capitals-query") // For better logging
  )
  .map { json =>
    println(Json.prettyPrint(json))
  }

- Responses API - basic usage with textual inputs / messages

import io.cequence.openaiscala.domain.responsesapi.Inputs

service
  .createModelResponse(
    Inputs.Text("What is the capital of France?")
  )
  .map { response =>
    println(response.outputText.getOrElse("N/A"))
  }

import io.cequence.openaiscala.domain.responsesapi.{Input, Inputs}

service
  .createModelResponse(
    Inputs.Items(
      Input.ofInputSystemTextMessage(
        "You are a helpful assistant. Be verbose and detailed and don't be afraid to use emojis."
      ),
      Input.ofInputUserTextMessage("What is the capital of France?")
    )
  )
  .map { response =>
    println(response.outputText.getOrElse("N/A"))
  }

- Responses API - image input

import io.cequence.openaiscala.domain.responsesapi.{Inputs, Input}
import io.cequence.openaiscala.domain.responsesapi.InputMessageContent
import io.cequence.openaiscala.domain.ChatRole

service
  .createModelResponse(
    Inputs.Items(
      Input.ofInputMessage(
        Seq(
          InputMessageContent.Text("what is in this image?"),
          InputMessageContent.Image(
            imageUrl = Some(
              "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg"
            )
          )
        ),
        role = ChatRole.User
      )
    )
  )
  .map { response =>
    println(response.outputText.getOrElse("N/A"))
  }

- Responses API - tool use (file search)

service
  .createModelResponse(
    Inputs.Text("What are the attributes of an ancient brown dragon?"),
    settings = CreateModelResponseSettings(
      model = ModelId.gpt_4o_2024_08_06,
      tools = Seq(
        FileSearchTool(
          vectorStoreIds = Seq("vs_1234567890"),
          maxNumResults = Some(20),
          filters = None,
          rankingOptions = None
        )
      )
    )
  )
  .map { response =>
    println(response.outputText.getOrElse("N/A"))

    // citations
    val citations: Seq[Annotation.FileCitation] = response.outputMessageContents.collect {
      case e: OutputText =>
        e.annotations.collect { case citation: Annotation.FileCitation => citation }
    }.flatten

    println("Citations:")
    citations.foreach { citation =>
      println(s"${citation.fileId} - ${citation.filename}")
    }
  }

- Responses API - tool use (web search)

service
  .createModelResponse(
    Inputs.Text("What was a positive news story from today?"),
    settings = CreateModelResponseSettings(
      model = ModelId.gpt_4o_2024_08_06,
      tools = Seq(WebSearchTool())
    )
  )
  .map { response =>
    println(response.outputText.getOrElse("N/A"))

    // citations
    val citations: Seq[Annotation.UrlCitation] = response.outputMessageContents.collect {
      case e: OutputText =>
        e.annotations.collect { case citation: Annotation.UrlCitation => citation }
    }.flatten

    println("Citations:")
    citations.foreach { citation =>
      println(s"${citation.title} - ${citation.url}")
    }
  }

- Responses API - tool use (function call)

service
  .createModelResponse(
    Inputs.Text("What is the weather like in Boston today?"),
    settings = CreateModelResponseSettings(
      model = ModelId.gpt_4o_2024_08_06,
      tools = Seq(
        FunctionTool(
          name = "get_current_weather",
          parameters = JsonSchema.Object(
            properties = Map(
              "location" -> JsonSchema.String(
                description = Some("The city and state, e.g. San Francisco, CA")
              ),
              "unit" -> JsonSchema.String(
                `enum` = Seq("celsius", "fahrenheit")
              )
            ),
            required = Seq("location", "unit")
          ),
          description = Some("Get the current weather in a given location"),
          strict = true
        )
      ),
      toolChoice = Some(ToolChoice.Mode.Auto)
    )
  )
  .map { response =>
    val functionCall = response.outputFunctionCalls.headOption
      .getOrElse(throw new RuntimeException("No function call output found"))

    println(
      s"""Function Call Details:
         |Name: ${functionCall.name}
         |Arguments: ${functionCall.arguments}
         |Call ID: ${functionCall.callId}
         |ID: ${functionCall.id}
         |Status: ${functionCall.status}""".stripMargin
    )

    val toolsUsed = response.tools.map(_.typeString)

    println(s"${toolsUsed.size} tools used: ${toolsUsed.mkString(", ")}")
  }

- Count expected used tokens before calling createChatCompletion or createChatFunCompletion. This helps you select a proper model and reduce costs. This is an experimental feature and it may not work for all models. Requires the openai-scala-count-tokens lib.

An example of how to count message tokens:

import io.cequence.openaiscala.service.OpenAICountTokensHelper
import io.cequence.openaiscala.domain.{AssistantMessage, BaseMessage, FunctionSpec, ModelId, SystemMessage, UserMessage}

class MyCompletionService extends OpenAICountTokensHelper {
  def exec = {
    val model = ModelId.gpt_4_turbo_2024_04_09

    // messages to be sent to OpenAI
    val messages: Seq[BaseMessage] = Seq(
      SystemMessage("You are a helpful assistant."),
      UserMessage("Who won the world series in 2020?"),
      AssistantMessage("The Los Angeles Dodgers won the World Series in 2020."),
      UserMessage("Where was it played?"),
    )

    val tokenCount = countMessageTokens(model, messages)
  }
}

An example of how to count message tokens when a function is involved:

import io.cequence.openaiscala.service.OpenAICountTokensHelper
import io.cequence.openaiscala.domain.{BaseMessage, FunctionSpec, ModelId, SystemMessage, UserMessage}

class MyCompletionService extends OpenAICountTokensHelper {
  def exec = {
    val model = ModelId.gpt_4_turbo_2024_04_09

    // messages to be sent to OpenAI
    val messages: Seq[BaseMessage] =
      Seq(
        SystemMessage("You are a helpful assistant."),
        UserMessage("What's the weather like in San Francisco, Tokyo, and Paris?")
      )

    // function to be called
    val function: FunctionSpec = FunctionSpec(
      name = "getWeather",
      parameters = Map(
        "type" -> "object",
        "properties" -> Map(
          "location" -> Map(
            "type" -> "string",
            "description" -> "The city to get the weather for"
          ),
          "unit" -> Map("type" -> "string", "enum" -> List("celsius", "fahrenheit"))
        )
      )
    )

    val tokenCount = countFunMessageTokens(model, messages, Seq(function), Some(function.name))
  }
}

✔️ Important: After you are done using the service, you should close it by calling service.close. Otherwise, the underlying resources/threads won't be released.
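The failover examples in this section delegate model switching to createChatCompletionWithFailover, and the note above asks you to close the service when you are done. Both ideas can be sketched independently of the library: try the primary model, fall back to each alternative in order, and close the service once the final Future has completed, whether it succeeded or failed. ChatService, completeWithFailover, useAndClose, and FakeChatService are hypothetical names for illustration, and the model names are plain strings; the library's own failover (with its retry and logging options) may work differently.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

implicit val ec: ExecutionContext = ExecutionContext.global

// Hypothetical minimal service interface (NOT the library's OpenAIService).
trait ChatService {
  def complete(model: String, prompt: String): Future[String]
  def close(): Unit
}

// Try `models` in order; a fallback is only called if everything before it failed.
def completeWithFailover(service: ChatService, models: Seq[String], prompt: String): Future[String] =
  models.toList match {
    case Nil => Future.failed(new IllegalArgumentException("no models given"))
    case primary :: fallbacks =>
      fallbacks.foldLeft(service.complete(primary, prompt)) { (attempt, fallback) =>
        attempt.recoverWith { case _ => service.complete(fallback, prompt) }
      }
  }

// Run `body`, then close the service regardless of the outcome.
// Future.unit.flatMap also catches exceptions thrown synchronously by `body`.
def useAndClose[T](service: ChatService)(body: ChatService => Future[T]): Future[T] =
  Future.unit.flatMap(_ => body(service)).andThen { case _ => service.close() }

// A fake service in which some models are "down", to exercise the failover path.
final class FakeChatService(down: Set[String]) extends ChatService {
  @volatile var closed = false
  @volatile var calls = Vector.empty[String]
  def complete(model: String, prompt: String): Future[String] = {
    calls = calls :+ model
    if (down.contains(model)) Future.failed(new RuntimeException(s"$model unavailable"))
    else Future.successful(s"$model: $prompt")
  }
  def close(): Unit = closed = true
}

val fake = new FakeChatService(down = Set("o3-mini"))
val result = Await.result(
  useAndClose(fake)(s => completeWithFailover(s, Seq("o3-mini", "gpt-4.5-preview", "gpt-4o"), "Hi")),
  5.seconds
)
println(result) // gpt-4.5-preview: Hi
```

Because recoverWith is lazy, the third model is never contacted once the second one answers.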

III. Using adapters

Adapters for OpenAI services (chat completion, core, or full) are provided by OpenAIServiceAdapters. The adapters are used to distribute the load between multiple services, retry on transient errors, route, or provide additional functionality. See examples for more details.
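To make the difference between the round-robin and random-order load distribution described below concrete, here is a standalone sketch over plain values. RoundRobin and RandomOrder are hypothetical classes, not the library's OpenAIServiceAdapters; in practice the elements would be service instances rather than strings.

```scala
import java.util.concurrent.atomic.AtomicLong
import scala.util.Random

// Hypothetical round-robin picker: cycles through the targets in a fixed order.
final class RoundRobin[T](targets: IndexedSeq[T]) {
  require(targets.nonEmpty, "at least one target is required")
  private val counter = new AtomicLong(0)
  // getAndIncrement keeps the rotation consistent when called from several threads.
  def next(): T = targets((counter.getAndIncrement() % targets.size).toInt)
}

// Hypothetical random-order picker: each call picks a target uniformly at random.
final class RandomOrder[T](targets: IndexedSeq[T], random: Random = new Random()) {
  require(targets.nonEmpty, "at least one target is required")
  def next(): T = targets(random.nextInt(targets.size))
}

val roundRobin = new RoundRobin(Vector("service1", "service2"))
val picks = Seq.fill(5)(roundRobin.next())
println(picks.mkString(", ")) // service1, service2, service1, service2, service1

// A seeded Random makes the random order reproducible, e.g. in tests.
val randomOrder = new RandomOrder(Vector("service1", "service2"), new Random(42))
println(Seq.fill(5)(randomOrder.next()).mkString(", "))
```

Round robin spreads requests evenly and predictably; random order avoids every client hitting the same target first but only balances load on average.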

Note that the adapters can be arbitrarily combined/stacked.
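Stacking works because each adapter wraps a service and exposes the same interface, so adapters compose like decorators. A minimal sketch of that idea, using a plain function type Call in place of a service; logged and retried are hypothetical helpers, and unlike the library's retry adapter (which can wait between attempts via RetrySettings), this sketch retries immediately.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._
import scala.util.{Failure, Success}

implicit val ec: ExecutionContext = ExecutionContext.global

// Hypothetical stand-in for a service: a single async operation.
type Call = String => Future[String]

// Decorator 1: log each request and its outcome.
def logged(name: String, log: String => Unit)(call: Call): Call = { prompt =>
  log(s"$name <- $prompt")
  call(prompt).andThen {
    case Success(r) => log(s"$name -> $r")
    case Failure(e) => log(s"$name failed: ${e.getMessage}")
  }
}

// Decorator 2: retry up to `maxRetries` extra times (no delay between attempts here).
def retried(maxRetries: Int)(call: Call): Call = { prompt =>
  def attempt(remaining: Int): Future[String] =
    call(prompt).recoverWith { case _ if remaining > 0 => attempt(remaining - 1) }
  attempt(maxRetries)
}

// A flaky call that fails twice (e.g. rate limited) before succeeding.
val failuresLeft = new java.util.concurrent.atomic.AtomicInteger(2)
val flaky: Call = prompt =>
  if (failuresLeft.getAndDecrement() > 0) Future.failed(new RuntimeException("rate limit"))
  else Future.successful(s"ok: $prompt")

// Stack the decorators: logging wraps the raw call, so every attempt is logged.
val logLines = scala.collection.mutable.ArrayBuffer.empty[String]
val service: Call =
  retried(maxRetries = 3)(logged("flaky", line => logLines.synchronized(logLines += line))(flaky))

val reply = Await.result(service("hello"), 5.seconds)
println(reply) // ok: hello
logLines.foreach(println)
```

Swapping the order (logging outside retry) would log only the overall request and its final outcome, which is the kind of choice stacking lets you make.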

- Round robin load distribution

val adapters = OpenAIServiceAdapters.forFullService

val service1 = OpenAIServiceFactory("your-api-key1")
val service2 = OpenAIServiceFactory("your-api-key2")

val service = adapters.roundRobin(service1, service2)

- Random order load distribution

val adapters = OpenAIServiceAdapters.forFullService

val service1 = OpenAIServiceFactory("your-api-key1")
val service2 = OpenAIServiceFactory("your-api-key2")

val service = adapters.randomOrder(service1, service2)

- Logging function calls

val adapters = OpenAIServiceAdapters.forFullService

val rawService = OpenAIServiceFactory()

val service = adapters.log(
  rawService,
  "openAIService",
  logger.log
)

- Retry on transient errors (e.g. rate limit error)

import scala.concurrent.duration._

val adapters = OpenAIServiceAdapters.forFullService

implicit val retrySettings: RetrySettings = RetrySettings(maxRetries = 10).constantInterval(10.seconds)

val service = adapters.retry(
  OpenAIServiceFactory(),
  Some(println(_)) // simple logging
)

- Retry on a specific function using RetryHelpers directly

import scala.concurrent.duration._

class MyCompletionService @Inject() (
  val actorSystem: ActorSystem,
  implicit val ec: ExecutionContext,
  implicit val scheduler: Scheduler
)(val apiKey: String)
    extends RetryHelpers {
  val service: OpenAIService = OpenAIServiceFactory(apiKey)

  implicit val retrySettings: RetrySettings =
    RetrySettings(interval = 10.seconds)

  def ask(prompt: String): Future[String] =
    for {
      completion <- service
        .createChatCompletion(
          List(MessageSpec(ChatRole.User, prompt))
        )
        .retryOnFailure
    } yield completion.choices.head.message.content
}

- Route chat completion calls based on models

val adapters = OpenAIServiceAdapters.forFullService

// OctoAI
val octoMLService = OpenAIChatCompletionServiceFactory(
  coreUrl = "https://text.octoai.run/v1/",
  authHeaders = Seq(("Authorization", s"Bearer ${sys.env("OCTOAI_TOKEN")}"))
)

// Anthropic
val anthropicService = AnthropicServiceFactory.asOpenAI()

// OpenAI
val openAIService = OpenAIServiceFactory()

val service: OpenAIService =
  adapters.chatCompletionRouter(
    // OpenAI service is default so no need to specify its models here
    serviceModels = Map(
      octoMLService -> Seq(NonOpenAIModelId.mixtral_8x22b_instruct),
      anthropicService -> Seq(
        NonOpenAIModelId.claude_2_1,
        NonOpenAIModelId.claude_3_opus_20240229,
        NonOpenAIModelId.claude_3_haiku_20240307
      )
    ),
    openAIService
  )

- Chat-to-completion adapter

val adapters = OpenAIServiceAdapters.forCoreService

val service = adapters.chatToCompletion(
  OpenAICoreServiceFactory(
    coreUrl = "https://api.fireworks.ai/inference/v1/",
    authHeaders = Seq(("Authorization", s"Bearer ${sys.env("FIREWORKS_API_KEY")}"))
  )
)
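The chatCompletionRouter example above dispatches each call by model name and falls back to a default service for unlisted models. The routing step itself can be sketched as a lookup table inverted from the service-to-models map; Router is a hypothetical class, and the model ids here are plain illustrative strings rather than the library's ModelId/NonOpenAIModelId constants.

```scala
// Hypothetical router (NOT the library's adapter): maps each model id to the
// service that serves it, falling back to `default` for unlisted models.
final class Router[S](serviceModels: Map[S, Seq[String]], default: S) {
  // Invert service -> models into model -> service; a model listed twice fails fast.
  private val byModel: Map[String, S] = {
    val pairs =
      for {
        (service, models) <- serviceModels.toSeq
        model <- models
      } yield model -> service
    val duplicates = pairs.groupBy(_._1).collect { case (model, ps) if ps.size > 1 => model }
    require(duplicates.isEmpty, s"models assigned to more than one service: ${duplicates.mkString(", ")}")
    pairs.toMap
  }

  def serviceFor(model: String): S = byModel.getOrElse(model, default)
}

val router = new Router[String](
  serviceModels = Map(
    "octoML" -> Seq("mixtral-8x22b-instruct"),
    "anthropic" -> Seq("claude-3-opus-20240229", "claude-3-haiku-20240307")
  ),
  default = "openAI"
)

println(router.serviceFor("claude-3-haiku-20240307")) // anthropic
println(router.serviceFor("gpt-4o")) // openAI
```

Precomputing the inverted map makes each lookup a single hash access and surfaces configuration mistakes (one model mapped to two services) at construction time.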

FAQ

- Wen Scala 3?

  Feb 2023. You are right; we chose the shortest month to do so :) Done!

- I got a timeout exception. How can I change the timeout setting?

  You can do it either by passing the timeouts param to OpenAIServiceFactory or, if you use your own configuration file, by simply adding it there as:

openai-scala-client {
  timeouts {
    requestTimeoutSec = 200
    readTimeoutSec = 200
    connectTimeoutSec = 5
    pooledConnectionIdleTimeoutSec = 60
  }
}

- I got an exception like com.typesafe.config.ConfigException$UnresolvedSubstitution: openai-scala-client.conf @ jar:file:.../io/cequence/openai-scala-client_2.13/0.0.1/openai-scala-client_2.13-0.0.1.jar!/openai-scala-client.conf: 4: Could not resolve substitution to a value: ${OPENAI_SCALA_CLIENT_API_KEY}. What should I do?

  Set the env. variable OPENAI_SCALA_CLIENT_API_KEY. If you don't have an API key, register for an OpenAI account to get one.

- It all looks cool. Can I chat with you about your research and development?

  Just shoot us an email at [email protected].

This library is available and published as open source under the terms of the MIT License.

This project is open-source and welcomes any contribution or feedback (here).

Development of this library has been supported by Cequence.io - The future of contracting.

Created and maintained by Peter Banda.


Alternative AI tools for openai-scala-client

Similar Open Source Tools


rust-genai

genai is a multi-AI providers library for Rust that aims to provide a common and ergonomic single API to various generative AI providers such as OpenAI, Anthropic, Cohere, Ollama, and Gemini. It focuses on standardizing chat completion APIs across major AI services, prioritizing ergonomics and commonality. The library initially focuses on text chat APIs and plans to expand to support images, function calling, and more in the future versions. Version 0.1.x will have breaking changes in patches, while version 0.2.x will follow semver more strictly. genai does not provide a full representation of a given AI provider but aims to simplify the differences at a lower layer for ease of use.

openapi

The `@samchon/openapi` repository is a collection of OpenAPI types and converters for various versions of OpenAPI specifications. It includes an 'emended' OpenAPI v3.1 specification that enhances clarity by removing ambiguous and duplicated expressions. The repository also provides an application composer for LLM (Large Language Model) function calling from OpenAPI documents, allowing users to easily perform LLM function calls based on the Swagger document. Conversions to different versions of OpenAPI documents are also supported, all based on the emended OpenAPI v3.1 specification. Users can validate their OpenAPI documents using the `typia` library with `@samchon/openapi` types, ensuring compliance with standard specifications.

mediapipe-rs

MediaPipe-rs is a Rust library designed for MediaPipe tasks on WasmEdge WASI-NN. It offers easy-to-use low-code APIs similar to mediapipe-python, with low overhead and flexibility for custom media input. The library supports various tasks like object detection, image classification, gesture recognition, and more, including TfLite models, TF Hub models, and custom models. Users can create task instances, run sessions for pre-processing, inference, and post-processing, and speed up processing by reusing sessions. The library also provides support for audio tasks using audio data from symphonia, ffmpeg, or raw audio. Users can choose between CPU, GPU, or TPU devices for processing.

Code

A3S Code is an embeddable AI coding agent framework in Rust that allows users to build agents capable of reading, writing, and executing code with tool access, planning, and safety controls. It is production-ready with features like permission system, HITL confirmation, skill-based tool restrictions, and error recovery. The framework is extensible with 19 trait-based extension points and supports lane-based priority queue for scalable multi-machine task distribution.

agents-flex

Agents-Flex is an LLM application framework like LangChain, based on Java. It provides a set of tools and components for building LLM applications, including LLM Visit, Prompt and Prompt Template Loader, Function Calling Definer, Invoker and Running, Memory, Embedding, Vector Storage, Resource Loaders, Document, Splitter, Loader, Parser, LLMs Chain, and Agents Chain.

acte

Acte is a framework designed to build GUI-like tools for AI Agents. It aims to address the issues of cognitive load and freedom degrees when interacting with multiple APIs in complex scenarios. By providing a graphical user interface (GUI) for Agents, Acte helps reduce cognitive load and constrains interaction, similar to how humans interact with computers through GUIs. The tool offers APIs for starting new sessions, executing actions, and displaying screens, accessible via HTTP requests or the SessionManager class.

lagent

Lagent is a lightweight open-source framework for efficiently building agents based on large language models (LLMs). It also provides a set of typical tools for augmenting LLMs.

SwiftAgent

SwiftAgent is a type-safe, declarative framework for building AI agents in Swift, built on Apple's FoundationModels framework. Agents are composed by combining Steps in a declarative syntax similar to SwiftUI. The framework offers compile-time-checked input and output types, native Apple AI integration, structured output generation, and built-in security features such as permission, sandbox, and guardrail systems. It is extensible with MCP integration, distributed agents, and a skills system. SwiftAgent is installed via Swift Package Manager and requires Swift 6.2+ and Xcode 26+, targeting iOS 26+ and macOS 26+.

langchain-rust

LangChain Rust is a library for building applications with large language models (LLMs) through composability. It provides tools and components for creating conversational agents, document loaders, and other LLM-powered applications. It supports a variety of LLMs, including OpenAI, Azure OpenAI, Ollama, and Anthropic Claude, along with a range of embeddings, vector stores, and document loaders. The library is designed to be easy to use and extensible.

lingo.dev

Lingo.dev (formerly Replexica) automates software localization end to end, using AI to translate into 60+ languages. The project claims teams can localize up to 100x faster at state-of-the-art quality, helping them reach more paying customers worldwide. It offers a GitHub Action for CI/CD automation and supports formats such as JSON, YAML, CSV, and Markdown. Other features include fast AI localization, automatic updates, native-quality translations, a developer-friendly CLI, and scalability from startups to enterprise teams.

Conduit

Conduit is a unified Swift 6.2 SDK for local and cloud LLM inference. It provides a single Swift-native API that can target Anthropic, OpenRouter, Ollama, MLX, HuggingFace, and Apple’s Foundation Models without rewriting your prompt pipeline. It allows switching between local, cloud, and system providers with minimal code changes, supports downloading models from the HuggingFace Hub for local MLX inference, generates Swift types directly from LLM responses, offers privacy-first on-device inference, and is built with Swift 6.2 concurrency features such as actors, Sendable types, and AsyncSequence.

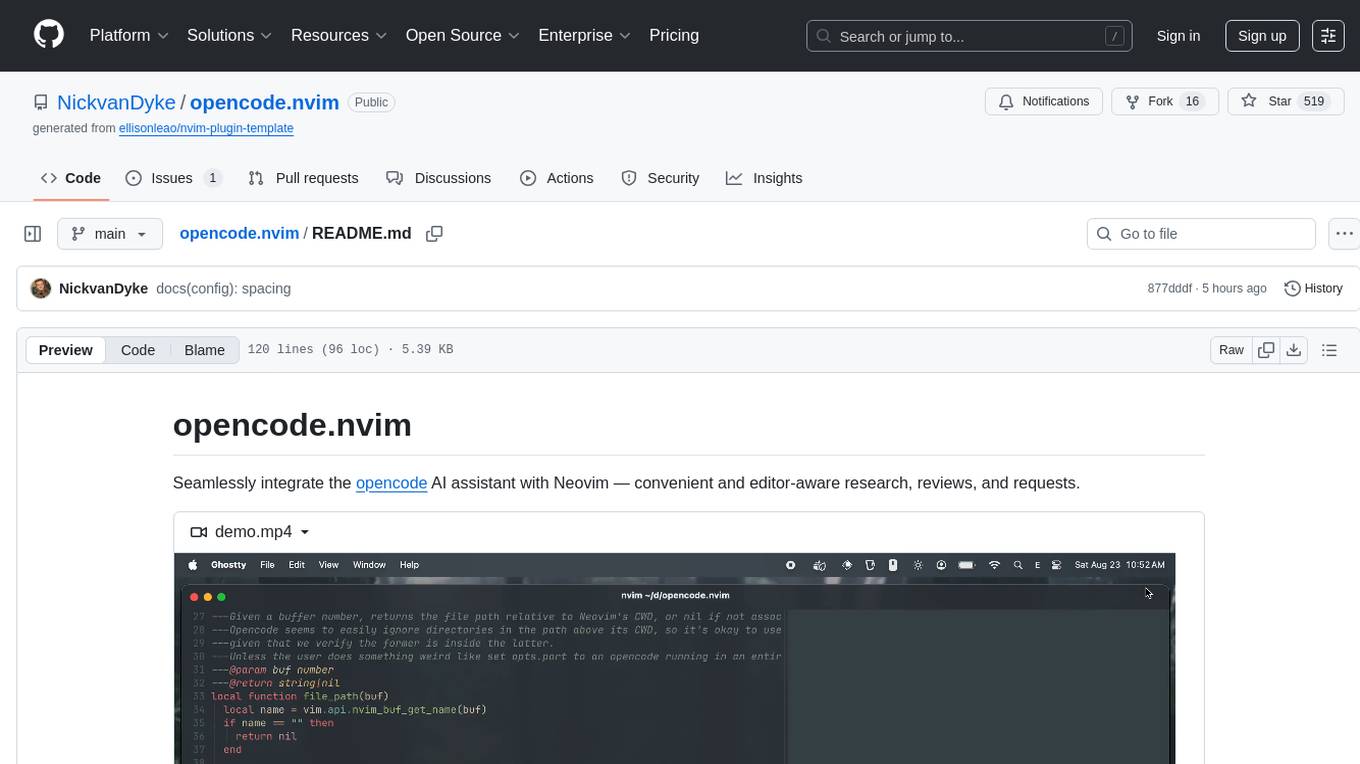

opencode.nvim

Opencode.nvim is a Neovim plugin that provides a simple and efficient way to browse, search, and open files in a project. It enhances the file navigation experience by offering features like fuzzy finding, file preview, and quick access to frequently used files. With Opencode.nvim, users can easily navigate through their project files, jump to specific locations, and manage their workflow more effectively. The plugin is designed to improve productivity and streamline the development process by simplifying file handling tasks within Neovim.

Webscout

Webscout is an all-in-one Python toolkit for web search, AI interaction, digital utilities, and more. Through a unified library, it provides access to diverse search engines, AI models, temporary communication tools, media utilities, developer helpers, and CLI interfaces. Its features include search backed by Google and DuckDuckGo; access to a variety of AI models; a YouTube toolkit for video and transcript management; GitAPI for GitHub data extraction; Tempmail & Temp Number for privacy; text-to-speech conversion; GGUF conversion and quantization; SwiftCLI for building CLI interfaces; LitPrinter for styled console output; LitLogger for logging; LitAgent for user-agent generation; text-to-image generation; Scout for web parsing and crawling; Awesome Prompts for specialized tasks; a Weather Toolkit; and AI Search Providers.

exstruct

ExStruct is an Excel structured-extraction engine that reads Excel workbooks and outputs structured data describing cells, table candidates, shapes, charts, SmartArt, merged cell ranges, print areas and views, automatic page-break areas, and hyperlinks. It offers multiple output modes, formula map extraction, table detection tuning, CLI rendering options, and a graceful fallback when Excel COM is unavailable. The tool is designed to fit LLM/RAG pipelines and ships benchmark reports on accuracy and utility. Supported output formats include JSON, YAML, and TOON, and optional extras add rendering and full extraction for Windows environments with Excel installed.

For similar tasks

jvm-openai

jvm-openai is a minimalistic, unofficial OpenAI API client for the JVM, written in Java, with a focus on simplicity and minimal dependencies. It supports a wide range of OpenAI API endpoints, including Audio, Chat, Embeddings, Fine-tuning, Batch, Files, Uploads, Images, Models, Moderations, Assistants, Threads, Messages, Runs, Run Steps, Vector Stores, Vector Store Files, Vector Store File Batches, Invites, Users, Projects, Project Users, Project Service Accounts, Project API Keys, and Audit Logs.

openai-scala-client

This is a no-nonsense async Scala client for the OpenAI API, supporting all available endpoints and parameters, including streaming, chat completion, vision, and voice routines. It exposes a single service, OpenAIService, that covers Models, Completions, Chat Completions, Edits, Images, Embeddings, Batches, Audio, Files, Fine-tunes, Moderations, Assistants, Threads, Thread Messages, Runs, Run Steps, Vector Stores, Vector Store Files, and Vector Store File Batches. The library aims to be self-contained with minimal dependencies, and it also supports API-compatible providers such as Azure OpenAI, Azure AI, Anthropic, Google Vertex AI, Groq, Grok, Fireworks AI, OctoAI, TogetherAI, Cerebras, Mistral, Deepseek, Ollama, FastChat, and more.

embedJs

EmbedJs is a Node.js framework that simplifies RAG application development by efficiently processing unstructured data. It segments data, creates relevant embeddings, and stores them in a vector database for fast retrieval.

mistral-ai-kmp

The Mistral AI SDK for Kotlin Multiplatform (KMP) communicates with the Mistral API to list available models, chat with the assistant, and create embeddings. The library is based on the Mistral API documentation and built with Kotlin Multiplatform and the Ktor client library. Sample projects such as ZeChat showcase its capabilities, letting users interact with different Mistral AI models from ZeChat apps on Android, desktop, and web. The library is not yet published on Maven, but users can fork the project and use it as a module dependency in their apps.

pgai

pgai simplifies the process of building search and Retrieval Augmented Generation (RAG) AI applications with PostgreSQL. It brings embedding and generation AI models closer to the database, allowing users to create embeddings, retrieve LLM chat completions, reason over data for classification, summarization, and data enrichment directly from within PostgreSQL in a SQL query. The tool requires an OpenAI API key and a PostgreSQL client to enable AI functionality in the database. Users can install pgai from source, run it in a pre-built Docker container, or enable it in a Timescale Cloud service. The tool provides functions to handle API keys using psql or Python, and offers various AI functionalities like tokenizing, detokenizing, embedding, chat completion, and content moderation.

azure-functions-openai-extension

The Azure Functions OpenAI Extension adds bindings for OpenAI LLMs (GPT-3.5-turbo, GPT-4) to Azure Functions. It ships as NuGet packages covering text completions, chat completions, assistants with custom skills, embeddings generators for measuring text relatedness, and semantic search backed by vector databases. Development requires the .NET 6 SDK or later, Azure Functions Core Tools v4.x, and specific configuration in the Azure Function app settings or local settings. The project also includes examples in C# and Python for the different features.

openai-kit

OpenAIKit is a Swift package for communicating with the OpenAI API. It provides methods for services such as chat, models, completions, edits, images, embeddings, files, moderations, and speech-to-text. The package encourages using environment variables to securely inject the OpenAI API key and organization details, and it reports API request errors through the `OpenAIKit.APIErrorResponse` type.

VectorETL

VectorETL is a lightweight ETL framework designed to assist Data & AI engineers in processing data for AI applications quickly. It streamlines the conversion of diverse data sources into vector embeddings and storage in various vector databases. The framework supports multiple data sources, embedding models, and vector database targets, simplifying the creation and management of vector search systems for semantic search, recommendation systems, and other vector-based operations.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, provides preset configurations so deployment parameters need not be tuned to fit GPU hardware, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) when the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open-access automation framework that helps security professionals and ML engineers red team foundation models and their applications. It automates AI red-teaming tasks so operators can focus on more complex and time-consuming work, and it can identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline perform against different harm categories, and to compare that baseline with future iterations of the model. This provides empirical data on how the model performs today and helps detect any performance degradation introduced by future changes.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source, on-premises alternative to GitHub Copilot. Its key features: it is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g., a Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.