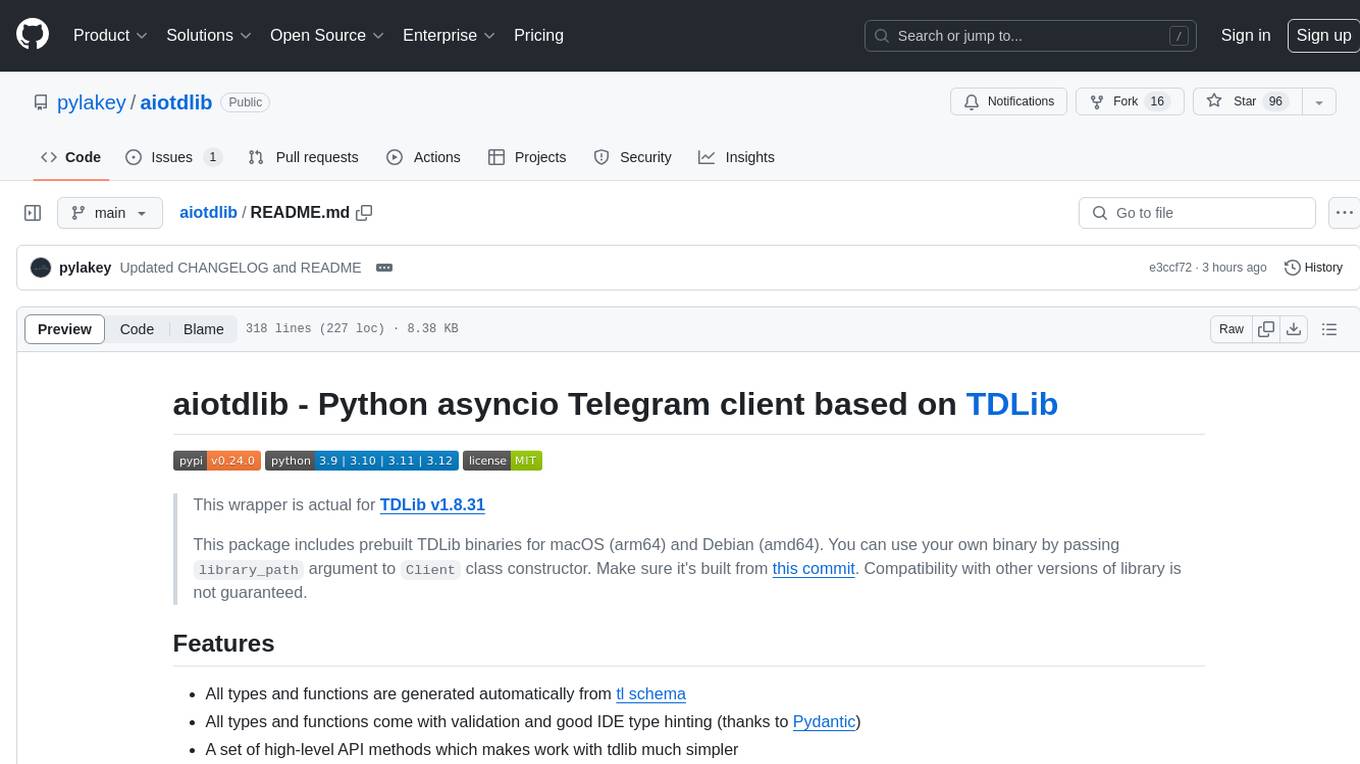

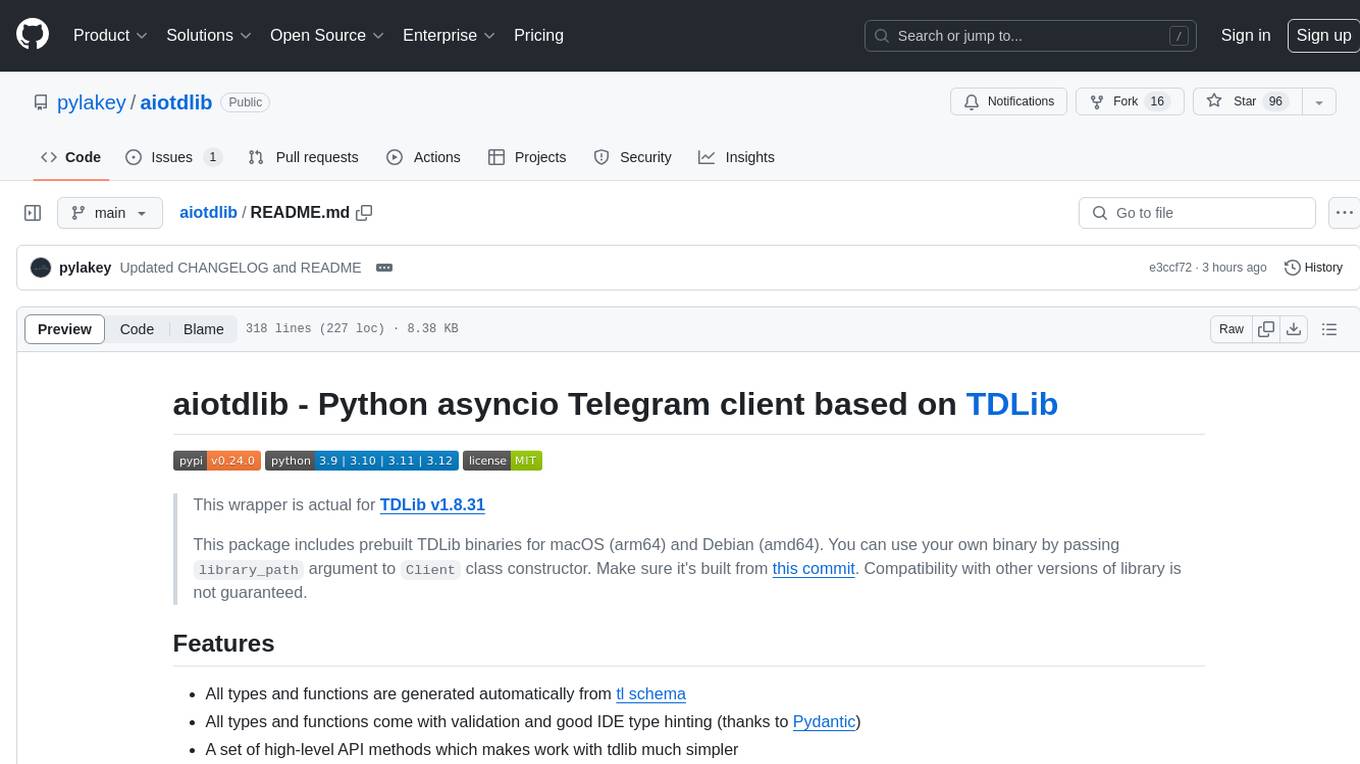

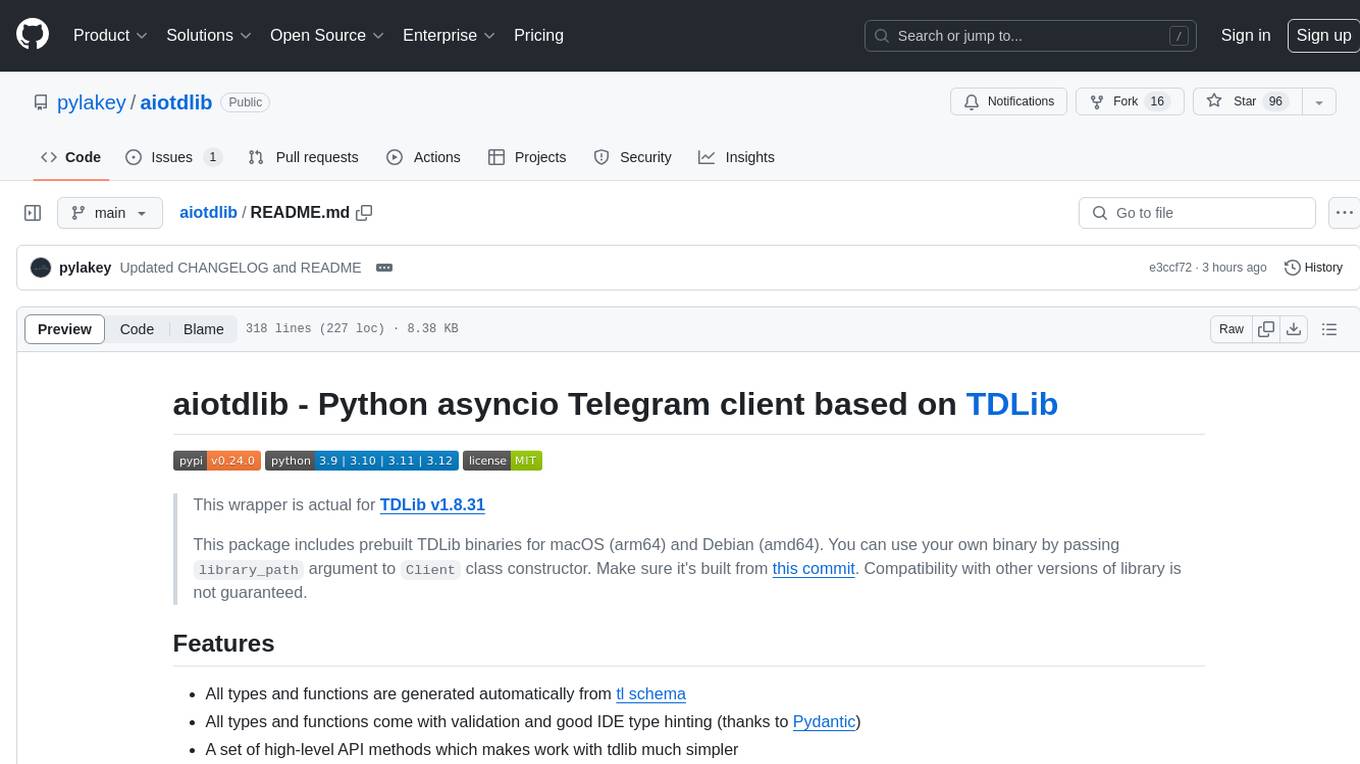

aiotdlib

Python asyncio Telegram client based on TDLib https://github.com/tdlib/td

Stars: 96

aiotdlib is a Python asyncio Telegram client based on TDLib. It generates types and functions automatically from the TL schema, adds validation and good IDE type hinting, and offers high-level API methods that simplify working with TDLib. The package includes prebuilt TDLib binaries for macOS (arm64) and Debian Bullseye (amd64). To use your own binary, pass the `library_path` argument to the `Client` class constructor. Compatibility with other versions of the library is not guaranteed. aiotdlib requires Python 3.9+, and you need an `api_id` and `api_hash`, which the Telegram docs explain how to obtain.

README:

aiotdlib - Python asyncio Telegram client based on TDLib

This wrapper is up to date with TDLib v1.8.30 (commit 958fed6e8e440afe87b57c98216a5c8d3f3caed8)

This package includes prebuilt TDLib binaries for macOS (arm64) and Debian Bullseye (amd64). You can use your own binary by passing the `library_path` argument to the `Client` class constructor. Make sure it's built from this commit. Compatibility with other versions of the library is not guaranteed.
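A custom binary is easier to troubleshoot if its path is checked before the client starts. The sketch below is a hypothetical helper (`resolve_library_path` is not part of aiotdlib) that confirms the file exists and returns an absolute path; the result would then be passed as the `library_path` argument described above.

```python
from pathlib import Path
from typing import Optional, Union


def resolve_library_path(candidate: Optional[Union[str, Path]]) -> Optional[Path]:
    """Return an absolute path to a custom TDLib binary, or None for the bundled one.

    Hypothetical helper for illustration only; it is not part of aiotdlib.
    """
    if candidate is None:
        # No custom binary requested: let the package fall back to its prebuilt one.
        return None

    path = Path(candidate).expanduser().resolve()
    if not path.is_file():
        raise FileNotFoundError(f"TDLib binary not found: {path}")
    return path
```

Failing early with `FileNotFoundError` gives a clearer message than a loader error raised deep inside the client. The check says nothing about which TDLib commit the binary was built from, so that still has to be verified separately.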

- All types and functions are generated automatically from tl schema

- All types and functions come with validation and good IDE type hinting (thanks to Pydantic)

- A set of high-level API methods that make working with TDLib much simpler

- Python 3.9+

- Get your `api_id` and `api_hash`. Read more in the Telegram docs

```shell
pip install aiotdlib
```

or, if you use Poetry:

```shell
poetry add aiotdlib
```

Basic example:

```python
import asyncio
import logging

from aiotdlib import Client, ClientSettings

API_ID = 123456
API_HASH = ""
PHONE_NUMBER = ""


async def main():
    client = Client(
        settings=ClientSettings(
            api_id=API_ID,
            api_hash=API_HASH,
            phone_number=PHONE_NUMBER
        )
    )

    async with client:
        me = await client.api.get_me()
        logging.info(f"Successfully logged in as {me.model_dump_json()}")


if __name__ == '__main__':
    logging.basicConfig(level=logging.INFO)
    asyncio.run(main())
```

Any parameter of the `Client` class can also be set via an environment variable with the `AIOTDLIB_` prefix.
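To make the naming rule concrete, here is a standard-library sketch of prefix-based lookup. It is an illustration only and not aiotdlib's implementation: `settings_from_env` is a hypothetical helper, and lower-casing the suffix is an assumption based on the `AIOTDLIB_API_ID` / `api_id` pairing used in this README.

```python
import os
from typing import Dict, Mapping, Optional

PREFIX = "AIOTDLIB_"


def settings_from_env(environ: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Collect variables that start with PREFIX and map them to parameter names.

    Hypothetical helper: the name mapping (strip prefix, lower-case) is assumed.
    """
    if environ is None:
        environ = os.environ
    return {
        name[len(PREFIX):].lower(): value
        for name, value in environ.items()
        if name.startswith(PREFIX)
    }


if __name__ == "__main__":
    example = {
        "AIOTDLIB_API_ID": "123456",
        "AIOTDLIB_BOT_TOKEN": "token",
        "HOME": "/root",  # ignored: no AIOTDLIB_ prefix
    }
    print(settings_from_env(example))
```

Values read this way are still strings, so a real settings layer would also have to convert fields such as `api_id` to the expected type.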

```python
import asyncio
import logging

from aiotdlib import Client


async def main():
    async with Client() as client:
        me = await client.api.get_me()
        logging.info(f"Successfully logged in as {me.model_dump_json()}")


if __name__ == '__main__':
    logging.basicConfig(level=logging.INFO)
    asyncio.run(main())
```

Then run it like this:

```shell
export AIOTDLIB_API_ID=123456
export AIOTDLIB_API_HASH=<my_api_hash>
export AIOTDLIB_BOT_TOKEN=<my_bot_token>
python main.py
```

Registering event handlers:

```python
import asyncio
import logging

from aiotdlib import Client, ClientSettings
from aiotdlib.api import API, BaseObject, UpdateNewMessage

API_ID = 123456
API_HASH = ""
PHONE_NUMBER = ""


async def on_update_new_message(client: Client, update: UpdateNewMessage):
    chat_id = update.message.chat_id

    # api field of client instance contains all TDLib functions, for example get_chat
    chat = await client.api.get_chat(chat_id)
    logging.info(f'Message received in chat {chat.title}')


async def any_event_handler(client: Client, update: BaseObject):
    logging.info(f'Event of type {update.ID} received')


async def main():
    client = Client(
        settings=ClientSettings(
            api_id=API_ID,
            api_hash=API_HASH,
            phone_number=PHONE_NUMBER
        )
    )

    # Registering event handler for 'updateNewMessage' event
    # You can register many handlers for certain event type
    client.add_event_handler(on_update_new_message, update_type=API.Types.UPDATE_NEW_MESSAGE)

    # You can register handler for special event type "*".
    # It will be called for each received event
    client.add_event_handler(any_event_handler, update_type=API.Types.ANY)

    async with client:
        # idle() will run client until it's stopped
        await client.idle()


if __name__ == '__main__':
    logging.basicConfig(level=logging.INFO)
    asyncio.run(main())
```

Handling bot commands:

```python
import logging

from aiotdlib import Client, ClientSettings
from aiotdlib.api import UpdateNewMessage

API_ID = 123456
API_HASH = ""
BOT_TOKEN = ""

bot = Client(
    settings=ClientSettings(
        api_id=API_ID,
        api_hash=API_HASH,
        bot_token=BOT_TOKEN
    )
)


# Note: bot_command_handler method is universal and can be used directly or as decorator
# Registering handler for '/help' command
@bot.bot_command_handler(command='help')
async def on_help_command(client: Client, update: UpdateNewMessage):
    # Each command handler registered with this method will update update.EXTRA field
    # with command related data: {'bot_command': 'help', 'bot_command_args': []}
    await client.send_text(update.message.chat_id, "I will help you!")


async def on_start_command(client: Client, update: UpdateNewMessage):
    # So this will print "{'bot_command': 'start', 'bot_command_args': []}"
    print(update.EXTRA)
    await client.send_text(update.message.chat_id, "Have a good day! :)")


async def on_custom_command(client: Client, update: UpdateNewMessage):
    # So when you send a message "/custom 1 2 3 test"
    # this will print "{'bot_command': 'custom', 'bot_command_args': ['1', '2', '3', 'test']}"
    print(update.EXTRA)


if __name__ == '__main__':
    logging.basicConfig(level=logging.INFO)
    # Registering handler for '/start' command
    bot.bot_command_handler(on_start_command, command='start')
    bot.bot_command_handler(on_custom_command, command='custom')
    bot.run()
```

Connecting through a proxy:

```python
import asyncio
import logging

from aiotdlib import Client
from aiotdlib import ClientSettings
from aiotdlib import ClientProxySettings
from aiotdlib import ClientProxyType

API_ID = 123456
API_HASH = ""
PHONE_NUMBER = ""


async def main():
    client = Client(
        settings=ClientSettings(
            api_id=API_ID,
            api_hash=API_HASH,
            phone_number=PHONE_NUMBER,
            proxy_settings=ClientProxySettings(
                host="10.0.0.1",
                port=3333,
                type=ClientProxyType.SOCKS5,
                username="aiotdlib",
                password="somepassword",
            )
        )
    )

    async with client:
        await client.idle()


if __name__ == '__main__':
    logging.basicConfig(level=logging.INFO)
    asyncio.run(main())
```

Using middlewares:

```python
import asyncio
import logging

from aiotdlib import Client, HandlerCallable, ClientSettings
from aiotdlib.api import API, BaseObject, UpdateNewMessage

API_ID = 12345
API_HASH = ""
PHONE_NUMBER = ""


async def some_pre_updates_work(event: BaseObject):
    logging.info(f"Before call all update handlers for event {event.ID}")


async def some_post_updates_work(event: BaseObject):
    logging.info(f"After call all update handlers for event {event.ID}")


# Note that call_next argument would always be passed as keyword argument,
# so it should be called "call_next" only.
async def my_middleware(client: Client, event: BaseObject, /, *, call_next: HandlerCallable):
    # Middlewares useful for opening database connections for example
    await some_pre_updates_work(event)

    try:
        await call_next(client, event)
    finally:
        await some_post_updates_work(event)


async def on_update_new_message(client: Client, update: UpdateNewMessage):
    logging.info('on_update_new_message handler called')


async def main():
    client = Client(
        settings=ClientSettings(
            api_id=API_ID,
            api_hash=API_HASH,
            phone_number=PHONE_NUMBER
        )
    )

    client.add_event_handler(on_update_new_message, update_type=API.Types.UPDATE_NEW_MESSAGE)

    # Registering middleware.
    # Note that middleware would be called for EVERY EVENT.
    # Don't use them for long-running tasks as it could be heavy performance hit
    # You can add as many middlewares as you want.
    # They would be called in order you've added them
    client.add_middleware(my_middleware)

    async with client:
        await client.idle()


if __name__ == '__main__':
    logging.basicConfig(level=logging.INFO)
    asyncio.run(main())
```

This project is licensed under the terms of the MIT license.
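The middleware example above relies on two rules stated in its comments: middlewares run in the order they were added, and each one receives the next step as the keyword argument `call_next`. The following is a minimal asyncio sketch of how such a chain can be composed. It uses only the standard library, and `build_chain` and the handler names are hypothetical; aiotdlib's own dispatcher may be implemented differently.

```python
import asyncio
from functools import partial
from typing import Any, Awaitable, Callable, List

Handler = Callable[[Any, Any], Awaitable[None]]


def build_chain(middlewares: List[Callable[..., Awaitable[None]]], handler: Handler) -> Handler:
    """Wrap handler so that middlewares run in the order they were added."""
    call_next = handler
    # Wrap from the last middleware inwards so the first one added runs first.
    for middleware in reversed(middlewares):
        call_next = partial(middleware, call_next=call_next)
    return call_next


calls: List[str] = []


async def first(client, event, /, *, call_next):
    calls.append("first:before")
    await call_next(client, event)
    calls.append("first:after")


async def second(client, event, /, *, call_next):
    calls.append("second:before")
    await call_next(client, event)
    calls.append("second:after")


async def handler(client, event):
    calls.append(f"handler:{event}")


# A stand-in client (None) and event name are enough to show the call order.
asyncio.run(build_chain([first, second], handler)(None, "updateNewMessage"))
print(calls)
# ['first:before', 'second:before', 'handler:updateNewMessage', 'second:after', 'first:after']
```

Because every event passes through the whole chain, any slow step in a middleware delays all handlers behind it, which is why the example warns against long-running work there.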


Similar Open Source Tools

acte

Acte is a framework designed to build GUI-like tools for AI Agents. It aims to address the issues of cognitive load and degrees of freedom when interacting with multiple APIs in complex scenarios. By providing a graphical user interface (GUI) for Agents, Acte helps reduce cognitive load and constrains interaction, similar to how humans interact with computers through GUIs. The tool offers APIs for starting new sessions, executing actions, and displaying screens, accessible via HTTP requests or the SessionManager class.

aio-pika

Aio-pika is a wrapper around aiormq for asyncio and humans. It provides a completely asynchronous API, object-oriented API, transparent auto-reconnects with complete state recovery, Python 3.7+ compatibility, transparent publisher confirms support, transactions support, and complete type-hints coverage.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

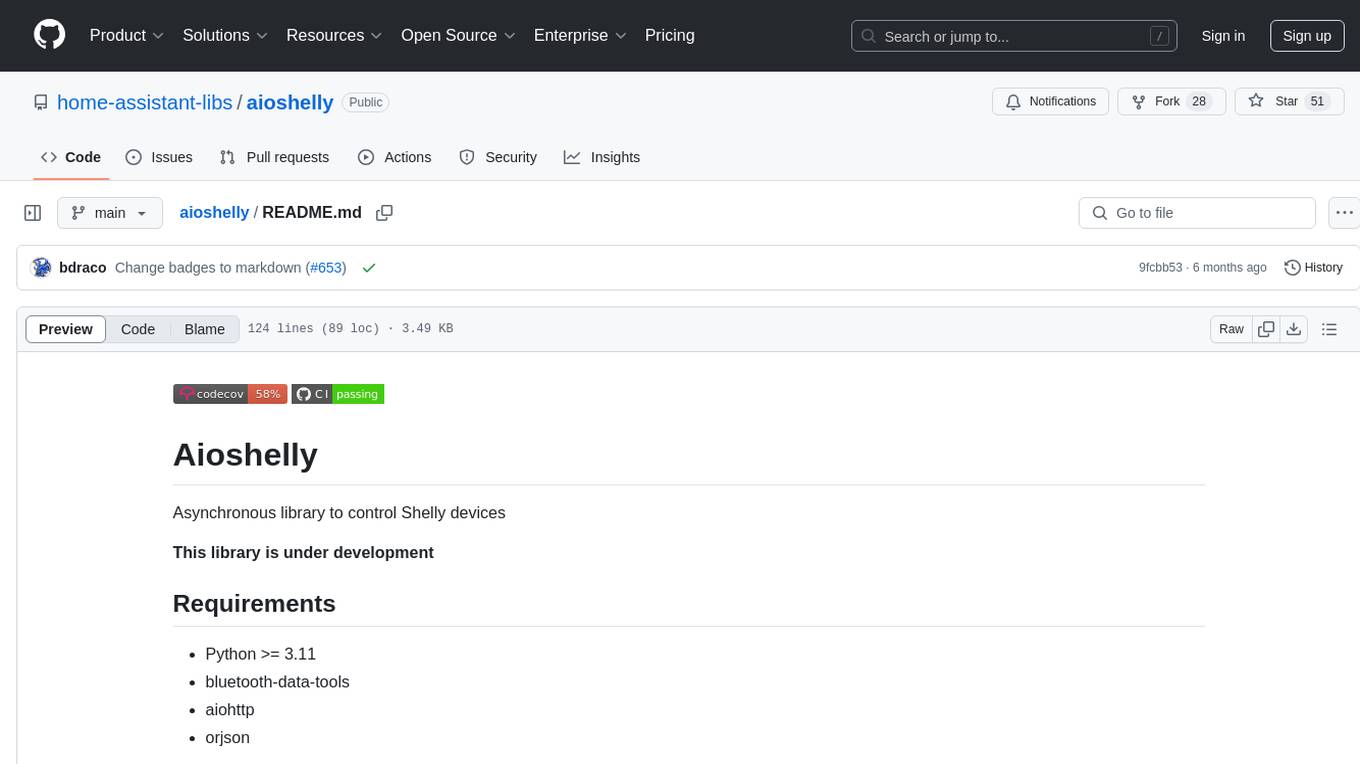

aioshelly

Aioshelly is an asynchronous library designed to control Shelly devices. It is currently under development and requires Python version 3.11 or higher, along with dependencies like bluetooth-data-tools, aiohttp, and orjson. The library provides examples for interacting with Gen1 devices using CoAP protocol and Gen2/Gen3 devices using RPC and WebSocket protocols. Users can easily connect to Shelly devices, retrieve status information, and perform various actions through the provided APIs. The repository also includes example scripts for quick testing and usage guidelines for contributors to maintain consistency with the Shelly API.

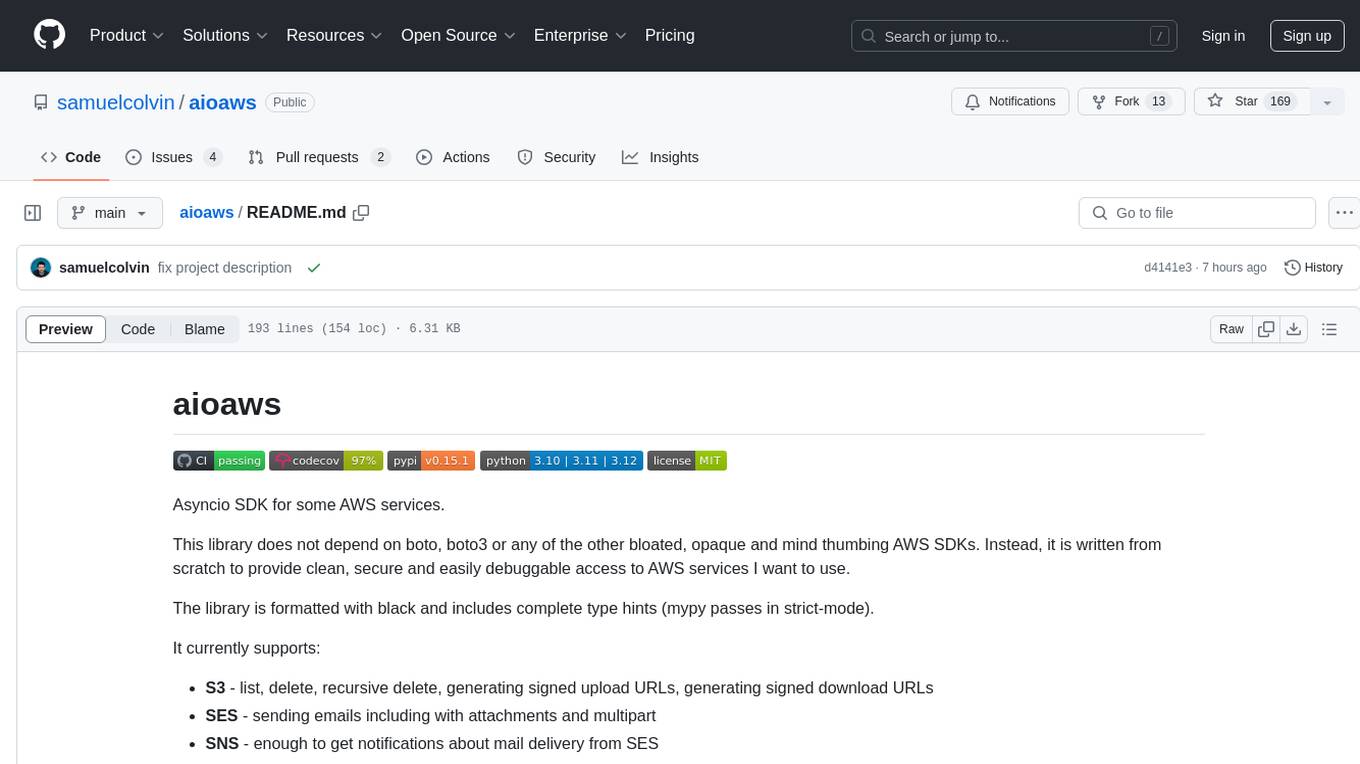

aioaws

Aioaws is an asyncio SDK for some AWS services, providing clean, secure, and easily debuggable access to services like S3, SES, and SNS. It is written from scratch without dependencies on boto or boto3, formatted with black, and includes complete type hints. The library supports various functionalities such as listing, deleting, and generating signed URLs for S3 files, sending emails with attachments and multipart content via SES, and receiving notifications about mail delivery from SES. It also offers AWS Signature Version 4 authentication and has minimal dependencies like aiofiles, cryptography, httpx, and pydantic.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

capsule

Capsule is a secure and durable runtime for AI agents, designed to coordinate tasks in isolated environments. It allows for long-running workflows, large-scale processing, autonomous decision-making, and multi-agent systems. Tasks run in WebAssembly sandboxes with isolated execution, resource limits, automatic retries, and lifecycle tracking. It enables safe execution of untrusted code within AI agent systems.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

aiocache

Aiocache is an asyncio cache library that supports multiple backends such as memory, redis, and memcached. It provides a simple interface for functions like add, get, set, multi_get, multi_set, exists, increment, delete, clear, and raw. Users can easily install and use the library for caching data in Python applications. Aiocache allows for easy instantiation of caches and setup of cache aliases for reusing configurations. It also provides support for backends, serializers, and plugins to customize cache operations. The library offers detailed documentation and examples for different use cases and configurations.

java-genai

Java idiomatic SDK for the Gemini Developer APIs and Vertex AI APIs. The SDK provides a Client class for interacting with both APIs, allowing seamless switching between the 2 backends without code rewriting. It supports features like generating content, embedding content, generating images, upscaling images, editing images, and generating videos. The SDK also includes options for setting API versions, HTTP request parameters, client behavior, and response schemas.

flyte-sdk

Flyte 2 SDK is a pure Python tool for type-safe, distributed orchestration of agents, ML pipelines, and more. It allows users to write data pipelines, ML training jobs, and distributed compute in Python without any DSL constraints. With features like async-first parallelism and fine-grained observability, Flyte 2 offers a seamless workflow experience. Users can leverage core concepts like TaskEnvironments for container configuration, pure Python workflows for flexibility, and async parallelism for distributed execution. Advanced features include sub-task observability with tracing and remote task execution. The tool also provides native Jupyter integration for running and monitoring workflows directly from notebooks. Configuration and deployment are made easy with configuration files and commands for deploying and running workflows. Flyte 2 is licensed under the Apache 2.0 License.

com.openai.unity

com.openai.unity is an OpenAI package for Unity that allows users to interact with OpenAI's API through RESTful requests. It is independently developed and not an official library affiliated with OpenAI. Users can fine-tune models, create assistants, chat completions, and more. The package requires Unity 2021.3 LTS or higher and can be installed via Unity Package Manager or Git URL. Various features like authentication, Azure OpenAI integration, model management, thread creation, chat completions, audio processing, image generation, file management, fine-tuning, batch processing, embeddings, and content moderation are available.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

OpenAI-DotNet

OpenAI-DotNet is a simple C# .NET client library for OpenAI to use through their RESTful API. It is independently developed and not an official library affiliated with OpenAI. Users need an OpenAI API account to utilize this library. The library targets .NET 6.0 and above, working across various platforms like console apps, winforms, wpf, asp.net, etc., and on Windows, Linux, and Mac. It provides functionalities for authentication, interacting with models, assistants, threads, chat, audio, images, files, fine-tuning, embeddings, and moderations.

For similar tasks

writer-framework

Writer Framework is an open-source framework for creating AI applications. It allows users to build user interfaces using a visual editor and write the backend code in Python. The framework is fast, flexible, and developer-friendly, providing separation of concerns between UI and business logic. It is reactive and state-driven, allowing for highly customizable elements without the need for CSS. Writer Framework is designed to be fast, with minimal overhead on Python code, and uses WebSockets for synchronization. It is contained in a standard Python package, supports local code editing with instant refreshes, and enables editing the UI while the app is running.

writer-framework

Writer Framework is an open-source framework for creating AI applications. It allows users to build user interfaces using a visual editor and write the backend code in Python. The framework is fast, flexible, and provides separation of concerns between UI and business logic. It is reactive and state-driven, highly customizable without requiring CSS, fast in event handling, developer-friendly with easy installation and quick start options, and contains full documentation for using its AI module and deployment options.

mcp-svelte-docs

A Model Context Protocol (MCP) server providing authoritative Svelte 5 and SvelteKit definitions extracted directly from TypeScript declarations. Get precise syntax, parameters, and examples for all Svelte 5 concepts through a single, unified interface. The server offers a 'svelte_definition' tool that covers various Svelte 5 runes, modern features, event handling, migration guidance, TypeScript interfaces, and advanced patterns. It aims to provide up-to-date, type-safe, and comprehensive documentation for Svelte developers.

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

venom

Venom is a high-performance system developed with JavaScript for creating WhatsApp bots. It supports any kind of interaction, such as customer service, media sending, AI-based sentence recognition, and all types of design architecture for WhatsApp.

Whatsapp-Ai-BOT

This WhatsApp AI chatbot is built using NodeJS technology and powered by OpenAI. It leverages the advanced deep learning models of ChatGPT, Playground, and DALL·E from OpenAI to provide a unique text-based and image-based conversational experience for users. The bot has two main features: ChatGPT (text) and DALL-E (Text To Image). To use these features, simply use the commands /ai, /img, and /sc respectively. The bot's code is encrypted to protect it from prying eyes, but the key to unlock the full potential of this amazing creation can be obtained by contacting the developer. The bot is free to use, but a PRIME version is available with additional features such as history mode, prime support, and customizable options.

IBRAHIM-AI-10.10

BMW MD is a simple WhatsApp user BOT created by Ibrahim Tech. It allows users to scan pairing codes or QR codes to connect to WhatsApp and deploy the bot on Heroku. The bot can be used to perform various tasks such as sending messages, receiving messages, and managing contacts. It is released under the MIT License and contributions are welcome.

For similar jobs

EDDI

E.D.D.I (Enhanced Dialog Driven Interface) is an enterprise-certified chatbot middleware that offers advanced prompt and conversation management for Conversational AI APIs. Developed in Java using Quarkus, it is lean, RESTful, scalable, and cloud-native. E.D.D.I is highly scalable and designed to efficiently manage conversations in AI-driven applications, with seamless API integration capabilities. Notable features include configurable NLP and Behavior rules, support for multiple chatbots running concurrently, and integration with MongoDB, OAuth 2.0, and HTML/CSS/JavaScript for UI. The project requires Java 21, Maven 3.8.4, and MongoDB >= 5.0 to run. It can be built as a Docker image and deployed using Docker or Kubernetes, with additional support for integration testing and monitoring through Prometheus and Kubernetes endpoints.

empower-functions

Empower Functions is a family of large language models (LLMs) that provide GPT-4 level capabilities for real-world 'tool using' use cases. These models offer compatibility support to be used as drop-in replacements, enabling interactions with external APIs by recognizing when a function needs to be called and generating JSON containing necessary arguments based on user inputs. This capability is crucial for building conversational agents and applications that convert natural language into API calls, facilitating tasks such as weather inquiries, data extraction, and interactions with knowledge bases. The models can handle multi-turn conversations, choose between tools or standard dialogue, ask for clarification on missing parameters, integrate responses with tool outputs in a streaming fashion, and efficiently execute multiple functions either in parallel or sequentially with dependencies.

amadeus-java

Amadeus Java SDK provides a rich set of APIs for the travel industry, allowing developers to access various functionalities such as flight search, booking, airport information, and more. The SDK simplifies interaction with the Amadeus API by providing self-contained code examples and detailed documentation. Developers can easily make API calls, handle responses, and utilize features like pagination and logging. The SDK supports various endpoints for tasks like flight search, booking management, airport information retrieval, and travel analytics. It also offers functionalities for hotel search, booking, and sentiment analysis. Overall, the Amadeus Java SDK is a comprehensive tool for integrating Amadeus APIs into Java applications.

llms

LLMs is a universal LLM API transformation server designed to standardize requests and responses between different LLM providers such as Anthropic, Gemini, and Deepseek. It uses a modular transformer system to handle provider-specific API formats, supporting real-time streaming responses and converting data into standardized formats. The server transforms requests and responses to and from unified formats, enabling seamless communication between various LLM providers.

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can:

- Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action.

- Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed.

- Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment.

- Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules.

- Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks.

- GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.