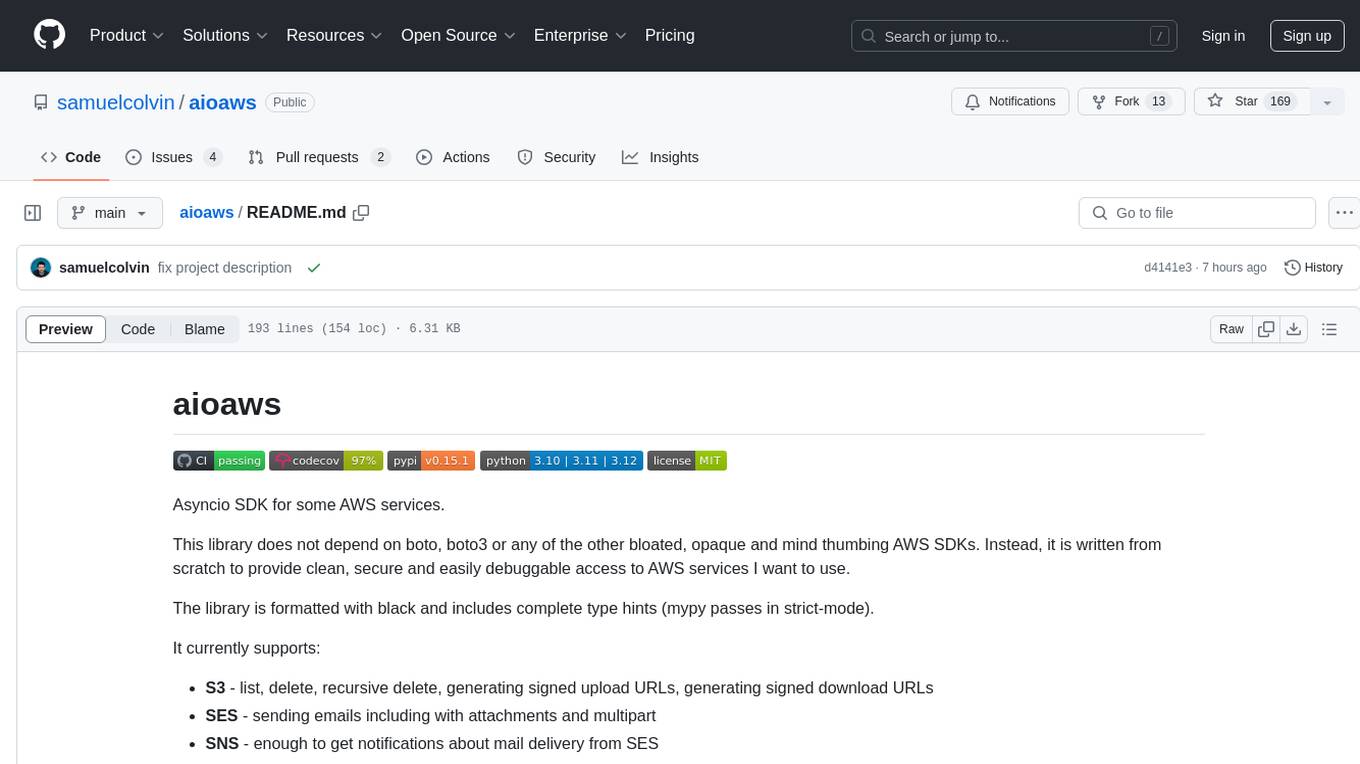

aioaws

Asyncio-compatible SDK for AWS services.

Stars: 168

Aioaws is an asyncio SDK for some AWS services, providing clean, secure, and easily debuggable access to services like S3, SES, and SNS. It is written from scratch without dependencies on boto or boto3, formatted with black, and includes complete type hints. The library supports various functionalities such as listing, deleting, and generating signed URLs for S3 files, sending emails with attachments and multipart content via SES, and receiving notifications about mail delivery from SES. It also offers AWS Signature Version 4 authentication and has minimal dependencies like aiofiles, cryptography, httpx, and pydantic.

README:

Asyncio SDK for some AWS services.

This library does not depend on boto, boto3 or any of the other bloated, opaque and mind-numbing AWS SDKs. Instead, it is written from scratch to provide clean, secure and easily debuggable access to the AWS services I want to use.

The library is formatted with black and includes complete type hints (mypy passes in strict mode).

It currently supports:

- S3 - list, delete, recursive delete, generating signed upload URLs, generating signed download URLs

- SES - sending emails including with attachments and multipart

- SNS - enough to get notifications about mail delivery from SES

- AWS Signature Version 4 authentication for any AWS service (this is the only clean & modern implementation of AWS4 I know of in Python, see core.py)
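
The key-derivation step at the heart of AWS Signature Version 4 can be sketched with the standard library alone. This is a minimal illustration of the published algorithm, not the code in core.py; the function names `derive_signing_key` and `sign` are hypothetical, and the string to sign is a placeholder rather than a real canonical request.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    # One HMAC-SHA256 step, returning the raw digest
    return hmac.new(key, msg.encode(), hashlib.sha256).digest()


def derive_signing_key(secret_key: str, date: str, region: str, service: str) -> bytes:
    # Hypothetical helper: chain HMACs over date, region, service and the
    # fixed terminator 'aws4_request', starting from 'AWS4' + secret key
    k_date = _hmac_sha256(('AWS4' + secret_key).encode(), date)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, 'aws4_request')


def sign(signing_key: bytes, string_to_sign: str) -> str:
    # The final signature is the hex HMAC-SHA256 of the string to sign
    return hmac.new(signing_key, string_to_sign.encode(), hashlib.sha256).hexdigest()


signing_key = derive_signing_key('<secret key>', '20240101', 'us-east-1', 's3')
# Placeholder string to sign; a real one is built from the canonical request
print(sign(signing_key, 'AWS4-HMAC-SHA256\n<timestamp>\n<scope>\n<request hash>'))
```

Because the derived key depends on the date, region and service, a leaked signature cannot be reused for another day or another service.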

The only dependencies of aioaws are:

- aiofiles - for asynchronous reading of files

- cryptography - for verifying SNS signatures

- httpx - for HTTP requests

- pydantic - for validating responses

Install with pip:

    pip install aioaws

Example S3 usage:

    import asyncio

    from aioaws.s3 import S3Client, S3Config
    from httpx import AsyncClient

    # requires `pip install devtools`
    from devtools import debug


    async def s3_demo(client: AsyncClient):
        s3 = S3Client(client, S3Config('<access key>', '<secret key>', '<region>', 'my_bucket_name.com'))

        # upload a file:
        await s3.upload('path/to/upload-to.txt', b'this the content')

        # list all files in a bucket
        files = [f async for f in s3.list()]
        debug(files)
        """
        [
            S3File(
                key='path/to/upload-to.txt',
                last_modified=datetime.datetime(...),
                size=16,
                e_tag='...',
                storage_class='STANDARD',
            ),
        ]
        """

        # list all files with a given prefix in a bucket
        files = [f async for f in s3.list('path/to/')]
        debug(files)

        # # delete a file
        # await s3.delete('path/to/file.txt')
        # # delete two files
        # await s3.delete('path/to/file1.txt', 'path/to/file2.txt')
        # delete recursively based on a prefix
        await s3.delete_recursive('path/to/')

        # generate an upload link suitable for sending to a browser to enable
        # secure direct file upload (see below)
        upload_data = s3.signed_upload_url(
            path='path/to/',
            filename='demo.png',
            content_type='image/png',
            size=123,
        )
        debug(upload_data)
        """
        {
            'url': 'https://my_bucket_name.com/',
            'fields': {
                'Key': 'path/to/demo.png',
                'Content-Type': 'image/png',
                'AWSAccessKeyId': '<access key>',
                'Content-Disposition': 'attachment; filename="demo.png"',
                'Policy': '...',
                'Signature': '...',
            },
        }
        """

        # generate a temporary link to allow yourself or a client to download a file
        download_url = s3.signed_download_url('path/to/demo.png', max_age=60)
        print(download_url)
        #> https://my_bucket_name.com/path/to/demo.png?....


    async def main():
        async with AsyncClient(timeout=30) as client:
            await s3_demo(client)


    asyncio.run(main())

The upload_data shown in the above example can be used in JS with something like this:

    const formData = new FormData()
    for (let [name, value] of Object.entries(upload_data.fields)) {
      formData.append(name, value)
    }
    const fileField = document.querySelector('input[type="file"]')
    formData.append('file', fileField.files[0])

    const response = await fetch(upload_data.url, {method: 'POST', body: formData})

(In the request to get upload_data, you would need to provide the file size and content type for the upload shown here to succeed.)
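
The reason the size and content type must be known up front is that a signed S3 POST policy pins them as conditions. The sketch below shows how such a policy could be built and signed with the standard library. It assumes the legacy HMAC-SHA1 POST signing scheme suggested by the AWSAccessKeyId, Policy and Signature field names. The helper names `build_post_policy` and `sign_policy` and the exact set of conditions are hypothetical and are not taken from aioaws.

```python
import base64
import hashlib
import hmac
import json
from datetime import datetime, timedelta, timezone


def build_post_policy(bucket: str, key: str, content_type: str, size: int, max_age: int = 60) -> str:
    # Hypothetical helper: a POST policy is a JSON document listing an expiry
    # time and the conditions the uploaded form must satisfy
    expiration = datetime.now(timezone.utc) + timedelta(seconds=max_age)
    policy = {
        'expiration': expiration.strftime('%Y-%m-%dT%H:%M:%SZ'),
        'conditions': [
            {'bucket': bucket},
            {'key': key},
            {'Content-Type': content_type},
            # min and max are equal, so only a file of exactly `size` bytes is accepted
            ['content-length-range', size, size],
        ],
    }
    # The policy travels in the form as base64-encoded JSON
    return base64.b64encode(json.dumps(policy).encode()).decode()


def sign_policy(secret_key: str, policy_b64: str) -> str:
    # Assumed legacy scheme: base64(HMAC-SHA1(secret key, base64 policy))
    digest = hmac.new(secret_key.encode(), policy_b64.encode(), hashlib.sha1).digest()
    return base64.b64encode(digest).decode()


policy_b64 = build_post_policy('my_bucket_name.com', 'path/to/demo.png', 'image/png', 123)
signature = sign_policy('<secret key>', policy_b64)
print(json.loads(base64.b64decode(policy_b64))['conditions'])
```

If the browser submits a file with a different size or content type, the form no longer matches the signed conditions and S3 rejects the upload.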

To send an email with SES:

    import asyncio
    from pathlib import Path

    from httpx import AsyncClient
    from aioaws.ses import SesConfig, SesClient, SesRecipient, SesAttachment


    async def ses_demo(client: AsyncClient):
        ses_client = SesClient(client, SesConfig('<access key>', '<secret key>', '<region>'))

        message_id = await ses_client.send_email(
            SesRecipient('[email protected]', 'Sender', 'Name'),
            'This is the subject',
            [SesRecipient('[email protected]', 'John', 'Doe')],
            'this is the plain text body',
            html_body='<b>This is the HTML body.</b>',
            bcc=[SesRecipient(...)],
            attachments=[
                SesAttachment(b'this is content', 'attachment-name.txt', 'text/plain'),
                SesAttachment(Path('foobar.png')),
            ],
            unsubscribe_link='https://example.com/unsubscribe',
            configuration_set='SES configuration set',
            message_tags={'ses': 'tags', 'go': 'here'},
        )
        print('SES message ID:', message_id)


    async def main():
        async with AsyncClient() as client:
            await ses_demo(client)


    asyncio.run(main())

Receiving data about SES webhooks from SNS (assuming you're using FastAPI):

    from aioaws.ses import SesWebhookInfo
    from aioaws.sns import SnsWebhookError
    # requires `pip install devtools`
    from devtools import debug
    from fastapi import Request
    from httpx import AsyncClient

    async_client = AsyncClient...


    @app.post('/ses-webhook/', include_in_schema=False)
    async def ses_webhook(request: Request):
        request_body = await request.body()
        try:
            webhook_info = await SesWebhookInfo.build(request_body, async_client)
        except SnsWebhookError as e:
            debug(message=e.message, details=e.details, headers=e.headers)
            raise ...

        debug(webhook_info)
        ...

See here for more information about what's provided in a SesWebhookInfo.
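
To make the shape of the incoming data concrete, here is a minimal standard-library sketch of unwrapping an SES notification from its SNS envelope. It deliberately skips signature verification (which is why aioaws depends on cryptography), so it should not be used as-is on untrusted input. The function name `parse_ses_notification` and the returned keys are hypothetical and do not mirror the fields of SesWebhookInfo.

```python
import json


def parse_ses_notification(request_body: bytes) -> dict:
    # The SNS envelope is JSON; its 'Message' field is itself a JSON string
    envelope = json.loads(request_body)
    if envelope.get('Type') != 'Notification':
        # Other SNS types (e.g. 'SubscriptionConfirmation') need separate handling
        raise ValueError(f"unexpected SNS message type: {envelope.get('Type')!r}")
    message = json.loads(envelope['Message'])
    return {
        'notification_type': message.get('notificationType'),
        'message_id': message.get('mail', {}).get('messageId'),
    }


# Hand-written example body, trimmed to the fields used above
example_body = json.dumps(
    {
        'Type': 'Notification',
        'Message': json.dumps(
            {'notificationType': 'Delivery', 'mail': {'messageId': 'example-message-id'}}
        ),
    }
).encode()

print(parse_ses_notification(example_body))
```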

Similar Open Source Tools

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

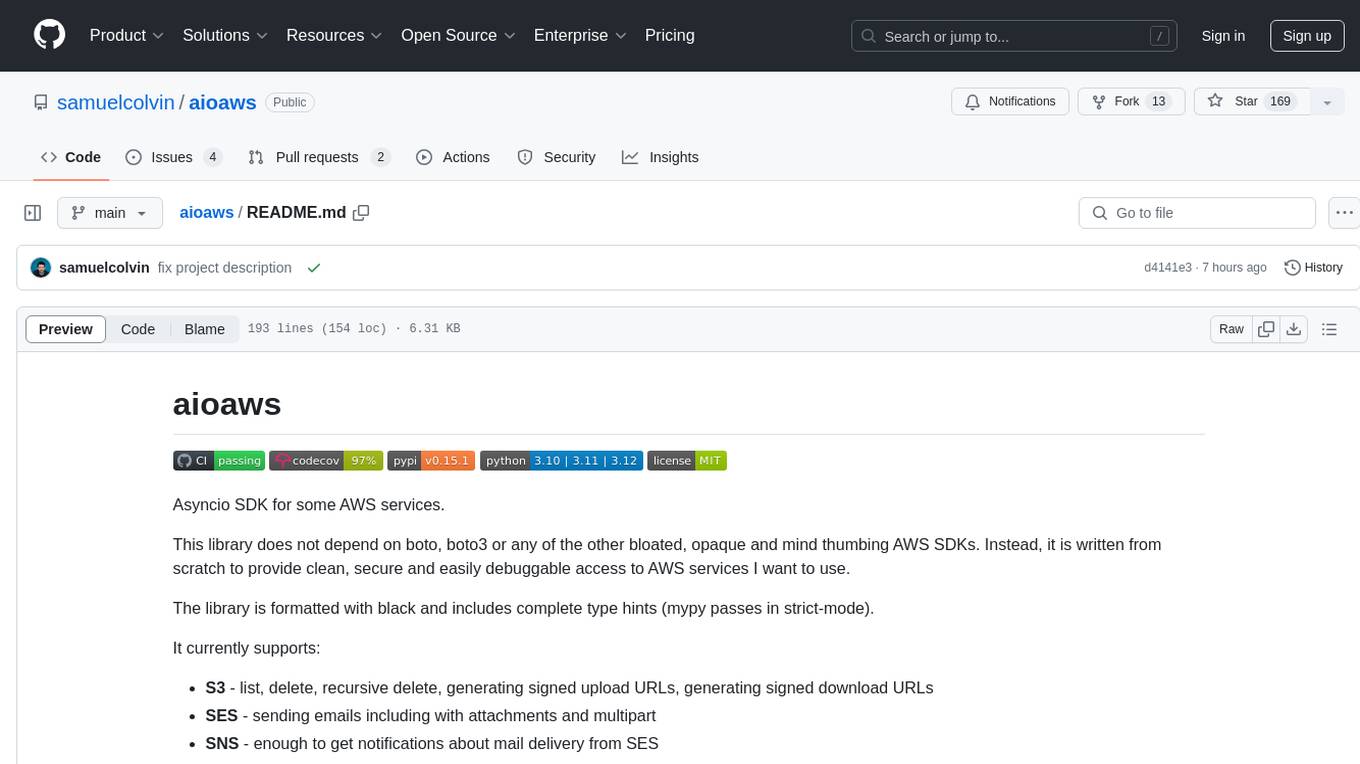

amadeus-node

Amadeus Node SDK provides a rich set of APIs for the travel industry. It allows developers to interact with various endpoints related to flights, hotels, activities, and more. The SDK simplifies making API calls, handling promises, pagination, logging, and debugging. It supports a wide range of functionalities such as flight search, booking, seat maps, flight status, points of interest, hotel search, sentiment analysis, trip predictions, and more. Developers can easily integrate the SDK into their Node.js applications to access Amadeus APIs and build travel-related applications.

volga

Volga is a general purpose real-time data processing engine in Python for modern AI/ML systems. It aims to be a Python-native alternative to Flink/Spark Streaming with extended functionality for real-time AI/ML workloads. It provides a hybrid push+pull architecture, Entity API for defining data entities and feature pipelines, DataStream API for general data processing, and customizable data connectors. Volga can run on a laptop or a distributed cluster, making it suitable for building custom real-time AI/ML feature platforms or general data pipelines without relying on third-party platforms.

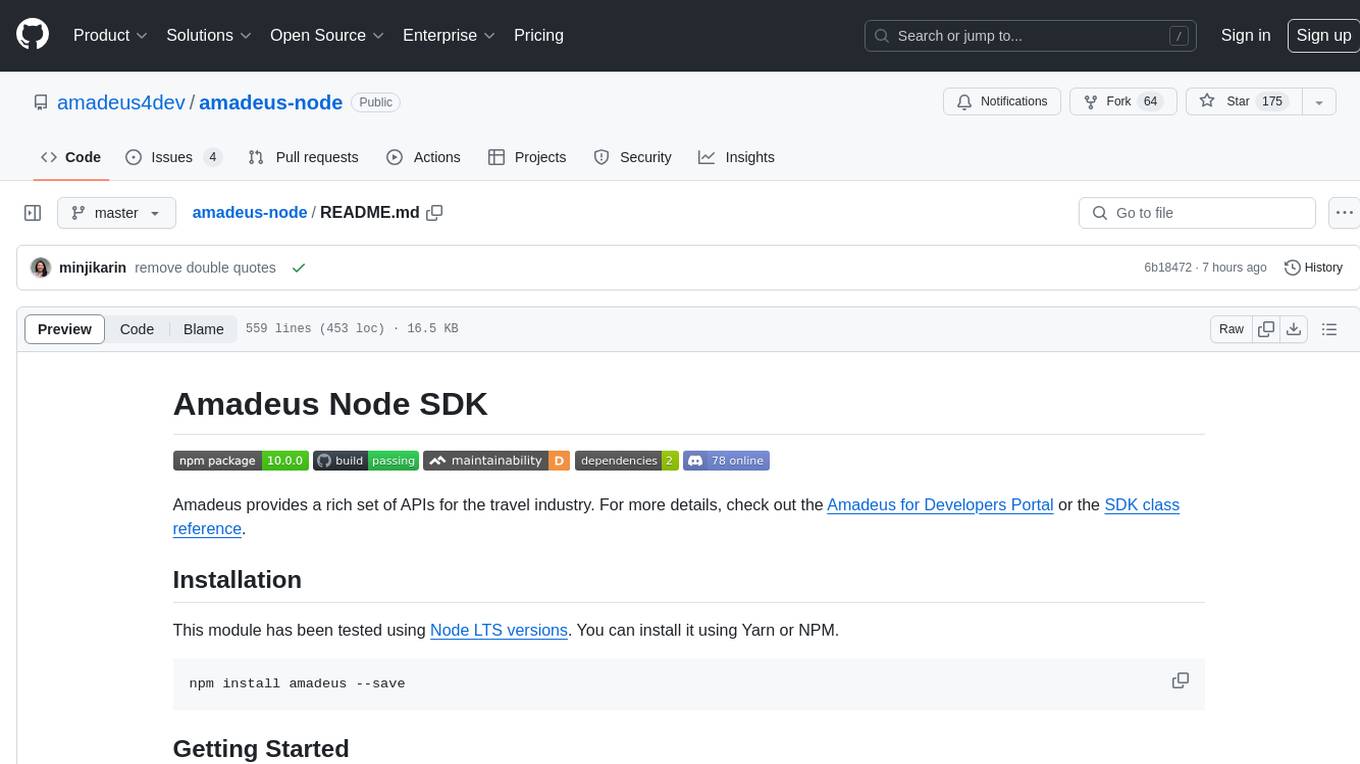

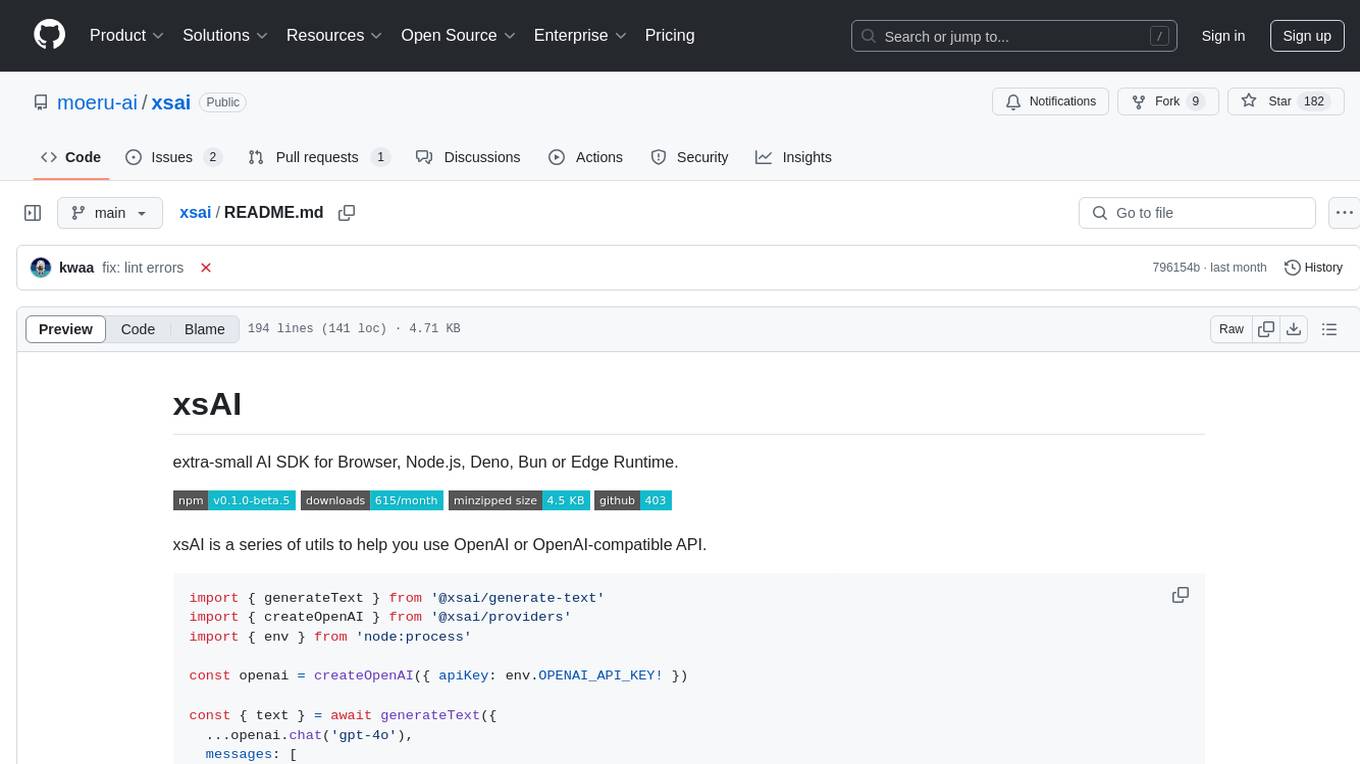

xsai

xsAI is an extra-small AI SDK designed for Browser, Node.js, Deno, Bun, or Edge Runtime. It provides a series of utils to help users utilize OpenAI or OpenAI-compatible APIs. The SDK is lightweight and efficient, using a variety of methods to minimize its size. It is runtime-agnostic, working seamlessly across different environments without depending on Node.js Built-in Modules. Users can easily install specific utils like generateText or streamText, and leverage tools like weather to perform tasks such as getting the weather in a location.

laravel-slower

Laravel Slower is a powerful package designed for Laravel developers to optimize the performance of their applications by identifying slow database queries and providing AI-driven suggestions for optimal indexing strategies and performance improvements. It offers actionable insights for debugging and monitoring database interactions, enhancing efficiency and scalability.

ai-nodejs

This repository serves as a companion to the Build AI-Powered Apps with OpenAI and Node.js course on Frontend Masters. It includes course notes and provides alternative approaches for deprecated Langchain methods by installing the Langchain community module and importing loaders for document processing from PDFs and YouTube videos.

amadeus-python

Amadeus Python SDK provides a rich set of APIs for the travel industry. It allows users to make API calls for various travel-related tasks such as flight offers search, hotel bookings, trip purpose prediction, flight delay prediction, airport on-time performance, travel recommendations, and more. The SDK conveniently maps API paths to similar paths, making it easy to interact with the Amadeus APIs. Users can initialize the client with their API key and secret, make API calls, handle responses, and enable logging for debugging purposes. The SDK documentation includes detailed information about each SDK method, arguments, and return types.

langfun

Langfun is a Python library that aims to make language models (LM) fun to work with. It enables a programming model that flows naturally, resembling the human thought process. Langfun emphasizes the reuse and combination of language pieces to form prompts, thereby accelerating innovation. Unlike other LM frameworks, which feed program-generated data into the LM, langfun takes a distinct approach: It starts with natural language, allowing for seamless interactions between language and program logic, and concludes with natural language and optional structured output. Consequently, langfun can aptly be described as Language as functions, capturing the core of its methodology.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

aimeos-headless

Aimeos headless distribution is an ultra-fast, cloud-native, and API-first headless ecommerce solution for Laravel. It offers a full-featured e-commerce package with features like JSON REST API, GraphQL API, multi-vendor support, subscriptions, block/tier pricing, admin backend, and more. The distribution is highly customizable, extensible, and suitable for multi-tenant e-commerce SaaS solutions. It supports multiple languages, AI-based text translation, and provides secure and high-quality source code. Aimeos is designed for AWS, Google, Azure, and Kubernetes based clouds, and can handle a wide range of products efficiently.

funcchain

Funcchain is a Python library that allows you to easily write cognitive systems by leveraging Pydantic models as output schemas and LangChain in the backend. It provides a seamless integration of LLMs into your apps, utilizing OpenAI Functions or LlamaCpp grammars (json-schema-mode) for efficient structured output. Funcchain compiles the Funcchain syntax into LangChain runnables, enabling you to invoke, stream, or batch process your pipelines effortlessly.

ShannonBase

ShannonBase is a HTAP database provided by Shannon Data AI, designed for big data and AI. It extends MySQL with native embedding support, machine learning capabilities, a JavaScript engine, and a columnar storage engine. ShannonBase supports multimodal data types and natively integrates LightGBM for training and prediction. It leverages embedding algorithms and vector data type for ML/RAG tasks, providing Zero Data Movement, Native Performance Optimization, and Seamless SQL Integration. The tool includes a lightweight JavaScript engine for writing stored procedures in SQL or JavaScript.

aioshelly

Aioshelly is an asynchronous library designed to control Shelly devices. It is currently under development and requires Python version 3.11 or higher, along with dependencies like bluetooth-data-tools, aiohttp, and orjson. The library provides examples for interacting with Gen1 devices using CoAP protocol and Gen2/Gen3 devices using RPC and WebSocket protocols. Users can easily connect to Shelly devices, retrieve status information, and perform various actions through the provided APIs. The repository also includes example scripts for quick testing and usage guidelines for contributors to maintain consistency with the Shelly API.

ai-cms-grapesjs

The Aimeos GrapesJS CMS extension provides a simple to use but powerful page editor for creating content pages based on extensible components. It integrates seamlessly with Laravel applications and allows users to easily manage and display CMS content. The tool also supports Google reCAPTCHA v3 for enhanced security. Users can create and customize pages with various components and manage multi-language setups effortlessly. The extension simplifies the process of creating and managing content pages, making it ideal for developers and businesses looking to enhance their website's content management capabilities.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

For similar tasks

OctoPrint-OctoEverywhere

OctoEverywhere is a cloud-based tool designed to provide free, private, and unlimited remote access to OctoPrint and Klipper printers' web control portals from anywhere. It offers features such as free AI failure detection, webcam streaming, mobile app integration, live streaming, printer notifications, secure portal sharing, plugin functionality, and multicam support. With a high Trustpilot rating and a large user base, OctoEverywhere aims to empower the maker community with easy and efficient printer management.

shark-chat-js

Shark Chat is a feature-rich chat application built with Trpc, Tailwind CSS, Ably, Redis, Cloudinary, Drizzle ORM, and Next.js. It allows users to create, update, and delete chat groups, send messages with markdown support, reference messages, embed links, send images/files, have direct messages, manage group members, upload images, receive notifications, use AI-powered features, delete accounts, and switch between light and dark modes. The project is 100% TypeScript and can be played with online or locally after setting up various third-party services.

jd_scripts

jd_scripts is a repository containing scripts for automating various tasks on the JD platform. The scripts provide instructions for setting up and using the tools to enhance user experience and efficiency in managing JD accounts and assets. Users can automate processes such as receiving notifications, redeeming rewards, participating in group purchases, and monitoring ticket availability. The repository also includes resources for optimizing performance and security measures to safeguard user accounts. With a focus on simplifying interactions with the JD platform, jd_scripts offers a comprehensive solution for maximizing benefits and convenience for JD users.

ai-trend-publish

AI TrendPublish is an AI-based trend discovery and content publishing system that supports multi-source data collection, intelligent summarization, and automatic publishing to WeChat official accounts. It features data collection from various sources, AI-powered content processing using DeepseekAI Together, key information extraction, intelligent title generation, automatic article publishing to WeChat official accounts with custom templates and scheduled tasks, notification system integration with Bark for task status updates and error alerts. The tool offers multiple templates for content customization and is built using Node.js + TypeScript with AI services from DeepseekAI Together, data sources including Twitter/X API and FireCrawl, and uses node-cron for scheduling tasks and EJS as the template engine.

HuggingArxivLLM

HuggingArxiv is a tool designed to push research papers related to large language models from Arxiv. It helps users stay updated with the latest developments in the field of large language models by providing notifications and access to relevant papers.

clearcam

Clearcam is a tool that allows users to turn their RTSP enabled camera or old iPhone into a state-of-the-art AI security camera. Users can install and run NVR + inference with homebrew or in Python from source. The tool also provides an iOS app for remote viewing of live camera feeds, receiving notifications on events, viewing event clips, and ensuring end-to-end encryption on all data. Users can sign up for Clearcam Premium to access additional features and functionalities.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can:

- Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action.

- Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed.

- Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment.

- Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules.

- Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks.

- GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently**, resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript**, **directly defining and utilizing the cloud resources necessary for the application within their code base**, such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.