ai-trend-publish

TrendPublish: 全自动 AI 内容生成与发布系统 | 微信公众号自动化 | 多源数据抓取 (Twitter/X、网站) | DeepseekAI、千问、讯飞模型 | 智能内容分析排序 | 定时发布 | 多模板支持 | Node.js | TypeScript | AI 技术趋势跟踪工具

Stars: 1208

AI TrendPublish is an AI-based trend discovery and content publishing system that supports multi-source data collection, intelligent summarization, and automatic publishing to WeChat official accounts. It features data collection from various sources, AI-powered content processing using DeepseekAI Together, key information extraction, intelligent title generation, automatic article publishing to WeChat official accounts with custom templates and scheduled tasks, notification system integration with Bark for task status updates and error alerts. The tool offers multiple templates for content customization and is built using Node.js + TypeScript with AI services from DeepseekAI Together, data sources including Twitter/X API and FireCrawl, and uses node-cron for scheduling tasks and EJS as the template engine.

README:

基于 Deno 开发的趋势发现和内容发布系统,支持多源数据采集、智能总结和自动发布到微信公众号。

🌰 示例公众号:AISPACE科技空间

即刻关注,体验 AI 智能创作的内容~

- 运行环境: Deno v2.0.0 或更高版本

- 开发语言: TypeScript

- 操作系统: Windows/Linux/MacOS

Windows (PowerShell):

irm https://deno.land/install.ps1 | iexMacOS/Linux:

curl -fsSL https://deno.land/install.sh | shgit clone https://github.com/OpenAISpace/ai-trend-publish

cd ai-trend-publishcp .env.example .env

# 编辑 .env 文件配置必要的环境变量# 开发模式(支持热重载)

deno task start

# 测试运行

deno task test

# 编译Windows版本

deno task build:win

# 编译Mac版本

deno task build:mac-x64 # Intel芯片

deno task build:mac-arm64 # M系列芯片

# 编译Linux版本

deno task build:linux-x64 # x64架构

deno task build:linux-arm64 # ARM架构

# 编译所有平台版本

deno task build:all-

🤖 多源数据采集

- Twitter/X 内容抓取

- 网站内容抓取 (基于 FireCrawl)

- 支持自定义数据源配置

-

🧠 AI 智能处理

- 使用 DeepseekAI Together 千问 万象 讯飞 进行内容总结

- 关键信息提取

- 智能标题生成

-

📢 自动发布

- 微信公众号文章发布

- 自定义文章模板

- 定时发布任务

-

📱 通知系统

- Bark 通知集成

- 任务执行状态通知

- 错误告警

TrendPublish 提供了多种精美的文章模板。查看 模板展示页面 了解更多详情。

- [x] 微信公众号文章发布

- [x] 大模型每周排行榜

- [x] 热门AI相关仓库推荐

- [x] 添加通义千问(Qwen)支持

- [x] 支持多模型配置(如 DEEPSEEK_MODEL="deepseek-chat|deepseek-reasoner")

- [x] 支持指定特定模型(如 AI_CONTENT_RANKER_LLM_PROVIDER="DEEPSEEK:deepseek-reasoner")

- [ ] 热门AI相关论文推荐

- [ ] 热门AI相关工具推荐

- [ ] FireCrawl 自动注册免费续期

- [ ] 内容插入相关图片

- [x] 内容去重

- [ ] 降低AI率

- [ ] 文章图片优化

- [ ] ...

- [ ] 提供exe可视化界面

- 运行环境: Deno + TypeScript

- AI 服务: DeepseekAI Together 千问 万象 讯飞

-

数据源:

- Twitter/X API

- FireCrawl

- 模板引擎: EJS

-

开发工具:

- Deno

- TypeScript

- Deno (v2+)

- TypeScript

- 克隆项目

git clone https://github.com/OpenAISpace/ai-trend-publish- 配置环境变量

cp .env.example .env

# 编辑 .env 文件配置必要的环境变量在 .env 文件中配置以下必要的环境变量:

# ===================================

# 基础服务配置

# ===================================

# LLM 服务配置

# OpenAI API配置

OPENAI_BASE_URL="https://api.openai.com/v1"

OPENAI_API_KEY="your_api_key"

OPENAI_MODEL="gpt-3.5-turbo"

# DeepseekAI API配置 https://api-docs.deepseek.com/

DEEPSEEK_BASE_URL="https://api.deepseek.com/v1"

DEEPSEEK_API_KEY="your_api_key"

# 支持配置多个模型,使用 | 分隔

DEEPSEEK_MODEL="deepseek-chat|deepseek-reasoner"

# 讯飞API配置 https://www.xfyun.cn/

XUNFEI_API_KEY="your_api_key"

# 通义千问API配置 https://help.aliyun.com/zh/dashscope/developer-reference/api-details

QWEN_BASE_URL="https://dashscope.aliyuncs.com/api/v1"

QWEN_API_KEY="your_api_key"

QWEN_MODEL="qwen-max"

# 自定义LLM API配置(需要兼容OpenAI API格式)

CUSTOM_LLM_BASE_URL="your_api_base_url"

CUSTOM_LLM_API_KEY="your_api_key"

CUSTOM_LLM_MODEL="your_model_name"

# 默认使用的LLM提供者

# 可选值: OPENAI | DEEPSEEK | XUNFEI | QWEN | CUSTOM

# 也可以指定具体模型,格式为 "提供者:模型名称",例如 "DEEPSEEK:deepseek-reasoner"

DEFAULT_LLM_PROVIDER="DEEPSEEK"

# ===================================

# 模块功能配置

# ===================================

# 注意:使用以下配置前,请确保已在上方正确配置了对应的 LLM 服务参数

# 内容排名和摘要模块LLM提供者配置

# 可选值: OPENAI | DEEPSEEK | XUNFEI | QWEN | CUSTOM

# 也可以指定具体模型,格式为 "提供者:模型名称",例如 "DEEPSEEK:deepseek-reasoner"

AI_CONTENT_RANKER_LLM_PROVIDER="DEEPSEEK:deepseek-reasoner"

AI_SUMMARIZER_LLM_PROVIDER="DEEPSEEK"

# 模板配置

# 文章模板类型配置,可选值: default | modern | tech | mianpro | random

ARTICLE_TEMPLATE_TYPE="default"

# HelloGitHub模板类型配置,可选值: weixin | random

HELLOGITHUB_TEMPLATE_TYPE="default"

# AIBench模板类型配置,可选值: default | random

AIBENCH_TEMPLATE_TYPE="default"

# 文章数量配置

ARTICLE_NUM=10

# 数据存储配置

ENABLE_DB=true

DB_HOST=localhost

DB_PORT=3306

DB_USER=root

DB_PASSWORD=password

DB_DATABASE=trendfinder

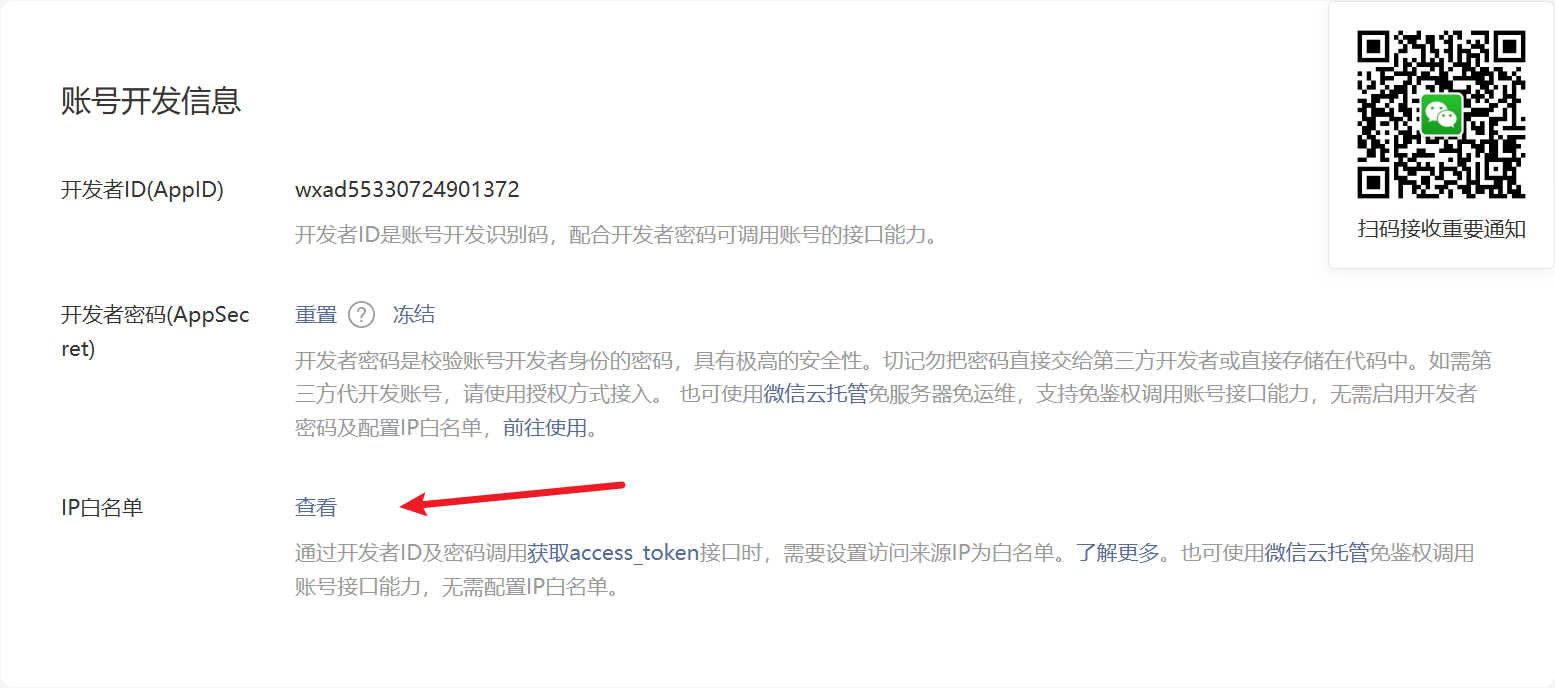

# 微信公众号配置

WEIXIN_APP_ID="your_app_id"

WEIXIN_APP_SECRET="your_app_secret"

# 微信文章配置

NEED_OPEN_COMMENT=false

ONLY_FANS_CAN_COMMENT=false

AUTHOR="your_name"

# 数据抓取配置

# FireCrawl配置 https://www.firecrawl.dev/

FIRE_CRAWL_API_KEY="your_api_key"

# Twitter API配置 https://twitterapi.io/

X_API_BEARER_TOKEN="your_api_key"

# ===================================

# 其他通用配置

# ===================================

# 通知服务配置

ENABLE_BARK=false

BARK_URL="your_key"

# 钉钉通知配置 关键词为:通知

ENABLE_DINGDING=true # 是否启用钉钉通知

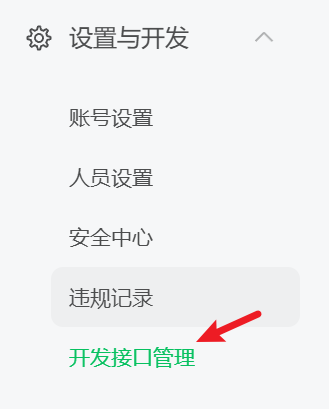

DINGDING_WEBHOOK="your_webhook_url" # 钉钉机器人的 Webhook URL在使用微信公众号相关功能前,请先将本机IP添加到公众号后台的IP白名单中。

- 查看本机IP: IP查询工具

- 登录微信公众号后台,添加IP白名单

- 启动项目

# 测试模式

deno task test

# 运行

deno start start

详细运行时间见 src\controllers\cron.ts- 在服务器上安装 Deno

Windows:

irm https://deno.land/install.ps1 | iexLinux/MacOS:

curl -fsSL https://deno.land/install.sh | sh- 克隆项目

git clone https://github.com/OpenAISpace/ai-trend-publish.git

cd ai-trend-publish- 配置环境变量

cp .env.example .env

# 编辑 .env 文件配置必要的环境变量- 启动服务

# 开发模式(支持热重载)

deno task start

# 测试模式运行

deno task test

# 使用PM2进行进程管理(推荐)

npm install -g pm2

pm2 start --interpreter="deno" --interpreter-args="run --allow-all" src/main.ts- 设置开机自启(可选)

# 使用PM2设置开机自启

pm2 startup

pm2 save- 拉取代码

git clone https://github.com/OpenAISpace/ai-trend-publish.git- 构建 Docker 镜像:

# 构建镜像

docker build -t ai-trend-publsih .- 运行容器:

# 方式1:通过环境变量文件运行

docker run -d --env-file .env --name ai-trend-publsih-container ai-trend-publsih

# 方式2:直接指定环境变量运行

docker run -d \

-e XXXX=XXXX \

...其他环境变量... \

--name ai-trend-publsih-container \

ai-trend-publsih项目已配置 GitHub Actions 自动部署流程:

- 推送代码到 main 分支会自动触发部署

- 也可以在 GitHub Actions 页面手动触发部署

- 确保在 GitHub Secrets 中配置以下环境变量:

-

SERVER_HOST: 服务器地址 -

SERVER_USER: 服务器用户名 -

SSH_PRIVATE_KEY: SSH 私钥 - 其他必要的环境变量(参考 .env.example)

-

本项目支持自定义模板开发,主要包含以下几个部分:

查看 src/modules/render/interfaces

目录下的类型定义文件,了解各个渲染模块需要的数据结构

在 src/templates 目录下按照对应模块开发 EJS 模板

在对应的渲染器类中注册新模板,如 WeixinArticleTemplateRenderer:

npx ts-node -r tsconfig-paths/register src\modules\render\test\test.weixin.template.ts

- Fork 本仓库

- 创建特性分支 (

git checkout -b feature/amazing-feature) - 提交更改 (

git commit -m 'Add some amazing feature') - 推送到分支 (

git push origin feature/amazing-feature) - 提交 Pull Request

感谢以下贡献者对项目的支持:

本项目采用 MIT 许可证 - 详见 LICENSE 文件

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-trend-publish

Similar Open Source Tools

ai-trend-publish

AI TrendPublish is an AI-based trend discovery and content publishing system that supports multi-source data collection, intelligent summarization, and automatic publishing to WeChat official accounts. It features data collection from various sources, AI-powered content processing using DeepseekAI Together, key information extraction, intelligent title generation, automatic article publishing to WeChat official accounts with custom templates and scheduled tasks, notification system integration with Bark for task status updates and error alerts. The tool offers multiple templates for content customization and is built using Node.js + TypeScript with AI services from DeepseekAI Together, data sources including Twitter/X API and FireCrawl, and uses node-cron for scheduling tasks and EJS as the template engine.

HuaTuoAI

HuaTuoAI is an artificial intelligence image classification system specifically designed for traditional Chinese medicine. It utilizes deep learning techniques, such as Convolutional Neural Networks (CNN), to accurately classify Chinese herbs and ingredients based on input images. The project aims to unlock the secrets of plants, depict the unknown realm of Chinese medicine using technology and intelligence, and perpetuate ancient cultural heritage.

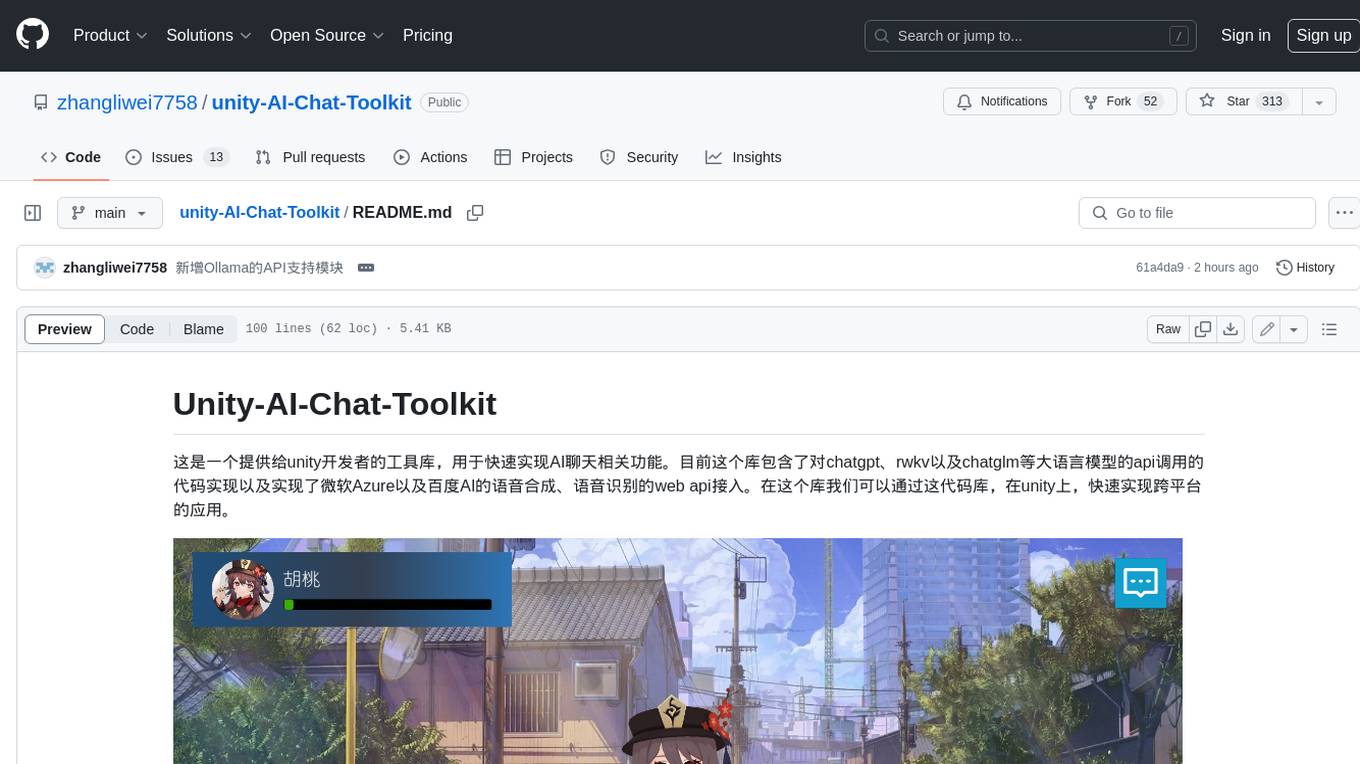

unity-AI-Chat-Toolkit

The Unity-AI-Chat-Toolkit is a toolset for Unity developers to quickly implement AI chat-related functions. Currently, this library includes code implementations for API calls to large language models such as ChatGPT, RKV, and ChatGLM, as well as web API access to Microsoft Azure and Baidu AI for speech synthesis and speech recognition. With this library, we can quickly implement cross-platform applications on Unity.

Thor

Thor is a powerful AI model management tool designed for unified management and usage of various AI models. It offers features such as user, channel, and token management, data statistics preview, log viewing, system settings, external chat link integration, and Alipay account balance purchase. Thor supports multiple AI models including OpenAI, Kimi, Starfire, Claudia, Zhilu AI, Ollama, Tongyi Qianwen, AzureOpenAI, and Tencent Hybrid models. It also supports various databases like SqlServer, PostgreSql, Sqlite, and MySql, allowing users to choose the appropriate database based on their needs.

Streamer-Sales

Streamer-Sales is a large model for live streamers that can explain products based on their characteristics and inspire users to make purchases. It is designed to enhance sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking user's desire to purchase. It aims to revolutionize the shopping experience by providing detailed and unique product descriptions to engage users effectively.

astrbot_plugin_self_learning

AstrBot Self-Learning Plugin is a full-featured AI self-learning chat personification solution. It enables chatbots to learn specific user conversation styles, understand slang, manage social relationships and likability, evolve adaptive personalities, guide conversations based on goals, and provide a visual management interface through WebUI. The plugin leverages real-time message capture, multidimensional data analysis, expression pattern learning, dynamic personality optimization, and goal-driven dialogues to enhance chatbot capabilities.

CookHero

CookHero is a personalized diet management platform that integrates LLM, RAG, Agent, multimodal capabilities, and nutrition data analysis. It serves as a recipe library and a 'diet management hero assistant' that helps with planning, recording, data visualization, and providing suggestions to make cooking and health goals achievable in daily life. The platform offers intelligent Q&A, personalized recommendations, meal planning, AI recording, nutrition analysis, deep understanding through dialogue, real-time search, and more. CookHero targets kitchen beginners, fitness/weight loss/sugar control individuals, health advocates, allergy-prone users, and families, aiming to make cooking more professional, intelligent, and sustainable.

TechFlow

TechFlow is a platform that allows users to build their own AI workflows through drag-and-drop functionality. It features a visually appealing interface with clear layout and intuitive navigation. TechFlow supports multiple models beyond Language Models (LLM) and offers flexible integration capabilities. It provides a powerful SDK for developers to easily integrate generated workflows into existing systems, enhancing flexibility and scalability. The platform aims to embed AI capabilities as modules into existing functionalities to enhance business competitiveness.

MINI_LLM

This project is a personal implementation and reproduction of a small-parameter Chinese LLM. It mainly refers to these two open source projects: https://github.com/charent/Phi2-mini-Chinese and https://github.com/DLLXW/baby-llama2-chinese. It includes the complete process of pre-training, SFT instruction fine-tuning, DPO, and PPO (to be done). I hope to share it with everyone and hope that everyone can work together to improve it!

ddddocr

ddddocr is a Rust version of a simple OCR API server that provides easy deployment for captcha recognition without relying on the OpenCV library. It offers a user-friendly general-purpose captcha recognition Rust library. The tool supports recognizing various types of captchas, including single-line text, transparent black PNG images, target detection, and slider matching algorithms. Users can also import custom OCR training models and utilize the OCR API server for flexible OCR result control and range limitation. The tool is cross-platform and can be easily deployed.

ailab

The 'ailab' project is an experimental ground for code generation combining AI (especially coding agents) and Deno. It aims to manage configuration files defining coding rules and modes in Deno projects, enhancing the quality and efficiency of code generation by AI. The project focuses on defining clear rules and modes for AI coding agents, establishing best practices in Deno projects, providing mechanisms for type-safe code generation and validation, applying test-driven development (TDD) workflow to AI coding, and offering implementation examples utilizing design patterns like adapter pattern.

AILZ80ASM

AILZ80ASM is a Z80 assembler that runs in a .NET 8 environment written in C#. It can be used to assemble Z80 assembly code and generate output files in various formats. The tool supports various command-line options for customization and provides features like macros, conditional assembly, and error checking. AILZ80ASM offers good performance metrics with fast assembly times and efficient output file sizes. It also includes support for handling different file encodings and provides a range of built-in functions for working with labels, expressions, and data types.

dify-chat

Dify Chat Web is an AI conversation web app based on the Dify API, compatible with DeepSeek, Dify Chatflow/Workflow applications, and Agent Mind Chain output information. It supports multiple scenarios, flexible deployment without backend dependencies, efficient integration with reusable React components, and style customization for unique business system styles.

LangChain-SearXNG

LangChain-SearXNG is an open-source AI search engine built on LangChain and SearXNG. It supports faster and more accurate search and question-answering functionalities. Users can deploy SearXNG and set up Python environment to run LangChain-SearXNG. The tool integrates AI models like OpenAI and ZhipuAI for search queries. It offers two search modes: Searxng and ZhipuWebSearch, allowing users to control the search workflow based on input parameters. LangChain-SearXNG v2 version enhances response speed and content quality compared to the previous version, providing a detailed configuration guide and showcasing the effectiveness of different search modes through comparisons.

huge-ai-search

Huge AI Search MCP Server integrates Google AI Mode search into clients like Cursor, Claude Code, and Codex, supporting continuous follow-up questions and source links. It allows AI clients to directly call 'huge-ai-search' for online searches, providing AI summary results and source links. The tool supports text and image searches, with the ability to ask follow-up questions in the same session. It requires Microsoft Edge for installation and supports various IDEs like VS Code. The tool can be used for tasks such as searching for specific information, asking detailed questions, and avoiding common pitfalls in development tasks.

For similar tasks

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub. **Features:** * ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._ * 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._ * 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

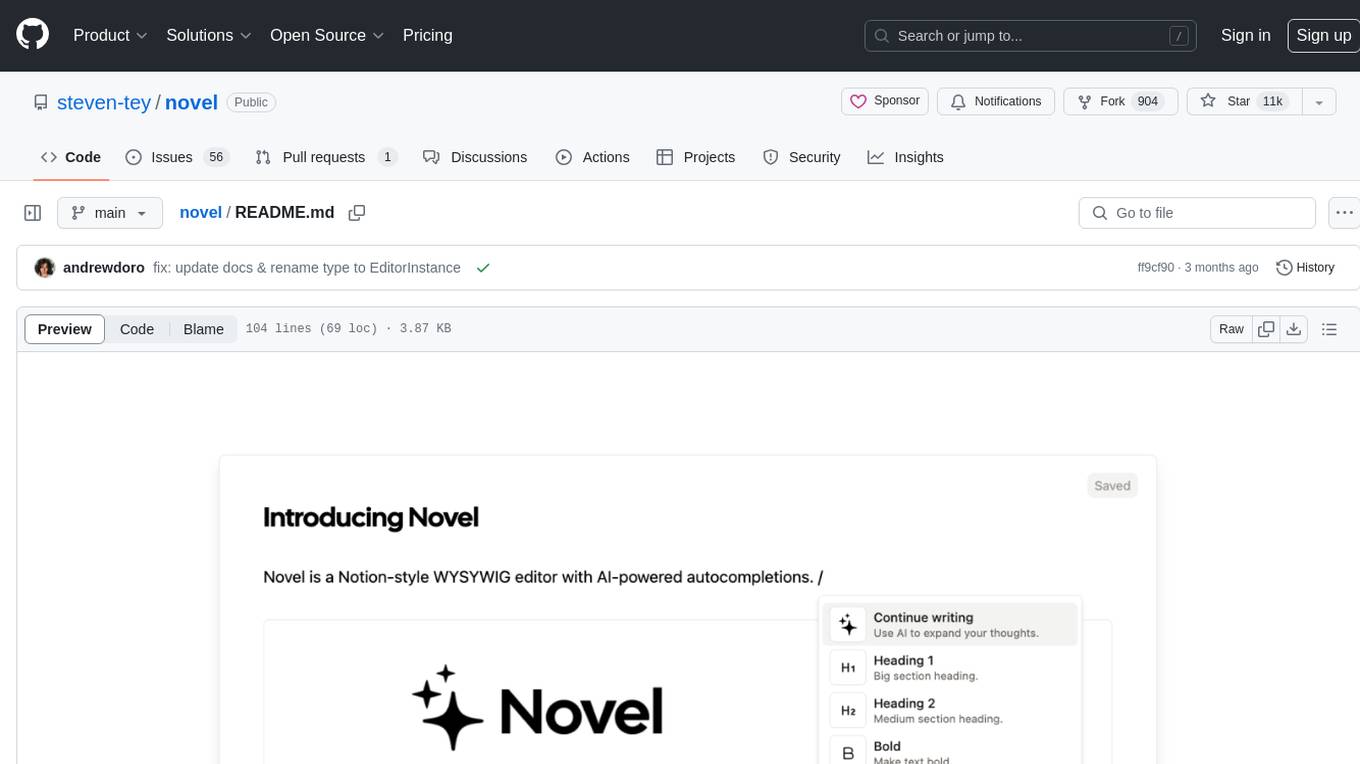

novel

Novel is an open-source Notion-style WYSIWYG editor with AI-powered autocompletions. It allows users to easily create and edit content with the help of AI suggestions. The tool is built on a modern tech stack and supports cross-framework development. Users can deploy their own version of Novel to Vercel with one click and contribute to the project by reporting bugs or making feature enhancements through pull requests.

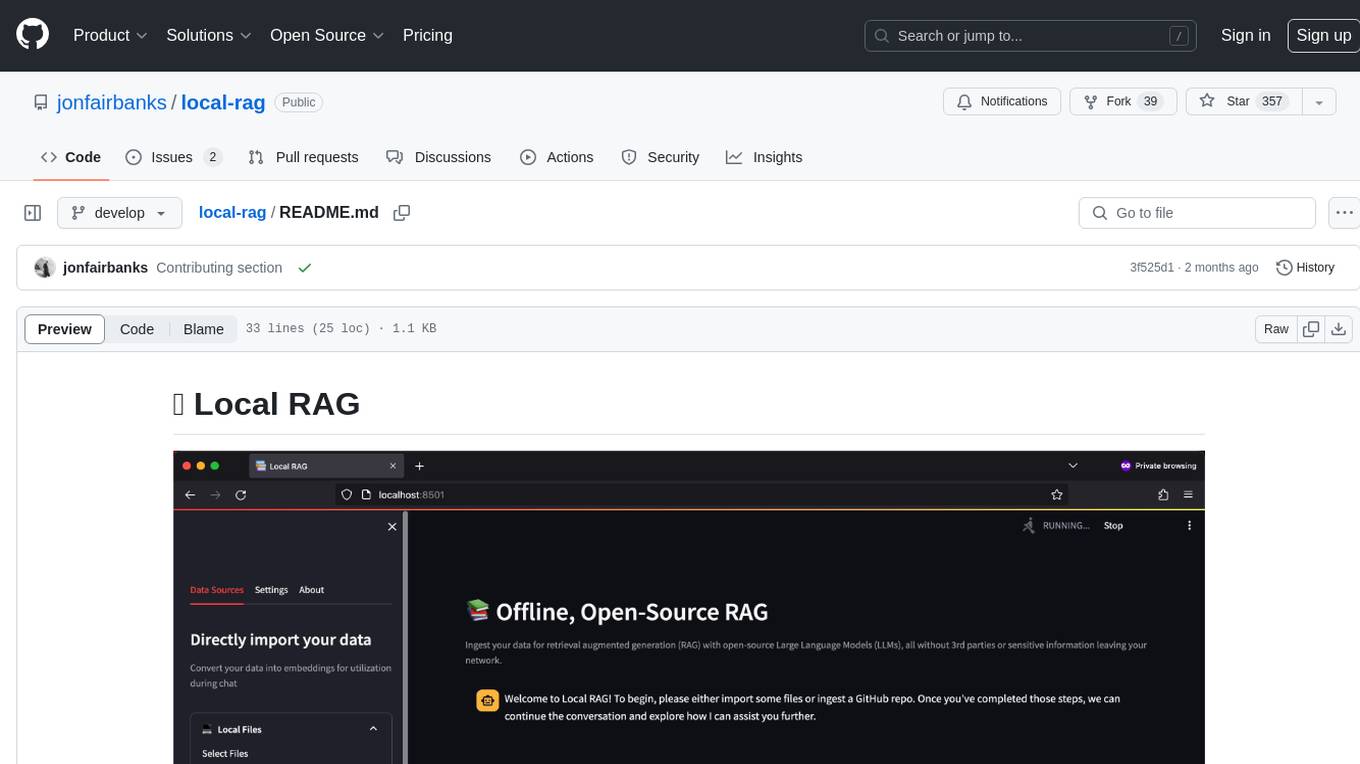

local-rag

Local RAG is an offline, open-source tool that allows users to ingest files for retrieval augmented generation (RAG) using large language models (LLMs) without relying on third parties or exposing sensitive data. It supports offline embeddings and LLMs, multiple sources including local files, GitHub repos, and websites, streaming responses, conversational memory, and chat export. Users can set up and deploy the app, learn how to use Local RAG, explore the RAG pipeline, check planned features, known bugs and issues, access additional resources, and contribute to the project.

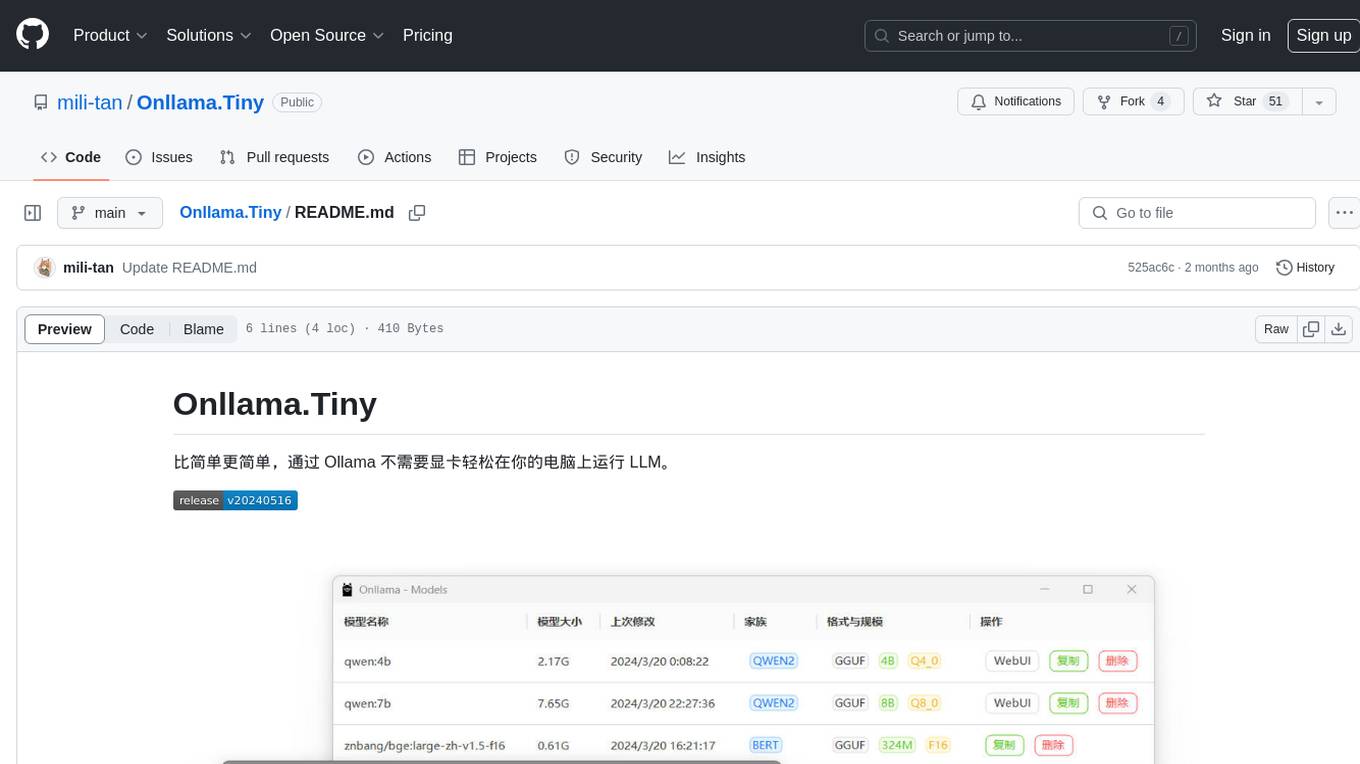

Onllama.Tiny

Onllama.Tiny is a lightweight tool that allows you to easily run LLM on your computer without the need for a dedicated graphics card. It simplifies the process of running LLM, making it more accessible for users. The tool provides a user-friendly interface and streamlines the setup and configuration required to run LLM on your machine. With Onllama.Tiny, users can quickly set up and start using LLM for various applications and projects.

ComfyUI-BRIA_AI-RMBG

ComfyUI-BRIA_AI-RMBG is an unofficial implementation of the BRIA Background Removal v1.4 model for ComfyUI. The tool supports batch processing, including video background removal, and introduces a new mask output feature. Users can install the tool using ComfyUI Manager or manually by cloning the repository. The tool includes nodes for automatically loading the Removal v1.4 model and removing backgrounds. Updates include support for batch processing and the addition of a mask output feature.

enterprise-h2ogpte

Enterprise h2oGPTe - GenAI RAG is a repository containing code examples, notebooks, and benchmarks for the enterprise version of h2oGPTe, a powerful AI tool for generating text based on the RAG (Retrieval-Augmented Generation) architecture. The repository provides resources for leveraging h2oGPTe in enterprise settings, including implementation guides, performance evaluations, and best practices. Users can explore various applications of h2oGPTe in natural language processing tasks, such as text generation, content creation, and conversational AI.

semantic-kernel-docs

The Microsoft Semantic Kernel Documentation GitHub repository contains technical product documentation for Semantic Kernel. It serves as the home of technical content for Microsoft products and services. Contributors can learn how to make contributions by following the Docs contributor guide. The project follows the Microsoft Open Source Code of Conduct.

For similar jobs

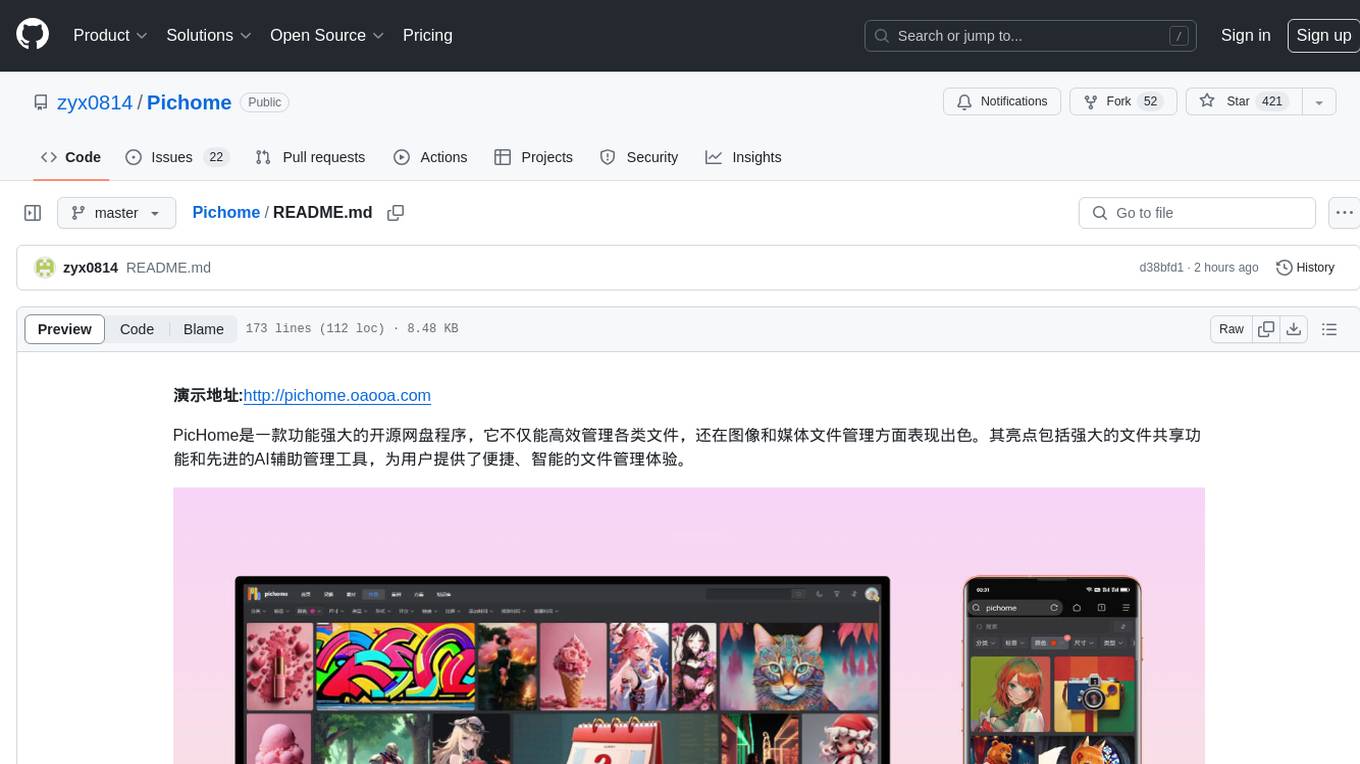

Pichome

PicHome is a powerful open-source cloud storage program that efficiently manages various types of files and excels in image and media file management. Its highlights include robust file sharing features and advanced AI-assisted management tools, providing users with a convenient and intelligent file management experience. The program offers diverse list modes, customizable file information display, enhanced quick file preview, advanced tagging, custom cover and preview images, multiple preview images, and multi-library management. Additionally, PicHome features strong file sharing capabilities, allowing users to share entire libraries, create personalized showcase web pages, and build complete data sharing websites. The AI-assisted management aspect includes AI file renaming, tagging, description writing, batch annotation, and file Q&A services, all aimed at improving file management efficiency. PicHome supports a wide range of file formats and can be applied in various scenarios such as e-commerce, gaming, design, development, enterprises, schools, labs, media, and entertainment institutions.

machine-learning-research

The 'machine-learning-research' repository is a comprehensive collection of resources related to mathematics, machine learning, deep learning, artificial intelligence, data science, and various scientific fields. It includes materials such as courses, tutorials, books, podcasts, communities, online courses, papers, and dissertations. The repository covers topics ranging from fundamental math skills to advanced machine learning concepts, with a focus on applications in healthcare, genetics, computational biology, precision health, and AI in science. It serves as a valuable resource for individuals interested in learning and researching in the fields of machine learning and related disciplines.

Awesome-TimeSeries-SpatioTemporal-LM-LLM

Awesome-TimeSeries-SpatioTemporal-LM-LLM is a curated list of Large (Language) Models and Foundation Models for Temporal Data, including Time Series, Spatio-temporal, and Event Data. The repository aims to summarize recent advances in Large Models and Foundation Models for Time Series and Spatio-Temporal Data with resources such as papers, code, and data. It covers various applications like General Time Series Analysis, Transportation, Finance, Healthcare, Event Analysis, Climate, Video Data, and more. The repository also includes related resources, surveys, and papers on Large Language Models, Foundation Models, and their applications in AIOps.

moon

Moon is a monitoring and alerting platform suitable for multiple domains, supporting various application scenarios such as cloud-native, Internet of Things (IoT), and Artificial Intelligence (AI). It simplifies operational work of cloud-native monitoring, boasts strong IoT and AI support capabilities, and meets diverse monitoring needs across industries. Capable of real-time data monitoring, intelligent alerts, and fault response for various fields.

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from platforms like TikTok, Douyin, and Kuaishou. It allows users to effortlessly grab videos, make bulk edits, and utilize advanced AI features for generating videos, images, and sounds in bulk. The tool offers features like video, photo, and sound editing, downloading videos without watermarks, bulk AI generation, and AI editing for content enhancement.

ai-trend-publish

AI TrendPublish is an AI-based trend discovery and content publishing system that supports multi-source data collection, intelligent summarization, and automatic publishing to WeChat official accounts. It features data collection from various sources, AI-powered content processing using DeepseekAI Together, key information extraction, intelligent title generation, automatic article publishing to WeChat official accounts with custom templates and scheduled tasks, notification system integration with Bark for task status updates and error alerts. The tool offers multiple templates for content customization and is built using Node.js + TypeScript with AI services from DeepseekAI Together, data sources including Twitter/X API and FireCrawl, and uses node-cron for scheduling tasks and EJS as the template engine.

llm.hunyuan.T1

Hunyuan-T1 is a cutting-edge large-scale hybrid Mamba reasoning model driven by reinforcement learning. It has been officially released as an upgrade to the Hunyuan Thinker-1-Preview model. The model showcases exceptional performance in deep reasoning tasks, leveraging the TurboS base and Mamba architecture to enhance inference capabilities and align with human preferences. With a focus on reinforcement learning training, the model excels in various reasoning tasks across different domains, showcasing superior abilities in mathematical, logical, scientific, and coding reasoning. Through innovative training strategies and alignment with human preferences, Hunyuan-T1 demonstrates remarkable performance in public benchmarks and internal evaluations, positioning itself as a leading model in the field of reasoning.

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from top platforms like TikTok, Douyin, and Kuaishou. Effortlessly grab videos from user profiles, make bulk edits throughout the entire directory with just one click. Advanced Chat & AI features let you download, edit, and generate videos, images, and sounds in bulk. Exciting new features are coming soon—stay tuned!