slack-machine

A simple, yet powerful and extendable Slack bot

Stars: 762

Slack Machine is a simple, yet powerful and extendable Slack bot framework. More than just a bot, Slack Machine is a framework that helps you develop your Slack workspace into a ChatOps powerhouse. Slack Machine is built with an intuitive plugin system that lets you build bots quickly, but also allows for easy code organization.

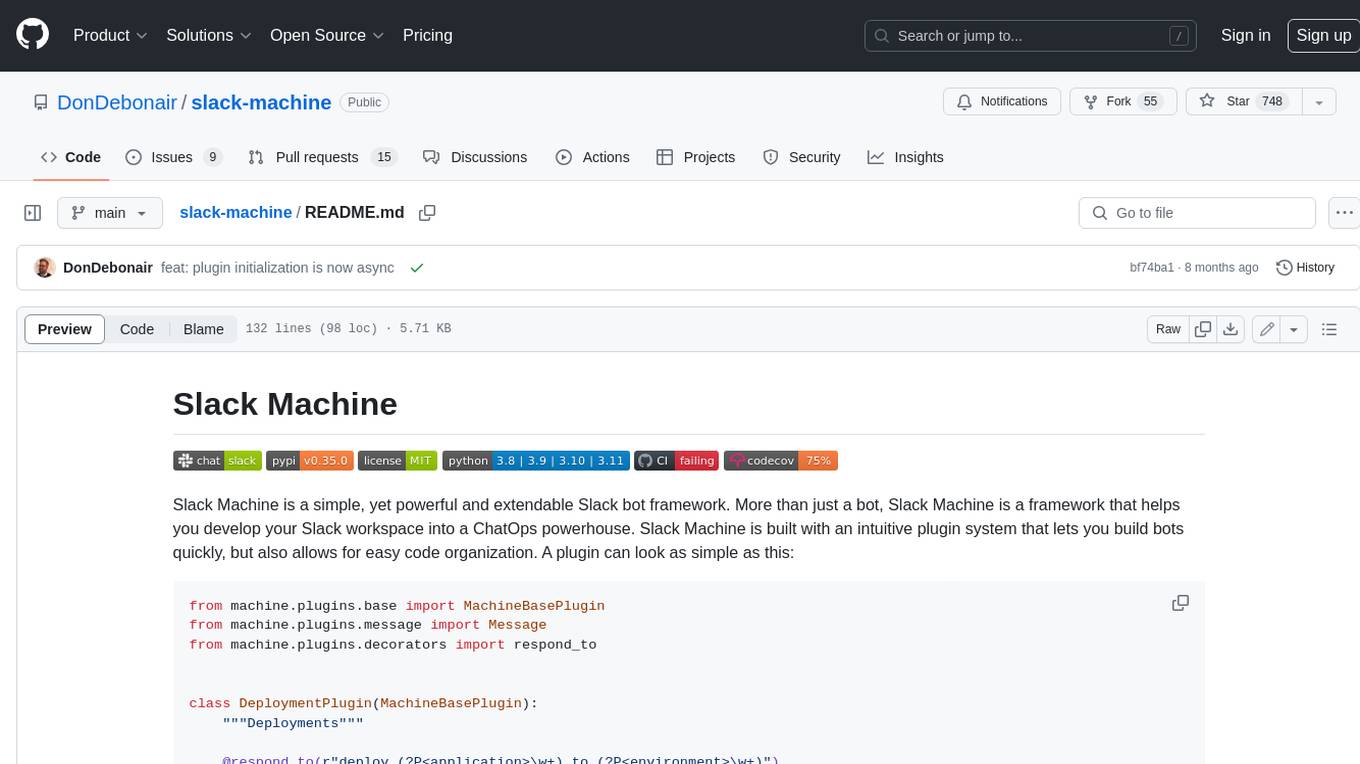

README:

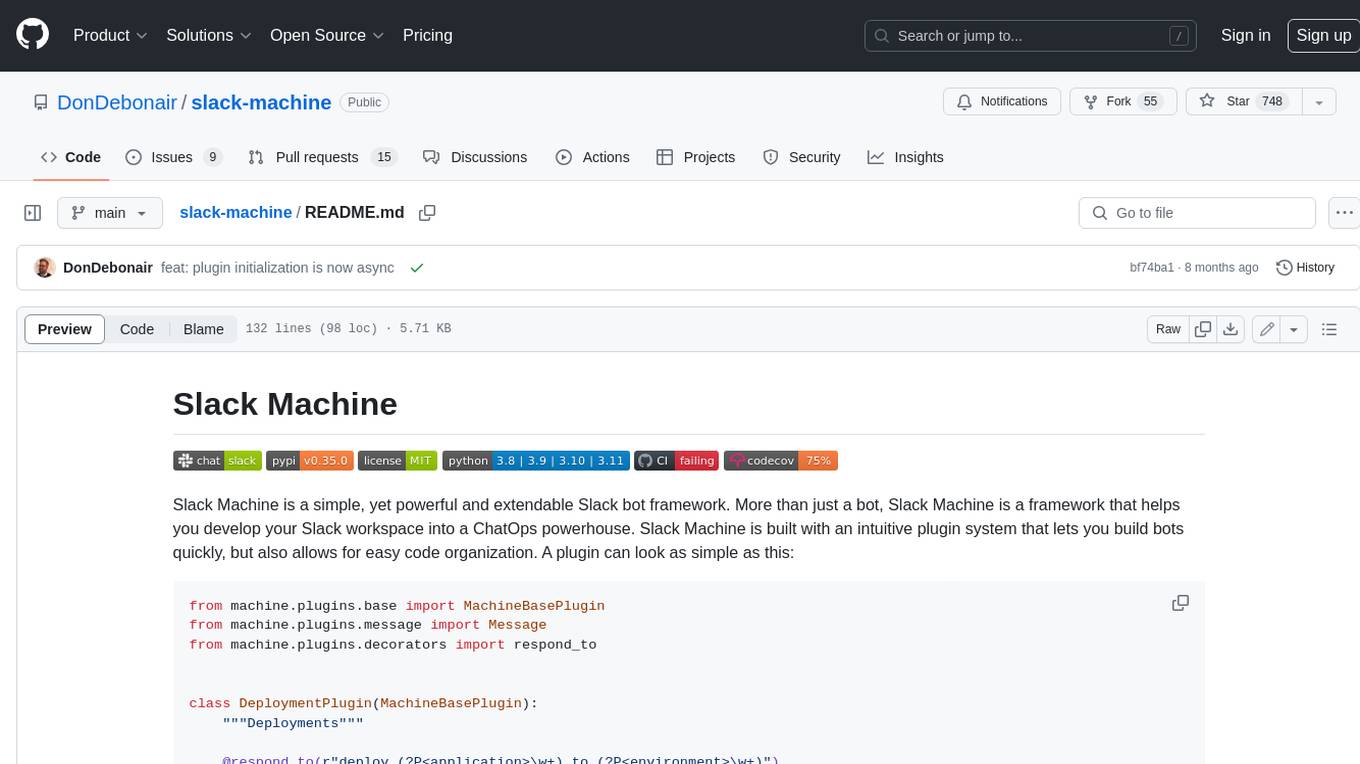

Slack Machine is a simple, yet powerful and extendable Slack bot framework. More than just a bot, Slack Machine is a framework that helps you develop your Slack workspace into a ChatOps powerhouse. Slack Machine is built with an intuitive plugin system that lets you build bots quickly, but also allows for easy code organization. A plugin can look as simple as this:

from machine.plugins.base import MachineBasePlugin

from machine.plugins.message import Message

from machine.plugins.decorators import respond_to

class DeploymentPlugin(MachineBasePlugin):

"""Deployments"""

@respond_to(r"deploy (?P<application>\w+) to (?P<environment>\w+)")

async def deploy(self, msg: Message, application, environment):

"""deploy <application> <environment>: deploy application to target environment"""

await msg.say(f"Deploying {application} to {environment}")Dropped support for Python 3.8 (v0.38.0)

As of v0.38.0, support for Python 3.8 has been dropped. Python 3.8 has reached end-of-life on 2024-10-07.

- Get started with mininal configuration

- Built on top of the Slack Events API for smoothly responding to events in semi real-time. Uses Socket Mode so your bot doesn't need to be exposed to the internet!

- Support for rich interactions using the Slack Web API

- High-level API for maximum convenience when building plugins

- Low-level API for maximum flexibility

- Built on top of AsyncIO to ensure good performance by handling communication with Slack concurrently

- Listen and respond to any regular expression

- Respond to Slash Commands

- Capture parts of messages to use as variables in your functions

- Respond to messages in channels, groups and direct message conversations

- Respond with reactions

- Respond in threads

- Respond with ephemeral messages

- Send DMs to any user

- Support for blocks

- Support for message attachments [Legacy 🏚]

- Support for interactive elements

- Support for modals

- Listen and respond to any Slack event supported by the Events API

- Store and retrieve any kind of data in persistent storage (currently Redis, DynamoDB, SQLite and in-memory storage are supported)

- Schedule actions and messages

- Emit and listen for events

- Help texts for Plugins

- Support for shortcuts

- ... and much more

You can add Slack Machine to your uv project by running:

uv add slack-machineor add it to your Poetry project:

poetry add slack-machineLastly, you can install it using pip (not recommended):

$ pip install slack-machineIt is strongly recommended that you install slack-machine inside a

virtual environment!

-

Create a directory for your Slack Machine bot:

mkdir my-slack-bot && cd my-slack-bot -

Add a

local_settings.pyfile to your bot directory:touch local_settings.py -

Create a new app in Slack: https://api.slack.com/apps

-

Choose to create an app from an App manifest

-

Copy/paste the following manifest:

manifest.yaml -

Add the Slack App and Bot tokens to your

local_settings.pylike this:SLACK_APP_TOKEN = "xapp-my-app-token" SLACK_BOT_TOKEN = "xoxb-my-bot-token" -

Start the bot with

slack-machine -

...

-

Profit!

You can find the documentation for Slack Machine here: https://dondebonair.github.io/slack-machine/

Go read it to learn how to properly configure Slack Machine, write plugins, and more!

There is also an example plugin that shows off many of the features of Slack Machine: Slack Machine Kitchensink Plugin

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for slack-machine

Similar Open Source Tools

slack-machine

Slack Machine is a simple, yet powerful and extendable Slack bot framework. More than just a bot, Slack Machine is a framework that helps you develop your Slack workspace into a ChatOps powerhouse. Slack Machine is built with an intuitive plugin system that lets you build bots quickly, but also allows for easy code organization.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.

actions

Sema4.ai Action Server is a tool that allows users to build semantic actions in Python to connect AI agents with real-world applications. It enables users to create custom actions, skills, loaders, and plugins that securely connect any AI Assistant platform to data and applications. The tool automatically creates and exposes an API based on function declaration, type hints, and docstrings by adding '@action' to Python scripts. It provides an end-to-end stack supporting various connections between AI and user's apps and data, offering ease of use, security, and scalability.

merlinn

Merlinn is an open-source AI-powered on-call engineer that automatically jumps into incidents & alerts, providing useful insights and RCA in real time. It integrates with popular observability tools, lives inside Slack, offers an intuitive UX, and prioritizes security. Users can self-host Merlinn, use it for free, and benefit from automatic RCA, Slack integration, integrations with various tools, intuitive UX, and security features.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

parllama

PAR LLAMA is a Text UI application for managing and using LLMs, designed with Textual and Rich and PAR AI Core. It runs on major OS's including Windows, Windows WSL, Mac, and Linux. Supports Dark and Light mode, custom themes, and various workflows like Ollama chat, image chat, and OpenAI provider chat. Offers features like custom prompts, themes, environment variables configuration, and remote instance connection. Suitable for managing and using LLMs efficiently.

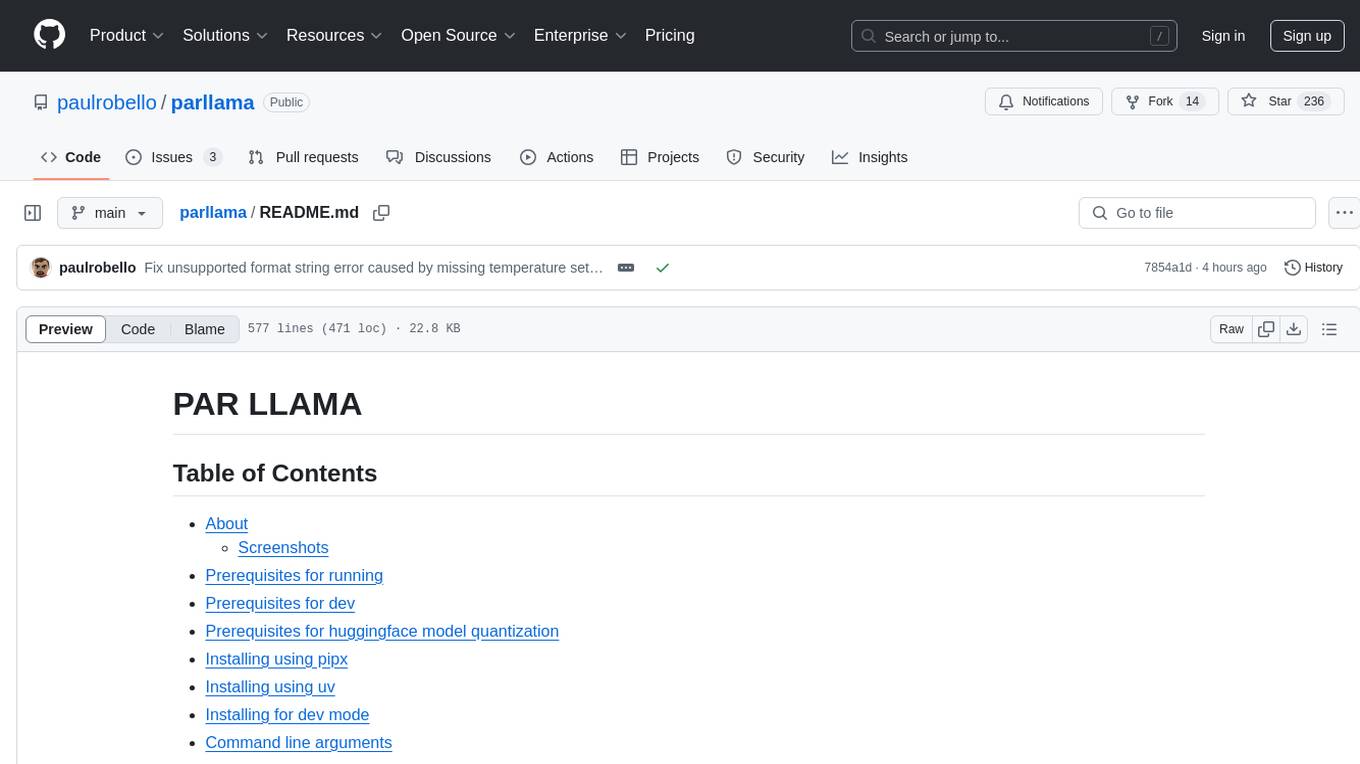

TerminalGPT

TerminalGPT is a terminal-based ChatGPT personal assistant app that allows users to interact with OpenAI GPT-3.5 and GPT-4 language models. It offers advantages over browser-based apps, such as continuous availability, faster replies, and tailored answers. Users can use TerminalGPT in their IDE terminal, ensuring seamless integration with their workflow. The tool prioritizes user privacy by not using conversation data for model training and storing conversations locally on the user's machine.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

voice-chat-ai

Voice Chat AI is a project that allows users to interact with different AI characters using speech. Users can choose from various characters with unique personalities and voices, and have conversations or role play with them. The project supports OpenAI, xAI, or Ollama language models for chat, and provides text-to-speech synthesis using XTTS, OpenAI TTS, or ElevenLabs. Users can seamlessly integrate visual context into conversations by having the AI analyze their screen. The project offers easy configuration through environment variables and can be run via WebUI or Terminal. It also includes a huge selection of built-in characters for engaging conversations.

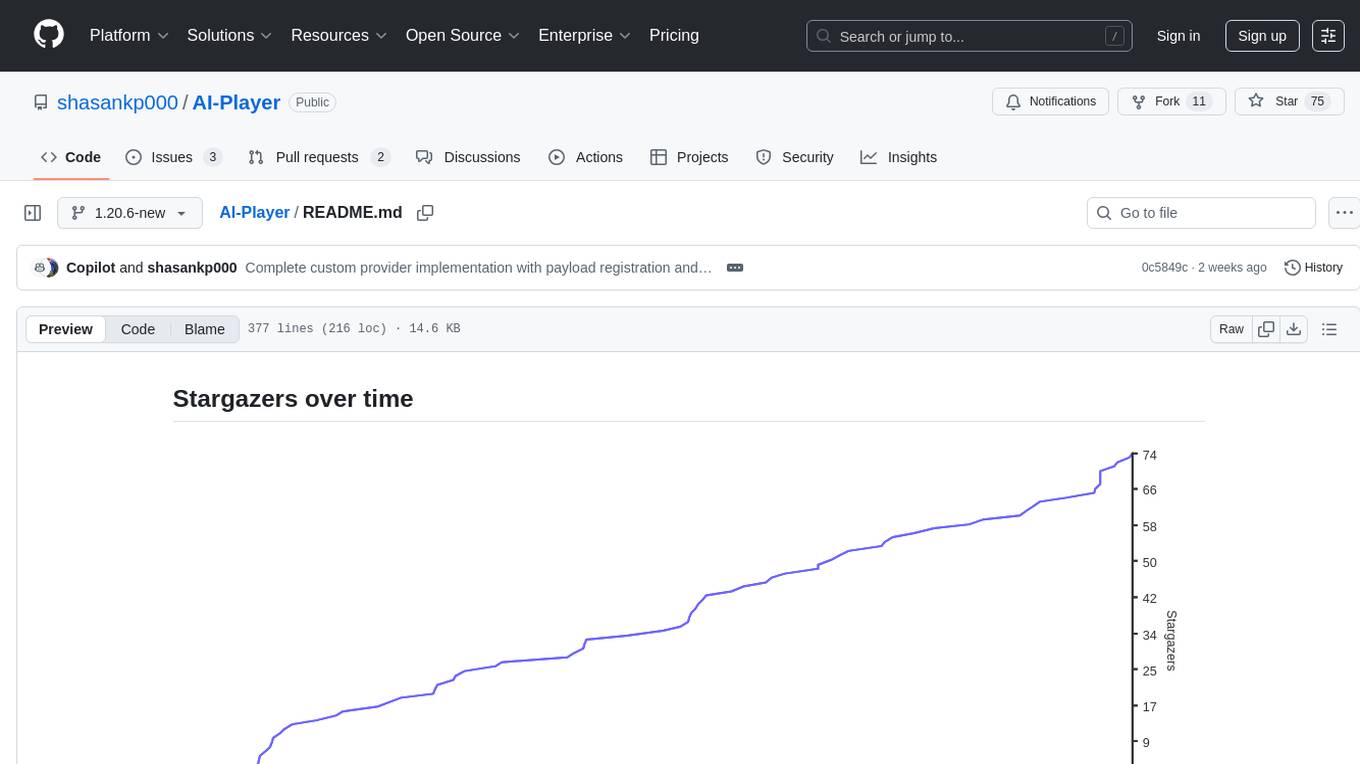

AI-Player

AI-Player is a Minecraft mod that adds an 'intelligent' second player to the game to combat loneliness while playing solo. It aims to enhance gameplay by providing companionship and interactive features. The mod leverages advanced AI algorithms and integrates with external tools to enhance the player experience. Developed with a focus on addressing the social aspect of gaming, AI-Player is a community-driven project that continues to evolve with user feedback and contributions.

testzeus-hercules

Hercules is the world’s first open-source testing agent designed to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, making testing simple, reliable, and efficient. Hercules adapts to various platforms like Salesforce and is suitable for CI/CD pipelines. It aims to democratize and disrupt test automation, making top-tier testing accessible to everyone. The tool is transparent, reliable, and community-driven, empowering teams to deliver better software. Hercules offers multiple ways to get started, including using PyPI package, Docker, or building and running from source code. It supports various AI models, provides detailed installation and usage instructions, and integrates with Nuclei for security testing and WCAG for accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.

superflows

Superflows is an open-source alternative to OpenAI's Assistant API. It allows developers to easily add an AI assistant to their software products, enabling users to ask questions in natural language and receive answers or have tasks completed by making API calls. Superflows can analyze data, create plots, answer questions based on static knowledge, and even write code. It features a developer dashboard for configuration and testing, stateful streaming API, UI components, and support for multiple LLMs. Superflows can be set up in the cloud or self-hosted, and it provides comprehensive documentation and support.

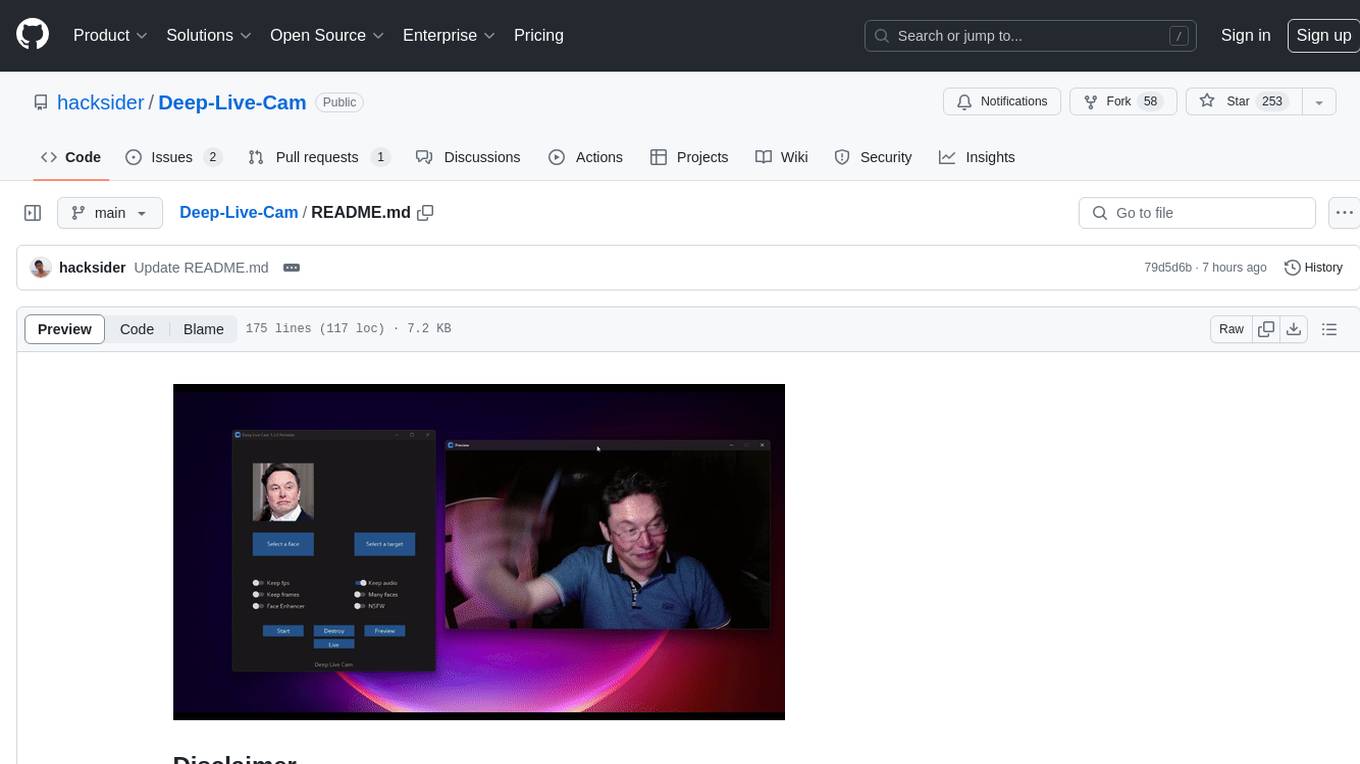

Deep-Live-Cam

Deep-Live-Cam is a software tool designed to assist artists in tasks such as animating custom characters or using characters as models for clothing. The tool includes built-in checks to prevent unethical applications, such as working on inappropriate media. Users are expected to use the tool responsibly and adhere to local laws, especially when using real faces for deepfake content. The tool supports both CPU and GPU acceleration for faster processing and provides a user-friendly GUI for swapping faces in images or videos.

aiconfig

AIConfig is a framework that makes it easy to build generative AI applications for production. It manages generative AI prompts, models and model parameters as JSON-serializable configs that can be version controlled, evaluated, monitored and opened in a local editor for rapid prototyping. It allows you to store and iterate on generative AI behavior separately from your application code, offering a streamlined AI development workflow.

depthai

This repository contains a demo application for DepthAI, a tool that can load different networks, create pipelines, record video, and more. It provides documentation for installation and usage, including running programs through Docker. Users can explore DepthAI features via command line arguments or a clickable QT interface. Supported models include various AI models for tasks like face detection, human pose estimation, and object detection. The tool collects anonymous usage statistics by default, which can be disabled. Users can report issues to the development team for support and troubleshooting.

gpt-engineer

GPT-Engineer is a tool that allows you to specify a software in natural language, sit back and watch as an AI writes and executes the code, and ask the AI to implement improvements.

For similar tasks

slack-machine

Slack Machine is a simple, yet powerful and extendable Slack bot framework. More than just a bot, Slack Machine is a framework that helps you develop your Slack workspace into a ChatOps powerhouse. Slack Machine is built with an intuitive plugin system that lets you build bots quickly, but also allows for easy code organization.

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

venom

Venom is a high-performance system developed with JavaScript to create a bot for WhatsApp, support for creating any interaction, such as customer service, media sending, sentence recognition based on artificial intelligence and all types of design architecture for WhatsApp.

Whatsapp-Ai-BOT

This WhatsApp AI chatbot is built using NodeJS technology and powered by OpenAI. It leverages the advanced deep learning models of ChatGPT, Playground, and DALL·E from OpenAI to provide a unique text-based and image-based conversational experience for users. The bot has two main features: ChatGPT (text) and DALL-E (Text To Image). To use these features, simply use the commands /ai, /img, and /sc respectively. The bot's code is encrypted to protect it from prying eyes, but the key to unlock the full potential of this amazing creation can be obtained by contacting the developer. The bot is free to use, but a PRIME version is available with additional features such as history mode, prime support, and customizable options.

IBRAHIM-AI-10.10

BMW MD is a simple WhatsApp user BOT created by Ibrahim Tech. It allows users to scan pairing codes or QR codes to connect to WhatsApp and deploy the bot on Heroku. The bot can be used to perform various tasks such as sending messages, receiving messages, and managing contacts. It is released under the MIT License and contributions are welcome.

wppconnect

WPPConnect is an open source project developed by the JavaScript community with the aim of exporting functions from WhatsApp Web to the node, which can be used to support the creation of any interaction, such as customer service, media sending, intelligence recognition based on phrases artificial and many other things.

aio-pika

Aio-pika is a wrapper around aiormq for asyncio and humans. It provides a completely asynchronous API, object-oriented API, transparent auto-reconnects with complete state recovery, Python 3.7+ compatibility, transparent publisher confirms support, transactions support, and complete type-hints coverage.

shark-chat-js

Shark Chat is a feature-rich chat application built with Trpc, Tailwind CSS, Ably, Redis, Cloudinary, Drizzle ORM, and Next.js. It allows users to create, update, and delete chat groups, send messages with markdown support, reference messages, embed links, send images/files, have direct messages, manage group members, upload images, receive notifications, use AI-powered features, delete accounts, and switch between light and dark modes. The project is 100% TypeScript and can be played with online or locally after setting up various third-party services.

For similar jobs

slack-machine

Slack Machine is a simple, yet powerful and extendable Slack bot framework. More than just a bot, Slack Machine is a framework that helps you develop your Slack workspace into a ChatOps powerhouse. Slack Machine is built with an intuitive plugin system that lets you build bots quickly, but also allows for easy code organization.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students