aio-pika

AMQP 0.9 client designed for asyncio and humans.

Stars: 1181

Aio-pika is a wrapper around aiormq for asyncio and humans. It provides a completely asynchronous API, object-oriented API, transparent auto-reconnects with complete state recovery, Python 3.7+ compatibility, transparent publisher confirms support, transactions support, and complete type-hints coverage.

README:

.. _documentation: https://aio-pika.readthedocs.org/
.. _adopted official RabbitMQ tutorial: https://aio-pika.readthedocs.io/en/latest/rabbitmq-tutorial/1-introduction.html

.. image:: https://readthedocs.org/projects/aio-pika/badge/?version=latest
    :target: https://aio-pika.readthedocs.org/
    :alt: ReadTheDocs

.. image:: https://coveralls.io/repos/github/mosquito/aio-pika/badge.svg?branch=master
    :target: https://coveralls.io/github/mosquito/aio-pika
    :alt: Coveralls

.. image:: https://github.com/mosquito/aio-pika/workflows/tests/badge.svg
    :target: https://github.com/mosquito/aio-pika/actions?query=workflow%3Atests
    :alt: Github Actions

.. image:: https://img.shields.io/pypi/v/aio-pika.svg
    :target: https://pypi.python.org/pypi/aio-pika/
    :alt: Latest Version

.. image:: https://img.shields.io/pypi/wheel/aio-pika.svg
    :target: https://pypi.python.org/pypi/aio-pika/

.. image:: https://img.shields.io/pypi/pyversions/aio-pika.svg
    :target: https://pypi.python.org/pypi/aio-pika/

.. image:: https://img.shields.io/pypi/l/aio-pika.svg
    :target: https://pypi.python.org/pypi/aio-pika/

A wrapper around aiormq_ for asyncio and humans.

Check out the examples and the tutorial in the documentation_.

If you are a newcomer to RabbitMQ, please start with the `adopted official RabbitMQ tutorial`_.

.. _aiormq: http://github.com/mosquito/aiormq/

.. note:: Since version 5.0.0 this library doesn't use pika as the AMQP connector.
    Versions below 5.0.0 contain or require pika's source code.

.. note:: Version 7.0.0 has breaking API changes; see CHANGELOG.md for migration hints.

- Completely asynchronous API.

- Object oriented API.

- Transparent auto-reconnects with complete state recovery with ``connect_robust`` (e.g. declared queues or exchanges, consuming state and bindings).

- Python 3.7+ compatible.

- For python 3.5 users, aio-pika is available via ``aio-pika<7``.

- Transparent `publisher confirms`_ support.

- `Transactions`_ support.

- Complete type-hints coverage.

.. _Transactions: https://www.rabbitmq.com/semantics.html
.. _publisher confirms: https://www.rabbitmq.com/confirms.html
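To make "complete state recovery" more concrete, here is a toy model of the idea in plain Python. It is not aio-pika's actual implementation: it only shows a client that remembers what it declared and replays those declarations against a fresh connection. The ``FakeBroker`` and ``RecoveringClient`` names are hypothetical and exist only for this illustration.

```python
from typing import List, Set


class FakeBroker:
    """Hypothetical stand-in for a broker connection that starts out empty."""

    def __init__(self) -> None:
        self.queues: Set[str] = set()

    def declare_queue(self, name: str) -> None:
        self.queues.add(name)


class RecoveringClient:
    """Hypothetical client that remembers declarations and replays them."""

    def __init__(self) -> None:
        self.broker = FakeBroker()
        self._declared_queues: List[str] = []

    def declare_queue(self, name: str) -> None:
        # Remember the declaration, then apply it to the live connection.
        self._declared_queues.append(name)
        self.broker.declare_queue(name)

    def reconnect(self) -> None:
        # A fresh connection knows nothing, so replay what was declared before.
        self.broker = FakeBroker()
        for name in self._declared_queues:
            self.broker.declare_queue(name)


client = RecoveringClient()
client.declare_queue("test_queue")
client.reconnect()
print(sorted(client.broker.queues))
```

With ``connect_robust`` this bookkeeping happens behind the scenes, which is why the examples that follow never re-declare anything after a reconnect.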

.. code-block:: shell

    pip install aio-pika

Simple consumer:

.. code-block:: python

    import asyncio

    import aio_pika
    import aio_pika.abc


    async def main() -> None:
        # Connecting with the given parameters is also possible.
        # aio_pika.connect_robust(host="host", login="login", password="password")
        # You can only choose one option to create a connection, url or kw-based params.
        connection = await aio_pika.connect_robust(
            "amqp://guest:guest@127.0.0.1/",
        )

        async with connection:
            queue_name = "test_queue"

            # Creating channel
            channel: aio_pika.abc.AbstractChannel = await connection.channel()

            # Declaring queue
            queue: aio_pika.abc.AbstractQueue = await channel.declare_queue(
                queue_name,
                auto_delete=True
            )

            async with queue.iterator() as queue_iter:
                # Cancel consuming after __aexit__
                async for message in queue_iter:
                    async with message.process():
                        print(message.body)

                        if queue.name in message.body.decode():
                            break


    if __name__ == "__main__":
        asyncio.run(main())
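The comment in the consumer above mentions two ways to describe a connection: a URL or keyword parameters. As a rough illustration of how the two forms relate, the standard-library sketch below splits an AMQP URL into the ``host``, ``login`` and ``password`` keywords named in that comment. The ``amqp_url_to_kwargs`` helper is hypothetical and not part of aio-pika; the fallbacks to ``guest``/``guest``, port 5672 and virtual host ``/`` are assumptions of this sketch.

```python
from typing import Any, Dict
from urllib.parse import unquote, urlsplit


def amqp_url_to_kwargs(url: str) -> Dict[str, Any]:
    """Split an AMQP URL into keyword-style connection parameters."""
    parts = urlsplit(url)
    return {
        "host": parts.hostname,
        "port": parts.port or 5672,  # assumed default AMQP port
        "login": unquote(parts.username or "guest"),
        "password": unquote(parts.password or "guest"),
        # Assumption: an empty path or a bare "/" selects the default vhost "/".
        "virtualhost": unquote(parts.path[1:]) or "/",
    }


print(amqp_url_to_kwargs("amqp://guest:guest@127.0.0.1/"))
```

Whichever form you use, pass only one of them when creating a connection, as the comment in the example says.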

Simple publisher:

.. code-block:: python

    import asyncio

    import aio_pika
    import aio_pika.abc


    async def main() -> None:
        # Explicit type annotation
        connection: aio_pika.RobustConnection = await aio_pika.connect_robust(
            "amqp://guest:guest@127.0.0.1/",
        )

        routing_key = "test_queue"

        channel: aio_pika.abc.AbstractChannel = await connection.channel()

        await channel.default_exchange.publish(
            aio_pika.Message(
                body='Hello {}'.format(routing_key).encode()
            ),
            routing_key=routing_key
        )

        await connection.close()


    if __name__ == "__main__":
        asyncio.run(main())

Get single message example:

.. code-block:: python

    import asyncio

    from aio_pika import connect_robust, Message


    async def main() -> None:
        connection = await connect_robust(
            "amqp://guest:guest@127.0.0.1/",
        )

        queue_name = "test_queue"
        routing_key = "test_queue"

        # Creating channel
        channel = await connection.channel()

        # Declaring exchange
        exchange = await channel.declare_exchange('direct', auto_delete=True)

        # Declaring queue
        queue = await channel.declare_queue(queue_name, auto_delete=True)

        # Binding queue
        await queue.bind(exchange, routing_key)

        await exchange.publish(
            Message(
                bytes('Hello', 'utf-8'),
                content_type='text/plain',
                headers={'foo': 'bar'}
            ),
            routing_key
        )

        # Receiving message
        incoming_message = await queue.get(timeout=5)

        # Confirm message
        await incoming_message.ack()

        await queue.unbind(exchange, routing_key)
        await queue.delete()
        await connection.close()


    if __name__ == "__main__":
        asyncio.run(main())

There are more examples and the RabbitMQ tutorial in the documentation_.

aiormq is a pure Python AMQP client library. It works under the hood of aio-pika and can be used directly when you want to work with the protocol at a low level.

The following examples demonstrate the user API.

Simple consumer:

.. code-block:: python

    import asyncio

    import aiormq


    async def on_message(message):
        """
        on_message doesn't necessarily have to be defined as async.
        Here it is to show that it's possible.
        """
        print(f" [x] Received message {message!r}")
        print(f"Message body is: {message.body!r}")
        print("Before sleep!")
        await asyncio.sleep(5)   # Represents async I/O operations
        print("After sleep!")


    async def main():
        # Perform connection
        connection = await aiormq.connect("amqp://guest:guest@localhost/")

        # Creating a channel
        channel = await connection.channel()

        # Declaring queue
        declare_ok = await channel.queue_declare('helo')
        consume_ok = await channel.basic_consume(
            declare_ok.queue, on_message, no_ack=True
        )


    loop = asyncio.get_event_loop()
    loop.run_until_complete(main())
    loop.run_forever()
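The docstring above notes that ``on_message`` does not have to be a coroutine function. One common way for a dispatcher to accept both kinds of callback, shown here as a generic asyncio sketch and not as aiormq's actual implementation, is to call the callback and await the result only if it turns out to be awaitable. The ``dispatch`` helper and both callbacks are hypothetical names.

```python
import asyncio
import inspect
from typing import Any, Callable, List

received: List[str] = []


def sync_callback(message: bytes) -> None:
    # A plain function: runs to completion as soon as it is called.
    received.append(f"sync:{message!r}")


async def async_callback(message: bytes) -> None:
    await asyncio.sleep(0)  # Represents async I/O operations
    received.append(f"async:{message!r}")


async def dispatch(callback: Callable[[bytes], Any], message: bytes) -> None:
    """Invoke the callback and await its result only when it is awaitable."""
    result = callback(message)
    if inspect.isawaitable(result):
        await result


async def main() -> None:
    await dispatch(sync_callback, b"hello")
    await dispatch(async_callback, b"hello")


asyncio.run(main())
print(received)
```

A synchronous callback should stay short in this pattern, because it blocks the event loop while it runs.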

Simple publisher:

.. code-block:: python

    import asyncio
    from typing import Optional

    import aiormq
    from aiormq.abc import DeliveredMessage


    MESSAGE: Optional[DeliveredMessage] = None


    async def main():
        global MESSAGE

        body = b'Hello World!'

        # Perform connection
        connection = await aiormq.connect("amqp://guest:guest@localhost//")

        # Creating a channel
        channel = await connection.channel()

        declare_ok = await channel.queue_declare("hello", auto_delete=True)

        # Sending the message
        await channel.basic_publish(body, routing_key='hello')
        print(f" [x] Sent {body}")

        MESSAGE = await channel.basic_get(declare_ok.queue)
        print(f" [x] Received message from {declare_ok.queue!r}")


    loop = asyncio.get_event_loop()
    loop.run_until_complete(main())

    assert MESSAGE is not None
    assert MESSAGE.routing_key == "hello"
    assert MESSAGE.body == b'Hello World!'

PATIO is an acronym for Python Asynchronous Tasks for AsyncIO, an easily extensible library for distributed task execution, like Celery, but targeting asyncio as its main design approach.

patio-rabbitmq lets you use RPC over RabbitMQ services with an extremely simple implementation:

.. code-block:: python

    from patio import Registry, ThreadPoolExecutor
    from patio_rabbitmq import RabbitMQBroker

    rpc = Registry(project="patio-rabbitmq", auto_naming=False)


    @rpc("sum")
    def sum_numbers(*args):
        # Registered under the name "sum"; the function itself is named
        # sum_numbers so that it does not shadow the built-in sum().
        return sum(args)


    async def main():
        async with ThreadPoolExecutor(rpc, max_workers=16) as executor:
            async with RabbitMQBroker(
                executor, amqp_url="amqp://guest:guest@localhost/",
            ) as broker:
                await broker.join()

And the caller side might be written like this:

.. code-block:: python

    import asyncio

    from patio import NullExecutor, Registry
    from patio_rabbitmq import RabbitMQBroker


    async def main():
        async with NullExecutor(Registry(project="patio-rabbitmq")) as executor:
            async with RabbitMQBroker(
                executor, amqp_url="amqp://guest:guest@localhost/",
            ) as broker:
                # Call the function registered as "sum" on the server side
                print(await asyncio.gather(
                    *[
                        broker.call("sum", i, i, timeout=1) for i in range(10)
                    ]
                ))

FastStream is a powerful and easy-to-use Python library for building asynchronous services that interact with event streams.

If you don't need to dive deep into RabbitMQ details, you can use the higher-level FastStream interfaces:

.. code-block:: python

    from faststream import FastStream
    from faststream.rabbit import RabbitBroker

    broker = RabbitBroker("amqp://guest:guest@localhost:5672/")
    app = FastStream(broker)


    @broker.subscriber("user")
    async def user_created(user_id: int):
        assert isinstance(user_id, int)
        return f"user-{user_id}: created"


    @app.after_startup
    async def pub_smth():
        assert (
            await broker.publish(1, "user", rpc=True)
        ) == "user-1: created"

FastStream also validates messages with pydantic, generates your project's AsyncAPI spec, and supports in-memory testing, RPC calls, and more.

In fact, it is a high-level wrapper on top of aio-pika, so you can use both of these libraries' advantages at the same time.

Socket.IO_ is a transport protocol that enables real-time bidirectional event-based communication between clients (typically, though not always, web browsers) and a server. The python-socketio_ package provides Python implementations of both, each with standard and asyncio variants.

This package is also suitable for building messaging services over RabbitMQ via the aio-pika adapter:

.. code-block:: python

    import socketio
    from aiohttp import web

    sio = socketio.AsyncServer(client_manager=socketio.AsyncAioPikaManager())
    app = web.Application()
    sio.attach(app)


    @sio.event
    async def chat_message(sid, data):
        print("message ", data)


    if __name__ == '__main__':
        web.run_app(app)

A client can call ``chat_message`` in the following way:

.. code-block:: python

    import asyncio
    import socketio

    sio = socketio.AsyncClient()


    async def main():
        await sio.connect('http://localhost:8080')
        await sio.emit('chat_message', {'response': 'my response'})


    if __name__ == '__main__':
        asyncio.run(main())

Taskiq is an asynchronous distributed task queue for Python. The project takes inspiration from big projects such as Celery and Dramatiq, but taskiq can send and run both sync and async functions.

The library also provides an aio-pika broker for running tasks.

.. code-block:: python

    from taskiq_aio_pika import AioPikaBroker

    broker = AioPikaBroker()


    @broker.task
    async def test() -> None:
        print("nothing")


    async def main():
        await broker.startup()
        await test.kiq()

With over 25 million downloads, Rasa Open Source is the most popular open source framework for building chat and voice-based AI assistants.

With Rasa, you can build contextual assistants on:

- Facebook Messenger

- Slack

- Google Hangouts

- Webex Teams

- Microsoft Bot Framework

- Rocket.Chat

- Mattermost

- Telegram

- Twilio

You can also build them on your own custom conversational channels, or as voice assistants such as:

- Alexa Skills

- Google Home Actions

Rasa helps you build contextual assistants capable of having layered conversations with lots of back-and-forth. In order for a human to have a meaningful exchange with a contextual assistant, the assistant needs to be able to use context to build on things that were previously discussed – Rasa enables you to build assistants that can do this in a scalable way.

And it also uses aio-pika to interact with RabbitMQ deep inside!

This software follows `Semantic Versioning`_.

Setting up development environment

Clone the project:

.. code-block:: shell

    git clone https://github.com/mosquito/aio-pika.git
    cd aio-pika

Create a new virtualenv for aio-pika_:

.. code-block:: shell

    python3 -m venv env
    source env/bin/activate

Install all requirements for aio-pika_:

.. code-block:: shell

    pip install -e '.[develop]'

Running Tests

NOTE: In order to run the tests locally you need to run a RabbitMQ instance with default user/password (guest/guest) and port (5672).

The Makefile provides a command to run an appropriate RabbitMQ Docker image:

.. code-block:: bash

    make rabbitmq

To test just run:

.. code-block:: bash

    make test

Editing Documentation

To iterate quickly on the documentation live in your browser, try:

.. code-block:: bash

    nox -s docs -- serve

Creating Pull Requests

Please feel free to create pull requests, but you should describe your use cases and add some examples.

Changes should follow a few simple rules:

- When your changes break the public API, you must increase the major version.

- When your changes are safe for the public API (e.g. added an argument with a default value), increase the minor version, as Semantic Versioning prescribes.

- You have to add test cases (see the ``tests/`` folder).

- You must add docstrings.

- Feel free to add yourself to the `"thank's to" section`_.
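As an illustration of the first two rules, the sketch below computes the next version number under Semantic Versioning: a breaking change bumps the major component, a backward-compatible addition bumps the minor one, and anything else bumps the patch. The ``next_version`` helper is hypothetical and is not part of the project's tooling.

```python
def next_version(current: str, *, breaking: bool = False, feature: bool = False) -> str:
    """Return the next MAJOR.MINOR.PATCH version for the given kind of change."""
    major, minor, patch = (int(part) for part in current.split("."))
    if breaking:
        # Public API broken: bump major, reset minor and patch.
        return f"{major + 1}.0.0"
    if feature:
        # Backward-compatible addition: bump minor, reset patch.
        return f"{major}.{minor + 1}.0"
    # Backward-compatible fix: bump patch only.
    return f"{major}.{minor}.{patch + 1}"


print(next_version("6.8.2", breaking=True))
print(next_version("7.0.0", feature=True))
print(next_version("7.1.0"))
```

Adding an argument with a default value, the example given in the rules above, would be a ``feature=True`` change in this model.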

.. _"thank's to" section: https://github.com/mosquito/aio-pika/blob/master/docs/source/index.rst#thanks-for-contributing
.. _Semantic Versioning: http://semver.org/
.. _aio-pika: https://github.com/mosquito/aio-pika/
.. _faststream: https://github.com/airtai/faststream
.. _patio: https://github.com/patio-python/patio
.. _patio-rabbitmq: https://github.com/patio-python/patio-rabbitmq
.. _Socket.IO: https://socket.io/
.. _python-socketio: https://python-socketio.readthedocs.io/en/latest/intro.html
.. _taskiq: https://github.com/taskiq-python/taskiq
.. _taskiq-aio-pika: https://github.com/taskiq-python/taskiq-aio-pika
.. _Rasa: https://rasa.com/docs/rasa/


Similar Open Source Tools

aio-pika

Aio-pika is a wrapper around aiormq for asyncio and humans. It provides a completely asynchronous API, object-oriented API, transparent auto-reconnects with complete state recovery, Python 3.7+ compatibility, transparent publisher confirms support, transactions support, and complete type-hints coverage.

aiotdlib

aiotdlib is a Python asyncio Telegram client based on TDLib. It provides automatic generation of types and functions from tl schema, validation, good IDE type hinting, and high-level API methods for simpler work with tdlib. The package includes prebuilt TDLib binaries for macOS (arm64) and Debian Bullseye (amd64). Users can use their own binary by passing `library_path` argument to `Client` class constructor. Compatibility with other versions of the library is not guaranteed. The tool requires Python 3.9+ and users need to get their `api_id` and `api_hash` from Telegram docs for installation and usage.

acte

Acte is a framework designed to build GUI-like tools for AI Agents. It aims to address the issues of cognitive load and freedom degrees when interacting with multiple APIs in complex scenarios. By providing a graphical user interface (GUI) for Agents, Acte helps reduce cognitive load and constraints interaction, similar to how humans interact with computers through GUIs. The tool offers APIs for starting new sessions, executing actions, and displaying screens, accessible via HTTP requests or the SessionManager class.

aiomysql

aiomysql is a driver for accessing a MySQL database from the asyncio framework. It is based on PyMySQL and aims to provide the same API and functionality. Internally, aiomysql is a modified version of PyMySQL with async IO calls. It supports SQLAlchemy integration and offers a familiar experience for aiopg users.

volga

Volga is a general purpose real-time data processing engine in Python for modern AI/ML systems. It aims to be a Python-native alternative to Flink/Spark Streaming with extended functionality for real-time AI/ML workloads. It provides a hybrid push+pull architecture, Entity API for defining data entities and feature pipelines, DataStream API for general data processing, and customizable data connectors. Volga can run on a laptop or a distributed cluster, making it suitable for building custom real-time AI/ML feature platforms or general data pipelines without relying on third-party platforms.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

aioshelly

Aioshelly is an asynchronous library designed to control Shelly devices. It is currently under development and requires Python version 3.11 or higher, along with dependencies like bluetooth-data-tools, aiohttp, and orjson. The library provides examples for interacting with Gen1 devices using CoAP protocol and Gen2/Gen3 devices using RPC and WebSocket protocols. Users can easily connect to Shelly devices, retrieve status information, and perform various actions through the provided APIs. The repository also includes example scripts for quick testing and usage guidelines for contributors to maintain consistency with the Shelly API.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

funcchain

Funcchain is a Python library that allows you to easily write cognitive systems by leveraging Pydantic models as output schemas and LangChain in the backend. It provides a seamless integration of LLMs into your apps, utilizing OpenAI Functions or LlamaCpp grammars (json-schema-mode) for efficient structured output. Funcchain compiles the Funcchain syntax into LangChain runnables, enabling you to invoke, stream, or batch process your pipelines effortlessly.

pocketgroq

PocketGroq is a tool that provides advanced functionalities for text generation, web scraping, web search, and AI response evaluation. It includes features like an Autonomous Agent for answering questions, web crawling and scraping capabilities, enhanced web search functionality, and flexible integration with Ollama server. Users can customize the agent's behavior, evaluate responses using AI, and utilize various methods for text generation, conversation management, and Chain of Thought reasoning. The tool offers comprehensive methods for different tasks, such as initializing RAG, error handling, and tool management. PocketGroq is designed to enhance development processes and enable the creation of AI-powered applications with ease.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

LightRAG

LightRAG is a repository hosting the code for LightRAG, a system that supports seamless integration of custom knowledge graphs, Oracle Database 23ai, Neo4J for storage, and multiple file types. It includes features like entity deletion, batch insert, incremental insert, and graph visualization. LightRAG provides an API server implementation for RESTful API access to RAG operations, allowing users to interact with it through HTTP requests. The repository also includes evaluation scripts, code for reproducing results, and a comprehensive code structure.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

flutter_gen_ai_chat_ui

A modern, high-performance Flutter chat UI kit for building beautiful messaging interfaces. Features streaming text animations, markdown support, file attachments, and extensive customization options. Perfect for AI assistants, customer support, team chat, social messaging, and any conversational application. Production Ready, Cross-Platform, High Performance, Fully Customizable. Core features include dark/light mode, word-by-word streaming with animations, enhanced markdown support, speech-to-text integration, responsive layout, RTL language support, high performance message handling, improved pagination support. AI-specific features include customizable welcome message, example questions component, persistent example questions, AI typing indicators, streaming markdown rendering. New AI Actions System with function calling support, generative UI, human-in-the-loop confirmation dialogs, real-time status updates, type-safe parameters, event streaming, error handling. UI components include customizable message bubbles, custom bubble builder, multiple input field styles, loading indicators, smart scroll management, enhanced theme customization, better code block styling.

agent-sdk-go

Agent Go SDK is a powerful Go framework for building production-ready AI agents that seamlessly integrates memory management, tool execution, multi-LLM support, and enterprise features into a flexible, extensible architecture. It offers core capabilities like multi-model intelligence, modular tool ecosystem, advanced memory management, and MCP integration. The SDK is enterprise-ready with built-in guardrails, complete observability, and support for enterprise multi-tenancy. It provides a structured task framework, declarative configuration, and zero-effort bootstrapping for development experience. The SDK supports environment variables for configuration and includes features like creating agents with YAML configuration, auto-generating agent configurations, using MCP servers with an agent, and CLI tool for headless usage.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting, and observing with agents. Users can access Letta via REST API, Python, Typescript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

For similar tasks

aio-pika

Aio-pika is a wrapper around aiormq for asyncio and humans. It provides a completely asynchronous API, object-oriented API, transparent auto-reconnects with complete state recovery, Python 3.7+ compatibility, transparent publisher confirms support, transactions support, and complete type-hints coverage.

sdnext

SD.Next is an Image Diffusion implementation with advanced features. It offers multiple UI options, diffusion models, and built-in controls for text, image, batch, and video processing. The tool is multiplatform, supporting Windows, Linux, MacOS, nVidia, AMD, IntelArc/IPEX, DirectML, OpenVINO, ONNX+Olive, and ZLUDA. It provides optimized processing with the latest torch developments, including model compile, quantize, and compress functionalities. SD.Next also features Interrogate/Captioning with various models, queue management, automatic updates, and mobile compatibility.

KAI-Scheduler

KAI Scheduler is a robust, efficient, and scalable Kubernetes scheduler optimized for GPU resource allocation in AI and machine learning workloads. It supports batch scheduling, bin packing, spread scheduling, workload priority, hierarchical queues, resource distribution, fairness policies, workload consolidation, elastic workloads, dynamic resource allocation, GPU sharing, and works in both cloud and on-premise environments.

Minimalistic-Comfy-Wrapper-WebUI

Minimalistic Comfy Wrapper WebUI is a user interface extension for ComfyUI that provides an additional inference-focused UI. It dynamically adapts to workflows, allowing users to change node titles and refresh the UI. The tool ensures stability by storing data in the browser's local storage, supports working with the same workflows in Comfy and the webui, offers better queues management, prompt presets, batch support, a minimalist image editor, and mobile-friendly UI. Users can install it from the ComfyUI manager and customize node titles for input and output nodes. The tool is designed for users who prefer a simpler interface for inference tasks and want to work with ComfyUI workflows from a different perspective.

venom

Venom is a high-performance system developed with JavaScript to create a bot for WhatsApp, support for creating any interaction, such as customer service, media sending, sentence recognition based on artificial intelligence and all types of design architecture for WhatsApp.

IBRAHIM-AI-10.10

BMW MD is a simple WhatsApp user BOT created by Ibrahim Tech. It allows users to scan pairing codes or QR codes to connect to WhatsApp and deploy the bot on Heroku. The bot can be used to perform various tasks such as sending messages, receiving messages, and managing contacts. It is released under the MIT License and contributions are welcome.

wppconnect

WPPConnect is an open source project developed by the JavaScript community with the aim of exporting functions from WhatsApp Web to the node, which can be used to support the creation of any interaction, such as customer service, media sending, intelligence recognition based on phrases artificial and many other things.

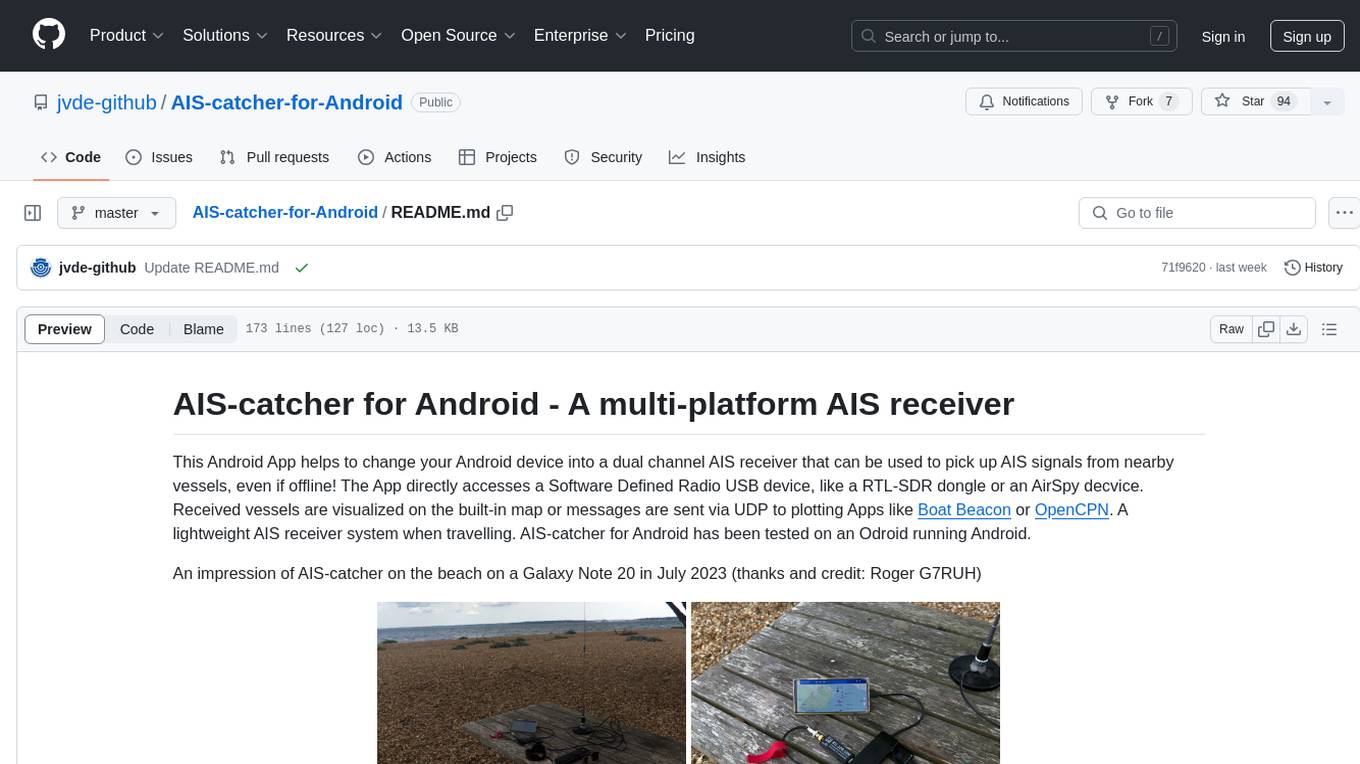

AIS-catcher-for-Android

AIS-catcher for Android is a multi-platform AIS receiver app that transforms your Android device into a dual channel AIS receiver. It directly accesses a Software Defined Radio USB device to pick up AIS signals from nearby vessels, visualizing them on a built-in map or sending messages via UDP to plotting apps. The app requires a RTL-SDR dongle or an AirSpy device, a simple antenna, an Android device with USB connector, and an OTG cable. It is designed for research and educational purposes under the GPL license, with no warranty. Users are responsible for prudent use and compliance with local regulations. The app is not intended for navigation or safety purposes.

For similar jobs

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

generative-ai-cdk-constructs

The AWS Generative AI Constructs Library is an open-source extension of the AWS Cloud Development Kit (AWS CDK) that provides multi-service, well-architected patterns for quickly defining solutions in code to create predictable and repeatable infrastructure, called constructs. The goal of AWS Generative AI CDK Constructs is to help developers build generative AI solutions using pattern-based definitions for their architecture. The patterns defined in AWS Generative AI CDK Constructs are high level, multi-service abstractions of AWS CDK constructs that have default configurations based on well-architected best practices. The library is organized into logical modules using object-oriented techniques to create each architectural pattern model.

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

dify-helm

Deploy langgenius/dify, an LLM based chat bot app on kubernetes with helm chart.