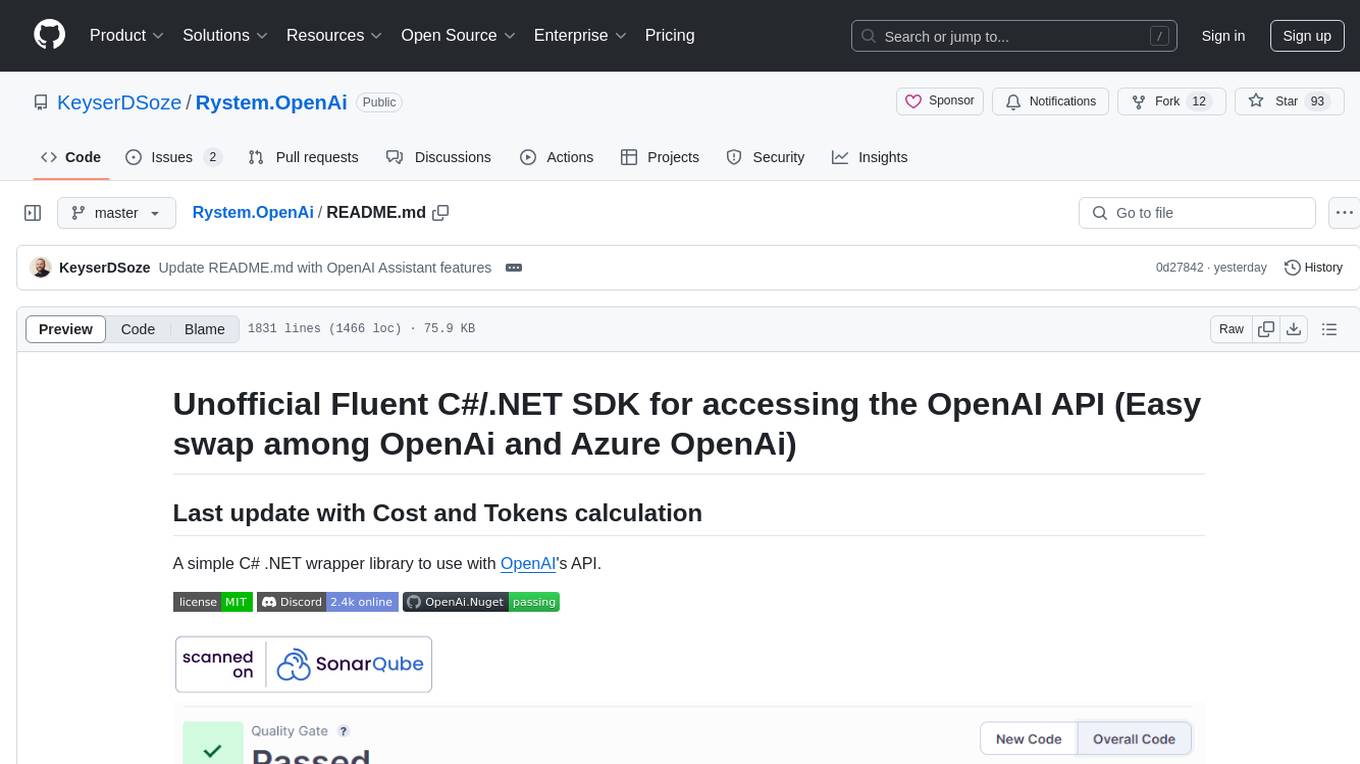

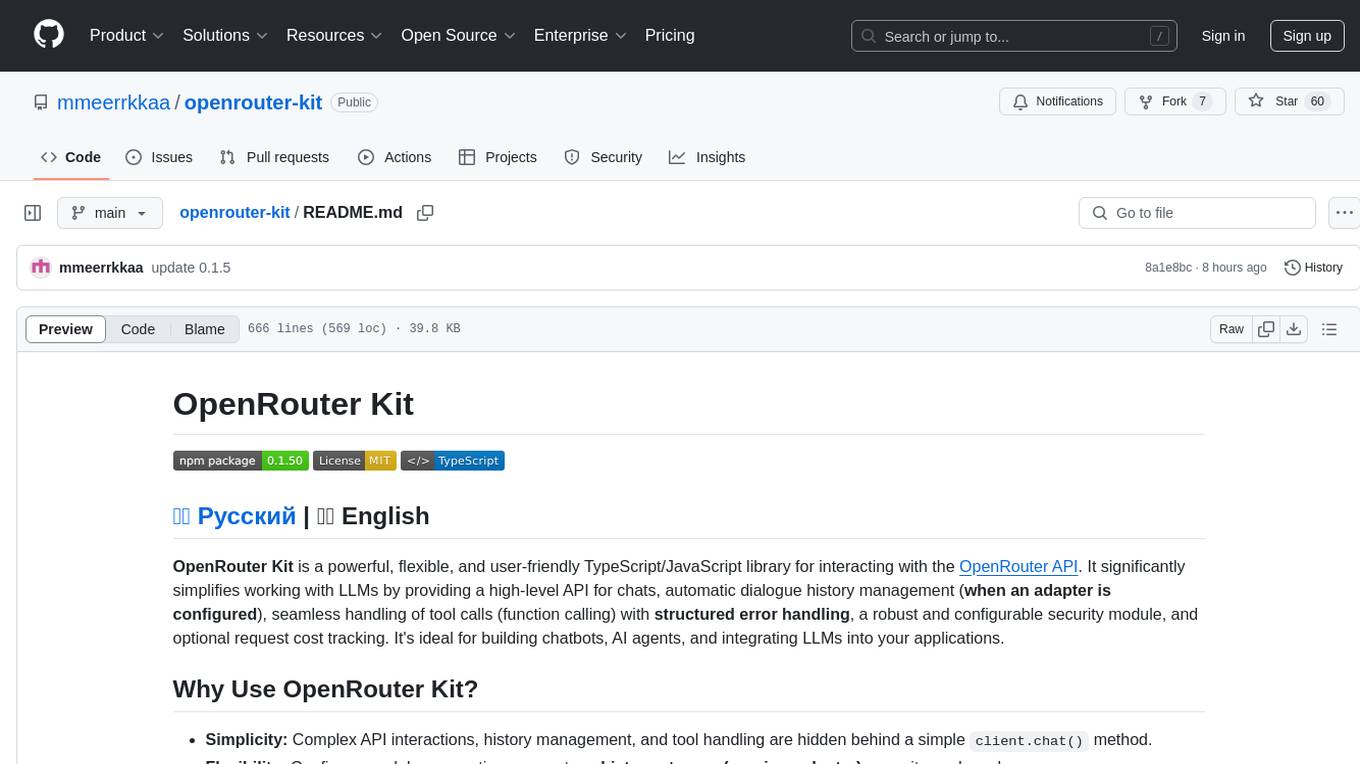

openrouter-kit

Powerful & flexible TypeScript SDK for the OpenRouter API. Streamlines building LLM applications with easy chat, adapter-based history, secure tool calling (function calling), cost tracking, and plugin support.




OpenRouter Kit is a powerful, flexible, and user-friendly TypeScript/JavaScript library for interacting with the OpenRouter API. It significantly simplifies working with LLMs by providing a high-level API for chats, automatic dialogue history management (when an adapter is configured), seamless handling of tool calls (function calling) with structured error handling, a robust and configurable security module, and optional request cost tracking. It's ideal for building chatbots, AI agents, and integrating LLMs into your applications.

Why use OpenRouter Kit?

- Simplicity: Complex API interactions, history management, and tool handling are hidden behind a simple `client.chat()` method.
- Flexibility: Configure models, generation parameters, history storage (requires an adapter), security, and much more.
- Security: The built-in security module helps protect your applications and users when using tools.
- Extensibility: Use plugins and middleware to add custom logic without modifying the library core.
- Reliability: Fully typed with TypeScript, with predictable error handling (including structured tool errors) and resource management.

Key features:

- 🤖 Universal Chat: A simple and powerful API (`client.chat`) for interacting with any model available via OpenRouter.
  - Returns a structured `ChatCompletionResult` object with the content (`content`), token usage info (`usage`), model used (`model`), number of tool calls (`toolCallsCount`), finish reason (`finishReason`), execution time (`durationMs`), request ID (`id`), and calculated cost (`cost`, optional).

- 📜 History Management (via Adapters): Requires `historyAdapter` configuration. Automatically loads, saves, and (potentially) trims dialogue history for each user or group when `user` is passed to `client.chat()`.
  - Flexible history system based on adapters (`IHistoryStorage`).
  - Includes built-in adapters for memory (`MemoryHistoryStorage`) and disk (`DiskHistoryStorage`, JSON files), both exported from the main module.
  - Easily plug in custom adapters (Redis, MongoDB, API, etc.) or use the provided plugin (`createRedisHistoryPlugin`).
  - Configure cache TTL and cleanup intervals via client options (`historyTtl`, `historyCleanupInterval`). History limit management is delegated to the adapter.

- 🛠️ Tool Handling (Function Calling): Seamless integration for model-invoked calls to your functions.
  - Define tools using the `Tool` interface and JSON Schema for argument validation.
  - Automatic argument parsing, schema validation, and security checks.
  - Execution of your `execute` functions with context (`ToolContext`, including `userInfo`).
  - Automatic sending of results back to the model to get the final response.
  - Structured Tool Error Handling: Errors occurring during parsing, validation, security checks, or tool execution are formatted as a JSON string (`{"errorType": "...", "errorMessage": "...", "details": ...}`) and sent back to the model in the `role: 'tool'` message, potentially allowing the LLM to understand and react to the issue better.
  - Configurable limit on the maximum number of tool call rounds (`maxToolCalls`) to prevent infinite loops.

- 🛡️ Security Module: Comprehensive and configurable protection for your applications.
  - Authentication: Built-in JWT support (generation, validation, caching) via `AuthManager`. Easily extensible for other methods (`api-key`, `custom`).
  - Access Control (ACL): Flexible configuration of tool access (`AccessControlManager`) based on roles (`roles`), API keys (`allowedApiKeys`), permissions (`scopes`), or explicit rules (`allow`/`deny`), with a default policy (`deny-all`/`allow-all`).
  - Rate Limiting: Apply limits (`RateLimitManager`) on tool calls for users or roles with configurable periods and limits. Important: the default `RateLimitManager` implementation stores state in memory and is not suitable for distributed systems (multiple processes/servers). Such scenarios require custom adapters or plugins backed by external storage (e.g., Redis).
  - Argument Sanitization: Checks (`ArgumentSanitizer`) tool arguments for potentially dangerous patterns (SQLi, XSS, command injection, etc.) using global, tool-specific, and custom rules. Supports an audit-only mode (`auditOnlyMode`).
  - Event System: Subscribe to security events (`access:denied`, `ratelimit:exceeded`, `security:dangerous_args`, `token:invalid`, `user:authenticated`, etc.) for monitoring and logging.

- 📈 Cost Tracking (optional):
  - Automatic calculation of the approximate cost of each `chat()` call based on token usage (`usage`) and OpenRouter model pricing.
  - Periodic background updates of model prices from the OpenRouter API (`/models`).
  - A `getCreditBalance()` method to check your current OpenRouter credit balance.
  - Access to cached prices via `getModelPrices()`.

- ⚙️ Flexible Configuration: Configure the API key, default model, endpoint (`apiEndpoint` for chat; the base URL for other requests is determined automatically), timeouts, proxy, headers (`HTTP-Referer`, `X-Title`), fallback models (`modelFallbacks`), response format (`responseFormat`), tool call limit (`maxToolCalls`), cost tracking (`enableCostTracking`), history adapter (`historyAdapter`), and many other parameters via `OpenRouterConfig`.
- 💡 Typing: Fully written in TypeScript, providing strong typing, autocompletion, and type checking during development.

- 🚦 Error Handling: A clear hierarchy of custom errors (`APIError`, `ValidationError`, `SecurityError`, `RateLimitError`, `ToolError`, `ConfigError`, etc.) inheriting from `OpenRouterError`, with codes (`ErrorCode`) and details for easy handling. Includes a `mapError` function for normalizing errors.
- 📝 Logging: A built-in flexible logger (`Logger`) with prefix support and a debug mode (`debug`).
- ✨ Ease of Use: A high-level API that hides the complexity of the underlying interactions with LLMs, history, and tools.

- 🧹 Resource Management: A `client.destroy()` method for proper resource cleanup (timers, caches, event listeners), preventing leaks in long-running applications.

- 🧩 Plugin System: Extend client capabilities without modifying the core.
  - Support for external and custom plugins via `client.use(plugin)`.
  - Plugins can add middleware, replace managers (history, security, cost), subscribe to events, and extend the client API.

- 🔗 Middleware Chain: Flexible request and response processing before and after API calls.
  - Add middleware functions via `client.useMiddleware(fn)`.
  - Middleware can modify requests (`ctx.request`) and responses (`ctx.response`), and implement auditing, access control, logging, cost limiting, caching, and more.

Installation:

```bash
npm install openrouter-kit
# or
yarn add openrouter-kit
# or
pnpm add openrouter-kit
```

TypeScript:

```typescript
import { OpenRouterClient, MemoryHistoryStorage } from 'openrouter-kit'; // Import directly

const client = new OpenRouterClient({
  apiKey: process.env.OPENROUTER_API_KEY || 'sk-or-v1-...',
  // !!! IMPORTANT: Provide an adapter to enable history !!!
  historyAdapter: new MemoryHistoryStorage(), // Use the imported class
  enableCostTracking: true,
  debug: false,
});

async function main() {
  try {
    console.log('Sending request...');
    const result = await client.chat({
      prompt: 'Say hi to the world!',
      model: 'google/gemini-2.0-flash-001',
      user: 'test-user-1', // User ID for history tracking
    });

    console.log('--- Result ---');
    console.log('Model Response:', result.content);
    console.log('Token Usage:', result.usage);
    console.log('Model Used:', result.model);
    console.log('Tool Calls Count:', result.toolCallsCount);
    console.log('Finish Reason:', result.finishReason);
    console.log('Duration (ms):', result.durationMs);
    if (result.cost !== null) {
      console.log('Estimated Cost (USD):', result.cost.toFixed(8));
    }
    console.log('Request ID:', result.id);

    console.log('\nChecking balance...');
    const balance = await client.getCreditBalance();
    console.log(`Credit Balance: Used $${balance.usage.toFixed(4)} of $${balance.limit.toFixed(2)}`);

    // Get history (works because historyAdapter was provided)
    const historyManager = client.getHistoryManager();
    if (historyManager) {
      // The key is generated internally by the client; the format is shown here for illustration
      const historyKey = `user:test-user-1`;
      const history = await historyManager.getHistory(historyKey);
      console.log(`\nMessages stored in history for ${historyKey}: ${history.length}`);
    }
  } catch (error: any) {
    console.error(`\n--- Error ---`);
    console.error(`Message: ${error.message}`);
    if (error.code) console.error(`Error Code: ${error.code}`);
    if (error.statusCode) console.error(`HTTP Status: ${error.statusCode}`);
    if (error.details) console.error(`Details:`, error.details);
    // console.error(error.stack);
  } finally {
    console.log('\nShutting down and releasing resources...');
    await client.destroy();
    console.log('Resources released.');
  }
}

main();
```

JavaScript (CommonJS):

```javascript
const { OpenRouterClient, MemoryHistoryStorage } = require("openrouter-kit"); // Destructure from main export

const client = new OpenRouterClient({
  apiKey: process.env.OPENROUTER_API_KEY || 'sk-or-v1-...',
  // !!! IMPORTANT: Provide an adapter to enable history !!!
  historyAdapter: new MemoryHistoryStorage(), // Use the imported class
  enableCostTracking: true,
});

async function main() {
  try {
    const result = await client.chat({
      prompt: 'Hello, world!',
      user: 'commonjs-user' // Specify user for history saving
    });
    console.log('Model Response:', result.content);
    console.log('Usage:', result.usage);
    console.log('Cost:', result.cost);

    // Get history
    const historyManager = client.getHistoryManager();
    if (historyManager) {
      // The key is generated internally; the format is shown here for illustration
      const historyKey = 'user:commonjs-user';
      const history = await historyManager.getHistory(historyKey);
      console.log(`\nHistory for '${historyKey}': ${history.length} messages`);
    }
  } catch (error) {
    console.error(`Error: ${error.message}`, error.details || error);
  } finally {
    await client.destroy();
  }
}

main();
```

Example (taxi bot): the following example demonstrates using dialogue history and tool calling. Note the required `historyAdapter` and the corresponding `require`.

```javascript
// taxi-bot.js (CommonJS)
const { OpenRouterClient, MemoryHistoryStorage } = require("openrouter-kit"); // Destructure
const readline = require('readline').createInterface({
  input: process.stdin,
  output: process.stdout
});

// Example proxy config (if needed)
// const proxyConfig = {
//   host: "",
//   port: "",
//   user: "",
//   pass: "",
// };

const client = new OpenRouterClient({
  apiKey: process.env.OPENROUTER_API_KEY || "sk-or-v1-...", // Replace!
  model: "google/gemini-2.0-flash-001",
  // !!! IMPORTANT: Provide an adapter to enable history !!!
  historyAdapter: new MemoryHistoryStorage(), // Use imported class
  // proxy: proxyConfig,
  enableCostTracking: true,
  debug: false, // Set true for verbose logs
  // security: { /* ... */ } // Security config can be added here
});

let orderAccepted = false;

// --- Tool Definitions ---
const taxiTools = [
  {
    type: "function",
    function: {
      name: "estimateRideCost",
      description: "Estimates the cost of a taxi ride between two addresses.",
      parameters: {
        type: "object",
        properties: {
          from: { type: "string", description: "Pickup address (e.g., '1 Lenin St, Moscow')" },
          to: { type: "string", description: "Destination address (e.g., '10 Tverskaya St, Moscow')" }
        },
        required: ["from", "to"]
      },
    },
    // Executable function for the tool
    execute: async (args) => {
      console.log(`[Tool estimateRideCost] Calculating cost from ${args.from} to ${args.to}...`);
      // Simulate cost calculation
      const cost = Math.floor(Math.random() * 900) + 100;
      console.log(`[Tool estimateRideCost] Calculated cost: ${cost} RUB`);
      // Return an object to be serialized to JSON
      return { estimatedCost: cost, currency: "RUB" };
    }
  },
  {
    type: "function",
    function: {
      name: "acceptOrder",
      description: "Accepts and confirms a taxi order, assigns a driver.",
      parameters: {
        type: "object",
        properties: {
          from: { type: "string", description: "Confirmed pickup address" },
          to: { type: "string", description: "Confirmed destination address" },
          estimatedCost: { type: "number", description: "Approximate ride cost (if known)" }
        },
        required: ["from", "to"]
      },
    },
    // Executable function with context access
    execute: async (args, context) => {
      console.log(`[Tool acceptOrder] Accepting order from ${args.from} to ${args.to}...`);
      // Example of using context (if security is configured)
      console.log(`[Tool acceptOrder] Order initiated by user: ${context?.userInfo?.userId || 'anonymous'}`);
      // Simulate driver assignment
      const driverNumber = Math.floor(Math.random() * 100) + 1;
      orderAccepted = true; // Set flag to end the loop
      // Return a string for the model to relay to the user
      return `Order successfully accepted! Driver #${driverNumber} has been assigned and will arrive shortly at ${args.from}. Destination: ${args.to}.`;
    }
  }
];

function askQuestion(query) {
  return new Promise((resolve) => {
    readline.question(query, (answer) => {
      resolve(answer);
    });
  });
}

const systemPrompt = `You are a friendly and efficient taxi service operator named "Kit". Your task is to help the customer book a taxi.
1. Clarify the pickup address ('from') and destination address ('to') if the customer hasn't provided them. Be polite.
2. Once the addresses are known, you MUST use the 'estimateRideCost' tool to inform the customer of the approximate cost.
3. Wait for the customer to confirm they accept the cost and are ready to order (e.g., with words like "book it", "okay", "yes", "sounds good").
4. After customer confirmation, use the 'acceptOrder' tool, passing it the 'from' and 'to' addresses.
5. After calling 'acceptOrder', inform the customer of the result returned by the tool.
6. Do not invent driver numbers or order statuses yourself; rely on the response from the 'acceptOrder' tool.
7. If the user asks something unrelated to booking a taxi, politely steer the conversation back to the topic.`;

async function chatWithTaxiBot() {
  const userId = `taxi-user-${Date.now()}`;
  console.log(`\nKit Bot: Hello! I'm your virtual assistant... (Session ID: ${userId})`);

  try {
    while (!orderAccepted) {
      const userMessage = await askQuestion("You: ");
      if (userMessage.toLowerCase() === 'exit' || userMessage.toLowerCase() === 'quit') {
        console.log("Kit Bot: Thank you for contacting us! Goodbye.");
        break;
      }

      console.log("Kit Bot: One moment, processing your request...");
      // Pass user: userId; history is managed automatically
      // because historyAdapter was provided
      const result = await client.chat({
        user: userId, // Key for history
        prompt: userMessage,
        systemPrompt: systemPrompt,
        tools: taxiTools, // Provide available tools
        temperature: 0.5,
        maxToolCalls: 5 // Limit tool call cycles
      });

      // Output the assistant's final response
      console.log(`\nKit Bot: ${result.content}\n`);

      // Output debug info if enabled
      if (client.isDebugMode()) {
        console.log(`[Debug] Model: ${result.model}, Tool Calls: ${result.toolCallsCount}, Cost: ${result.cost !== null ? '$' + result.cost.toFixed(8) : 'N/A'}, Reason: ${result.finishReason}`);
      }

      // Check the flag set by the acceptOrder tool
      if (orderAccepted) {
        console.log("Kit Bot: If you have any more questions, I'm here to help!");
        // Optionally break here if the conversation should end after ordering
      }
    }
  } catch (error) {
    console.error("\n--- An Error Occurred ---");
    // Use instanceof to check the error type
    if (error instanceof Error) {
      console.error(`Type: ${error.constructor.name}`);
      console.error(`Message: ${error.message}`);
      // Library errors carry extra fields; plain JavaScript can read them directly
      if (error.code) console.error(`Code: ${error.code}`);
      if (error.statusCode) console.error(`Status: ${error.statusCode}`);
      if (error.details) console.error(`Details:`, error.details);
    } else {
      console.error("Unknown error:", error);
    }
  } finally {
    readline.close();
    await client.destroy(); // Release resources
    console.log("\nClient stopped. Session ended.");
  }
}

chatWithTaxiBot();
```

`OpenRouterClient` is the main class for interacting with the library.

Configuration (`OpenRouterConfig`): an object passed when creating the client (`new OpenRouterClient(config)`). Key fields:

- `apiKey` (string, required): Your OpenRouter API key.
- `apiEndpoint?` (string): Overrides the chat completions API endpoint URL (default: `https://openrouter.ai/api/v1/chat/completions`).
- `model?` (string): Default model identifier for requests.
- `debug?` (boolean): Enables detailed logging (default: `false`).
- `proxy?` (string | object): HTTP/HTTPS proxy settings.
- `referer?` (string): Value for the `HTTP-Referer` header.
- `title?` (string): Value for the `X-Title` header.
- `axiosConfig?` (object): Additional configuration passed directly to Axios.
- `historyAdapter?` (`IHistoryStorage`): Required for history management. An instance of a history storage adapter (e.g., `new MemoryHistoryStorage()`).
- `historyTtl?` (number): Time-to-live (TTL) for entries in the `UnifiedHistoryManager` cache, in milliseconds.
- `historyCleanupInterval?` (number): Interval for cleaning expired entries from the `UnifiedHistoryManager` cache, in milliseconds.
- `providerPreferences?` (object): Settings specific to OpenRouter model providers.
- `modelFallbacks?` (string[]): List of fallback models to try if the primary model fails.
- `responseFormat?` (`ResponseFormat | null`): Default response format for all requests.
- `maxToolCalls?` (number): Default maximum number of tool call cycles per `chat()` invocation (default: 10).
- `strictJsonParsing?` (boolean): Whether to throw an error on an invalid JSON response when JSON format was requested (default: `false`, in which case `null` is returned instead).
- `security?` (`SecurityConfig`): Configuration for the security module (uses the base `SecurityConfig` type from `./types`).
- `enableCostTracking?` (boolean): Enables cost tracking (default: `false`).
- `priceRefreshIntervalMs?` (number): Interval for refreshing model prices (default: 6 hours).
- `initialModelPrices?` (object): Initial model prices, provided to avoid the first price fetch.
- Deprecated fields (ignored if `historyAdapter` is present): `historyStorage`, `chatsFolder`, `maxHistoryEntries`, `historyAutoSave`.
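The `modelFallbacks` idea can be pictured as trying models in order until one succeeds. The sketch below is a client-side illustration only, under stated assumptions: `withModelFallbacks` and its `callModel` callback are hypothetical names, not openrouter-kit APIs, and the library may implement fallbacks differently (for example, by letting OpenRouter route between the listed models).

```typescript
// Hypothetical helper, NOT part of openrouter-kit: tries each model in order
// and returns the first successful result together with the model that produced it.
async function withModelFallbacks<T>(
  models: string[],
  callModel: (model: string) => Promise<T>,
): Promise<{ model: string; result: T }> {
  const failures: string[] = [];
  for (const model of models) {
    try {
      const result = await callModel(model);
      return { model, result };
    } catch (err) {
      // Record the failure and continue with the next model in the list.
      failures.push(`${model}: ${err instanceof Error ? err.message : String(err)}`);
    }
  }
  // Only reached when every model failed.
  throw new Error(`All models failed -> ${failures.join("; ")}`);
}
```

The first model that answers without throwing wins; the collected errors are surfaced only when the whole list is exhausted, which keeps the caller's error handling simple.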

Core methods:

- `chat(options: OpenRouterRequestOptions): Promise<ChatCompletionResult>`: The primary method for sending chat requests. Takes an `options` object (see `OpenRouterRequestOptions` in `types/index.ts`). Manages history only if `user` is passed AND `historyAdapter` is configured.
- `getHistoryManager(): UnifiedHistoryManager`: Returns the history manager instance (if created).
- `getSecurityManager(): SecurityManager | null`: Returns the security manager instance (if configured).
- `getCostTracker(): CostTracker | null`: Returns the cost tracker instance (if enabled).
- `getCreditBalance(): Promise<CreditBalance>`: Fetches the OpenRouter account credit balance.
- `getModelPrices(): Record<string, ModelPricingInfo>`: Returns the cached model prices.
- `refreshModelPrices(): Promise<void>`: Forces a background refresh of model prices.
- `createAccessToken(userInfo, expiresIn?): string`: Generates a JWT (if `security.userAuthentication.type === 'jwt'`).
- `use(plugin): Promise<void>`: Registers a plugin.
- `useMiddleware(fn): void`: Registers middleware.
- `on(event, handler)` / `off(event, handler)`: Subscribe to or unsubscribe from client events (`'error'`) or security module events (prefixed events such as `security:`, `user:`, `token:`, `access:`, `ratelimit:`, `tool:`).
- `destroy(): Promise<void>`: Releases resources (timers, listeners).

Plugins and middleware:

- Plugins: Modules that extend client functionality. Registered via `client.use(plugin)`. They can initialize services, replace standard managers (`setSecurityManager`, `setCostTracker`), and add middleware.
- Middleware: Functions executed sequentially for each `client.chat()` call. They can modify requests (`ctx.request`) and responses (`ctx.response`), or perform side effects (logging, auditing). Registered via `client.useMiddleware(fn)`.
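The README does not spell out the middleware signature, so the following sketch assumes a Koa-style `(ctx, next)` function: each middleware can adjust `ctx.request` before calling `next()` and inspect `ctx.response` afterwards. `MiddlewareContext`, `Middleware`, `compose`, and `fakeApiCall` are hypothetical names used only to illustrate how such a chain runs; check the library's own types before writing real middleware for `client.useMiddleware(fn)`.

```typescript
// Hypothetical shapes: the real openrouter-kit context and middleware types may differ.
interface MiddlewareContext {
  request: { prompt: string; model?: string };
  response?: { content: string };
  log: string[];
}

type Middleware = (ctx: MiddlewareContext, next: () => Promise<void>) => Promise<void>;

// Compose middleware "onion-style": each one runs its "before" code, awaits next(),
// then runs its "after" code. The innermost step is the actual API call.
function compose(
  middlewares: Middleware[],
  core: (ctx: MiddlewareContext) => Promise<void>,
): (ctx: MiddlewareContext) => Promise<void> {
  return (ctx) => {
    const dispatch = (i: number): Promise<void> =>
      i === middlewares.length ? core(ctx) : middlewares[i](ctx, () => dispatch(i + 1));
    return dispatch(0);
  };
}

// Modifies the request: fills in a default model if none was given.
const withDefaultModel: Middleware = async (ctx, next) => {
  if (!ctx.request.model) ctx.request.model = "google/gemini-2.0-flash-001";
  await next();
};

// Side effect only: records what went out and what came back.
const auditLog: Middleware = async (ctx, next) => {
  ctx.log.push(`-> ${ctx.request.model}`);
  await next();
  ctx.log.push(`<- ${ctx.response?.content.length ?? 0} chars`);
};

// Stand-in for the real chat request.
const fakeApiCall = async (ctx: MiddlewareContext): Promise<void> => {
  ctx.response = { content: `echo: ${ctx.request.prompt}` };
};
```

Order matters: because `withDefaultModel` is listed first, `auditLog` already sees the filled-in model.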

To enable automatic dialogue history management (loading, saving, and trimming when `user` is passed to `client.chat()`), you MUST configure `historyAdapter` in `OpenRouterConfig`. Without it, history features will not work.

- Adapter (`IHistoryStorage`): Defines the storage interface (`load`, `save`, `delete`, `listKeys`, `destroy?`).
- `UnifiedHistoryManager`: Internal component that uses the adapter and manages an in-memory cache (with TTL and cleanup).
- Built-in adapters:
  - `MemoryHistoryStorage`: Stores history in RAM (default if no adapter specified).
  - `DiskHistoryStorage`: Stores history in JSON files on disk.

Usage:

```typescript
import { OpenRouterClient, MemoryHistoryStorage, DiskHistoryStorage } from 'openrouter-kit';

// Using MemoryHistoryStorage
const clientMemory = new OpenRouterClient({ /*...,*/ historyAdapter: new MemoryHistoryStorage() });

// Using DiskHistoryStorage
const clientDisk = new OpenRouterClient({ /*...,*/ historyAdapter: new DiskHistoryStorage('./my-chat-histories') });
```

- Redis plugin: Use `createRedisHistoryPlugin` for easy Redis integration (requires `ioredis`).
- Cache settings: `historyTtl` and `historyCleanupInterval` in `OpenRouterConfig` control the cache behavior of the `UnifiedHistoryManager`.
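To plug in your own storage, you implement the adapter methods listed above. The sketch below assumes Promise-based signatures and a simplified message shape: `HistoryStorageLike`, `HistoryMessage`, and `BoundedMapHistoryStorage` are hypothetical stand-ins for the library's `IHistoryStorage` and message types. It keeps histories in a `Map` and trims each one to the newest N messages, since history limits are the adapter's responsibility.

```typescript
// Assumed shapes mirroring the IHistoryStorage method names listed above;
// check the library's type definitions for the exact signatures.
interface HistoryMessage {
  role: "system" | "user" | "assistant" | "tool";
  content: string | null;
}

interface HistoryStorageLike {
  load(key: string): Promise<HistoryMessage[]>;
  save(key: string, messages: HistoryMessage[]): Promise<void>;
  delete(key: string): Promise<void>;
  listKeys(): Promise<string[]>;
  destroy?(): Promise<void>;
}

class BoundedMapHistoryStorage implements HistoryStorageLike {
  private readonly store = new Map<string, HistoryMessage[]>();
  private readonly maxMessages: number;

  constructor(maxMessages = 20) {
    if (maxMessages < 1) throw new RangeError("maxMessages must be at least 1");
    this.maxMessages = maxMessages;
  }

  async load(key: string): Promise<HistoryMessage[]> {
    // Return a copy so callers cannot mutate the stored array.
    return [...(this.store.get(key) ?? [])];
  }

  async save(key: string, messages: HistoryMessage[]): Promise<void> {
    // Keep only the newest `maxMessages` entries (the adapter owns the limit).
    this.store.set(key, messages.slice(-this.maxMessages));
  }

  async delete(key: string): Promise<void> {
    this.store.delete(key);
  }

  async listKeys(): Promise<string[]> {
    return Array.from(this.store.keys());
  }

  async destroy(): Promise<void> {
    this.store.clear();
  }
}
```

If these signatures match the real `IHistoryStorage`, an instance could be passed as `historyAdapter`; a Redis or database adapter would have the same shape, with network calls inside each method.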

Tool handling (function calling) allows LLMs to invoke your JavaScript/TypeScript functions to retrieve external information, interact with other APIs, or perform real-world actions.

- Defining a tool (`Tool`): Define each tool as an object conforming to the `Tool` interface. Key fields:
  - `type: 'function'` (currently the only supported type).
  - `function`: An object describing the function for the LLM:
    - `name` (string): A unique name the model will use to call the function.
    - `description` (string, optional): A clear description of what the function does and when to use it. Crucial for the model to understand the tool's purpose.
    - `parameters` (object, optional): A JSON Schema object describing the structure, types, and required fields of the arguments your function expects. The library uses this schema to validate arguments received from the model. Omit it if no arguments are needed.
  - `execute: (args: any, context?: ToolContext) => Promise<any> | any`: Your async or sync function that is executed when the model requests this tool call.
    - `args`: An object containing the arguments passed by the model, already parsed from the JSON string and (if a schema was provided) validated against `parameters`.
    - `context?`: An optional `ToolContext` object containing additional call information, such as:
      - `userInfo?`: The `UserAuthInfo` object for the authenticated user (if `SecurityManager` is used and `accessToken` was passed).
      - `securityManager?`: The `SecurityManager` instance (if used).
  - `security` (`ToolSecurity`, optional): An object defining tool-specific security rules such as `requiredRole`, `requiredScopes`, or `rateLimit`. These are checked by the `SecurityManager` before `execute` is called.

- Using tools in `client.chat()`:
  - Pass an array of your defined tools to the `tools` option of the `client.chat()` method.
  - The library handles the complex interaction flow:
    - Sends the tool definitions (name, description, parameters schema) to the model along with your prompt.
    - If the model decides to call one or more tools, it returns a response with `finish_reason: 'tool_calls'` and a list of `tool_calls`.
    - The library intercepts this response. For each requested `toolCall`, it:
      - Finds the corresponding tool in your `tools` array by name.
      - Parses the arguments string (`toolCall.function.arguments`) into a JavaScript object.
      - Validates the arguments object against the JSON Schema in `tool.function.parameters` (if provided).
      - Performs security checks via `SecurityManager` (if configured): verifies the user's (`userInfo`) access rights, applies rate limits, and checks arguments for dangerous content.
      - If all checks pass, calls your `tool.execute(parsedArgs, context)` function.
      - Waits for the result (or catches errors) from your `execute` function.
      - Formats the result (or a structured error) into a JSON string and sends it back to the model in a new message with `role: 'tool'` and the corresponding `tool_call_id`.
    - The model receives the tool call results and generates the final, coherent response to the user (now with `role: 'assistant'`).
  - The `maxToolCalls` option (in `client.chat()` or the client config) limits the maximum number of these request-call-result cycles, preventing infinite loops if the model keeps requesting tools.
  - The `toolChoice` option controls the model's tool usage: `'auto'` (default), `'none'` (disallow calls), or forcing a specific function call with `{ type: "function", function: { name: "my_tool_name" } }`.

- Result: The final model response (after all potential tool calls) is available in `ChatCompletionResult.content`. The `ChatCompletionResult.toolCallsCount` field indicates how many tools were successfully called and executed during the single `client.chat()` invocation.
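The per-call steps above can be sketched in a few lines. This is a simplified, self-contained illustration, not the library's implementation: a list of `required` keys stands in for full JSON Schema validation, security checks are omitted, and the `errorType` strings (`ToolNotFound`, `ArgumentParsing`, `ValidationError`, `ExecutionError`) and all type names are made up for the example. It does follow the documented shapes: a `role: 'tool'` message carrying the matching `tool_call_id`, and errors serialized as `{"errorType", "errorMessage", "details"}`.

```typescript
// Hypothetical types mirroring the documented message shapes.
interface ToolCall {
  id: string;
  function: { name: string; arguments: string };
}

interface ToolMessage {
  role: "tool";
  tool_call_id: string;
  content: string;
}

interface SketchTool {
  name: string;
  required: string[]; // stand-in for JSON Schema `required`
  execute: (args: Record<string, unknown>) => unknown; // may return a value or a Promise
}

// Structured error payload sent back to the model instead of throwing.
function toolError(errorType: string, errorMessage: string, details?: unknown): string {
  return JSON.stringify({ errorType, errorMessage, details });
}

// Parse the model's argument string; only plain JSON objects are accepted.
function parseArgs(raw: string): Record<string, unknown> | null {
  try {
    const parsed: unknown = JSON.parse(raw);
    return typeof parsed === "object" && parsed !== null && !Array.isArray(parsed)
      ? (parsed as Record<string, unknown>)
      : null;
  } catch {
    return null;
  }
}

async function runToolCall(call: ToolCall, tools: SketchTool[]): Promise<ToolMessage> {
  const reply = (content: string): ToolMessage => ({ role: "tool", tool_call_id: call.id, content });

  const tool = tools.find((t) => t.name === call.function.name);
  if (!tool) return reply(toolError("ToolNotFound", `Unknown tool: ${call.function.name}`));

  const args = parseArgs(call.function.arguments);
  if (!args) return reply(toolError("ArgumentParsing", "Arguments must be a JSON object"));

  const missing = tool.required.filter((key) => !(key in args));
  if (missing.length > 0) {
    return reply(toolError("ValidationError", "Missing required arguments", { missing }));
  }

  try {
    const result = await tool.execute(args);
    return reply(JSON.stringify(result ?? null)); // serialize the tool result for the model
  } catch (err) {
    return reply(toolError("ExecutionError", err instanceof Error ? err.message : String(err)));
  }
}
```

A full `client.chat()` round would repeat this for every entry in `tool_calls`, append the resulting messages to the conversation, and query the model again, stopping after `maxToolCalls` cycles.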

The security module provides multi-layered protection when using tools, which is crucial if tools perform actions or access data. It is activated by passing a `security: SecurityConfig` object to the `OpenRouterClient` constructor.

Components:

- `AuthManager`: Handles user authentication. Supports JWT out of the box (generation, validation, caching) using `jwtSecret` from the config. Extensible via `customAuthenticator`.
- `AccessControlManager`: Checks whether the authenticated (or anonymous, if allowed) user has permission to call a specific tool based on the `security.toolAccess` and `security.roles` rules, taking `defaultPolicy` into account.
- `RateLimitManager`: Tracks and enforces tool call frequency limits per user based on configuration (`security.roles`, `security.toolAccess`, or `tool.security`). Important: the default implementation is in-memory and not suitable for distributed systems.
- `ArgumentSanitizer`: Analyzes arguments passed to tool `execute` functions for potentially harmful patterns (SQLi, XSS, OS commands, etc.) using regexes and blocklists defined in `security.dangerousArguments`. Supports blocking or audit-only mode (`auditOnlyMode`).
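To see why an in-memory rate limiter cannot coordinate across processes, consider a minimal fixed-window limiter. This is a hypothetical sketch (`FixedWindowRateLimiter` is not a library class, and the library's actual algorithm may differ): counters live in a `Map` owned by a single process, so two servers would each allow the full limit. The returned `timeLeft` mirrors the `details.timeLeft` (ms) that `RateLimitError` carries.

```typescript
// Hypothetical fixed-window limiter keyed by user ID; not the library's RateLimitManager.
// All state is in this process's memory, which is exactly why it cannot enforce
// a shared limit across several processes or servers.
class FixedWindowRateLimiter {
  private readonly windows = new Map<string, { start: number; count: number }>();
  private readonly limit: number;
  private readonly windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns whether the call is allowed and how many ms remain until the window resets.
  check(userId: string, now: number = Date.now()): { allowed: boolean; timeLeft: number } {
    let w = this.windows.get(userId);
    if (!w || now - w.start >= this.windowMs) {
      w = { start: now, count: 0 }; // start a fresh window for this user
      this.windows.set(userId, w);
    }
    const timeLeft = w.start + this.windowMs - now;
    if (w.count >= this.limit) return { allowed: false, timeLeft };
    w.count += 1;
    return { allowed: true, timeLeft };
  }
}
```

A distributed variant would keep the same `check` logic but store the counter in shared storage (for example, an atomic increment with expiry in Redis).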

Configuration (`SecurityConfig`):

Defines the security module's behavior in detail. Uses extended types (`ExtendedSecurityConfig`, `ExtendedUserAuthInfo`, etc.) exported by the library. Key fields:

- `defaultPolicy` (`'deny-all'` | `'allow-all'`): The action taken if no explicit access rule matches. `'deny-all'` is recommended.
- `requireAuthentication` (boolean): Whether a valid `accessToken` must be present for any tool call.
- `allowUnauthenticatedAccess` (boolean): If `requireAuthentication` is `false`, whether anonymous users can call tools (if the tool itself allows it).
- `userAuthentication` (`UserAuthConfig`): Authentication method setup (`type: 'jwt'`, `jwtSecret`, `customAuthenticator`). Set a strong `jwtSecret` if using JWT!
- `toolAccess` (`Record<string, ToolAccessConfig>`): Access rules per tool name or for all tools (`'*'`). Includes `allow`, `roles`, `scopes`, `rateLimit`, `allowedApiKeys`.
- `roles` (`RolesConfig`): Definitions of roles and their permissions/limits (`allowedTools`, `rateLimits`).
- `dangerousArguments` (`ExtendedDangerousArgumentsConfig`): Argument sanitization settings (`globalPatterns`, `toolSpecificPatterns`, `blockedValues`, `auditOnlyMode`).
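The `dangerousArguments` fields can be illustrated with a small checker. The sketch below is only an approximation of what `ArgumentSanitizer` does: the `DangerousArgsConfig` interface reuses the documented field names, but its types are guesses, `sanitizeArgs` is a hypothetical function, and the patterns in the usage are examples rather than the library's built-in rules. It walks every string inside the parsed arguments, checks exact `blockedValues` and regex patterns (global plus tool-specific), and in `auditOnlyMode` reports findings without blocking.

```typescript
// Hypothetical config mirroring the documented dangerousArguments field names.
interface DangerousArgsConfig {
  globalPatterns: RegExp[]; // avoid the /g flag: RegExp.test() with /g is stateful
  toolSpecificPatterns: Record<string, RegExp[]>;
  blockedValues: string[];
  auditOnlyMode: boolean;
}

interface SanitizeResult {
  blocked: boolean;
  findings: string[]; // human-readable descriptions of what matched
}

// Recursively collect every string value inside the (already parsed) arguments.
function collectStrings(value: unknown, out: string[] = []): string[] {
  if (typeof value === "string") out.push(value);
  else if (Array.isArray(value)) value.forEach((v) => collectStrings(v, out));
  else if (value !== null && typeof value === "object") {
    Object.values(value).forEach((v) => collectStrings(v, out));
  }
  return out;
}

function sanitizeArgs(toolName: string, args: unknown, cfg: DangerousArgsConfig): SanitizeResult {
  const patterns = [...cfg.globalPatterns, ...(cfg.toolSpecificPatterns[toolName] ?? [])];
  const findings: string[] = [];
  for (const s of collectStrings(args)) {
    if (cfg.blockedValues.includes(s)) findings.push(`blocked value: ${s}`);
    for (const p of patterns) {
      if (p.test(s)) findings.push(`pattern ${p} matched: ${s}`);
    }
  }
  // In audit-only mode, findings are reported but the call is not blocked.
  return { blocked: findings.length > 0 && !cfg.auditOnlyMode, findings };
}
```

Returning findings even in audit-only mode lets you log suspicious calls for a while before switching to blocking.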

Usage:

- Pass the `SecurityConfig` object to the `OpenRouterClient` constructor as the `security` option.
- For authenticated requests, pass the access token via `client.chat({ accessToken: '...' })`.
- The library automatically calls `securityManager.checkToolAccessAndArgs()` before executing any tool. This performs all configured checks (authentication, ACL, rate limits, arguments).
- If any check fails, an appropriate error (`AuthorizationError`, `AccessDeniedError`, `RateLimitError`, `SecurityError`) is thrown, and the `execute` function is not called.
- Use `client.createAccessToken()` to generate JWTs (if configured).
- Subscribe to security events (`client.on('access:denied', ...)`, etc.) for monitoring.
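When `userAuthentication.type` is `'jwt'`, `client.createAccessToken()` produces a signed token that is later passed as `accessToken`. For intuition about what such a token contains and how it is checked, here is a minimal HS256 sign/verify pair built on Node's `crypto` module (Node 16 or later, for the `base64url` encoding). `signJwt` and `verifyJwt` are hypothetical helpers, not the library's `AuthManager`; they skip header checks and other hardening, so use a maintained JWT library in production.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Hypothetical HS256 helpers illustrating JWT structure: header.payload.signature.
const base64url = (input: string): string => Buffer.from(input, "utf8").toString("base64url");

function hmacSha256(data: string, secret: string): Buffer {
  return createHmac("sha256", secret).update(data).digest();
}

function signJwt(
  payload: Record<string, unknown>,
  secret: string,
  expiresInSec: number,
  nowSec = Math.floor(Date.now() / 1000),
): string {
  const header = base64url(JSON.stringify({ alg: "HS256", typ: "JWT" }));
  const body = base64url(JSON.stringify({ ...payload, iat: nowSec, exp: nowSec + expiresInSec }));
  const signature = hmacSha256(`${header}.${body}`, secret).toString("base64url");
  return `${header}.${body}.${signature}`;
}

// Returns the claims if the signature is valid and the token has not expired, else null.
function verifyJwt(token: string, secret: string, nowSec = Math.floor(Date.now() / 1000)): Record<string, unknown> | null {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [header, body, signature] = parts;
  const expected = hmacSha256(`${header}.${body}`, secret);
  const given = Buffer.from(signature, "base64url");
  // timingSafeEqual throws on length mismatch, so compare lengths first.
  if (given.length !== expected.length || !timingSafeEqual(given, expected)) return null;
  try {
    const claims = JSON.parse(Buffer.from(body, "base64url").toString("utf8")) as Record<string, unknown>;
    if (typeof claims.exp === "number" && claims.exp <= nowSec) return null; // expired
    return claims;
  } catch {
    return null; // malformed payload
  }
}
```

This is also why a strong `jwtSecret` matters: anyone who knows the secret can mint tokens that pass verification.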

Cost tracking provides an approximate cost estimate for each `client.chat()` call based on token usage and OpenRouter model prices.

- Enabling: Set `enableCostTracking: true` in `OpenRouterConfig`.
- Mechanism:
  - A `CostTracker` instance is created on client initialization.
  - It fetches current prices for all models from the OpenRouter API (`/models`) initially (unless `initialModelPrices` is provided) and periodically thereafter (`priceRefreshIntervalMs`, default 6 hours).
  - Prices (cost per million input/output tokens) are cached in memory.
  - After a successful `client.chat()` call, the library gets token usage (`usage`) from the API response.
  - `costTracker.calculateCost(model, usage)` is called, using the cached prices and usage data to compute the cost. It accounts for prompt, completion, and intermediate tool call tokens.
  - The calculated value (a number in USD) or `null` (if prices are unknown or tracking is off) is added to the `cost` field of the returned `ChatCompletionResult`.
- Related client methods:
  - `getCreditBalance(): Promise<CreditBalance>`: Fetches the current credit limit and usage from your OpenRouter account.
  - `getModelPrices(): Record<string, ModelPricingInfo>`: Returns the current cache of model prices used by the tracker.
  - `refreshModelPrices(): Promise<void>`: Manually triggers a background refresh of the model price cache.
  - `getCostTracker(): CostTracker | null`: Accesses the `CostTracker` instance (if enabled).
- Accuracy: Remember that this is an estimate. Actual billing might differ slightly due to rounding or price changes not yet reflected in the cache.
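The calculation itself is simple arithmetic: tokens divided by one million, times the per-million price, summed over input and output. For example, 1,000 prompt tokens at $0.10/M plus 500 completion tokens at $0.40/M gives $0.0001 + $0.0002 = $0.0003. The sketch below assumes hypothetical `ModelPrice` and `Usage` shapes (`prompt_tokens`/`completion_tokens` follow the common OpenAI-style usage fields); `estimateCostUsd` is not `CostTracker.calculateCost`, and the prices are illustrative.

```typescript
// Hypothetical price and usage shapes; field names are assumptions, not the library's types.
interface ModelPrice {
  promptCostPerMillion: number; // USD per 1M input tokens
  completionCostPerMillion: number; // USD per 1M output tokens
}

interface Usage {
  prompt_tokens: number;
  completion_tokens: number;
}

const TOKENS_PER_MILLION = 1_000_000;

// Estimated cost in USD, or null when the model's price is unknown
// (mirroring the documented `cost: number | null` behavior).
function estimateCostUsd(prices: Record<string, ModelPrice>, model: string, usage: Usage): number | null {
  const price = prices[model];
  if (!price) return null;
  const inputCost = (usage.prompt_tokens / TOKENS_PER_MILLION) * price.promptCostPerMillion;
  const outputCost = (usage.completion_tokens / TOKENS_PER_MILLION) * price.completionCostPerMillion;
  return inputCost + outputCost;
}
```

Because the formula is linear, summing usage across tool-call rounds and pricing once gives the same total as pricing each round separately.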

The `responseFormat` option instructs the model to generate its response content in JSON format, which is useful for structured data extraction.

- Configuration: Set via the `responseFormat` option in `OpenRouterConfig` (for a default) or in the `client.chat()` options (for a specific request).
- Types:
  - `{ type: 'json_object' }`: Instructs the model to return any valid JSON object.
  - `{ type: 'json_schema', json_schema: { name: string, schema: object, strict?: boolean, description?: string } }`: Requires the model to return JSON conforming to the provided JSON Schema.
    - `name`: An arbitrary name for your schema.
    - `schema`: The JSON Schema object describing the expected JSON structure.
    - `strict` (optional): Requests strict schema adherence (model-dependent).
    - `description` (optional): A description of the schema for the model.

Example:

```typescript
import { OpenRouterClient, MemoryHistoryStorage } from 'openrouter-kit';

const client = new OpenRouterClient({ /* ... */ historyAdapter: new MemoryHistoryStorage() });

const userSchema = {
  type: "object",
  properties: {
    name: { type: "string" },
    age: { type: "number" },
  },
  required: ["name", "age"],
};

async function getUserData() {
  const result = await client.chat({
    prompt: 'Generate JSON for a user: name Alice, age 30.',
    responseFormat: {
      type: 'json_schema',
      json_schema: { name: 'UserData', schema: userSchema },
    },
  });
  console.log(result.content); // Expect { name: "Alice", age: 30 }
}

getUserData();
```

- Parsing error handling:
  - If `strictJsonParsing: false` (default): if the model returns invalid JSON or JSON not matching the schema, `ChatCompletionResult.content` will be `null`.
  - If `strictJsonParsing: true`: a `ValidationError` will be thrown in the same situation.
- Model support: Not all models guarantee support for `responseFormat`. Check the specific model's documentation.
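The `strictJsonParsing` switch boils down to "return `null`" versus "throw". The sketch below mimics that behavior under simplifying assumptions: `parseJsonContent` and `JsonValidationError` are hypothetical (the library throws its own `ValidationError`), and checking required top-level keys stands in for real JSON Schema validation.

```typescript
// Hypothetical error type standing in for the library's ValidationError.
class JsonValidationError extends Error {
  constructor(message: string) {
    super(message);
    this.name = "JsonValidationError";
  }
}

// Parse model output; lenient mode returns null on problems, strict mode throws.
function parseJsonContent(raw: string, strict: boolean, requiredKeys: string[] = []): unknown {
  try {
    const value: unknown = JSON.parse(raw);
    // Very small stand-in for schema validation: check required top-level keys only.
    if (requiredKeys.length > 0) {
      if (typeof value !== "object" || value === null) throw new Error("Expected a JSON object");
      const obj: object = value;
      const missing = requiredKeys.filter((key) => !(key in obj));
      if (missing.length > 0) throw new Error(`Missing keys: ${missing.join(", ")}`);
    }
    return value;
  } catch (err) {
    if (strict) throw new JsonValidationError(err instanceof Error ? err.message : String(err));
    return null;
  }
}
```

In lenient mode, callers must treat `null` as "no usable structured answer"; strict mode moves that decision into a `catch` block.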

The library uses a structured error system for easier debugging and control flow.

- Base class `OpenRouterError`: All library errors inherit from this class. It contains:
  - `message`: Human-readable error description.
  - `code` (`ErrorCode`): String code for programmatic identification (e.g., `API_ERROR`, `VALIDATION_ERROR`, `RATE_LIMIT_ERROR`).
  - `statusCode?` (number): HTTP status code from the API response, if applicable.
  - `details?` (any): Additional context or the original error.

-

-

Subclasses: Specific error types for different situations:

-

APIError: Error returned by the OpenRouter API (status >= 400). -

ValidationError: Data validation failure (config, args, JSON/schema response). -

NetworkError: Network issue connecting to the API. -

AuthenticationError: Invalid API key or missing authentication (typically 401). -

AuthorizationError: Invalid or expired access token (typically 401). -

AccessDeniedError: Authenticated user lacks permissions (typically 403). -

RateLimitError: Request limit exceeded (typically 429). Containsdetails.timeLeft(ms). -

ToolError: Error during toolexecutefunction execution. -

ConfigError: Invalid library configuration. -

SecurityError: General security failure (includesDANGEROUS_ARGS). -

TimeoutError: API request timed out.

-

-

Enum

ErrorCode: Contains all possible string error codes (e.g.,ErrorCode.API_ERROR,ErrorCode.TOOL_ERROR). -

Function

mapError(error): Internally used to convert Axios and standardErrorobjects into anOpenRouterErrorsubclass. Exported for potential external use. -
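Because `RateLimitError` carries `details.timeLeft` in milliseconds, a caller can wait that long and try again. The helper below is a minimal sketch, not part of openrouter-kit: `withRateLimitRetry` is a hypothetical name, and the caller passes an `isRateLimit` predicate (for example `(e) => e instanceof RateLimitError`) so the sketch makes no assumption about the string values behind `ErrorCode`.

```typescript
// Minimal shape of the fields this sketch reads from a rate-limit error.
interface RateLimitDetails {
  details?: { timeLeft?: number };
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Runs `fn`; on a rate-limit error, waits details.timeLeft ms (or `fallbackMs`)
// and retries, up to `maxRetries` extra attempts. Other errors are rethrown at once.
async function withRateLimitRetry<T>(
  fn: () => Promise<T>,
  isRateLimit: (e: unknown) => boolean,
  maxRetries = 3,
  fallbackMs = 1000,
): Promise<T> {
  let attempt = 0;
  while (true) {
    try {
      return await fn();
    } catch (e) {
      // Give up on non-rate-limit errors or once the retry budget is spent.
      if (!isRateLimit(e) || attempt >= maxRetries) throw e;
      attempt++;
      const waitMs = (e as RateLimitDetails).details?.timeLeft ?? fallbackMs;
      await sleep(waitMs);
    }
  }
}
```

For instance, `await withRateLimitRetry(() => client.chat({ prompt: '...' }), (e) => e instanceof RateLimitError)` would retry a chat call up to three times; any other error still propagates to your normal `try...catch` handling.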

Handling Recommendations:

- Wrap client method calls (`client.chat()`, `client.getCreditBalance()`, etc.) in `try...catch` blocks.
- Check the error type with `instanceof` (e.g., `if (error instanceof RateLimitError)`) or by its code (`if (error.code === ErrorCode.VALIDATION_ERROR)`).
- Inspect `error.statusCode` and `error.details` for more context.
- Subscribe to the client's global `'error'` event (`client.on('error', handler)`) for centralized logging or monitoring of unexpected errors.

```typescript
import { OpenRouterClient, MemoryHistoryStorage, OpenRouterError, RateLimitError, ValidationError, ErrorCode } from 'openrouter-kit';

const client = new OpenRouterClient({ /* ... */ historyAdapter: new MemoryHistoryStorage() });

async function safeChat() {
  try {
    const result = await client.chat({ prompt: "..." });
    // ... process successful result ...
  } catch (error: any) {
    if (error instanceof RateLimitError) {
      // details.timeLeft is in milliseconds; fall back to 1 second if it is missing
      const retryAfter = Math.ceil((error.details?.timeLeft || 1000) / 1000); // Seconds
      console.warn(`Rate limit exceeded! Please try again in ${retryAfter} seconds.`);
    } else if (error instanceof ValidationError || error.code === ErrorCode.VALIDATION_ERROR) {
      console.error(`Validation Error: ${error.message}`, error.details);
    } else if (error.code === ErrorCode.TOOL_ERROR && error.message.includes('Maximum tool call depth')) {
      console.error(`Tool call limit reached: ${error.message}`);
    } else if (error instanceof OpenRouterError) {
      console.error(`OpenRouter Kit Error (${error.code}, Status: ${error.statusCode || 'N/A'}): ${error.message}`);
      if (error.details) console.error('Details:', error.details);
    } else {
      // Not a library error; it may not even be an Error instance
      console.error('Unknown error:', error);
    }
  }
}
```

The library uses a built-in logger for debug information and event messages.

- Activation: Set `debug: true` in `OpenRouterConfig` when creating the client.
- Levels: Uses the standard console levels `console.debug`, `console.log`, `console.warn`, and `console.error`. When `debug: false`, only critical initialization warnings or errors are shown.
- Prefixes: Messages are automatically prefixed with the source component (e.g., `[OpenRouterClient]`, `[SecurityManager]`, `[CostTracker]`, `[UnifiedHistoryManager]`) for easier debugging.
- Customization: While direct logger replacement isn't a standard API feature, you can pass custom loggers to some components (like `HistoryManager`, if created manually) or use plugins/middleware to intercept and redirect logs.
- `isDebugMode()` method: Check the client's current debug status with `client.isDebugMode()`.
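The prefix-and-level behaviour described above can be illustrated with a small stand-alone logger. This is a sketch under assumptions, not openrouter-kit's internal logger: `PrefixedLogger` and its `sink` parameter are hypothetical, and the sketch assumes `debug`/`log` output is dropped when debug mode is off while `warn`/`error` always pass through. The injectable sink also shows one way logs could be redirected instead of going straight to the console.

```typescript
type Level = "debug" | "log" | "warn" | "error";
type Sink = (level: Level, message: string) => void;

// Default sink forwards each message to the matching console method.
const consoleSink: Sink = (level, message) => console[level](message);

class PrefixedLogger {
  constructor(
    private readonly prefix: string,
    private readonly debugEnabled: boolean,
    private readonly sink: Sink = consoleSink,
  ) {}

  isDebugMode(): boolean {
    return this.debugEnabled;
  }

  private emit(level: Level, message: string): void {
    // Verbose levels are suppressed unless debug mode is on.
    if (!this.debugEnabled && (level === "debug" || level === "log")) return;
    this.sink(level, `[${this.prefix}] ${message}`);
  }

  debug(message: string) { this.emit("debug", message); }
  log(message: string) { this.emit("log", message); }
  warn(message: string) { this.emit("warn", message); }
  error(message: string) { this.emit("error", message); }
}

const demo = new PrefixedLogger("OpenRouterClient", true);
demo.debug("sending request"); // prints "[OpenRouterClient] sending request"
```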

Route requests to the OpenRouter API through an HTTP/HTTPS proxy using the `proxy` option in `OpenRouterConfig`.

- Formats:
  - URL string: Full proxy URL, including protocol, optional authentication, host, and port, e.g. `proxy: 'http://user:[email protected]:8080'` or `proxy: 'https://secureproxy.com:9000'`.
  - Object: A structured object with fields:
    - `host` (string, required): Proxy server hostname or IP.
    - `port` (number | string, required): Proxy server port.
    - `user?` (string, optional): Username for proxy authentication.
    - `pass?` (string, optional): Password for proxy authentication.

    ```typescript
    proxy: {
      host: '192.168.1.100',
      port: 8888, // can also be '8888'
      user: 'proxyUser',
      pass: 'proxyPassword'
    }
    ```
- Mechanism: The library uses `https-proxy-agent` to route HTTPS traffic through the specified HTTP/HTTPS proxy.
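The two proxy formats carry the same information, so the object form can be converted into the URL-string form. The helper below is a hypothetical sketch (`ProxyConfig` and `proxyToUrl` are not exported by openrouter-kit); it assumes the `http:` scheme for the object form, since that form has no protocol field, and percent-encodes the credentials so characters such as `@` or `:` in a password do not break the URL.

```typescript
// Mirrors the object format documented above.
interface ProxyConfig {
  host: string;
  port: number | string;
  user?: string;
  pass?: string;
}

// Builds "http://[user[:pass]@]host:port"; the http: scheme is an assumption.
function proxyToUrl(cfg: ProxyConfig): string {
  let auth = "";
  if (cfg.user) {
    auth = encodeURIComponent(cfg.user);
    if (cfg.pass) auth += `:${encodeURIComponent(cfg.pass)}`;
    auth += "@";
  }
  return `http://${auth}${cfg.host}:${cfg.port}`;
}

console.log(proxyToUrl({ host: "192.168.1.100", port: 8888, user: "proxyUser", pass: "proxyPassword" }));
// prints "http://proxyUser:proxyPassword@192.168.1.100:8888"
```

A string built this way is the kind of proxy URL that `https-proxy-agent` accepts in its constructor.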

Alternative AI tools for openrouter-kit

Similar Open Source Tools

react-native-rag

React Native RAG is a library that enables private, local RAGs to supercharge LLMs with a custom knowledge base. It offers modular and extensible components like `LLM`, `Embeddings`, `VectorStore`, and `TextSplitter`, with multiple integration options. The library supports on-device inference, vector store persistence, and semantic search implementation. Users can easily generate text responses, manage documents, and utilize custom components for advanced use cases.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

LightRAG

LightRAG is a repository hosting the code for LightRAG, a system that supports seamless integration of custom knowledge graphs, Oracle Database 23ai, Neo4J for storage, and multiple file types. It includes features like entity deletion, batch insert, incremental insert, and graph visualization. LightRAG provides an API server implementation for RESTful API access to RAG operations, allowing users to interact with it through HTTP requests. The repository also includes evaluation scripts, code for reproducing results, and a comprehensive code structure.

model.nvim

model.nvim is a tool designed for Neovim users who want to utilize AI models for completions or chat within their text editor. It allows users to build prompts programmatically with Lua, customize prompts, experiment with multiple providers, and use both hosted and local models. The tool supports features like provider agnosticism, programmatic prompts in Lua, async and multistep prompts, streaming completions, and chat functionality in 'mchat' filetype buffer. Users can customize prompts, manage responses, and context, and utilize various providers like OpenAI ChatGPT, Google PaLM, llama.cpp, ollama, and more. The tool also supports treesitter highlights and folds for chat buffers.

java-genai

Java idiomatic SDK for the Gemini Developer APIs and Vertex AI APIs. The SDK provides a Client class for interacting with both APIs, allowing seamless switching between the 2 backends without code rewriting. It supports features like generating content, embedding content, generating images, upscaling images, editing images, and generating videos. The SDK also includes options for setting API versions, HTTP request parameters, client behavior, and response schemas.

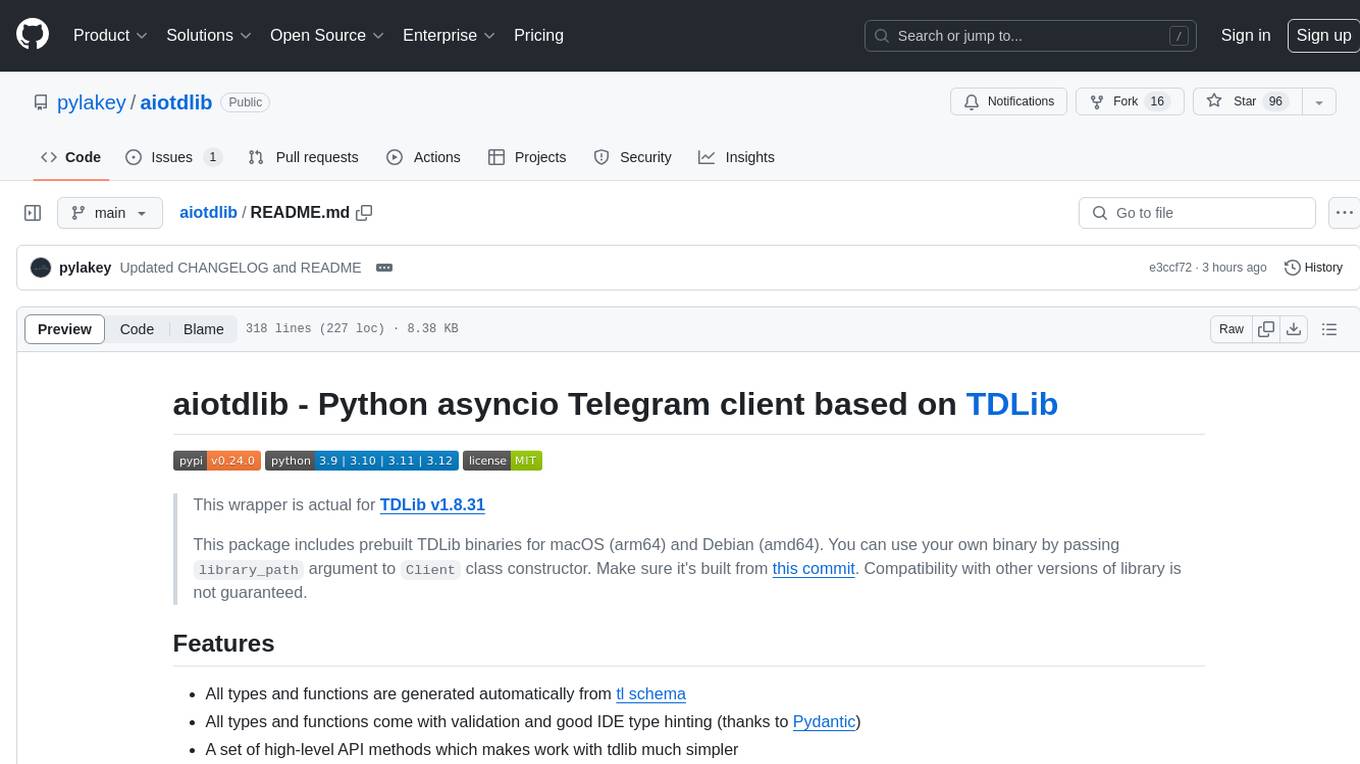

aiotdlib

aiotdlib is a Python asyncio Telegram client based on TDLib. It provides automatic generation of types and functions from tl schema, validation, good IDE type hinting, and high-level API methods for simpler work with tdlib. The package includes prebuilt TDLib binaries for macOS (arm64) and Debian Bullseye (amd64). Users can use their own binary by passing `library_path` argument to `Client` class constructor. Compatibility with other versions of the library is not guaranteed. The tool requires Python 3.9+ and users need to get their `api_id` and `api_hash` from Telegram docs for installation and usage.

pocketgroq

PocketGroq is a tool that provides advanced functionalities for text generation, web scraping, web search, and AI response evaluation. It includes features like an Autonomous Agent for answering questions, web crawling and scraping capabilities, enhanced web search functionality, and flexible integration with Ollama server. Users can customize the agent's behavior, evaluate responses using AI, and utilize various methods for text generation, conversation management, and Chain of Thought reasoning. The tool offers comprehensive methods for different tasks, such as initializing RAG, error handling, and tool management. PocketGroq is designed to enhance development processes and enable the creation of AI-powered applications with ease.

sonarqube-mcp-server

The SonarQube MCP Server is a Model Context Protocol (MCP) server that enables seamless integration with SonarQube Server or Cloud for code quality and security. It supports the analysis of code snippets directly within the agent context. The server provides various tools for analyzing code, managing issues, accessing metrics, and interacting with SonarQube projects. It also supports advanced features like dependency risk analysis, enterprise portfolio management, and system health checks. The server can be configured for different transport modes, proxy settings, and custom certificates. Telemetry data collection can be disabled if needed.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

Webscout

WebScout is a versatile tool that allows users to search for anything using Google, DuckDuckGo, and phind.com. It contains AI models, can transcribe YouTube videos, generate temporary email and phone numbers, has TTS support, webai (terminal GPT and open interpreter), and offline LLMs. It also supports features like weather forecasting, YT video downloading, temp mail and number generation, text-to-speech, advanced web searches, and more.

docutranslate

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it ideal for businesses and individuals needing quick and accurate translations. The tool uses advanced algorithms to ensure high-quality translations while maintaining the original document's formatting. With its user-friendly interface, Docutranslate simplifies the translation process and saves time for users. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate is the go-to solution for all your document translation needs.

minuet-ai.nvim

Minuet AI is a Neovim plugin that integrates with nvim-cmp to provide AI-powered code completion using multiple AI providers such as OpenAI, Claude, Gemini, Codestral, and Huggingface. It offers customizable configuration options and streaming support for completion delivery. Users can manually invoke completion or use cost-effective models for auto-completion. The plugin requires API keys for supported AI providers and allows customization of system prompts. Minuet AI also supports changing providers, toggling auto-completion, and provides solutions for input delay issues. Integration with lazyvim is possible, and future plans include implementing RAG on the codebase and virtual text UI support.

aiocache

Aiocache is an asyncio cache library that supports multiple backends such as memory, redis, and memcached. It provides a simple interface for functions like add, get, set, multi_get, multi_set, exists, increment, delete, clear, and raw. Users can easily install and use the library for caching data in Python applications. Aiocache allows for easy instantiation of caches and setup of cache aliases for reusing configurations. It also provides support for backends, serializers, and plugins to customize cache operations. The library offers detailed documentation and examples for different use cases and configurations.

For similar tasks

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in Typescript and Javascript.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_, however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

botpress

Botpress is a platform for building next-generation chatbots and assistants powered by OpenAI. It provides a range of tools and integrations to help developers quickly and easily create and deploy chatbots for various use cases.

BotSharp

BotSharp is an open-source machine learning framework for building AI bot platforms. It provides a comprehensive set of tools and components for developing and deploying intelligent virtual assistants. BotSharp is designed to be modular and extensible, allowing developers to easily integrate it with their existing systems and applications. With BotSharp, you can quickly and easily create AI-powered chatbots, virtual assistants, and other conversational AI applications.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including:

* Semantic search
* Image search
* Product recommendations
* Chatbots
* Anomaly detection

Qdrant offers a variety of features, including:

* Payload storage and filtering
* Hybrid search with sparse vectors
* Vector quantization and on-disk storage
* Distributed deployment
* Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging

Qdrant is available as a fully managed cloud service or as open-source software that can be deployed on-premises.

For similar jobs

goat

GOAT (Great Onchain Agent Toolkit) is an open-source framework designed to simplify the process of making AI agents perform onchain actions by providing a provider-agnostic solution that abstracts away the complexities of interacting with blockchain tools such as wallets, token trading, and smart contracts. It offers a catalog of ready-made blockchain actions for agent developers and allows dApp/smart contract developers to develop plugins for easy access by agents. With compatibility across popular agent frameworks, support for multiple blockchains and wallet providers, and customizable onchain functionalities, GOAT aims to streamline the integration of blockchain capabilities into AI agents.

typedai

TypedAI is a TypeScript-first AI platform designed for developers to create and run autonomous AI agents, LLM based workflows, and chatbots. It offers advanced autonomous agents, software developer agents, pull request code review agent, AI chat interface, Slack chatbot, and supports various LLM services. The platform features configurable Human-in-the-loop settings, functional callable tools/integrations, CLI and Web UI interface, and can be run locally or deployed on the cloud with multi-user/SSO support. It leverages the Python AI ecosystem through executing Python scripts/packages and provides flexible run/deploy options like single user mode, Firestore & Cloud Run deployment, and multi-user SSO enterprise deployment. TypedAI also includes UI examples, code examples, and automated LLM function schemas for seamless development and execution of AI workflows.

appworld

AppWorld is a high-fidelity execution environment of 9 day-to-day apps, operable via 457 APIs, populated with digital activities of ~100 people living in a simulated world. It provides a benchmark of natural, diverse, and challenging autonomous agent tasks requiring rich and interactive coding. The repository includes implementations of AppWorld apps and APIs, along with tests. It also introduces safety features for code execution and provides guides for building agents and extending the benchmark.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

starknet-agentic

Open-source stack for giving AI agents wallets, identity, reputation, and execution rails on Starknet. `starknet-agentic` is a monorepo with Cairo smart contracts for agent wallets, identity, reputation, and validation, TypeScript packages for MCP tools, A2A integration, and payment signing, reusable skills for common Starknet agent capabilities, and examples and docs for integration. It provides contract primitives + runtime tooling in one place for integrating agents. The repo includes various layers such as Agent Frameworks / Apps, Integration + Runtime Layer, Packages / Tooling Layer, Cairo Contract Layer, and Starknet L2. It aims for portability of agent integrations without giving up Starknet strengths, with a cross-chain interop strategy and skills marketplace. The repository layout consists of directories for contracts, packages, skills, examples, docs, and website.

SwiftAgent

A type-safe, declarative framework for building AI agents in Swift, SwiftAgent is built on Apple FoundationModels. It allows users to compose agents by combining Steps in a declarative syntax similar to SwiftUI. The framework ensures compile-time checked input/output types, native Apple AI integration, structured output generation, and built-in security features like permission, sandbox, and guardrail systems. SwiftAgent is extensible with MCP integration, distributed agents, and a skills system. Users can install SwiftAgent with Swift 6.2+ on iOS 26+, macOS 26+, or Xcode 26+ using Swift Package Manager.

agent-device

CLI tool for controlling iOS and Android devices for AI agents, with core commands like open, back, home, press, and more. It supports minimal dependencies, TypeScript execution on Node 22+, and is in early development. The tool allows for automation flows, session management, semantic finding, assertions, replay updates, and settings helpers for simulators. It also includes backends for iOS snapshots, app resolution, iOS-specific notes, testing, and building. Contributions are welcome, and the project is maintained by Callstack, a group of React and React Native enthusiasts.