nextlint

Rich text editor (WYSIWYG) written in Svelte, built on top of tiptap and prosemirror, with AI prompt integration. Dark/Light theme support.

Stars: 145

Nextlint is a rich text editor (WYSIWYG) written in Svelte, using MeltUI headless UI and tailwindcss CSS framework. It is built on top of tiptap editor (headless editor) and prosemirror. Nextlint is easy to use, develop, and maintain. It has a prompt engine that helps to integrate with any AI API and enhance the writing experience. Dark/Light theme is supported and customizable.

README:

Rich text editor (WYSIWYG) written in Svelte, using MeltUI headless UI and tailwindcss CSS framework.

Built on top of tiptap editor (headless editor) and prosemirror. Easy to use, develop, and maintain. Includes a prompt engine that helps integrate with any AI API and enhance the writing experience.

Dark/Light theme is supported and customizable.

//npm

npm install @nextlint/svelte

//yarn

yarn add @nextlint/svelte

//pnpm

pnpm add @nextlint/svelte

Nextlint editor uses headless Svelte components from MeltUI and styles them with tailwindcss. The theme tokens are inherited from Svelte Shadcn.

If you already have shadcn set up in your project, you can skip this part.

pnpm add -D tailwindcss postcss autoprefixer sass

npx tailwindcss init -p

This creates tailwind.config.js and postcss.config.js. Update tailwind.config.js as follows:

// more detail at https://www.shadcn-svelte.com/docs/installation/manual
// In an ESM project ("type": "module" in package.json, e.g. SvelteKit),
// use `export default` instead of `module.exports`.

/** @type {import('tailwindcss').Config} */
module.exports = {
  content: [
    './src/**/*.{svelte,js}',
    './node_modules/@nextlint/svelte/dist/**/*.{svelte,ts}'
  ],
  theme: {
    extend: {
      colors: {
        border: 'hsl(var(--border) / <alpha-value>)',
        input: 'hsl(var(--input) / <alpha-value>)',
        ring: 'hsl(var(--ring) / <alpha-value>)',
        background: 'hsl(var(--background) / <alpha-value>)',
        foreground: 'hsl(var(--foreground) / <alpha-value>)',
        primary: {
          DEFAULT: 'hsl(var(--primary) / <alpha-value>)',
          foreground: 'hsl(var(--primary-foreground) / <alpha-value>)'
        },
        secondary: {
          DEFAULT: 'hsl(var(--secondary) / <alpha-value>)',
          foreground: 'hsl(var(--secondary-foreground) / <alpha-value>)'
        },
        destructive: {
          DEFAULT: 'hsl(var(--destructive) / <alpha-value>)',
          foreground: 'hsl(var(--destructive-foreground) / <alpha-value>)'
        },
        muted: {
          DEFAULT: 'hsl(var(--muted) / <alpha-value>)',
          foreground: 'hsl(var(--muted-foreground) / <alpha-value>)'
        },
        accent: {
          DEFAULT: 'hsl(var(--accent) / <alpha-value>)',
          foreground: 'hsl(var(--accent-foreground) / <alpha-value>)'
        },
        popover: {
          DEFAULT: 'hsl(var(--popover) / <alpha-value>)',
          foreground: 'hsl(var(--popover-foreground) / <alpha-value>)'
        },
        card: {
          DEFAULT: 'hsl(var(--card) / <alpha-value>)',
          foreground: 'hsl(var(--card-foreground) / <alpha-value>)'
        }
      },
      borderRadius: {
        lg: 'var(--radius)',
        md: 'calc(var(--radius) - 2px)',
        sm: 'calc(var(--radius) - 4px)'
      },
      fontFamily: {
        sans: ['Inter']
      }
    }
  },
  plugins: []
};

The theme can be customized via CSS tokens. The default tokens are defined in EditorTheme.scss.
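The Tailwind config above reads each color as `hsl(var(--token) / <alpha-value>)`, so a token has to hold bare, space-separated HSL channels (for example `0 0% 100%`) rather than a full `hsl()` call or a hex value. As a minimal sketch, assuming you start from hex brand colors, the hypothetical helpers `hexToHslChannels` and `applyThemeTokens` below convert them into that channel format and set them as CSS variables at runtime. Overriding tokens on a wrapper element is only one option; editing a copy of EditorTheme.scss works too.

```typescript
// Hypothetical helper: convert "#rrggbb" into the "H S% L%" channel format
// expected by `hsl(var(--token) / <alpha-value>)` in tailwind.config.js.
function hexToHslChannels(hex: string): string {
  const match = /^#?([0-9a-f]{6})$/i.exec(hex.trim());
  if (!match) throw new Error(`Expected a 6-digit hex color, got "${hex}"`);
  const value = parseInt(match[1], 16);
  const r = ((value >> 16) & 0xff) / 255;
  const g = ((value >> 8) & 0xff) / 255;
  const b = (value & 0xff) / 255;

  const max = Math.max(r, g, b);
  const min = Math.min(r, g, b);
  const delta = max - min;
  const l = (max + min) / 2;

  // Standard RGB -> HSL conversion; grays (delta === 0) get hue 0, saturation 0.
  let h = 0;
  let s = 0;
  if (delta !== 0) {
    s = delta / (1 - Math.abs(2 * l - 1));
    if (max === r) h = 60 * (((g - b) / delta) % 6);
    else if (max === g) h = 60 * ((b - r) / delta + 2);
    else h = 60 * ((r - g) / delta + 4);
    if (h < 0) h += 360;
  }

  const round1 = (n: number) => Math.round(n * 10) / 10;
  return `${round1(h)} ${round1(s * 100)}% ${round1(l * 100)}%`;
}

// Hypothetical usage helper: set each token (e.g. "primary" -> --primary)
// on an element, such as the div that wraps the editor.
function applyThemeTokens(
  el: { style: { setProperty(name: string, value: string): void } },
  tokens: Record<string, string>
): void {
  for (const [name, hex] of Object.entries(tokens)) {
    el.style.setProperty(`--${name}`, hexToHslChannels(hex));
  }
}
```

In a Svelte component you could pass an element obtained with `bind:this`, e.g. `applyThemeTokens(wrapper, { primary: '#1d4ed8' })`; this assumes the default tokens are not redefined on an element nested inside that wrapper, since the closest definition of a CSS variable wins.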

To use the default theme, wrap your SvelteEditor component with EditorTheme:

<script lang="ts">
  import { SvelteEditor } from '@nextlint/svelte';
  import EditorTheme from '@nextlint/svelte/EditorTheme';
</script>

<div class="editor">
  <EditorTheme>
    <SvelteEditor content="" placeholder="Start editing..." />
  </EditorTheme>
</div>

EditorTheme simply imports the default theme defined in EditorTheme.scss:

<!-- EditorTheme.svelte -->
<script lang="ts">
  import './EditorTheme.scss';
</script>

<slot />

Nextlint editor uses nextlint/core, a headless editor with a set of plugins already installed. It can be used with any UI framework and is compatible with the tiptap and prosemirror plugin systems.

Nextlint Svelte also has some plugins written entirely in Svelte, and they are configurable:

Image: supports upload, embed, and the Unsplash API

| Name | Type | Description |
|---|---|---|
| content | Content | Initial editor content |
| onChange | (editor:Editor)=>void | Callback called whenever the editor changes |
| placeholder? | String | Placeholder displayed when the editor is empty |
| onCreated? | (editor:Editor)=>void | Callback triggered once when the editor is created |
| plugins? | PluginOptions | Customize plugin options |
| extensions? | Extensions | Customize editor extensions |

content

Type: HTMLContent | JSONContent | JSONContent[] | null

The initial content, either a JSONContent object or an HTML string.
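When the content comes from plain text (for example a saved draft), writing the JSONContent object by hand is tedious. A minimal sketch: the local `JSONContent` type below only loosely mirrors tiptap's type (an assumption for self-containment; in a real project import it from `@tiptap/core`), and `textToDoc` is a hypothetical helper that turns each non-empty line into a paragraph under a `doc` root node.

```typescript
// Loose local mirror of tiptap's JSONContent; import the real type
// from '@tiptap/core' in an actual project.
type JSONContent = {
  type?: string;
  attrs?: Record<string, unknown>;
  content?: JSONContent[];
  text?: string;
};

// Hypothetical helper: one paragraph per non-empty (trimmed) line.
function textToDoc(text: string): JSONContent {
  const paragraphs: JSONContent[] = text
    .split(/\r?\n/)
    .map((line) => line.trim())
    // ProseMirror does not allow empty text nodes, so blank lines are dropped
    // instead of being emitted as { type: 'text', text: '' }.
    .filter((line) => line.length > 0)
    .map((line) => ({
      type: 'paragraph',
      content: [{ type: 'text', text: line }]
    }));

  // A doc needs at least one block; an empty paragraph has no content array.
  return {
    type: 'doc',
    content: paragraphs.length > 0 ? paragraphs : [{ type: 'paragraph' }]
  };
}
```

The result can be passed straight to the prop, e.g. `<SvelteEditor content={textToDoc(savedDraft)} />`, where `savedDraft` is a hypothetical string from your app.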

<!-- Content can be an HTML string -->
<SvelteEditor
  content="<p>this is a paragraph content</p>"
/>

<!-- which is equivalent to this JSONContent -->
<SvelteEditor
  ...
  content={{
    type: 'doc',
    attrs: {},
    content: [{
      type: 'paragraph',
      attrs: {},
      content: [{
        type: 'text',
        text: 'this is a paragraph content'
      }]
    }]
  }}
/>

placeholder

Type: String | undefined

Default: undefined

The placeholder is displayed when the editor content is empty.

<SvelteEditor ... content="" placeholder="Press 'space' to trigger AI prompt" />

onChange

Type: (editor: Editor) => void

The callback fires whenever the editor changes (a state update or a selection change).
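Because onChange also fires on selection changes, a handler that persists the document may want to skip calls where the content did not actually change. A minimal sketch, assuming only that the editor passed to the callback exposes `getHTML()` (tiptap's `Editor` does); `createChangeSaver` and its `save` callback are hypothetical names.

```typescript
// The only part of tiptap's Editor this sketch relies on.
interface HtmlSource {
  getHTML(): string;
}

// Hypothetical helper: returns an onChange handler that calls `save`
// only when the serialized HTML differs from the last saved value.
function createChangeSaver(save: (html: string) => void) {
  let lastHtml: string | undefined;
  return (editor: HtmlSource): void => {
    const html = editor.getHTML();
    if (html === lastHtml) return; // selection-only change: nothing to save
    lastHtml = html;
    save(html);
  };
}
```

For example, `onChange={createChangeSaver(html => localStorage.setItem('draft', html))}`, where the `'draft'` key is arbitrary. Comparing serialized HTML is simple but costs one serialization per event; for very large documents a debounce may be preferable.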

<script lang="ts">
  let editor;
</script>

<SvelteEditor
  ...
  onChange={_editor => {
    editor = _editor;
  }}
/>

onCreated

Type: ((editor: Editor) => void) | undefined

Default: undefined

The callback fires once, after the editor finishes initializing.

<SvelteEditor
  ...
  onCreated={editor => {
    console.log('The editor is created and ready to use!');
  }}
/>

plugins

Type: PluginOptions | undefined

Default: undefined

type PluginOptions = {
  image?: ImagePluginOptions;
  gpt?: AskOptions;
  dropCursor?: DropcursorOptions;
  codeBlock?: NextlintCodeBlockOptions;
};

image

Type: ImagePluginOptions | undefined

Default: undefined

Configure the handleUpload function and set up an API key to fetch images from Unsplash.
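The example that follows returns a local object URL, which only lives as long as the page. To persist images, handleUpload would send the file to your own backend. A minimal sketch, assuming handleUpload may return a `Promise<string>` (not confirmed by this README, whose example returns a plain string) and assuming a hypothetical endpoint that stores the raw request body and responds with JSON `{ "url": "..." }`. `FetchLike` is a narrowed fetch signature so the handler can be tested offline; in the browser, pass the global `fetch`.

```typescript
// Narrowed fetch signature; the global `fetch` satisfies it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: Blob }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Hypothetical factory for an image plugin `handleUpload` function.
// A File is a Blob, so the returned handler accepts the uploaded file.
function makeUploadHandler(endpoint: string, fetchImpl: FetchLike) {
  return async (file: Blob): Promise<string> => {
    if (!file.type.startsWith('image/')) {
      throw new Error(`Refusing to upload non-image type "${file.type}"`);
    }
    const res = await fetchImpl(endpoint, {
      method: 'POST',
      headers: { 'Content-Type': file.type },
      body: file
    });
    if (!res.ok) throw new Error(`Upload failed with status ${res.status}`);
    // Assumed response shape: { url: string }
    const data = (await res.json()) as { url?: unknown };
    if (typeof data.url !== 'string') throw new Error('Response has no "url" string');
    return data.url;
  };
}
```

Validating the MIME type before uploading keeps non-image drops from reaching the server; whether the editor surfaces a rejected promise to the user is not covered here.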

<SvelteEditor
  ...
  plugins={{
    image: {
      handleUpload: (file) => {
        // handle upload here
        const blob = new Blob([file]);
        const previewUrl = URL.createObjectURL(blob);
        return previewUrl;
      },
      unsplash: {
        accessKey: 'UNSPLASH_API_KEY'
      }
    }
  }}
/>

gpt

Type: AskOptions | undefined

Default: undefined

Trigger the prompt on an empty line: the editor takes the question, calls the handler function from this config, and appends the result to the document. This lets you integrate any AI service outside the editor.
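As an illustration of "any AI service", here is a minimal sketch of a handler that forwards the question to an OpenAI-compatible Chat Completions endpoint (`POST /v1/chat/completions`, answer read from `choices[0].message.content`). The `makeAskHandler` factory, base URL, and model name are assumptions for illustration. Calling a provider directly from the browser exposes your API key, so in practice `baseUrl` would point at your own server-side proxy and `apiKey` would be omitted.

```typescript
// Narrowed fetch signature; the global `fetch` satisfies it.
type JsonFetch = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Only the response fields this sketch reads.
interface ChatCompletionResponse {
  choices?: { message?: { content?: string | null } }[];
}

// Hypothetical factory returning a `(question) => Promise<string>` handler.
function makeAskHandler(opts: {
  baseUrl: string; // e.g. your own proxy that adds the API key server-side
  model: string;
  fetchImpl: JsonFetch;
  apiKey?: string;
}) {
  return async (question: string): Promise<string> => {
    const headers: Record<string, string> = { 'Content-Type': 'application/json' };
    if (opts.apiKey) headers.Authorization = `Bearer ${opts.apiKey}`;
    const res = await opts.fetchImpl(`${opts.baseUrl}/v1/chat/completions`, {
      method: 'POST',
      headers,
      body: JSON.stringify({
        model: opts.model,
        messages: [{ role: 'user', content: question }]
      })
    });
    if (!res.ok) throw new Error(`AI request failed with status ${res.status}`);
    const data = (await res.json()) as ChatCompletionResponse;
    // Fall back to an empty string if the response carries no text.
    return data.choices?.[0]?.message?.content ?? '';
  };
}
```

The returned function has the same shape as the async `ask` function in the example that follows, so it could be passed in its place.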

<SvelteEditor
  ...
  plugins={{
    ask: async (question: string) => {
      // call any AI tool to get the result, then return
      // the result to the editor
      return 'result from any AI Backend';
    }
  }}
/>

dropCursor

Type: DropcursorOptions | undefined

Default: undefined

Configure the drop cursor's color, width, and class.

<SvelteEditor
  ...
  plugins={{
    dropCursor: {
      width: 2, // in pixels
      color: '#000'
    }
  }}
/>

codeBlock

Type: NextlintCodeBlockOptions | undefined

Default:

{
  themes: {
    dark: 'github-dark',
    light: 'github-light'
  },
  langs: []
}

The codeBlock theme syncs with the theme prop.

https://github.com/lynhan318/nextlint/assets/32099104/d5d5c72d-787d-4b16-882f-2cba0dbfaa35

<SvelteEditor
  ...
  content={''}
  onChange={editor.set}
  theme="light"
  plugins={{
    codeBlock: {
      langs: ['c', 'sh', 'javascript', 'html', 'typescript'],
      themes: {
        dark: 'vitesse-dark',
        light: 'vitesse-light'
      }
    }
  }}
/>

Please follow the contributing guidelines.

The MIT License (MIT). Please see License File for more information.


Alternative AI tools for nextlint

Similar Open Source Tools


opencode.nvim

Opencode.nvim is a neovim frontend for Opencode, a terminal-based AI coding agent. It provides a chat interface between neovim and the Opencode AI agent, capturing editor context to enhance prompts. The plugin maintains persistent sessions for continuous conversations with the AI assistant, similar to Cursor AI.

opencode.nvim

Opencode.nvim is a Neovim plugin that provides a simple and efficient way to browse, search, and open files in a project. It enhances the file navigation experience by offering features like fuzzy finding, file preview, and quick access to frequently used files. With Opencode.nvim, users can easily navigate through their project files, jump to specific locations, and manage their workflow more effectively. The plugin is designed to improve productivity and streamline the development process by simplifying file handling tasks within Neovim.

SwiftAgent

A type-safe, declarative framework for building AI agents in Swift, SwiftAgent is built on Apple FoundationModels. It allows users to compose agents by combining Steps in a declarative syntax similar to SwiftUI. The framework ensures compile-time checked input/output types, native Apple AI integration, structured output generation, and built-in security features like permission, sandbox, and guardrail systems. SwiftAgent is extensible with MCP integration, distributed agents, and a skills system. Users can install SwiftAgent with Swift 6.2+ on iOS 26+, macOS 26+, or Xcode 26+ using Swift Package Manager.

ai

The Vercel AI SDK is a library for building AI-powered streaming text and chat UIs. It provides React, Svelte, Vue, and Solid helpers for streaming text responses and building chat and completion UIs. The SDK also includes a React Server Components API for streaming Generative UI and first-class support for various AI providers such as OpenAI, Anthropic, Mistral, Perplexity, AWS Bedrock, Azure, Google Gemini, Hugging Face, Fireworks, Cohere, LangChain, Replicate, Ollama, and more. Additionally, it offers Node.js, Serverless, and Edge Runtime support, as well as lifecycle callbacks for saving completed streaming responses to a database in the same request.

capsule

Capsule is a secure and durable runtime for AI agents, designed to coordinate tasks in isolated environments. It allows for long-running workflows, large-scale processing, autonomous decision-making, and multi-agent systems. Tasks run in WebAssembly sandboxes with isolated execution, resource limits, automatic retries, and lifecycle tracking. It enables safe execution of untrusted code within AI agent systems.

opencode.nvim

opencode.nvim is a tool that integrates the opencode AI assistant with Neovim, allowing users to streamline editor-aware research, reviews, and requests. It provides features such as connecting to opencode instances, sharing editor context, input prompts with completions, executing commands, and monitoring state via statusline component. Users can define their own prompts, reload edited buffers in real-time, and forward Server-Sent-Events for automation. The tool offers sensible defaults with flexible configuration and API to fit various workflows, supporting ranges and dot-repeat in a Vim-like manner.

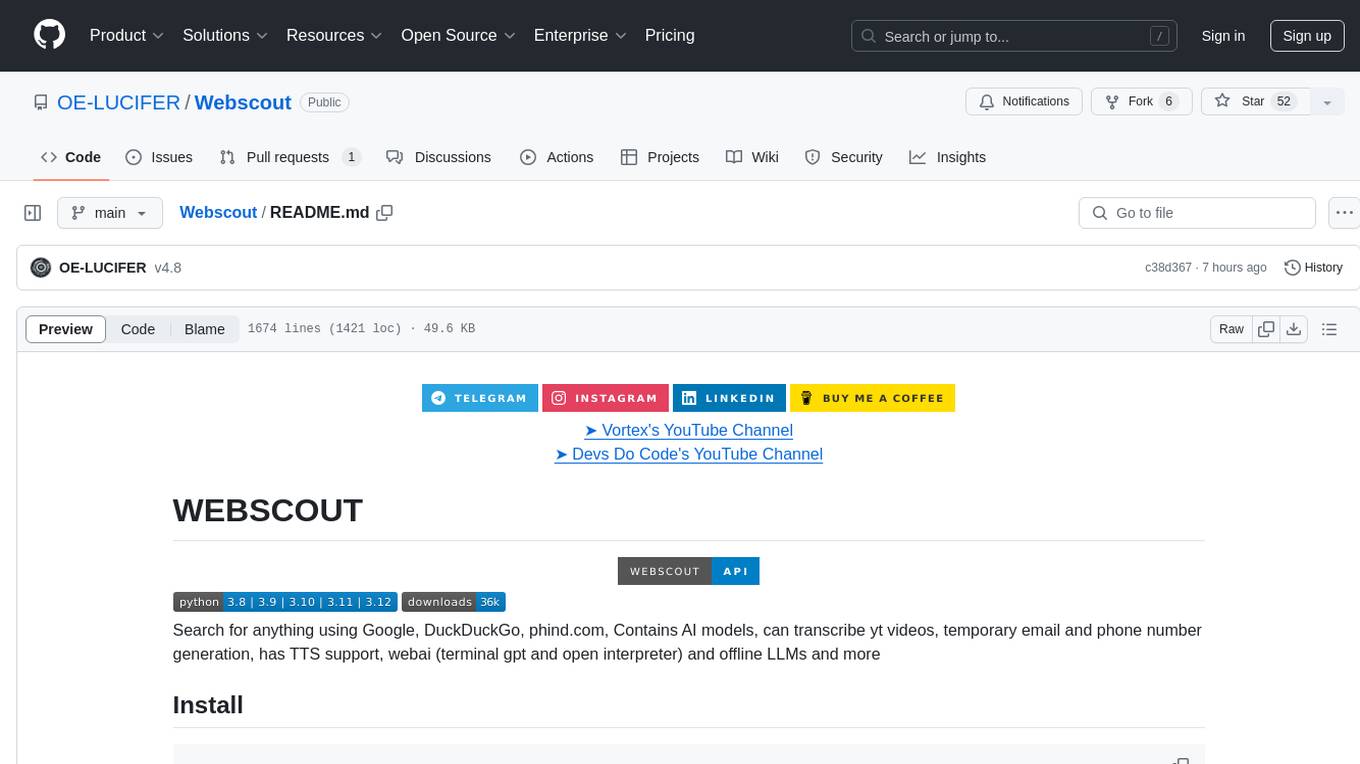

Webscout

Webscout is an all-in-one Python toolkit for web search, AI interaction, digital utilities, and more. It provides access to diverse search engines, cutting-edge AI models, temporary communication tools, media utilities, developer helpers, and powerful CLI interfaces through a unified library. With features like comprehensive search leveraging Google and DuckDuckGo, AI powerhouse for accessing various AI models, YouTube toolkit for video and transcript management, GitAPI for GitHub data extraction, Tempmail & Temp Number for privacy, Text-to-Speech conversion, GGUF conversion & quantization, SwiftCLI for CLI interfaces, LitPrinter for styled console output, LitLogger for logging, LitAgent for user agent generation, Text-to-Image generation, Scout for web parsing and crawling, Awesome Prompts for specialized tasks, Weather Toolkit, and AI Search Providers.

ax

Ax is a Typescript library that allows users to build intelligent agents inspired by agentic workflows and the Stanford DSP paper. It seamlessly integrates with multiple Large Language Models (LLMs) and VectorDBs to create RAG pipelines or collaborative agents capable of solving complex problems. The library offers advanced features such as streaming validation, multi-modal DSP, and automatic prompt tuning using optimizers. Users can easily convert documents of any format to text, perform smart chunking, embedding, and querying, and ensure output validation while streaming. Ax is production-ready, written in Typescript, and has zero dependencies.

react-native-rag

React Native RAG is a library that enables private, local RAGs to supercharge LLMs with a custom knowledge base. It offers modular and extensible components like `LLM`, `Embeddings`, `VectorStore`, and `TextSplitter`, with multiple integration options. The library supports on-device inference, vector store persistence, and semantic search implementation. Users can easily generate text responses, manage documents, and utilize custom components for advanced use cases.

Webscout

WebScout is a versatile tool that allows users to search for anything using Google, DuckDuckGo, and phind.com. It contains AI models, can transcribe YouTube videos, generate temporary email and phone numbers, has TTS support, webai (terminal GPT and open interpreter), and offline LLMs. It also supports features like weather forecasting, YT video downloading, temp mail and number generation, text-to-speech, advanced web searches, and more.

python-genai

The Google Gen AI SDK is a Python library that provides access to Google AI and Vertex AI services. It allows users to create clients for different services, work with parameter types, models, generate content, call functions, handle JSON response schemas, stream text and image content, perform async operations, count and compute tokens, embed content, generate and upscale images, edit images, work with files, create and get cached content, tune models, distill models, perform batch predictions, and more. The SDK supports various features like automatic function support, manual function declaration, JSON response schema support, streaming for text and image content, async methods, tuning job APIs, distillation, batch prediction, and more.

sdk

Varg is an AI video generation SDK that extends Vercel's AI SDK with capabilities for video, music, and lipsync. It allows users to generate images, videos, music, and more using familiar patterns and declarative JSX syntax. The SDK supports various models for image and video generation, speech synthesis, music generation, and background removal. Users can create reusable elements for character consistency, handle files from disk, URL, or buffer, and utilize layout helpers, transitions, and caption styles. Varg also offers a visual editor for video workflows with a code editor and node-based interface.

fittencode.nvim

Fitten Code AI Programming Assistant for Neovim provides fast completion using AI, asynchronous I/O, and support for various actions like document code, edit code, explain code, find bugs, generate unit test, implement features, optimize code, refactor code, start chat, and more. It offers features like accepting suggestions with Tab, accepting line with Ctrl + Down, accepting word with Ctrl + Right, undoing accepted text, automatic scrolling, and multiple HTTP/REST backends. It can run as a coc.nvim source or nvim-cmp source.

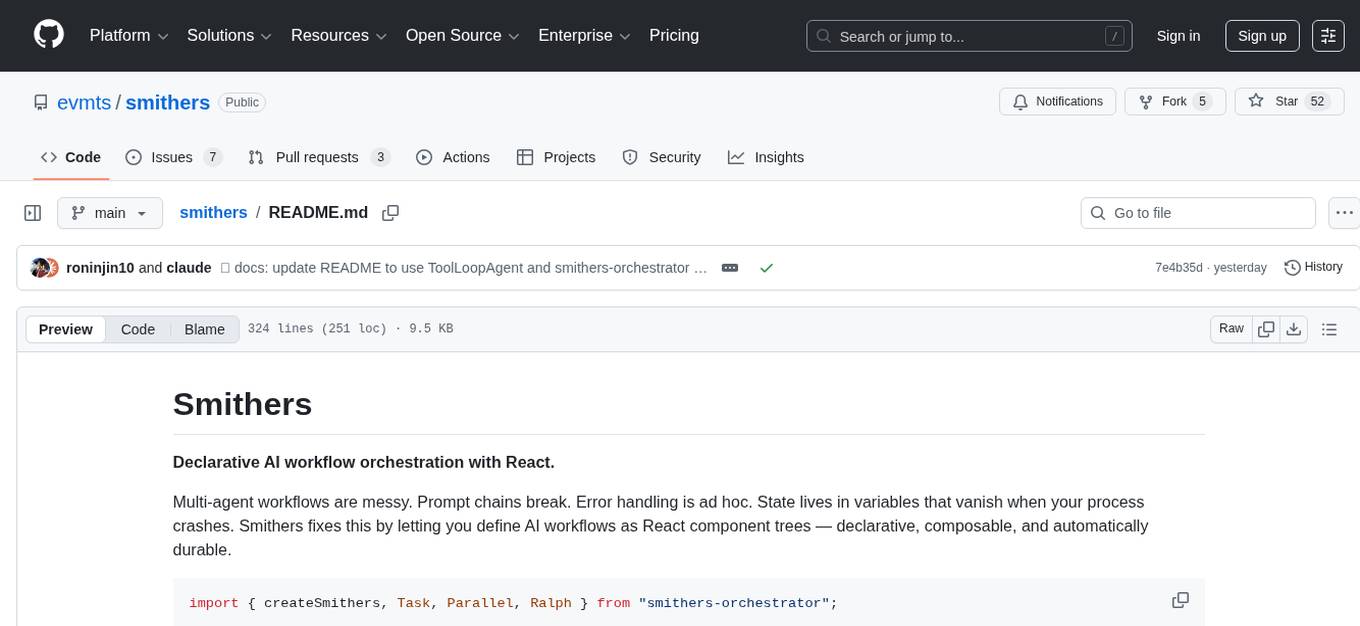

smithers

Smithers is a tool for declarative AI workflow orchestration using React components. It allows users to define complex multi-agent workflows as component trees, ensuring composability, durability, and error handling. The tool leverages React's re-rendering mechanism to persist outputs to SQLite, enabling crashed workflows to resume seamlessly. Users can define schemas for task outputs, create workflow instances, define agents, build workflow trees, and run workflows programmatically or via CLI. Smithers supports components for pipeline stages, structured output validation with Zod, MDX prompts, validation loops with Ralph, dynamic branching, and various built-in tools like read, edit, bash, grep, and write. The tool follows a clear workflow execution process involving defining, rendering, executing, re-rendering, and repeating tasks until completion, all while storing task results in SQLite for fault tolerance.

For similar tasks

ChatIDE

ChatIDE is an AI assistant that integrates with your IDE, allowing you to converse with OpenAI's ChatGPT or Anthropic's Claude within your development environment. It provides a seamless way to access AI-powered assistance while coding, enabling you to get real-time help, generate code snippets, debug errors, and brainstorm ideas without leaving your IDE.


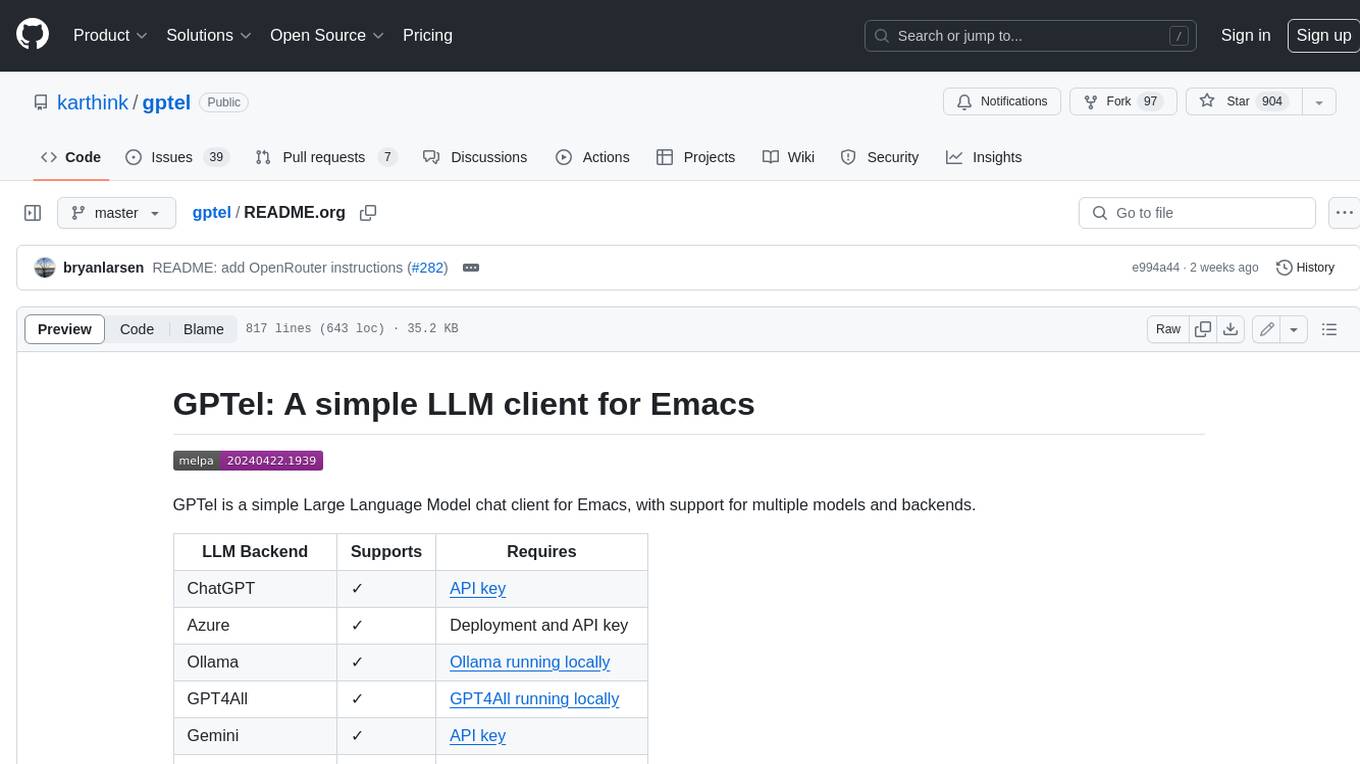

gptel

GPTel is a simple Large Language Model chat client for Emacs, with support for multiple models and backends. It's async and fast, streams responses, and interacts with LLMs from anywhere in Emacs. LLM responses are in Markdown or Org markup. Supports conversations and multiple independent sessions. Chats can be saved as regular Markdown/Org/Text files and resumed later. You can go back and edit your previous prompts or LLM responses when continuing a conversation. These will be fed back to the model. Don't like gptel's workflow? Use it to create your own for any supported model/backend with a simple API.

gollama

Gollama is a delightful tool that brings Ollama, your offline conversational AI companion, directly into your terminal. It provides a fun and interactive way to generate responses from various models without needing internet connectivity. Whether you're brainstorming ideas, exploring creative writing, or just looking for inspiration, Gollama is here to assist you. The tool offers an interactive interface, customizable prompts, multiple models selection, and visual feedback to enhance user experience. It can be installed via different methods like downloading the latest release, using Go, running with Docker, or building from source. Users can interact with Gollama through various options like specifying a custom base URL, prompt, model, and enabling raw output mode. The tool supports different modes like interactive, piped, CLI with image, and TUI with image. Gollama relies on third-party packages like bubbletea, glamour, huh, and lipgloss. The roadmap includes implementing piped mode, support for extracting codeblocks, copying responses/codeblocks to clipboard, GitHub Actions for automated releases, and downloading models directly from Ollama using the rest API. Contributions are welcome, and the project is licensed under the MIT License.

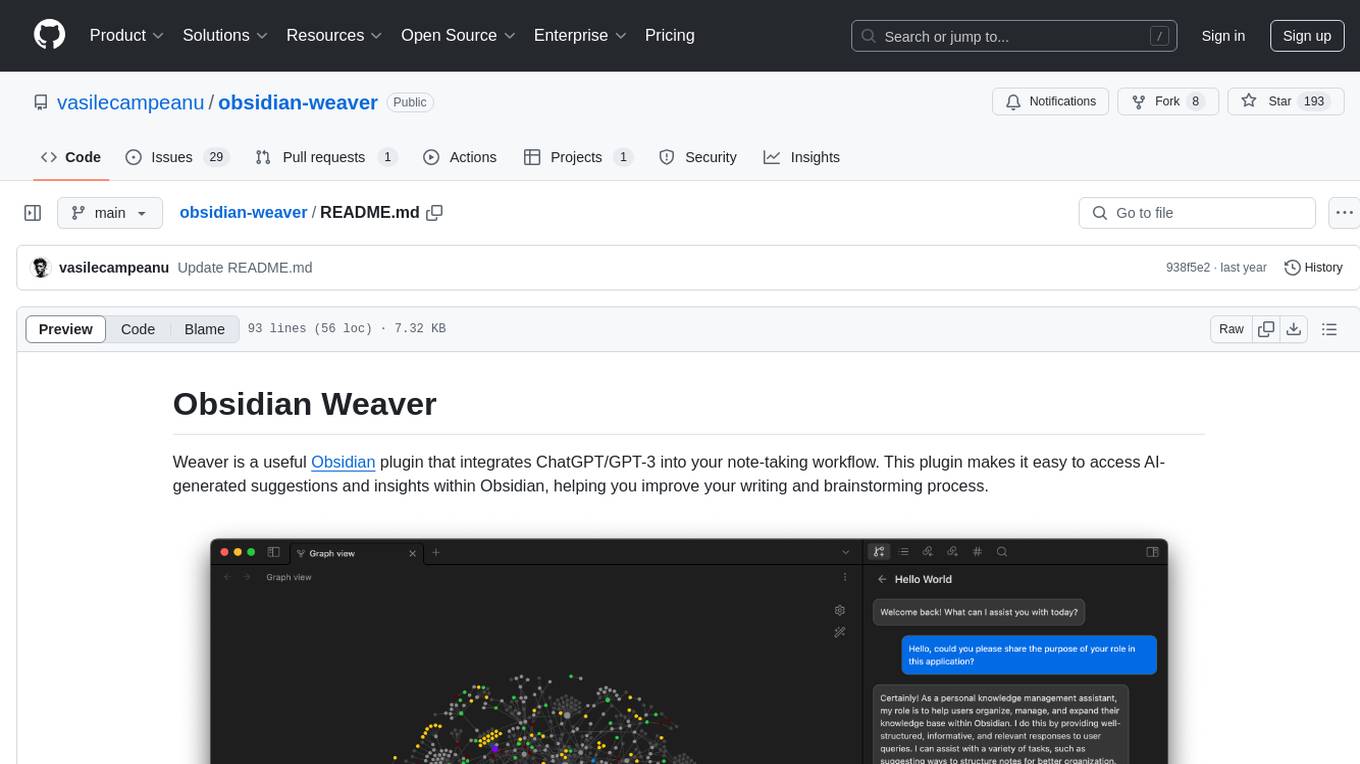

obsidian-weaver

Obsidian Weaver is a plugin that integrates ChatGPT/GPT-3 into the note-taking workflow of Obsidian. It allows users to easily access AI-generated suggestions and insights within Obsidian, enhancing the writing and brainstorming process. The plugin respects Obsidian's philosophy of storing notes locally, ensuring data security and privacy. Weaver offers features like creating new chat sessions with the AI assistant and receiving instant responses, all within the Obsidian environment. It provides a seamless integration with Obsidian's interface, making the writing process efficient and helping users stay focused. The plugin is constantly being improved with new features and updates to enhance the note-taking experience.

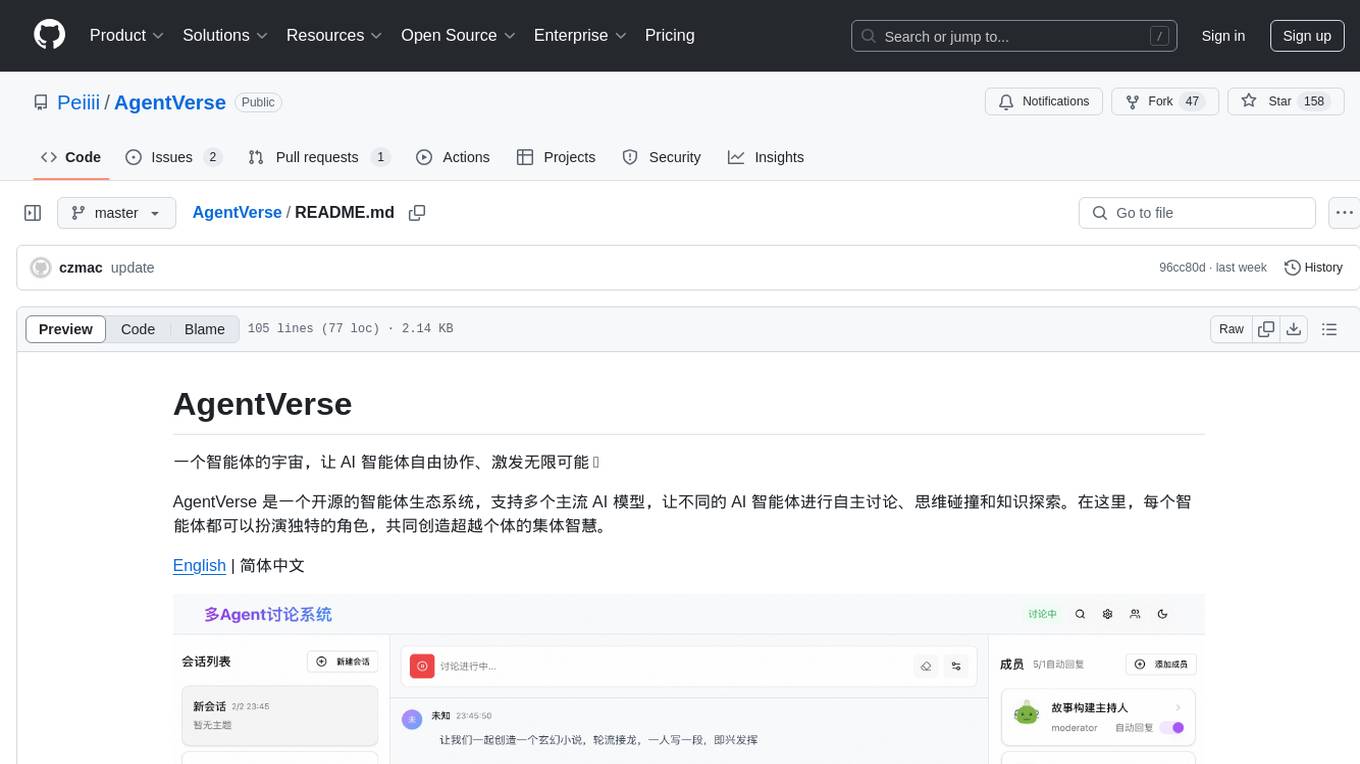

AgentVerse

AgentVerse is an open-source ecosystem for intelligent agents, supporting multiple mainstream AI models to facilitate autonomous discussions, thought collisions, and knowledge exploration. Each intelligent agent can play a unique role here, collectively creating wisdom beyond individuals.

Awesome-AI-Agents-HUB-for-CrewAI

A comprehensive repository featuring a curated collection of AI-powered projects and Multi Agent Systems (MAS) built with the Crew AI framework. It provides innovative AI solutions for various domains, including marketing automation, health planning, legal advice, and more. Users can explore and deploy AI agents, Multi Agent Systems, and advanced machine learning techniques through a diverse selection of projects that leverage state-of-the-art AI technologies like RAG (Retrieval-Augmented Generation). The projects offer practical applications and customizable solutions for integrating AI into existing workflows or new projects.

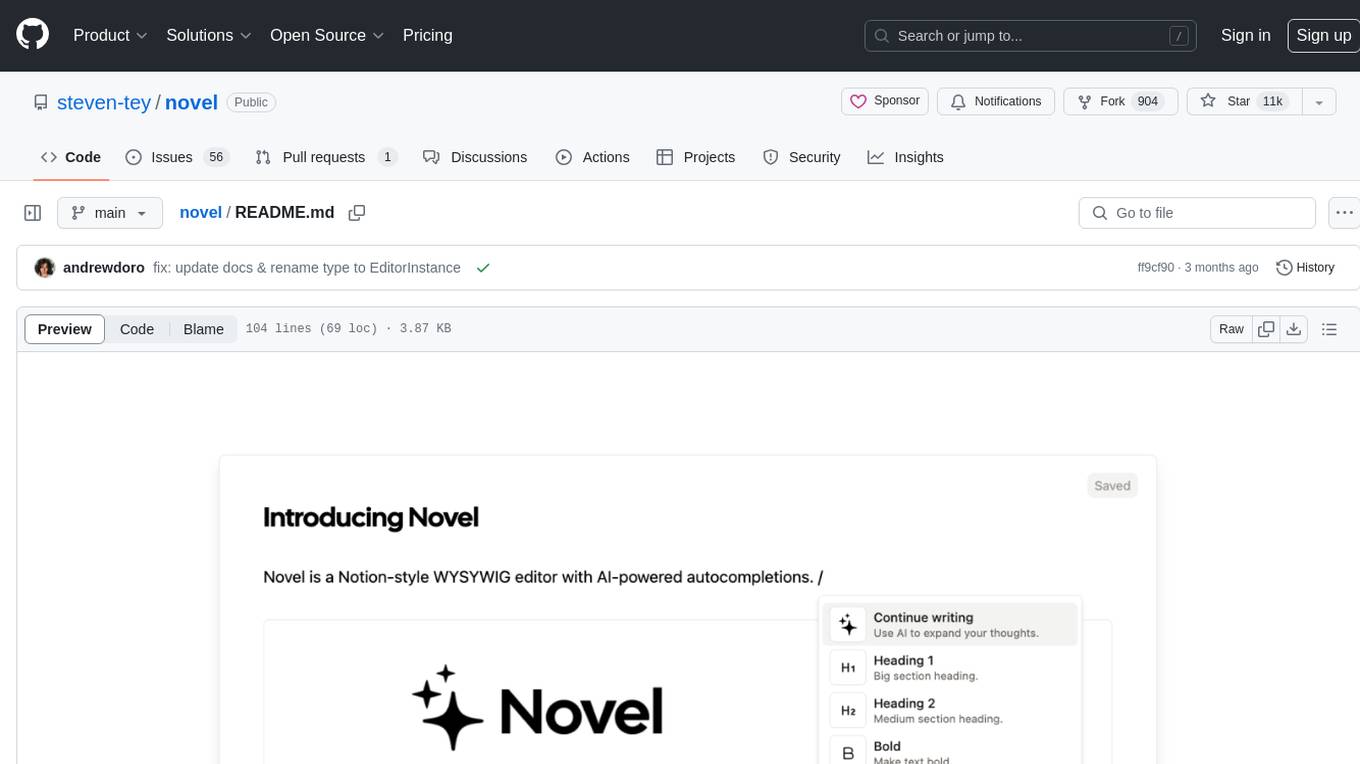

novel

Novel is an open-source Notion-style WYSIWYG editor with AI-powered autocompletions. It allows users to easily create and edit content with the help of AI suggestions. The tool is built on a modern tech stack and supports cross-framework development. Users can deploy their own version of Novel to Vercel with one click and contribute to the project by reporting bugs or making feature enhancements through pull requests.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.