gptel

A simple LLM client for Emacs

Stars: 2892

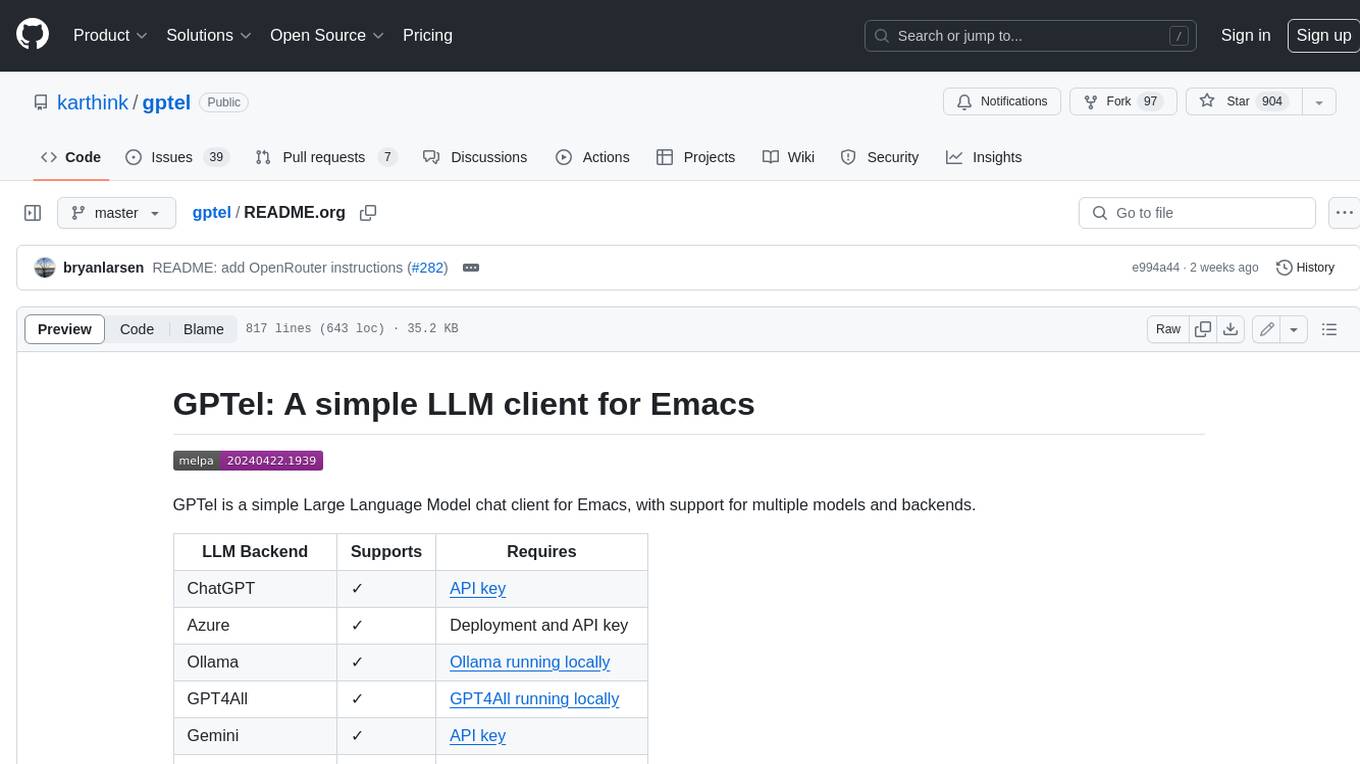

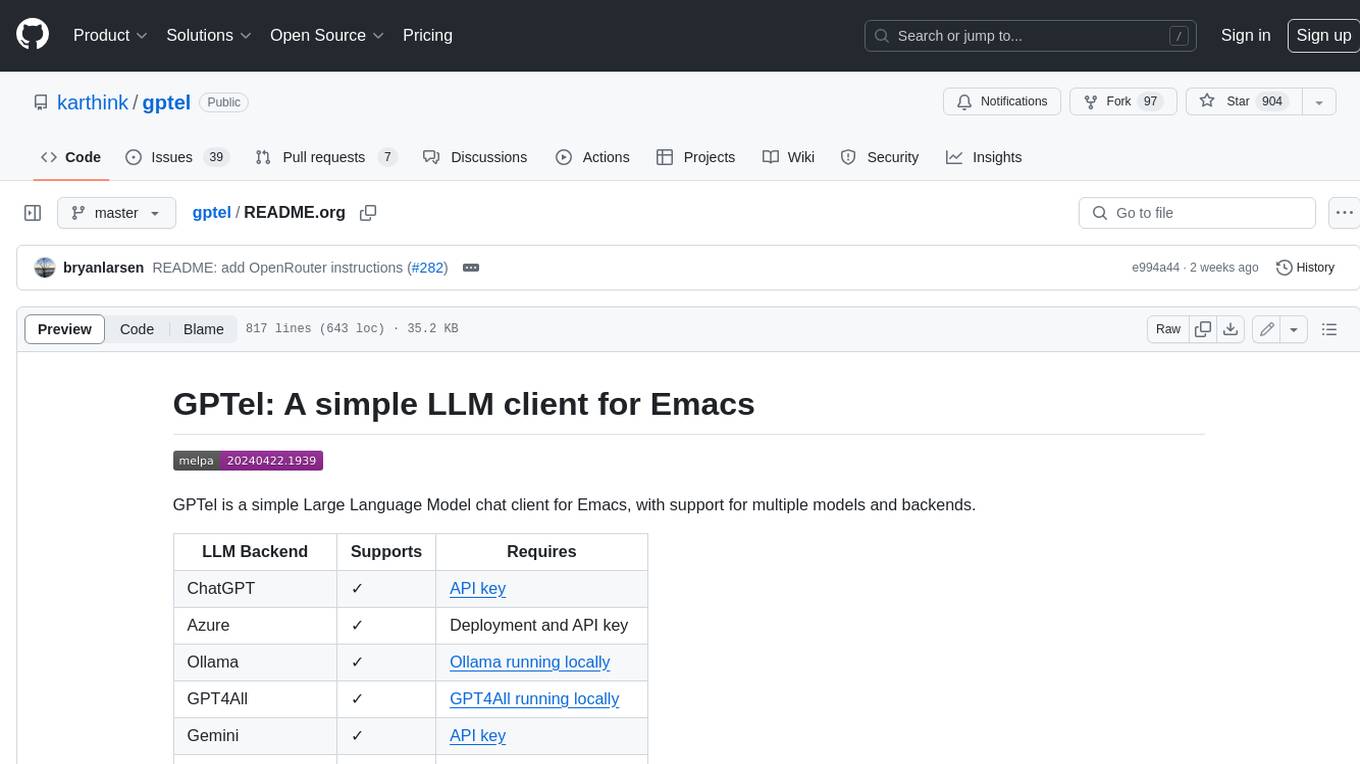

GPTel is a simple Large Language Model chat client for Emacs, with support for multiple models and backends. It's async and fast, streams responses, and interacts with LLMs from anywhere in Emacs. LLM responses are in Markdown or Org markup. Supports conversations and multiple independent sessions. Chats can be saved as regular Markdown/Org/Text files and resumed later. You can go back and edit your previous prompts or LLM responses when continuing a conversation. These will be fed back to the model. Don't like gptel's workflow? Use it to create your own for any supported model/backend with a simple API.

README:

#+title: gptel: A simple LLM client for Emacs

[[https://elpa.nongnu.org/nongnu/gptel.html][file:https://elpa.nongnu.org/nongnu/gptel.svg]] [[https://elpa.nongnu.org/nongnu-devel/gptel.html][file:https://elpa.nongnu.org/nongnu-devel/gptel.svg]] [[https://stable.melpa.org/#/gptel][file:https://stable.melpa.org/packages/gptel-badge.svg]] [[https://melpa.org/#/gptel][file:https://melpa.org/packages/gptel-badge.svg]]

gptel is a simple Large Language Model chat client for Emacs, with support for multiple models and backends. It works in the spirit of Emacs, available at any time and uniformly in any buffer.

General usage: ([[https://www.youtube.com/watch?v=bsRnh_brggM][YouTube Demo]])

https://user-images.githubusercontent.com/8607532/230516812-86510a09-a2fb-4cbd-b53f-cc2522d05a13.mp4

https://user-images.githubusercontent.com/8607532/230516816-ae4a613a-4d01-4073-ad3f-b66fa73c6e45.mp4

In-place usage

https://github.com/user-attachments/assets/cec11aec-52f6-412e-9e7a-9358e8b9b1bf

Tool use

https://github.com/user-attachments/assets/5f993659-4cfd-49fa-b5cd-19c55766b9b2

https://github.com/user-attachments/assets/8f57c20b-e1b0-4d86-b972-f46fb90ae1e7

See also [[https://youtu.be/g1VMGhC5gRU][this youtube demo (2 minutes)]] by Armin Darvish.

https://github-production-user-asset-6210df.s3.amazonaws.com/8607532/278854024-ae1336c4-5b87-41f2-83e9-e415349d6a43.mp4

- Interact with LLMs from anywhere in Emacs (any buffer, shell, minibuffer, wherever).

- LLM responses are in Markdown or Org markup.

- Supports multiple independent conversations, one-off ad hoc interactions and anything in between.

- Supports tool-use to equip LLMs with agentic capabilities.

- Supports Model Context Protocol (MCP) integration using [[https://github.com/lizqwerscott/mcp.el][mcp.el]].

- Supports multi-modal input (include images, documents).

- Supports "reasoning" content in LLM responses.

- Save chats as regular Markdown/Org/Text files and resume them later.

- Edit your previous prompts or LLM responses when continuing a conversation. These will be fed back to the model.

- Supports introspection, so you can see /exactly/ what will be sent. Inspect and modify queries before sending them.

- Pause multi-stage requests at an intermediate stage and resume them later.

- Don't like gptel's workflow? Use it to create your own for any supported model/backend with a [[https://github.com/karthink/gptel/wiki/Defining-custom-gptel-commands][simple API]].

gptel uses Curl if available, but falls back to the built-in url-retrieve to work without external dependencies.

** Contents :toc:

- [[#installation][Installation]]

- [[#straight][Straight]]

- [[#manual][Manual]]

- [[#doom-emacs][Doom Emacs]]

- [[#spacemacs][Spacemacs]]

- [[#setup][Setup]]

- [[#chatgpt][ChatGPT]]

- [[#other-llm-backends][Other LLM backends]]

- [[#optional-securing-api-keys-with-authinfo][(Optional) Securing API keys with =authinfo=]]

- [[#azure][Azure]]

- [[#gpt4all][GPT4All]]

- [[#ollama][Ollama]]

- [[#open-webui][Open WebUI]]

- [[#gemini][Gemini]]

- [[#llamacpp-or-llamafile][Llama.cpp or Llamafile]]

- [[#kagi-fastgpt--summarizer][Kagi (FastGPT & Summarizer)]]

- [[#togetherai][together.ai]]

- [[#anyscale][Anyscale]]

- [[#perplexity][Perplexity]]

- [[#anthropic-claude][Anthropic (Claude)]]

- [[#groq][Groq]]

- [[#mistral-le-chat][Mistral Le Chat]]

- [[#openrouter][OpenRouter]]

- [[#privategpt][PrivateGPT]]

- [[#deepseek][DeepSeek]]

- [[#sambanova-deepseek][Sambanova (Deepseek)]]

- [[#cerebras][Cerebras]]

- [[#github-models][Github Models]]

- [[#novita-ai][Novita AI]]

- [[#xai][xAI]]

- [[#aiml-api][AI/ML API]]

- [[#github-copilotchat][GitHub CopilotChat]]

- [[#aws-bedrock][AWS Bedrock]]

- [[#moonshot-kimi][Moonshot (Kimi)]]

- [[#usage][Usage]]

- [[#in-any-buffer][In any buffer:]]

- [[#in-a-dedicated-chat-buffer][In a dedicated chat buffer:]]

- [[#including-media-images-documents-or-plain-text-files-with-requests][Including media (images, documents or plain-text files) with requests]]

- [[#save-and-restore-your-chat-sessions][Save and restore your chat sessions]]

- [[#setting-options-backend-model-request-parameters-system-prompts-and-more][Setting options (backend, model, request parameters, system prompts and more)]]

- [[#include-more-context-with-requests][Include more context with requests]]

- [[#handle-reasoning-content][Handle "reasoning" content]]

- [[#tool-use][Tool use]]

- [[#defining-gptel-tools][Defining gptel tools]]

- [[#selecting-tools][Selecting tools]]

- [[#model-context-protocol-mcp-integration][Model Context Protocol (MCP) integration]]

- [[#rewrite-refactor-or-fill-in-a-region][Rewrite, refactor or fill in a region]]

- [[#extra-org-mode-conveniences][Extra Org mode conveniences]]

- [[#introspection-examine-debug-or-modify-requests][Introspection (examine, debug or modify requests)]]

- [[#faq][FAQ]]

- [[#chat-buffer-ui][Chat buffer UI]]

- [[#i-want-the-window-to-scroll-automatically-as-the-response-is-inserted][I want the window to scroll automatically as the response is inserted]]

- [[#i-want-the-cursor-to-move-to-the-next-prompt-after-the-response-is-inserted][I want the cursor to move to the next prompt after the response is inserted]]

- [[#i-want-to-change-the-formatting-of-the-prompt-and-llm-response][I want to change the formatting of the prompt and LLM response]]

- [[#how-does-gptel-distinguish-between-user-prompts-and-llm-responses][How does gptel distinguish between user prompts and LLM responses?]]

- [[#transient-menu-behavior][Transient menu behavior]]

- [[#i-want-to-set-gptel-options-but-only-for-this-buffer][I want to set gptel options but only for this buffer]]

- [[#i-want-the-transient-menu-options-to-be-saved-so-i-only-need-to-set-them-once][I want the transient menu options to be saved so I only need to set them once]]

- [[#using-the-transient-menu-leaves-behind-extra-windows][Using the transient menu leaves behind extra windows]]

- [[#can-i-change-the-transient-menu-key-bindings][Can I change the transient menu key bindings?]]

- [[#doom-emacs-sending-a-query-from-the-gptel-menu-fails-because-of-a-key-conflict-with-org-mode][(Doom Emacs) Sending a query from the gptel menu fails because of a key conflict with Org mode]]

- [[#miscellaneous][Miscellaneous]]

- [[#i-want-to-use-gptel-in-a-way-thats-not-supported-by-gptel-send-or-the-options-menu][I want to use gptel in a way that's not supported by =gptel-send= or the options menu]]

- [[#chatgpt-i-get-the-error-http2-429-you-exceeded-your-current-quota][(ChatGPT) I get the error "(HTTP/2 429) You exceeded your current quota"]]

- [[#why-another-llm-client][Why another LLM client?]]

- [[#additional-configuration][Additional Configuration]]

- [[#option-presets][Option presets]]

- [[#applying-presets-to-requests-automatically][Applying presets to requests automatically]]

- [[#alternatives][Alternatives]]

- [[#packages-using-gptel][Packages using gptel]]

- [[#acknowledgments][Acknowledgments]]

** Installation

Note: gptel requires Transient 0.7.4 or higher. Transient is a built-in package and Emacs does not update it by default. Ensure that =package-install-upgrade-built-in= is true, or update Transient manually.
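As a minimal sketch of the first option in the note above, something like the following could go in your init file before installing gptel. It assumes Emacs 29.1 or later, where =package-install-upgrade-built-in= is available; on older versions, update Transient manually instead.

#+begin_src emacs-lisp
;; Assumption: Emacs 29.1+, where this user option exists.
;; Allow package.el to upgrade built-in packages such as Transient.
(setq package-install-upgrade-built-in t)
#+end_src

With this set, =M-x package-install= ⏎ =transient= should fetch a newer Transient from your configured package archives.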

- Release version: =M-x package-install= ⏎ =gptel= in Emacs.

- Development snapshot: Add MELPA or NonGNU-devel ELPA to your list of package sources, then install with =M-x package-install= ⏎ =gptel=.

- Optional: Install =markdown-mode=.

*** Straight

#+begin_src emacs-lisp
(straight-use-package 'gptel)
#+end_src

*** Manual

Note: gptel requires Transient 0.7.4 or higher. Transient is a built-in package and Emacs does not update it by default. Ensure that =package-install-upgrade-built-in= is true, or update Transient manually.

Clone or download this repository and run =M-x package-install-file= ⏎ on the repository directory.

*** Doom Emacs

In =packages.el=

#+begin_src emacs-lisp
(package! gptel :recipe (:nonrecursive t))
#+end_src

In =config.el=

#+begin_src emacs-lisp
(use-package! gptel
  :config
  (setq! gptel-api-key "your key"))
#+end_src

"your key" can be the API key itself, or (safer) a function that returns the key. Setting =gptel-api-key= is optional; you will be asked for a key if it's not found.

*** Spacemacs

In your =.spacemacs= file, add =llm-client= to =dotspacemacs-configuration-layers=.

#+begin_src emacs-lisp
(llm-client :variables
            llm-client-enable-gptel t)
#+end_src

** Setup

gptel supports a number of LLM providers. Setup instructions for each follow.

*** ChatGPT

Procure an [[https://platform.openai.com/account/api-keys][OpenAI API key]].

Optional: Set =gptel-api-key= to the key. Alternatively, you may choose a more secure method such as:

- Setting it to a custom function that returns the key.

- Leaving it set to the default =gptel-api-key-from-auth-source= function which reads keys from =~/.authinfo=. (See [[#optional-securing-api-keys-with-authinfo][authinfo details]])
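As a rough illustration of the "custom function" option above, the sketch below reads the key from an environment variable. The variable name =OPENAI_API_KEY= is only an assumption for this example; use whatever name and storage mechanism suits your setup.

#+begin_src emacs-lisp
;; Assumption: the key is exported in an environment variable named
;; OPENAI_API_KEY (a hypothetical name chosen for this example).
(setq gptel-api-key
      (lambda ()
        (or (getenv "OPENAI_API_KEY")
            (user-error "OPENAI_API_KEY is not set"))))
#+end_src

Because the function only runs when a key is needed, the key itself never has to appear in your configuration files.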

*** Other LLM backends

ChatGPT is configured out of the box. If you want to use other LLM backends (like Ollama, Claude/Anthropic or Gemini) you need to register and configure them first.

As an example, registering a backend typically looks like the following:

#+begin_src emacs-lisp
(gptel-make-anthropic "Claude" :stream t :key gptel-api-key)
#+end_src

Once this backend is registered, you'll see model names prefixed by "Claude:" appear in gptel's menu.

See below for details on your preferred LLM provider, including local LLMs.

**** (Optional) Securing API keys with =authinfo=

You can use Emacs' built-in support for =authinfo= to store API keys required by gptel. Add your API keys to =~/.authinfo=, and leave =gptel-api-key= set to its default. By default, the API endpoint DNS name (e.g. "api.openai.com") is used as HOST and "apikey" as USER.

#+begin_src authinfo
machine api.openai.com login apikey password sk-secret-openai-api-key-goes-here
machine api.anthropic.com login apikey password sk-secret-anthropic-api-key-goes-here
#+end_src

**** Azure

Register a backend with

#+begin_src emacs-lisp
(gptel-make-azure "Azure-1" ;Name, whatever you'd like
  :protocol "https" ;Optional -- https is the default
  :host "YOUR_RESOURCE_NAME.openai.azure.com"
  :endpoint "/openai/deployments/YOUR_DEPLOYMENT_NAME/chat/completions?api-version=2023-05-15" ;or equivalent
  :stream t ;Enable streaming responses
  :key #'gptel-api-key
  :models '(gpt-3.5-turbo gpt-4))
#+end_src

Refer to the documentation of =gptel-make-azure= to set more parameters.

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'gpt-3.5-turbo
 gptel-backend (gptel-make-azure "Azure-1"
                 :protocol "https"
                 :host "YOUR_RESOURCE_NAME.openai.azure.com"
                 :endpoint "/openai/deployments/YOUR_DEPLOYMENT_NAME/chat/completions?api-version=2023-05-15"
                 :stream t
                 :key #'gptel-api-key
                 :models '(gpt-3.5-turbo gpt-4)))
#+end_src

**** GPT4All

Register a backend with

#+begin_src emacs-lisp
(gptel-make-gpt4all "GPT4All" ;Name of your choosing
  :protocol "http"
  :host "localhost:4891" ;Where it's running
  :models '(mistral-7b-openorca.Q4_0.gguf)) ;Available models
#+end_src

These are the required parameters; refer to the documentation of =gptel-make-gpt4all= for more.

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above. Additionally you may want to increase the response token size since GPT4All uses very short (often truncated) responses by default.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-max-tokens 500
 gptel-model 'mistral-7b-openorca.Q4_0.gguf
 gptel-backend (gptel-make-gpt4all "GPT4All"
                 :protocol "http"
                 :host "localhost:4891"
                 :models '(mistral-7b-openorca.Q4_0.gguf)))
#+end_src

**** Ollama

Register a backend with

#+begin_src emacs-lisp
(gptel-make-ollama "Ollama" ;Any name of your choosing
  :host "localhost:11434" ;Where it's running
  :stream t ;Stream responses
  :models '(mistral:latest)) ;List of models
#+end_src

These are the required parameters; refer to the documentation of =gptel-make-ollama= for more.

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'mistral:latest
 gptel-backend (gptel-make-ollama "Ollama"
                 :host "localhost:11434"
                 :stream t
                 :models '(mistral:latest)))
#+end_src

**** Open WebUI

[[https://openwebui.com/][Open WebUI]] is an open source, self-hosted system which provides a multi-user web chat interface and an API endpoint for accessing LLMs, especially LLMs running locally on inference servers like Ollama.

Because it presents an OpenAI-compatible endpoint, you use =gptel-make-openai= to register it as a backend.

For instance, you can use this form to register a backend for a local instance of Open WebUI served via http on port 3000:

#+begin_src emacs-lisp
(gptel-make-openai "OpenWebUI"
  :host "localhost:3000"
  :protocol "http"
  :key "KEY_FOR_ACCESSING_OPENWEBUI"
  :endpoint "/api/chat/completions"
  :stream t
  :models '("gemma3n:latest"))
#+end_src

Or if you are running Open WebUI on another host on your local network (box.local), serving via https with self-signed certificates, this will work:

#+begin_src emacs-lisp
(gptel-make-openai "OpenWebUI"
  :host "box.local"
  :curl-args '("--insecure") ; needed for self-signed certs
  :key "KEY_FOR_ACCESSING_OPENWEBUI"
  :endpoint "/api/chat/completions"
  :stream t
  :models '("gemma3n:latest"))
#+end_src

To find your API key in Open WebUI, click your user name in the bottom left, then Settings, then Account, and click Show in the API Keys section.

Refer to the documentation of =gptel-make-openai= for more configuration options.

You can pick this backend from the menu when using gptel (see [[#usage][Usage]])

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model "gemma3n:latest"
 gptel-backend (gptel-make-openai "OpenWebUI"
                 :host "localhost:3000"
                 :protocol "http"
                 :key "KEY_FOR_ACCESSING_OPENWEBUI"
                 :endpoint "/api/chat/completions"
                 :stream t
                 :models '("gemma3n:latest")))
#+end_src

**** Gemini

Register a backend with

#+begin_src emacs-lisp
;; :key can be a function that returns the API key.
(gptel-make-gemini "Gemini" :key "YOUR_GEMINI_API_KEY" :stream t)
#+end_src

These are the required parameters; refer to the documentation of =gptel-make-gemini= for more.

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'gemini-2.5-pro-exp-03-25
 gptel-backend (gptel-make-gemini "Gemini"
                 :key "YOUR_GEMINI_API_KEY"
                 :stream t))
#+end_src

**** Llama.cpp or Llamafile

(If using a llamafile, run a [[https://github.com/Mozilla-Ocho/llamafile#other-example-llamafiles][server llamafile]] instead of a "command-line llamafile", and a model that supports text generation.)

Register a backend with

#+begin_src emacs-lisp
;; Llama.cpp offers an OpenAI compatible API
(gptel-make-openai "llama-cpp" ;Any name
  :stream t ;Stream responses
  :protocol "http"
  :host "localhost:8000" ;Llama.cpp server location
  :models '(test)) ;Any names, doesn't matter for Llama
#+end_src

These are the required parameters; refer to the documentation of =gptel-make-openai= for more.

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'test
 gptel-backend (gptel-make-openai "llama-cpp"
                 :stream t
                 :protocol "http"
                 :host "localhost:8000"
                 :models '(test)))
#+end_src

**** Kagi (FastGPT & Summarizer)

Kagi's FastGPT model and the Universal Summarizer are both supported. A couple of notes:

- Universal Summarizer: If there is a URL at point, the summarizer will summarize the contents of the URL. Otherwise the context sent to the model is the same as always: the buffer text up to point, or the contents of the region if the region is active.

- Kagi models do not support multi-turn conversations; interactions are "one-shot". They also do not support streaming responses.

Register a backend with

#+begin_src emacs-lisp
(gptel-make-kagi "Kagi" ;any name
  :key "YOUR_KAGI_API_KEY") ;can be a function that returns the key
#+end_src

These are the required parameters; refer to the documentation of =gptel-make-kagi= for more.

You can pick this backend and the model (fastgpt/summarizer) from the transient menu when using gptel.

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'fastgpt
 gptel-backend (gptel-make-kagi "Kagi"
                 :key "YOUR_KAGI_API_KEY"))
#+end_src

The alternatives to =fastgpt= include =summarize:cecil=, =summarize:agnes=, =summarize:daphne= and =summarize:muriel=. The difference between the summarizer engines is [[https://help.kagi.com/kagi/api/summarizer.html#summarization-engines][documented here]].
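For example, to default to one of the summarizer engines rather than FastGPT, a configuration along these lines should work. This is a sketch that assumes a backend named "Kagi" has already been registered as shown earlier in this section; the choice of =summarize:agnes= is arbitrary.

#+begin_src emacs-lisp
;; Assumption: a backend named "Kagi" was registered earlier with
;; `gptel-make-kagi'.  The engine choice here is arbitrary.
(setq gptel-model 'summarize:agnes
      gptel-backend (gptel-get-backend "Kagi"))
#+end_src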

**** together.ai

Register a backend with

#+begin_src emacs-lisp
;; Together.ai offers an OpenAI compatible API
(gptel-make-openai "TogetherAI" ;Any name you want
  :host "api.together.xyz"
  :key "your-api-key" ;can be a function that returns the key
  :stream t
  :models '(;; has many more, check together.ai
            mistralai/Mixtral-8x7B-Instruct-v0.1
            codellama/CodeLlama-13b-Instruct-hf
            codellama/CodeLlama-34b-Instruct-hf))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]])

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'mistralai/Mixtral-8x7B-Instruct-v0.1
 gptel-backend
 (gptel-make-openai "TogetherAI"
   :host "api.together.xyz"
   :key "your-api-key"
   :stream t
   :models '(;; has many more, check together.ai
             mistralai/Mixtral-8x7B-Instruct-v0.1
             codellama/CodeLlama-13b-Instruct-hf
             codellama/CodeLlama-34b-Instruct-hf)))
#+end_src

**** Anyscale

Register a backend with

#+begin_src emacs-lisp
;; Anyscale offers an OpenAI compatible API
(gptel-make-openai "Anyscale" ;Any name you want
  :host "api.endpoints.anyscale.com"
  :key "your-api-key" ;can be a function that returns the key
  :models '(;; has many more, check anyscale
            mistralai/Mixtral-8x7B-Instruct-v0.1))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]])

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'mistralai/Mixtral-8x7B-Instruct-v0.1
 gptel-backend
 (gptel-make-openai "Anyscale"
   :host "api.endpoints.anyscale.com"
   :key "your-api-key"
   :models '(;; has many more, check anyscale
             mistralai/Mixtral-8x7B-Instruct-v0.1)))
#+end_src

**** Perplexity

Register a backend with

#+begin_src emacs-lisp
(gptel-make-perplexity "Perplexity" ;Any name you want
  :key "your-api-key" ;can be a function that returns the key
  :stream t) ;If you want responses to be streamed
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]])

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'sonar
 gptel-backend (gptel-make-perplexity "Perplexity"
                 :key "your-api-key"
                 :stream t))
#+end_src

**** Anthropic (Claude)

Register a backend with

#+begin_src emacs-lisp
(gptel-make-anthropic "Claude" ;Any name you want
  :stream t ;Streaming responses
  :key "your-api-key")
#+end_src

The =:key= can be a function that returns the key (more secure).

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'claude-3-sonnet-20240229 ; "claude-3-opus-20240229" also available
 gptel-backend (gptel-make-anthropic "Claude"
                 :stream t
                 :key "your-api-key"))
#+end_src

***** (Optional) Interim support for Claude 3.7 Sonnet

To use the Claude 3.7 Sonnet model in its "thinking" mode, you can define a second Claude backend and select it via the UI or elisp:

#+begin_src emacs-lisp
(gptel-make-anthropic "Claude-thinking" ;Any name you want
  :key "your-API-key"
  :stream t
  :models '(claude-sonnet-4-20250514 claude-3-7-sonnet-20250219)
  :request-params '(:thinking (:type "enabled" :budget_tokens 2048)
                    :max_tokens 4096))
#+end_src

You can set the reasoning budget tokens and max tokens for this usage via the =:budget_tokens= and =:max_tokens= keys here, respectively.

You can control whether/how the reasoning output is shown via gptel's menu or =gptel-include-reasoning=, see [[#handle-reasoning-content][handling reasoning content]].

**** Groq

Register a backend with

#+begin_src emacs-lisp
;; Groq offers an OpenAI compatible API
(gptel-make-openai "Groq" ;Any name you want
  :host "api.groq.com"
  :endpoint "/openai/v1/chat/completions"
  :stream t
  :key "your-api-key" ;can be a function that returns the key
  :models '(llama-3.1-70b-versatile
            llama-3.1-8b-instant
            llama3-70b-8192
            llama3-8b-8192
            mixtral-8x7b-32768
            gemma-7b-it))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]). Note that Groq is fast enough that you could easily set =:stream nil= and still get near-instant responses.

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'mixtral-8x7b-32768
 gptel-backend
 (gptel-make-openai "Groq"
   :host "api.groq.com"
   :endpoint "/openai/v1/chat/completions"
   :stream t
   :key "your-api-key"
   :models '(llama-3.1-70b-versatile
             llama-3.1-8b-instant
             llama3-70b-8192
             llama3-8b-8192
             mixtral-8x7b-32768
             gemma-7b-it)))
#+end_src

**** Mistral Le Chat

Register a backend with

#+begin_src emacs-lisp
;; Mistral offers an OpenAI compatible API
(gptel-make-openai "MistralLeChat" ;Any name you want
  :host "api.mistral.ai"
  :endpoint "/v1/chat/completions"
  :protocol "https"
  :key "your-api-key" ;can be a function that returns the key
  :models '("mistral-small"))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'mistral-small
 gptel-backend
 (gptel-make-openai "MistralLeChat" ;Any name you want
   :host "api.mistral.ai"
   :endpoint "/v1/chat/completions"
   :protocol "https"
   :key "your-api-key" ;can be a function that returns the key
   :models '("mistral-small")))
#+end_src

**** OpenRouter

Register a backend with

#+begin_src emacs-lisp
;; OpenRouter offers an OpenAI compatible API
(gptel-make-openai "OpenRouter" ;Any name you want
  :host "openrouter.ai"
  :endpoint "/api/v1/chat/completions"
  :stream t
  :key "your-api-key" ;can be a function that returns the key
  :models '(openai/gpt-3.5-turbo
            mistralai/mixtral-8x7b-instruct
            meta-llama/codellama-34b-instruct
            codellama/codellama-70b-instruct
            google/palm-2-codechat-bison-32k
            google/gemini-pro))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 ;; The default model should be one of the models listed for this backend
 gptel-model 'mistralai/mixtral-8x7b-instruct
 gptel-backend
 (gptel-make-openai "OpenRouter" ;Any name you want
   :host "openrouter.ai"
   :endpoint "/api/v1/chat/completions"
   :stream t
   :key "your-api-key" ;can be a function that returns the key
   :models '(openai/gpt-3.5-turbo
             mistralai/mixtral-8x7b-instruct
             meta-llama/codellama-34b-instruct
             codellama/codellama-70b-instruct
             google/palm-2-codechat-bison-32k
             google/gemini-pro)))
#+end_src

**** PrivateGPT

Register a backend with

#+begin_src emacs-lisp
(gptel-make-privategpt "privateGPT" ;Any name you want
  :protocol "http"
  :host "localhost:8001"
  :stream t
  :context t ;Use context provided by embeddings
  :sources t ;Return information about source documents
  :models '(private-gpt))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'private-gpt
 gptel-backend
 (gptel-make-privategpt "privateGPT" ;Any name you want
   :protocol "http"
   :host "localhost:8001"
   :stream t
   :context t ;Use context provided by embeddings
   :sources t ;Return information about source documents
   :models '(private-gpt)))
#+end_src

**** DeepSeek

Register a backend with

#+begin_src emacs-lisp
(gptel-make-deepseek "DeepSeek" ;Any name you want
  :stream t ;for streaming responses
  :key "your-api-key") ;can be a function that returns the key
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'deepseek-reasoner
 gptel-backend (gptel-make-deepseek "DeepSeek"
                 :stream t
                 :key "your-api-key"))
#+end_src

**** Sambanova (Deepseek)

Sambanova offers various LLMs through their Samba Nova Cloud offering, with Deepseek-R1 being one of them. The token speed for Deepseek R1 via Sambanova is about 6 times faster than when accessed through deepseek.com.

Register a backend with

#+begin_src emacs-lisp
(gptel-make-openai "Sambanova" ;Any name you want
  :host "api.sambanova.ai"
  :endpoint "/v1/chat/completions"
  :stream t ;for streaming responses
  :key "your-api-key" ;can be a function that returns the key
  :models '(DeepSeek-R1))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The code above makes the backend available for selection. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Add these two lines to your configuration:

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq gptel-model 'DeepSeek-R1)
(setq gptel-backend (gptel-get-backend "Sambanova"))
#+end_src

**** Cerebras

Register a backend with

#+begin_src emacs-lisp
;; Cerebras offers an instant OpenAI compatible API
(gptel-make-openai "Cerebras"
  :host "api.cerebras.ai"
  :endpoint "/v1/chat/completions"
  :stream t ;optionally nil as Cerebras is instant AI
  :key "your-api-key" ;can be a function that returns the key
  :models '(llama3.1-70b llama3.1-8b))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'llama3.1-8b
 gptel-backend
 (gptel-make-openai "Cerebras"
   :host "api.cerebras.ai"
   :endpoint "/v1/chat/completions"
   :stream nil
   :key "your-api-key"
   :models '(llama3.1-70b llama3.1-8b)))
#+end_src

**** Github Models

NOTE: [[https://docs.github.com/en/github-models/about-github-models][GitHub Models]] is /not/ GitHub Copilot! If you want to use GitHub Copilot chat via gptel, look at the instructions for GitHub CopilotChat below instead.

Register a backend with

#+begin_src emacs-lisp
;; Github Models offers an OpenAI compatible API
(gptel-make-openai "Github Models" ;Any name you want
  :host "models.inference.ai.azure.com"
  :endpoint "/chat/completions?api-version=2024-05-01-preview"
  :stream t
  :key "your-github-token"
  :models '(gpt-4o))
#+end_src

You will need to create a GitHub [[https://github.com/settings/personal-access-tokens][token]].

For all the available models, check the [[https://github.com/marketplace/models][marketplace]].

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq gptel-model 'gpt-4o
      gptel-backend
      (gptel-make-openai "Github Models" ;Any name you want
        :host "models.inference.ai.azure.com"
        :endpoint "/chat/completions?api-version=2024-05-01-preview"
        :stream t
        :key "your-github-token"
        :models '(gpt-4o)))
#+end_src

**** Novita AI

Register a backend with

#+begin_src emacs-lisp
;; Novita AI offers an OpenAI compatible API
(gptel-make-openai "NovitaAI"           ;Any name you want
  :host "api.novita.ai"
  :endpoint "/v3/openai"
  :key "your-api-key"                   ;can be a function that returns the key
  :stream t
  :models '(;; has many more, check https://novita.ai/llm-api
            gryphe/mythomax-l2-13b
            meta-llama/llama-3-70b-instruct
            meta-llama/llama-3.1-70b-instruct))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]])

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq
 gptel-model 'gryphe/mythomax-l2-13b
 gptel-backend
 (gptel-make-openai "NovitaAI"
   :host "api.novita.ai"
   :endpoint "/v3/openai"
   :key "your-api-key"
   :stream t
   :models '(;; has many more, check https://novita.ai/llm-api
             gryphe/mythomax-l2-13b     ;the default model must be in this list
             meta-llama/llama-3-70b-instruct
             meta-llama/llama-3.1-70b-instruct)))
#+end_src

**** xAI

Register a backend with

#+begin_src emacs-lisp
(gptel-make-xai "xAI"                   ; Any name you want
  :stream t
  :key "your-api-key")                  ; can be a function that returns the key
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]])

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
(setq gptel-model 'grok-3-latest
      gptel-backend
      (gptel-make-xai "xAI"             ; Any name you want
        :key "your-api-key"             ; can be a function that returns the key
        :stream t))
#+end_src

**** AI/ML API

AI/ML API provides 300+ AI models including Deepseek, Gemini, ChatGPT. The models run at enterprise-grade rate limits and uptimes.

Register a backend with

#+begin_src emacs-lisp
;; AI/ML API offers an OpenAI compatible API
(gptel-make-openai "AI/ML API"          ;Any name you want
  :host "api.aimlapi.com"
  :endpoint "/v1/chat/completions"
  :stream t
  :key "your-api-key"                   ;can be a function that returns the key
  :models '(deepseek-chat gemini-pro gpt-4o))
#+end_src

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq gptel-model 'gpt-4o
      gptel-backend
      (gptel-make-openai "AI/ML API"
        :host "api.aimlapi.com"
        :endpoint "/v1/chat/completions"
        :stream t
        :key "your-api-key"
        :models '(deepseek-chat gemini-pro gpt-4o)))
#+end_src

**** GitHub CopilotChat

Register a backend with

#+begin_src emacs-lisp
(gptel-make-gh-copilot "Copilot")
#+end_src

You will be prompted to log in to GitHub as required. You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq gptel-model 'claude-3.7-sonnet
      gptel-backend (gptel-make-gh-copilot "Copilot"))
#+end_src

**** AWS Bedrock

Register a backend with

#+begin_src emacs-lisp
(gptel-make-bedrock "AWS"
  ;; optionally enable streaming
  :stream t
  :region "ap-northeast-1"
  ;; subset of gptel--bedrock-models
  :models '(claude-sonnet-4-20250514)
  ;; Model region for cross-region inference profiles. Required for models such
  ;; as Claude without on-demand throughput support. One of 'apac, 'eu or 'us.
  ;; https://docs.aws.amazon.com/bedrock/latest/userguide/inference-profiles-use.html
  :model-region 'apac)
#+end_src

AWS has numerous credential provisions; we follow this order of precedence:

- (argument) =:aws-bearer-token=
- (env. variable) =AWS_BEARER_TOKEN_BEDROCK=
- (argument) =:aws-profile=. If this option is specified, the Bedrock backend uses the shared AWS config and credentials files to obtain credentials based on the AWS profile selected. If =:aws-profile= is set to the keyword =:static=, the IAM credentials are imported without a profile argument.
- (env. variable) =AWS_PROFILE=
- (env. variables) =AWS_ACCESS_KEY_ID=, =AWS_SECRET_ACCESS_KEY= and =AWS_SESSION_TOKEN=

NOTE: Unless the =AWS_BEARER_TOKEN_BEDROCK= token is used, the Bedrock backend needs curl >= 8.9 for the sigv4 signing to work properly; see https://github.com/curl/curl/issues/11794. An error will be signalled if =gptel-curl= is nil.

You can pick this backend from the menu when using gptel (see [[#usage][Usage]]).

***** (Optional) Set as the default gptel backend

The above code makes the backend available to select. If you want it to be the default backend for gptel, you can set this as the value of =gptel-backend=. Use this instead of the above.

#+begin_src emacs-lisp
;; OPTIONAL configuration
(setq gptel-model 'claude-sonnet-4-20250514
      gptel-backend
      (gptel-make-bedrock "AWS"
        ;; optionally enable streaming
        :stream t
        ;; optionally specify the aws profile
        ;; :profile
        :region "ap-northeast-1"
        ;; subset of gptel--bedrock-models
        :models '(claude-sonnet-4-20250514)
        ;; Model region for cross-region inference profiles. Required for models such
        ;; as Claude without on-demand throughput support. One of 'apac, 'eu or 'us.
        ;; https://docs.aws.amazon.com/bedrock/latest/userguide/inference-profiles-use.html
        :model-region 'apac))
#+end_src

**** Moonshot (Kimi)

Register a backend with

#+begin_src emacs-lisp
(gptel-make-openai "Moonshot"
  :host "api.moonshot.cn" ;; or "api.moonshot.ai" for the global site
  :key "your-api-key"
  :stream t ;; optionally enable streaming
  :models '(kimi-latest kimi-k2-0711-preview))
#+end_src

See the [[https://platform.moonshot.ai/docs/pricing/chat][Moonshot.ai documentation]] for a complete list of models.

***** (Optional) Use the builtin search tool

Moonshot supports a builtin search tool that does not require the user to provide the tool implementation. To use it, you first need to define the tool and add it to =gptel-tools=. (Although the client does not have to provide the search implementation, it is expected to reply to the tool call with a message containing the given argument, for consistency with other tool calls.)

#+begin_src emacs-lisp
(setq gptel-tools
      (list (gptel-make-tool
             :name "$web_search"
             :function (lambda (&optional search_result)
                         (json-serialize `(:search_result ,search_result)))
             :description "Moonshot builtin web search. Only usable by moonshot model (kimi), ignore this if you are not."
             :args '((:name "search_result" :type object :optional t))
             :category "web")))
#+end_src

Then you also need to add the tool declaration via =:request-params= because it needs a special =builtin_function= type:

#+begin_src emacs-lisp
(gptel-make-openai "Moonshot"
  :host "api.moonshot.cn" ;; or "api.moonshot.ai" for the global site
  :key "your-api-key"
  :stream t ;; optionally enable streaming
  :models '(kimi-latest kimi-k2-0711-preview)
  :request-params '(:tools [(:type "builtin_function"
                             :function (:name "$web_search"))]))
#+end_src

Now the chat should be able to automatically use search. Try "what's new today" and you should expect the up-to-date news in response.

** Usage

gptel provides a few powerful, general purpose and flexible commands. You can dynamically tweak their behavior to the needs of your task with /directives/, redirection options and more. There is a [[https://www.youtube.com/watch?v=bsRnh_brggM][video demo]] showing various uses of gptel -- but =gptel-send= might be all you need.

|-----------------+---------------------------------------------------------------------------------------------------|
| To send queries | Description |
|-----------------+---------------------------------------------------------------------------------------------------|
| =gptel-send= | Send all text up to =(point)=, or the selection if region is active. Works anywhere in Emacs. |
| =gptel= | Create a new dedicated chat buffer. Not required to use gptel. |
| =gptel-rewrite= | Rewrite, refactor or change the selected region. Can diff/ediff changes before merging/applying. |
|-----------------+---------------------------------------------------------------------------------------------------|

|--------------------+---------------------------------------------------------------|
| To tweak behavior | |
|--------------------+---------------------------------------------------------------|
| =C-u= =gptel-send= | Transient menu for preferences, input/output redirection etc. |
| =gptel-menu= | /(Same)/ |
|--------------------+---------------------------------------------------------------|

|------------------+--------------------------------------------------------------------------------------------------------|
| To add context | |
|------------------+--------------------------------------------------------------------------------------------------------|
| =gptel-add= | Add/remove a region or buffer to gptel's context. In Dired, add/remove marked files. |
| =gptel-add-file= | Add a file (text or supported media type) to gptel's context. Also available from the transient menu. |
|------------------+--------------------------------------------------------------------------------------------------------|

|----------------------------+-----------------------------------------------------------------------------------------|
| Org mode bonuses | |
|----------------------------+-----------------------------------------------------------------------------------------|
| =gptel-org-set-topic= | Limit conversation context to an Org heading. (For branching conversations see below.) |
| =gptel-org-set-properties= | Write gptel configuration as Org properties, for per-heading chat configuration. |
|----------------------------+-----------------------------------------------------------------------------------------|

|------------------+-------------------------------------------------------------------------------------------|
| GitHub Copilot | |
|------------------+-------------------------------------------------------------------------------------------|
| =gptel-gh-login= | Authenticate with GitHub Copilot. (Automatically handled, but can be forced if required.) |
|------------------+-------------------------------------------------------------------------------------------|

*** In any buffer:

- Call =M-x gptel-send= to send the text up to the cursor. The response will be inserted below. Continue the conversation by typing below the response.

- If a region is selected, the conversation will be limited to its contents.

- Call =M-x gptel-send= with a prefix argument (=C-u=)
  - to set chat parameters (model, backend, system message etc) for this buffer,
  - to include quick instructions for the next request only,
  - to add additional context -- regions, buffers or files -- to gptel,
  - to read the prompt from or redirect the response elsewhere,
  - or to replace the prompt with the response.

You can also define a "preset" bundle of options that are applied together, see [[#option-presets][Option presets]] below.
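Since =gptel-send= works in any buffer, it can be convenient to bind it globally. A minimal sketch follows; the key choice is an assumption, so pick whatever is free in your setup.

#+begin_src emacs-lisp
;; Bind `gptel-send' globally.  "C-c RET" mirrors the binding gptel uses in
;; its dedicated chat buffers, but major modes may shadow a global binding.
(global-set-key (kbd "C-c RET") #'gptel-send)
#+end_src

With this in place, the same key with a prefix argument (=C-u C-c RET=) opens the transient menu.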

*** In a dedicated chat buffer:

Note: gptel works anywhere in Emacs. The dedicated chat buffer only adds some conveniences.

- Run =M-x gptel= to start or switch to the chat buffer. It will ask you for the key if you skipped the previous step. Run it with a prefix-arg (=C-u M-x gptel=) to start a new session.

- In the gptel buffer, send your prompt with =M-x gptel-send=, bound to =C-c RET=.

- Set chat parameters (LLM provider, model, directives etc) for the session by calling =gptel-send= with a prefix argument (=C-u C-c RET=).

That's it. You can go back and edit previous prompts and responses if you want.

The default mode is =markdown-mode= if available, else =text-mode=. You can set =gptel-default-mode= to =org-mode= if desired.
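For instance, to make new chat buffers use Org mode:

#+begin_src emacs-lisp
;; New dedicated chat buffers created with M-x gptel will use Org markup.
(setq gptel-default-mode 'org-mode)
#+end_src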

You can also define a "preset" bundle of options that are applied together, see [[#option-presets][Option presets]] below.

**** Including media (images, documents or plain-text files) with requests

gptel supports sending media in Markdown and Org chat buffers, but this feature is disabled by default.

- You can enable it globally, for all models that support it, by setting =gptel-track-media=.

- Or you can set it locally, just for the chat buffer, via the header line.
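A minimal sketch of the global setting, for your configuration:

#+begin_src emacs-lisp
;; Send linked media in chat buffers with requests, for all models that
;; support it.  This is off by default.
(setq gptel-track-media t)
#+end_src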

There are two ways to include media or plain-text files with requests:

- Adding media files to the context with =gptel-add-file=, described further below.

- Including links to media in chat buffers, described here:

To include plain-text files, images or other supported document types with requests in chat buffers, you can include links to them in the chat buffer. Such a link must be "standalone", i.e. on a line by itself surrounded by whitespace.

In Org mode, for example, the following are all valid ways of including a file or image with the request:

- "Standalone" file links:
  #+begin_src
  In this yaml file, I have some key-remapping configuration:

  [[file:/path/to/remap.yaml]]

  Could you explain what it does, and which program might be using it?
  #+end_src

  #+begin_src
  Describe this picture

  [[file:/path/to/screenshot.png]]

  Focus specifically on the text content.
  #+end_src

- "Standalone" file link with description:
  #+begin_src
  Describe this picture

  [[file:/path/to/screenshot.png][some picture]]

  Focus specifically on the text content.
  #+end_src

- "Standalone", angle file link:
  #+begin_src
  Describe this picture

  <file:/path/to/screenshot.png>

  Focus specifically on the text content.
  #+end_src

The following links are not valid, and the text of the link will be sent instead of the file contents:

- Inline link:
  #+begin_src
  Describe this [[file:/path/to/screenshot.png][picture]].

  Focus specifically on the text content.
  #+end_src

- Link not "standalone":
  #+begin_src
  Describe this picture:
  [[file:/path/to/screenshot.png]]
  Focus specifically on the text content.
  #+end_src

- Not a valid Org link:
  #+begin_src
  Describe the picture

  file:/path/to/screenshot.png
  #+end_src

Similar criteria apply to Markdown chat buffers.

**** Save and restore your chat sessions

Saving the file will save the state of the conversation as well. To resume the chat, open the file and turn on =gptel-mode= before editing the buffer.

*** Setting options (backend, model, request parameters, system prompts and more)

Most gptel options can be set from gptel's transient menu, available by calling =gptel-send= with a prefix-argument, or via =gptel-menu=. To change their default values in your configuration, see [[#additional-configuration][Additional Configuration]]. Chat buffer-specific options are also available via the header-line in chat buffers.

Selecting a model and backend can be done interactively via the =-m= command of =gptel-menu=. Available registered models are prefixed by the name of their backend with a string like =ChatGPT:gpt-4o-mini=, where =ChatGPT= is the backend name you used to register it and =gpt-4o-mini= is the name of the model.

*** Include more context with requests
:PROPERTIES:
:CUSTOM_ID: include-context
:END:

By default, gptel will query the LLM with the active region or the buffer contents up to the cursor. Often it can be helpful to provide the LLM with additional context from outside the current buffer. For example, when you're in a chat buffer but want to ask questions about a (possibly changing) code buffer and auxiliary project files.

You can include additional text regions, buffers or files with gptel's queries in two ways. The first is via links in chat buffers, as described above (see "Including media with requests").

The second is globally via dedicated context commands: you can add a selected region, buffer or file to gptel's context from the menu, or call =gptel-add=. To add a file use =gptel-add= in Dired, or use the dedicated =gptel-add-file= command. Directories will have their files added recursively after prompting for confirmation.

This additional context is "live" and not a snapshot. Once added, the regions, buffers or files are scanned and included at the time of each query. When using multi-modal models, added files can be of any supported type -- typically images.

You can examine the active context from the menu:

#+html: <img src="https://github.com/karthink/gptel/assets/8607532/63cd7fc8-6b3e-42ae-b6ca-06ff935bae9c" align="center" alt="Image showing gptel's menu with the &quot;inspect context&quot; command.">

And then browse through or remove context from the context buffer.

By default, files in a version control system that are not project files ("gitignored" files) will not be added to the context. To be able to add these files, set =gptel-context-restrict-to-project-files= to =nil=. Note that remote files are always included, regardless of the value of =gptel-context-restrict-to-project-files=.
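The following sketch shows both settings from Lisp. The file path is a placeholder, and calling =gptel-add-file= non-interactively with a path argument is an assumption; check its documentation before relying on it.

#+begin_src emacs-lisp
;; Allow files ignored by version control to be added to the context.
(setq gptel-context-restrict-to-project-files nil)

;; Assumption: `gptel-add-file' accepts a path when called from Lisp.
;; The path below is hypothetical.
(gptel-add-file (expand-file-name "~/projects/demo/README.org"))
#+end_src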

*** Handle "reasoning" content

Some LLMs include in their response a "thinking" or "reasoning" block. This text improves the quality of the LLM’s final output, but may not be interesting to you by itself. You can decide how you would like this "reasoning" content to be handled by gptel by setting the user option =gptel-include-reasoning=. You can include it in the LLM response (the default), omit it entirely, include it in the buffer but ignore it on subsequent conversation turns, or redirect it to another buffer. As with most options, you can specify this behavior from gptel's transient menu globally, buffer-locally or for the next request only.

When included with the response, reasoning content will be delimited by Org blocks or markdown backticks.
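As a sketch, these are the settings that correspond to the four behaviors described above. The exact accepted values are stated here from memory of the option's documentation, so treat them as an assumption and confirm with =C-h v gptel-include-reasoning=. The buffer name is a placeholder.

#+begin_src emacs-lisp
;; Pick ONE of the following:
(setq gptel-include-reasoning t)        ; include reasoning in the response (default)
;; (setq gptel-include-reasoning nil)      ; omit it entirely
;; (setq gptel-include-reasoning 'ignore)  ; insert it, but do not send it back
;; (setq gptel-include-reasoning "*gptel-reasoning*") ; redirect to this buffer
#+end_src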

*** Tool use

gptel can provide the LLM with client-side elisp "tools", or function specifications, along with the request. If the LLM decides to run the tool, it supplies the tool call arguments, which gptel uses to run the tool in your Emacs session. The result is optionally returned to the LLM to complete the task.

This exchange can be used to equip the LLM with capabilities or knowledge beyond what is available out of the box -- for instance, you can get the LLM to control your Emacs frame, create or modify files and directories, or look up information relevant to your request via web search or in a local database. Here is a very simple example:

https://github.com/user-attachments/assets/d1f8e2ac-62bb-49bc-850d-0a67aa0cd4c3

To use tools in gptel, you need:

- A model that supports tool use. All the flagship models support it, as do many of the smaller open models.

- Tool specifications that gptel understands. gptel does not currently include any tools out of the box.

**** Defining gptel tools

Defining a gptel tool requires an elisp function and associated metadata. Here are two simple tool definitions:

To read the contents of an Emacs buffer:

#+begin_src emacs-lisp
(gptel-make-tool
 :name "read_buffer"                    ; javascript-style snake_case name
 :function (lambda (buffer)             ; the function that will run
             (unless (buffer-live-p (get-buffer buffer))
               (error "error: buffer %s is not live." buffer))
             (with-current-buffer buffer
               (buffer-substring-no-properties (point-min) (point-max))))
 :description "return the contents of an emacs buffer"
 :args (list '(:name "buffer"
               :type string             ; :type value must be a symbol
               :description "the name of the buffer whose contents are to be retrieved"))
 :category "emacs")                     ; An arbitrary label for grouping
#+end_src

Besides the function itself, which can be named or anonymous (as above), the tool specification requires a =:name=, =:description= and a list of argument specifications in =:args=. Each argument specification is a plist with at least the keys =:name=, =:type= and =:description=.

To create a text file:

#+begin_src emacs-lisp
(gptel-make-tool
 :name "create_file"                    ; javascript-style snake_case name
 :function (lambda (path filename content)   ; the function that runs
             (let ((full-path (expand-file-name filename path)))
               (with-temp-buffer
                 (insert content)
                 (write-file full-path))
               (format "Created file %s in %s" filename path)))
 :description "Create a new file with the specified content"
 :args (list '(:name "path"             ; a list of argument specifications
               :type string
               :description "The directory where to create the file")
             '(:name "filename"
               :type string
               :description "The name of the file to create")
             '(:name "content"
               :type string
               :description "The content to write to the file"))
 :category "filesystem")                ; An arbitrary label for grouping
#+end_src

With some prompting, you can get an LLM to write these tools for you.

Tools can also be asynchronous, use optional arguments and arguments with more structure (enums, arrays, objects etc). See =gptel-make-tool= for details.
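As an illustration of an asynchronous tool, here is a hedged sketch that fetches a URL with Emacs' built-in =url-retrieve=. It assumes that an =:async= tool's function receives a callback as its first argument and reports its result by calling it, and that the code is evaluated with lexical binding enabled. The tool name =fetch_url= is made up for this example; see =gptel-make-tool= for the authoritative description.

#+begin_src emacs-lisp
;; -*- lexical-binding: t -*-  (the closures below rely on lexical binding)
(require 'url)

(gptel-make-tool
 :name "fetch_url"                      ; hypothetical tool name
 :async t                               ; assumption: callback is passed first
 :function (lambda (callback url)
             (url-retrieve
              url
              (lambda (status)
                ;; This runs in the response buffer once the request finishes.
                (let ((result
                       (if (plist-get status :error)
                           (format "error: could not fetch %s" url)
                         (goto-char (point-min))
                         ;; HTTP headers end at the first blank line
                         (re-search-forward "^\r?\n" nil t)
                         (buffer-substring-no-properties (point) (point-max)))))
                  (kill-buffer (current-buffer))
                  (funcall callback result)))))
 :description "Fetch the raw text content of a URL"
 :args (list '(:name "url"
               :type string
               :description "The URL to fetch"))
 :category "web")
#+end_src

The tool returns immediately and hands the page text (or an error string) to the callback later, so Emacs is not blocked while the request is in flight.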

**** Selecting tools

Once defined, tools can be selected (globally, buffer-locally or for the next request only) from gptel's transient menu. From here you can also require confirmation for all tool calls, and decide if tool call results should be included in the LLM response. See [[#additional-configuration][Additional Configuration]] for doing these things via elisp.
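A minimal sketch of the elisp equivalent follows. The variable names and =gptel-get-tool= are given from memory of gptel's user options, so treat them as assumptions and verify them with =C-h v= and =C-h f=. The tool names refer to the two example tools defined above.

#+begin_src emacs-lisp
(setq gptel-use-tools t                 ; allow the LLM to call tools
      gptel-confirm-tool-calls t        ; ask before running any tool call
      gptel-include-tool-results t      ; show tool results in the response
      ;; Assumption: `gptel-get-tool' looks up a defined tool by name.
      gptel-tools (list (gptel-get-tool "read_buffer")
                        (gptel-get-tool "create_file")))
#+end_src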

**** Model Context Protocol (MCP) integration

The [[https://modelcontextprotocol.io/introduction][Model Context Protocol]] (MCP) is a protocol for providing resources and tools to LLMs, and [[https://github.com/appcypher/awesome-mcp-servers][many MCP servers exist]] that provide LLM tools for file access, database connections, API integrations etc. The [[https://github.com/lizqwerscott/mcp.el][mcp.el]] package for Emacs can act as an MCP client and manage these tool calls for gptel.

To use MCP servers with gptel, you thus need three pieces:

- The [[https://github.com/lizqwerscott/mcp.el][mcp.el]] package for Emacs, [[https://melpa.org/#/mcp][available on MELPA]].

- MCP servers configured for and running via mcp.el.

- gptel and access to an LLM
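For the second piece, a server is typically declared in mcp.el's server list. The sketch below assumes mcp.el's =mcp-hub-servers= option and its =:command= / =:args= plist format, as described in the mcp.el README; the server package and the project path are placeholders. Consult the mcp.el documentation for the current format.

#+begin_src emacs-lisp
;; Assumption: mcp.el reads its server definitions from `mcp-hub-servers'.
(setq mcp-hub-servers
      '(("filesystem" . (:command "npx"
                         :args ("-y" "@modelcontextprotocol/server-filesystem"
                                "/path/to/project/")))))
#+end_src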

gptel includes =gptel-integrations=, a small library to make this more convenient. This library is not automatically loaded by gptel, so if you would like to use it you have to require it:

#+begin_src emacs-lisp
(require 'gptel-integrations)
#+end_src

Once loaded, you can run the =gptel-mcp-connect= and =gptel-mcp-disconnect= commands to register and unregister MCP-provided tools in gptel. These will also show up in the tools menu in gptel, accessed via =M-x gptel-menu= or =M-x gptel-tools=.

MCP-provided tools can be used as normal with gptel. Here is a screencast of the process. (In this example the "github" MCP server is installed separately using npm.)

https://github.com/user-attachments/assets/f3ea7ac0-a322-4a59-b5b2-b3f592554f8a

Here's an example of using these tools:

https://github.com/user-attachments/assets/b48a6a24-a130-4da7-a2ee-6ea568e10c85

*** Rewrite, refactor or fill in a region

In any buffer: with a region selected, you can modify text, rewrite prose or refactor code with =gptel-rewrite=. Example with prose:

https://github.com/user-attachments/assets/e3b436b3-9bde-4c1f-b2ce-3f7df1984933

The result is previewed over the original text. By default, the buffer is not modified.

Pressing =RET= or clicking in the rewritten region should give you a list of options: you can iterate on, diff, ediff, merge or accept the replacement. Example with code:

https://github.com/user-attachments/assets/4067fdb8-85d3-4264-9b64-d727353f68f9

Acting on the LLM response:

If you would like one of these things to happen automatically, you can customize =gptel-rewrite-default-action=.
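For example, to always open an Ediff session on the result. The accepted symbols are listed here from memory of the option's documentation (=merge=, =diff=, =ediff= or =accept=), so confirm them with =C-h v gptel-rewrite-default-action=.

#+begin_src emacs-lisp
;; Run ediff automatically when a rewrite is ready, instead of waiting
;; for an explicit choice.
(setq gptel-rewrite-default-action 'ediff)
#+end_src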

These options are also available from =gptel-rewrite=.

You can also call them directly when the cursor is in the rewritten region.

*** Extra Org mode conveniences

gptel offers a few extra conveniences in Org mode.

***** Limit conversation context to an Org heading

You can limit the conversation context to an Org heading with the command =gptel-org-set-topic=.

(This sets an Org property (=GPTEL_TOPIC=) under the heading. You can also add this property manually instead.)

***** Use branching context in Org mode (tree of conversations)

You can have branching conversations in Org mode, where each hierarchical outline path through the document is a separate conversation branch. This is also useful for limiting the context size of each query. See the variable =gptel-org-branching-context=.
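To turn this behavior on in your configuration:

#+begin_src emacs-lisp
;; Treat each outline path through an Org document as its own conversation.
(setq gptel-org-branching-context t)
#+end_src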

If this variable is non-nil, you should probably edit =gptel-prompt-prefix-alist= and =gptel-response-prefix-alist= so that the prefix strings for org-mode are not Org headings, e.g.

#+begin_src emacs-lisp
(setf (alist-get 'org-mode gptel-prompt-prefix-alist) "@user\n")
(setf (alist-get 'org-mode gptel-response-prefix-alist) "@assistant\n")
#+end_src

Otherwise, the default prompt prefix will make successive prompts sibling headings, and therefore on different conversation branches, which probably isn't what you want.

Note: using this option requires Org 9.7 or higher to be available. The [[https://github.com/ultronozm/ai-org-chat.el][ai-org-chat]] package uses gptel to provide this branching conversation behavior for older versions of Org.

***** Save gptel parameters to Org headings (reproducible chats)

You can declare the gptel model, backend, temperature, system message and other parameters as Org properties with the command =gptel-org-set-properties=. gptel queries under the corresponding heading will always use these settings, allowing you to create mostly reproducible LLM chat notebooks, and to have simultaneous chats with different models, model settings and directives under different Org headings.

*** Introspection (examine, debug or modify requests)

Set =gptel-expert-commands= to =t= to display additional options in gptel's transient menu.


Examining prompts: you can examine and edit gptel request payloads before sending them.

- Pick one of the "dry run" options in the menu to produce a buffer containing the request payload.

- You can edit this buffer as you would like and send the request.

- You can also copy a Curl command corresponding to the request and invoke it from the shell.

Examining responses: You can turn on logging to examine the full response from an LLM.

- Set =gptel-log-level= to =info= or =debug=.

- Send a request.

- Open the log buffer from gptel's transient menu, or switch to the =gptel-log= buffer.
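The settings mentioned in this section can be combined in your configuration, for example:

#+begin_src emacs-lisp
(setq gptel-expert-commands t           ; show the extra menu options
      gptel-log-level 'info)            ; or 'debug for more detail
#+end_src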

** FAQ

*** Chat buffer UI

**** I want the window to scroll automatically as the response is inserted

To be minimally annoying, gptel does not move the cursor by default. Add the following to your configuration to enable auto-scrolling.

#+begin_src emacs-lisp
(add-hook 'gptel-post-stream-hook 'gptel-auto-scroll)
#+end_src

**** I want the cursor to move to the next prompt after the response is inserted

To be minimally annoying, gptel does not move the cursor by default. Add the following to your configuration to move the cursor:

#+begin_src emacs-lisp
(add-hook 'gptel-post-response-functions 'gptel-end-of-response)
#+end_src

You can also call =gptel-end-of-response= as a command at any time.

**** I want to change the formatting of the prompt and LLM response

For dedicated chat buffers: customize =gptel-prompt-prefix-alist= and =gptel-response-prefix-alist=. You can set a different pair for each major-mode.

Anywhere in Emacs: Use =gptel-pre-response-hook= and =gptel-post-response-functions=, which see.

**** How does gptel distinguish between user prompts and LLM responses?

gptel uses [[https://www.gnu.org/software/emacs/manual/html_node/elisp/Text-Properties.html][text-properties]] to watermark LLM responses. Thus this text is interpreted as a response even if you copy it into another buffer. In regular buffers (buffers without =gptel-mode= enabled), you can turn off this tracking by unsetting =gptel-track-response=.

When restoring a chat state from a file on disk, gptel will apply these properties from saved metadata in the file when you turn on =gptel-mode=.

gptel does /not/ use any prefix or semantic/syntax element in the buffer (such as headings) to separate prompts and responses. The reason for this is that gptel aims to integrate as seamlessly as possible into your regular Emacs usage: LLM interaction is not the objective, it's just another tool at your disposal. So requiring a bunch of "user" and "assistant" tags in the buffer is noisy and restrictive. If you want these demarcations, you can customize =gptel-prompt-prefix-alist= and =gptel-response-prefix-alist=. Note that these prefixes are for your readability only and purely cosmetic.

*** Transient menu behavior

**** I want to set gptel options but only for this buffer
:PROPERTIES:
:ID:       748cbc00-0c92-4705-8839-619b2c80e566
:END:

In every menu used to set options, gptel provides a "scope" option, bound to the ~=~ key.

You can flip this switch to =buffer= or =oneshot= before setting the option. You only need to flip this switch once; it is a persistent setting. =buffer= sets the option buffer-locally, and =oneshot= sets it for the next gptel request only. The default scope is global.

**** I want the transient menu options to be saved so I only need to set them once

Any model options you set are saved according to the scope (see previous question). But the redirection options in the menu are set for the next query only.

You can make them persistent across this Emacs session by pressing =C-x C-s=.

(You can also cycle through presets you've saved with =C-x p= and =C-x n=.)

Now these will be enabled whenever you send a query from the transient menu. If you want to use these saved options without invoking the transient menu, you can use a keyboard macro:

#+begin_src emacs-lisp
;; Replace with your key to invoke the transient menu.
;; <f6> is only an example of a key to bind the macro to.
(keymap-global-set "<f6>" "C-u C-c <return> <return>")
#+end_src

Or see this [[https://github.com/karthink/gptel/wiki/Commonly-requested-features#save-transient-flags][wiki entry]].

**** Using the transient menu leaves behind extra windows

If using gptel's transient menus causes new/extra window splits to be created, check your value of =transient-display-buffer-action=. [[https://github.com/magit/transient/discussions/358][See this discussion]] for more context.

If you are using Helm, see [[https://github.com/magit/transient/discussions/361][Transient#361]].

In general, do not customize this Transient option unless you know what you're doing!

**** Can I change the transient menu key bindings?

Yes, see =transient-suffix-put=. This changes the key to select a backend/model from "-m" to "M" in gptel's menu:

#+begin_src emacs-lisp
(transient-suffix-put 'gptel-menu (kbd "-m") :key "M")
#+end_src


**** (Doom Emacs) Sending a query from the gptel menu fails because of a key conflict with Org mode

Doom binds RET in Org mode to =+org/dwim-at-point=, which appears to conflict with gptel's transient menu bindings for some reason.

Two solutions:

- Press =C-m= instead of the return key.
- Change the send key from return to a key of your choice:
  #+begin_src emacs-lisp
  ;; "<f8>" is only an example; substitute any free key.
  (transient-suffix-put 'gptel-menu (kbd "RET") :key "<f8>")
  #+end_src

*** Miscellaneous

**** I want to use gptel in a way that's not supported by =gptel-send= or the options menu

gptel's default usage pattern is simple, and will stay this way: Read input in any buffer and insert the response below it. Some custom behavior is possible with the transient menu (=C-u M-x gptel-send=).

For more programmable usage, gptel provides a general =gptel-request= function that accepts a custom prompt and a callback to act on the response. You can use this to build custom workflows not supported by =gptel-send=. See the documentation of =gptel-request=, and the [[https://github.com/karthink/gptel/wiki/Defining-custom-gptel-commands][wiki]] for examples.
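
As a minimal sketch of such a workflow, the command below sends the active region as the prompt and reports the reply in the echo area. The command name and the system message are hypothetical; the =:system= and =:callback= keywords and the =(response info)= callback arguments follow the documentation of =gptel-request=, which remains the authoritative reference.

#+begin_src emacs-lisp
(require 'gptel)

;; `my/gptel-summarize-region' is a hypothetical command name.
(defun my/gptel-summarize-region (beg end)
  "Ask the active gptel backend to summarize the text between BEG and END."
  (interactive "r")
  (gptel-request
      (buffer-substring-no-properties beg end) ;the custom prompt
    :system "Summarize the following text in one sentence."
    :callback
    (lambda (response info)
      ;; RESPONSE is a string when the request yields text.  For anything
      ;; else (for example a failed request), report the status in INFO.
      (if (stringp response)
          (message "Summary: %s" response)
        (message "gptel: no text response (%s)" (plist-get info :status))))))
#+end_src

Select some text and run =M-x my/gptel-summarize-region=. Because the callback decides what happens to the response, nothing is inserted into the buffer.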


**** (ChatGPT) I get the error "(HTTP/2 429) You exceeded your current quota"

#+begin_quote
(HTTP/2 429) You exceeded your current quota, please check your plan and billing details.
#+end_quote

Using the ChatGPT (or any OpenAI) API requires [[https://platform.openai.com/account/billing/overview][adding credit to your account]].


**** Why another LLM client?

Other Emacs clients for LLMs prescribe the format of the interaction (a comint shell, org-babel blocks, etc). I wanted:

- Something that is as free-form as possible: query the model using any text in any buffer, and redirect the response as required. Using a dedicated =gptel= buffer just adds some visual flair to the interaction.

- Integration with org-mode, not using a walled-off org-babel block, but as regular text. This way the model can generate code blocks that I can run.


** Additional Configuration
:PROPERTIES:
:ID:       f885adac-58a3-4eba-a6b7-91e9e7a17829
:END:

#+begin_src emacs-lisp :exports none :results list
(let ((all))
  (mapatoms (lambda (sym)
              (when (and (string-match-p "^gptel-[^-]" (symbol-name sym))
                         (get sym 'variable-documentation))
                (push sym all))))
  all)
#+end_src

|-------------------------+--------------------------------------------------------------------|
| Connection options      |                                                                    |
|-------------------------+--------------------------------------------------------------------|
| =gptel-use-curl=        | Use Curl? (default), fallback to Emacs' built-in =url=.            |
|                         | You can also specify the Curl path here.                           |
| =gptel-proxy=           | Proxy server for requests, passed to curl via =--proxy=.           |
| =gptel-curl-extra-args= | Extra arguments passed to Curl.                                    |
| =gptel-api-key=         | Variable/function that returns the API key for the active backend. |
|-------------------------+--------------------------------------------------------------------|

|-----------------------+---------------------------------------------------------|
| LLM request options   | /(Note: not supported uniformly across LLMs)/           |
|-----------------------+---------------------------------------------------------|
| =gptel-backend=       | Default LLM Backend.                                    |
| =gptel-model=         | Default model to use, depends on the backend.           |
| =gptel-stream=        | Enable streaming responses, if the backend supports it. |
| =gptel-directives=    | Alist of system directives, can switch on the fly.      |
| =gptel-max-tokens=    | Maximum token count (in query + response).              |
| =gptel-temperature=   | Randomness in response text, 0 to 2.                    |
| =gptel-cache=         | Cache prompts, system message or tools (Anthropic only) |
| =gptel-use-context=   | How/whether to include additional context               |
| =gptel-use-tools=     | Disable, allow or force LLM tool-use                    |
| =gptel-tools=         | List of tools to include with requests                  |
|-----------------------+---------------------------------------------------------|
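
As a small sketch, a few of the request options above could be set in an init file like this. The values are illustrative assumptions, not recommendations.

#+begin_src emacs-lisp
;; Illustrative values only; adjust to your backend and model.
(setq gptel-stream t          ;stream responses when the backend supports it
      gptel-temperature 0.7   ;randomness, 0 to 2
      gptel-max-tokens 1024)  ;token limit
#+end_src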

|-------------------------------+----------------------------------------------------------------|
| Chat UI options               |                                                                |
|-------------------------------+----------------------------------------------------------------|
| =gptel-default-mode=          | Major mode for dedicated chat buffers.                         |
| =gptel-prompt-prefix-alist=   | Text inserted before queries.                                  |
| =gptel-response-prefix-alist= | Text inserted before responses.                                |
| =gptel-track-response=        | Distinguish between user messages and LLM responses?           |
| =gptel-track-media=           | Send text, images or other media from links?                   |
| =gptel-confirm-tool-calls=    | Confirm all tool calls?                                        |
| =gptel-include-tool-results=  | Include tool results in the LLM response?                      |
| =gptel-use-header-line=       | Display status messages in header-line (default) or minibuffer |
| =gptel-display-buffer-action= | Placement of the gptel chat buffer.                            |
|-------------------------------+----------------------------------------------------------------|
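
For instance, the following sketch makes Org mode the default for dedicated chat buffers and changes the text inserted before queries and responses in Org buffers. The prefix strings are arbitrary examples, and the sketch assumes both prefix options are alists keyed by major mode.

#+begin_src emacs-lisp
(with-eval-after-load 'gptel
  (setq gptel-default-mode 'org-mode)
  ;; Each prefix alist maps a major mode to the text inserted in that mode.
  (setf (alist-get 'org-mode gptel-prompt-prefix-alist) "@user\n")
  (setf (alist-get 'org-mode gptel-response-prefix-alist) "@assistant\n"))
#+end_src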

|-------------------------------+-------------------------------------------------------|
| Org mode UI options           |                                                       |
|-------------------------------+-------------------------------------------------------|
| =gptel-org-branching-context= | Make each outline path a separate conversation branch |
| =gptel-org-ignore-elements=   | Ignore parts of the buffer when sending a query       |
|-------------------------------+-------------------------------------------------------|

|------------------------------------+-------------------------------------------------------------|
| Hooks for customization            |                                                             |
|------------------------------------+-------------------------------------------------------------|
| =gptel-save-state-hook=            | Runs before saving the chat state to a file on disk         |
| =gptel-prompt-transform-functions= | Runs in a temp buffer to transform text before sending      |
| =gptel-post-request-hook=          | Runs immediately after dispatching a =gptel-request=.       |
| =gptel-pre-response-hook=          | Runs before inserting the LLM response into the buffer      |
| =gptel-post-response-functions=    | Runs after inserting the full LLM response into the buffer  |
| =gptel-post-stream-hook=           | Runs after each streaming insertion                         |
| =gptel-context-wrap-function=      | To include additional context formatted your way            |
| =gptel-rewrite-default-action=     | Automatically diff, ediff, merge or replace refactored text |
| =gptel-post-rewrite-functions=     | Runs after a =gptel-rewrite= request succeeds               |
|------------------------------------+-------------------------------------------------------------|
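
A short sketch of hooking into the response lifecycle follows. =gptel-auto-scroll= and =gptel-end-of-response= are functions shipped with gptel; =my/gptel-report-response= is a hypothetical helper, and the sketch assumes functions on =gptel-post-response-functions= receive the start and end positions of the response.

#+begin_src emacs-lisp
;; Scroll the window as the response streams in.
(add-hook 'gptel-post-stream-hook #'gptel-auto-scroll)

;; Move point to the end of the response once it is fully inserted.
(add-hook 'gptel-post-response-functions #'gptel-end-of-response)

;; Hypothetical helper: report how much text was inserted.
(defun my/gptel-report-response (beg end)
  "Echo the length of the LLM response between BEG and END."
  (when (and beg end)
    (message "gptel: inserted %d characters" (- end beg))))

(add-hook 'gptel-post-response-functions #'my/gptel-report-response)
#+end_src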


*** Option presets

If you use several LLMs for different tasks with accompanying system prompts (instructions) and tool configurations, manually adjusting =gptel= settings each time can become tedious. Presets are a bundle of gptel settings -- such as the model, backend, system message, and enabled tools -- that you can switch to at once.

Once defined, presets can be applied from gptel's transient menu:

To define a preset, use the =gptel-make-preset= function, which takes a name and keyword-value pairs of settings.

Presets can be used to set individual options. Here is an example of a preset to set the system message (and do nothing else):

#+begin_src emacs-lisp
(gptel-make-preset 'explain
  :system "Explain what this code does to a novice programmer.")
#+end_src

More generally, you can specify a bundle of options:

#+begin_src emacs-lisp
(gptel-make-preset 'gpt4coding                       ;preset name, a symbol
  :description "A preset optimized for coding tasks" ;for your reference
  :backend "Claude"                                  ;gptel backend or backend name
  :model 'claude-3-7-sonnet-20250219
  :system "You are an expert coding assistant. Your role is to provide high-quality code solutions, refactorings, and explanations."
  :tools '("read_buffer" "modify_buffer"))           ;gptel tools or tool names
#+end_src

Besides a couple of special keys (=:description=, =:parents= to inherit other presets), there is no predefined list of keys. Instead, the key =:foo= corresponds to setting =gptel-foo= (preferred) or =gptel--foo=. So the preset can include the value of any gptel option. For example, the following preset sets =gptel-temperature= and =gptel-use-context=:

#+begin_src emacs-lisp
(gptel-make-preset 'proofreader
  :description "Preset for proofreading tasks"
  :backend "ChatGPT"
  :model 'gpt-4.1-mini
  :tools '("read_buffer" "spell_check" "grammar_check")
  :temperature 0.7                      ;sets gptel-temperature
  :use-context 'system)                 ;sets gptel-use-context
#+end_src

Switching to a preset applies the specified settings without affecting other settings. Depending on the scope option (= in gptel's transient menu), presets can be applied globally, buffer-locally or for the next request only.
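
The =:parents= key mentioned above lets one preset build on another. The sketch below is illustrative: the preset name =explain-calmly= is hypothetical, and it assumes =:parents= accepts a list of preset names and that the =explain= preset from the earlier example is already defined.

#+begin_src emacs-lisp
(gptel-make-preset 'explain-calmly      ;hypothetical preset name
  :description "The explain preset with a lower temperature"
  :parents '(explain)                   ;apply the settings of `explain' first
  :temperature 0.2)                     ;then set gptel-temperature
#+end_src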

**** Applying presets to requests automatically

You can apply a preset to a /single/ query by including =@preset-name= in the prompt, where =preset-name= is the name of the preset. (The =oneshot= scope option in gptel's transient menus is another way to do this, [[id:748cbc00-0c92-4705-8839-619b2c80e566][see the FAQ.]])

For example, if you have a preset named =websearch= defined which includes tools for web access and search:

#+begin_src emacs-lisp
(gptel-make-preset 'websearch
  :description "Haiku with basic web search capability."
  :backend "Claude"
  :model 'claude-3-5-haiku-20241022
  :tools '("search_web" "read_url" "get_youtube_meta"))
#+end_src

The following query is sent with this preset applied:

#+begin_quote
@websearch Are there any 13" e-ink monitors on the market? Create a table comparing them, sourcing specs and reviews from online sources. Also do the same for "transreflective-LCD" displays -- I'm not sure what exactly they're called but they're comparable to e-ink.
#+end_quote

This =@preset-name= cookie only applies to the final user turn of the conversation that is sent. The presence of the cookie in past messages/turns has no effect.

The =@preset-name= cookie can be anywhere in the prompt. For example:

#+begin_quote
What do you make of the above description, @proofreader?
#+end_quote

In chat buffers this prefix will be offered as a completion and fontified, making it easy to use and spot.

** Alternatives

Other Emacs clients for LLMs include

- [[https://github.com/ahyatt/llm][llm]]: llm provides a uniform API across language model providers for building LLM clients in Emacs, and is intended as a library for use by package authors. For similar scripting purposes, gptel provides the function =gptel-request=, which see.

- [[https://github.com/s-kostyaev/ellama][Ellama]]: A full-fledged LLM client built on llm that supports many LLM providers (Ollama, OpenAI, Vertex, GPT4All and more). Its usage differs from gptel in that it provides separate commands for dozens of common tasks, like general chat, summarizing code/text, refactoring code, improving grammar, translation and so on.

- [[https://github.com/xenodium/chatgpt-shell][chatgpt-shell]]: comint-shell based interaction with ChatGPT. Also supports DALL-E, executable code blocks in the responses, and more.

- [[https://github.com/rksm/org-ai][org-ai]]: Interaction through special =#+begin_ai ... #+end_ai= Org-mode blocks. Also supports DALL-E, querying ChatGPT with the contents of project files, and more.

- [[https://github.com/milanglacier/minuet-ai.el][Minuet]]: Code completion using LLMs. Supports fill-in-the-middle (FIM) completion for compatible models such as DeepSeek and Codestral.

There are several more: [[https://github.com/iwahbe/chat.el][chat.el]], [[https://github.com/stuhlmueller/gpt.el][gpt.el]], [[https://github.com/AnselmC/le-gpt.el][le-gpt]], [[https://github.com/stevemolitor/robby][robby]].

*** Packages using gptel

gptel is a general-purpose package for chat and ad-hoc LLM interaction. The following packages use gptel to provide additional or specialized functionality:

Lookup helpers: Calling gptel quickly for one-off interactions

- [[https://github.com/karthink/gptel-quick][gptel-quick]]: Quickly look up the region or text at point.

Task-driven workflows: Different interfaces to specify tasks for LLMs.

These differ from full "agentic" use in that the interactions are "one-shot", not chained.

- [[https://github.com/dolmens/gptel-aibo/][gptel-aibo]]: A writing assistant system built on top of gptel.

- [[https://github.com/daedsidog/evedel][Evedel]]: Instructed LLM Programmer/Assistant.

- [[https://github.com/lanceberge/elysium][Elysium]]: Request AI-generated changes as you code.

- [[https://github.com/ISouthRain/gptel-watch][gptel-watch]]: Automatically call gptel when typing lines that indicate intent.

Agentic use: Use LLMs as agents, with tool-use