simulflow

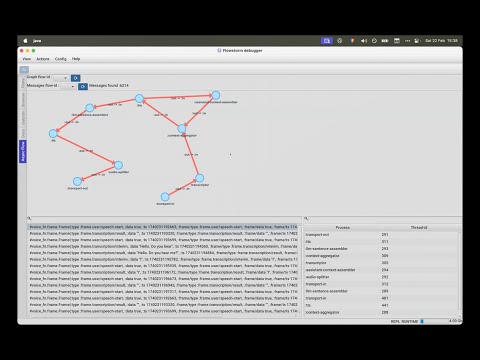

A Clojure library for building real-time voice-enabled AI pipelines. Simulflow handles the orchestration of speech recognition, audio processing, and AI service integration with the elegance of functional programming.

Stars: 87

Simulflow is a Clojure framework for building real-time voice-enabled AI applications using a data-driven, functional approach. It provides a composable pipeline architecture for processing audio, text, and AI interactions, with built-in support for major AI providers. Processors communicate through specialized frames to form voice-enabled AI agents. Its main features are a flow-based architecture, data-first design, streaming, extensibility, a flexible frame system, and built-in services for major AI providers. Components can be swapped, extended, or debugged individually without affecting the rest of the system.

README:

Daydreaming is the first awakening of what we call simulflow. It is an essential tool of rational thought. With it you can clear the mind for better thinking. – Frank Herbert, Heretics of Dune

Bene Gesserit also have the ability to practice simulflow, literally the simultaneous flow of several threads of consciousness at any given time; mental multitasking, as it were. The combination of simulflow with their analytical abilities and Other Memory is responsible for the frightening intelligence of the average Bene Gesserit.

Simulflow, Dune Wiki

simulflow is a Clojure framework for building real-time voice-enabled AI applications using a data-driven, functional approach. Built on top of clojure.core.async.flow, it provides a composable pipeline architecture for processing audio, text, and AI interactions with built-in support for major AI providers.

[!WARNING] Simulflow has been used in live production applications, but it is still under active development. Expect breaking changes as new use cases are supported.

Simulflow is a framework that uses processors that communicate through specialized frames to create voice-enabled AI agents. Think of it as a data pipeline where each component transforms typed messages:

Microphone Transport → (audio-in frames) → Transcriptor → (transcription frames) →

Context Aggregation → (context to LLM) → LLM → (streams response) →

Text Assembler → (sentence frames) → Text-to-Speech → (audio-out frames) →

Audio Splitter → (chunked audio) → Speaker Transport

This pipeline approach makes it easy to swap components, add new functionality, or debug individual stages without affecting the entire system.
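As a rough illustration of the idea (plain Clojure, not simulflow's actual API), each stage can be pictured as a function from one typed message to zero or more typed messages. The names `frame`, `transcribe-stage`, `llm-stage`, and `run-pipeline`, as well as the `:demo/...` frame types, are hypothetical and exist only for this sketch.

```clojure
;; A frame in this sketch is just a map with a type and some data.
(defn frame [type data]
  {:frame/type type :frame/data data})

;; Each stage takes one frame and returns a vector of output frames.
;; Frames a stage does not understand are passed through unchanged.
(defn transcribe-stage [{:frame/keys [type data] :as f}]
  (if (= type :demo/audio-in)
    [(frame :demo/transcription (str "text-of-" data))]
    [f]))

(defn llm-stage [{:frame/keys [type data] :as f}]
  (if (= type :demo/transcription)
    [(frame :demo/llm-text (str "reply-to-" data))]
    [f]))

(defn run-pipeline
  "Feeds the frames through each stage in order."
  [stages frames]
  (reduce (fn [fs stage] (vec (mapcat stage fs))) frames stages))

(run-pipeline [transcribe-stage llm-stage]
              [(frame :demo/audio-in "chunk-1")])
;; => [{:frame/type :demo/llm-text, :frame/data "reply-to-text-of-chunk-1"}]
```

Swapping a component in this sketch means replacing one element of the `stages` vector; the other stages are untouched.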

- Installation

- Requirements

- Video presentation

- Core Features

- Quick Start Example

- Examples

- Supported Providers

- Key Concepts

- Adding Custom Processes

- Built With

- Acknowledgements

- License

;; Add to your deps.edn
{:deps {com.shipclojure/simulflow {:mvn/version "0.1.8-alpha"}}}

;; Add to your project.clj
[com.shipclojure/simulflow "0.1.8-alpha"]

<!-- Add to your pom.xml -->
<dependency>
  <groupId>com.shipclojure</groupId>
  <artifactId>simulflow</artifactId>
  <version>0.1.8-alpha</version>
</dependency>

- Java 21+ - Required for virtual threads (Project Loom) support. If your Java version doesn't support virtual threads, simulflow defaults to using normal threads.

- Clojure 1.12+ - For core.async.flow and other modern Clojure features

- Flow-Based Architecture: Built on core.async.flow for robust concurrent processing

- Data-First Design: Define AI pipelines as data structures for easy configuration and modification

- Streaming Architecture: Efficient real-time audio and text processing

- Extensible: New processors are easy to add and embed into AI flows

- Flexible Frame System: Type-safe message passing between pipeline components

- Built-in Services: Ready-to-use integrations with major AI providers

First, create a resources/secrets.edn:

{:deepgram {:api-key ""}
 :elevenlabs {:api-key ""
              :voice-id ""}
 :groq {:api-key ""}
 :openai {:new-api-sk ""}}

Obtain the API keys from the respective providers and fill in the blank values.

Start a REPL and evaluate the snippets in the (comment ...) blocks to start the flows.

Allow microphone access when prompted.

(ns simulflow-examples.local
  {:clj-reload/no-unload true}
  (:require
   [clojure.core.async :as a]
   [clojure.core.async.flow :as flow]
   [simulflow.async :refer [vthread-loop]]
   [simulflow.processors.activity-monitor :as activity-monitor]
   [simulflow.processors.deepgram :as deepgram]
   [simulflow.processors.elevenlabs :as xi]
   [simulflow.processors.llm-context-aggregator :as context]
   [simulflow.processors.openai :as openai]
   [simulflow.secrets :refer [secret]]
   [simulflow.transport :as transport]
   [simulflow.transport.in :as transport-in]
   [simulflow.transport.out :as transport-out]
   [simulflow.utils.core :as u]
   [simulflow.vad.silero :as silero]
   [taoensso.telemere :as t]))

(defn make-local-flow
  "This example showcases a voice AI agent for the local computer."
  ([] (make-local-flow {}))
  ([{:keys [llm-context extra-procs extra-conns debug? vad-analyser
            language chunk-duration-ms]
     :or {llm-context {:messages
                       [{:role "system"
                         :content "You are a voice agent operating via phone. Be
                         concise in your answers. The input you receive comes from a
                         speech-to-text (transcription) system that isn't always
                         efficient and may send unclear text. Ask for
                         clarification when you're unsure what the person said."}]}
          language :en
          debug? false
          chunk-duration-ms 20
          extra-procs {}
          extra-conns []}}]
   (flow/create-flow
    {:procs
     (u/deep-merge
      {;; Capture audio from microphone and send raw-audio-input frames
       :transport-in {:proc transport-in/microphone-transport-in
                      :args {:vad/analyser vad-analyser}}
       ;; raw-audio-input -> transcription frames
       :transcriptor {:proc deepgram/deepgram-processor
                      :args {:transcription/api-key (secret [:deepgram :api-key])
                             :transcription/interim-results? true
                             :transcription/punctuate? false
                             :transcription/vad-events? false
                             :transcription/smart-format? true
                             :transcription/model :nova-2
                             :transcription/utterance-end-ms 1000
                             :transcription/language language}}
       ;; user transcription & llm message frames -> llm-context frames
       :context-aggregator {:proc context/context-aggregator
                            :args {:llm/context llm-context
                                   :aggregator/debug? debug?}}
       ;; Takes llm-context frames and produces llm-text-chunk & llm-tool-call-chunk frames
       :llm {:proc openai/openai-llm-process
             :args {:openai/api-key (secret [:openai :new-api-sk])
                    :llm/model "gpt-4o-mini"}}
       ;; llm-text-chunk & llm-tool-call-chunk -> llm-context-messages-append frames
       :assistant-context-assembler {:proc context/assistant-context-assembler
                                     :args {:debug? debug?}}
       ;; llm-text-chunk -> sentence speak frames (faster for text to speech)
       :llm-sentence-assembler {:proc context/llm-sentence-assembler}
       ;; speak-frames -> audio-output-raw frames
       :tts {:proc xi/elevenlabs-tts-process
             :args {:elevenlabs/api-key (secret [:elevenlabs :api-key])
                    :elevenlabs/model-id "eleven_flash_v2_5"
                    :elevenlabs/voice-id (secret [:elevenlabs :voice-id])
                    :voice/stability 0.5
                    :voice/similarity-boost 0.8
                    :voice/use-speaker-boost? true
                    :pipeline/language language}}
       ;; audio-output-raw -> smaller audio-output-raw frames for realtime
       :audio-splitter {:proc transport/audio-splitter
                        :args {:audio.out/duration-ms chunk-duration-ms}}
       ;; speakers out
       :transport-out {:proc transport-out/realtime-speakers-out-processor
                       :args {:audio.out/sending-interval chunk-duration-ms
                              :audio.out/duration-ms chunk-duration-ms}}
       :activity-monitor {:proc activity-monitor/process
                          :args {::activity-monitor/timeout-ms 5000}}}
      extra-procs)
     :conns (concat
             [[[:transport-in :out] [:transcriptor :in]]
              [[:transcriptor :out] [:context-aggregator :in]]
              [[:transport-in :sys-out] [:context-aggregator :sys-in]]
              [[:context-aggregator :out] [:llm :in]]
              ;; Aggregate full context
              [[:llm :out] [:assistant-context-assembler :in]]
              [[:assistant-context-assembler :out] [:context-aggregator :in]]
              ;; Assemble sentence by sentence for fast speech
              [[:llm :out] [:llm-sentence-assembler :in]]
              [[:llm-sentence-assembler :out] [:tts :in]]
              [[:tts :out] [:audio-splitter :in]]
              [[:audio-splitter :out] [:transport-out :in]]
              ;; Activity detection
              [[:transport-out :sys-out] [:activity-monitor :sys-in]]
              [[:transport-in :sys-out] [:activity-monitor :sys-in]]
              [[:transcriptor :sys-out] [:activity-monitor :sys-in]]
              [[:activity-monitor :out] [:context-aggregator :in]]
              [[:activity-monitor :out] [:tts :in]]]
             extra-conns)})))

(comment
  (def local-ai (make-local-flow {:vad-analyser (silero/create-silero-vad)}))

  ;; Start local ai flow - starts paused
  (let [{:keys [report-chan error-chan]} (flow/start local-ai)]
    ;; Resume local ai -> you can now speak with the AI
    (flow/resume local-ai)
    (vthread-loop []
      (when-let [[msg c] (a/alts!! [report-chan error-chan])]
        (when (map? msg)
          (t/log! (cond-> {:level :debug :id (if (= c error-chan) :error :report)}
                    (= c error-chan) (assoc :error msg)) msg))
        (recur))))

  ;; Stop the conversation
  (flow/stop local-ai)

  ,)

This flow roughly corresponds to the pipeline sketched at the top of this README.

For more complete examples and use cases, see the examples directory:

- Local Example - Complete voice AI agent using microphone and speakers

- Twilio WebSocket - Telephony integration for phone-based voice AI

- Transport Examples - Different audio input/output configurations

These examples show real-world usage patterns and can be used as starting points for your own applications.

- ElevenLabs

  - Models: eleven_multilingual_v2, eleven_turbo_v2, eleven_flash_v2 and more.

  - Features: Real-time streaming, multiple voices, multilingual support

- Deepgram

  - Models: nova-2, nova-2-general, nova-2-meeting and more.

  - Features: Real-time transcription, punctuation, smart formatting

- OpenAI

  - Models: gpt-4o-mini (fastest, cheapest), gpt-4, gpt-3.5-turbo and more

  - Features: Function calling, streaming responses

- Google

  - Models: gemini-2.0-flash (fastest, cheapest), gemini-2.5-flash, and more

  - Features: Function calling, streaming responses, thinking

- Groq

  - Models: llama-3.2-3b-preview, llama-3.1-8b-instant, llama-3.3-70b-versatile, etc.

  - Features: Function calling, streaming responses, thinking

Flows are the core building block of simulflow pipelines:

- Composed of processes connected by channels

- Processes can be:

  - Input/output handlers

  - AI service integrations

  - Data transformers

- Managed by core.async.flow for lifecycle control
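Because a flow is described as plain data, it can be inspected before it is ever started. The sketch below checks that every connection endpoint refers to a declared process. The helper `undefined-procs` and the trimmed-down `flow-config` are hypothetical and written for this illustration; only the `:procs` / `:conns` shape is taken from the examples in this README.

```clojure
(require '[clojure.set :as set])

;; Hypothetical flow description: process ids and connections only.
;; Note that :tts is connected but never declared under :procs.
(def flow-config
  {:procs {:transport-in {} :transcriptor {} :llm {}}
   :conns [[[:transport-in :out] [:transcriptor :in]]
           [[:transcriptor :out] [:llm :in]]
           [[:llm :out] [:tts :in]]]})

(defn undefined-procs
  "Returns the set of process ids referenced in :conns but missing from :procs."
  [{:keys [procs conns]}]
  (let [referenced (set (for [[[from _] [to _]] conns
                              id [from to]]
                          id))]
    (set/difference referenced (set (keys procs)))))

(undefined-procs flow-config)
;; => #{:tts}
```

A check like this can run in a test or at the REPL, since it never touches audio devices or provider APIs.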

A transport is the modality through which audio enters and leaves the voice AI pipeline. Example transport modalities:

- local (microphone + speakers)

- telephony (Twilio through WebSocket)

- WebRTC (browser support) - TODO

- async (through in & out core.async channels)

In flows, transports appear as processors such as :transport-in and :transport-out.

Frames are the basic unit of data flow, representing typed messages such as:

- :simulflow.frame/audio-input-raw - Raw audio data from input transport

- :simulflow.frame/transcription-result - Transcribed text from speech-to-text

- :simulflow.frame/llm-text-chunk - LLM response text chunks

- :simulflow.frame/llm-tool-call-chunk - LLM tool call request chunks

- :simulflow.frame/audio-output-raw - Raw audio data for playback

- :simulflow.frame/speak-frame - Text for TTS processing

- :simulflow.frame/user-speech-start, :simulflow.frame/user-speech-stop - User speech events

- :simulflow.frame/bot-speech-start, :simulflow.frame/bot-speech-stop - Bot speech events

- :simulflow.frame/system-start, :simulflow.frame/system-stop - System control signals

Each frame has a type and optionally a schema for the data contained in it.

For development and debugging, you can enable frame schema validation to catch invalid frame data early. This should only be used during development as it adds runtime overhead:

# Enable frame schema checking via JVM property
clojure -J-Dsimulflow.frame.schema-checking=true -M:dev your-namespace

;; Or add to your deps.edn :dev alias
{:aliases
 {:dev {:jvm-opts ["-Dsimulflow.frame.schema-checking=true"]
        ...}}}

When enabled, creating frames with invalid data will throw exceptions with detailed error messages:

;; This will throw if schema checking is enabled and data is invalid
(frame/audio-input-raw "invalid-data") ; Should be byte array
;; => ex-info "Invalid frame data" {...}

Warning: Never enable schema checking in production as it significantly impacts performance.

Simulflow provides the defframe macro to easily define new frame types with automatic validation and helper functions.

The defframe macro creates three things for each frame type:

- Frame Creator Function - Creates frames with optional timestamp

- Frame Predicate Function - Tests if a value is a frame of that type

- Frame Schema - Malli schema for validation

(ns my.custom.frames
  (:require [simulflow.frame :refer [defframe]]))

;; Define a custom frame type
(defframe custom-data
  "Frame containing custom application data"
  {:type ::custom-data-frame
   :schema [:map
            [:user-id :string]
            [:action [:enum :create :update :delete]]
            [:payload :any]]})

This generates:

;; 1. Frame creator function (supports both arities)
(custom-data {:user-id "123" :action :create :payload {...}})
(custom-data {:user-id "123" :action :create :payload {...}} {:timestamp 1640995200000})

;; 2. Frame predicate function
(custom-data? some-frame) ;; => true/false

;; 3. Frame schema (for advanced validation)
custom-data-schema ;; => Malli schema definition

Frame creator functions support flexible timestamp handling:

;; Use current timestamp (default)
(custom-data {:user-id "123" :action :create :payload data})

;; Explicit timestamp as milliseconds
(custom-data data {:timestamp 1640995200000})

;; Explicit timestamp as java.util.Date
(custom-data data {:timestamp #inst "2022-01-01T00:00:00.000Z"})

All frames have a consistent structure:

{:frame/type ::custom-data-frame                ; Frame type keyword
 :frame/data {:user-id "123" :action :create}   ; Your data
 :frame/ts #inst "2022-01-01T00:00:00.000Z"     ; Timestamp
 ;; Plus metadata: ^{:type :simulflow.frame/frame}
 }

Use your custom frames in processor transform functions:

(defn my-processor-transform [state input-port data]
  (cond
    (custom-data? data)
    (let [user-id (get-in data [:frame/data :user-id])]
      [state {:out [(frame/system-start true)]}])

    :else [state {}]))

When frame schema checking is enabled, invalid data will be caught automatically:

;; This will throw if schema checking is enabled
(custom-data {:user-id 123 :action :invalid}) ; user-id should be string, action invalid
;; => ex-info "Invalid frame data" {:error {...}}

See frame.clj for all possible frames.
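To make the frame shape and timestamp handling described above more concrete, here is a minimal plain-Clojure sketch of a frame constructor. It is not simulflow's implementation: `normalize-ts`, `make-frame`, and `frame-of-type?` are hypothetical names, and coercing every timestamp to a `java.util.Date` is an assumption based on the `:frame/ts #inst ...` example above.

```clojure
(defn normalize-ts
  "Hypothetical helper: coerces nil, epoch milliseconds, or a Date to a java.util.Date."
  [ts]
  (cond
    (nil? ts) (java.util.Date.)
    (instance? java.util.Date ts) ts
    (integer? ts) (java.util.Date. (long ts))
    :else (throw (ex-info "Unsupported timestamp" {:timestamp ts}))))

(defn make-frame
  "Hypothetical constructor producing the frame shape shown above."
  ([type data] (make-frame type data {}))
  ([type data {:keys [timestamp]}]
   (with-meta {:frame/type type
               :frame/data data
               :frame/ts (normalize-ts timestamp)}
     {:type :simulflow.frame/frame})))

(defn frame-of-type?
  "True when x is a map whose :frame/type equals the given type."
  [type x]
  (and (map? x) (= type (:frame/type x))))

(def example-frame
  (make-frame :demo/custom-data {:user-id "123"} {:timestamp 1640995200000}))

(:frame/ts example-frame)
;; => #inst "2022-01-01T00:00:00.000-00:00"
```

Both timestamp forms end up as the same value in this sketch, so downstream code only ever has to handle one representation.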

Processes are components that transform frames:

- Define input/output requirements

- Can maintain state

- Use core.async for async processing

- Implement the flow/process protocol

(defn custom-processor []
  (flow/process
   {:describe (fn [] {:ins {:in "Input channel"}
                      :outs {:out "Output channel"}})
    :init identity
    :transform (fn [state in msg]
                 ;; process-message is a placeholder for your own logic
                 [state {:out [(process-message msg)]}])}))

Read the core.async.flow docs for more information about flow processes.

Simulflow processors are designed for modularity and reuse. Each processor can expose its core functionality as multi-arity functions that can be used independently or composed into custom processors.

Processors follow a standard multi-arity pattern that maps directly to core.async.flow lifecycle:

(defn processor-fn
  ([] {:ins {:in "Description"} :outs {:out "Description"} :params {...}}) ; 0-arity: describe
  ([config] {...})                         ; 1-arity: init
  ([state transition] {...})               ; 2-arity: transition
  ([state input-port data] [state {...}])) ; 3-arity: transform

Here's how you can reuse transport processor functions in your own custom processors:

(ns my-cool-processor
  (:require [clojure.core.async.flow :as flow]
            [simulflow.transport :as transport]
            [simulflow.frame :as frame]
            [simulflow.utils.audio :as audio]))

(defn mic-transport-fn
  "Custom microphone transport with audio processing"
  ([] (transport/mic-transport-in-describe))
  ([params] (transport/mic-transport-in-init! params))
  ([state transition]
   (transport/mic-transport-in-transition state transition))
  ;; Custom transform with audio processing.
  ;; PCMConverter is not imported in this snippet; import it or substitute your own converter.
  ([state _ {:keys [audio-data timestamp]}]
   (let [processed-audio (audio/apply-noise-reduction audio-data)
         float-audio (PCMConverter/convertToFloat32Buffer processed-audio)]
     [state {:out [(frame/audio-input-raw float-audio {:timestamp timestamp})]}])))

;; Use in a flow. :conns is a collection of [[from-proc port] [to-proc port]] pairs.
(def my-flow
  (flow/create-flow
   {:procs {:custom-mic {:proc (flow/process mic-transport-fn)
                         :args {:audio-in/sample-rate 16000}}}
    :conns [[[:custom-mic :out] [:next-processor :in]]]}))

You can also compose transform logic from multiple processors:

(defn hybrid-processor-fn
  ([] {:ins {:in "Mixed input"} :outs {:out "Processed output"}})
  ([params] {:config params})
  ([state transition] (when (= transition :stop) (cleanup state)))
  ([state input-port data]
   (cond
     ;; Handle audio using transport transform
     (frame/audio-input-raw? data)
     (transport/mic-transport-transform state input-port data)

     ;; Handle text using LLM transform
     (frame/llm-context? data)
     (openai/transform state input-port data)

     ;; Custom handling for other frames
     :else
     [state {:out [(custom-transform data)]}])))

- Reusability: Use processor logic across different flows

- Testability: Test individual transform functions in isolation

- Composability: Mix and match functionality from different processors

- Customization: Override specific behaviors while reusing core logic

- Debugging: Easier to debug individual components

This pattern enables building complex AI pipelines by composing smaller, well-tested components while maintaining the data-driven architecture that makes simulflow powerful.
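The testability point can be illustrated with a small self-contained sketch: because the transform arity is an ordinary function of state, input port, and data, it can be called directly without starting a flow. `upcase-processor` and the `:demo/text` frame type are hypothetical and follow only the arity convention described above.

```clojure
(require '[clojure.string :as str])

(defn upcase-processor
  "Hypothetical processor following the describe/init/transition/transform arities."
  ;; 0-arity: describe
  ([] {:ins {:in "Text frames"} :outs {:out "Upper-cased text frames"}})
  ;; 1-arity: init, returns the initial state
  ([config] {:count 0 :config config})
  ;; 2-arity: transition, returns the state unchanged
  ([state _transition] state)
  ;; 3-arity: transform, returns [new-state outputs-by-port]
  ([state _input-port frame]
   [(update state :count inc)
    {:out [(update frame :frame/data str/upper-case)]}]))

;; Exercise init and transform directly, with no channels or threads involved.
(let [state (upcase-processor {:lang :en})
      [state' outputs] (upcase-processor state :in {:frame/type :demo/text
                                                    :frame/data "hello"})]
  [(:count state') outputs])
;; => [1 {:out [{:frame/type :demo/text, :frame/data "HELLO"}]}]
```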

- core.async - Concurrent processing

- core.async.flow - Flow control

- Hato - WebSocket support

- Malli - Schema validation

simulflow takes heavy inspiration from pipecat. Differences:

- simulflow uses a graph instead of a bidirectional queue for frame transport

- simulflow has a data-centric implementation. The processors in simulflow are pure functions in the core.async.flow transform syntax

MIT

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for simulflow

Similar Open Source Tools

simulflow

Simulflow is a Clojure framework for building real-time voice-enabled AI applications using a data-driven, functional approach. It provides a composable pipeline architecture for processing audio, text, and AI interactions with built-in support for major AI providers. The framework uses processors that communicate through specialized frames to create voice-enabled AI agents, allowing for mental multitasking and rational thought. Simulflow offers a flow-based architecture, data-first design, streaming architecture, extensibility, flexible frame system, and built-in services for seamless integration with major AI providers. Users can easily swap components, add new functionality, or debug individual stages without affecting the entire system.

kan-gpt

The KAN-GPT repository is a PyTorch implementation of Generative Pre-trained Transformers (GPTs) using Kolmogorov-Arnold Networks (KANs) for language modeling. It provides a model for generating text based on prompts, with a focus on improving performance compared to traditional MLP-GPT models. The repository includes scripts for training the model, downloading datasets, and evaluating model performance. Development tasks include integrating with other libraries, testing, and documentation.

CopilotKit

CopilotKit is an open-source framework for building, deploying, and operating fully custom AI Copilots, including in-app AI chatbots, AI agents, and AI Textareas. It provides a set of components and entry points that allow developers to easily integrate AI capabilities into their applications. CopilotKit is designed to be flexible and extensible, so developers can tailor it to their specific needs. It supports a variety of use cases, including providing app-aware AI chatbots that can interact with the application state and take action, drop-in replacements for textareas with AI-assisted text generation, and in-app agents that can access real-time application context and take action within the application.

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. The tool includes tools for code generation and test input generation using various backends.

libllm

libLLM is an open-source project designed for efficient inference of large language models (LLM) on personal computers and mobile devices. It is optimized to run smoothly on common devices, written in C++14 without external dependencies, and supports CUDA for accelerated inference. Users can build the tool for CPU only or with CUDA support, and run libLLM from the command line. Additionally, there are API examples available for Python and the tool can export Huggingface models.

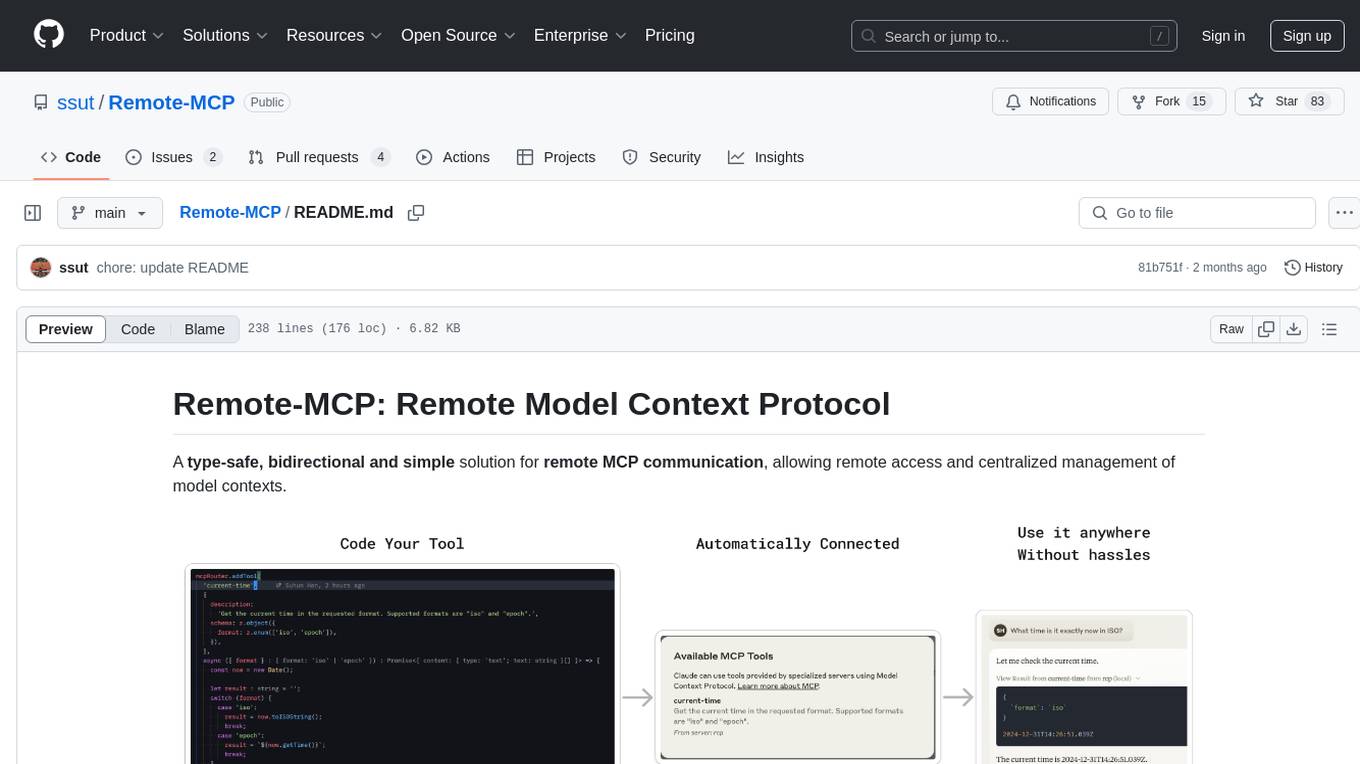

Remote-MCP

Remote-MCP is a type-safe, bidirectional, and simple solution for remote MCP communication, enabling remote access and centralized management of model contexts. It provides a bridge for immediate remote access to a remote MCP server from a local MCP client, without waiting for future official implementations. The repository contains client and server libraries for creating and connecting to remotely accessible MCP services. The core features include basic type-safe client/server communication, MCP command/tool/prompt support, custom headers, and ongoing work on crash-safe handling and event subscription system.

openlrc

Open-Lyrics is a Python library that transcribes voice files using faster-whisper and translates/polishes the resulting text into `.lrc` files in the desired language using LLM, e.g. OpenAI-GPT, Anthropic-Claude. It offers well preprocessed audio to reduce hallucination and context-aware translation to improve translation quality. Users can install the library from PyPI or GitHub and follow the installation steps to set up the environment. The tool supports GUI usage and provides Python code examples for transcription and translation tasks. It also includes features like utilizing context and glossary for translation enhancement, pricing information for different models, and a list of todo tasks for future improvements.

optscale

OptScale is an open-source FinOps and MLOps platform that provides cloud cost optimization for all types of organizations and MLOps capabilities like experiment tracking, model versioning, ML leaderboards.

tambo

tambo ai is a React library that simplifies the process of building AI assistants and agents in React by handling thread management, state persistence, streaming responses, AI orchestration, and providing a compatible React UI library. It eliminates React boilerplate for AI features, allowing developers to focus on creating exceptional user experiences with clean React hooks that seamlessly integrate with their codebase.

simba

Simba is an open source, portable Knowledge Management System (KMS) designed to seamlessly integrate with any Retrieval-Augmented Generation (RAG) system. It features a modern UI and modular architecture, allowing developers to focus on building advanced AI solutions without the complexities of knowledge management. Simba offers a user-friendly interface to visualize and modify document chunks, supports various vector stores and embedding models, and simplifies knowledge management for developers. It is community-driven, extensible, and aims to enhance AI functionality by providing a seamless integration with RAG-based systems.

VideoRefer

VideoRefer Suite is a tool designed to enhance the fine-grained spatial-temporal understanding capabilities of Video Large Language Models (Video LLMs). It consists of three primary components: Model (VideoRefer) for perceiving, reasoning, and retrieval for user-defined regions at any specified timestamps, Dataset (VideoRefer-700K) for high-quality object-level video instruction data, and Benchmark (VideoRefer-Bench) to evaluate object-level video understanding capabilities. The tool can understand any object within a video.

chrome-ai

Chrome AI is a Vercel AI provider for Chrome's built-in model (Gemini Nano). It allows users to create language models using Chrome's AI capabilities. The tool is under development and may contain errors and frequent changes. Users can install the ChromeAI provider module and use it to generate text, stream text, and generate objects. To enable AI in Chrome, users need to have Chrome version 127 or greater and turn on specific flags. The tool is designed for developers and researchers interested in experimenting with Chrome's built-in AI features.

serve

Jina-Serve is a framework for building and deploying AI services that communicate via gRPC, HTTP and WebSockets. It provides native support for major ML frameworks and data types, high-performance service design with scaling and dynamic batching, LLM serving with streaming output, built-in Docker integration and Executor Hub, one-click deployment to Jina AI Cloud, and enterprise-ready features with Kubernetes and Docker Compose support. Users can create gRPC-based AI services, build pipelines, scale services locally with replicas, shards, and dynamic batching, deploy to the cloud using Kubernetes, Docker Compose, or JCloud, and enable token-by-token streaming for responsive LLM applications.

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It extends its support to a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently undergoing active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our **_Roadmap_** for more details. ## Key Features * High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, Continuous batching, and more. * Tensor Parallelism: Utilizes tensor parallelism for efficient model execution. * OpenAI-compatible API: An efficient golang rest api server that compatible with OpenAI. * Huggingface models: Seamless integration with most popular HF models, supporting safetensors. * Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models. * Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.

LTEngine

LTEngine is a free and open-source local AI machine translation API written in Rust. It is self-hosted and compatible with LibreTranslate. LTEngine utilizes large language models (LLMs) via llama.cpp, offering high-quality translations that rival or surpass DeepL for certain languages. It supports various accelerators like CUDA, Metal, and Vulkan, with the largest model 'gemma3-27b' fitting on a single consumer RTX 3090. LTEngine is actively developed, with a roadmap outlining future enhancements and features.

aio-pika

Aio-pika is a wrapper around aiormq for asyncio and humans. It provides a completely asynchronous API, object-oriented API, transparent auto-reconnects with complete state recovery, Python 3.7+ compatibility, transparent publisher confirms support, transactions support, and complete type-hints coverage.

For similar tasks

simulflow

Simulflow is a Clojure framework for building real-time voice-enabled AI applications using a data-driven, functional approach. It provides a composable pipeline architecture for processing audio, text, and AI interactions with built-in support for major AI providers. The framework uses processors that communicate through specialized frames to create voice-enabled AI agents, allowing for mental multitasking and rational thought. Simulflow offers a flow-based architecture, data-first design, streaming architecture, extensibility, flexible frame system, and built-in services for seamless integration with major AI providers. Users can easily swap components, add new functionality, or debug individual stages without affecting the entire system.

Applio

Applio is a VITS-based Voice Conversion tool focused on simplicity, quality, and performance. It features a user-friendly interface, cross-platform compatibility, and a range of customization options. Applio is suitable for various tasks such as voice cloning, voice conversion, and audio editing. Its key features include a modular codebase, hop length implementation, translations in over 30 languages, optimized requirements, streamlined installation, hybrid F0 estimation, easy-to-use UI, optimized code and dependencies, plugin system, overtraining detector, model search, enhancements in pretrained models, voice blender, accessibility improvements, new F0 extraction methods, output format selection, hashing system, model download system, TTS enhancements, split audio, Discord presence, Flask integration, and support tab.

agents

The LiveKit Agent Framework is designed for building real-time, programmable participants that run on servers. Easily tap into LiveKit WebRTC sessions and process or generate audio, video, and data streams. The framework includes plugins for common workflows, such as voice activity detection and speech-to-text. Agents integrates seamlessly with LiveKit server, offloading job queuing and scheduling responsibilities to it. This eliminates the need for additional queuing infrastructure. Agent code developed on your local machine can scale to support thousands of concurrent sessions when deployed to a server in production.

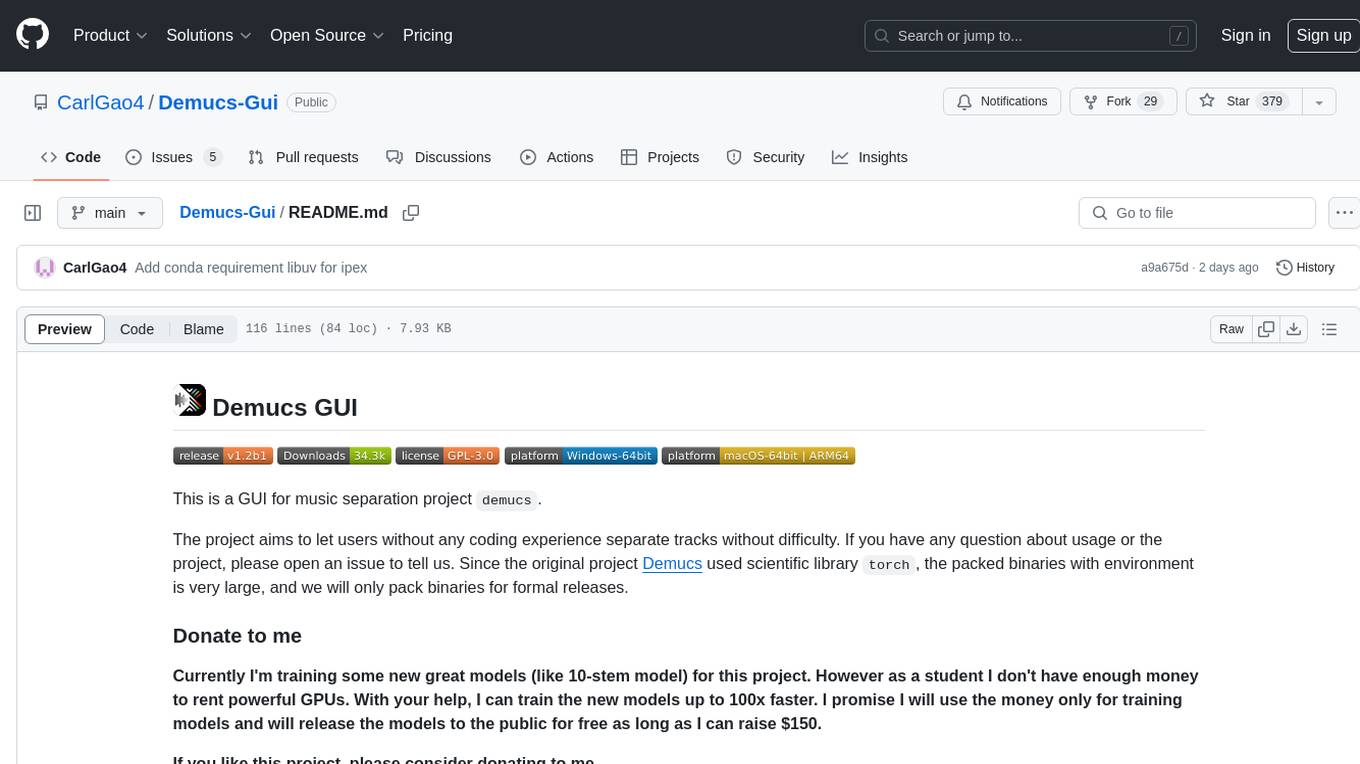

Demucs-Gui

Demucs GUI is a graphical user interface for the music separation project Demucs. It aims to allow users without coding experience to easily separate tracks. The tool provides a user-friendly interface for running the Demucs project, which originally used the scientific library torch. The GUI simplifies the process of separating tracks and provides support for different platforms such as Windows, macOS, and Linux. Users can donate to support the development of new models for the project, and the tool has specific system requirements including minimum system versions and hardware specifications.

liboai

liboai is a simple C++17 library for the OpenAI API, providing developers with access to OpenAI endpoints through a collection of methods and classes. It serves as a spiritual port of OpenAI's Python library, 'openai', with similar structure and features. The library supports various functionalities such as ChatGPT, Audio, Azure, Functions, Image DALL·E, Models, Completions, Edit, Embeddings, Files, Fine-tunes, Moderation, and Asynchronous Support. Users can easily integrate the library into their C++ projects to interact with OpenAI services.

Awesome-Colorful-LLM

Awesome-Colorful-LLM is a meticulously assembled anthology of vibrant multimodal research focusing on advancements propelled by large language models (LLMs) in domains such as Vision, Audio, Agent, Robotics, and Fundamental Sciences like Mathematics. The repository contains curated collections of works, datasets, benchmarks, projects, and tools related to LLMs and multimodal learning. It serves as a comprehensive resource for researchers and practitioners interested in exploring the intersection of language models and various modalities for tasks like image understanding, video pretraining, 3D modeling, document understanding, audio analysis, agent learning, robotic applications, and mathematical research.

ControlLLM

ControlLLM is a framework that empowers large language models to leverage multi-modal tools for solving complex real-world tasks. It addresses challenges like ambiguous user prompts, inaccurate tool selection, and inefficient tool scheduling by utilizing a task decomposer, a Thoughts-on-Graph paradigm, and an execution engine with a rich toolbox. The framework excels in tasks involving image, audio, and video processing, showcasing superior accuracy, efficiency, and versatility compared to existing methods.

VITA

VITA is an open-source interactive omni multimodal Large Language Model (LLM) capable of processing video, image, text, and audio inputs simultaneously. It stands out with features like Omni Multimodal Understanding, Non-awakening Interaction, and Audio Interrupt Interaction. VITA can respond to user queries without a wake-up word, track and filter external queries in real time, and handle various query inputs effectively. The model uses state tokens and a duplex scheme to enhance the multimodal interactive experience.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.