VITA

✨✨VITA: Towards Open-Source Interactive Omni Multimodal LLM


VITA is an open-source interactive omni multimodal Large Language Model (LLM) capable of processing video, image, text, and audio inputs simultaneously. It stands out with features like Omni Multimodal Understanding, Non-awakening Interaction, and Audio Interrupt Interaction. VITA can respond to user queries without a wake-up word, track and filter external queries in real-time, and handle various query inputs effectively. The model utilizes state tokens and a duplex scheme to enhance the multimodal interactive experience.

README:

- 2024.12.20 🌟 We are excited to introduce VITA-1.5, a more powerful and more real-time version!

- 2024.08.12 🌟 We are very proud to launch VITA-1.0, the First-Ever open-source interactive omni multimodal LLM! We have submitted the open-source code, yet it is under review internally. We are moving the process forward as quickly as possible, stay tuned!

- VITA-1.5: An Open-Source Interactive Multimodal LLM

On 2024.08.12, we launched VITA-1.0, the first-ever open-source interactive omni-multimodal LLM. Now (2024.12.20), we bring a new version VITA-1.5!

We are excited to present VITA-1.5, which incorporates a series of advancements:

- Significantly Reduced Interaction Latency. The end-to-end speech interaction latency has been reduced from about 4 seconds to 1.5 seconds, enabling near-instant interaction and greatly improving user experience.

- Enhanced Multimodal Performance. The average performance on multimodal benchmarks such as MME, MMBench, and MathVista has been significantly increased from 59.8 to 70.8.

- Improvement in Speech Processing. The speech processing capabilities have been refined to a new level, with ASR WER (Word Error Rate, Test Other) reduced from 18.4 to 7.5. In addition, we replace the independent TTS module of VITA-1.0 with an end-to-end TTS module, which accepts the LLM's embedding as input.

- Progressive Training Strategy. With this strategy, adding speech has little effect on the other multimodal (vision-language) performance. The average image understanding performance drops only from 71.3 to 70.8.
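As a quick sanity check on the figures quoted above, the sketch below computes the relative change for each pair. It uses only numbers stated in this section; the helper name relative_change is illustrative, and the latency result is approximate because the starting value is given as "about 4 seconds".

```python
def relative_change(before: float, after: float) -> float:
    """Return the change from `before` to `after` as a fraction of `before`."""
    return (after - before) / before


# (before, after) pairs quoted in the list above.
FIGURES = {
    "speech latency (s)": (4.0, 1.5),
    "multimodal benchmark average": (59.8, 70.8),
    "ASR WER, Test Other": (18.4, 7.5),
    "image understanding average": (71.3, 70.8),
}

for name, (before, after) in FIGURES.items():
    # Print the absolute difference and the relative change side by side.
    print(f"{name}: {after - before:+.1f} ({relative_change(before, after):+.1%})")
```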

- Evaluation on image and video understanding benchmarks.

- VITA-1.5 outperforms professional speech models on ASR benchmarks.

- Adding the audio modality has little effect on image and video understanding capability.

git clone https://github.com/VITA-MLLM/VITA

cd VITA

conda create -n vita python=3.10 -y

conda activate vita

pip install --upgrade pip

pip install -r requirements.txt

pip install flash-attn --no-build-isolation

- An example json file of the training data:

[

...

{

"set": "sharegpt4",

"id": "000000000164",

"conversations": [

{

"from": "human",

"value": "<image>\n<audio>\n"

},

{

"from": "gpt", // following the LLaVA setting, "gpt" only indicates that this is the ground truth of the model output

"value": "This is a well-organized kitchen with a clean, modern aesthetic. The kitchen features a white countertop against a white wall, creating a bright and airy atmosphere. "

}

],

"image": "coco/images/train2017/000000000164.jpg",

"audio": [

"new_value_dict_0717/output_wavs/f61cf238b7872b4903e1fc15dcb5a50c.wav"

]

},

...

]
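Before training, it can help to check that each record is internally consistent. The following is a minimal sketch, not part of the VITA codebase: the function name check_entry is hypothetical, and the rule that every <audio> placeholder corresponds to exactly one file in the "audio" list is an assumption inferred from the example above.

```python
from typing import List


def check_entry(entry: dict) -> List[str]:
    """Return a list of problems found in one training record (empty if none)."""
    problems = []
    for key in ("set", "id", "conversations"):
        if key not in entry:
            problems.append(f"missing key: {key}")

    turns = entry.get("conversations", [])
    human_text = "".join(t.get("value", "") for t in turns if t.get("from") == "human")

    # Assumption: placeholders in the human turns must be backed by media fields.
    if human_text.count("<image>") and "image" not in entry:
        problems.append("<image> placeholder but no 'image' field")
    n_audio = human_text.count("<audio>")
    audio_files = entry.get("audio", [])
    if n_audio != len(audio_files):
        problems.append(f"{n_audio} <audio> placeholder(s) but {len(audio_files)} audio file(s)")
    return problems


# The record from the example above, written as a Python dict.
sample = {
    "set": "sharegpt4",
    "id": "000000000164",
    "conversations": [
        {"from": "human", "value": "<image>\n<audio>\n"},
        {"from": "gpt", "value": "This is a well-organized kitchen with a clean, modern aesthetic."},
    ],
    "image": "coco/images/train2017/000000000164.jpg",
    "audio": ["new_value_dict_0717/output_wavs/f61cf238b7872b4903e1fc15dcb5a50c.wav"],
}

print(check_entry(sample))
```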

- The "set" field is used to retrieve the image or video folder for data loading. You should add its key-value pair to the FolderDict in ./vita/config/dataset_config.py:

AudioFolder = ""

FolderDict = {

#### NaturalCap

"sharegpt4": "",

}

#### NaturalCap

ShareGPT4V = {"chat_path": ""}

- Set the JSON path for "chat_path" in the corresponding dictionary in ./vita/config/dataset_config.py.

- Set the audio folder path for AudioFolder in ./vita/config/dataset_config.py.

- Add the data class in DataConfig in ./vita/config/__init__.py:

from .dataset_config import *

NaturalCap = [ShareGPT4V]

DataConfig = {

"Pretrain_video": NaturalCap,

}
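To illustrate how these settings fit together, here is a rough sketch of resolving a record's media paths from FolderDict and AudioFolder. The folder values and the function resolve_media are hypothetical, and joining the folder with the relative path is an assumption; the actual data loader in the repository may combine them differently.

```python
import os

# Hypothetical values; in dataset_config.py these are empty until you fill them in.
AudioFolder = "/data/audio"
FolderDict = {"sharegpt4": "/data/sharegpt4"}


def resolve_media(entry: dict) -> dict:
    """Map the relative media paths of one record to full paths."""
    root = FolderDict[entry["set"]]  # KeyError here means the set is not registered
    resolved = {}
    if "image" in entry:
        resolved["image"] = os.path.join(root, entry["image"])
    if "audio" in entry:
        resolved["audio"] = [os.path.join(AudioFolder, name) for name in entry["audio"]]
    return resolved


entry = {
    "set": "sharegpt4",
    "image": "coco/images/train2017/000000000164.jpg",
    "audio": ["new_value_dict_0717/output_wavs/f61cf238b7872b4903e1fc15dcb5a50c.wav"],
}
print(resolve_media(entry))
```

A record whose "set" value is missing from FolderDict fails immediately with a KeyError, which is a convenient way to catch an unregistered dataset before training starts.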

- Download the required weights: (1) VITA-1.5 checkpoint, (2) InternViT-300M-448px, and (3) Our pretrained audio encoder in Stage-2 audio-language alignment (refer to Fig. 3 in the paper).

- Replace the paths in ./script/train/finetuneTaskNeg_qwen_nodes.sh:

...

--model_name_or_path VITA1.5_ckpt \

...

--vision_tower InternViT-300M-448px \

...

--audio_encoder audio-encoder-Qwen2-7B-1107-weight-base-11wh-tunning \

...

- Execute the following commands to start the training process:

export PYTHONPATH=./

export PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True

OUTPUT_DIR=/mnt/cfs/lhj/videomllm_ckpt/outputs/vita_video_audio

bash script/train/finetuneTaskNeg_qwen_nodes.sh ${OUTPUT_DIR}

- Text query

CUDA_VISIBLE_DEVICES=2 python video_audio_demo.py \

--model_path [vita/path] \

--image_path asset/vita_newlog.jpg \

--model_type qwen2p5_instruct \

--conv_mode qwen2p5_instruct \

--question "Describe this image."

- Audio query

CUDA_VISIBLE_DEVICES=4 python video_audio_demo.py \

--model_path [vita/path] \

--image_path asset/vita_newlog.png \

--model_type qwen2p5_instruct \

--conv_mode qwen2p5_instruct \

--audio_path asset/q1.wav

- Noisy audio query

CUDA_VISIBLE_DEVICES=4 python video_audio_demo.py \

--model_path [vita/path] \

--image_path asset/vita_newlog.png \

--model_type qwen2p5_instruct \

--conv_mode qwen2p5_instruct \

--audio_path asset/q2.wav
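The three commands above differ only in the query argument. The sketch below builds the same command line programmatically, using only the flags shown above. The helper build_demo_command is hypothetical, and requiring exactly one of the question or the audio path is an assumption based on these examples; the script itself may accept other combinations.

```python
import shlex
from typing import List, Optional


def build_demo_command(
    model_path: str,
    image_path: str,
    question: Optional[str] = None,
    audio_path: Optional[str] = None,
    model_type: str = "qwen2p5_instruct",
    conv_mode: str = "qwen2p5_instruct",
) -> List[str]:
    """Build the argument list for video_audio_demo.py with a text or audio query."""
    # Assumption: a run carries either a text question or an audio file, not both.
    if (question is None) == (audio_path is None):
        raise ValueError("pass exactly one of question or audio_path")
    argv = [
        "python", "video_audio_demo.py",
        "--model_path", model_path,
        "--image_path", image_path,
        "--model_type", model_type,
        "--conv_mode", conv_mode,
    ]
    if question is not None:
        argv += ["--question", question]
    else:
        argv += ["--audio_path", audio_path]
    return argv


# Print a shell-quoted command for the audio query example.
print(shlex.join(build_demo_command("[vita/path]", "asset/vita_newlog.png", audio_path="asset/q1.wav")))
```

The returned list can be passed to subprocess.run directly; set CUDA_VISIBLE_DEVICES through the env argument if you need to pin a GPU.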

We have accelerated the model using vLLM. Since VITA has not yet been integrated into vLLM, you need to make some modifications to the vLLM code to adapt it for VITA.

conda create -n vita_demo python==3.10

conda activate vita_demo

pip install -r web_demo/web_demo_requirements.txt

# Backup a new weight file

cp -rL VITA_ckpt/ demo_VITA_ckpt/

mv demo_VITA_ckpt/config.json demo_VITA_ckpt/origin_config.json

cd ./web_demo/vllm_tools

cp -rf qwen2p5_model_weight_file/* ../../demo_VITA_ckpt/

cp -rf vllm_file/* your_anaconda/envs/vita_demo/lib/python3.10/site-packages/vllm/model_executor/models/

https://github.com/user-attachments/assets/43edd44a-8c8d-43ea-9d2b-beebe909377a

python -m web_demo.web_ability_demo demo_VITA_ckpt/

To run the real-time interactive demo, you need to make the following preparations:

- Prepare a VAD (Voice Activity Detection) module. You can choose to download silero_vad.onnx and silero_vad.jit, and place these files in the ./web_demo/wakeup_and_vad/resource/ directory.

- For a better real-time interactive experience, you need to set max_dynamic_patch to 1 in demo_VITA_ckpt/config.json. When you run the basic demo, you can set it to the default value of 12 to enhance the model's visual capabilities.
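Switching max_dynamic_patch between the two demos can be scripted. This is a minimal sketch, assuming max_dynamic_patch is a top-level key in config.json (check your file if it is nested); the function name set_max_dynamic_patch is hypothetical.

```python
import json
from pathlib import Path
from typing import Optional


def set_max_dynamic_patch(config_path: str, value: int) -> Optional[int]:
    """Set max_dynamic_patch in a config.json and return the previous value."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    # Assumption: the key lives at the top level of the JSON object.
    previous = config.get("max_dynamic_patch")
    config["max_dynamic_patch"] = value
    path.write_text(json.dumps(config, indent=2, ensure_ascii=False) + "\n", encoding="utf-8")
    return previous
```

For example, set_max_dynamic_patch("demo_VITA_ckpt/config.json", 1) prepares the real-time demo, and calling it again with 12 restores the default for the basic demo.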

pip install flask==3.1.0 flask-socketio==5.5.0 cryptography==44.0.0 timm==1.0.12

python -m web_demo.server --model_path demo_VITA_ckpt --ip 0.0.0.0 --port 8081

Modify the model path of vita_qwen2 in VLMEvalKit/vlmeval/config.py:

vita_series = {

'vita': partial(VITA, model_path='/path/to/model'),

'vita_qwen2': partial(VITAQwen2, model_path='/path/to/model'),

}
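The dictionary above stores constructors with model_path pre-filled rather than loaded models. The sketch below illustrates that functools.partial pattern with a stand-in class; StubModel, registry, and build are hypothetical names for illustration, and VLMEvalKit's own lookup code may differ.

```python
from functools import partial


class StubModel:
    """Hypothetical stand-in for a model wrapper such as VITAQwen2."""

    def __init__(self, model_path: str, **kwargs):
        self.model_path = model_path
        self.kwargs = kwargs


# Each entry is a constructor with model_path bound; nothing is loaded yet.
registry = {
    "stub": partial(StubModel, model_path="/path/to/model"),
}


def build(name: str, **overrides):
    """Instantiate a registered model on demand, optionally overriding arguments."""
    return registry[name](**overrides)


model = build("stub")
other = build("stub", model_path="/another/path")  # call-time keywords win
print(model.model_path, other.model_path)
```

Because construction is deferred until the entry is called, editing the path in the dictionary is enough to point an evaluation run at a different checkpoint.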

Follow the instructions in VLMEvalKit to set GPT as the judge model.

If the OpenAI API is not available, you can use a local model as the judge. In our experiments, we find that a Qwen1.5-1.8B-Chat judge works well compared to GPT-4, except on MM-Vet. To start the judge:

CUDA_VISIBLE_DEVICES=0 lmdeploy serve api_server /mnt/cfs/lhj/model_weights/Qwen1.5-1.8B-Chat --server-port 23333

Then configure the .env file in the VLMEvalKit folder:

OPENAI_API_KEY=sk-123456

OPENAI_API_BASE=http://0.0.0.0:23333/v1/chat/completions

LOCAL_LLM=/mnt/cfs/lhj/model_weights/Qwen1.5-1.8B-Chat
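To confirm that the local judge is reachable before a long evaluation, you can send it one OpenAI-style chat request. The sketch below only builds the request, reusing the values from the .env file above. The helper build_judge_request is hypothetical, and using the weight path as the model name is an assumption; query the server's model list if it reports a different name.

```python
import json
import urllib.request


def build_judge_request(api_base: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # assumption: the served model name equals the weight path
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,
    }
    return urllib.request.Request(
        api_base,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_judge_request(
    "http://0.0.0.0:23333/v1/chat/completions",
    "sk-123456",
    "/mnt/cfs/lhj/model_weights/Qwen1.5-1.8B-Chat",
    "Reply with the single word: ok",
)

# To query a running server, uncomment:
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```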

Evaluate on these benchmarks:

CUDA_VISIBLE_DEVICES=0 python run.py --data MMBench_TEST_EN_V11 MMBench_TEST_CN_V11 MMStar MMMU_DEV_VAL MathVista_MINI HallusionBench AI2D_TEST OCRBench MMVet MME --model vita_qwen2 --verbose

Download the Video-MME dataset and extract the frames, saving them as images to improve IO efficiency.

cd ./videomme

Run the model on Video-MME in the setting without subtitles:

VIDEO_TYPE="s,m,l"

NAMES=(lyd jyg wzh wzz zcy by dyh lfy)

for((i=0; i<${#NAMES[@]}; i++))

do

CUDA_VISIBLE_DEVICES=6 python yt_video_inference_qa_imgs.py \

--model-path [vita/path] \

--model_type qwen2p5_instruct \

--conv_mode qwen2p5_instruct \

--responsible_man ${NAMES[i]} \

--video_type $VIDEO_TYPE \

--output_dir qa_wo_sub \

--video_dir [Video-MME-imgs] | tee logs/infer.log

done

Run the model on Video-MME in the setting with subtitles:

VIDEO_TYPE="s,m,l"

NAMES=(lyd jyg wzh wzz zcy by dyh lfy)

for((i=0; i<${#NAMES[@]}; i++))

do

CUDA_VISIBLE_DEVICES=7 python yt_video_inference_qa_imgs.py \

--model-path [vita/path] \

--model_type qwen2p5_instruct \

--conv_mode qwen2p5_instruct \

--responsible_man ${NAMES[i]} \

--video_type $VIDEO_TYPE \

--output_dir qa_w_sub \

--video_dir [Video-MME-imgs] \

--use_subtitles | tee logs/infer.log

done

Parse the results:

python parse_answer.py --video_types "s,m,l" --result_dir qa_wo_sub

python parse_answer.py --video_types "s,m,l" --result_dir qa_w_sub

If you find our work helpful for your research, please consider citing our work.

@article{fu2024vita,

title={Vita: Towards open-source interactive omni multimodal llm},

author={Fu, Chaoyou and Lin, Haojia and Long, Zuwei and Shen, Yunhang and Zhao, Meng and Zhang, Yifan and Wang, Xiong and Yin, Di and Ma, Long and Zheng, Xiawu and others},

journal={arXiv preprint arXiv:2408.05211},

year={2024}

}

VITA is trained on a large-scale open-source corpus, and its output has randomness. Any content generated by VITA does not represent the views of the model developers. We are not responsible for any problems arising from the use, misuse, and dissemination of VITA, including but not limited to public opinion risks and data security issues.

Explore our related research:

- [VITA-1.0] VITA: Towards Open-Source Interactive Omni Multimodal LLM

- [Awesome-MLLM] A Survey on Multimodal Large Language Models

- [MME] MME: A Comprehensive Evaluation Benchmark for Multimodal Large Language Models

- [Video-MME] Video-MME: The First-Ever Comprehensive Evaluation Benchmark of Multi-modal LLMs in Video Analysis

VITA is built with reference to the following outstanding works: LLaVA-1.5, Bunny, ChatUnivi, InternVL, InternViT, Qwen-2.5, VLMEvalKit, and Mixtral 8*7B. Thanks!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for VITA

Similar Open Source Tools

VITA

VITA is an open-source interactive omni multimodal Large Language Model (LLM) capable of processing video, image, text, and audio inputs simultaneously. It stands out with features like Omni Multimodal Understanding, Non-awakening Interaction, and Audio Interrupt Interaction. VITA can respond to user queries without a wake-up word, track and filter external queries in real-time, and handle various query inputs effectively. The model utilizes state tokens and a duplex scheme to enhance the multimodal interactive experience.

VLM-R1

VLM-R1 is a stable and generalizable R1-style Large Vision-Language Model proposed for Referring Expression Comprehension (REC) task. It compares R1 and SFT approaches, showing R1 model's steady improvement on out-of-domain test data. The project includes setup instructions, training steps for GRPO and SFT models, support for user data loading, and evaluation process. Acknowledgements to various open-source projects and resources are mentioned. The project aims to provide a reliable and versatile solution for vision-language tasks.

LLMTSCS

LLMLight is a novel framework that employs Large Language Models (LLMs) as decision-making agents for Traffic Signal Control (TSC). The framework leverages the advanced generalization capabilities of LLMs to engage in a reasoning and decision-making process akin to human intuition for effective traffic control. LLMLight has been demonstrated to be remarkably effective, generalizable, and interpretable against various transportation-based and RL-based baselines on nine real-world and synthetic datasets.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

ChatDev

ChatDev is a virtual software company powered by intelligent agents like CEO, CPO, CTO, programmer, reviewer, tester, and art designer. These agents collaborate to revolutionize the digital world through programming. The platform offers an easy-to-use, highly customizable, and extendable framework based on large language models, ideal for studying collective intelligence. ChatDev introduces innovative methods like Iterative Experience Refinement and Experiential Co-Learning to enhance software development efficiency. It supports features like incremental development, Docker integration, Git mode, and Human-Agent-Interaction mode. Users can customize ChatChain, Phase, and Role settings, and share their software creations easily. The project is open-source under the Apache 2.0 License and utilizes data licensed under CC BY-NC 4.0.

ichigo

Ichigo is a local real-time voice AI tool that uses an early fusion technique to extend a text-based LLM to have native 'listening' ability. It is an open research experiment with improved multiturn capabilities and the ability to refuse processing inaudible queries. The tool is designed for open data, open weight, on-device Siri-like functionality, inspired by Meta's Chameleon paper. Ichigo offers a web UI demo and Gradio web UI for users to interact with the tool. It has achieved enhanced MMLU scores, stronger context handling, advanced noise management, and improved multi-turn capabilities for a robust user experience.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

effective_llm_alignment

This is a super customizable, concise, user-friendly, and efficient toolkit for training and aligning LLMs. It provides support for various methods such as SFT, Distillation, DPO, ORPO, CPO, SimPO, SMPO, Non-pair Reward Modeling, Special prompts basket format, Rejection Sampling, Scoring using RM, Effective FAISS Map-Reduce Deduplication, LLM scoring using RM, NER, CLIP, Classification, and STS. The toolkit offers key libraries like PyTorch, Transformers, TRL, Accelerate, FSDP, DeepSpeed, and tools for result logging with wandb or clearml. It allows mixing datasets, generation and logging in wandb/clearml, vLLM batched generation, and aligns models using the SMPO method.

NExT-GPT

NExT-GPT is an end-to-end multimodal large language model that can process input and generate output in various combinations of text, image, video, and audio. It leverages existing pre-trained models and diffusion models with end-to-end instruction tuning. The repository contains code, data, and model weights for NExT-GPT, allowing users to work with different modalities and perform tasks like encoding, understanding, reasoning, and generating multimodal content.

HuixiangDou

HuixiangDou is a **group chat** assistant based on LLM (Large Language Model). Advantages: 1. Design a two-stage pipeline of rejection and response to cope with group chat scenario, answer user questions without message flooding, see arxiv2401.08772 2. Low cost, requiring only 1.5GB memory and no need for training 3. Offers a complete suite of Web, Android, and pipeline source code, which is industrial-grade and commercially viable Check out the scenes in which HuixiangDou are running and join WeChat Group to try AI assistant inside. If this helps you, please give it a star ⭐

rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides pytorch and **python-first** , low and high level abstractions for RL that are intended to be **efficient** , **modular** , **documented** and properly **tested**. The code is aimed at supporting research in RL. Most of it is written in python in a highly modular way, such that researchers can easily swap components, transform them or write new ones with little effort.

GPTSwarm

GPTSwarm is a graph-based framework for LLM-based agents that enables the creation of LLM-based agents from graphs and facilitates the customized and automatic self-organization of agent swarms with self-improvement capabilities. The library includes components for domain-specific operations, graph-related functions, LLM backend selection, memory management, and optimization algorithms to enhance agent performance and swarm efficiency. Users can quickly run predefined swarms or utilize tools like the file analyzer. GPTSwarm supports local LM inference via LM Studio, allowing users to run with a local LLM model. The framework has been accepted by ICML2024 and offers advanced features for experimentation and customization.

TheoremExplainAgent

TheoremExplainAgent is an AI system that generates long-form Manim videos to visually explain theorems, proving its deep understanding while uncovering reasoning flaws that text alone often hides. The codebase for the paper 'TheoremExplainAgent: Towards Multimodal Explanations for LLM Theorem Understanding' is available in this repository. It provides a tool for creating multimodal explanations for theorem understanding using AI technology.

alexandria-audiobook

Alexandria Audiobook Generator is a tool that transforms any book or novel into a fully-voiced audiobook using AI-powered script annotation and text-to-speech. It features a built-in Qwen3-TTS engine with batch processing and a browser-based editor for fine-tuning every line before final export. The tool offers AI-powered pipeline for automatic script annotation, smart chunking, and context preservation. It also provides voice generation capabilities with built-in TTS engine, multi-language support, custom voices, voice cloning, and LoRA voice training. The web UI editor allows users to edit, preview, and export the audiobook. Export options include combined audiobook, individual voicelines, and Audacity export for DAW editing.

sre

SmythOS is an operating system designed for building, deploying, and managing intelligent AI agents at scale. It provides a unified SDK and resource abstraction layer for various AI services, making it easy to scale and flexible. With an agent-first design, developer-friendly SDK, modular architecture, and enterprise security features, SmythOS offers a robust foundation for AI workloads. The system is built with a philosophy inspired by traditional operating system kernels, ensuring autonomy, control, and security for AI agents. SmythOS aims to make shipping production-ready AI agents accessible and open for everyone in the coming Internet of Agents era.

ebook2audiobook

ebook2audiobook is a CPU/GPU converter tool that converts eBooks to audiobooks with chapters and metadata using tools like Calibre, ffmpeg, XTTSv2, and Fairseq. It supports voice cloning and a wide range of languages. The tool is designed to run on 4GB RAM and provides a new v2.0 Web GUI interface for user-friendly interaction. Users can convert eBooks to text format, split eBooks into chapters, and utilize high-quality text-to-speech functionalities. Supported languages include Arabic, Chinese, English, French, German, Hindi, and many more. The tool can be used for legal, non-DRM eBooks only and should be used responsibly in compliance with applicable laws.

For similar tasks

HPT

Hyper-Pretrained Transformers (HPT) is a novel multimodal LLM framework from HyperGAI, trained for vision-language models capable of understanding both textual and visual inputs. The repository contains the open-source implementation of inference code to reproduce the evaluation results of HPT Air on different benchmarks. HPT has achieved competitive results with state-of-the-art models on various multimodal LLM benchmarks. It offers models like HPT 1.5 Air and HPT 1.0 Air, providing efficient solutions for vision-and-language tasks.

learnopencv

LearnOpenCV is a repository containing code for Computer Vision, Deep learning, and AI research articles shared on the blog LearnOpenCV.com. It serves as a resource for individuals looking to enhance their expertise in AI through various courses offered by OpenCV. The repository includes a wide range of topics such as image inpainting, instance segmentation, robotics, deep learning models, and more, providing practical implementations and code examples for readers to explore and learn from.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

clarifai-python-grpc

This is the official Clarifai gRPC Python client for interacting with their recognition API. Clarifai offers a platform for data scientists, developers, researchers, and enterprises to utilize artificial intelligence for image, video, and text analysis through computer vision and natural language processing. The client allows users to authenticate, predict concepts in images, and access various functionalities provided by the Clarifai API. It follows a versioning scheme that aligns with the backend API updates and includes specific instructions for installation and troubleshooting. Users can explore the Clarifai demo, sign up for an account, and refer to the documentation for detailed information.

horde-worker-reGen

This repository provides the latest implementation for the AI Horde Worker, allowing users to utilize their graphics card(s) to generate, post-process, or analyze images for others. It offers a platform where users can create images and earn 'kudos' in return, granting priority for their own image generations. The repository includes important details for setup, recommendations for system configurations, instructions for installation on Windows and Linux, basic usage guidelines, and information on updating the AI Horde Worker. Users can also run the worker with multiple GPUs and receive notifications for updates through Discord. Additionally, the repository contains models that are licensed under the CreativeML OpenRAIL License.

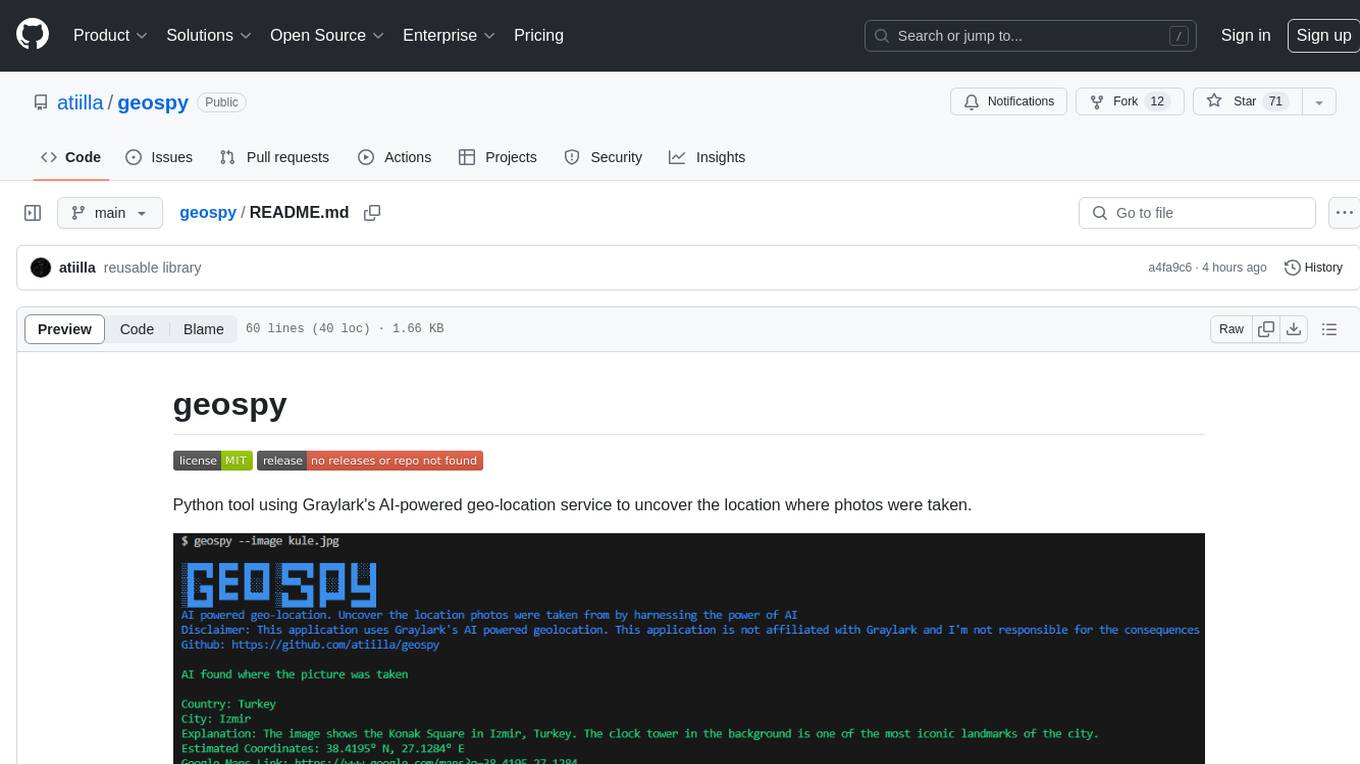

geospy

Geospy is a Python tool that utilizes Graylark's AI-powered geolocation service to determine the location where photos were taken. It allows users to analyze images and retrieve information such as country, city, explanation, coordinates, and Google Maps links. The tool provides a seamless way to integrate geolocation services into various projects and applications.

Awesome-Colorful-LLM

Awesome-Colorful-LLM is a meticulously assembled anthology of vibrant multimodal research focusing on advancements propelled by large language models (LLMs) in domains such as Vision, Audio, Agent, Robotics, and Fundamental Sciences like Mathematics. The repository contains curated collections of works, datasets, benchmarks, projects, and tools related to LLMs and multimodal learning. It serves as a comprehensive resource for researchers and practitioners interested in exploring the intersection of language models and various modalities for tasks like image understanding, video pretraining, 3D modeling, document understanding, audio analysis, agent learning, robotic applications, and mathematical research.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.