julep

Deploy serverless AI workflows at scale. Firebase for AI agents

Stars: 6607

Julep is an advanced platform for creating stateful and functional AI apps powered by large language models. It offers features like statefulness by design, automatic function calling, production-ready deployment, cron-like asynchronous functions, 90+ built-in tools, and the ability to switch between different LLMs easily. Users can build AI applications without the need to write code for embedding, saving, and retrieving conversation history, and can connect to third-party applications using Composio. Julep simplifies the process of getting started with AI apps, whether they are conversational, functional, or agentic.

README:


Try Julep Today: Visit the Julep Website · Get started on the Julep Dashboard (free API key) · Read the Documentation

Julep is an open-source platform for building agent-based AI workflows that go far beyond simple chains of prompts. It lets you orchestrate complex, multi-step processes with Large Language Models (LLMs) and tools without managing any infrastructure. With Julep, you can create AI agents that remember past interactions and handle sophisticated tasks with branching logic, loops, parallel execution, and integration of external APIs. In short, Julep acts like a “Firebase for AI agents,” providing a robust backend for intelligent workflows at scale.

Key Features and Benefits:

- Persistent Memory: Build AI agents that maintain context and long-term memory across conversations, so they can learn and improve over time.

- Modular Workflows: Define complex tasks as modular steps (in YAML or code) with conditional logic, loops, and error handling. Julep’s workflow engine manages multi-step processes and decisions automatically.

- Tool Orchestration: Easily integrate external tools and APIs (web search, databases, third-party services, etc.) as part of your agent’s toolkit. Julep’s agents can invoke these tools to augment their capabilities, enabling Retrieval-Augmented Generation and more.

- Parallel & Scalable: Run multiple operations in parallel for efficiency, and let Julep handle scaling and concurrency under the hood. The platform is serverless, so it seamlessly scales workflows without extra devops overhead.

- Reliable Execution: Don’t worry about glitches – Julep provides built-in retries, self-healing steps, and robust error handling to keep long-running tasks on track. You also get real-time monitoring and logging to track progress.

- Easy Integration: Get started quickly with our SDKs for Python and Node.js, or use the Julep CLI for scripting. Julep’s REST API is available if you want to integrate directly into other systems.

Focus on your AI logic and creativity, while Julep takes care of the heavy lifting!
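To make the "Parallel & Scalable" and "Reliable Execution" ideas above concrete, here is a minimal standard-library sketch of retrying a flaky step while fanning it out over several inputs. It is not Julep's API or implementation: `with_retries`, `run_parallel`, and `flaky_upper` are hypothetical names invented for illustration, and a hosted engine would handle this for you.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def with_retries(step, attempts=3, delay=0.0):
    """Wrap a step so failures are retried a fixed number of times."""
    def wrapped(*args):
        last_error = None
        for _ in range(attempts):
            try:
                return step(*args)
            except Exception as error:  # a real engine would catch narrower errors
                last_error = error
                time.sleep(delay)
        raise RuntimeError(f"step failed after {attempts} attempts") from last_error
    return wrapped


def run_parallel(step, inputs, max_workers=4):
    """Run one step over many inputs concurrently, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(step, inputs))


# Hypothetical flaky step: fails the first time it sees each input.
_seen = set()


def flaky_upper(text):
    if text not in _seen:
        _seen.add(text)
        raise ConnectionError("simulated transient failure")
    return text.upper()


results = run_parallel(with_retries(flaky_upper), ["search", "summarize", "store"])
print(results)
```

Each input fails once and succeeds on the second attempt, so the caller only sees the final results. A step that never succeeds surfaces as a single `RuntimeError` once its attempts are exhausted.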

- Sign Up & API Key: First, sign up on the Julep Dashboard to obtain your API key (needed for authenticating your SDK calls).

- Install the SDK: Install the Julep SDK for your preferred language (Python or Node.js).

- Define Your Agent: Use the SDK or YAML to define an agent and its task workflow. For example, you can specify the agent’s memory, tools it can use, and a step-by-step task logic. (See the Quick Start in our docs for a detailed walkthrough.)

- Run a Workflow: Invoke your agent through the SDK to execute the task. The Julep platform will orchestrate the entire workflow in the cloud and manage the state, tool calls, and LLM interactions for you. You can check the agent’s output, monitor the execution on the dashboard, and iterate as needed.

That’s it! Your first AI agent can be up and running in minutes. For a complete tutorial, check out the Quick Start Guide in the documentation.
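As a rough picture of what "step-by-step task logic" with managed state can mean, the sketch below describes a task as plain data and runs it against a session object that accumulates history. Everything here is an assumption made for illustration: the step shapes (`set`, `log`, `foreach`, `if`), the `Session` class, and `run_task` are hypothetical and are not Julep's task schema or SDK. The Quick Start Guide has the real definitions.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical stand-in for persisted agent state (history plus variables)."""
    history: list = field(default_factory=list)
    variables: dict = field(default_factory=dict)


# A task as plain data: each step is "set", "log", "foreach", or "if".
# This shape is illustrative only and is not Julep's task schema.
TASK = [
    {"set": "topics", "value": ["memory", "tools"]},
    {"foreach": "topics", "do": {"log": "researching {item}"}},
    {"if": lambda v: len(v["topics"]) > 1,
     "then": {"log": "merging results"},
     "else": {"log": "single result"}},
]


def run_step(step, session, item=None):
    """Execute one step, recording its effect in the session."""
    if "set" in step:
        session.variables[step["set"]] = step["value"]
    elif "log" in step:
        session.history.append(step["log"].format(item=item))
    elif "foreach" in step:
        for element in session.variables[step["foreach"]]:
            run_step(step["do"], session, item=element)
    elif "if" in step:
        branch = "then" if step["if"](session.variables) else "else"
        run_step(step[branch], session, item=item)
    else:
        raise ValueError(f"unknown step: {step}")


def run_task(task, session):
    """Run every step in order and return the updated session."""
    for step in task:
        run_step(step, session)
    return session


session = run_task(TASK, Session())
print(session.history)
```

Passing the same `Session` into a second `run_task` call keeps the earlier history, which is a toy analogue of state carrying over between executions.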

Note: Julep also offers a command-line interface (CLI), currently in beta for Python, to manage workflows and agents. If you prefer a no-code approach or want to script common tasks, see the Julep CLI docs for details.

Looking to dive deeper? The Julep Documentation covers everything you need to master the platform – from core concepts (Agents, Tasks, Sessions, Tools) to advanced topics like agent memory management and architecture internals. Key resources include:

- Concept Guides: Learn about Julep’s architecture, how sessions and memory work, using tools, managing long conversations, and more.

- API & SDK Reference: Find detailed reference for all SDK methods and REST API endpoints to integrate Julep into your applications.

- Tutorials: Step-by-step guides for building real applications (e.g. a research agent that searches the web, a trip-planning assistant, or a chatbot with custom knowledge).

- Cookbook Recipes: Explore the Julep Cookbook for ready-made example workflows and agents. These recipes demonstrate common patterns and use cases – a great way to learn by example. Browse the cookbooks/ directory in this repository for sample agent definitions.

- IDE Integration: Access Julep documentation directly in your IDE! Perfect for getting instant answers while coding.

Join our growing community of developers and AI enthusiasts! Here are some ways to get involved and get support:

- Discord Community: Have questions or ideas? Join the conversation on our official Discord server to chat with the Julep team and other users. We’re happy to help with troubleshooting or brainstorm new use cases.

- GitHub Discussions and Issues: Feel free to use GitHub for reporting bugs, requesting features, or discussing implementation details. Check out the good first issues if you’d like to contribute – we welcome contributions of all kinds.

- Contributing: If you want to contribute code or improvements, please see our Contributing Guide for how to get started. We appreciate all PRs and feedback. By collaborating, we can make Julep even better!

Pro tip: Star our repo to stay updated – we’re constantly adding new features and examples.

Your contributions, big or small, are valuable to us. Let's build something amazing together!


Julep is offered under the Apache 2.0 License, which means it’s free to use in your own projects. See the LICENSE file for details. Enjoy building with Julep!


Alternative AI tools for julep

Similar Open Source Tools


tandem

Tandem is an open-source, local-first, privacy-focused AI workspace that runs entirely on your machine. It is inspired by early AI coworking research previews and is provider-agnostic. Its design emphasizes a zero-trust model, zero telemetry, cross-platform support, a modern stack, and developer superpowers for everyone. Features include folder-wide intelligence, multi-step automation, visual change review, visual permissions, complete undo, long-term memory, a skills system, document text extraction, a workspace Python venv, rich themes, execution planning, auto-updates, multiple specialized agent modes, multi-agent orchestration, project management, and various artifacts and outputs.

OpenOutreach

OpenOutreach is a self-hosted, open-source LinkedIn automation tool designed for B2B lead generation. It automates the entire outreach process in a stealthy, human-like way by discovering and enriching target profiles, ranking profiles using ML for smart prioritization, sending personalized connection requests, following up with custom messages after acceptance, and tracking everything in a built-in CRM with web UI. It offers features like undetectable behavior, fully customizable Python-based campaigns, local execution with CRM, easy deployment with Docker, and AI-ready templating for hyper-personalized messages.

LLMGA

LLMGA (Multimodal Large Language Model-based Generation Assistant) is a tool that leverages Large Language Models (LLMs) to assist users in image generation and editing. It provides detailed language generation prompts for precise control over Stable Diffusion (SD), resulting in more intricate and precise content in generated images. The tool curates a dataset for prompt refinement, similar image generation, inpainting & outpainting, and visual question answering. It offers a two-stage training scheme to optimize SD alignment and a reference-based restoration network to alleviate texture, brightness, and contrast disparities in image editing. LLMGA shows promising generative capabilities and enables wider applications in an interactive manner.

refact

This repository contains Refact WebUI for fine-tuning and self-hosting of code models, which can be used inside Refact plugins for code completion and chat. Users can fine-tune open-source code models, self-host them, download and upload LoRAs, use models for code completion and chat inside Refact plugins, shard models, host multiple small models on one GPU, and connect GPT models for chat using OpenAI and Anthropic keys. The repository provides a Docker container for running the self-hosted server and supports various models for completion, chat, and fine-tuning. Refact is free for individuals and small teams under the BSD-3-Clause license, with custom installation options available for GPU support. The community and support include contributing guidelines, GitHub issues for bugs, a community forum, Discord for chatting, and Twitter for product news and updates.

llmos

LLMos is an operating system designed for physical AI agents, providing a hybrid runtime environment where AI agents can perceive, reason, act on hardware, and evolve over time locally without cloud dependency. It supports natural-language programming, a dual-brain architecture (a fast instinct brain and a deep planner brain), markdown-as-code for defining agents and skills, swarm intelligence, and cognitive world models. The tool is built on a tech stack including Next.js, Electron, Python, and WebAssembly, and is structured around a dual-brain cognitive architecture, volume system, HAL for hardware abstraction, applet system for dynamic UI, and dreaming & evolution for robot improvement. The project is in Phase 1 (Foundation) and aims to move into Phase 2 (Dual-Brain & Local Intelligence), with contributions welcomed under the Apache 2.0 license by Evolving Agents Labs.

mcp-gateway-registry

The MCP Gateway & Registry is a unified, enterprise-ready platform that centralizes access to both MCP Servers and AI Agents using the Model Context Protocol (MCP). It serves as a Unified MCP Server Gateway, MCP Servers Registry, and Agent Registry & A2A Communication Hub. The platform integrates with external registries, providing a single control plane for tool access, agent orchestration, and communication patterns. It transforms the chaos of managing individual MCP server configurations into an organized approach with secure, governed access to curated servers and registered agents. The platform supports dynamic tool discovery, autonomous agent communication, and unified policies for server and agent access.

Skills-Manager

Skills Manager is a unified desktop application designed to centralize and manage AI coding assistant skills for tools like Claude Code, Codex, and Opencode. It offers smart synchronization, granular control, high performance, cross-platform support, multi-tool compatibility, custom tools integration, and a modern UI. Users can easily organize, sync, and share their skills across different AI tools, enhancing their coding experience and productivity.

QOwnNotes

QOwnNotes is an open source notepad with Markdown support and todo list manager for GNU/Linux, macOS, and Windows. It lets you write down your thoughts and edit or search for them later, including from mobile devices. Notes are stored as plain text markdown files and synced with Nextcloud's/ownCloud's file sync functionality. QOwnNotes offers features like multiple note folders, restoration of older versions and trashed notes, sub-string searching, customizable keyboard shortcuts, markdown highlighting, spellchecking, tabbing support, scripting support, encryption of notes, dark mode theme support, and more. It supports hierarchical note tagging, note subfolders, sharing notes on Nextcloud/ownCloud server, portable mode, Vim mode, distraction-free mode, full-screen mode, typewriter mode, Evernote and Joplin import, and is available in over 60 languages.

GPT4Point

GPT4Point is a unified framework for point-language understanding and generation. It aligns 3D point clouds with language, providing a comprehensive solution for tasks such as 3D captioning and controlled 3D generation. The project includes an automated point-language dataset annotation engine, a novel object-level point cloud benchmark, and a 3D multi-modality model. Users can train and evaluate models using the provided code and datasets, with a focus on improving models' understanding capabilities and facilitating the generation of 3D objects.

AudioMuse-AI

AudioMuse-AI is a deep learning-based tool for audio analysis and music generation. It provides a user-friendly interface for processing audio data and generating music compositions. The tool utilizes state-of-the-art machine learning algorithms to analyze audio signals and extract meaningful features for music generation. With AudioMuse-AI, users can explore the possibilities of AI in music creation and experiment with different styles and genres. Whether you are a music enthusiast, a researcher, or a developer, AudioMuse-AI offers a versatile platform for audio analysis and music generation.

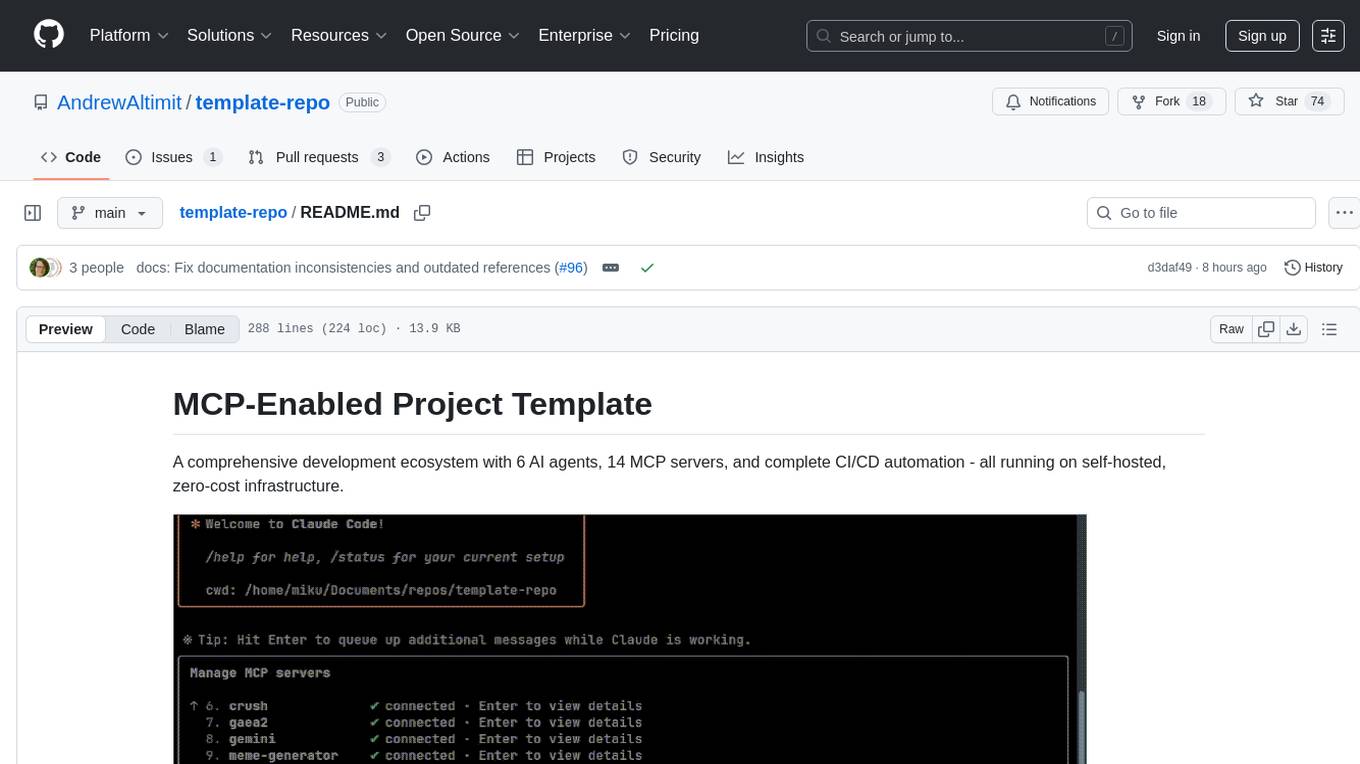

template-repo

The template-repo is a comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach, with all tools and operations running in Docker containers, zero external dependencies, self-hosted infrastructure, single maintainer design, and modular MCP architecture. The repo provides AI agents for development and automation, features 14 MCP servers for various tasks, and includes security measures, safety training, and sleeper detection system. It offers features like video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on maximum portability and consistency.

AgriTech

AgriTech is an AI-powered smart agriculture platform designed to assist farmers with crop recommendations, yield prediction, plant disease detection, and community-driven collaboration—enabling sustainable and data-driven farming practices. It offers AI-driven decision support for modern agriculture, early-stage plant disease detection, crop yield forecasting using machine learning models, and a collaborative ecosystem for farmers and stakeholders. The platform includes features like crop recommendation, yield prediction, disease detection, an AI chatbot for platform guidance and agriculture support, a farmer community, and shopkeeper listings. AgriTech's AI chatbot provides comprehensive support for farmers with features like platform guidance, agriculture support, decision making, image analysis, and 24/7 support. The tech stack includes frontend technologies like HTML5, CSS3, JavaScript, backend technologies like Python (Flask) and optional Node.js, machine learning libraries like TensorFlow, Scikit-learn, OpenCV, and database & DevOps tools like MySQL, MongoDB, Firebase, Docker, and GitHub Actions.

SAM

SAM is a native macOS AI assistant built with Swift and SwiftUI, designed for non-developers who want powerful tools in their everyday life. It provides real assistance, smart memory, voice control, image generation, and custom AI model training. SAM keeps your data on your Mac, supports multiple AI providers, and offers features for documents, creativity, writing, organization, learning, and more. It is privacy-focused, user-friendly, and accessible from various devices. SAM stands out with its privacy-first approach, intelligent memory, task execution capabilities, powerful tools, image generation features, custom AI model training, and flexible AI provider support.

EpicStaff

EpicStaff is a powerful project management tool designed to streamline team collaboration and task management. It provides a user-friendly interface for creating and assigning tasks, tracking progress, and communicating with team members in real-time. With features such as task prioritization, deadline reminders, and file sharing capabilities, EpicStaff helps teams stay organized and productive. Whether you're working on a small project or managing a large team, EpicStaff is the perfect solution to keep everyone on the same page and ensure project success.

ai-flow

AI Flow is an open-source, user-friendly UI application for connecting multiple AI models together, drawing on AI APIs such as OpenAI, StabilityAI and Replicate. In a nutshell, AI Flow provides a visual platform for crafting and managing AI-driven workflows, thereby facilitating diverse and dynamic AI interactions.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.