tambo

Generative UI SDK for React

Stars: 10896

Tambo AI is a React library that simplifies building AI assistants and agents by handling thread management, state persistence, streaming responses, and AI orchestration, and by providing a compatible React UI library. It removes React boilerplate for AI features, so developers can focus on the user experience using React hooks that integrate with their codebase.

README:

The open-source generative UI toolkit for React. Connect your components—Tambo handles streaming, state management, and MCP.

Tambo 1.0 is here! Read the announcement: Introducing Tambo: Generative UI for React

Tambo is a React toolkit for building agents that render UI (also known as generative UI).

Register your components with Zod schemas. The agent picks the right one and streams the props so users can interact with them. "Show me sales by region" renders your <Chart>. "Add a task" updates your <TaskBoard>.

https://github.com/user-attachments/assets/8381d607-b878-4823-8b24-ecb8053bef23

Tambo is a fullstack solution for adding generative UI to your app. You get a React SDK plus a backend that handles conversation state and agent execution.

1. Agent included — Tambo runs the LLM conversation loop for you. Bring your own API key (OpenAI, Anthropic, Gemini, Mistral, or any OpenAI-compatible provider). Works with agent frameworks like LangChain and Mastra, but they're not required.

2. Streaming infrastructure — Props stream to your components as the LLM generates them. Cancellation, error recovery, and reconnection are handled for you.

3. Tambo Cloud or self-host — Cloud is a hosted backend that manages conversation state and agent orchestration. Self-hosted runs the same backend on your infrastructure via Docker.
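
Prop streaming, mentioned in item 2, can be pictured as folding partial updates into a props object as they arrive. The following is a minimal sketch under stated assumptions, not Tambo's implementation: `PropPatch`, `applyPatch`, and `foldStream` are hypothetical names, and an in-memory array stands in for the network stream.

```typescript
// Hypothetical sketch: fold streamed prop fragments into one props object.
// None of these names come from the Tambo SDK.

type ChartProps = {
  type?: "line" | "bar" | "pie";
  data?: { name: string; value: number }[];
};

// Each patch carries only the fields the model has produced so far.
type PropPatch = Partial<ChartProps>;

// Merge one patch into the current props: scalars are replaced, rows appended.
function applyPatch(current: ChartProps, patch: PropPatch): ChartProps {
  const next: ChartProps = { ...current };
  if (patch.type !== undefined) {
    next.type = patch.type;
  }
  if (patch.data !== undefined) {
    next.data = (current.data || []).concat(patch.data);
  }
  return next;
}

// Return every intermediate state so a UI could re-render after each patch.
function foldStream(patches: PropPatch[]): ChartProps[] {
  const states: ChartProps[] = [];
  let current: ChartProps = {};
  for (const patch of patches) {
    current = applyPatch(current, patch);
    states.push(current);
  }
  return states;
}

// Simulated stream: the chart type arrives first, then rows one at a time.
const simulatedStream: PropPatch[] = [
  { type: "bar" },
  { data: [{ name: "EMEA", value: 120 }] },
  { data: [{ name: "APAC", value: 95 }] },
];

const renderStates = foldStream(simulatedStream);
```

Each entry in `renderStates` is a snapshot a component could render, which is why a chart can appear before all of its rows have been generated.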

Most software is built around a one-size-fits-all mental model. We built Tambo to help developers build software that adapts to users.

npm create tambo-app my-tambo-app # auto-initializes git + tambo setup

cd my-tambo-app

npm run dev

Tambo Cloud is a hosted backend that is free to start with and includes plenty of credits for building. Self-hosted runs on your own infrastructure.

Check out the pre-built component library for agent and generative UI primitives:

https://github.com/user-attachments/assets/6cbc103b-9cc7-40f5-9746-12e04c976dff

Or fork a template:

| Template | Description |
|---|---|
| AI Chat with Generative UI | Chat interface with dynamic component generation |
| AI Analytics Dashboard | Analytics dashboard with AI-powered visualization |

Tell the AI which components it can use. Zod schemas define the props. These schemas become LLM tool definitions—the agent calls them like functions and Tambo renders the result.

Render once in response to a message. Charts, summaries, data visualizations.

https://github.com/user-attachments/assets/3bd340e7-e226-4151-ae40-aab9b3660d8b

const components: TamboComponent[] = [
  {
    name: "Graph",
    description: "Displays data as charts using Recharts library",
    component: Graph,
    propsSchema: z.object({
      data: z.array(z.object({ name: z.string(), value: z.number() })),
      type: z.enum(["line", "bar", "pie"]),
    }),
  },
];
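
The idea that a registered component becomes a tool definition, which the agent then calls like a function, can be sketched in plain TypeScript. This is a simplified illustration rather than Tambo's internals: a hand-written field list stands in for the Zod schema, a string-returning function stands in for the React component, and `RegisteredComponent`, `toToolDefinition`, `handleToolCall`, and the `show_` prefix are all hypothetical.

```typescript
// Hypothetical sketch of "component schema -> tool definition -> render".
// A plain field list stands in for a Zod schema; nothing here is Tambo's API.

type FieldKind = "string" | "number" | "array";

interface RegisteredComponent {
  name: string;
  description: string;
  fields: { [prop: string]: FieldKind };
  // Stand-in for a React component: returns a text description of the render.
  render: (props: { [prop: string]: unknown }) => string;
}

interface ToolDefinition {
  name: string;
  description: string;
  parameters: { [prop: string]: FieldKind };
}

// What the model would be shown: one tool per registered component.
function toToolDefinition(c: RegisteredComponent): ToolDefinition {
  return { name: "show_" + c.name, description: c.description, parameters: c.fields };
}

function matchesKind(value: unknown, kind: FieldKind): boolean {
  if (kind === "array") {
    return Array.isArray(value);
  }
  return typeof value === kind;
}

// Route a tool call from the model back to the matching component.
function handleToolCall(
  registry: RegisteredComponent[],
  toolName: string,
  args: { [prop: string]: unknown }
): string {
  for (const c of registry) {
    if ("show_" + c.name !== toolName) {
      continue;
    }
    for (const prop of Object.keys(c.fields)) {
      const kind = c.fields[prop];
      if (kind === undefined || !matchesKind(args[prop], kind)) {
        return "error: invalid prop '" + prop + "' for " + c.name;
      }
    }
    return c.render(args);
  }
  return "error: unknown tool " + toolName;
}

const graphComponent: RegisteredComponent = {
  name: "Graph",
  description: "Displays data as charts",
  fields: { data: "array", type: "string" },
  render: (props) => "<Graph type=" + String(props.type) + ">",
};

const graphTool = toToolDefinition(graphComponent);
const rendered = handleToolCall([graphComponent], graphTool.name, {
  data: [{ name: "Q1", value: 10 }],
  type: "bar",
});
```

Validating arguments before rendering is the design point: the component only ever receives props that match the shape it registered.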

Persist and update as users refine requests. Shopping carts, spreadsheets, task boards.

https://github.com/user-attachments/assets/12d957cd-97f1-488e-911f-0ff900ef4062

const InteractableNote = withInteractable(Note, {
  componentName: "Note",
  description: "A note supporting title, content, and color modifications",
  propsSchema: z.object({
    title: z.string(),
    content: z.string(),
    color: z.enum(["white", "yellow", "blue", "green"]).optional(),
  }),
});

Docs: generative components, interactable components
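
The difference between a one-shot generative component and an interactable one is that the latter keeps its props and accepts later patches. A minimal sketch of that idea follows, assuming an in-memory store keyed by instance id; `noteStore` and `updateNote` are hypothetical names and do not reflect how `withInteractable` works internally.

```typescript
// Hypothetical sketch: an interactable component keeps its props between
// messages, and later requests patch them by id. Not the Tambo implementation.

type NoteColor = "white" | "yellow" | "blue" | "green";

interface NoteProps {
  title: string;
  content: string;
  color?: NoteColor;
}

const ALLOWED_COLORS: NoteColor[] = ["white", "yellow", "blue", "green"];

// Persisted props, keyed by the id given to each rendered instance.
const noteStore: { [id: string]: NoteProps } = {
  "note-1": { title: "My Note", content: "Start writing..." },
};

// Apply an update requested by the agent; reject unknown ids and bad colors.
function updateNote(
  id: string,
  patch: { title?: string; content?: string; color?: string }
): boolean {
  const existing = noteStore[id];
  if (existing === undefined) {
    return false;
  }
  let color = existing.color;
  if (patch.color !== undefined) {
    const idx = ALLOWED_COLORS.indexOf(patch.color as NoteColor);
    if (idx === -1) {
      return false;
    }
    color = ALLOWED_COLORS[idx];
  }
  const next: NoteProps = {
    title: patch.title !== undefined ? patch.title : existing.title,
    content: patch.content !== undefined ? patch.content : existing.content,
  };
  if (color !== undefined) {
    next.color = color;
  }
  noteStore[id] = next;
  return true;
}

// "Make my note yellow" succeeds; "make it red" is outside the enum and fails.
const colorAccepted = updateNote("note-1", { color: "yellow" });
const colorRejected = updateNote("note-1", { color: "red" });
```

A rejected patch leaves the stored props untouched, so the note stays yellow after the second call.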

Wrap your app with TamboProvider. You must provide either userKey or userToken to identify the thread owner.

<TamboProvider
  apiKey={process.env.NEXT_PUBLIC_TAMBO_API_KEY!}
  userKey={currentUserId}
  components={components}
>
  <Chat />
  <InteractableNote id="note-1" title="My Note" content="Start writing..." />
</TamboProvider>

Use userKey for server-side or trusted environments. Use userToken (OAuth access token) for client-side apps where the token contains the user identity. See User Authentication for details.

Docs: provider options

useTambo() is the primary hook — it gives you messages, streaming state, and thread management. useTamboThreadInput() handles user input and message submission.

const { messages, isStreaming } = useTambo();
const { value, setValue, submit, isPending } = useTamboThreadInput();

Docs: threads and messages, streaming status, full tutorial

Connect to Linear, Slack, databases, or your own MCP servers. Tambo supports the full MCP protocol: tools, prompts, elicitations, and sampling.

import { MCPTransport } from "@tambo-ai/react/mcp";

const mcpServers = [
  {
    name: "filesystem",
    url: "http://localhost:8261/mcp",
    transport: MCPTransport.HTTP,
  },
];

<TamboProvider
  apiKey={process.env.NEXT_PUBLIC_TAMBO_API_KEY!}
  userKey={currentUserId}
  components={components}
  mcpServers={mcpServers}
>
  <App />
</TamboProvider>;

https://github.com/user-attachments/assets/c7a13915-8fed-4758-be1b-30a60fad0cda

Docs: MCP integration
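
For readers unfamiliar with what an MCP "tool" looks like on the wire, MCP messages are JSON-RPC 2.0, with `tools/list` and `tools/call` as the tool-related methods. The sketch below only builds those request bodies. How Tambo sends them is not described here, and `listToolsRequest`, `callToolRequest`, and the `read_file` tool name are hypothetical.

```typescript
// Hypothetical sketch: the JSON-RPC 2.0 message shape used by MCP tool calls.
// How Tambo sends these internally is not shown in the source.

interface McpRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: { [key: string]: unknown };
}

let nextRequestId = 1;

// Ask a server which tools it exposes.
function listToolsRequest(): McpRequest {
  return { jsonrpc: "2.0", id: nextRequestId++, method: "tools/list" };
}

// Invoke one tool by name with JSON arguments.
function callToolRequest(toolName: string, args: { [key: string]: unknown }): McpRequest {
  return {
    jsonrpc: "2.0",
    id: nextRequestId++,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
}

// "read_file" is an invented tool name for a filesystem-style server.
const listBody = JSON.stringify(listToolsRequest());
const callBody = JSON.stringify(callToolRequest("read_file", { path: "README.md" }));
```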

Sometimes you need functions that run in the browser. DOM manipulation, authenticated fetches, accessing React state. Define them as tools and the AI can call them.

const tools: TamboTool[] = [
  {
    name: "getWeather",
    description: "Fetches weather for a location",
    tool: async (params: { location: string }) =>
      fetch(`/api/weather?q=${encodeURIComponent(params.location)}`).then((r) =>
        r.json(),
      ),
    inputSchema: z.object({
      location: z.string(),
    }),
    outputSchema: z.object({
      temperature: z.number(),
      condition: z.string(),
      location: z.string(),
    }),
  },
];

<TamboProvider
  apiKey={process.env.NEXT_PUBLIC_TAMBO_API_KEY!}
  userKey={currentUserId}
  tools={tools}
  components={components}
>
  <App />
</TamboProvider>;

Docs: local tools
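
The role of `inputSchema` and `outputSchema` can be illustrated with a small validation wrapper. This is a sketch under assumptions, not the SDK's behavior: plain type tags replace Zod, a synchronous stub replaces the `fetch` call so the example runs without a network, and `LocalTool`, `shapeErrors`, and `callLocalTool` are hypothetical names.

```typescript
// Hypothetical sketch: check a tool call against its input and output shapes.
// Plain type tags stand in for Zod schemas; the tool body is a stub, not a fetch.

type Primitive = "string" | "number";
type Shape = { [field: string]: Primitive };
type Fields = { [field: string]: unknown };

interface LocalTool {
  name: string;
  inputShape: Shape;
  outputShape: Shape;
  run: (args: Fields) => Fields;
}

// Return the names of fields that are missing or have the wrong type.
function shapeErrors(shape: Shape, value: Fields): string[] {
  const errors: string[] = [];
  for (const field of Object.keys(shape)) {
    if (typeof value[field] !== shape[field]) {
      errors.push(field);
    }
  }
  return errors;
}

type ToolResult =
  | { ok: true; output: Fields }
  | { ok: false; stage: "input" | "output"; fields: string[] };

// Validate arguments, run the tool, then validate what it returned.
function callLocalTool(tool: LocalTool, args: Fields): ToolResult {
  const inputErrors = shapeErrors(tool.inputShape, args);
  if (inputErrors.length > 0) {
    return { ok: false, stage: "input", fields: inputErrors };
  }
  const output = tool.run(args);
  const outputErrors = shapeErrors(tool.outputShape, output);
  if (outputErrors.length > 0) {
    return { ok: false, stage: "output", fields: outputErrors };
  }
  return { ok: true, output: output };
}

const weatherTool: LocalTool = {
  name: "getWeather",
  inputShape: { location: "string" },
  outputShape: { temperature: "number", condition: "string", location: "string" },
  // Stub result; a real tool would call an API here.
  run: (args) => ({ temperature: 18, condition: "cloudy", location: args.location }),
};

const goodCall = callLocalTool(weatherTool, { location: "Lisbon" });
const badCall = callLocalTool(weatherTool, { location: 42 });
```

Reporting the failing stage separately makes it clear whether the model sent bad arguments or the tool returned something unexpected.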

Additional context lets you pass metadata, such as user state, app settings, or the current page, so the AI can give better responses. User authentication passes tokens from your auth provider. Suggestions generates clickable prompts based on what users are doing.

<TamboProvider
  apiKey={process.env.NEXT_PUBLIC_TAMBO_API_KEY!}
  userToken={userToken}
  contextHelpers={{
    selectedItems: () => ({
      key: "selectedItems",
      value: selectedItems.map((i) => i.name).join(", "),
    }),
    currentPage: () => ({ key: "page", value: window.location.pathname }),
  }}
/>

const { suggestions, accept } = useTamboSuggestions({ maxSuggestions: 3 });

suggestions.map((s) => (
  <button key={s.id} onClick={() => accept(s)}>
    {s.title}
  </button>
));

Docs: additional context, user authentication, suggestions
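
Context helpers are functions rather than static values, which suggests they are evaluated when a message is sent so that the values reflect current app state. A rough sketch of that evaluation step follows. `collectContext` is a hypothetical name, the `null` opt-out is an assumption of this sketch, and plain variables stand in for React state and `window.location`.

```typescript
// Hypothetical sketch: resolve context helpers into key/value pairs right
// before a message is sent. Names here are illustrative, not Tambo's API.

interface ContextEntry {
  key: string;
  value: string;
}

type ContextHelper = () => ContextEntry | null;

// Call every helper at send time so the values reflect current app state.
function collectContext(helperMap: { [helperName: string]: ContextHelper }): ContextEntry[] {
  const entries: ContextEntry[] = [];
  for (const helperName of Object.keys(helperMap)) {
    const helper = helperMap[helperName];
    const entry = helper ? helper() : null;
    if (entry !== null) {
      entries.push(entry);
    }
  }
  return entries;
}

// Stand-ins for app state that would normally live in React state or the URL.
const selectedItems = [{ name: "Invoice 12" }, { name: "Invoice 15" }];
let currentPath = "/billing";

const demoHelpers: { [helperName: string]: ContextHelper } = {
  selectedItems: () => ({
    key: "selectedItems",
    value: selectedItems.map((i) => i.name).join(", "),
  }),
  currentPage: () => ({ key: "page", value: currentPath }),
  // In this sketch a helper opts out by returning null.
  draft: () => null,
};

const contextAtSend = collectContext(demoHelpers);
```

Because the helpers run on every call, changing `currentPath` and calling `collectContext` again yields the new page without re-registering anything.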

Supported LLM providers: OpenAI, Anthropic, Cerebras, Google Gemini, Mistral, and any OpenAI-compatible provider. Missing one? Let us know.

| Feature | Tambo | Vercel AI SDK | CopilotKit | Assistant UI |
|---|---|---|---|---|
| Component selection | AI decides which components to render | Manual tool-to-component mapping | Via agent frameworks (LangGraph) | Chat-focused tool UI |
| MCP integration | Built-in | Experimental (v4.2+) | Recently added | Requires AI SDK v5 |
| Persistent stateful components | Yes | No | Shared state patterns | No |
| Client-side tool execution | Declarative, automatic | Manual via onToolCall | Agent-side only | No |
| Self-hostable | MIT (SDK + backend) | Apache 2.0 (SDK only) | MIT | MIT |
| Hosted option | Tambo Cloud | No | CopilotKit Cloud | Assistant Cloud |
| Best for | Full app UI control | Streaming and tool abstractions | Multi-agent workflows | Chat interfaces |

Join the Discord to chat with other developers and the core team.

Interested in contributing? Read the Contributing Guide.

Join the conversation on Twitter and follow @tambo_ai.

MIT unless otherwise noted. Some workspaces (like apps/api) are Apache-2.0.

For AI/LLM agents: docs.tambo.co/llms.txt

Alternative AI tools for tambo

Similar Open Source Tools

node-sdk

The ChatBotKit Node SDK is a JavaScript-based platform for building conversational AI bots and agents. It offers easy setup, serverless compatibility, modern framework support, customizability, and multi-platform deployment. With capabilities like multi-modal and multi-language support, conversation management, chat history review, custom datasets, and various integrations, this SDK enables users to create advanced chatbots for websites, mobile apps, and messaging platforms.

logicstamp-context

LogicStamp Context is a static analyzer that extracts deterministic component contracts from TypeScript codebases, providing structured architectural context for AI coding assistants. It helps AI assistants understand architecture by extracting props, hooks, and dependencies without implementation noise. The tool works with React, Next.js, Vue, Express, and NestJS, and is compatible with various AI assistants like Claude, Cursor, and MCP agents. It offers features like watch mode for real-time updates, breaking change detection, and dependency graph creation. LogicStamp Context is a security-first tool that protects sensitive data, runs locally, and is non-opinionated about architectural decisions.

trpc-agent-go

A powerful Go framework for building intelligent agent systems with large language models (LLMs), hierarchical planners, memory, telemetry, and a rich tool ecosystem. tRPC-Agent-Go enables the creation of autonomous or semi-autonomous agents that reason, call tools, collaborate with sub-agents, and maintain long-term state. The framework provides detailed documentation, examples, and tools for accelerating the development of AI applications.

agentops

AgentOps is a toolkit for evaluating and developing robust and reliable AI agents. It provides benchmarks, observability, and replay analytics to help developers build better agents. AgentOps is in open beta. Key features include:

- Session replays in 3 lines of code: initialize the AgentOps client and automatically get analytics on every LLM call.
- Time travel debugging (coming soon).
- Agent Arena (coming soon).
- Callback handlers: AgentOps works seamlessly with applications built using LangChain and LlamaIndex.

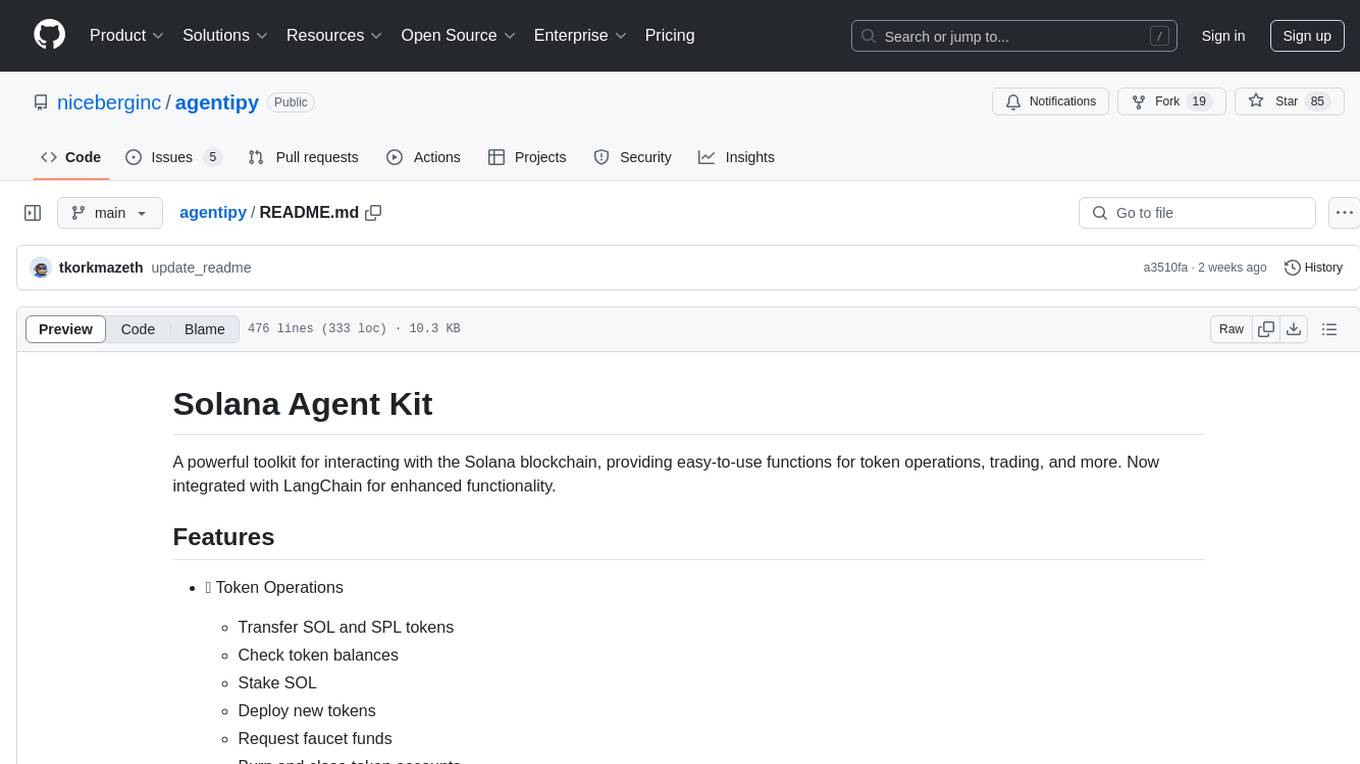

agentipy

Agentipy is a powerful toolkit for interacting with the Solana blockchain, providing easy-to-use functions for token operations, trading, yield farming, LangChain integration, performance tracking, token data retrieval, pump & fun token launching, Meteora DLMM pool creation, and more. It offers features like token transfers, balance checks, staking, deploying new tokens, requesting faucet funds, trading with customizable slippage, yield farming with Lulo, and accessing LangChain tools for enhanced blockchain interactions. Users can also track current transactions per second (TPS), fetch token data by ticker or address, launch pump & fun tokens, create Meteora DLMM pools, buy/sell tokens with Raydium liquidity, and burn/close token accounts individually or in batches.

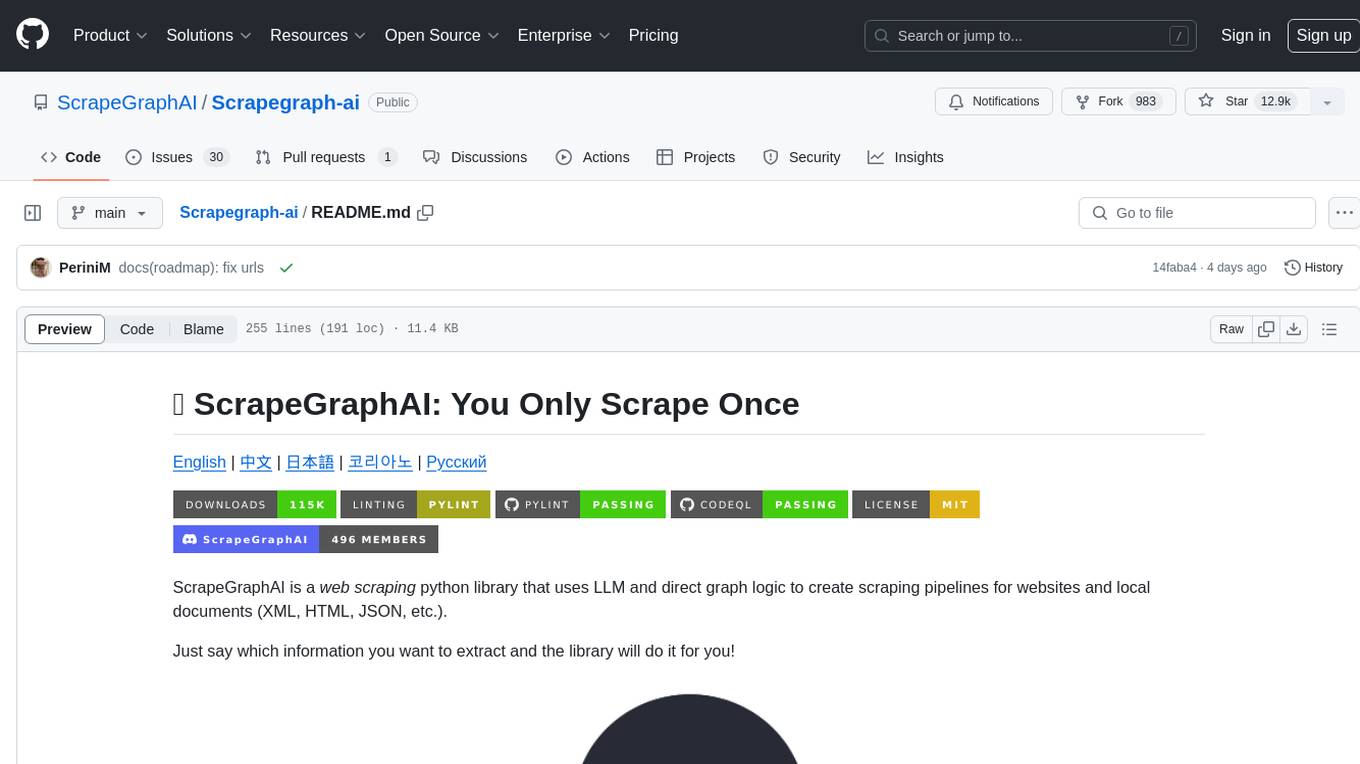

Scrapegraph-ai

ScrapeGraphAI is a web scraping Python library that utilizes LLM and direct graph logic to create scraping pipelines for websites and local documents. It offers various standard scraping pipelines like SmartScraperGraph, SearchGraph, SpeechGraph, and ScriptCreatorGraph. Users can extract information by specifying prompts and input sources. The library supports different LLM APIs such as OpenAI, Groq, Azure, and Gemini, as well as local models using Ollama. ScrapeGraphAI is designed for data exploration and research purposes, providing a versatile tool for extracting information from web pages and generating outputs like Python scripts, audio summaries, and search results.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

lingo.dev

Replexica AI automates software localization end-to-end, producing authentic translations instantly across 60+ languages. Teams can do localization 100x faster with state-of-the-art quality, reaching more paying customers worldwide. The tool offers a GitHub Action for CI/CD automation and supports various formats like JSON, YAML, CSV, and Markdown. With lightning-fast AI localization, auto-updates, native quality translations, developer-friendly CLI, and scalability for startups and enterprise teams, Replexica is a top choice for efficient and effective software localization.

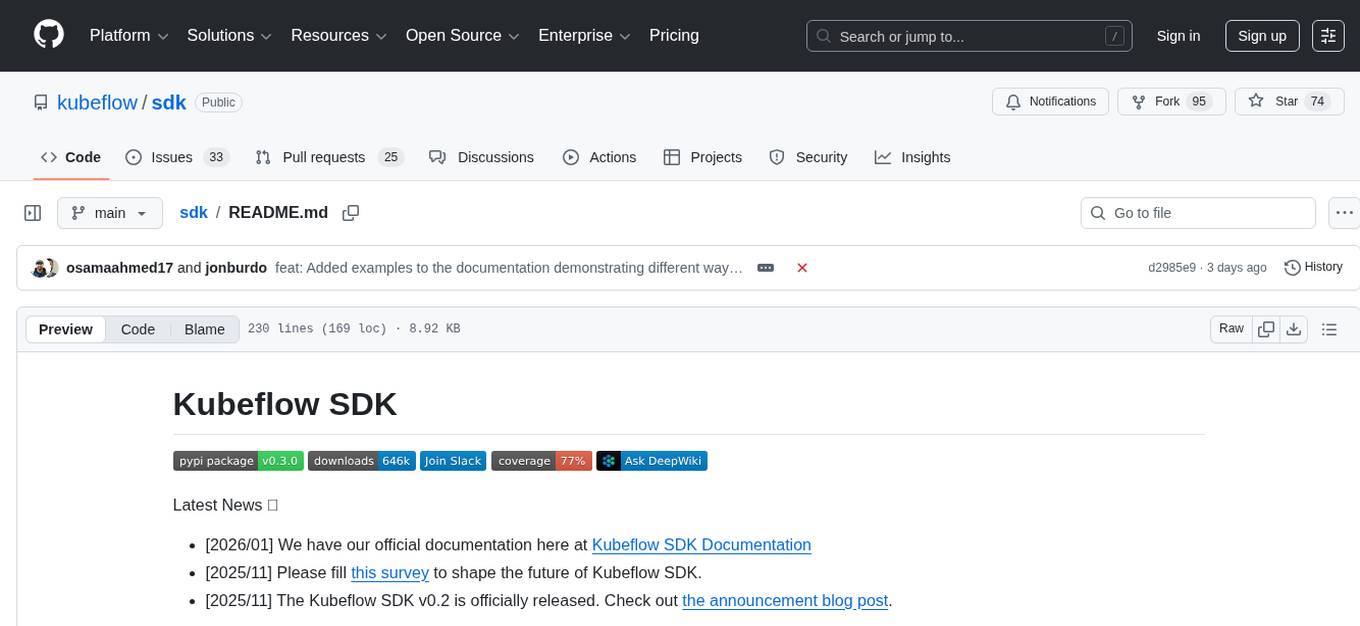

sdk

The Kubeflow SDK is a set of unified Pythonic APIs that simplify running AI workloads at any scale without needing to learn Kubernetes. It offers consistent APIs across the Kubeflow ecosystem, enabling users to focus on building AI applications rather than managing complex infrastructure. The SDK provides a unified experience, simplifies AI workloads, is built for scale, allows rapid iteration, and supports local development without a Kubernetes cluster.

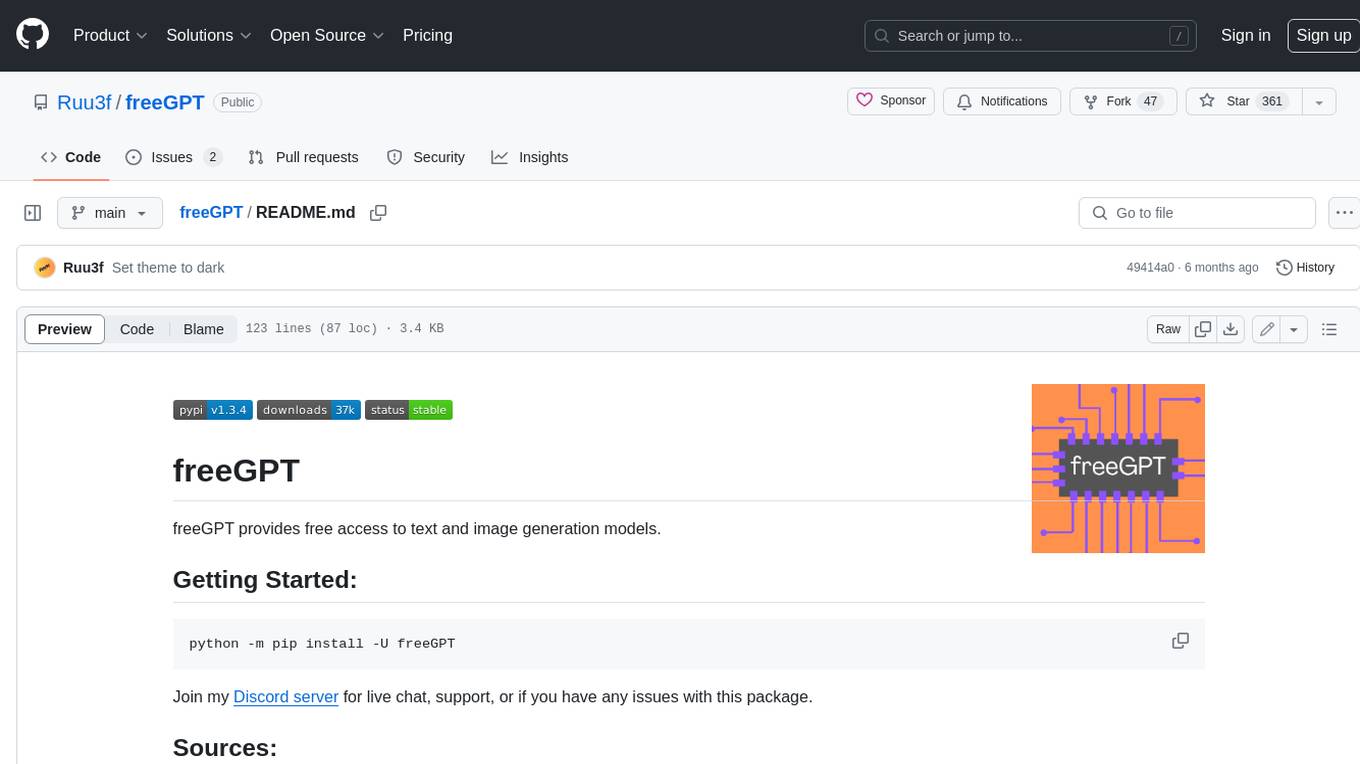

freeGPT

freeGPT provides free access to text and image generation models. It supports various models, including gpt3, gpt4, alpaca_7b, falcon_40b, prodia, and pollinations. The tool offers both asynchronous and synchronous interfaces for text completion and image generation. It also features an interactive Discord bot that provides access to all the models in the repository. The tool is easy to use and can be integrated into various applications.

pixeltable

Pixeltable is a Python library designed for ML Engineers and Data Scientists to focus on exploration, modeling, and app development without the need to handle data plumbing. It provides a declarative interface for working with text, images, embeddings, and video, enabling users to store, transform, index, and iterate on data within a single table interface. Pixeltable is persistent, acting as a database unlike in-memory Python libraries such as Pandas. It offers features like data storage and versioning, combined data and model lineage, indexing, orchestration of multimodal workloads, incremental updates, and automatic production-ready code generation. The tool emphasizes transparency, reproducibility, cost-saving through incremental data changes, and seamless integration with existing Python code and libraries.

BrowserAI

BrowserAI is a production-ready tool that allows users to run AI models directly in the browser, offering simplicity, speed, privacy, and open-source capabilities. It provides WebGPU acceleration for fast inference, zero server costs, offline capability, and developer-friendly features. Perfect for web developers, companies seeking privacy-conscious AI solutions, researchers experimenting with browser-based AI, and hobbyists exploring AI without infrastructure overhead. The tool supports various AI tasks like text generation, speech recognition, and text-to-speech, with pre-configured popular models ready to use. It offers a simple SDK with multiple engine support and seamless switching between MLC and Transformers engines.

alphora

Alphora is a full-stack framework for building production AI agents, providing agent orchestration, prompt engineering, tool execution, memory management, streaming, and deployment with an async-first, OpenAI-compatible design. It offers features like agent derivation, reasoning-action loop, async streaming, visual debugger, OpenAI compatibility, multimodal support, tool system with zero-config tools and type safety, prompt engine with dynamic prompts, memory and storage management, sandbox for secure execution, deployment as API, and more. Alphora allows users to build sophisticated AI agents easily and efficiently.

x

Ant Design X is a tool for crafting AI-driven interfaces effortlessly. It is built on the best practices of enterprise-level AI products, offering flexible and diverse atomic components for various AI dialogue scenarios. The tool provides out-of-the-box model integration with inference services compatible with OpenAI standards. It also enables efficient management of conversation data flows, supports rich template options, complete TypeScript support, and advanced theme customization. Ant Design X is designed to enhance development efficiency and deliver exceptional AI interaction experiences.

adk-rust

ADK-Rust is a comprehensive and production-ready Rust framework for building AI agents. It features type-safe agent abstractions with async execution and event streaming, multiple agent types including LLM agents, workflow agents, and custom agents, realtime voice agents with bidirectional audio streaming, a tool ecosystem with function tools, Google Search, and MCP integration, production features like session management, artifact storage, memory systems, and REST/A2A APIs, and a developer-friendly experience with interactive CLI, working examples, and comprehensive documentation. The framework follows a clean layered architecture and is production-ready and actively maintained.

For similar tasks

dust

Dust is a platform that provides customizable and secure AI assistants to amplify your team's potential. With Dust, you can build and deploy AI assistants that are tailored to your specific needs, without the need for extensive technical expertise. Dust's platform is easy to use and provides a variety of features to help you get started quickly, including a library of pre-built blocks, a developer platform, and an API reference.

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

django-ai-assistant

Combine the power of LLMs with Django's productivity to build intelligent applications. Let AI Assistants call methods from Django's side and do anything your users need! Use AI Tool Calling and RAG with Django to easily build state of the art AI Assistants.

Olares

Olares is an open-source sovereign cloud OS designed for local AI, enabling users to build their own AI assistants, sync data across devices, self-host their workspace, stream media, and more within a sovereign cloud environment. Users can effortlessly run leading AI models, deploy open-source AI apps, access AI apps and models anywhere, and benefit from integrated AI for personalized interactions. Olares offers features like edge AI, personal data repository, self-hosted workspace, private media server, smart home hub, and user-owned decentralized social media. The platform provides enterprise-grade security, secure application ecosystem, unified file system and database, single sign-on, AI capabilities, built-in applications, seamless access, and development tools. Olares is compatible with Linux, Raspberry Pi, Mac, and Windows, and offers a wide range of system-level applications, third-party components and services, and additional libraries and components.

helix-db

HelixDB is a database designed specifically for AI applications, providing a single platform to manage all components needed for AI applications. It supports graph + vector data model and also KV, documents, and relational data. Key features include built-in tools for MCP, embeddings, knowledge graphs, RAG, security, logical isolation, and ultra-low latency. Users can interact with HelixDB using the Helix CLI tool and SDKs in TypeScript and Python. The roadmap includes features like organizational auth, server code improvements, 3rd party integrations, educational content, and binary quantisation for better performance. Long term projects involve developing in-house tools for knowledge graph ingestion, graph-vector storage engine, and network protocol & serdes libraries.

gemini-ai

Gemini AI is a Ruby Gem designed to provide low-level access to Google's generative AI services through Vertex AI, Generative Language API, or AI Studio. It allows users to interact with Gemini to build abstractions on top of it. The Gem provides functionalities for tasks such as generating content, embeddings, predictions, and more. It supports streaming capabilities, server-sent events, safety settings, system instructions, JSON format responses, and tools (functions) calling. The Gem also includes error handling, development setup, publishing to RubyGems, updating the README, and references to resources for further learning.

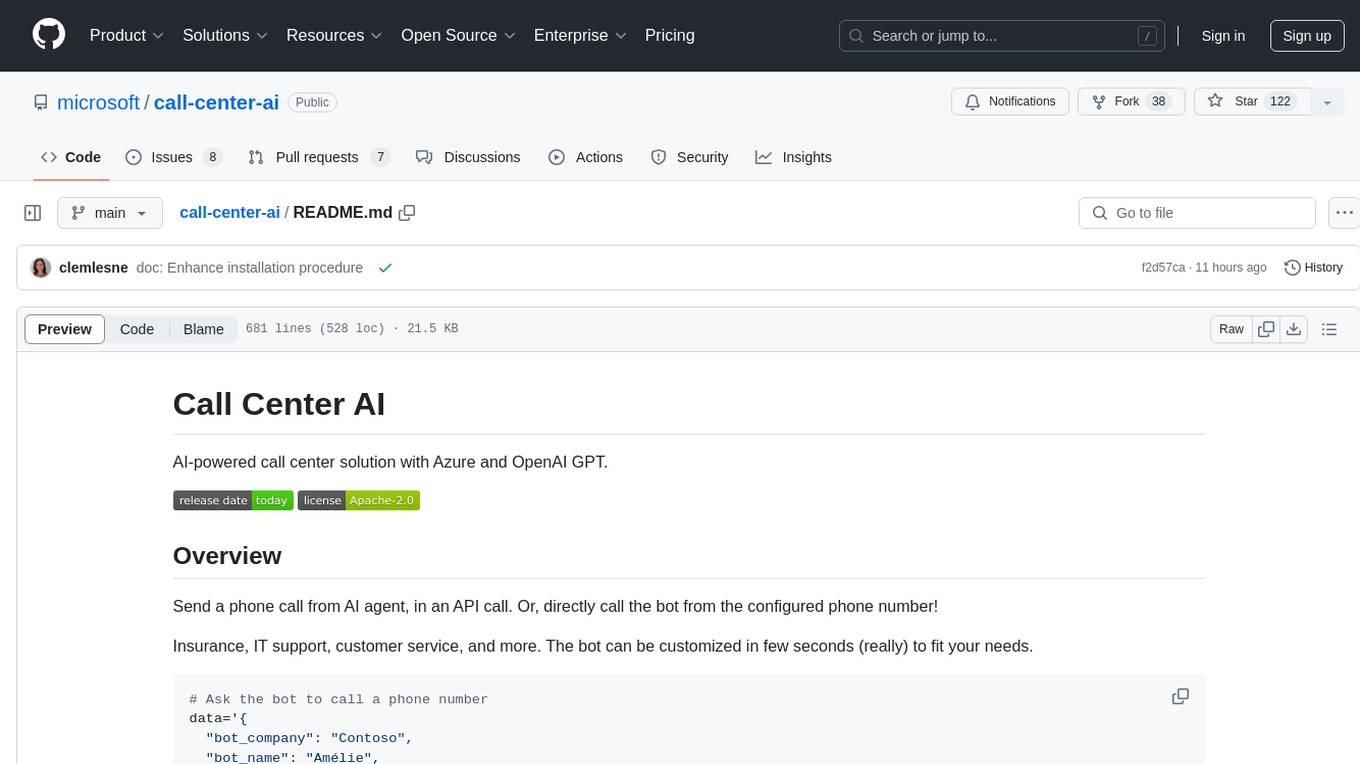

call-center-ai

Call Center AI is an AI-powered call center solution leveraging Azure and OpenAI GPT. It allows for AI agent-initiated phone calls or direct calls to the bot from a configured phone number. The bot is customizable for various industries like insurance, IT support, and customer service, with features such as accessing claim information, conversation history, language change, SMS sending, and more. The project is a proof of concept showcasing the integration of Azure Communication Services, Azure Cognitive Services, and Azure OpenAI for an automated call center solution.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.