ElevenLabs-DotNet

A Non-Official ElevenLabs RESTful API Client for dotnet


ElevenLabs-DotNet is a non-official ElevenLabs voice synthesis RESTful client that converts text to speech. The library targets .NET 8.0 and above and works across console apps, WinForms, WPF, and ASP.NET, on Windows, Linux, and Mac. Users can authenticate by passing API keys directly, from a configuration file, or from system environment variables. The library supports text-to-speech conversion, streaming text to speech, accessing voices, dubbing audio or video files, generating sound effects, managing the history of synthesized audio clips, and accessing user information and subscription status.


A non-official ElevenLabs voice synthesis RESTful client.

I am not affiliated with ElevenLabs, and an account with API access is required.

All copyrights, trademarks, logos, and assets are the property of their respective owners.

- This library targets .NET 8.0 and above.

- It should work across console apps, WinForms, WPF, ASP.NET, etc.

- It should also work across Windows, Linux, and Mac.

Install the ElevenLabs-DotNet package from NuGet. Here's how via the command line:

PowerShell:

Install-Package ElevenLabs-DotNet

dotnet CLI:

dotnet add package ElevenLabs-DotNet

Looking to use ElevenLabs in the Unity game engine? Check out our Unity package, com.rest.elevenlabs, on OpenUPM.

There are 3 ways to provide your API keys, in order of precedence:

- Pass keys directly with constructor

- Load key from configuration file

- Use System Environment Variables
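The precedence order above can be sketched in plain C#. This is a hypothetical illustration, not the library's implementation: the helper name ResolveApiKey is invented here, and it assumes the configuration file is JSON with an apiKey property (the format shown later in this README) and that ELEVEN_LABS_API_KEY is the fallback environment variable.

```csharp
using System;
using System.IO;
using System.Text.Json;

// Hypothetical helper (not part of ElevenLabs-DotNet): resolves an API key
// using the precedence constructor argument > configuration file > environment.
string? ResolveApiKey(string? explicitKey, string? configPath)
{
    // 1. A key passed directly always wins.
    if (!string.IsNullOrWhiteSpace(explicitKey))
    {
        return explicitKey;
    }

    // 2. Otherwise, try the JSON configuration file (assumed "apiKey" property).
    if (configPath is not null && File.Exists(configPath))
    {
        using var doc = JsonDocument.Parse(File.ReadAllText(configPath));
        if (doc.RootElement.TryGetProperty("apiKey", out var apiKey) &&
            apiKey.ValueKind == JsonValueKind.String)
        {
            return apiKey.GetString();
        }
    }

    // 3. Finally, fall back to the environment variable.
    return Environment.GetEnvironmentVariable("ELEVEN_LABS_API_KEY");
}

// Usage: write a throwaway config file and resolve keys against it.
var configFile = Path.Combine(Path.GetTempPath(), $"{Guid.NewGuid():N}.elevenlabs");
File.WriteAllText(configFile, "{ \"apiKey\": \"from-config\" }");

Console.WriteLine(ResolveApiKey("from-constructor", configFile)); // from-constructor
Console.WriteLine(ResolveApiKey(null, configFile));               // from-config
File.Delete(configFile);
```

Returning at the first source that yields a key keeps the precedence explicit and makes each fallback easy to test in isolation.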

var api = new ElevenLabsClient("yourApiKey");

Or create an ElevenLabsAuthentication object manually:

var api = new ElevenLabsClient(new ElevenLabsAuthentication("yourApiKey"));

Attempts to load API keys from a configuration file, by default .elevenlabs in the current directory, optionally traversing up the directory tree or looking in the user's home directory.
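A minimal sketch of that lookup, assuming the search checks the starting directory, then each parent directory, and finally the user's home directory. FindConfigFile is a hypothetical name, and the real library may order or scope the search differently.

```csharp
using System;
using System.IO;

// Hypothetical sketch (not the library's code): look for the config file in
// startDirectory, optionally walk up through its parents, then try the home directory.
string? FindConfigFile(string startDirectory, string fileName = ".elevenlabs", bool searchUp = true)
{
    DirectoryInfo? directory = new DirectoryInfo(startDirectory);

    while (directory is not null)
    {
        var candidate = Path.Combine(directory.FullName, fileName);
        if (File.Exists(candidate))
        {
            return candidate;
        }

        if (!searchUp)
        {
            break;
        }

        // DirectoryInfo.Parent is null once the root has been reached.
        directory = directory.Parent;
    }

    // Fall back to the user's home directory.
    var home = Environment.GetFolderPath(Environment.SpecialFolder.UserProfile);
    var homeCandidate = Path.Combine(home, fileName);
    return File.Exists(homeCandidate) ? homeCandidate : null;
}

Console.WriteLine(FindConfigFile(Directory.GetCurrentDirectory()) ?? "no .elevenlabs file found");
```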

To create a configuration file, create a new text file named .elevenlabs containing:

{

"apiKey": "yourApiKey"

}

You can also load the file directly from a known path by calling a static method on ElevenLabsAuthentication:

var api = new ElevenLabsClient(ElevenLabsAuthentication.LoadFromDirectory("your/path/to/.elevenlabs"));

Use your system's environment variables to specify an API key:

- Use ELEVEN_LABS_API_KEY for your API key.

var api = new ElevenLabsClient(ElevenLabsAuthentication.LoadFromEnv());

Using either the ElevenLabs-DotNet or com.rest.elevenlabs packages directly in your front-end app may expose your API keys and other sensitive information. To mitigate this risk, set up an intermediate API that makes requests to ElevenLabs on behalf of your front-end app. This library can be used for both front-end and intermediary host configurations, ensuring secure communication with the ElevenLabs API.

In the front-end example, you will need to securely authenticate your users using your preferred OAuth provider. Once a user is authenticated, exchange your custom auth token for your API key on the backend.

Follow these steps:

- Set up a new project using either the ElevenLabs-DotNet or com.rest.elevenlabs packages.

- Authenticate users with your OAuth provider.

- After successful authentication, create a new ElevenLabsAuthentication object and pass in the custom token.

- Create a new ElevenLabsClientSettings object and specify the domain where your intermediate API is located.

- Pass your new auth and settings objects to the ElevenLabsClient constructor when you create the client instance.

Here's an example of how to set up the front end:

var authToken = await LoginAsync(); // LoginAsync stands for your own OAuth login flow

var auth = new ElevenLabsAuthentication(authToken);

var settings = new ElevenLabsClientSettings(domain: "api.your-custom-domain.com");

var api = new ElevenLabsClient(auth, settings);

This setup allows your front-end application to securely communicate with your backend, which uses ElevenLabs-DotNet-Proxy to forward requests to the ElevenLabs API. This keeps your ElevenLabs API keys and other sensitive information secure throughout the process.

In this example, we demonstrate how to set up and use ElevenLabsProxyStartup in a new ASP.NET Core web app. The proxy server will handle authentication and forward requests to the ElevenLabs API, ensuring that your API keys and other sensitive information remain secure.

- Create a new ASP.NET Core minimal web API project.

- Add the ElevenLabs-DotNet-Proxy NuGet package to your project.

  - PowerShell install: Install-Package ElevenLabs-DotNet-Proxy

  - dotnet install: dotnet add package ElevenLabs-DotNet-Proxy

  - Manually editing .csproj: <PackageReference Include="ElevenLabs-DotNet-Proxy" />

- Create a new class that inherits from AbstractAuthenticationFilter and override the ValidateAuthenticationAsync method. This implements the IAuthenticationFilter that you will use to check the user's session token against your internal server.

- In Program.cs, create a new proxy web application by calling the ElevenLabsProxyStartup.CreateWebApplication method, passing your custom AuthenticationFilter as a type argument.

- Create ElevenLabsAuthentication and ElevenLabsClientSettings as you normally would with your API key.

using System.Security.Authentication; // AuthenticationException

public partial class Program

{

private class AuthenticationFilter : AbstractAuthenticationFilter

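{

// Placeholder value for illustration; replace with tokens your own server issues.

private const string TestUserToken = "your-test-user-token";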

public override async Task ValidateAuthenticationAsync(IHeaderDictionary request)

{

await Task.CompletedTask; // remote resource call

// You will need to implement your own class to properly test

// custom issued tokens you've setup for your end users.

if (!request["xi-api-key"].ToString().Contains(TestUserToken))

{

throw new AuthenticationException("User is not authorized");

}

}

}

public static void Main(string[] args)

{

var auth = ElevenLabsAuthentication.LoadFromEnv();

var client = new ElevenLabsClient(auth);

ElevenLabsProxyStartup.CreateWebApplication<AuthenticationFilter>(args, client).Run();

}

}

Once you have set up your proxy server, your end users can make authenticated requests to your proxy API instead of directly to the ElevenLabs API. The proxy server handles authentication and forwards requests to the ElevenLabs API, keeping your API keys and other sensitive information secure.
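The sample filter above uses a substring check (Contains), which would also accept any header that merely includes the token. A stricter check could look like the following sketch; IsIssuedToken is a hypothetical helper, and you would pass it request["xi-api-key"].ToString() together with the tokens your own server has issued.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: require an exact match against an issued token.
// FixedTimeEquals compares equal-length inputs in constant time, so response
// timing does not reveal how many leading bytes of a guess were correct.
bool IsIssuedToken(string? presented, IEnumerable<string> issuedTokens)
{
    if (string.IsNullOrEmpty(presented))
    {
        return false;
    }

    var presentedBytes = Encoding.UTF8.GetBytes(presented);
    return issuedTokens.Any(token =>
        CryptographicOperations.FixedTimeEquals(presentedBytes, Encoding.UTF8.GetBytes(token)));
}

var issued = new[] { "user-token-123" };
Console.WriteLine(IsIssuedToken("user-token-123", issued));       // True
Console.WriteLine(IsIssuedToken("xx user-token-123 xx", issued)); // False
```

Inside ValidateAuthenticationAsync you would throw an AuthenticationException when this returns false, as the sample filter does.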

Convert text to speech.

var api = new ElevenLabsClient();

var text = "The quick brown fox jumps over the lazy dog.";

var voice = (await api.VoicesEndpoint.GetAllVoicesAsync()).FirstOrDefault();

var defaultVoiceSettings = await api.VoicesEndpoint.GetDefaultVoiceSettingsAsync();

var voiceClip = await api.TextToSpeechEndpoint.TextToSpeechAsync(text, voice, defaultVoiceSettings);

await File.WriteAllBytesAsync($"{voiceClip.Id}.mp3", voiceClip.ClipData.ToArray());

Stream text to speech.
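The library example that follows writes each partial clip to a file as it arrives. The underlying pattern can be sketched without the library; StreamChunksAsync is a hypothetical stand-in for the streaming source, and the random bytes stand in for audio.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

// Hypothetical streaming source: hands the audio to the caller in fixed-size
// chunks and awaits the callback before producing the next chunk.
async Task StreamChunksAsync(byte[] audio, int chunkSize, Func<ReadOnlyMemory<byte>, Task> onChunk)
{
    for (var offset = 0; offset < audio.Length; offset += chunkSize)
    {
        var length = Math.Min(chunkSize, audio.Length - offset);
        await onChunk(audio.AsMemory(offset, length));
    }
}

var fakeAudio = new byte[10_000];
new Random(42).NextBytes(fakeAudio);

var path = Path.Combine(Path.GetTempPath(), "stream-sketch.mp3");

// File.Create truncates an existing file, so a shorter clip never leaves
// stale bytes from a previous run at the end of the file.
await using (var output = File.Create(path))
{
    await StreamChunksAsync(fakeAudio, 1024, async chunk => await output.WriteAsync(chunk));
}

Console.WriteLine(new FileInfo(path).Length); // 10000
```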

var api = new ElevenLabsClient();

var text = "The quick brown fox jumps over the lazy dog.";

var voice = (await api.VoicesEndpoint.GetAllVoicesAsync()).FirstOrDefault();

string fileName = "myfile.mp3";

using var outputFileStream = File.Create(fileName); // File.Create truncates an existing file; File.OpenWrite does not

var voiceClip = await api.TextToSpeechEndpoint.TextToSpeechAsync(text, voice,

partialClipCallback: async (partialClip) =>

{

// Write the incoming data to the output file stream.

// Alternatively you can play this clip data directly.

await outputFileStream.WriteAsync(partialClip.ClipData);

});

Access to voices created either by the user or ElevenLabs.

Gets a list of shared voices in the public voice library.

var api = new ElevenLabsClient();

var results = await api.SharedVoicesEndpoint.GetSharedVoicesAsync();

foreach (var voice in results.Voices)

{

Console.WriteLine($"{voice.OwnerId} | {voice.VoiceId} | {voice.Date} | {voice.Name}");

}

Gets a list of all voices available to your account.

var api = new ElevenLabsClient();

var allVoices = await api.VoicesEndpoint.GetAllVoicesAsync();

foreach (var voice in allVoices)

{

Console.WriteLine($"{voice.Id} | {voice.Name} | similarity boost: {voice.Settings?.SimilarityBoost} | stability: {voice.Settings?.Stability}");

}

Gets the global default voice settings.

var api = new ElevenLabsClient();

var result = await api.VoicesEndpoint.GetDefaultVoiceSettingsAsync();

Console.WriteLine($"stability: {result.Stability} | similarity boost: {result.SimilarityBoost}");

Gets a specific voice.

var api = new ElevenLabsClient();

var voice = await api.VoicesEndpoint.GetVoiceAsync("voiceId");

Console.WriteLine($"{voice.Id} | {voice.Name} | {voice.PreviewUrl}");

Edit the settings for a specific voice.
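A hypothetical validation helper for the two values passed to VoiceSettings in the example that follows. It assumes both stability and similarity boost are fractions between 0 and 1; check the ElevenLabs API reference for the authoritative ranges.

```csharp
using System;

// Hypothetical guard: assumes each setting must be a fraction in [0, 1].
void EnsureFraction(float value, string name)
{
    if (float.IsNaN(value) || value < 0f || value > 1f)
    {
        throw new ArgumentOutOfRangeException(name, value, "Expected a value between 0 and 1.");
    }
}

// Returns the validated pair so it can be passed on to the library's settings type.
(float Stability, float SimilarityBoost) CreateVoiceSettings(float stability, float similarityBoost)
{
    EnsureFraction(stability, nameof(stability));
    EnsureFraction(similarityBoost, nameof(similarityBoost));
    return (stability, similarityBoost);
}

var settings = CreateVoiceSettings(0.7f, 0.7f);
Console.WriteLine($"stability: {settings.Stability}, similarity boost: {settings.SimilarityBoost}");
```

Failing fast on the client gives a clearer error than a rejected request.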

var api = new ElevenLabsClient();

var success = await api.VoicesEndpoint.EditVoiceSettingsAsync(voice, new VoiceSettings(0.7f, 0.7f));

Console.WriteLine($"Was successful? {success}");

Add a new voice.

var api = new ElevenLabsClient();

var labels = new Dictionary<string, string>

{

{ "accent", "american" }

};

var audioSamplePaths = new List<string>(); // add the file paths of your audio samples here

var voice = await api.VoicesEndpoint.AddVoiceAsync("Voice Name", audioSamplePaths, labels);

Edit a voice.

var api = new ElevenLabsClient();

var labels = new Dictionary<string, string>

{

{ "age", "young" }

};

var audioSamplePaths = new List<string>();

var success = await api.VoicesEndpoint.EditVoiceAsync(voice, audioSamplePaths, labels);

Console.WriteLine($"Was successful? {success}");

Delete a voice.

var api = new ElevenLabsClient();

var success = await api.VoicesEndpoint.DeleteVoiceAsync(voiceId);

Console.WriteLine($"Was successful? {success}");

Access to your samples, created by you when cloning voices.

var api = new ElevenLabsClient();

var voiceClip = await api.VoicesEndpoint.DownloadVoiceSampleAsync(voice, sample);

await File.WriteAllBytesAsync($"{voiceClip.Id}.mp3", voiceClip.ClipData.ToArray());

Delete a voice sample.

var api = new ElevenLabsClient();

var success = await api.VoicesEndpoint.DeleteVoiceSampleAsync(voiceId, sampleId);

Console.WriteLine($"Was successful? {success}");

Dubs the provided audio or video file into the given language.

var api = new ElevenLabsClient();

// from URI

var request = new DubbingRequest(new Uri("https://youtu.be/Zo5-rhYOlNk"), "ja", "en", 1, true);

// or from a local file (use one or the other; declaring request twice will not compile)

// var request = new DubbingRequest(filePath, "es", "en", 1);

var metadata = await api.DubbingEndpoint.DubAsync(request, progress: new Progress<DubbingProjectMetadata>(metadata =>

{

switch (metadata.Status)

{

case "dubbing":

Console.WriteLine($"Dubbing for {metadata.DubbingId} in progress... Expected Duration: {metadata.ExpectedDurationSeconds:0.00} seconds");

break;

case "dubbed":

Console.WriteLine($"Dubbing for {metadata.DubbingId} complete in {metadata.TimeCompleted.TotalSeconds:0.00} seconds!");

break;

default:

Console.WriteLine($"Status: {metadata.Status}");

break;

}

}));

Returns metadata about a dubbing project, including whether it is still in progress.
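DubAsync above reports progress while it waits. If you only have a dubbing id, you could poll the project metadata yourself until it leaves the "dubbing" state. The sketch below is hypothetical: getStatusAsync stands in for a call such as GetDubbingProjectMetadataAsync followed by reading Status, and the responses are simulated.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical polling loop: fetch the status until it is no longer "dubbing",
// waiting `interval` between requests.
async Task<string> WaitForDubbingAsync(Func<Task<string>> getStatusAsync, TimeSpan interval, CancellationToken cancellationToken = default)
{
    while (true)
    {
        var status = await getStatusAsync();
        if (status != "dubbing")
        {
            return status; // e.g. "dubbed", or any other terminal status
        }

        await Task.Delay(interval, cancellationToken);
    }
}

// Simulated responses: two in-progress polls, then completion.
var responses = new Queue<string>(new[] { "dubbing", "dubbing", "dubbed" });
var finalStatus = await WaitForDubbingAsync(() => Task.FromResult(responses.Dequeue()), TimeSpan.FromMilliseconds(10));
Console.WriteLine(finalStatus); // dubbed
```

Passing a CancellationToken lets a caller give up on a long-running dub without leaving the loop running.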

var api = new ElevenLabsClient();

var metadata = await api.DubbingEndpoint.GetDubbingProjectMetadataAsync("dubbing-id");

Returns the dubbed file as a stream.

Note: Videos are returned in MP4 format and audio-only dubs are returned in MP3.
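The example below always writes a .mp4 file. A hypothetical helper that picks the extension from the source file could look like this; the list of video extensions is an assumption, and for URL sources such as the YouTube example you would need to decide from what you know about the media instead.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Assumed set of video container extensions (illustrative, not exhaustive).
var videoExtensions = new HashSet<string>(StringComparer.OrdinalIgnoreCase) { ".mp4", ".mov", ".mkv", ".webm", ".avi" };

// Per the note above: video dubs come back as MP4, audio-only dubs as MP3.
string DubbedExtension(string sourcePath) =>
    videoExtensions.Contains(Path.GetExtension(sourcePath)) ? ".mp4" : ".mp3";

Console.WriteLine(DubbedExtension("interview.MOV")); // .mp4
Console.WriteLine(DubbedExtension("podcast.wav"));   // .mp3
```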

var assetsDir = Path.GetFullPath("../../../Assets");

var dubbedPath = new FileInfo(Path.Combine(assetsDir, $"online.dubbed.{request.TargetLanguage}.mp4"));

{

await using var fs = File.Open(dubbedPath.FullName, FileMode.Create);

await foreach (var chunk in api.DubbingEndpoint.GetDubbedFileAsync(metadata.DubbingId, request.TargetLanguage))

{

await fs.WriteAsync(chunk);

}

}

Returns the transcript for the dub in the desired format.

var assetsDir = Path.GetFullPath("../../../Assets");

var transcriptPath = new FileInfo(Path.Combine(assetsDir, $"online.dubbed.{request.TargetLanguage}.srt"));

{

var transcriptFile = await api.DubbingEndpoint.GetTranscriptForDubAsync(metadata.DubbingId, request.TargetLanguage);

await File.WriteAllTextAsync(transcriptPath.FullName, transcriptFile);

}

Deletes a dubbing project.

var api = new ElevenLabsClient();

await api.DubbingEndpoint.DeleteDubbingProjectAsync("dubbing-id");

Converts a text description into a sound effect using ElevenLabs' sound generation API.

var api = new ElevenLabsClient();

var request = new SoundGenerationRequest("Star Wars Light Saber parry");

var clip = await api.SoundGenerationEndpoint.GenerateSoundAsync(request);

Access to your previously synthesized audio clips, including their metadata.

Get metadata about all your generated audio.

var api = new ElevenLabsClient();

var historyItems = await api.HistoryEndpoint.GetHistoryAsync();

foreach (var item in historyItems.OrderBy(historyItem => historyItem.Date))

{

Console.WriteLine($"{item.State} {item.Date} | {item.Id} | {item.Text.Length} | {item.Text}");

}

Get information about a specific item.

var api = new ElevenLabsClient();

var historyItem = await api.HistoryEndpoint.GetHistoryItemAsync(voiceClip.Id);

Download the audio for a specific history item.

var api = new ElevenLabsClient();

var voiceClip = await api.HistoryEndpoint.DownloadHistoryAudioAsync(historyItem);

await File.WriteAllBytesAsync($"{voiceClip.Id}.mp3", voiceClip.ClipData.ToArray());

Downloads the last 100 history items, or the collection of specified items.
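To illustrate what "the last 100 history items" means, here is a sketch that sorts by date, newest first, and keeps at most 100 entries. It is an assumption that the service selects items this way; the (Id, Date) tuples stand in for real history items and MostRecent is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical selection: newest first, at most `count` items.
IReadOnlyList<(string Id, DateTime Date)> MostRecent(IEnumerable<(string Id, DateTime Date)> items, int count = 100) =>
    items.OrderByDescending(item => item.Date).Take(count).ToList();

// 150 fake history items, one minute apart.
var start = new DateTime(2024, 1, 1);
var history = Enumerable.Range(0, 150).Select(i => ($"item-{i}", start.AddMinutes(i))).ToList();

var recent = MostRecent(history);
Console.WriteLine($"{recent.Count} items, newest: {recent[0].Id}"); // 100 items, newest: item-149
```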

var api = new ElevenLabsClient();

var voiceClips = await api.HistoryEndpoint.DownloadHistoryItemsAsync();

Delete a history item.

var api = new ElevenLabsClient();

var success = await api.HistoryEndpoint.DeleteHistoryItemAsync(historyItem);

Console.WriteLine($"Was successful? {success}");

Access to your user information and subscription status.

Gets information about your user account with ElevenLabs.

var api = new ElevenLabsClient();

var userInfo = await api.UserEndpoint.GetUserInfoAsync();

Gets information about your subscription with ElevenLabs.

var api = new ElevenLabsClient();

var subscriptionInfo = await api.UserEndpoint.GetSubscriptionInfoAsync();

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ElevenLabs-DotNet

Similar Open Source Tools

ElevenLabs-DotNet

ElevenLabs-DotNet is a non-official Eleven Labs voice synthesis RESTful client that allows users to convert text to speech. The library targets .NET 8.0 and above, working across various platforms like console apps, winforms, wpf, and asp.net, and across Windows, Linux, and Mac. Users can authenticate using API keys directly, from a configuration file, or system environment variables. The tool provides functionalities for text to speech conversion, streaming text to speech, accessing voices, dubbing audio or video files, generating sound effects, managing history of synthesized audio clips, and accessing user information and subscription status.

OpenAI-DotNet

OpenAI-DotNet is a simple C# .NET client library for OpenAI to use through their RESTful API. It is independently developed and not an official library affiliated with OpenAI. Users need an OpenAI API account to utilize this library. The library targets .NET 6.0 and above, working across various platforms like console apps, winforms, wpf, asp.net, etc., and on Windows, Linux, and Mac. It provides functionalities for authentication, interacting with models, assistants, threads, chat, audio, images, files, fine-tuning, embeddings, and moderations.

com.openai.unity

com.openai.unity is an OpenAI package for Unity that allows users to interact with OpenAI's API through RESTful requests. It is independently developed and not an official library affiliated with OpenAI. Users can fine-tune models, create assistants, chat completions, and more. The package requires Unity 2021.3 LTS or higher and can be installed via Unity Package Manager or Git URL. Various features like authentication, Azure OpenAI integration, model management, thread creation, chat completions, audio processing, image generation, file management, fine-tuning, batch processing, embeddings, and content moderation are available.

java-genai

Java idiomatic SDK for the Gemini Developer APIs and Vertex AI APIs. The SDK provides a Client class for interacting with both APIs, allowing seamless switching between the 2 backends without code rewriting. It supports features like generating content, embedding content, generating images, upscaling images, editing images, and generating videos. The SDK also includes options for setting API versions, HTTP request parameters, client behavior, and response schemas.

UnrealGenAISupport

The Unreal Engine Generative AI Support Plugin is a tool designed to integrate various cutting-edge LLM/GenAI models into Unreal Engine for game development. It aims to simplify the process of using AI models for game development tasks, such as controlling scene objects, generating blueprints, running Python scripts, and more. The plugin currently supports models from organizations like OpenAI, Anthropic, XAI, Google Gemini, Meta AI, Deepseek, and Baidu. It provides features like API support, model control, generative AI capabilities, UI generation, project file management, and more. The plugin is still under development but offers a promising solution for integrating AI models into game development workflows.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

acte

Acte is a framework designed to build GUI-like tools for AI Agents. It aims to address the issues of cognitive load and freedom degrees when interacting with multiple APIs in complex scenarios. By providing a graphical user interface (GUI) for Agents, Acte helps reduce cognitive load and constraints interaction, similar to how humans interact with computers through GUIs. The tool offers APIs for starting new sessions, executing actions, and displaying screens, accessible via HTTP requests or the SessionManager class.

higlabo

HigLabo is a versatile C# library that provides various features such as an OpenAI client library, the fastest object mapper, a DAL generator, and support for functionalities like Mail, FTP, RSS, and Twitter. The library includes modules like HigLabo.OpenAI for chat completion and Groq support, HigLabo.Anthropic for Anthropic Claude AI, HigLabo.Mapper for object mapping, DbSharp for stored procedure calls, HigLabo.Mime for MIME parsing, HigLabo.Mail for SMTP, POP3, and IMAP functionalities, and other utility modules like HigLabo.Data, HigLabo.Converter, and HigLabo.Net.Slack. HigLabo is designed to be easy to use and highly customizable, offering performance optimizations for tasks like object mapping and database access.

client-js

The Mistral JavaScript client is a library that allows you to interact with the Mistral AI API. With this client, you can perform various tasks such as listing models, chatting with streaming, chatting without streaming, and generating embeddings. To use the client, you can install it in your project using npm and then set up the client with your API key. Once the client is set up, you can use it to perform the desired tasks. For example, you can use the client to chat with a model by providing a list of messages. The client will then return the response from the model. You can also use the client to generate embeddings for a given input. The embeddings can then be used for various downstream tasks such as clustering or classification.

instructor

Instructor is a Python library that makes it a breeze to work with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

ai-sdk-cpp

The AI SDK CPP is a modern C++ toolkit that provides a unified, easy-to-use API for building AI-powered applications with popular model providers like OpenAI and Anthropic. It bridges the gap for C++ developers by offering a clean, expressive codebase with minimal dependencies. The toolkit supports text generation, streaming content, multi-turn conversations, error handling, tool calling, async tool execution, and configurable retries. Future updates will include additional providers, text embeddings, and image generation models. The project also includes a patched version of nlohmann/json for improved thread safety and consistent behavior in multi-threaded environments.

obsei

Obsei is an open-source, low-code, AI powered automation tool that consists of an Observer to collect unstructured data from various sources, an Analyzer to analyze the collected data with various AI tasks, and an Informer to send analyzed data to various destinations. The tool is suitable for scheduled jobs or serverless applications as all Observers can store their state in databases. Obsei is still in alpha stage, so caution is advised when using it in production. The tool can be used for social listening, alerting/notification, automatic customer issue creation, extraction of deeper insights from feedbacks, market research, dataset creation for various AI tasks, and more based on creativity.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

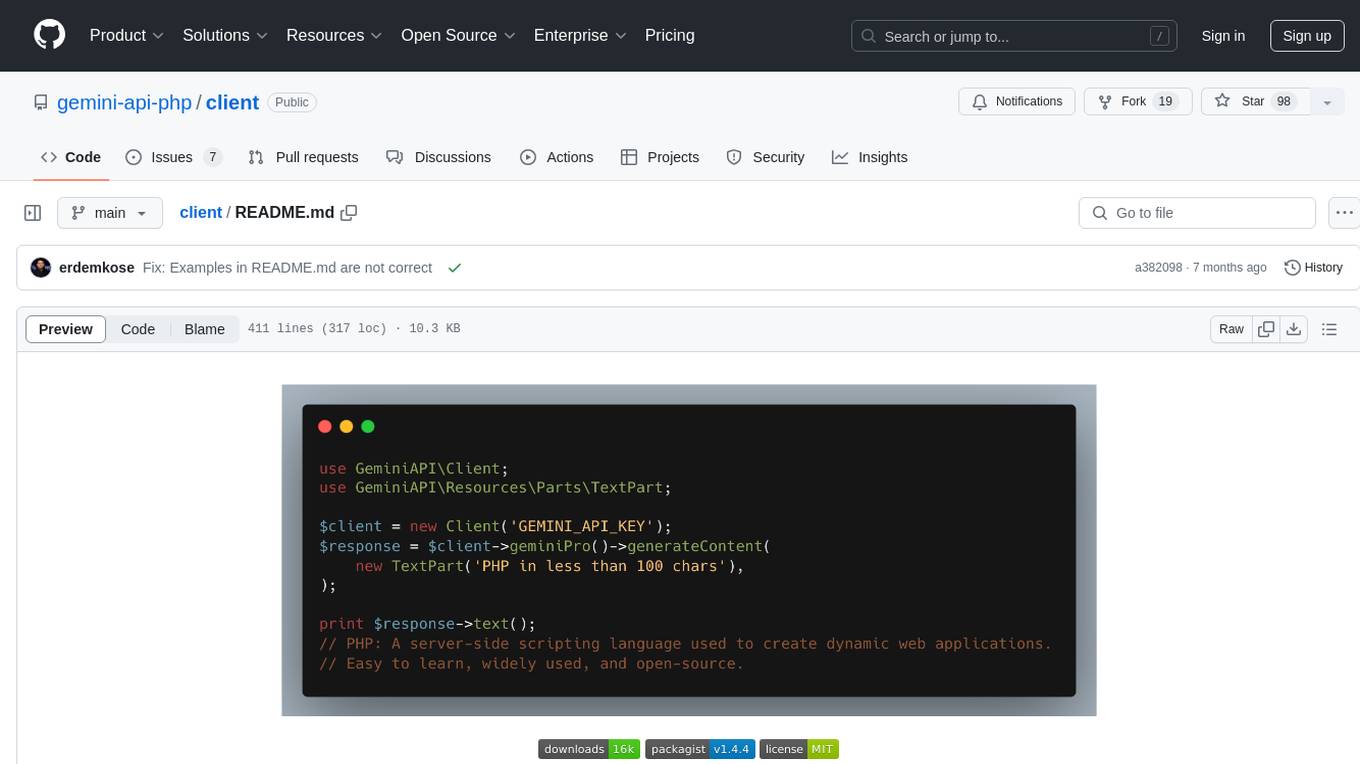

client

Gemini API PHP Client is a library that allows you to interact with Google's generative AI models, such as Gemini Pro and Gemini Pro Vision. It provides functionalities for basic text generation, multimodal input, chat sessions, streaming responses, tokens counting, listing models, and advanced usages like safety settings and custom HTTP client usage. The library requires an API key to access Google's Gemini API and can be installed using Composer. It supports various features like generating content, starting chat sessions, embedding content, counting tokens, and listing available models.

aiotdlib

aiotdlib is a Python asyncio Telegram client based on TDLib. It provides automatic generation of types and functions from tl schema, validation, good IDE type hinting, and high-level API methods for simpler work with tdlib. The package includes prebuilt TDLib binaries for macOS (arm64) and Debian Bullseye (amd64). Users can use their own binary by passing `library_path` argument to `Client` class constructor. Compatibility with other versions of the library is not guaranteed. The tool requires Python 3.9+ and users need to get their `api_id` and `api_hash` from Telegram docs for installation and usage.

For similar tasks

ElevenLabs-DotNet

ElevenLabs-DotNet is a non-official Eleven Labs voice synthesis RESTful client that allows users to convert text to speech. The library targets .NET 8.0 and above, working across various platforms like console apps, winforms, wpf, and asp.net, and across Windows, Linux, and Mac. Users can authenticate using API keys directly, from a configuration file, or system environment variables. The tool provides functionalities for text to speech conversion, streaming text to speech, accessing voices, dubbing audio or video files, generating sound effects, managing history of synthesized audio clips, and accessing user information and subscription status.

wunjo.wladradchenko.ru

Wunjo AI is a comprehensive tool that empowers users to explore the realm of speech synthesis, deepfake animations, video-to-video transformations, and more. Its user-friendly interface and privacy-first approach make it accessible to both beginners and professionals alike. With Wunjo AI, you can effortlessly convert text into human-like speech, clone voices from audio files, create multi-dialogues with distinct voice profiles, and perform real-time speech recognition. Additionally, you can animate faces using just one photo combined with audio, swap faces in videos, GIFs, and photos, and even remove unwanted objects or enhance the quality of your deepfakes using the AI Retouch Tool. Wunjo AI is an all-in-one solution for your voice and visual AI needs, offering endless possibilities for creativity and expression.

airunner

AI Runner is a multi-modal AI interface that allows users to run open-source large language models and AI image generators on their own hardware. The tool provides features such as voice-based chatbot conversations, text-to-speech, speech-to-text, vision-to-text, text generation with large language models, image generation capabilities, image manipulation tools, utility functions, and more. It aims to provide a stable and user-friendly experience with security updates, a new UI, and a streamlined installation process. The application is designed to run offline on users' hardware without relying on a web server, offering a smooth and responsive user experience.

Wechat-AI-Assistant

Wechat AI Assistant is a project that enables multi-modal interaction with ChatGPT AI assistant within WeChat. It allows users to engage in conversations, role-playing, respond to voice messages, analyze images and videos, summarize articles and web links, and search the internet. The project utilizes the WeChatFerry library to control the Windows PC desktop WeChat client and leverages the OpenAI Assistant API for intelligent multi-modal message processing. Users can interact with ChatGPT AI in WeChat through text or voice, access various tools like bing_search, browse_link, image_to_text, text_to_image, text_to_speech, video_analysis, and more. The AI autonomously determines which code interpreter and external tools to use to complete tasks. Future developments include file uploads for AI to reference content, integration with other APIs, and login support for enterprise WeChat and WeChat official accounts.

Generative-AI-Pharmacist

Generative AI Pharmacist is a project showcasing the use of generative AI tools to create an animated avatar named Macy, who delivers medication counseling in a realistic and professional manner. The project utilizes tools like Midjourney for image generation, ChatGPT for text generation, ElevenLabs for text-to-speech conversion, and D-ID for creating a photorealistic talking avatar video. The demo video featuring Macy discussing commonly-prescribed medications demonstrates the potential of generative AI in healthcare communication.

AnyGPT

AnyGPT is a unified multimodal language model that utilizes discrete representations for processing various modalities like speech, text, images, and music. It aligns the modalities for intermodal conversions and text processing. AnyInstruct dataset is constructed for generative models. The model proposes a generative training scheme using Next Token Prediction task for training on a Large Language Model (LLM). It aims to compress vast multimodal data on the internet into a single model for emerging capabilities. The tool supports tasks like text-to-image, image captioning, ASR, TTS, text-to-music, and music captioning.

Pallaidium

Pallaidium is a generative AI movie studio integrated into the Blender video editor. It allows users to AI-generate video, image, and audio from text prompts or existing media files. The tool provides various features such as text to video, text to audio, text to speech, text to image, image to image, image to video, video to video, image to text, and more. It requires a Windows system with a CUDA-supported Nvidia card and at least 6 GB VRAM. Pallaidium offers batch processing capabilities, text to audio conversion using Bark, and various performance optimization tips. Users can install the tool by downloading the add-on and following the installation instructions provided. The tool comes with a set of restrictions on usage, prohibiting the generation of harmful, pornographic, violent, or false content.

omniai

OmniAI provides a unified Ruby API for integrating with multiple AI providers, streamlining AI development by offering a consistent interface for features such as chat, text-to-speech, speech-to-text, and embeddings. It ensures seamless interoperability across platforms and effortless switching between providers, making integrations more flexible and reliable.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.