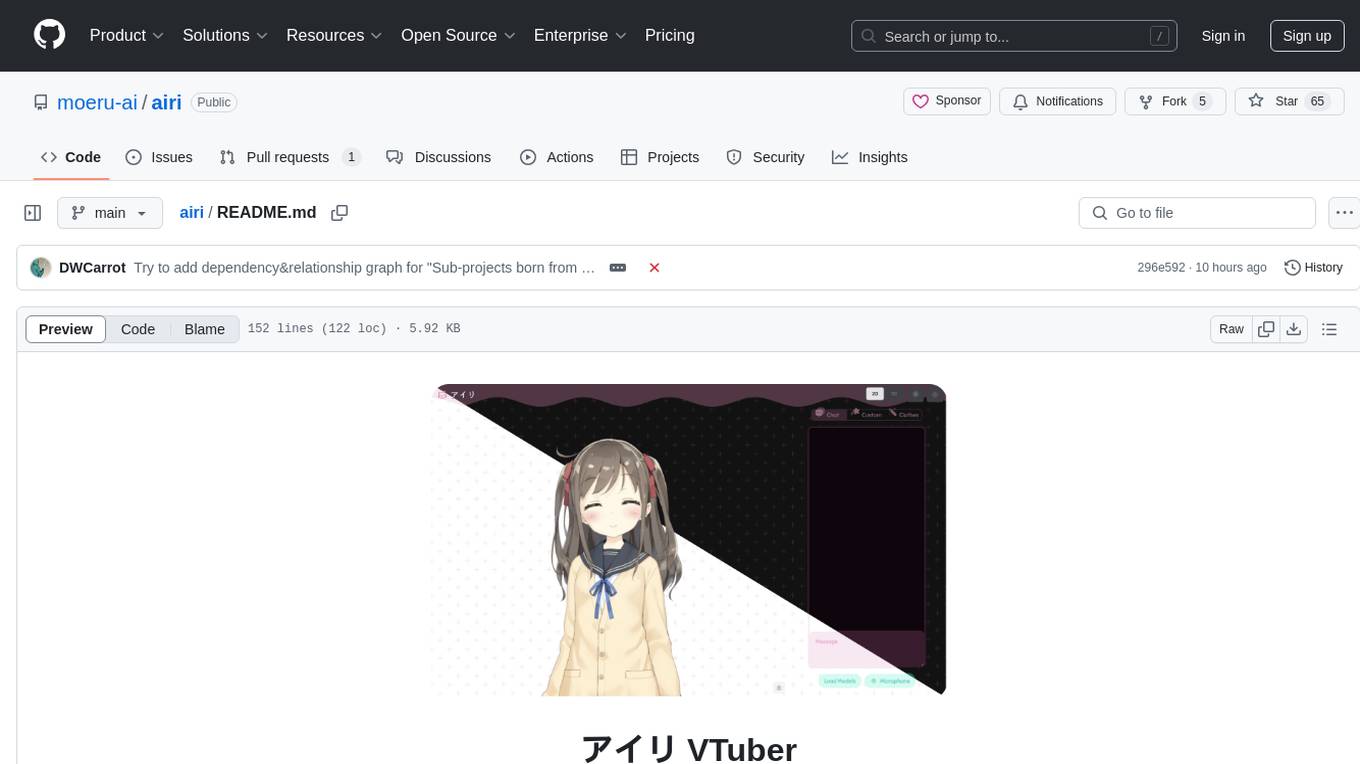

airi

💖🧸 A self-hosted Grok Companion that you own: a container for the souls of waifus and cyber living beings, bringing them into our world and aspiring to reach Neuro-sama's heights. Capable of realtime voice chat and of playing Minecraft and Factorio. Supports Web, macOS, and Windows.

Stars: 14522

Airi is a VTuber project heavily inspired by Neuro-sama. It is capable of various functions such as playing Minecraft, chatting in Telegram and Discord, audio input from browser and Discord, client side speech recognition, VRM and Live2D model support with animations, and more. The project also includes sub-projects like unspeech, hfup, Drizzle ORM driver for DuckDB WASM, and various other tools. Airi uses models like whisper-large-v3-turbo from Hugging Face and is similar to projects like z-waif, amica, eliza, AI-Waifu-Vtuber, and AIVTuber. The project acknowledges contributions from various sources and implements packages to interact with LLMs and models.

README:

Re-creating Neuro-sama, a soul container of AI waifu / virtual characters to bring them into our world.

[Join Discord Server] [Try it] [简体中文] [日本語] [Русский] [Tiếng Việt] [Français]

Heavily inspired by Neuro-sama

[!WARNING] Attention: We do not have any officially minted cryptocurrency or token associated with this project. Please check the information and proceed with caution.

[!NOTE]

We've got a whole dedicated organization @proj-airi for all the sub-projects born from Project AIRI. Check it out!

RAG, memory system, embedded database, icons, Live2D utilities, and more!

Have you dreamed about having a cyber living being (cyber waifu / husbando, digital pet) or digital companion that could play with and talk to you?

With the power of modern large language models like ChatGPT and the famous Claude, asking a virtual being to roleplay and chat with us is already easy enough for everyone. Platforms like Character.ai (a.k.a. c.ai) and JanitorAI, as well as local playgrounds like SillyTavern, are already good-enough solutions for a chat-based or visual-adventure-game-like experience.

But what about the ability to play games, or to see what you are coding? Or to chat while playing games, watch videos, and do many other things?

Perhaps you already know Neuro-sama. She is currently the best virtual streamer capable of playing games, chatting, and interacting with you and the other participants. Some also call this kind of being a "digital human." Sadly, since she is not open source, you cannot interact with her after her live streams go offline.

Therefore, this project, AIRI, offers another possibility: letting you own your digital life, your cyber living being, easily, anywhere, anytime.

- DevLog @ 2025.07.18 on July 18, 2025

- DreamLog 0x1 on June 16, 2025

- DevLog @ 2025.06.08 on June 8, 2025

- DevLog @ 2025.05.16 on May 16, 2025

- ...more on documentation site

Unlike other AI-driven open-source VTuber projects, アイリ (AIRI) was built from day one with support for many Web technologies, such as WebGPU, WebAudio, Web Workers, WebAssembly, and WebSocket.

[!TIP] Worried about performance drops because we are using Web technologies?

Don't worry. While the Web browser version is meant to show how much we can push and do inside browsers and webviews, we will never rely on it entirely. The desktop version of AIRI uses native NVIDIA CUDA and Apple Metal by default (thanks to HuggingFace and the beloved candle project), without any complex dependency management. As a trade-off, it is still partially powered by Web technologies for graphics, layouts, animations, and the WIP plugin system that lets everyone integrate things.

This means that アイリ can run on modern browsers and devices, even on mobile devices (PWA support is already done). This opens up a lot of possibilities for us (the developers) to build and extend the power of the アイリ VTuber to the next level, while still leaving users the flexibility to enable features that require TCP connections or other non-Web technologies, such as connecting to a Discord voice channel or playing Minecraft and Factorio with friends.
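To illustrate how a browser build can decide which of these Web technologies to lean on, here is a minimal TypeScript sketch of runtime feature detection. It only inspects globals that browsers really expose (`navigator.gpu` for WebGPU, `SharedArrayBuffer` and `crossOriginIsolated` for multi-threaded WebAssembly); the `pickBackend` function, the `RuntimeLike` shape, and the backend labels are hypothetical and are not taken from AIRI's code.

```typescript
// Hypothetical backend labels; AIRI's real configuration may differ.
type Backend = 'webgpu' | 'wasm-threads' | 'wasm'

// Only the globals this sketch inspects, so it can be tested outside a browser.
interface RuntimeLike {
  navigator?: { gpu?: unknown }
  WebAssembly?: unknown
  SharedArrayBuffer?: unknown
  crossOriginIsolated?: boolean
}

function pickBackend(rt: RuntimeLike): Backend | null {
  // Browsers that support WebGPU expose it as navigator.gpu.
  if (rt.navigator?.gpu !== undefined)
    return 'webgpu'
  // Without WebAssembly there is no portable compute fallback in this sketch.
  if (rt.WebAssembly === undefined)
    return null
  // Threaded WASM needs SharedArrayBuffer, which browsers only expose
  // on cross-origin-isolated pages.
  if (rt.SharedArrayBuffer !== undefined && rt.crossOriginIsolated === true)
    return 'wasm-threads'
  return 'wasm'
}

// In a browser: pickBackend(globalThis as RuntimeLike)
```

Note that `navigator.gpu` existing only means the API is present; a real app would still check that `navigator.gpu.requestAdapter()` resolves to a non-null adapter before committing to WebGPU.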

[!NOTE]

We are still in the early stages of development and are looking for talented developers to join us and help make アイリ a reality.

It's OK if you are not familiar with Vue.js, TypeScript, and the devtools required for this project; you can join us as an artist or designer, or even help us launch our first live stream.

Even if you are a big fan of React, Svelte, or even Solid, we welcome you. You can open a sub-directory to add features that you want to see in アイリ or would like to experiment with.

Fields (and related projects) that we are looking for:

- Live2D modeller

- VRM modeller

- VRChat avatar designer

- Computer Vision

- Reinforcement Learning

- Speech Recognition

- Speech Synthesis

- ONNX Runtime

- Transformers.js

- vLLM

- WebGPU

- Three.js

- WebXR (check out the other project we have under the @moeru-ai organization)

If you are interested, why not introduce yourself here? Would you like to join us in building AIRI?

Capable of

- [x] Brain
  - [x] Play Minecraft
  - [x] Play Factorio (WIP, but PoC and demo available)
  - [x] Chat in Telegram
  - [x] Chat in Discord
  - [ ] Memory
    - [x] Pure in-browser database support (DuckDB WASM | pglite)
    - [ ] Memory Alaya (WIP)
  - [ ] Pure in-browser local (WebGPU) inference
- [x] Ears
  - [x] Audio input from browser
  - [x] Audio input from Discord
  - [x] Client side speech recognition
  - [x] Client side talking detection
- [x] Mouth
  - [x] ElevenLabs voice synthesis
- [x] Body
  - [x] VRM support
    - [x] Control VRM model
    - [x] VRM model animations
      - [x] Auto blink
      - [x] Auto look at
      - [x] Idle eye movement
  - [x] Live2D support
    - [x] Control Live2D model
    - [x] Live2D model animations
      - [x] Auto blink
      - [x] Auto look at
      - [x] Idle eye movement
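For illustration, "client side talking detection" can be approximated by an energy threshold with a short hangover, so that brief pauses do not end an utterance. This is a minimal sketch under stated assumptions (mono `Float32Array` PCM frames in [-1, 1], a hand-picked threshold and hangover); the `TalkingDetector` class and `rms` helper are hypothetical, and AIRI's actual detector may well be model-based instead.

```typescript
// Root-mean-square level of one PCM frame (samples assumed in [-1, 1]).
function rms(frame: Float32Array): number {
  if (frame.length === 0)
    return 0
  let sum = 0
  for (let i = 0; i < frame.length; i++)
    sum += frame[i] * frame[i]
  return Math.sqrt(sum / frame.length)
}

// Hypothetical energy-based detector; not AIRI's implementation.
class TalkingDetector {
  private silentFrames = 0
  private talking = false
  private readonly threshold: number
  private readonly hangoverFrames: number

  // threshold: RMS level treated as speech (illustrative default).
  // hangoverFrames: quiet frames tolerated before talking is considered over.
  constructor(threshold = 0.02, hangoverFrames = 8) {
    this.threshold = threshold
    this.hangoverFrames = hangoverFrames
  }

  // Feed one frame; returns whether the speaker is currently considered talking.
  push(frame: Float32Array): boolean {
    if (rms(frame) >= this.threshold) {
      this.talking = true
      this.silentFrames = 0
    }
    else if (this.talking && ++this.silentFrames > this.hangoverFrames) {
      // More than hangoverFrames consecutive quiet frames: speech has ended.
      this.talking = false
      this.silentFrames = 0
    }
    return this.talking
  }
}
```

A fixed threshold is the simplest possible choice; in practice the level would need tuning per microphone, which is one reason production systems often prefer a trained voice activity model.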

For detailed instructions to develop this project, follow CONTRIBUTING.md

[!NOTE] By default, `pnpm dev` will start the development server for Stage Web (the browser version). If you would like to try developing the desktop version, please make sure you read CONTRIBUTING.md to set up the environment correctly.

- Install dependencies: `pnpm i`

- Stage Web (browser version, at airi.moeru.ai): `pnpm dev`

- Desktop version (Tamagotchi): `pnpm dev:tamagotchi`

- Documentation site: `pnpm dev:docs`

A Nix package for Tamagotchi is included. To run airi with Nix, first make sure flakes are enabled, then run `nix run github:moeru-ai/airi`.

To bump the version, run `npx bumpp --no-commit --no-tag`, then update the version in Cargo.toml accordingly.

Supported LLM API providers (powered by xsAI):

- [x] 302.AI (sponsored)

- [x] OpenRouter

- [x] vLLM

- [x] SGLang

- [x] Ollama

- [x] Google Gemini

- [x] OpenAI

- [ ] Azure OpenAI API (PR welcome)

- [x] Anthropic Claude

- [ ] AWS Claude (PR welcome)

- [x] DeepSeek

- [x] Qwen

- [x] xAI

- [x] Groq

- [x] Mistral

- [x] Cloudflare Workers AI

- [x] Together.ai

- [x] Fireworks.ai

- [x] Novita

- [x] Zhipu

- [x] SiliconFlow

- [x] Stepfun

- [x] Baichuan

- [x] Minimax

- [x] Moonshot AI

- [x] ModelScope

- [x] Player2

- [x] Tencent Cloud

- [ ] Sparks (PR welcome)

- [ ] Volcano Engine (PR welcome)
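Many of these providers (for example OpenRouter, vLLM, and Ollama) expose an OpenAI-compatible chat completions endpoint. The sketch below only builds that HTTP request as plain data, so it makes no assumptions about xsAI's own API; the `BASE_URLS` table, `buildChatRequest`, and the listed base URLs are illustrative assumptions to verify against each provider's documentation.

```typescript
// Illustrative base URLs (assumptions; confirm against each provider's docs).
const BASE_URLS: Record<string, string> = {
  openai: 'https://api.openai.com/v1/',
  openrouter: 'https://openrouter.ai/api/v1/',
  ollama: 'http://localhost:11434/v1/', // local Ollama, OpenAI-compatible API
}

interface ChatMessage {
  role: 'system' | 'user' | 'assistant'
  content: string
}

// Plain-data request: can be passed to fetch(req.url, req) or inspected in tests.
interface HttpRequest {
  url: string
  method: 'POST'
  headers: Record<string, string>
  body: string
}

// Hypothetical helper: same request shape for every OpenAI-compatible provider.
function buildChatRequest(provider: string, apiKey: string, model: string, messages: ChatMessage[]): HttpRequest {
  const baseURL = BASE_URLS[provider]
  if (baseURL === undefined)
    throw new Error(`unknown provider: ${provider}`)
  return {
    url: `${baseURL}chat/completions`, // base URLs above end with '/'
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Authorization': `Bearer ${apiKey}`,
    },
    body: JSON.stringify({ model, messages }),
  }
}
```

Sending it is then `fetch(req.url, req)`; in an OpenAI-compatible response the reply text is found at `choices[0].message.content`. Switching providers only changes the table entry and the model name.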

Sub-projects born from this project:

- Awesome AI VTuber: A curated list of AI VTubers and related projects

- unspeech: Universal endpoint proxy server for /audio/transcriptions and /audio/speech, like LiteLLM but for any ASR and TTS

- hfup: Tools to help with deploying and bundling to HuggingFace Spaces

- xsai-transformers: Experimental 🤗 Transformers.js provider for xsAI.

- WebAI: Realtime Voice Chat: Full example of implementing ChatGPT's realtime voice from scratch with VAD + STT + LLM + TTS.

- @proj-airi/drizzle-duckdb-wasm: Drizzle ORM driver for DuckDB WASM

- @proj-airi/duckdb-wasm: Easy-to-use wrapper for @duckdb/duckdb-wasm

- tauri-plugin-mcp: A Tauri plugin for interacting with MCP servers.

- AIRI Factorio: Allow AIRI to play Factorio
  - Factorio RCON API: RESTful API wrapper for the Factorio headless server console
  - autorio: Factorio automation library
  - tstl-plugin-reload-factorio-mod: Reload Factorio mods during development

- Velin: Use Vue SFC and Markdown to write easy-to-manage stateful prompts for LLMs

- demodel: Easily boost the speed of pulling your models and datasets from various inference runtimes.

- inventory: Centralized model catalog and default provider configurations backend service

- MCP Launcher: Easy-to-use MCP builder & launcher for all possible MCP servers, just like Ollama for models!

- 🥺 SAD: Documentation and notes for self-hosted and browser-running LLMs.
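unspeech is described above as a proxy exposing OpenAI-style `/audio/transcriptions` and `/audio/speech` endpoints for any ASR and TTS backend. Assuming it accepts the same JSON body as OpenAI's `/audio/speech` (`model`, `voice`, `input`), a client request could be built as in this sketch; `buildSpeechRequest` is a hypothetical helper, not part of unspeech, and the base URL, model name, and voice used in any call are placeholders.

```typescript
interface SpeechRequest {
  url: string
  method: 'POST'
  headers: Record<string, string>
  body: string
}

// Hypothetical helper: builds an OpenAI-style text-to-speech request
// aimed at a speech proxy such as unspeech (body shape is an assumption).
function buildSpeechRequest(baseURL: string, model: string, voice: string, input: string): SpeechRequest {
  // Normalize so "http://host/v1" and "http://host/v1/" both work.
  const base = baseURL.endsWith('/') ? baseURL : `${baseURL}/`
  return {
    url: `${base}audio/speech`,
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ model, voice, input }),
  }
}
```

Under that assumption, switching TTS vendors would mean changing only `model` (and possibly `voice`) while the client code stays the same, which is the LiteLLM-like convenience the description refers to.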

```mermaid
%%{ init: { 'flowchart': { 'curve': 'catmullRom' } } }%%

flowchart TD
  Core("Core")
  Unspeech("unspeech")
  DBDriver("@proj-airi/drizzle-duckdb-wasm")
  MemoryDriver("[WIP] Memory Alaya")
  DB1("@proj-airi/duckdb-wasm")
  SVRT("@proj-airi/server-runtime")
  Memory("Memory")
  STT("STT")
  Stage("Stage")
  StageUI("@proj-airi/stage-ui")
  UI("@proj-airi/ui")

  subgraph AIRI
    DB1 --> DBDriver --> MemoryDriver --> Memory --> Core
    UI --> StageUI --> Stage --> Core
    Core --> STT
    Core --> SVRT
  end

  subgraph UI_Components
    UI --> StageUI
    UITransitions("@proj-airi/ui-transitions") --> StageUI
    UILoadingScreens("@proj-airi/ui-loading-screens") --> StageUI
    FontCJK("@proj-airi/font-cjkfonts-allseto") --> StageUI
    FontXiaolai("@proj-airi/font-xiaolai") --> StageUI
  end

  subgraph Apps
    Stage --> StageWeb("@proj-airi/stage-web")
    Stage --> StageTamagotchi("@proj-airi/stage-tamagotchi")
    Core --> RealtimeAudio("@proj-airi/realtime-audio")
    Core --> PromptEngineering("@proj-airi/playground-prompt-engineering")
  end

  subgraph Server_Components
    Core --> ServerSDK("@proj-airi/server-sdk")
    ServerShared("@proj-airi/server-shared") --> SVRT
    ServerShared --> ServerSDK
  end

  STT -->|Speaking| Unspeech
  SVRT -->|Playing Factorio| F_AGENT
  SVRT -->|Playing Minecraft| MC_AGENT

  subgraph Factorio_Agent
    F_AGENT("Factorio Agent")
    F_API("Factorio RCON API")
    factorio-server("factorio-server")
    F_MOD1("autorio")

    F_AGENT --> F_API -.-> factorio-server
    F_MOD1 -.-> factorio-server
  end

  subgraph Minecraft_Agent
    MC_AGENT("Minecraft Agent")
    Mineflayer("Mineflayer")
    minecraft-server("minecraft-server")

    MC_AGENT --> Mineflayer -.-> minecraft-server
  end

  XSAI("xsAI") --> Core
  XSAI --> F_AGENT
  XSAI --> MC_AGENT

  Core --> TauriMCP("@proj-airi/tauri-plugin-mcp")
  Memory_PGVector("@proj-airi/memory-pgvector") --> Memory

  style Core fill:#f9d4d4,stroke:#333,stroke-width:1px
  style AIRI fill:#fcf7f7,stroke:#333,stroke-width:1px
  style UI fill:#d4f9d4,stroke:#333,stroke-width:1px
  style Stage fill:#d4f9d4,stroke:#333,stroke-width:1px
  style UI_Components fill:#d4f9d4,stroke:#333,stroke-width:1px
  style Server_Components fill:#d4e6f9,stroke:#333,stroke-width:1px
  style Apps fill:#d4d4f9,stroke:#333,stroke-width:1px
  style Factorio_Agent fill:#f9d4f2,stroke:#333,stroke-width:1px
  style Minecraft_Agent fill:#f9d4f2,stroke:#333,stroke-width:1px
  style DBDriver fill:#f9f9d4,stroke:#333,stroke-width:1px
  style MemoryDriver fill:#f9f9d4,stroke:#333,stroke-width:1px
  style DB1 fill:#f9f9d4,stroke:#333,stroke-width:1px
  style Memory fill:#f9f9d4,stroke:#333,stroke-width:1px
  style Memory_PGVector fill:#f9f9d4,stroke:#333,stroke-width:1px
```

Similar projects:

- kimjammer/Neuro: A recreation of Neuro-sama, originally created in 7 days; a very well-completed implementation.

- SugarcaneDefender/z-waif: Great at gaming, autonomous, and prompt engineering

- semperai/amica: Great at VRM, WebXR

- elizaOS/eliza: Great examples and software engineering on how to integrate agents into various systems and APIs

- ardha27/AI-Waifu-Vtuber: Great at Twitch API integrations

- InsanityLabs/AIVTuber: Nice UI and UX

- IRedDragonICY/vixevia

- t41372/Open-LLM-VTuber

- PeterH0323/Streamer-Sales

- https://clips.twitch.tv/WanderingCaringDeerDxCat-Qt55xtiGDSoNmDDr https://www.youtube.com/watch?v=8Giv5mupJNE

- https://clips.twitch.tv/TriangularAthleticBunnySoonerLater-SXpBk1dFso21VcWD

- https://www.youtube.com/@NOWA_Mirai

Acknowledgements:

- Reka UI: used to design the documentation site; the new landing page is based on it, and it implements a massive number of UI components. (shadcn-vue uses Reka UI as its headless layer, do check it out!)

- pixiv/ChatVRM

- josephrocca/ChatVRM-js: A JS conversion/adaptation of parts of the ChatVRM (TypeScript) code for standalone use in OpenCharacters and elsewhere

- The UI design and style were inspired by Cookard, UNBEATABLE, and Sensei! I like you so much!, as well as the artworks Ayame by Mercedes Bazan and Wish by Mercedes Bazan

- mallorbc/whisper_mic

- xsai: Implements a decent number of packages for interacting with LLMs and models, like the Vercel AI SDK but much smaller.

Alternative AI tools for airi

Similar Open Source Tools

nyxtext

Nyxtext is a text editor built with Python, featuring CustomTkinter with the Catppuccin color scheme and a glassmorphic design. It follows a modular approach, with each element organized into a separate file for clarity and maintainability. NyxText is not just a text editor but also an AI-powered desktop application for creatives, developers, and students.

esp-ai

ESP-AI provides a complete AI conversation solution for your development board, including IAT+LLM+TTS integration solutions for ESP32 series development boards. It can be injected into projects without affecting existing ones. By providing keys from platforms like iFlytek, Jiling, and local services, you can run the services without worrying about interactions between services or between development boards and services. The project's server-side code is based on Node.js, and the hardware code is based on Arduino IDE.

Devon

Devon is an open-source pair programmer tool designed to facilitate collaborative coding sessions. It provides features such as multi-file editing, codebase exploration, test writing, bug fixing, and architecture exploration. The tool supports Anthropic, OpenAI, and Groq APIs, with plans to add more models in the future. Devon is community-driven, with ongoing development goals including multi-model support, plugin system for tool builders, self-hostable Electron app, and setting SOTA on SWE-bench Lite. Users can contribute to the project by developing core functionality, conducting research on agent performance, providing feedback, and testing the tool.

gemini-next-chat

Gemini Next Chat is an open-source, extensible, high-performance Gemini chatbot framework that supports one-click free deployment of private Gemini web applications. It provides a simple interface with image recognition and voice conversation, and supports multimodal models, talk mode, visual recognition, an assistant market, plugins, a conversation list, full Markdown support, privacy and security, PWA support, a well-designed UI, fast loading, static deployment, and multi-language support.

amplication

Amplication is a robust, open-source development platform designed to revolutionize the creation of scalable and secure .NET and Node.js applications. It automates backend applications development, ensuring consistency, predictability, and adherence to the highest standards with code that's built to scale. The user-friendly interface fosters seamless integration of APIs, data models, databases, authentication, and authorization. Built on a flexible, plugin-based architecture, Amplication allows effortless customization of the code and offers a diverse range of integrations. With a strong focus on collaboration, Amplication streamlines team-oriented development, making it an ideal choice for groups of all sizes, from startups to large enterprises. It enables users to concentrate on business logic while handling the heavy lifting of development. Experience the fastest way to develop .NET and Node.js applications with Amplication.

pollinations

pollinations.ai is an open-source generative AI platform based in Berlin, empowering community projects with accessible text, image, video, and audio generation APIs. It offers a unified API endpoint for various AI generation needs, including text, images, audio, and video. The platform provides features like image generation using models such as Flux, GPT Image, Seedream, and Kontext, video generation with Seedance and Veo, and audio generation with text-to-speech and speech-to-text capabilities. Users can access the platform through a web interface or API, and authentication is managed through API keys. The platform is community-driven, transparent, and ethical, aiming to make AI technology open, accessible, and interconnected while fostering innovation and responsible development.

allchat

ALLCHAT is a Node.js backend and React MUI frontend for an application that interacts with Gemini Pro 1.5 (and other models), with history, image generation/recognition, PDF/Word/Excel upload, code execution, model function calls, and Markdown support. It is a comprehensive tool that allows users to connect models to the world with Web Tools, run locally, deploy using Docker, configure Nginx, and monitor the application using a dockerized monitoring solution (Loki+Grafana).

superlinked

Superlinked is a compute framework for information retrieval and feature engineering systems, focusing on converting complex data into vector embeddings for RAG, Search, RecSys, and Analytics stack integration. It enables custom model performance in machine learning with pre-trained model convenience. The tool allows users to build multimodal vectors, define weights at query time, and avoid postprocessing & rerank requirements. Users can explore the computational model through simple scripts and python notebooks, with a future release planned for production usage with built-in data infra and vector database integrations.

llm-x

LLM X is a ChatGPT-style UI for the niche group of folks who run Ollama (think of it as an offline ChatGPT server) locally. It supports sending and receiving images and text and works offline through PWA (Progressive Web App) standards. The project uses React, TypeScript, Lodash, MobX-State-Tree, Tailwind CSS, DaisyUI, NextUI, Highlight.js, React Markdown, kbar, Yet Another React Lightbox, Vite, and the Vite PWA plugin. It is inspired by the ollama-ui project and Perplexity.ai's UI advancements in the LLM UI space. The project is still under development, but it is already a great way to get started with building your own LLM UI.

GPTSwarm

GPTSwarm is a graph-based framework for LLM-based agents that enables the creation of LLM-based agents from graphs and facilitates the customized and automatic self-organization of agent swarms with self-improvement capabilities. The library includes components for domain-specific operations, graph-related functions, LLM backend selection, memory management, and optimization algorithms to enhance agent performance and swarm efficiency. Users can quickly run predefined swarms or use tools like the file analyzer. GPTSwarm supports local LM inference via LM Studio, allowing users to run with a local LLM. The framework has been accepted at ICML 2024 and offers advanced features for experimentation and customization.

WebMasterLog

WebMasterLog is a comprehensive repository showcasing various web development projects built with front-end and back-end technologies. It highlights interactive user interfaces, dynamic web applications, and a spectrum of web development solutions. The repository encourages contributions in areas such as adding new projects, improving existing projects, updating documentation, fixing bugs, implementing responsive design, enhancing code readability, and optimizing project functionalities. Contributors are guided to follow specific guidelines for project submissions, including directory naming conventions, README file inclusion, project screenshots, and commit practices. Pull requests are reviewed based on criteria such as proper PR template completion, originality of work, code comments for clarity, and sharing screenshots for frontend updates. The repository also participates in various open-source programs like JWOC, GSSoC, Hacktoberfest, KWOC, 24 Pull Requests, IWOC, SWOC, and DWOC, welcoming valuable contributors.

DriveLM

DriveLM is a multimodal AI model that enables autonomous driving by combining computer vision and natural language processing. It is designed to understand and respond to complex driving scenarios using visual and textual information. DriveLM can perform various tasks related to driving, such as object detection, lane keeping, and decision-making. It is trained on a massive dataset of images and text, which allows it to learn the relationships between visual cues and driving actions. DriveLM is a powerful tool that can help to improve the safety and efficiency of autonomous vehicles.

RWKV-Runner

RWKV Runner is a project designed to simplify the usage of large language models by automating various processes. It provides a lightweight executable program and is compatible with the OpenAI API. Users can deploy the backend on a server and use the program as a client. The project offers features like model management, VRAM configurations, user-friendly chat interface, WebUI option, parameter configuration, model conversion tool, download management, LoRA Finetune, and multilingual localization. It can be used for various tasks such as chat, completion, composition, and model inspection.

aimeos

Aimeos is a full-featured e-commerce platform that is ultra-fast, cloud-native, and API-first. It offers a wide range of features including JSON REST API, GraphQL API, multi-vendor support, various product types, subscriptions, multiple payment gateways, admin backend, modular structure, SEO optimization, multi-language support, AI-based text translation, mobile optimization, and high-quality source code. It is highly configurable and extensible, making it suitable for e-commerce SaaS solutions, marketplaces, and various cloud environments. Aimeos is designed for scalability, security, and performance, catering to a diverse range of e-commerce needs.

screenpipe

24/7 Screen & Audio Capture Library to build personalized AI powered by what you've seen, said, or heard. Works with Ollama. Alternative to Rewind.ai. Open. Secure. You own your data. Rust. We are shipping daily, make suggestions, post bugs, give feedback. Building a reliable stream of audio and screenshot data, simplifying life for developers by solving non-trivial problems. Multiple installation options available. Experimental tool with various integrations and features for screen and audio capture, OCR, STT, and more. Open source project focused on enabling tooling & infrastructure for a wide range of applications.

For similar tasks

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub. **Features:**

- ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._

- 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._

- 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

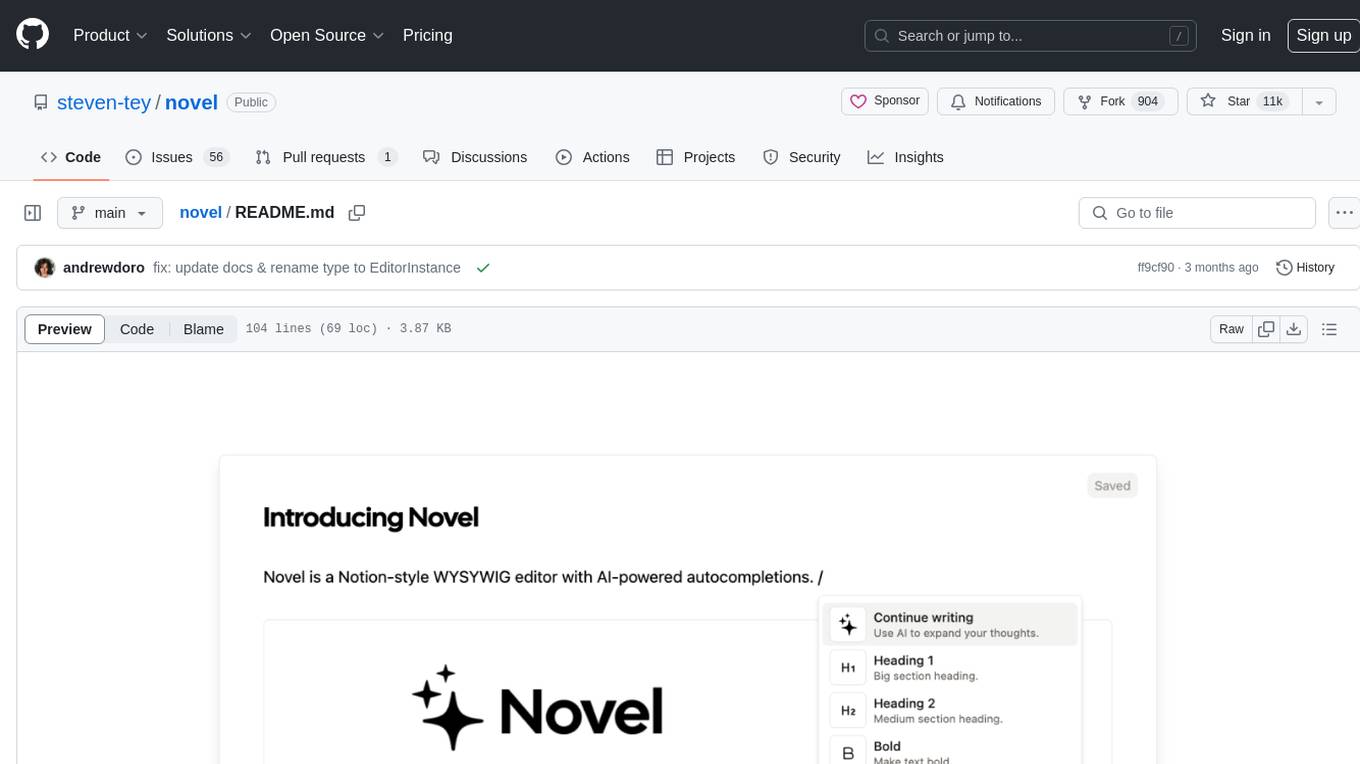

novel

Novel is an open-source Notion-style WYSIWYG editor with AI-powered autocompletions. It allows users to easily create and edit content with the help of AI suggestions. The tool is built on a modern tech stack and supports cross-framework development. Users can deploy their own version of Novel to Vercel with one click and contribute to the project by reporting bugs or making feature enhancements through pull requests.

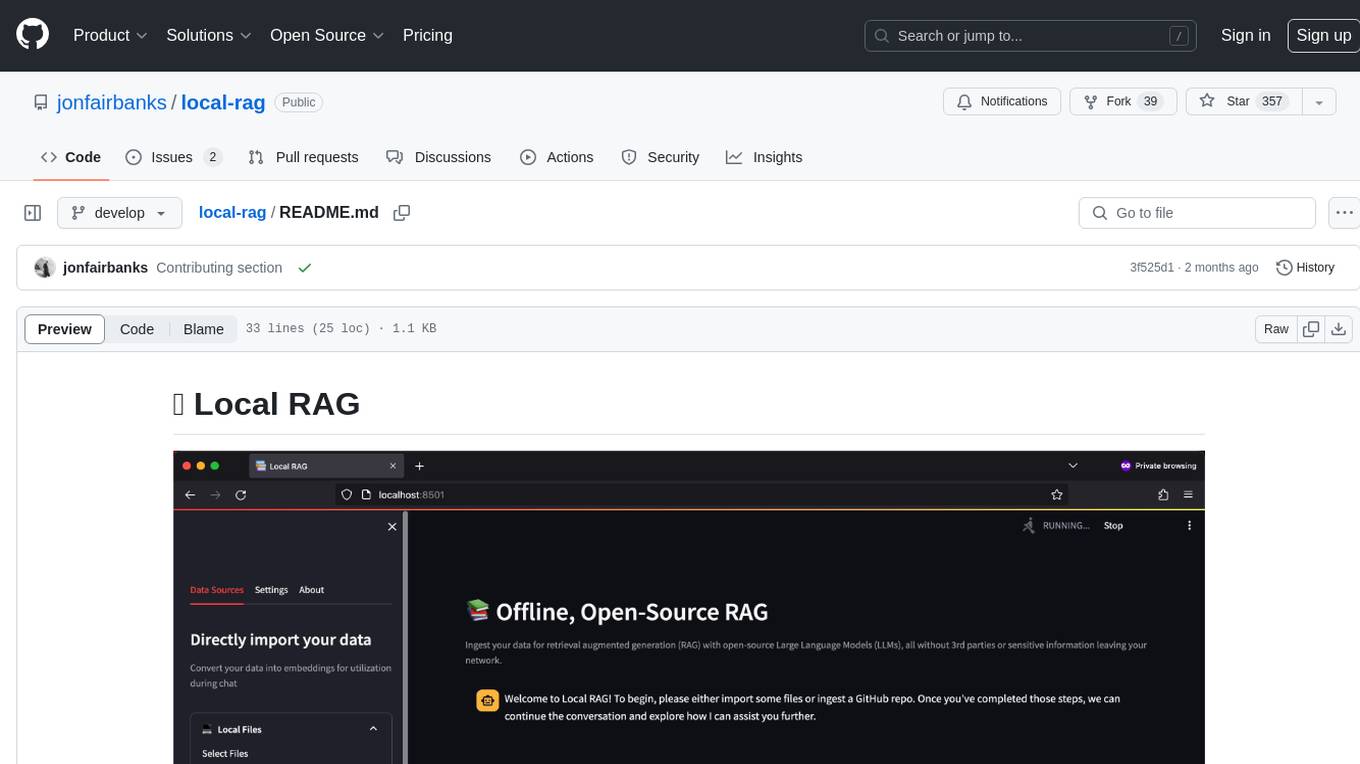

local-rag

Local RAG is an offline, open-source tool that allows users to ingest files for retrieval augmented generation (RAG) using large language models (LLMs) without relying on third parties or exposing sensitive data. It supports offline embeddings and LLMs, multiple sources including local files, GitHub repos, and websites, streaming responses, conversational memory, and chat export. Users can set up and deploy the app, learn how to use Local RAG, explore the RAG pipeline, check planned features, known bugs and issues, access additional resources, and contribute to the project.

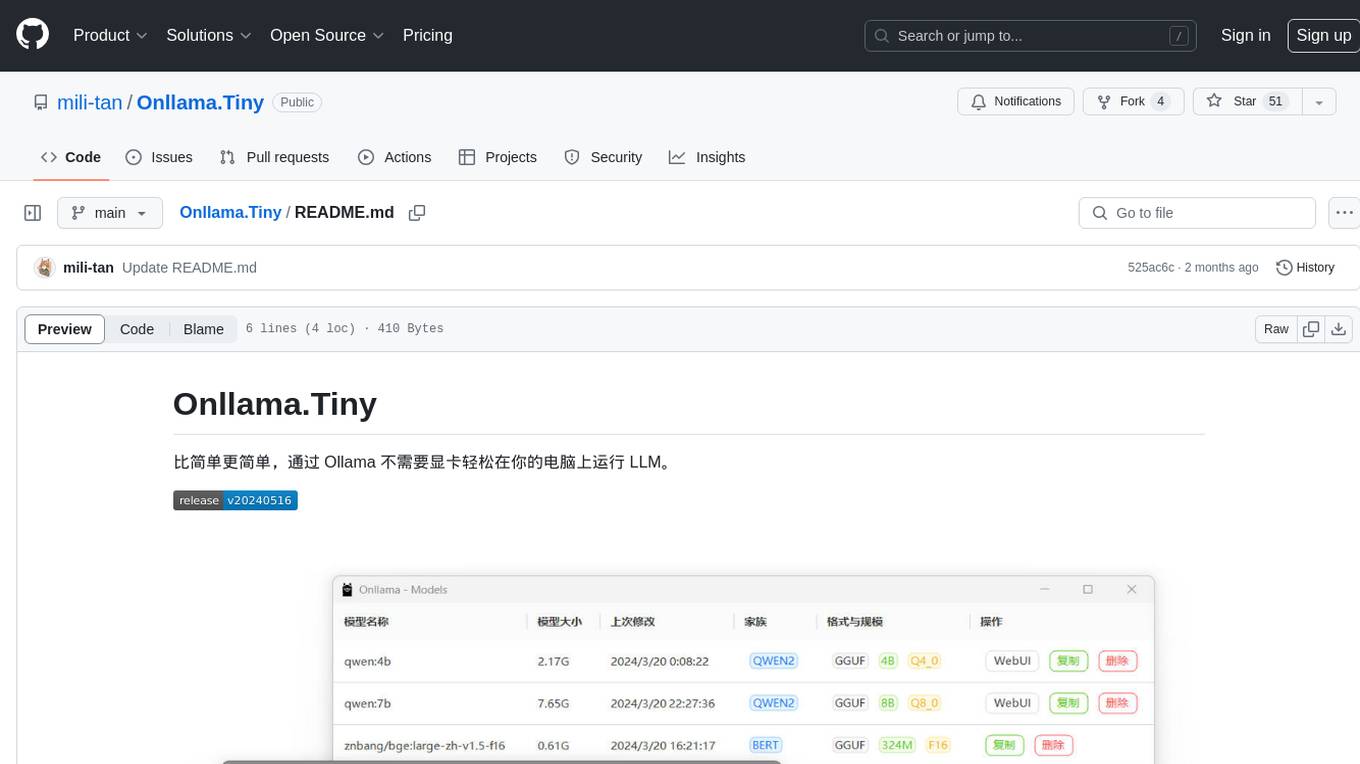

Onllama.Tiny

Onllama.Tiny is a lightweight tool that allows you to easily run LLM on your computer without the need for a dedicated graphics card. It simplifies the process of running LLM, making it more accessible for users. The tool provides a user-friendly interface and streamlines the setup and configuration required to run LLM on your machine. With Onllama.Tiny, users can quickly set up and start using LLM for various applications and projects.

ComfyUI-BRIA_AI-RMBG

ComfyUI-BRIA_AI-RMBG is an unofficial implementation of the BRIA Background Removal v1.4 model for ComfyUI. The tool supports batch processing, including video background removal, and introduces a new mask output feature. Users can install the tool using ComfyUI Manager or manually by cloning the repository. The tool includes nodes for automatically loading the Removal v1.4 model and removing backgrounds. Updates include support for batch processing and the addition of a mask output feature.

enterprise-h2ogpte

Enterprise h2oGPTe - GenAI RAG is a repository containing code examples, notebooks, and benchmarks for the enterprise version of h2oGPTe, a powerful AI tool for generating text based on the RAG (Retrieval-Augmented Generation) architecture. The repository provides resources for leveraging h2oGPTe in enterprise settings, including implementation guides, performance evaluations, and best practices. Users can explore various applications of h2oGPTe in natural language processing tasks, such as text generation, content creation, and conversational AI.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries, and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.