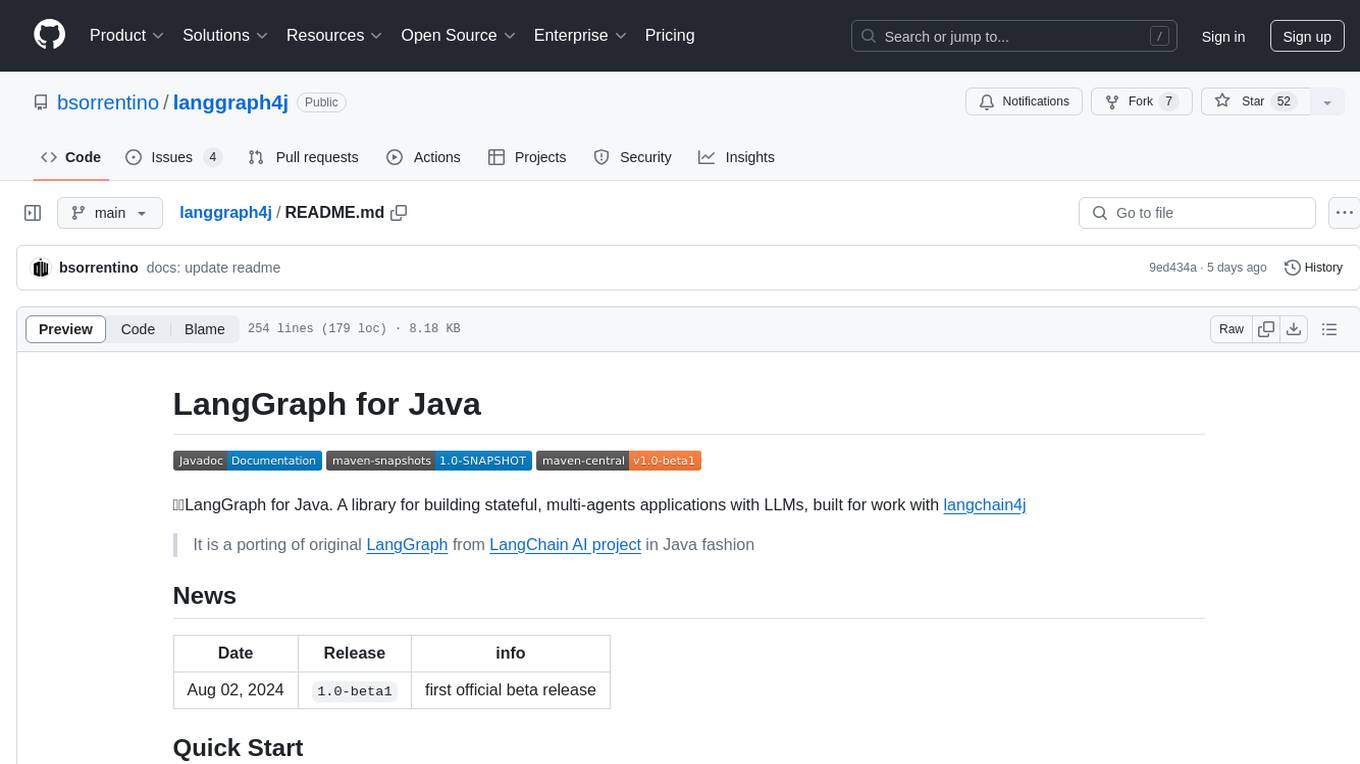

langgraph4j

🚀 LangGraph for Java. A library for building stateful, multi-actor applications with LLMs, built to work with langchain4j

Stars: 365

LangGraph for Java is a library designed for building stateful, multi-agent applications with LLMs. It is a port of the original LangGraph from the LangChain AI project to Java. The library allows users to define agent states, nodes, and edges in a graph structure to create complex workflows. It integrates with LangChain4j and provides tools for executing actions based on agent decisions. LangGraph for Java enables users to create asynchronous node actions, conditional edges, and normal edges to model decision-making processes in applications.

README:

LangGraph for Java. A library for building stateful, multi-agent applications with LLMs, built to work with langchain4j

It is a port of the original LangGraph from the LangChain AI project, written in Java style

- [x] StateGraph
    - [x] Nodes
    - [x] Edges
    - [x] Conditional Edges
    - [x] Entry Points
    - [x] Conditional Entry Points
    - [x] State
        - [x] Schema (a series of Channels)
            - [x] Reducer (how to apply updates to the state attributes)
            - [x] Default provider
            - [x] Appender channel (values accumulator)
                - [x] delete messages
- [x] Compiling graph
- [x] Async support (through CompletableFuture)
- [x] Streaming support (through java-async-generator)
- [x] Checkpoints (save and replay feature)
- [x] Graph visualization
- [x] Playground (Embeddable Webapp that plays with LangGraph4j)
- [x] Threads (checkpointing of multiple different runs)
- [x] Update state (interact with the state directly and update it)
- [x] Breakpoints (pause and resume feature)
- [x] Studio (Playground Webapp)
    - [x] Spring Boot
    - [x] Jetty
    - [x] Quarkus
- [x] Streaming response from LLM results
- [x] Child Graphs
- [x] Parallel Node Execution
    - With some constraints
- [x] Generator (Visual Builder)

Note:

From release 1.2.x the minimum supported Java version is Java 17, and the artifact `langgraph4j-core-jdk8` is replaced by `langgraph4j-core`.

| Date | Release | Info |
|---|---|---|
| Apr 06, 2025 | 1.5.4 | official release |

- How to add persistence ("memory") to your graph

- How to view and update past graph state

- How to parallel branch

- How to wait for user input

- How to sub-graph

- Use Case

| Project | Integrated With |
|---|---|
| Agent Executor | SpringAI |
| Agent Executor | Langchain4j |
| Image To PlantUML Diagram | Langchain4j |
| Adaptive RAG | Langchain4j |

Maven

<dependency>
    <groupId>org.bsc.langgraph4j</groupId>
    <artifactId>langgraph4j-core</artifactId>
    <version>1.5.4</version>
</dependency>

Maven

<dependency>
    <groupId>org.bsc.langgraph4j</groupId>
    <artifactId>langgraph4j-core</artifactId>
    <version>1.5-SNAPSHOT</version>
</dependency>

The main type of graph in langgraph is the StateGraph. This graph is parameterized by a state object that it passes around to each node.

Each node then returns operations to update that state. These operations can either SET specific attributes on the state (i.e. overwrite the existing values) or ADD to the existing attribute.

Whether to set or add is described in the state's schema provided to the graph. The schema is a Map of Channels, where each Channel represents an attribute of the state. If an attribute is described with an AppenderChannel, it will be a List, and each value that a node returns for that attribute is automatically appended by the graph during processing. The state must inherit from the AgentState base class (which is essentially a Map wrapper).
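The set-versus-append behaviour described above can be illustrated with a small stand-alone sketch. It does not use the LangGraph4j API: the class `ChannelMergeSketch`, the `merge` method, and the `appendKeys` parameter are hypothetical names chosen for illustration, and the state is modelled as a plain `Map`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Stand-alone sketch of set-vs-append state updates; not the LangGraph4j API. */
final class ChannelMergeSketch {

    /**
     * Merges a partial update into the current state.
     * Keys listed in appendKeys accumulate values in a List; all other keys are overwritten.
     */
    static Map<String, Object> merge(Map<String, Object> current,
                                     Map<String, Object> update,
                                     Set<String> appendKeys) {
        Map<String, Object> next = new HashMap<>(current);
        for (Map.Entry<String, Object> e : update.entrySet()) {
            if (appendKeys.contains(e.getKey())) {
                // ADD: copy the existing list (if any) and append the new element
                List<Object> values = new ArrayList<>();
                Object existing = next.get(e.getKey());
                if (existing instanceof List<?>) {
                    values.addAll((List<?>) existing);
                }
                values.add(e.getValue());
                next.put(e.getKey(), values);
            } else {
                // SET: overwrite the previous value
                next.put(e.getKey(), e.getValue());
            }
        }
        return next;
    }

    public static void main(String[] args) {
        Set<String> appendKeys = Set.of("messages");
        Map<String, Object> state = new HashMap<>();
        state = merge(state, Map.of("messages", "hello", "status", "running"), appendKeys);
        state = merge(state, Map.of("messages", "world", "status", "done"), appendKeys);
        System.out.println(state.get("messages")); // [hello, world]
        System.out.println(state.get("status"));   // done
    }
}
```

In this sketch the "schema" is reduced to a set of key names that should accumulate; in the library that role is played by the Map of Channels.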

public class AgentState {

    public AgentState( Map<String,Object> initData ) { ... }

    public final java.util.Map<String,Object> data() { ... }

    public final <T> Optional<T> value(String key) { ... }

    public final <T> T value(String key, T defaultValue ) { ... }

    public final <T> T value(String key, Supplier<T> defaultProvider ) { ... }

}

We now need to define a few different nodes in our graph. In langgraph, a node is an async/sync function that accepts an AgentState as an argument and returns a (partial) state update. There are two main nodes we need for this:

- The agent: responsible for deciding what (if any) actions to take.

- A function to invoke tools: if the agent decides to take an action, this node will then execute that action.
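As a rough illustration of a node that returns a partial state update, and of adapting a synchronous node to an asynchronous one, here is a stand-alone sketch. It uses only the JDK: `NodeSketch`, `toAsync`, and the toy `agent` method are hypothetical names, the state is a plain `Map`, and the error handling shown is an assumption rather than a description of how the library's own adapter behaves.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;

/** Stand-alone sketch of sync and async node functions; the names here are hypothetical. */
final class NodeSketch {

    /** Wraps a synchronous node so that it returns its update as a CompletableFuture. */
    static Function<Map<String, Object>, CompletableFuture<Map<String, Object>>> toAsync(
            Function<Map<String, Object>, Map<String, Object>> syncNode) {
        return state -> {
            try {
                return CompletableFuture.completedFuture(syncNode.apply(state));
            } catch (RuntimeException ex) {
                // Surface failures through the future instead of throwing at the call site
                return CompletableFuture.failedFuture(ex);
            }
        };
    }

    /** A toy "agent" node: returns only the attributes it wants to change. */
    static Map<String, Object> agent(Map<String, Object> state) {
        Object input = state.getOrDefault("input", "");
        return Map.of("agent_response", "echo: " + input);
    }

    public static void main(String[] args) {
        var asyncAgent = toAsync(NodeSketch::agent);
        Map<String, Object> update = asyncAgent.apply(Map.of("input", "hi")).join();
        System.out.println(update); // {agent_response=echo: hi}
    }
}
```

The node returns only the keys it changes; merging that update into the full state is left to the graph runtime.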

/**
 * Represents an asynchronous node action that operates on an agent state and returns a state update.
 *
 * @param <S> the type of the agent state
 */
@FunctionalInterface
public interface AsyncNodeAction<S extends AgentState> extends Function<S, CompletableFuture<Map<String, Object>>> {

    CompletableFuture<Map<String, Object>> apply(S t);

    /**
     * Creates an asynchronous node action from a synchronous node action.
     */
    static <S extends AgentState> AsyncNodeAction<S> node_async(NodeAction<S> syncAction) { ... }

}

We will also need to define some edges. Some of these edges may be conditional: based on the output of a node, one of several paths may be taken, and the path that is taken is not known until that node has run (the LLM decides).

- Conditional Edge: after the agent is called, we should either:
    - If the agent said to take an action, then the function to invoke tools should be called
    - If the agent said that it was finished, then it should finish
- Normal Edge: after the tools are invoked, it should always go back to the agent to decide what to do next
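The two kinds of edges listed above can be sketched as a small routing loop. This is a stand-alone illustration, not the LangGraph4j runtime: `RoutingSketch`, `route`, `run`, and the `END` marker value are hypothetical, and the "agent" is a toy that answers after a fixed number of tool calls.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Stand-alone sketch of conditional and normal edges; not the LangGraph4j API. */
final class RoutingSketch {

    static final String END = "__END__"; // hypothetical end marker for this sketch

    /** Conditional edge: picks a route label from the current state. */
    static String route(Map<String, Object> state) {
        return state.containsKey("agent_response") ? "end" : "continue";
    }

    /** Walks agent -> action -> agent ... until the conditional edge resolves to END. */
    static List<String> run(int toolCallsNeeded) {
        Map<String, String> mapping = Map.of("continue", "action", "end", END);
        Map<String, Object> state = new HashMap<>();
        List<String> visited = new ArrayList<>();
        int toolCalls = 0;
        String current = "agent";
        while (!END.equals(current)) {
            visited.add(current);
            if (current.equals("agent")) {
                // Toy agent: answers once enough tool calls have been made
                if (toolCalls >= toolCallsNeeded) {
                    state.put("agent_response", "done after " + toolCalls + " tool call(s)");
                }
                // Conditional edge: the label returned by route() is looked up in the mapping
                current = mapping.get(route(state));
            } else {
                // Normal edge: the tool node always hands control back to the agent
                toolCalls++;
                current = "agent";
            }
        }
        return visited;
    }

    public static void main(String[] args) {
        System.out.println(run(1)); // [agent, action, agent]
    }
}
```

The routing function only returns a label; the mapping from labels to node names is kept separate, which mirrors the label-to-node Map passed alongside the conditional edge.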

/**
 * Represents an asynchronous edge action that operates on an agent state and returns a new route.
 *
 * @param <S> the type of the agent state
 */
public interface AsyncEdgeAction<S extends AgentState> extends Function<S, CompletableFuture<String>> {

    CompletableFuture<String> apply(S t);

    /**
     * Creates an asynchronous edge action from a synchronous edge action.
     */
    static <S extends AgentState> AsyncEdgeAction<S> edge_async(EdgeAction<S> syncAction ) { ... }

}

We can now put it all together and define the graph! (see example below)

Following the default use case proposed in the LangGraph blog, we have converted the AgentExecutor implementation from langchain to LangGraph4j. The agent-executor sample project contains the complete working code with tests. Feel free to check it out and use it as a reference.

Below is an excerpt of the AgentExecutor code to give you an idea of how it is built in langgraph style.

/**
 * Represents the state of an agent.
 */
class State extends MessagesState<ChatMessage> {

    public State(Map<String, Object> initData) {
        super(initData);
    }

    // Present only once the agent has produced its final answer
    public Optional<String> finalResponse() {
        return value("agent_response");
    }
}

var toolNode = ToolNode.builder()
        .toolSpecification( tools )
        .build();

var agent = Agent.builder()
        .chatLanguageModel(chatLanguageModel)
        .tools(toolNode.toolSpecifications())
        .build();

var callAgent = new CallAgent(agent);
var executeTools = new ExecuteTools(agent, toolNode);

// Fluent Interface
var app = new StateGraph<>(State.SCHEMA, State::new)
        .addEdge(START, "agent")
        .addNode("agent", node_async(callAgent))
        .addNode("action", node_async(executeTools))
        .addConditionalEdges(
                "agent",
                // Route to "end" once a final response exists, otherwise continue to the tools
                edge_async( state ->
                        state.finalResponse()
                                .map(res -> "end")
                                .orElse("continue")
                ),
                Map.of("continue", "action", "end", END)
        )
        .addEdge("action", "agent")
        .compile();

return app.stream( inputs );

Alternative AI tools for langgraph4j

Similar Open Source Tools


rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides pytorch and **python-first**, low and high level abstractions for RL that are intended to be **efficient**, **modular**, **documented** and properly **tested**. The code is aimed at supporting research in RL. Most of it is written in python in a highly modular way, such that researchers can easily swap components, transform them or write new ones with little effort.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

Endia

Endia is a dynamic Array library for Scientific Computing, offering automatic differentiation of arbitrary order, complex number support, dual API with PyTorch-like imperative or JAX-like functional interface, and JIT Compilation for speeding up training and inference. It can handle complex valued functions, perform both forward and reverse-mode automatic differentiation, and has a builtin JIT compiler. Endia aims to advance AI & Scientific Computing by pushing boundaries with clear algorithms, providing high-performance open-source code that remains readable and pythonic, and prioritizing clarity and educational value over exhaustive features.

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

UniChat

UniChat is a pipeline tool for creating online and offline chat-bots in Unity. It leverages Unity.Sentis and text vector embedding technology to enable offline mode text content search based on vector databases. The tool includes a chain toolkit for embedding LLM and Agent in games, along with middleware components for Text to Speech, Speech to Text, and Sub-classifier functionalities. UniChat also offers a tool for invoking tools based on ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. The tool provides a comprehensive solution for designing flexible conversations in games while maintaining developer's ideas.

LightRAG

LightRAG is a repository hosting the code for LightRAG, a system that supports seamless integration of custom knowledge graphs, Oracle Database 23ai, Neo4J for storage, and multiple file types. It includes features like entity deletion, batch insert, incremental insert, and graph visualization. LightRAG provides an API server implementation for RESTful API access to RAG operations, allowing users to interact with it through HTTP requests. The repository also includes evaluation scripts, code for reproducing results, and a comprehensive code structure.

MCPSharp

MCPSharp is a .NET library that helps build Model Context Protocol (MCP) servers and clients for AI assistants and models. It allows creating MCP-compliant tools, connecting to existing MCP servers, exposing .NET methods as MCP endpoints, and handling MCP protocol details seamlessly. With features like attribute-based API, JSON-RPC support, parameter validation, and type conversion, MCPSharp simplifies the development of AI capabilities in applications through standardized interfaces.

chatgpt

The ChatGPT R package provides a set of features to assist in R coding. It includes addins like Ask ChatGPT, Comment selected code, Complete selected code, Create unit tests, Create variable name, Document code, Explain selected code, Find issues in the selected code, Optimize selected code, and Refactor selected code. Users can interact with ChatGPT to get code suggestions, explanations, and optimizations. The package helps in improving coding efficiency and quality by providing AI-powered assistance within the RStudio environment.

LarAgent

LarAgent is a framework designed to simplify the creation and management of AI agents within Laravel projects. It offers an Eloquent-like syntax for creating and managing AI agents, Laravel-style artisan commands, flexible agent configuration, structured output handling, image input support, and extensibility. LarAgent supports multiple chat history storage options, custom tool creation, event system for agent interactions, multiple provider support, and can be used both in Laravel and standalone environments. The framework is constantly evolving to enhance developer experience, improve AI capabilities, enhance security and storage features, and enable advanced integrations like provider fallback system, Laravel Actions integration, and voice chat support.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting with, and observing agents. Users can access Letta via REST API, Python and TypeScript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

IntelliNode

IntelliNode is a javascript module that integrates cutting-edge AI models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable diffusion into projects. It offers functions for generating text, speech, and images, as well as semantic search, multi-model evaluation, and chatbot capabilities. The module provides a wrapper layer for low-level model access, a controller layer for unified input handling, and a function layer for abstract functionality tailored to various use cases.

volga

Volga is a general purpose real-time data processing engine in Python for modern AI/ML systems. It aims to be a Python-native alternative to Flink/Spark Streaming with extended functionality for real-time AI/ML workloads. It provides a hybrid push+pull architecture, Entity API for defining data entities and feature pipelines, DataStream API for general data processing, and customizable data connectors. Volga can run on a laptop or a distributed cluster, making it suitable for building custom real-time AI/ML feature platforms or general data pipelines without relying on third-party platforms.

continuous-eval

Open-Source Evaluation for LLM Applications. `continuous-eval` is an open-source package created for granular and holistic evaluation of GenAI application pipelines. It offers modularized evaluation, a comprehensive metric library covering various LLM use cases, the ability to leverage user feedback in evaluation, and synthetic dataset generation for testing pipelines. Users can define their own metrics by extending the Metric class. The tool allows running evaluation on a pipeline defined with modules and corresponding metrics. Additionally, it provides synthetic data generation capabilities to create user interaction data for evaluation or training purposes.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

sre

SmythOS is an operating system designed for building, deploying, and managing intelligent AI agents at scale. It provides a unified SDK and resource abstraction layer for various AI services, making it easy to scale and flexible. With an agent-first design, developer-friendly SDK, modular architecture, and enterprise security features, SmythOS offers a robust foundation for AI workloads. The system is built with a philosophy inspired by traditional operating system kernels, ensuring autonomy, control, and security for AI agents. SmythOS aims to make shipping production-ready AI agents accessible and open for everyone in the coming Internet of Agents era.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.