dom-to-semantic-markdown

DOM to Semantic-Markdown for use with LLMs

Stars: 708

DOM to Semantic Markdown is a tool that converts HTML DOM to Semantic Markdown for use in Large Language Models (LLMs). It maximizes semantic information and token efficiency, and it preserves metadata to enhance LLMs' processing capabilities. The tool captures rich web content structure, including semantic tags, image metadata, table structures, and link destinations. It offers customizable conversion options and supports both browser and Node.js environments.

README:

This library converts HTML DOM to a semantic Markdown format optimized for use with Large Language Models (LLMs). It preserves the semantic structure of web content, extracts essential metadata, and reduces token usage compared to raw HTML, making it easier for LLMs to understand and process information.

- Semantic Structure Preservation: Retains the meaning of HTML elements like `<header>`, `<footer>`, `<nav>`, and more.

- Metadata Extraction: Captures important metadata such as title, description, keywords, Open Graph tags, Twitter Card tags, and JSON-LD data.

- Token Efficiency: Optimizes for token usage through URL refification and concise representation of content.

- Main Content Detection: Automatically identifies and extracts the primary content section of a webpage.

- Table Column Tracking: Adds unique identifiers to table columns, improving an LLM's ability to correlate data across rows.
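To make the table column tracking idea concrete, here is a minimal sketch of how column identifiers could be attached to each cell. The `<!-- col-N -->` comment format follows the CLI output shown in this README for the `-t` flag; the helper `renderTrackedTable` and its signature are hypothetical and are not the library's implementation.

```typescript
// Hypothetical helper (not part of dom-to-semantic-markdown): render a
// Markdown table where every cell carries a `<!-- col-N -->` comment.
function renderTrackedTable(header: string[], rows: string[][]): string {
  // Tag one cell with its zero-based column index; empty cells keep only the tag.
  const tag = (cell: string, index: number): string =>
    cell === "" ? `<!-- col-${index} -->` : `${cell} <!-- col-${index} -->`;
  const renderRow = (cells: string[]): string =>
    `| ${cells.map(tag).join(" | ")} |`;
  const separator = `| ${header.map(() => "---").join(" | ")} |`;
  return [renderRow(header), separator, ...rows.map(renderRow)].join("\n");
}

const trackedTable = renderTrackedTable(
  ["Operation", "Latency"],
  [
    ["L1 cache reference", "0.5 ns"],
    ["Branch mispredict", "5 ns"],
  ],
);
console.log(trackedTable);
```

Because the same `col-N` tag appears in the header and in every row, a model reading a long row can still tell which header a value belongs to.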

Here are examples showcasing the library's special features using the CLI tool:

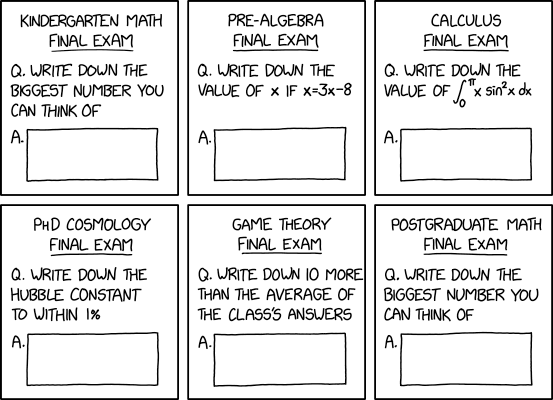

1. Simple Content Extraction:

`npx d2m@latest -u https://xkcd.com`

This command fetches https://xkcd.com and converts it to Markdown.

Output:

- [Archive](/archive)

- [What If?](https://what-if.xkcd.com/)

- [About](/about)

- [Feed](/atom.xml) • [Email](/newsletter/)

- [TW](https://twitter.com/xkcd/) • [FB](https://www.facebook.com/TheXKCD/)

• [IG](https://www.instagram.com/xkcd/)

- [-Books-](/books/)

- [What If? 2](/what-if-2/)

- [WI?](/what-if/) • [TE](/thing-explainer/) • [HT](/how-to/)

<a href="/"></a> A webcomic of romance,

sarcasm, math, and language. [Special 10th anniversary edition of WHAT IF?](https://xkcd.com/what-if/) —revised and

annotated with brand-new illustrations and answers to important questions you never thought to ask—coming from

November 2024. Preorder [here](https://bit.ly/WhatIf10th) ! Exam Numbers

- [|<](/1/)

- [< Prev](/2965/)

- [Random](//c.xkcd.com/random/comic/)

- [Next >](about:blank#)

- [>|](/)

- [|<](/1/)

- [< Prev](/2965/)

- [Random](//c.xkcd.com/random/comic/)

- [Next >](about:blank#)

- [>|](/)

Permanent link to this comic: [https://xkcd.com/2966/](https://xkcd.com/2966)

Image URL (for

hotlinking/embedding): [https://imgs.xkcd.com/comics/exam_numbers.png](https://imgs.xkcd.com/comics/exam_numbers.png)

<a href="//xkcd.com/1732/"></a>

[RSS Feed](/rss.xml) - [Atom Feed](/atom.xml) - [Email](/newsletter/)

Comics I enjoy:

[Three Word Phrase](http://threewordphrase.com/) , [SMBC](https://www.smbc-comics.com/) , [Dinosaur Comics](https://www.qwantz.com/) , [Oglaf](https://oglaf.com/) (

nsfw), [A Softer World](https://www.asofterworld.com/) , [Buttersafe](https://buttersafe.com/) , [Perry Bible Fellowship](https://pbfcomics.com/) , [Questionable Content](https://questionablecontent.net/) , [Buttercup Festival](http://www.buttercupfestival.com/) , [Homestuck](https://www.homestuck.com/) , [Junior Scientist Power Hour](https://www.jspowerhour.com/)

Other things:

[Tips on technology and government](https://medium.com/civic-tech-thoughts-from-joshdata/so-you-want-to-reform-democracy-7f3b1ef10597) ,

[Climate FAQ](https://www.nytimes.com/interactive/2017/climate/what-is-climate-change.html) , [Katharine Hayhoe](https://twitter.com/KHayhoe)

xkcd.com is best viewed with Netscape Navigator 4.0 or below on a Pentium 3±1 emulated in Javascript on an Apple IIGS

at a screen resolution of 1024x1. Please enable your ad blockers, disable high-heat drying, and remove your device

from Airplane Mode and set it to Boat Mode. For security reasons, please leave caps lock on while browsing. This work is

licensed under

a [Creative Commons Attribution-NonCommercial 2.5 License](https://creativecommons.org/licenses/by-nc/2.5/).

This means you're free to copy and share these comics (but not to sell them). [More details](/license.html).

2. Table Column Tracking:

`npx d2m@latest -u https://softwareyoga.com/latency-numbers-everyone-should-know/ -t -e`

This command fetches the page, converts its main content to Markdown, and adds unique identifiers to table columns, which helps LLMs understand the table structure.

Output:

# Latency Numbers Everyone Should Know

## Latency

In a computer network, latency is defined as the amount of time it takes for a packet of data to get from one designated

point to another.

In more general terms, it is the amount of time between the cause and the observation of the effect.

As you would expect, latency is important, very important. As programmers, we all know reading from disk takes longer

than reading from memory or the fact that L1 cache is faster than the L2 cache.

But do you know the orders of magnitude by which these aspects are faster/slower compared to others?

## Latency for common operations

Jeff Dean from Google studied exactly that and came up with figures for latency in various situations.

With improving hardware, the latency at the higher ends of the spectrum are reducing, but not enough to ignore them

completely! For instance, to read 1MB sequentially from disk might have taken 20,000,000 ns a decade earlier and with

the advent of SSDs may probably take 1,000,000 ns today. But it is never going to surpass reading directly from memory.

The table below presents the latency for the most common operations on commodity hardware. These data are only

approximations and will vary with the hardware and the execution environment of your code. However, they do serve their

primary purpose, which is to enable us make informed technical decisions to reduce latency.

For better comprehension of  the multi-fold increase in latency, scaled figures in relation to L2 cache are also

provided by assuming that the L1 cache reference is 1 sec.

**Scroll horizontally on the table in smaller screens**

| Operation <!-- col-0 --> | Note <!-- col-1 --> | Latency <!-- col-2 --> | Scaled Latency <!-- col-3 --> |
| --- | --- | --- | --- |
| L1 cache reference <!-- col-0 --> | Level-1 cache, usually built onto the microprocessor chip itself. <!-- col-1 --> | 0.5 ns <!-- col-2 --> | Consider L1 cache reference duration is 1 sec <!-- col-3 --> |
| Branch mispredict <!-- col-0 --> | During the execution of a program, CPU predicts the next set of instructions. Branch misprediction is when it makes the wrong prediction. Hence, the previous prediction has to be erased and new one calculated and placed on the execution stack. <!-- col-1 --> | 5 ns <!-- col-2 --> | 10 s <!-- col-3 --> |
| L2 cache reference <!-- col-0 --> | Level-2 cache is memory built on a separate chip. <!-- col-1 --> | 7 ns <!-- col-2 --> | 14 s <!-- col-3 --> |
| Mutex lock/unlock <!-- col-0 --> | Simple synchronization method used to ensure exclusive access to resources shared between many threads. <!-- col-1 --> | 25 ns <!-- col-2 --> | 50 s <!-- col-3 --> |
| Main memory reference <!-- col-0 --> | Time to reference main memory i.e. RAM. <!-- col-1 --> | 100 ns <!-- col-2 --> | 3m 20s <!-- col-3 --> |
| Compress 1K bytes with Snappy <!-- col-0 --> | Snappy is a fast data compression and decompression library written in C++ by Google and used in many Google projects like BigTable, MapReduce and other open source projects. <!-- col-1 --> | 3,000 ns <!-- col-2 --> | 1h 40 m <!-- col-3 --> |
| Send 1K bytes over 1 Gbps network <!-- col-0 --> | <!-- col-1 --> | 10,000 ns <!-- col-2 --> | 5h 33m 20s <!-- col-3 --> |
| Read 1 MB sequentially from memory <!-- col-0 --> | Read from RAM. <!-- col-1 --> | 250,000 ns <!-- col-2 --> | 5d 18h 53m 20s <!-- col-3 --> |
| Round trip within same datacenter <!-- col-0 --> | We can assume that the DNS lookup will be much faster within a datacenter than it is to go over an external router. <!-- col-1 --> | 500,000 ns <!-- col-2 --> | 11d 13h 46m 40s <!-- col-3 --> |
| Read 1 MB sequentially from SSD disk <!-- col-0 --> | Assumes SSD disk. SSD boasts random data access times of 100000 ns or less. <!-- col-1 --> | 1,000,000 ns <!-- col-2 --> | 23d 3h 33m 20s <!-- col-3 --> |
| Disk seek <!-- col-0 --> | Disk seek is method to get to the sector and head in the disk where the required data exists. <!-- col-1 --> | 10,000,000 ns <!-- col-2 --> | 231d 11h 33m 20s <!-- col-3 --> |
| Read 1 MB sequentially from disk <!-- col-0 --> | Assumes regular disk, not SSD. Check the difference in comparison to SSD! <!-- col-1 --> | 20,000,000 ns <!-- col-2 --> | 462d 23h 6m 40s <!-- col-3 --> |
| Send packet CA->Netherlands->CA <!-- col-0 --> | Round trip for packet data from U.S.A to Europe and back. <!-- col-1 --> | 150,000,000 ns <!-- col-2 --> | 3472d 5h 20m <!-- col-3 --> |

### References:

1. [Designs, Lessons and Advice from Building Large Distributed Systems](http://www.cs.cornell.edu/projects/ladis2009/talks/dean-keynote-ladis2009.pdf)

2. [Peter Norvig’s post on – Teach Yourself Programming in Ten Years](http://norvig.com/21-days.html#answers)3. Metadata Extraction (Basic):

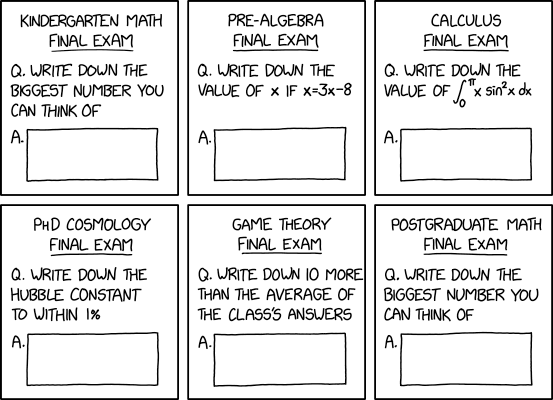

npx d2m@latest -u https://xkcd.com -meta basicThis command extracts basic metadata (title, description, keywords) and includes it in the Markdown output.

Click to view the output

---

title: "xkcd: Exam Numbers"

---

- [Archive](/archive)

- [What If?](https://what-if.xkcd.com/)

- [About](/about)

- [Feed](/atom.xml) • [Email](/newsletter/)

- [TW](https://twitter.com/xkcd/) • [FB](https://www.facebook.com/TheXKCD/)

• [IG](https://www.instagram.com/xkcd/)

- [-Books-](/books/)

- [What If? 2](/what-if-2/)

- [WI?](/what-if/) • [TE](/thing-explainer/) • [HT](/how-to/)

<a href="/"></a> A webcomic of romance,

sarcasm, math, and language. [Special 10th anniversary edition of WHAT IF?](https://xkcd.com/what-if/) —revised and

annotated with brand-new illustrations and answers to important questions you never thought to ask—coming from

November 2024. Preorder [here](https://bit.ly/WhatIf10th) ! Exam Numbers

- [|<](/1/)

- [< Prev](/2965/)

- [Random](//c.xkcd.com/random/comic/)

- [Next >](about:blank#)

- [>|](/)

- [|<](/1/)

- [< Prev](/2965/)

- [Random](//c.xkcd.com/random/comic/)

- [Next >](about:blank#)

- [>|](/)

Permanent link to this comic: [https://xkcd.com/2966/](https://xkcd.com/2966)

Image URL (for

hotlinking/embedding): [https://imgs.xkcd.com/comics/exam_numbers.png](https://imgs.xkcd.com/comics/exam_numbers.png)

<a href="//xkcd.com/1732/"></a>

[RSS Feed](/rss.xml) - [Atom Feed](/atom.xml) - [Email](/newsletter/)

Comics I enjoy:

[Three Word Phrase](http://threewordphrase.com/) , [SMBC](https://www.smbc-comics.com/) , [Dinosaur Comics](https://www.qwantz.com/) , [Oglaf](https://oglaf.com/) (

nsfw), [A Softer World](https://www.asofterworld.com/) , [Buttersafe](https://buttersafe.com/) , [Perry Bible Fellowship](https://pbfcomics.com/) , [Questionable Content](https://questionablecontent.net/) , [Buttercup Festival](http://www.buttercupfestival.com/) , [Homestuck](https://www.homestuck.com/) , [Junior Scientist Power Hour](https://www.jspowerhour.com/)

Other things:

[Tips on technology and government](https://medium.com/civic-tech-thoughts-from-joshdata/so-you-want-to-reform-democracy-7f3b1ef10597) ,

[Climate FAQ](https://www.nytimes.com/interactive/2017/climate/what-is-climate-change.html) , [Katharine Hayhoe](https://twitter.com/KHayhoe)

xkcd.com is best viewed with Netscape Navigator 4.0 or below on a Pentium 3±1 emulated in Javascript on an Apple IIGS

at a screen resolution of 1024x1. Please enable your ad blockers, disable high-heat drying, and remove your device

from Airplane Mode and set it to Boat Mode. For security reasons, please leave caps lock on while browsing. This work is

licensed under

a [Creative Commons Attribution-NonCommercial 2.5 License](https://creativecommons.org/licenses/by-nc/2.5/).

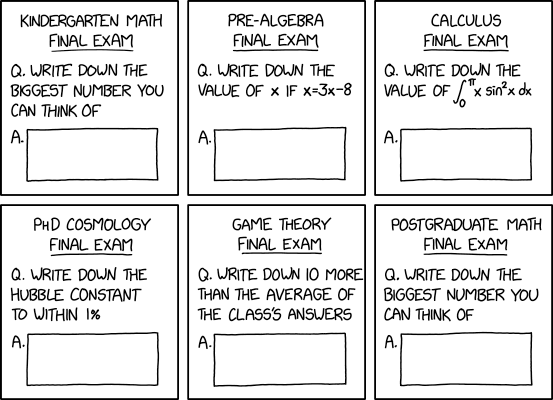

This means you're free to copy and share these comics (but not to sell them). [More details](/license.html).4. Metadata Extraction (Extended):

npx d2m@latest -u https://xkcd.com -meta extendedThis command extracts extended metadata, including Open Graph, Twitter Card tags, and JSON-LD data, and includes it in the Markdown output.

Click to view the output

---
title: "xkcd: Exam Numbers"
openGraph:
  site_name: "xkcd"
  title: "Exam Numbers"
  url: "https://xkcd.com/2966/"
  image: "https://imgs.xkcd.com/comics/exam_numbers_2x.png"
twitter:
  card: "summary_large_image"
---

- [Archive](/archive)

- [What If?](https://what-if.xkcd.com/)

- [About](/about)

- [Feed](/atom.xml) • [Email](/newsletter/)

- [TW](https://twitter.com/xkcd/) • [FB](https://www.facebook.com/TheXKCD/)

• [IG](https://www.instagram.com/xkcd/)

- [-Books-](/books/)

- [What If? 2](/what-if-2/)

- [WI?](/what-if/) • [TE](/thing-explainer/) • [HT](/how-to/)

<a href="/"></a> A webcomic of romance,

sarcasm, math, and language. [Special 10th anniversary edition of WHAT IF?](https://xkcd.com/what-if/) —revised and

annotated with brand-new illustrations and answers to important questions you never thought to ask—coming from

November 2024. Preorder [here](https://bit.ly/WhatIf10th) ! Exam Numbers

- [|<](/1/)

- [< Prev](/2965/)

- [Random](//c.xkcd.com/random/comic/)

- [Next >](about:blank#)

- [>|](/)

- [|<](/1/)

- [< Prev](/2965/)

- [Random](//c.xkcd.com/random/comic/)

- [Next >](about:blank#)

- [>|](/)

Permanent link to this comic: [https://xkcd.com/2966/](https://xkcd.com/2966)

Image URL (for

hotlinking/embedding): [https://imgs.xkcd.com/comics/exam_numbers.png](https://imgs.xkcd.com/comics/exam_numbers.png)

<a href="//xkcd.com/1732/"></a>

[RSS Feed](/rss.xml) - [Atom Feed](/atom.xml) - [Email](/newsletter/)

Comics I enjoy:

[Three Word Phrase](http://threewordphrase.com/) , [SMBC](https://www.smbc-comics.com/) , [Dinosaur Comics](https://www.qwantz.com/) , [Oglaf](https://oglaf.com/) (

nsfw), [A Softer World](https://www.asofterworld.com/) , [Buttersafe](https://buttersafe.com/) , [Perry Bible Fellowship](https://pbfcomics.com/) , [Questionable Content](https://questionablecontent.net/) , [Buttercup Festival](http://www.buttercupfestival.com/) , [Homestuck](https://www.homestuck.com/) , [Junior Scientist Power Hour](https://www.jspowerhour.com/)

Other things:

[Tips on technology and government](https://medium.com/civic-tech-thoughts-from-joshdata/so-you-want-to-reform-democracy-7f3b1ef10597) ,

[Climate FAQ](https://www.nytimes.com/interactive/2017/climate/what-is-climate-change.html) , [Katharine Hayhoe](https://twitter.com/KHayhoe)

xkcd.com is best viewed with Netscape Navigator 4.0 or below on a Pentium 3±1 emulated in Javascript on an Apple IIGS

at a screen resolution of 1024x1. Please enable your ad blockers, disable high-heat drying, and remove your device

from Airplane Mode and set it to Boat Mode. For security reasons, please leave caps lock on while browsing. This work is

licensed under

a [Creative Commons Attribution-NonCommercial 2.5 License](https://creativecommons.org/licenses/by-nc/2.5/).

This means you're free to copy and share these comics (but not to sell them). [More details](/license.html).

Install the package with npm:

`npm install dom-to-semantic-markdown`

CLI help (`npx d2m@latest -h`):

Usage: d2m [options]

Convert DOM to Semantic Markdown

Options:

- `-V, --version`: output the version number

- `-i, --input <file>`: Input HTML file

- `-o, --output <file>`: Output Markdown file

- `-e, --extract-main`: Extract main content

- `-u, --url <url>`: URL to fetch HTML content from

- `-t, --track-table-columns`: Enable table column tracking for improved LLM data correlation

- `-meta, --include-meta-data <"basic" | "extended">`: Include metadata extracted from the HTML head

- `-h, --help`: display help for command

Browser usage:

import {convertHtmlToMarkdown} from 'dom-to-semantic-markdown';

const markdown = convertHtmlToMarkdown(document.body);

console.log(markdown);

Node.js usage (with jsdom):

import {convertHtmlToMarkdown} from 'dom-to-semantic-markdown';

import {JSDOM} from 'jsdom';

const html = '<h1>Hello, World!</h1><p>This is a <strong>test</strong>.</p>';

const dom = new JSDOM(html);

const markdown = convertHtmlToMarkdown(html, {overrideDOMParser: new dom.window.DOMParser()});

console.log(markdown);

CLI examples:

d2m -i input.html -o output.md # Convert input.html to output.md

d2m -u https://example.com -o output.md # Fetch and convert a webpage to Markdown

d2m -i input.html -e # Extract main content from input.html

d2m -i input.html -t # Enable table column tracking

d2m -i input.html -meta basic # Include basic metadata

d2m -i input.html -meta extended # Include extended metadata

Converts an HTML string to semantic Markdown.

Converts an HTML Element to semantic Markdown.

- `websiteDomain?: string`: The domain of the website being converted.

- `extractMainContent?: boolean`: Whether to extract only the main content of the page.

- `refifyUrls?: boolean`: Whether to convert URLs to reference-style links.

- `debug?: boolean`: Enable debug logging.

- `overrideDOMParser?: DOMParser`: Custom DOMParser for Node.js environments.

- `enableTableColumnTracking?: boolean`: Adds unique identifiers to table columns.

- `overrideElementProcessing?: (element: Element, options: ConversionOptions, indentLevel: number) => SemanticMarkdownAST[] | undefined`: Custom processing for HTML elements.

- `processUnhandledElement?: (element: Element, options: ConversionOptions, indentLevel: number) => SemanticMarkdownAST[] | undefined`: Handler for unknown HTML elements.

- `overrideNodeRenderer?: (node: SemanticMarkdownAST, options: ConversionOptions, indentLevel: number) => string | undefined`: Custom renderer for AST nodes.

- `renderCustomNode?: (node: CustomNode, options: ConversionOptions, indentLevel: number) => string | undefined`: Renderer for custom AST nodes.

- `includeMetaData?: 'basic' | 'extended'`: Controls whether to include metadata extracted from the HTML head.

  - `'basic'`: Includes standard meta tags like title, description, and keywords.

  - `'extended'`: Includes basic meta tags, Open Graph tags, Twitter Card tags, and JSON-LD data.
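Several of these options appear to have a CLI counterpart (`-e`, `-t`, `-meta`). The sketch below shows one way such flags could be translated into an options object. The pairing of flags to fields is inferred from their matching names and is not confirmed by the library source; `ConversionOptionsSketch` and `flagsToOptions` are hypothetical names defined only for this example.

```typescript
// Local mirror of three documented option fields, so the example is
// self-contained. This is not the library's own ConversionOptions type.
interface ConversionOptionsSketch {
  extractMainContent?: boolean;
  enableTableColumnTracking?: boolean;
  includeMetaData?: "basic" | "extended";
}

// Hypothetical helper: translate d2m-style flags into an options object.
// Assumption: -e, -t and -meta correspond to the fields set below.
function flagsToOptions(argv: string[]): ConversionOptionsSketch {
  const result: ConversionOptionsSketch = {};
  for (let i = 0; i < argv.length; i++) {
    const arg = argv[i];
    if (arg === "-e" || arg === "--extract-main") {
      result.extractMainContent = true;
    } else if (arg === "-t" || arg === "--track-table-columns") {
      result.enableTableColumnTracking = true;
    } else if (arg === "-meta" || arg === "--include-meta-data") {
      const value = argv[i + 1];
      if (value === "basic" || value === "extended") {
        result.includeMetaData = value;
        i++; // skip the value that was just consumed
      }
    }
  }
  return result;
}

const parsedOptions = flagsToOptions(["-u", "https://xkcd.com", "-t", "-meta", "extended"]);
console.log(parsedOptions);
```

Flags that the sketch does not recognize, such as `-u` and its URL, are simply skipped; a real CLI would handle them separately.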


The semantic Markdown produced by this library is optimized for use with Large Language Models (LLMs). To use it effectively:

- Extract the Markdown content using the library.

- Start with a brief instruction or context for the LLM.

- Wrap the extracted Markdown in triple backticks (```).

- Follow the Markdown with your question or prompt.

Example:

The following is a semantic Markdown representation of a webpage. Please analyze its content:

```markdown

{paste your extracted markdown here}

```

{your question, e.g., "What are the main points discussed in this article?"}

This format helps the LLM understand its task and the context of the content, enabling more accurate and relevant responses to your questions.
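The prompt layout described above can be assembled programmatically. The following is a minimal sketch: `buildLlmPrompt` is a hypothetical helper that is not part of the library, and the Markdown passed to it is a hand-written placeholder rather than real converter output.

```typescript
// Hypothetical helper: place the instruction first, then the extracted
// Markdown wrapped in a fenced block, then the question.
function buildLlmPrompt(instruction: string, markdown: string, question: string): string {
  // Build the triple-backtick fence at runtime so this example nests cleanly.
  const fence = ["`", "`", "`"].join("");
  return [instruction, "", `${fence}markdown`, markdown, fence, "", question].join("\n");
}

const llmPrompt = buildLlmPrompt(
  "The following is a semantic Markdown representation of a webpage. Please analyze its content:",
  "# Hello, World!\n\nThis is a **test**.", // placeholder Markdown
  "What are the main points discussed in this article?",
);
console.log(llmPrompt);
```

In practice the second argument would be the string returned by `convertHtmlToMarkdown`.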

Contributions are welcome! See the CONTRIBUTING.md file for details.

This project is licensed under the MIT License. See the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for dom-to-semantic-markdown

Similar Open Source Tools

dom-to-semantic-markdown

DOM to Semantic Markdown is a tool that converts HTML DOM to Semantic Markdown for use in Large Language Models (LLMs). It maximizes semantic information, token efficiency, and preserves metadata to enhance LLMs' processing capabilities. The tool captures rich web content structure, including semantic tags, image metadata, table structures, and link destinations. It offers customizable conversion options and supports both browser and Node.js environments.

superlinked

Superlinked is a compute framework for information retrieval and feature engineering systems, focusing on converting complex data into vector embeddings for RAG, Search, RecSys, and Analytics stack integration. It enables custom model performance in machine learning with pre-trained model convenience. The tool allows users to build multimodal vectors, define weights at query time, and avoid postprocessing & rerank requirements. Users can explore the computational model through simple scripts and python notebooks, with a future release planned for production usage with built-in data infra and vector database integrations.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

pytorch-grad-cam

This repository provides advanced AI explainability for PyTorch, offering state-of-the-art methods for Explainable AI in computer vision. It includes a comprehensive collection of Pixel Attribution methods for various tasks like Classification, Object Detection, Semantic Segmentation, and more. The package supports high performance with full batch image support and includes metrics for evaluating and tuning explanations. Users can visualize and interpret model predictions, making it suitable for both production and model development scenarios.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides pytorch and **python-first** , low and high level abstractions for RL that are intended to be **efficient** , **modular** , **documented** and properly **tested**. The code is aimed at supporting research in RL. Most of it is written in python in a highly modular way, such that researchers can easily swap components, transform them or write new ones with little effort.

simple-openai

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

CrackSQL

CrackSQL is a powerful SQL dialect translation tool that integrates rule-based strategies with large language models (LLMs) for high accuracy. It enables seamless conversion between dialects (e.g., PostgreSQL → MySQL) with flexible access through Python API, command line, and web interface. The tool supports extensive dialect compatibility, precision & advanced processing, and versatile access & integration. It offers three modes for dialect translation and demonstrates high translation accuracy over collected benchmarks. Users can deploy CrackSQL using PyPI package installation or source code installation methods. The tool can be extended to support additional syntax, new dialects, and improve translation efficiency. The project is actively maintained and welcomes contributions from the community.

LLMRec

LLMRec is a PyTorch implementation for the WSDM 2024 paper 'Large Language Models with Graph Augmentation for Recommendation'. It is a novel framework that enhances recommenders by applying LLM-based graph augmentation strategies to recommendation systems. The tool aims to make the most of content within online platforms to augment interaction graphs by reinforcing u-i interactive edges, enhancing item node attributes, and conducting user node profiling from a natural language perspective.

Scrapegraph-ai

ScrapeGraphAI is a web scraping Python library that utilizes LLM and direct graph logic to create scraping pipelines for websites and local documents. It offers various standard scraping pipelines like SmartScraperGraph, SearchGraph, SpeechGraph, and ScriptCreatorGraph. Users can extract information by specifying prompts and input sources. The library supports different LLM APIs such as OpenAI, Groq, Azure, and Gemini, as well as local models using Ollama. ScrapeGraphAI is designed for data exploration and research purposes, providing a versatile tool for extracting information from web pages and generating outputs like Python scripts, audio summaries, and search results.

continuous-eval

Open-Source Evaluation for LLM Applications. `continuous-eval` is an open-source package created for granular and holistic evaluation of GenAI application pipelines. It offers modularized evaluation, a comprehensive metric library covering various LLM use cases, the ability to leverage user feedback in evaluation, and synthetic dataset generation for testing pipelines. Users can define their own metrics by extending the Metric class. The tool allows running evaluation on a pipeline defined with modules and corresponding metrics. Additionally, it provides synthetic data generation capabilities to create user interaction data for evaluation or training purposes.

LLM4Decompile

LLM4Decompile is an open-source large language model dedicated to decompilation of Linux x86_64 binaries, supporting GCC's O0 to O3 optimization levels. It focuses on assessing re-executability of decompiled code through HumanEval-Decompile benchmark. The tool includes models with sizes ranging from 1.3 billion to 33 billion parameters, available on Hugging Face. Users can preprocess C code into binary and assembly instructions, then decompile assembly instructions into C using LLM4Decompile. Ongoing efforts aim to expand capabilities to support more architectures and configurations, integrate with decompilation tools like Ghidra and Rizin, and enhance performance with larger training datasets.

raid

RAID is the largest and most comprehensive dataset for evaluating AI-generated text detectors. It contains over 10 million documents spanning 11 LLMs, 11 genres, 4 decoding strategies, and 12 adversarial attacks. RAID is designed to be the go-to location for trustworthy third-party evaluation of popular detectors. The dataset covers diverse models, domains, sampling strategies, and attacks, making it a valuable resource for training detectors, evaluating generalization, protecting against adversaries, and comparing to state-of-the-art models from academia and industry.

WordLlama

WordLlama is a fast, lightweight NLP toolkit optimized for CPU hardware. It recycles components from large language models to create efficient word representations. It offers features like Matryoshka Representations, low resource requirements, binarization, and numpy-only inference. The tool is suitable for tasks like semantic matching, fuzzy deduplication, ranking, and clustering, making it a good option for NLP-lite tasks and exploratory analysis.

For similar tasks

dom-to-semantic-markdown

DOM to Semantic Markdown is a tool that converts HTML DOM to Semantic Markdown for use in Large Language Models (LLMs). It maximizes semantic information, token efficiency, and preserves metadata to enhance LLMs' processing capabilities. The tool captures rich web content structure, including semantic tags, image metadata, table structures, and link destinations. It offers customizable conversion options and supports both browser and Node.js environments.

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, facilitating the creation of information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling functionality, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, Sci-Hub papers via DOI or PMID. The tool provides uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.

AudioNotes

AudioNotes is a system built on FunASR and Qwen2 that can quickly extract content from audio and video, and organize it using large models into structured markdown notes for easy reading. Users can interact with the audio and video content, install Ollama, pull models, and deploy services using Docker or locally with a PostgreSQL database. The system provides a seamless way to convert audio and video into structured notes for efficient consumption.

scrape-it-now

Scrape It Now is a versatile tool for scraping websites with features like decoupled architecture, CLI functionality, idempotent operations, and content storage options. The tool includes a scraper component for efficient scraping, ad blocking, link detection, markdown extraction, dynamic content loading, and anonymity features. It also offers an indexer component for creating AI search indexes, chunking content, embedding chunks, and enabling semantic search. The tool supports various configurations for Azure services and local storage, providing flexibility and scalability for web scraping and indexing tasks.

open-deep-research

Open Deep Research is an open-source tool designed to generate AI-powered reports from web search results efficiently. It combines Bing Search API for search results retrieval, JinaAI for content extraction, and customizable report generation. Users can customize settings, export reports in multiple formats, and benefit from rate limiting for stability. The tool aims to streamline research and report creation in a user-friendly platform.

DevDocs

DevDocs is a platform designed to simplify the process of digesting technical documentation for software engineers and developers. It automates the extraction and conversion of web content into markdown format, making it easier for users to access and understand the information. By crawling through child pages of a given URL, DevDocs provides a streamlined approach to gathering relevant data and integrating it into various tools for software development. The tool aims to save time and effort by eliminating the need for manual research and content extraction, ultimately enhancing productivity and efficiency in the development process.

mcp-omnisearch

mcp-omnisearch is a Model Context Protocol (MCP) server that acts as a unified gateway to multiple search providers and AI tools. It integrates Tavily, Perplexity, Kagi, Jina AI, Brave, Exa AI, and Firecrawl to offer a wide range of search, AI response, content processing, and enhancement features through a single interface. The server provides powerful search capabilities, AI response generation, content extraction, summarization, web scraping, structured data extraction, and more. It is designed to work flexibly with the API keys available, enabling users to activate only the providers they have keys for and easily add more as needed.

datalore-localgen-cli

Datalore is a terminal tool for generating structured datasets from local files like PDFs, Word docs, images, and text. It extracts content, uses semantic search to understand context, applies instructions through a generated schema, and outputs clean, structured data. It is suited to converting raw or unstructured local documents into ready-to-use datasets for training, analysis, or experimentation, all without manual formatting.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance in later iterations.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.