rss-can

🚀 Harness the power of AI, Got RSS CAN be better and simple.

Stars: 61

RSS Can is a tool designed to simplify and improve RSS feed management. It supports various systems and architectures, including Linux and macOS. Users can download the binary from the GitHub release page or use the Docker image for easy deployment. The tool provides CLI parameters and environment variables for customization. It offers features such as memory and Redis cache services, web service configuration, and rule directory settings. The project aims to support RSS pipeline flow, NLP tasks, integration with open-source software rules, and tools like a quick RSS rules generator.

README:

📰 🥫 Got RSS CAN be better and simple.

- Linux: AMD64(x86_64)

- macOS: AMD64(x86_64) / ARMv64

Download the binary from the github release page, with the following command:

./rsscPull the docker image and mount the Feed rules file in the project to the docker container:

docker pull soulteary/rss-can:v0.3.8

docker run --rm -it -p 8080:8080 -v `pwd`/rules:/rules soulteary/rss-can:v0.3.8

All parameters are optional, please adjust according to your needs

The parameters supported by the program can be obtained through -h or --help:

Usage of rssc:

-debug RSS_DEBUG

whether to output debugging logging, env: RSS_DEBUG

-debug-level RSS_DEBUG_LEVEL

set debug log printing level, env: RSS_DEBUG_LEVEL (default "info")

-feed-path RSS_HTTP_FEED_PATH

http feed path, env: RSS_HTTP_FEED_PATH (default "/feed")

-headless-addr RSS_HEADLESS_SERVER

set Headless server address, env: RSS_HEADLESS_SERVER (default "127.0.0.1:9222")

-headless-slow-motion RSS_HEADLESS_SLOW_MOTION

set Headless slow motion, env: RSS_HEADLESS_SLOW_MOTION (default 2)

-host RSS_HOST

web service listening address, env: RSS_HOST (default "0.0.0.0")

-memory RSS_MEMORY

using Memory(build-in) as a cache service, env: RSS_MEMORY (default true)

-memory-expiration RSS_MEMORY_EXPIRATION

set Memory cache expiration, env: RSS_MEMORY_EXPIRATION (default 600)

-port RSS_PORT

web service listening port, env: RSS_PORT (default 8080)

-proxy RSS_PROXY

Proxy, env: RSS_PROXY

-redis RSS_REDIS

using Redis as a cache service, env: RSS_REDIS (default true)

-redis-addr RSS_SERVER

set Redis server address, env: RSS_SERVER (default "127.0.0.1:6379")

-redis-db RSS_REDIS_DB

set Redis db, env: RSS_REDIS_DB

-redis-pass RSS_REDIS_PASSWD

set Redis password, env: RSS_REDIS_PASSWD

-rod string

Set the default value of options used by rod.

-rule RSS_RULE

set Rule directory, env: RSS_RULE (default "./rules")

-timeout-headless RSS_HEADLESS_EXEC_TIMEOUT

set headless execution timeout, env: RSS_HEADLESS_EXEC_TIMEOUT (default 5)

-timeout-js RSS_JS_EXEC_TIMEOUT

set js sandbox code execution timeout, env: RSS_JS_EXEC_TIMEOUT (default 200)

-timeout-request RSS_REQUEST_TIMEOUT

set request timeout, env: RSS_REQUEST_TIMEOUT (default 5)

-timeout-server RSS_SERVER_TIMEOUT

set web server response timeout, env: RSS_SERVER_TIMEOUT (default 8)- Base CLI & WebUI Support

- Aggregate Results, JS SDK, Dockerize

- Redis, in-memory cache, Dynamic loading rules

- Charset auto detection, Mix parser support, Improve CSR, Muti-page data extract

- Websites parsing via SSR render, Blog

- Dynamic rule capability, Blog

- Convert website page as RSS feeds, Blog

- Websites parsing via CSR render, Blog

- [ ] Docs: Provide a simple tutorial on how to use Docker images with common technology stacks #16

- [ ] Pipeline: Support RSS pipeline flow, customize information processing tasks and integrate other open-source software

- [ ] AI: NLP tasks

- [ ] Rules: Support merge open-source software rules: rss-bridge / RSSHub

- [ ] Tools: Quick RSS rules generator, like: damoeb/rss-proxy

This project is licensed under the MIT License

The rapid evolution of the project is inseparable from the following excellent open source software, you can click this link to know who they are : Credits

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for rss-can

Similar Open Source Tools

rss-can

RSS Can is a tool designed to simplify and improve RSS feed management. It supports various systems and architectures, including Linux and macOS. Users can download the binary from the GitHub release page or use the Docker image for easy deployment. The tool provides CLI parameters and environment variables for customization. It offers features such as memory and Redis cache services, web service configuration, and rule directory settings. The project aims to support RSS pipeline flow, NLP tasks, integration with open-source software rules, and tools like a quick RSS rules generator.

moling

MoLing is a computer-use and browser-use MCP Server that implements system interaction through operating system APIs, enabling file system operations such as reading, writing, merging, statistics, and aggregation, as well as the ability to execute system commands. It is a dependency-free local office automation assistant. Requiring no installation of any dependencies, MoLing can be run directly and is compatible with multiple operating systems, including Windows, Linux, and macOS. This eliminates the hassle of dealing with environment conflicts involving Node.js, Python, Docker, and other development environments. Command-line operations are dangerous and should be used with caution. MoLing supports features like file system operations, command-line terminal execution, browser control powered by 'github.com/chromedp/chromedp', and future plans for personal PC data organization, document writing assistance, schedule planning, and life assistant features. MoLing has been tested on macOS but may have issues on other operating systems.

parseable

Parseable is a full stack observability platform designed to ingest, analyze, and extract insights from various types of telemetry data. It can be run locally, in the cloud, or as a managed service. The platform offers features like high availability, smart cache, alerts, role-based access control, OAuth2 support, and OpenTelemetry integration. Users can easily ingest data, query logs, and access the dashboard to monitor and analyze data. Parseable provides a seamless experience for observability and monitoring tasks.

obsei

Obsei is an open-source, low-code, AI powered automation tool that consists of an Observer to collect unstructured data from various sources, an Analyzer to analyze the collected data with various AI tasks, and an Informer to send analyzed data to various destinations. The tool is suitable for scheduled jobs or serverless applications as all Observers can store their state in databases. Obsei is still in alpha stage, so caution is advised when using it in production. The tool can be used for social listening, alerting/notification, automatic customer issue creation, extraction of deeper insights from feedbacks, market research, dataset creation for various AI tasks, and more based on creativity.

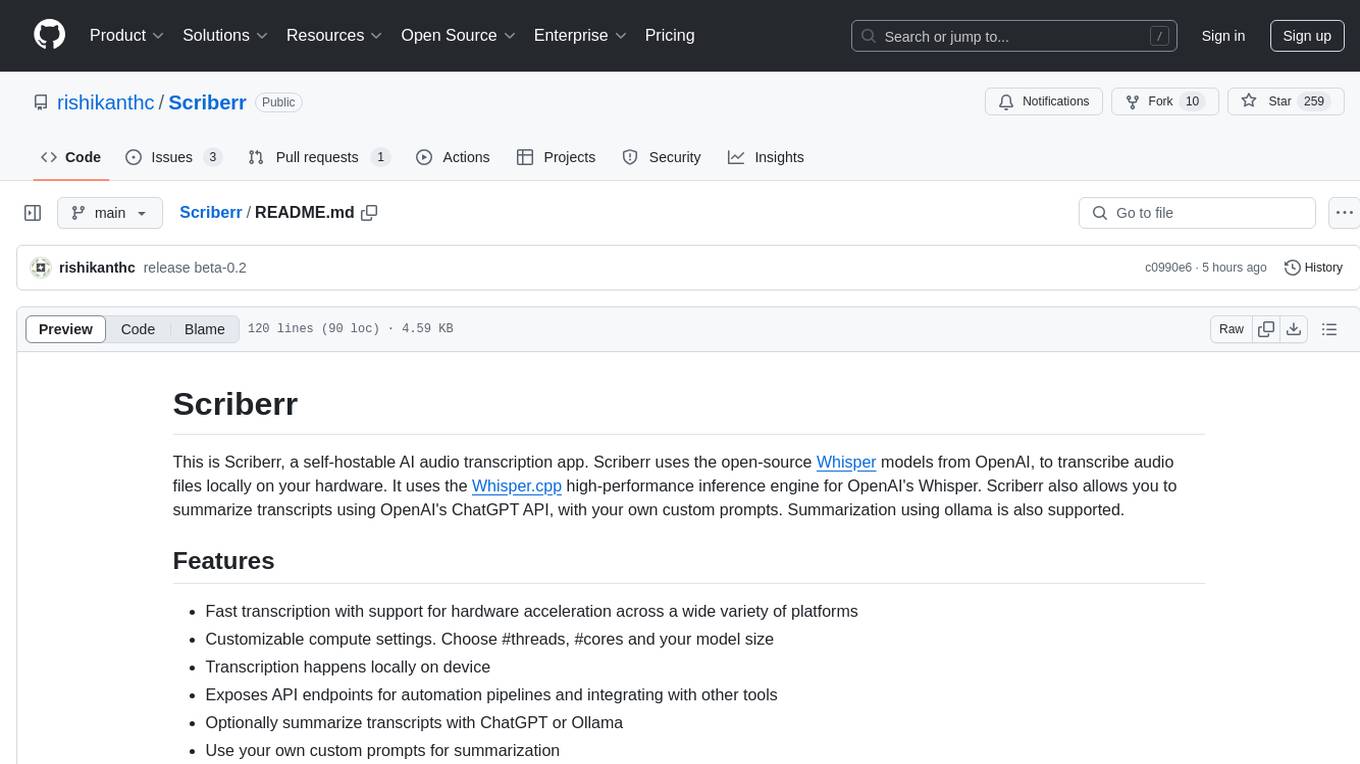

Scriberr

Scriberr is a self-hostable AI audio transcription app that utilizes open-source Whisper models from OpenAI for transcribing audio files locally on user's hardware. It offers fast transcription with customizable compute settings, local transcription on device, API endpoints for automation, and integration with other tools. Users can optionally summarize transcripts using ChatGPT or Ollama, with support for custom prompts. The app is mobile-ready, simple, and easy to use, with planned features including speaker diarization, audio recording, file actions, full text fuzzy search, tag-based organization, follow-along text with playback, edit summaries, export options, and support for other languages. Despite being in beta, Scriberr is functional and usable, albeit with some rough edges and minor bugs.

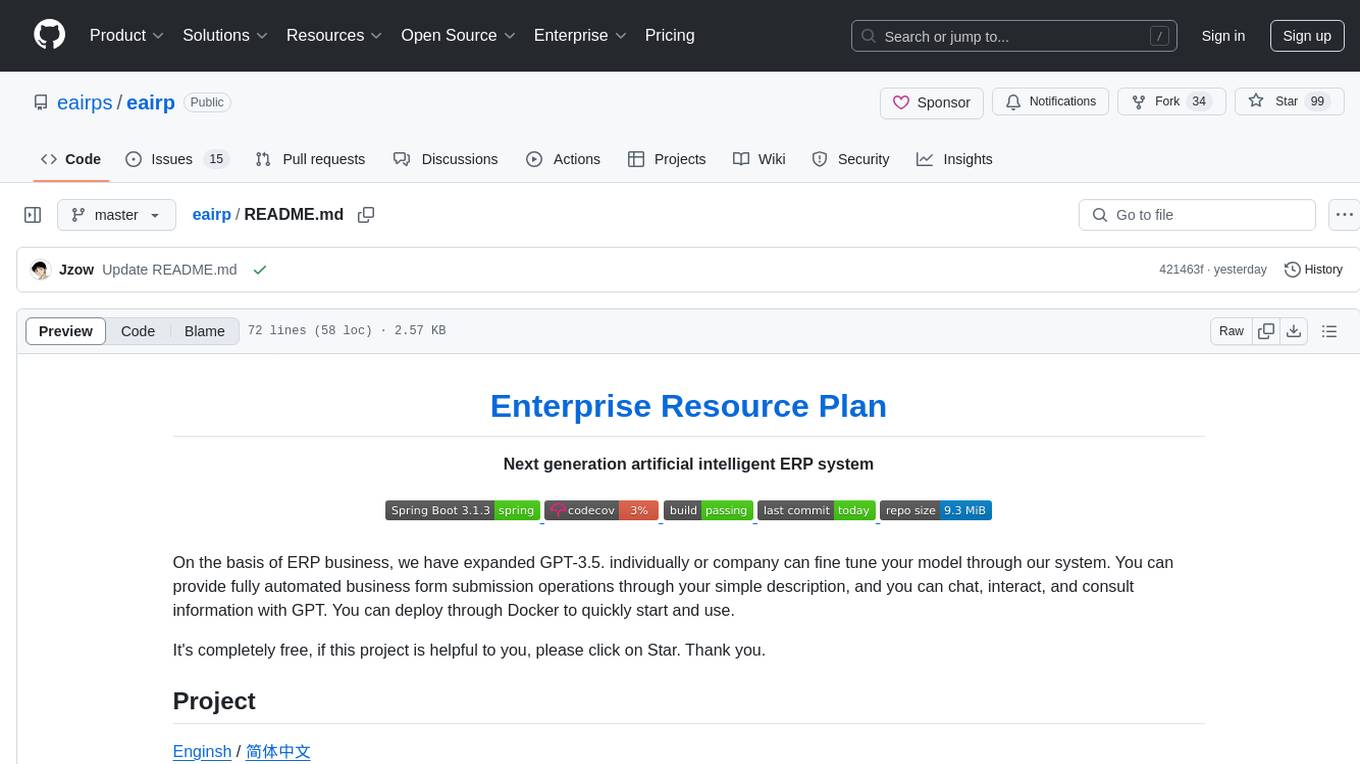

eairp

Next generation artificial intelligent ERP system. On the basis of ERP business, we have expanded GPT-3.5. Individually or company can fine-tune your model through our system. You can provide fully automated business form submission operations through your simple description, and you can chat, interact, and consult information with GPT. You can deploy through Docker to quickly start and use. Completely free project. Enginsh / 简体中文.

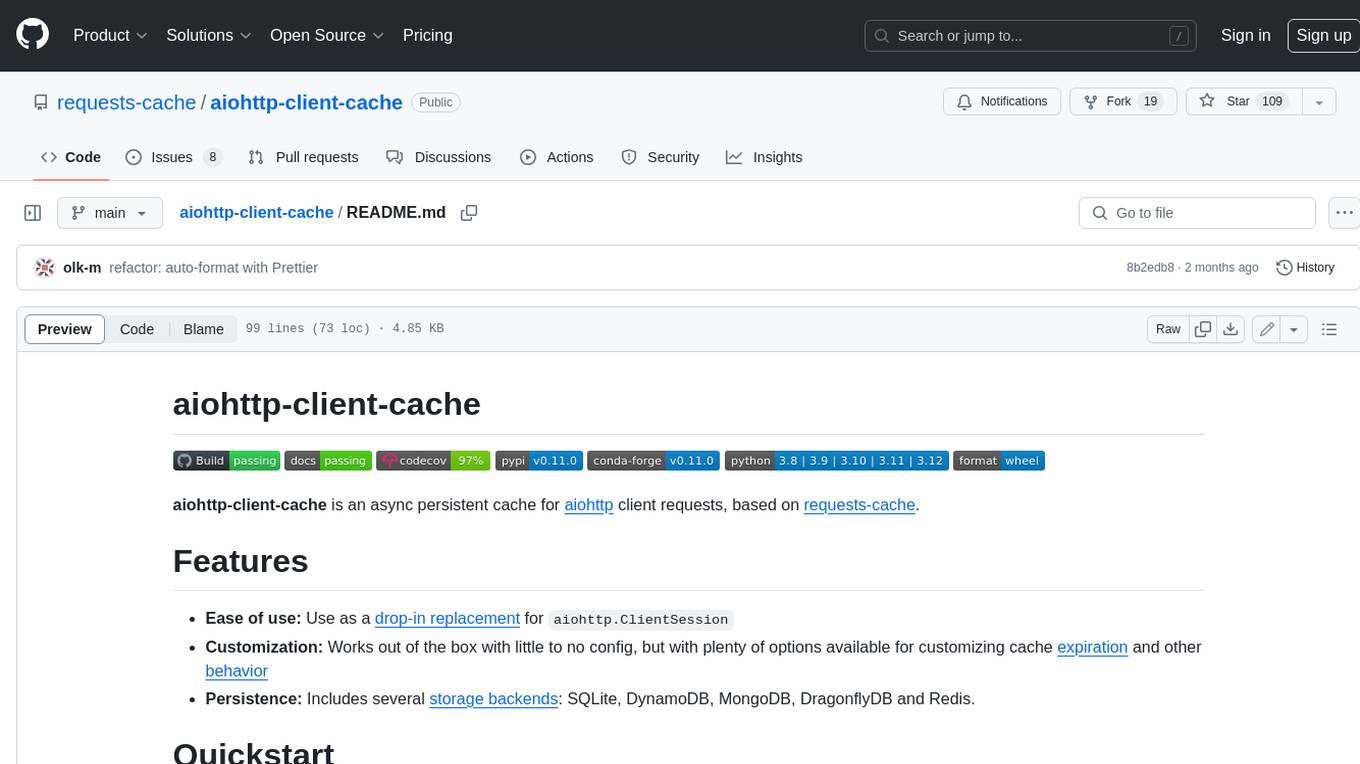

aiohttp-client-cache

aiohttp-client-cache is an asynchronous persistent cache for aiohttp client requests, based on requests-cache. It is easy to use, customizable, and persistent, with several storage backends available, including SQLite, DynamoDB, MongoDB, DragonflyDB, and Redis.

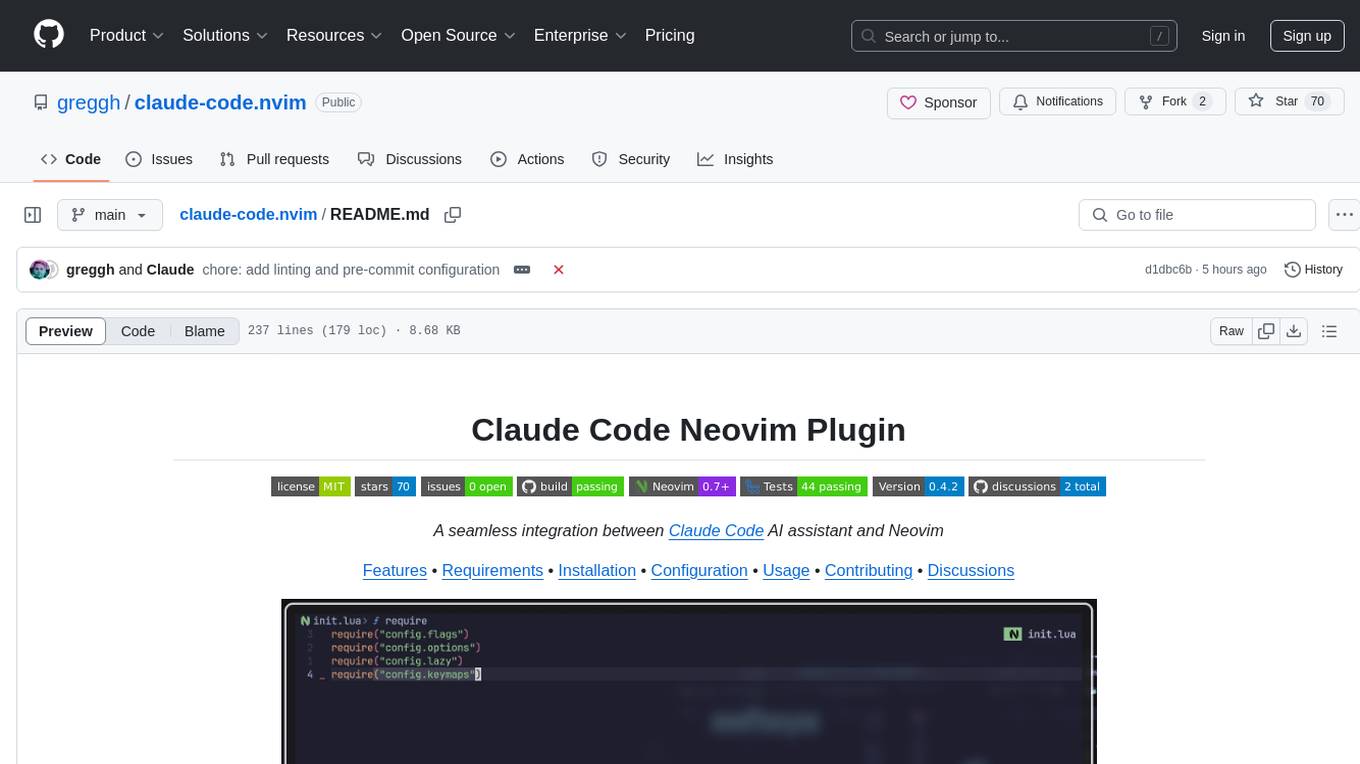

claude-code.nvim

Claude Code Neovim Plugin is a seamless integration between Claude Code AI assistant and Neovim. It allows users to toggle Claude Code in a terminal window with a single key press, automatically detect and reload files modified by Claude Code, provide real-time buffer updates when files are changed externally, offer customizable window position and size, integrate with which-key, use git project root as working directory, maintain a modular code structure, provide type annotations with LuaCATS for better IDE support, offer configuration validation, and include a testing framework for reliability. The plugin creates a terminal buffer running the Claude Code CLI, sets up autocommands to detect file changes on disk, automatically reloads files modified by Claude Code, provides keymaps and commands for toggling the terminal, and detects git repositories to set the working directory to the git root.

kernel-memory

Kernel Memory (KM) is a multi-modal AI Service specialized in the efficient indexing of datasets through custom continuous data hybrid pipelines, with support for Retrieval Augmented Generation (RAG), synthetic memory, prompt engineering, and custom semantic memory processing. KM is available as a Web Service, as a Docker container, a Plugin for ChatGPT/Copilot/Semantic Kernel, and as a .NET library for embedded applications. Utilizing advanced embeddings and LLMs, the system enables Natural Language querying for obtaining answers from the indexed data, complete with citations and links to the original sources. Designed for seamless integration as a Plugin with Semantic Kernel, Microsoft Copilot and ChatGPT, Kernel Memory enhances data-driven features in applications built for most popular AI platforms.

easy-dataset

Easy Dataset is a specialized application designed to streamline the creation of fine-tuning datasets for Large Language Models (LLMs). It offers an intuitive interface for uploading domain-specific files, intelligently splitting content, generating questions, and producing high-quality training data for model fine-tuning. With Easy Dataset, users can transform domain knowledge into structured datasets compatible with all OpenAI-format compatible LLM APIs, making the fine-tuning process accessible and efficient.

RWKV-Runner

RWKV Runner is a project designed to simplify the usage of large language models by automating various processes. It provides a lightweight executable program and is compatible with the OpenAI API. Users can deploy the backend on a server and use the program as a client. The project offers features like model management, VRAM configurations, user-friendly chat interface, WebUI option, parameter configuration, model conversion tool, download management, LoRA Finetune, and multilingual localization. It can be used for various tasks such as chat, completion, composition, and model inspection.

rikkahub

RikkaHub is a native Android LLM chat client that supports switching between different providers for conversations. It features a modern Android app design with dark mode, support for multiple provider types, multimodal input support, Markdown rendering, search capabilities, prompt variables, QR code export/import, agent customization, ChatGPT-like memory feature, AI translation, and custom HTTP request headers and bodies. The project is developed using Kotlin, Koin, Jetpack Compose, DataStore, Room, Coil, Material You, Navigation Compose, Okhttp, kotlinx.serialization, and compose-icons/lucide.

DB-GPT

DB-GPT is a personal database administrator that can solve database problems by reading documents, using various tools, and writing analysis reports. It is currently undergoing an upgrade. **Features:** * **Online Demo:** * Import documents into the knowledge base * Utilize the knowledge base for well-founded Q&A and diagnosis analysis of abnormal alarms * Send feedbacks to refine the intermediate diagnosis results * Edit the diagnosis result * Browse all historical diagnosis results, used metrics, and detailed diagnosis processes * **Language Support:** * English (default) * Chinese (add "language: zh" in config.yaml) * **New Frontend:** * Knowledgebase + Chat Q&A + Diagnosis + Report Replay * **Extreme Speed Version for localized llms:** * 4-bit quantized LLM (reducing inference time by 1/3) * vllm for fast inference (qwen) * Tiny LLM * **Multi-path extraction of document knowledge:** * Vector database (ChromaDB) * RESTful Search Engine (Elasticsearch) * **Expert prompt generation using document knowledge** * **Upgrade the LLM-based diagnosis mechanism:** * Task Dispatching -> Concurrent Diagnosis -> Cross Review -> Report Generation * Synchronous Concurrency Mechanism during LLM inference * **Support monitoring and optimization tools in multiple levels:** * Monitoring metrics (Prometheus) * Flame graph in code level * Diagnosis knowledge retrieval (dbmind) * Logical query transformations (Calcite) * Index optimization algorithms (for PostgreSQL) * Physical operator hints (for PostgreSQL) * Backup and Point-in-time Recovery (Pigsty) * **Continuously updated papers and experimental reports** This project is constantly evolving with new features. Don't forget to star ⭐ and watch 👀 to stay up to date.

macai

Macai is a native macOS client for interacting with modern AI tools, such as ChatGPT and Ollama. It features organized chats with custom system messages, system-defined light/dark themes, backup and restore functionality, customizable context size, support for any model with a compatible API, formatted code blocks and tables, multiple chat tabs, CoreData data storage, streamed responses, and automatic chat name generation. Macai is in active development, with contributions welcome.

ai-microcore

MicroCore is a collection of python adapters for Large Language Models and Vector Databases / Semantic Search APIs. It allows convenient communication with these services, easy switching between models & services, and separation of business logic from implementation details. Users can keep applications simple and try various models & services without changing application code. MicroCore connects MCP tools to language models easily, supports text completion and chat completion models, and provides features for configuring, installing vendor-specific packages, and using vector databases.

sdk-python

Strands Agents is a lightweight and flexible SDK that takes a model-driven approach to building and running AI agents. It supports various model providers, offers advanced capabilities like multi-agent systems and streaming support, and comes with built-in MCP server support. Users can easily create tools using Python decorators, integrate MCP servers seamlessly, and leverage multiple model providers for different AI tasks. The SDK is designed to scale from simple conversational assistants to complex autonomous workflows, making it suitable for a wide range of AI development needs.

For similar tasks

gptlint

GPTLint is a tool that utilizes Large Language Models (LLMs) to enforce higher-level best practices across a codebase. It offers features such as enforcing rules that are impossible with AST-based approaches, simple markdown format for rules, easy customization of rules, support for custom project-specific rules, content-based caching, and outputting LLM stats per run. GPTLint supports all major LLM providers and local models, augments ESLint instead of replacing it, and includes guidelines for creating custom rules. However, the MVP rules are currently limited to JS/TS only, single-file context only, and do not support autofixing.

rss-can

RSS Can is a tool designed to simplify and improve RSS feed management. It supports various systems and architectures, including Linux and macOS. Users can download the binary from the GitHub release page or use the Docker image for easy deployment. The tool provides CLI parameters and environment variables for customization. It offers features such as memory and Redis cache services, web service configuration, and rule directory settings. The project aims to support RSS pipeline flow, NLP tasks, integration with open-source software rules, and tools like a quick RSS rules generator.

SinkFinder

SinkFinder + LLM is a closed-source semi-automatic vulnerability discovery tool that performs static code analysis on jar/war/zip files. It enhances the capability of LLM large models to verify path reachability and assess the trustworthiness score of the path based on the contextual code environment. Users can customize class and jar exclusions, depth of recursive search, and other parameters through command-line arguments. The tool generates rule.json configuration file after each run and requires configuration of the DASHSCOPE_API_KEY for LLM capabilities. The tool provides detailed logs on high-risk paths, LLM results, and other findings. Rules.json file contains sink rules for various vulnerability types with severity levels and corresponding sink methods.

promptmap

promptmap2 is a vulnerability scanning tool that automatically tests prompt injection attacks on custom LLM applications. It analyzes LLM system prompts, runs them, and sends attack prompts to determine if injection was successful. It has ready-to-use rules to steal system prompts or distract LLM applications. Supports multiple LLM providers like OpenAI, Anthropic, and open source models via Ollama. Customizable test rules in YAML format and automatic model download for Ollama.

mcp

Semgrep MCP Server is a beta server under active development for using Semgrep to scan code for security vulnerabilities. It provides a Model Context Protocol (MCP) for various coding tools to get specialized help in tasks. Users can connect to Semgrep AppSec Platform, scan code for vulnerabilities, customize Semgrep rules, analyze and filter scan results, and compare results. The tool is published on PyPI as semgrep-mcp and can be installed using pip, pipx, uv, poetry, or other methods. It supports CLI and Docker environments for running the server. Integration with VS Code is also available for quick installation. The project welcomes contributions and is inspired by core technologies like Semgrep and MCP, as well as related community projects and tools.

Panora

Panora is an open-source unified API tool that allows users to easily integrate and interact with various software platforms. It provides features like Magic Links for data access, Custom Fields for specific data points, Passthrough Requests for interacting with other platforms, and Webhooks for receiving normalized data. The tool supports integrations with CRM, Ticketing, ATS, HRIS, File Storage, Ecommerce, and more. Users can easily manage contacts, deals, notes, engagements, tasks, users, companies, and other data across different platforms. Panora aims to simplify data management and streamline workflows for businesses.

feeds.fun

Feeds Fun is a self-hosted news reader tool that automatically assigns tags to news entries. Users can create rules to score news based on tags, filter and sort news as needed, and track read news. The tool offers multi/single-user support, feeds management, and various features for personalized news consumption. Users can access the tool's backend as the ffun package on PyPI and the frontend as the feeds-fun package on NPM. Feeds Fun requires setting up OpenAI or Gemini API keys for full tag generation capabilities. The tool uses tag processors to detect tags for news entries, with options for simple and complex processors. Feeds Fun primarily relies on LLM tag processors from OpenAI and Google for tag generation.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.