json-translator

jsontt 💡 - AI JSON Translator with GPT + other FREE translation modules to translate your json/yaml files into other languages ✅ Check Readme ✌ Supports GPT / DeepL / Google / Bing / Libre / Argos

Stars: 577

The json-translator repository provides a free tool to translate JSON/YAML files or JSON objects into different languages using various translation modules. It can be used from the CLI or as a package, letting users translate words, sentences, JSON objects, and JSON files. The tool also offers multi-language translation, ignoring of specific words, and safe translation (checks for undefined, long, or empty values). Users can contribute to the project by updating the CLI, translation functions, JSON operations, and more. The roadmap includes features like a Libre Translate option, an Argos Translate option, a Bing Translate option, and support for additional translation modules.

README:

- Contact me on Twitter to advertise your project on the jsontt CLI

✨ Sponsored by fotogram.ai - Transform Your Selfies into Masterpieces with AI ✨

🚀 AI / FREE JSON & YAML TRANSLATOR 🆓

This package lets you translate your JSON/YAML files or JSON objects into different languages for FREE.

| Translation Module | Support | FREE |
|---|---|---|
| Google Translate | ✅ | ✅ FREE |
| Google Translate 2 | ✅ | ✅ FREE |
| Microsoft Bing Translate | ✅ | ✅ FREE |
| Libre Translate | ✅ | ✅ FREE |
| Argos Translate | ✅ | ✅ FREE |
| DeepL Translate | ✅ | requires API key (DEEPL_API_KEY as env); optional API URL (DEEPL_API_URL as env) |
| gpt-4o | ✅ | requires API key (OPENAI_API_KEY as env) |
| gpt-3.5-turbo | ✅ | requires API key (OPENAI_API_KEY as env) |
| gpt-4 | ✅ | requires API key (OPENAI_API_KEY as env) |
| gpt-4o-mini | ✅ | requires API key (OPENAI_API_KEY as env) |
| gemma-7b | ✅ | requires API key (GROQ_API_KEY as env) |
| gemma2-9b | ✅ | requires API key (GROQ_API_KEY as env) |
| mixtral-8x7b | ✅ | requires API key (GROQ_API_KEY as env) |
| llama3-8b | ✅ | requires API key (GROQ_API_KEY as env) |
| llama3-70b | ✅ | requires API key (GROQ_API_KEY as env) |
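According to the FREE column, every non-free module needs its environment variable set before a run. A small pre-flight check could look like the sketch below. It is not part of json-translator, and the function name `missingApiKey` is hypothetical. The modules are keyed by the display names used in the table, which are not necessarily the values that `--module` accepts.

```typescript
// Hypothetical pre-flight check, not part of json-translator.
// Maps the table's module names to the env variable each one requires.
const REQUIRED_ENV: { [module: string]: string | undefined } = {
  "DeepL Translate": "DEEPL_API_KEY",
  "gpt-4o": "OPENAI_API_KEY",
  "gpt-3.5-turbo": "OPENAI_API_KEY",
  "gpt-4": "OPENAI_API_KEY",
  "gpt-4o-mini": "OPENAI_API_KEY",
  "gemma-7b": "GROQ_API_KEY",
  "gemma2-9b": "GROQ_API_KEY",
  "mixtral-8x7b": "GROQ_API_KEY",
  "llama3-8b": "GROQ_API_KEY",
  "llama3-70b": "GROQ_API_KEY",
};

// Returns the name of the missing env variable, or null if the module can run.
// Free modules (Google, Bing, Libre, Argos) are not in the map, so they need nothing.
// The optional DEEPL_API_URL is not checked.
function missingApiKey(
  module: string,
  env: { [name: string]: string | undefined },
): string | null {
  const required = REQUIRED_ENV[module];
  if (required === undefined) return null;
  const value = env[required];
  return value !== undefined && value.trim() !== "" ? null : required;
}
```

In Node you would pass `process.env`, for example `missingApiKey("gpt-4o", process.env)`, and stop with a message if the result is not null.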


Browser support will come soon...

npm i @parvineyvazov/json-translator

- OR install it globally (needed to use the CLI):

npm i -g @parvineyvazov/json-translator

- CLI usage:

jsontt <your/path/to/file.json>

or

jsontt <your/path/to/file.yaml/yml>

- Arguments:

[path]: required JSON/YAML file path <your/path/to/file.json>

[path]: optional proxy list txt file path <your/path/to/proxy_list.txt>

Options:

-V, --version: output the version number

-m, --module <Module>: specify translation module

-f, --from <Language>: source language

-t, --to <Languages...>: target language(s)

-n, --name <string>: optional | output filename

-fb, --fallback <string>: optional | fallback logic, try other translation modules on fail | yes, no | default: no

-cl, --concurrencylimit <number>: optional | set max concurrency limit (higher is faster, but more likely to get banned) | default: 3

-c, --cache: optional | enable cache | default: no

-h, --help: display help for command
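`--concurrencylimit` caps how many translation requests run at once. jsontt's own queue code is not shown in this README. The sketch below is a generic, hypothetical helper named `mapWithLimit` that illustrates the idea: a fixed pool of runners each pulls the next item, so at most `limit` requests are in flight at any moment, and results keep the input order.

```typescript
// Hypothetical helper, not jsontt's queue: run `worker` over `items` with at most
// `limit` calls in flight at any moment. Results are stored by index, so they keep input order.
async function mapWithLimit<T, R>(
  items: readonly T[],
  limit: number,
  worker: (item: T, index: number) => Promise<R>,
): Promise<R[]> {
  if (!Number.isInteger(limit) || limit < 1) {
    throw new RangeError("limit must be a positive integer");
  }
  const results: R[] = new Array<R>(items.length);
  let next = 0; // index of the next item no runner has claimed yet

  // Each runner claims one index at a time until the list is exhausted.
  // Claiming (next++) happens synchronously, so two runners never take the same item.
  async function runner(): Promise<void> {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  const runners: Promise<void>[] = [];
  for (let i = 0; i < Math.min(limit, items.length); i++) {
    runners.push(runner());
  }
  await Promise.all(runners);
  return results;
}
```

With a hypothetical `translateOne` function, usage would be `const out = await mapWithLimit(texts, 3, (t) => translateOne(t));`. A higher limit finishes sooner but sends bigger bursts of requests, which is why the option warns about getting banned.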

- Translate a JSON file using Google Translate:

jsontt <your/path/to/file.json> --module google --from en --to ar fr zh-CN

- with output name

jsontt <your/path/to/file.json> --module google --from en --to ar fr zh-CN --name myFiles

- with fallback logic (try other possible translation modules on fail)

jsontt <your/path/to/file.json> --module google --from en --to ar fr zh-CN --name myFiles --fallback yes

- set concurrency limit (higher is faster, but more likely to get banned | default: 3)

jsontt <your/path/to/file.json> --module google --from en --to ar fr zh-CN --name myFiles --fallback yes --concurrencylimit 10

- translate (json/yaml)

jsontt file.json
jsontt folder/file.json
jsontt "folder\file.json"
jsontt "C:\folder1\folder\en.json"

- with proxy (only Google Translate module)

jsontt file.json proxy.txt

The result will be in the same folder as the original JSON/YAML file.
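The folder trees in the library examples further down (en.json next to de.json, fr.json, ja.json, and so on) suggest that each output file is named after the target language code and written next to the source file. The sketch below models only that naming, and it rests on several assumptions. `outputPathFor` is a hypothetical helper. It keeps the source extension, so a .yaml input stays .yaml. It does not model how `--name` changes the output filename.

```typescript
// Hypothetical helper: place the translated file in the source file's folder,
// named after the target language code, keeping the source extension (assumption).
function outputPathFor(inputPath: string, langCode: string): string {
  // Handle both "/" and "\" separators, since the examples use both.
  const slash = Math.max(inputPath.lastIndexOf("/"), inputPath.lastIndexOf("\\"));
  const dir = inputPath.slice(0, slash + 1); // "" when the file is in the current folder
  const fileName = inputPath.slice(slash + 1);
  const dot = fileName.lastIndexOf(".");
  const ext = dot > 0 ? fileName.slice(dot) : "";
  return `${dir}${langCode}${ext}`;
}
```

For example, `outputPathFor("C:/files/en.json", "de")` gives `C:/files/de.json`. Calling it with "ceb", "fr", "hu" and "ja" gives the other files in the multi-language tree.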

- help

jsontt -h
jsontt --help

- Import the library to your code.

For JavaScript:

const translator = require('@parvineyvazov/json-translator');

For TypeScript:

import * as translator from '@parvineyvazov/json-translator';

// Let's translate the `Home sweet home!` string from English to Chinese
// (await needs an async function, or an ES module with top-level await)
const my_str = await translator.translateWord(
  'Home sweet home!',
  translator.languages.English,
  translator.languages.Chinese_Simplified
);

// my_str: 家,甜蜜的家!

- Import the library to your code

For JavaScript:

const translator = require('@parvineyvazov/json-translator');

For TypeScript:

import * as translator from '@parvineyvazov/json-translator';

/*
Let's translate our deep object from English to Spanish
*/

const en_lang: translator.translatedObject = {
  login: {
    title: 'Login {{name}}',
    email: 'Please, enter your email',
    failure: 'Failed',
  },
  homepage: {
    welcoming: 'Welcome!',
    title: 'Live long, live healthily!',
  },
  profile: {
    edit_screen: {
      edit: 'Edit your informations',
      edit_age: 'Edit your age',
      number_editor: [
        {
          title: 'Edit number 1',
          button: 'Edit 1',
        },
        {
          title: 'Edit number 2',
          button: 'Edit 2',
        },
      ],
    },
  },
};

/*
For JavaScript, don't use translator.translatedObject (no need to annotate its type)
*/

let es_lang = await translator.translateObject(
  en_lang,
  translator.languages.English,
  translator.languages.Spanish
);

/*
es_lang:
{
  "login": {
    "title": "Acceso {{name}}",
    "email": "Por favor introduzca su correo electrónico",
    "failure": "Fallida"
  },
  "homepage": {
    "welcoming": "¡Bienvenidas!",
    "title": "¡Vive mucho tiempo, vivo saludable!"
  },
  "profile": {
    "edit_screen": {
      "edit": "Edita tus informaciones",
      "edit_age": "Editar tu edad",
      "number_editor": [
        {
          "title": "Editar número 1",
          "button": "Editar 1"
        },
        {
          "title": "Editar número 2",
          "button": "Editar 2"
        }
      ]
    }
  }
}
*/

- Import the library to your code

For JavaScript:

const translator = require('@parvineyvazov/json-translator');

For TypeScript:

import * as translator from '@parvineyvazov/json-translator';

/*
Let's translate our object from English to French, Georgian and Japanese at the same time
(the source language is set to Automatic, so it is detected automatically):
*/

const en_lang: translator.translatedObject = {
  login: {
    title: 'Login',
    email: 'Please, enter your email',
    failure: 'Failed',
  },
  edit_screen: {
    edit: 'Edit your informations',
    number_editor: [
      {
        title: 'Edit number 1',
        button: 'Edit 1',
      },
    ],
  },
};

/*
For JavaScript, don't use translator.translatedObject (no need to annotate its type)
*/

const [french, georgian, japanese] = (await translator.translateObject(
  en_lang,
  translator.languages.Automatic,
  [
    translator.languages.French,
    translator.languages.Georgian,
    translator.languages.Japanese,
  ]
)) as Array<translator.translatedObject>; // FOR JAVASCRIPT YOU DO NOT NEED TO SPECIFY THE TYPE

/*
french:
{
  "login": {
    "title": "Connexion",
    "email": "S'il vous plaît, entrez votre email",
    "failure": "Manquée"
  },
  "edit_screen": {
    "edit": "Modifier vos informations",
    "number_editor": [
      {
        "title": "Modifier le numéro 1",
        "button": "Éditer 1"
      }
    ]
  }
}

georgian:
{
  "login": {
    "title": "Შესვლა",
    "email": "გთხოვთ, შეიყვანეთ თქვენი ელ",
    "failure": "მცდელობა"
  },
  "edit_screen": {
    "edit": "თქვენი ინფორმაციათა რედაქტირება",
    "number_editor": [
      {
        "title": "რედაქტირების ნომერი 1",
        "button": "რედაქტირება 1"
      }
    ]
  }
}

japanese:
{
  "login": {
    "title": "ログイン",
    "email": "あなたのメールアドレスを入力してください",
    "failure": "失敗した"
  },
  "edit_screen": {
    "edit": "あなたの情報を編集します",
    "number_editor": [
      {
        "title": "番号1を編集します",
        "button": "編集1を編集します"
      }
    ]
  }
}
*/

- Import the library to your code.

For JavaScript:

const translator = require('@parvineyvazov/json-translator');

For TypeScript:

import * as translator from '@parvineyvazov/json-translator';

/*
Let's translate our JSON file into another language and save it in the same folder as en.json
*/

let path = 'C:/files/en.json'; // PATH OF YOUR JSON FILE (includes file name)

await translator.translateFile(path, translator.languages.English, [
  translator.languages.German,
]);

── files
   ├── en.json
   └── de.json

- Import the library to your code.

For JavaScript:

const translator = require('@parvineyvazov/json-translator');

For TypeScript:

import * as translator from '@parvineyvazov/json-translator';

/*
Let's translate our JSON file into multiple languages and save them in the same folder as en.json
*/

let path = 'C:/files/en.json'; // PATH OF YOUR JSON FILE (includes file name)

await translator.translateFile(path, translator.languages.English, [
  translator.languages.Cebuano,
  translator.languages.French,
  translator.languages.German,
  translator.languages.Hungarian,
  translator.languages.Japanese,
]);

── files
   ├── en.json
   ├── ceb.json
   ├── fr.json
   ├── de.json
   ├── hu.json
   └── ja.json

To keep words untranslated, wrap them in the {{word}} OR {word} style in your object.

{
  "one": "Welcome {{name}}",
  "two": "Welcome {name}",
  "three": "I am {name} {{surname}}"
}

...translating to Spanish

{
  "one": "Bienvenido {{name}}",
  "two": "Bienvenido {name}",
  "three": "Soy {name} {{surname}}"
}
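A common way to get this behavior is to swap each placeholder for an opaque token before translating and restore it afterwards. The contributor notes below describe this step as "map/unmap" in `src/core/ignorer.ts`. The sketch is a hypothetical version of that idea, not jsontt's code. The `__PH0__` token format and the function names are assumptions, and a real translation engine could still alter such tokens.

```typescript
// Hypothetical sketch of placeholder masking, not jsontt's ignorer.ts.
// Matches {{word}} first, then {word}; the alternation order handles the double braces.
const PLACEHOLDER = /\{\{[^{}]*\}\}|\{[^{}]*\}/g;

interface Masked {
  text: string; // input with placeholders replaced by tokens
  saved: string[]; // original placeholders, indexed by token number
}

// "map": replace every placeholder with an indexed token such as __PH0__.
function mask(input: string): Masked {
  const saved: string[] = [];
  const text = input.replace(PLACEHOLDER, (match) => {
    saved.push(match);
    return `__PH${saved.length - 1}__`;
  });
  return { text, saved };
}

// "unmap": put the original placeholders back after translation.
function unmask(translated: string, saved: string[]): string {
  return translated.replace(/__PH(\d+)__/g, (token: string, idx: string) => {
    const original = saved[Number(idx)];
    return original !== undefined ? original : token;
  });
}

// Translate one string while protecting its placeholders.
function translateProtected(input: string, translate: (text: string) => string): string {
  const { text, saved } = mask(input);
  return unmask(translate(text), saved);
}
```

For instance, `mask("I am {name} {{surname}}").text` is `"I am __PH0__ __PH1__"`, so the translator never sees the braces.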

- jsontt also ignores URLs in the text. Machine translation can otherwise mangle a URL embedded in the string being translated, so jsontt leaves URLs untouched while translating the rest of the string. You don't need to do anything for this; URLs are ignored automatically.

{
  "text": "this is a puppy https://shorturl.at/lvPY5"
}

...translating to German

{
  "text": "das ist ein welpe https://shorturl.at/lvPY5"
}
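One way to keep URLs intact is to split the string around them and send only the non-URL text to the translator. The sketch below is hypothetical and does not reproduce jsontt's implementation. Its URL pattern, `http(s)://` followed by non-space characters, is an assumption, and it would also capture trailing punctuation such as a final period.

```typescript
// Hypothetical sketch, not jsontt's implementation.
// Assumption: a URL is "http://" or "https://" followed by non-whitespace characters.
const URL_SPLIT = /(https?:\/\/\S+)/;

// Translate only the core of a text segment, keeping its leading/trailing
// whitespace so the spacing next to a URL is preserved exactly.
function translateSegment(segment: string, translate: (text: string) => string): string {
  const m = /^(\s*)([\s\S]*?)(\s*)$/.exec(segment);
  if (m === null) return segment;
  const [, lead = "", core = "", trail = ""] = m;
  return core === "" ? segment : lead + translate(core) + trail;
}

// split() with a capturing group keeps the URLs at the odd indices of the result,
// so even indices are plain text to translate and odd indices are URLs to keep.
function translateAroundUrls(input: string, translate: (text: string) => string): string {
  return input
    .split(URL_SPLIT)
    .map((part, i) => (i % 2 === 1 ? part : translateSegment(part, translate)))
    .join("");
}
```

Using a stand-in translator that maps "this is a puppy" to "das ist ein welpe" reproduces the German example above, with the URL passed through unchanged.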

- Clone it

git clone https://github.com/mololab/json-translator.git

- Install dependencies (using yarn; install yarn if you don't have it)

yarn

- Show the magic:

  - Update CLI: go to file src/cli/cli.ts
  - Update translation: go to file src/modules/functions.ts
  - Update JSON operations (deep dive, send translation request): go to file src/core/json_object.ts
  - Update JSON file read/write operations: go to file src/core/json_file.ts
  - Update ignoring values in translation (map/unmap): go to file src/core/ignorer.ts
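The JSON operations in `src/core/json_object.ts` are described as a "deep dive". The sketch below shows what such a walk generally looks like. It is hypothetical and is not the file's actual code. It takes a caller-supplied synchronous `translate` function, while the real modules are asynchronous. Following the roadmap's "safe translation" item, it skips empty strings.

```typescript
// Hypothetical sketch, not the code in src/core/json_object.ts.
type JsonValue =
  | string
  | number
  | boolean
  | null
  | JsonValue[]
  | { [key: string]: JsonValue };

// Recursively copy `value`, translating every non-empty string leaf.
// Keys, numbers, booleans and null are kept unchanged; array order is preserved.
function translateDeep(value: JsonValue, translate: (text: string) => string): JsonValue {
  if (typeof value === "string") {
    // Leave empty or whitespace-only strings alone.
    return value.trim() === "" ? value : translate(value);
  }
  if (Array.isArray(value)) {
    return value.map((item) => translateDeep(item, translate));
  }
  if (value !== null && typeof value === "object") {
    const out: { [key: string]: JsonValue } = {};
    for (const key of Object.keys(value)) {
      out[key] = translateDeep(value[key], translate);
    }
    return out;
  }
  return value; // numbers, booleans and null pass through
}
```

With `(s) => s.toUpperCase()` as a stand-in translator, `{ login: { title: "Login", tries: 3 } }` becomes `{ login: { title: "LOGIN", tries: 3 } }`. The input object itself is not modified.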

- Check CLI locally

To check the CLI locally, link the package using npm:

npm link

Or you can run all the steps using make:

make run-only-cli

Make sure your terminal has admin access while running these commands to prevent permission issues.

Roadmap:

✔️ Translate a word | sentence

- for JSON objects

✔️ Translate JSON object

✔️ Translate deep JSON object

✔️ Multi-language translation for JSON object

- [ ] Translate JSON object with extracting OR filtering some of its fields

- for JSON files

✔️ Translate JSON file

✔️ Translate deep JSON file

✔️ Multi-language translation for JSON file

- [ ] Translate JSON file with extracting OR filtering some of its fields

- General

✔️ CLI support

✔️ Safe translation (checking undefined, long, or empty values)

✔️ Queue support for big translations

✔️ Informing the user about the translation process (number of completed ones, total number of lines, etc.)

✔️ Ignore value words in translation (such as ignoring {{name}} OR {name} on translation)

✔️ Libre Translate option (CLI)

✔️ Argos Translate option (CLI)

✔️ Bing Translate option (CLI)

✔️ Ignore URL translation on given string

✔️ CLI options for languages & source selection

✔️ Define output file names on CLI (optional command for CLI)

✔️ YAML file translation

✔️ Fallback Translation (try new module on fail)

✔️ Can set the concurrency limit manually

- [ ] Libre Translate option (in code package)
- [ ] Argos Translate option (in code package)
- [ ] Bing Translate option (in code package)
- [ ] Openrouter Translate module
- [ ] Cohere Translate module
- [ ] Anthropic/Claude Translate module
- [ ] Together AI Translate module
- [ ] llamacpp Translate module
- [ ] Google Gemini API Translate module
- [ ] Groq support - Full list as new Translate modules

✔️ ChatGPT support

- [ ] Sync translation
- [ ] Browser support
- [ ] Translation option for your own LibreTranslate instance
- [ ] Make "--" dynamically adjustable (placeholder of not translated ones).
- [ ] Update name -> prefix in CLI / Ability to pass empty to prefix in CLI (better for autonomous tasks)
- [ ] Add --prettyPrint to the CLI, which will print JSON in a pretty way
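The "Fallback Translation (try new module on fail)" item, exposed in the CLI as `--fallback yes`, comes down to trying modules in order until one succeeds. The sketch below is hypothetical and synchronous; the real modules are asynchronous. The README does not document the order in which jsontt tries the other modules, and all names here are illustrative.

```typescript
// Hypothetical sketch of fallback logic, not jsontt's implementation.
type TranslateFn = (text: string, from: string, to: string) => string;

interface TranslationModule {
  name: string;
  translate: TranslateFn;
}

interface FallbackResult {
  module: string; // which module produced the result
  result: string;
}

// Try modules[0] first; if it throws and `fallback` is true, try the rest in order.
// With fallback disabled, only the first module is attempted.
function translateWithFallback(
  text: string,
  from: string,
  to: string,
  modules: TranslationModule[],
  fallback: boolean,
): FallbackResult {
  const candidates = fallback ? modules : modules.slice(0, 1);
  const errors: string[] = [];
  for (const m of candidates) {
    try {
      return { module: m.name, result: m.translate(text, from, to) };
    } catch (err) {
      errors.push(`${m.name}: ${err instanceof Error ? err.message : String(err)}`);
    }
  }
  throw new Error(`all modules failed (${errors.join("; ")})`);
}
```

Collecting every module's error message means that, when all modules fail, the final error shows why each one did.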

@parvineyvazov/json-translator will be available under the MIT license.


Alternative AI tools for json-translator

Similar Open Source Tools


lingo.dev

Replexica AI automates software localization end-to-end, producing authentic translations instantly across 60+ languages. Teams can do localization 100x faster with state-of-the-art quality, reaching more paying customers worldwide. The tool offers a GitHub Action for CI/CD automation and supports various formats like JSON, YAML, CSV, and Markdown. With lightning-fast AI localization, auto-updates, native quality translations, developer-friendly CLI, and scalability for startups and enterprise teams, Replexica is a top choice for efficient and effective software localization.

fittencode.nvim

Fitten Code AI Programming Assistant for Neovim provides fast completion using AI, asynchronous I/O, and support for various actions like document code, edit code, explain code, find bugs, generate unit test, implement features, optimize code, refactor code, start chat, and more. It offers features like accepting suggestions with Tab, accepting line with Ctrl + Down, accepting word with Ctrl + Right, undoing accepted text, automatic scrolling, and multiple HTTP/REST backends. It can run as a coc.nvim source or nvim-cmp source.

openlrc

Open-Lyrics is a Python library that transcribes voice files using faster-whisper and translates/polishes the resulting text into `.lrc` files in the desired language using LLM, e.g. OpenAI-GPT, Anthropic-Claude. It offers well preprocessed audio to reduce hallucination and context-aware translation to improve translation quality. Users can install the library from PyPI or GitHub and follow the installation steps to set up the environment. The tool supports GUI usage and provides Python code examples for transcription and translation tasks. It also includes features like utilizing context and glossary for translation enhancement, pricing information for different models, and a list of todo tasks for future improvements.

SwiftAgent

A type-safe, declarative framework for building AI agents in Swift, SwiftAgent is built on Apple FoundationModels. It allows users to compose agents by combining Steps in a declarative syntax similar to SwiftUI. The framework ensures compile-time checked input/output types, native Apple AI integration, structured output generation, and built-in security features like permission, sandbox, and guardrail systems. SwiftAgent is extensible with MCP integration, distributed agents, and a skills system. Users can install SwiftAgent with Swift 6.2+ on iOS 26+, macOS 26+, or Xcode 26+ using Swift Package Manager.

nextlint

Nextlint is a rich text editor (WYSIWYG) written in Svelte, using MeltUI headless UI and tailwindcss CSS framework. It is built on top of tiptap editor (headless editor) and prosemirror. Nextlint is easy to use, develop, and maintain. It has a prompt engine that helps to integrate with any AI API and enhance the writing experience. Dark/Light theme is supported and customizable.

Code

A3S Code is an embeddable AI coding agent framework in Rust that allows users to build agents capable of reading, writing, and executing code with tool access, planning, and safety controls. It is production-ready with features like permission system, HITL confirmation, skill-based tool restrictions, and error recovery. The framework is extensible with 19 trait-based extension points and supports lane-based priority queue for scalable multi-machine task distribution.

zeroclaw

ZeroClaw is a fast, small, and fully autonomous AI assistant infrastructure built with Rust. It features a lean runtime, cost-efficient deployment, fast cold starts, and a portable architecture. It is secure by design, fully swappable, and supports OpenAI-compatible providers. The tool is designed for low-cost boards and small cloud instances, with a memory footprint of less than 5MB. It is suitable for tasks like deploying AI assistants, swapping providers/channels/tools, and making every component pluggable.

AnyCrawl

AnyCrawl is a high-performance crawling and scraping toolkit designed for SERP crawling, web scraping, site crawling, and batch tasks. It offers multi-threading and multi-process capabilities for high performance. The tool also provides AI extraction for structured data extraction from pages, making it LLM-friendly and easy to integrate and use.

node-sdk

The ChatBotKit Node SDK is a JavaScript-based platform for building conversational AI bots and agents. It offers easy setup, serverless compatibility, modern framework support, customizability, and multi-platform deployment. With capabilities like multi-modal and multi-language support, conversation management, chat history review, custom datasets, and various integrations, this SDK enables users to create advanced chatbots for websites, mobile apps, and messaging platforms.

Conduit

Conduit is a unified Swift 6.2 SDK for local and cloud LLM inference, providing a single Swift-native API that can target Anthropic, OpenRouter, Ollama, MLX, HuggingFace, and Apple’s Foundation Models without rewriting your prompt pipeline. It allows switching between local, cloud, and system providers with minimal code changes, supports downloading models from HuggingFace Hub for local MLX inference, generates Swift types directly from LLM responses, offers privacy-first options for on-device running, and is built with Swift 6.2 concurrency features like actors, Sendable types, and AsyncSequence.

llama_ros

This repository provides a set of ROS 2 packages to integrate llama.cpp into ROS 2. By using the llama_ros packages, you can easily incorporate the powerful optimization capabilities of llama.cpp into your ROS 2 projects by running GGUF-based LLMs and VLMs.

opencode.nvim

opencode.nvim is a tool that integrates the opencode AI assistant with Neovim, allowing users to streamline editor-aware research, reviews, and requests. It provides features such as connecting to opencode instances, sharing editor context, input prompts with completions, executing commands, and monitoring state via statusline component. Users can define their own prompts, reload edited buffers in real-time, and forward Server-Sent-Events for automation. The tool offers sensible defaults with flexible configuration and API to fit various workflows, supporting ranges and dot-repeat in a Vim-like manner.

oh-my-pi

oh-my-pi is an AI coding agent for the terminal, providing tools for interactive coding, AI-powered git commits, Python code execution, LSP integration, time-traveling streamed rules, interactive code review, task management, interactive questioning, custom TypeScript slash commands, universal config discovery, MCP & plugin system, web search & fetch, SSH tool, Cursor provider integration, multi-credential support, image generation, TUI overhaul, edit fuzzy matching, and more. It offers a modern terminal interface with smart session management, supports multiple AI providers, and includes various tools for coding, task management, code review, and interactive questioning.

Bindu

Bindu is an operating layer for AI agents that provides identity, communication, and payment capabilities. It delivers a production-ready service with a convenient API to connect, authenticate, and orchestrate agents across distributed systems using open protocols: A2A, AP2, and X402. Built with a distributed architecture, Bindu makes it fast to develop and easy to integrate with any AI framework. Transform any agent framework into a fully interoperable service for communication, collaboration, and commerce in the Internet of Agents.

For similar tasks

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

rclip

rclip is a command-line photo search tool powered by OpenAI's CLIP neural network. It allows users to search for images using text queries, similar image search, and combining multiple queries. The tool extracts features from photos to enable searching and indexing, with options for previewing results in supported terminals or custom viewers. Users can install rclip on Linux, macOS, and Windows using different installation methods. The repository follows the Conventional Commits standard and welcomes contributions from the community.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

core

OpenSumi is a framework designed to help users quickly build AI Native IDE products. It provides a set of tools and templates for creating Cloud IDEs, Desktop IDEs based on Electron, CodeBlitz web IDE Framework, Lite Web IDE on the Browser, and Mini-App-like IDEs. The framework also offers documentation for users to refer to and a detailed guide on contributing to the project. OpenSumi encourages contributions from the community and provides a platform for users to report bugs, contribute code, or improve documentation. The project is licensed under the MIT license and contains third-party code under other open source licenses.

yolo-ios-app

The Ultralytics YOLO iOS App GitHub repository offers an advanced object detection tool leveraging YOLOv8 models for iOS devices. Users can transform their devices into intelligent detection tools to explore the world in a new and exciting way. The app provides real-time detection capabilities with multiple AI models to choose from, ranging from 'nano' to 'x-large'. Contributors are welcome to participate in this open-source project, and licensing options include AGPL-3.0 for open-source use and an Enterprise License for commercial integration. Users can easily set up the app by following the provided steps, including cloning the repository, adding YOLOv8 models, and running the app on their iOS devices.

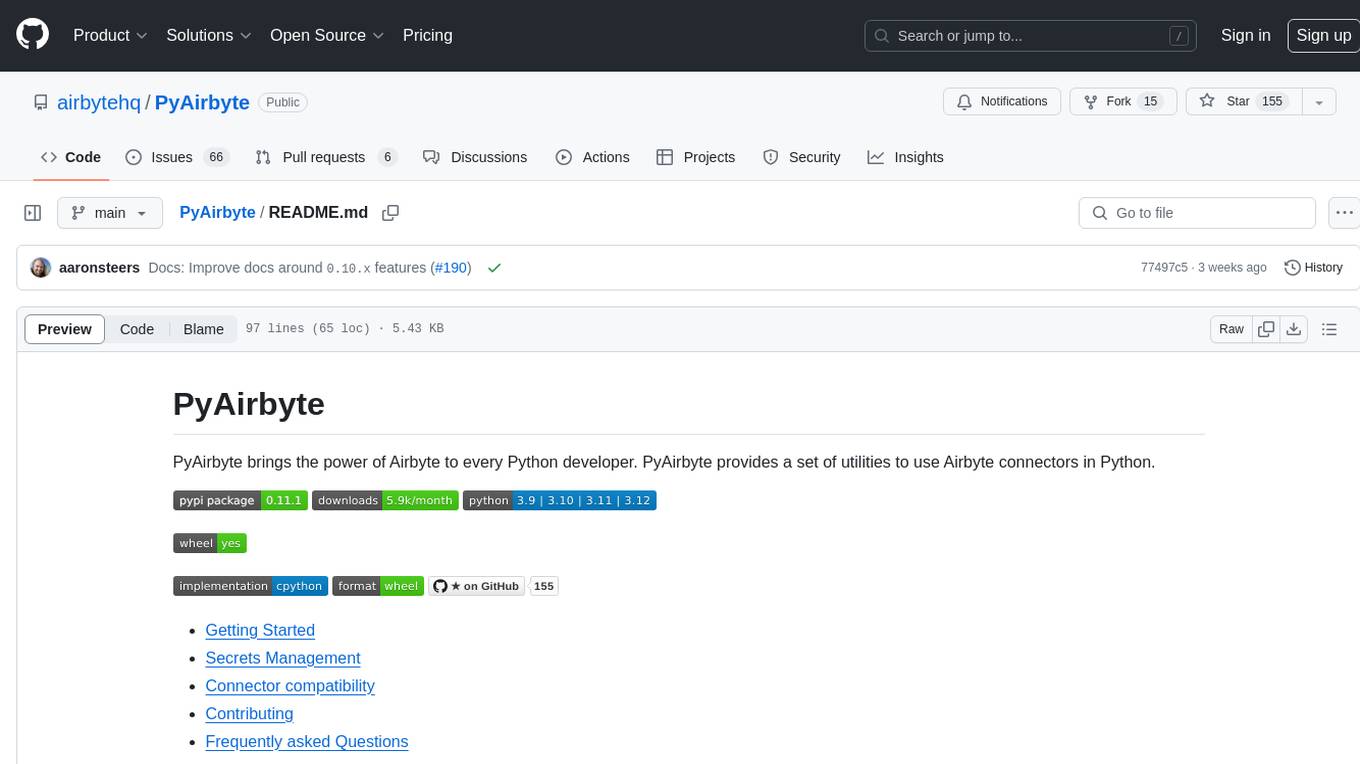

PyAirbyte

PyAirbyte brings the power of Airbyte to every Python developer by providing a set of utilities to use Airbyte connectors in Python. It enables users to easily manage secrets, work with various connectors like GitHub, Shopify, and Postgres, and contribute to the project. PyAirbyte is not a replacement for Airbyte but complements it, supporting data orchestration frameworks like Airflow and Snowpark. Users can develop ETL pipelines and import connectors from local directories. The tool simplifies data integration tasks for Python developers.

md-agent

MD-Agent is a LLM-agent based toolset for Molecular Dynamics. It uses Langchain and a collection of tools to set up and execute molecular dynamics simulations, particularly in OpenMM. The tool assists in environment setup, installation, and usage by providing detailed steps. It also requires API keys for certain functionalities, such as OpenAI and paper-qa for literature searches. Contributions to the project are welcome, with a detailed Contributor's Guide available for interested individuals.

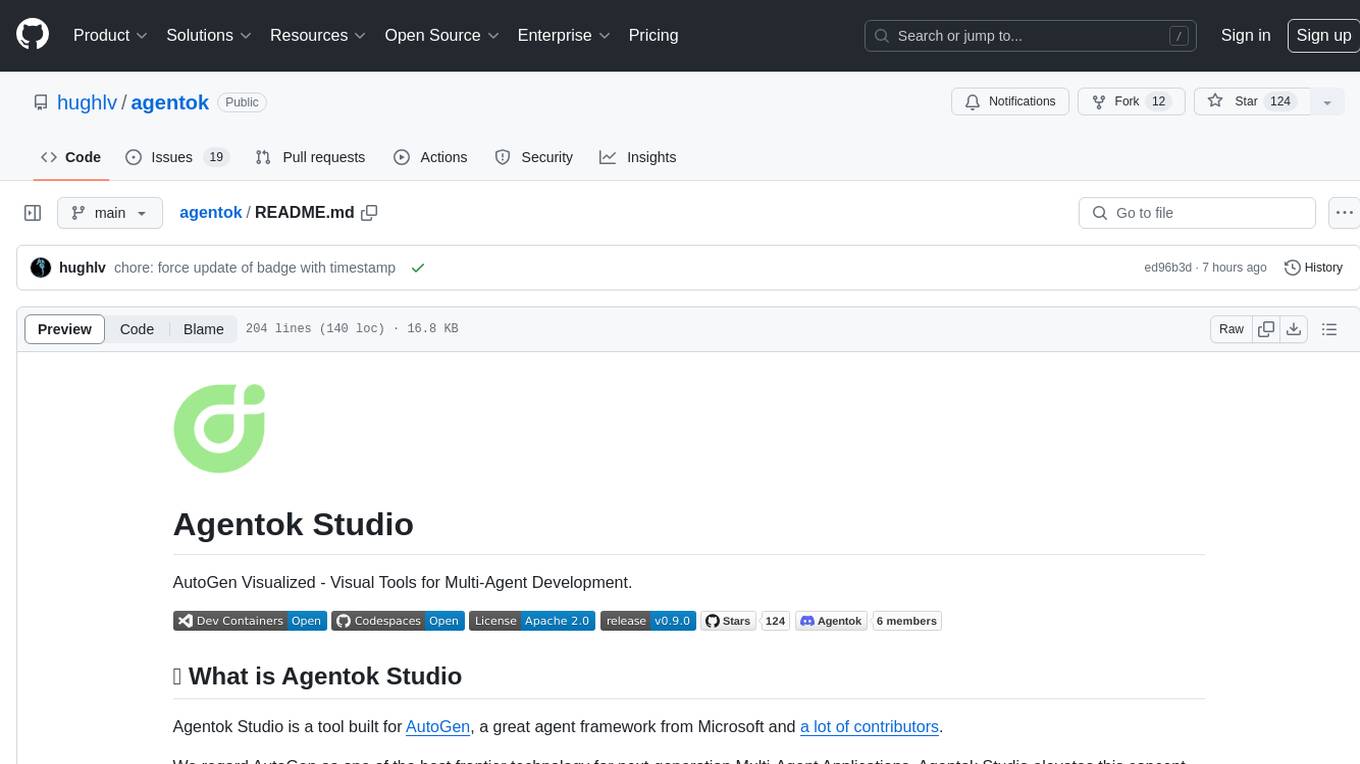

flowgen

FlowGen is a tool built for AutoGen, a great agent framework from Microsoft and a lot of contributors. It provides intuitive visual tools that streamline the construction and oversight of complex agent-based workflows, simplifying the process for creators and developers. Users can create Autoflows, chat with agents, and share flow templates. The tool is fully dockerized and supports deployment on Railway.app. Contributions to the project are welcome, and the platform uses semantic-release for versioning and releases.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save them to JSON and Excel files, and perform initial data analysis and image captioning. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent customer-service dialogue tool based on large models. It supports platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/Lazy Treasure Box (more platforms will be supported in the future). It can process text, voice and pictures, access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.