flowgen

AutoGen Visualized - Visual Tools for Multi-Agent Development.

Stars: 123

FlowGen is a tool built for AutoGen, a great agent framework from Microsoft and a lot of contributors. It provides intuitive visual tools that streamline the construction and oversight of complex agent-based workflows, simplifying the process for creators and developers. Users can create Autoflows, chat with agents, and share flow templates. The tool is fully dockerized and supports deployment on Railway.app. Contributions to the project are welcome, and the platform uses semantic-release for versioning and releases.

README:

FlowGen is a tool built for AutoGen, a great agent framework from Microsoft and a lot of contributors.

We regard AutoGen as one of the best frontier technologies for next-generation Multi-Agent Applications. FlowGen builds on it with intuitive visual tools that streamline the construction and oversight of complex agent-based workflows, simplifying the entire process for creators and developers.

Contributions (Issues, Pull Requests, even Typo-corrections) to this project are welcome! All contributors will be added to the Contribution Wall.

You can create an Autoflow from scratch, or fork from a template. The Autoflow is visualized as a graph, and you can drag and drop nodes to build agents in flow style.

You can launch an Autoflow or an Autoflow Template in a chat window, and chat with the agents.

There is also a place to share and discover flow templates.

To quickly explore what FlowGen has to offer, simply visit https://platform.flowgen.app.

For a more in-depth look at the platform, please refer to our Getting Started and other documents.

We made tutorials based on the official notebooks from the AutoGen repository. You can refer to the original notebook here.

🔲 Planned/Working ✅ Completed 🆘 With Issues ⭕ Out of Scope

| Example | Status | Comments |
|---|---|---|
| simple_chat | ✅ | Simple Chat |
| auto_feedback_from_code_execution | ✅ | Feedback from Code Execution |
| | ⭕ | This is a feature to be considered for addition to flow generation. #40 |
| chess | 🔲 | This depends on the feature for importing custom agents. #38 |
| compression | ✅ | |
| dalle_and_gpt4v | ✅ | Supported with app.extensions |
| function_call_async | ✅ | |
| function_call | ✅ | |
| graph_modelling_language | ⭕ | This is out of project scope. Open an issue if necessary. |
| group_chat_RAG | 🆘 | This notebook does not work. |
| groupchat_research | ✅ | |
| groupchat_vis | ✅ | |
| groupchat | ✅ | |
| hierarchy_flow_using_select_speaker | 🔲 | |
| human_feedback | ✅ | Human in the Loop |
| inception_function | 🔲 | |
| | ⭕ | No plan to support |
| lmm_gpt-4v | ✅ | |
| lmm_llava | ✅ | Depends on Replicate |
| MathChat | ✅ | Math Chat |
| oai_assistant_function_call | ✅ | |
| oai_assistant_groupchat | 🆘 | Very slow and does not work well; sometimes does not return. |
| oai_assistant_retrieval | ✅ | Retrieval (OAI) |
| oai_assistant_twoagents_basic | ✅ | |
| oai_code_interpreter | ✅ | |
| planning | ✅ | This sample works fine, but does not exit gracefully. |
| qdrant_RetrieveChat | 🔲 | |
| RetrieveChat | 🔲 | |
| stream | 🔲 | |
| teachability | 🔲 | |
| teaching | 🔲 | |
| two_users | ✅ | The response will be very long, so set a large max_tokens. |
| video_transcript_translate_with_whisper | ✅ | Depends on the ffmpeg library: run brew install ffmpeg and export IMAGEIO_FFMPEG_EXE. Because ffmpeg takes up too much space, the online version has dropped support. |
| web_info | ✅ | |
| cq_math | ⭕ | This example has little to do with AutoGen; why not just use the OpenAI API? |
| Async_human_input | ⭕ | Needs a scenario. |
| oai_chatgpt_gpt4 | ⭕ | Fine-tuning, out of project scope |
| oai_completion | ⭕ | Fine-tuning, out of project scope |

The project contains a Frontend (built with Next.js) and a Backend service (built with Flask in Python), and has been fully dockerized.

The easiest way to run locally is with docker-compose:

docker-compose up -d

You can also build and run the ui and service separately with docker:

docker build -t flowgen-api ./api

docker run -d -p 5004:5004 flowgen-api

docker build -t flowgen-ui ./ui

docker run -d -p 2855:2855 flowgen-ui

docker build -t flowgen-db ./pocketbase

docker run -d -p 7676:7676 flowgen-db

(The default port number 2855 is the address of our first office.)

Railway.app supports deploying applications as Docker containers. Clicking the "Deploy on Railway" button streamlines the setup and deployment of your application on the Railway platform:

- Click the "Deploy on Railway" button to start the process on Railway.app.
- Log in to Railway and set the following environment variables:
  - `PORT`: Set this for each service to `2855`, `5004`, and `7676` respectively.
- Confirm the settings and deploy.
- After deployment, visit the provided URL to access your deployed application.
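The `PORT` setting above can be illustrated with a minimal sketch of how a service might pick its listening port. The `resolve_port` helper and the service labels `ui`, `api`, and `pocketbase` are hypothetical names chosen for this example; only the three default port numbers come from this README, and the real services may read `PORT` differently.

```python
import os

# Default ports taken from this README; the service labels are assumptions.
DEFAULT_PORTS = {"ui": 2855, "api": 5004, "pocketbase": 7676}


def resolve_port(service, env=None):
    """Return the port a service should listen on.

    A PORT value in the environment (for example, one set on Railway)
    wins; otherwise the README default for that service is used.
    """
    env = os.environ if env is None else env
    raw = env.get("PORT")
    if raw is None or raw.strip() == "":
        return DEFAULT_PORTS[service]
    port = int(raw)  # raises ValueError on non-numeric input
    if not 1 <= port <= 65535:
        raise ValueError(f"PORT out of range: {port}")
    return port
```

Passing a plain dictionary as `env` makes the helper easy to try without touching the real environment, for example `resolve_port("api", {"PORT": "8080"})`.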

If you're interested in contributing to the development of this project or wish to run it from the source code, you have the option to run the ui and service independently. Here's how you can do that:

- UI (Frontend)
  - Navigate to the ui directory: `cd ui`.
  - Rename `.env.sample` to `.env.local` and set the values of the variables correctly.
  - Install the necessary dependencies using the appropriate package manager command (e.g., `pnpm install` or `yarn`).
  - Run the ui service using the start-up script provided (e.g., `pnpm dev` or `yarn dev`).

- API (Backend Services)
  - Switch to the api service directory: `cd api`.
  - Create a virtual environment: `python3 -m venv venv`.
  - Activate the virtual environment: `source venv/bin/activate`.
  - Install all required dependencies: `pip install -r requirements.txt`.
  - Launch the api service: `uvicorn app.main:app --reload --port 5004`.

REPLICATE_API_TOKEN is needed for the LLaVA agent. If you need to use this agent, make sure to include this token in the environment variables, such as the Environment Variables on Railway.app.

IMPORTANT: For security reasons, the latest version of autogen requires Docker for code execution. You need to either install Docker on your local machine beforehand, or add AUTOGEN_USE_DOCKER=False to the file /api/.env.

- PocketBase:
  - Switch to the PocketBase directory: `cd pocketbase`.
  - Build the container: `docker build -t flowgen-db .`
  - Run the container: `docker run -it --rm -p 7676:7676 flowgen-db`
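As a rough illustration of the `AUTOGEN_USE_DOCKER` note above, the sketch below shows one way an environment flag like this could be turned into a code-execution setting. The helper names, the accepted spellings of true and false, and the `work_dir` default are assumptions made for this example; this is not the parsing logic of autogen or of the FlowGen api service.

```python
import os

# Accepted spellings are an assumption for this sketch.
TRUTHY = {"1", "true", "yes", "t"}
FALSY = {"0", "false", "no", "f"}


def use_docker_from_env(env=None):
    """Interpret AUTOGEN_USE_DOCKER; an unset variable means Docker is required."""
    env = os.environ if env is None else env
    raw = env.get("AUTOGEN_USE_DOCKER", "True").strip().lower()
    if raw in TRUTHY:
        return True
    if raw in FALSY:
        return False
    raise ValueError(f"Unrecognized AUTOGEN_USE_DOCKER value: {raw!r}")


def code_execution_config(env=None, work_dir="coding"):
    """Build a settings dictionary for code execution (hypothetical helper)."""
    return {"work_dir": work_dir, "use_docker": use_docker_from_env(env)}
```

With `AUTOGEN_USE_DOCKER=False` in `/api/.env` and that file loaded into the environment, `code_execution_config()` would report `use_docker` as `False`; code then runs directly on the host, which is the security trade-off the note warns about.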

Each new commit to the main branch triggers an automatic deployment on Railway.app, ensuring you experience the latest version of the service.

> [!WARNING]
> Changes to the PocketBase project will trigger a rebuild and redeployment of all instances, which will wipe all the data.
>
> Please do not use it for production purposes, and make sure you export your flows in time.

Once you've started both the ui and api services by following the steps previously outlined, you can access the application by opening your web browser and navigating to:

- ui: http://localhost:2855

- api: http://localhost:5004 (OpenAPI docs served at http://localhost:5004/redoc)

- pocketbase: http://localhost:7676

If your services have started successfully and are running on the expected ports, you should see the user interface or receive responses from the api services at these URLs.
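A quick way to confirm the three local services respond is a small probe script. The sketch below uses only the Python standard library; the `is_reachable` and `check_services` helpers are hypothetical names for this example, and the URLs are the local defaults listed above.

```python
import urllib.error
import urllib.request

# Local default URLs from this README.
SERVICE_URLS = {
    "ui": "http://localhost:2855",
    "api": "http://localhost:5004/redoc",
    "pocketbase": "http://localhost:7676",
}


def is_reachable(url, timeout=3.0):
    """Return True if the server at `url` sends back any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar.
        return False


def check_services(urls=SERVICE_URLS, probe=is_reachable):
    """Map each service name to whether its URL responded."""
    return {name: probe(url) for name, url in urls.items()}
```

Calling `check_services()` after starting the stack returns a dictionary such as `{"ui": True, ...}`; a `False` entry usually means the service is not running or is bound to a different port.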

Contributions are welcome! It's not limited to code, but also includes documentation and other aspects of the project. You can leave your comments on the Discord Server.

This project welcomes contributions and suggestions. Please read our Contributing Guide first.

If you are new to GitHub, here is a detailed help source on getting involved with development on GitHub.

Please consider contributing to AutoGen, as FlowGen relies on a robust foundation to deliver its capabilities. Your contributions can help enhance the platform's core functionalities, ensuring a more seamless and efficient development experience for Multi-Agent Applications.

This project uses 📦🚀semantic-release to manage versioning and releases. To avoid overly frequent automatic releases, a release is triggered manually through a GitHub Action.

To follow the Semantic Release process, we enforce the commitlint convention on commit messages. Please refer to Commitlint for more details.
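To give a feel for what a commit-message convention checks, here is a minimal sketch of a header validator in the style of Conventional Commits. The allowed types and the 100-character limit are assumptions based on a commonly used preset; the project's actual commitlint configuration may differ, and this is not how commitlint itself is implemented.

```python
import re

# Types from a commonly used Conventional Commits preset (assumption).
ALLOWED_TYPES = (
    "build", "chore", "ci", "docs", "feat", "fix",
    "perf", "refactor", "revert", "style", "test",
)

# type, optional (scope), optional "!", then ": " and a non-empty subject.
HEADER_RE = re.compile(
    r"^(?P<type>[a-z]+)(\((?P<scope>[^()\s]+)\))?(?P<breaking>!)?: (?P<subject>\S.*)$"
)


def check_header(header, max_length=100):
    """Return a list of problems with a commit header; empty means it passes."""
    match = HEADER_RE.match(header)
    if match is None:
        return ["header must look like 'type(scope): subject'"]
    problems = []
    if match.group("type") not in ALLOWED_TYPES:
        problems.append(f"unknown type: {match.group('type')}")
    if len(header) > max_length:
        problems.append(f"header longer than {max_length} characters")
    return problems
```

For example, `check_header("feat(ui): add template gallery")` returns an empty list, while `check_header("Added stuff")` reports that the header does not follow the `type(scope): subject` shape.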

The project is licensed under Apache 2.0 with additional terms and conditions.


Alternative AI tools for flowgen

Similar Open Source Tools

flowgen

FlowGen is a tool built for AutoGen, a great agent framework from Microsoft and a lot of contributors. It provides intuitive visual tools that streamline the construction and oversight of complex agent-based workflows, simplifying the process for creators and developers. Users can create Autoflows, chat with agents, and share flow templates. The tool is fully dockerized and supports deployment on Railway.app. Contributions to the project are welcome, and the platform uses semantic-release for versioning and releases.

AI-Toolbox

AI-Toolbox is a C++ library aimed at representing and solving common AI problems, with a focus on MDPs, POMDPs, and related algorithms. It provides an easy-to-use interface that is extensible to many problems while maintaining readable code. The toolbox includes tutorials for beginners in reinforcement learning and offers Python bindings for seamless integration. It features utilities for combinatorics, polytopes, linear programming, sampling, distributions, statistics, belief updating, data structures, logging, seeding, and more. Additionally, it supports bandit/normal games, single agent MDP/stochastic games, single agent POMDP, and factored/joint multi-agent scenarios.

wppconnect

WPPConnect is an open source project developed by the JavaScript community with the aim of exporting functions from WhatsApp Web to the node, which can be used to support the creation of any interaction, such as customer service, media sending, intelligence recognition based on phrases artificial and many other things.

mcp-for-beginners

The Model Context Protocol (MCP) Curriculum for Beginners is an open-source framework designed to standardize interactions between AI models and client applications. It offers a structured learning path with practical coding examples and real-world use cases in popular programming languages like C#, Java, JavaScript, Rust, Python, and TypeScript. Whether you're an AI developer, system architect, or software engineer, this guide provides comprehensive resources for mastering MCP fundamentals and implementation strategies.

torchtune

Torchtune is a PyTorch-native library for easily authoring, fine-tuning, and experimenting with LLMs. It provides native-PyTorch implementations of popular LLMs using composable and modular building blocks, easy-to-use and hackable training recipes for popular fine-tuning techniques, YAML configs for easily configuring training, evaluation, quantization, or inference recipes, and built-in support for many popular dataset formats and prompt templates to help you quickly get started with training.

FFAIVideo

FFAIVideo is a lightweight node.js project that utilizes popular AI LLM to intelligently generate short videos. It supports multiple AI LLM models such as OpenAI, Moonshot, Azure, g4f, Google Gemini, etc. Users can input text to automatically synthesize exciting video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses Pexels website for video resources. Installation of FFmpeg is essential for smooth operation. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers with minimal dependencies and simple usage.

page-assist

Page Assist is an open-source Chrome Extension that provides a Sidebar and Web UI for your Local AI model. It allows you to interact with your model from any webpage.

llm-python

A set of instructional materials, code samples and Python scripts featuring LLMs (GPT etc) through interfaces like llamaindex, langchain, Chroma (Chromadb), Pinecone etc. Mainly used to store reference code for my LangChain tutorials on YouTube.

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

last_layer

last_layer is a security library designed to protect LLM applications from prompt injection attacks, jailbreaks, and exploits. It acts as a robust filtering layer to scrutinize prompts before they are processed by LLMs, ensuring that only safe and appropriate content is allowed through. The tool offers ultra-fast scanning with low latency, privacy-focused operation without tracking or network calls, compatibility with serverless platforms, advanced threat detection mechanisms, and regular updates to adapt to evolving security challenges. It significantly reduces the risk of prompt-based attacks and exploits but cannot guarantee complete protection against all possible threats.

outspeed

Outspeed is a PyTorch-inspired SDK for building real-time AI applications on voice and video input. It offers low-latency processing of streaming audio and video, an intuitive API familiar to PyTorch users, flexible integration of custom AI models, and tools for data preprocessing and model deployment. Ideal for developing voice assistants, video analytics, and other real-time AI applications processing audio-visual data.

cambrian

Cambrian-1 is a fully open project focused on exploring multimodal Large Language Models (LLMs) with a vision-centric approach. It offers competitive performance across various benchmarks with models at different parameter levels. The project includes training configurations, model weights, instruction tuning data, and evaluation details. Users can interact with Cambrian-1 through a Gradio web interface for inference. The project is inspired by LLaVA and incorporates contributions from Vicuna, LLaMA, and Yi. Cambrian-1 is licensed under Apache 2.0 and utilizes datasets and checkpoints subject to their respective original licenses.

symbiotic-ai

Symbiotic AI is a tool that transforms any AI into a symbiotic agent by providing persistent memory, pattern recognition, and autonomous execution across sessions. It is not a chatbot but rather a co-pilot that resides in your filesystem. The system consists of four markdown files that define the agent's personality, user profile, agent operations, and current state. By updating these files, the agent gains insights and evolves based on real context about the user. Symbiotic AI challenges users, remembers information across sessions, takes actions such as writing code and researching, and evolves over time to provide personalized insights and advice.

zoonk

Zoonk is a web app designed for creating interactive courses using AI. Currently in early development stage, it is not yet ready for use but aims to be available for testing and contributions in the future. The project focuses on leveraging AI technology to enhance the learning experience by providing interactive course creation tools. Zoonk also conducts model evaluations on different prompts to improve its AI capabilities. The project has garnered support from various individuals who believe in its vision and potential.

superduperdb

SuperDuperDB is a Python framework for integrating AI models, APIs, and vector search engines directly with your existing databases, including hosting of your own models, streaming inference and scalable model training/fine-tuning. Build, deploy and manage any AI application without the need for complex pipelines, infrastructure as well as specialized vector databases, and moving our data there, by integrating AI at your data's source: - Generative AI, LLMs, RAG, vector search - Standard machine learning use-cases (classification, segmentation, regression, forecasting recommendation etc.) - Custom AI use-cases involving specialized models - Even the most complex applications/workflows in which different models work together SuperDuperDB is **not** a database. Think `db = superduper(db)`: SuperDuperDB transforms your databases into an intelligent platform that allows you to leverage the full AI and Python ecosystem. A single development and deployment environment for all your AI applications in one place, fully scalable and easy to manage.

For similar tasks

flowgen

FlowGen is a tool built for AutoGen, a great agent framework from Microsoft and a lot of contributors. It provides intuitive visual tools that streamline the construction and oversight of complex agent-based workflows, simplifying the process for creators and developers. Users can create Autoflows, chat with agents, and share flow templates. The tool is fully dockerized and supports deployment on Railway.app. Contributions to the project are welcome, and the platform uses semantic-release for versioning and releases.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

eternal-ai

Eternal AI is an open source AI protocol for fully onchain agents, enabling developers to create various types of onchain AI agents without middlemen. It operates on a decentralized infrastructure with state-of-the-art models and omnichain interoperability. The protocol architecture includes components like ai-kernel, decentralized-agents, decentralized-inference, decentralized-compute, agent-as-a-service, and agent-studio. Ongoing research projects include cuda-evm, nft-ai, and physical-ai. The system requires Node.js, npm, Docker Desktop, Go, and Ollama for installation. The 'eai' CLI tool allows users to create agents, fetch agent info, list agents, and chat with agents. Design principles focus on decentralization, trustlessness, production-grade quality, and unified agent interface. Featured integrations can be quickly implemented, and governance will be overseen by EAI holders in the future.

agent-shell

Agent-Shell is a native Emacs shell designed to interact with LLM agents powered by ACP (Agent Client Protocol). With Agent-Shell, users can chat with various ACP-driven agents like Gemini CLI, Claude Code, Auggie, Mistral Vibe, and more. The tool provides a seamless interface for communication and interaction with these agents within the Emacs environment.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

rclip

rclip is a command-line photo search tool powered by the OpenAI's CLIP neural network. It allows users to search for images using text queries, similar image search, and combining multiple queries. The tool extracts features from photos to enable searching and indexing, with options for previewing results in supported terminals or custom viewers. Users can install rclip on Linux, macOS, and Windows using different installation methods. The repository follows the Conventional Commits standard and welcomes contributions from the community.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

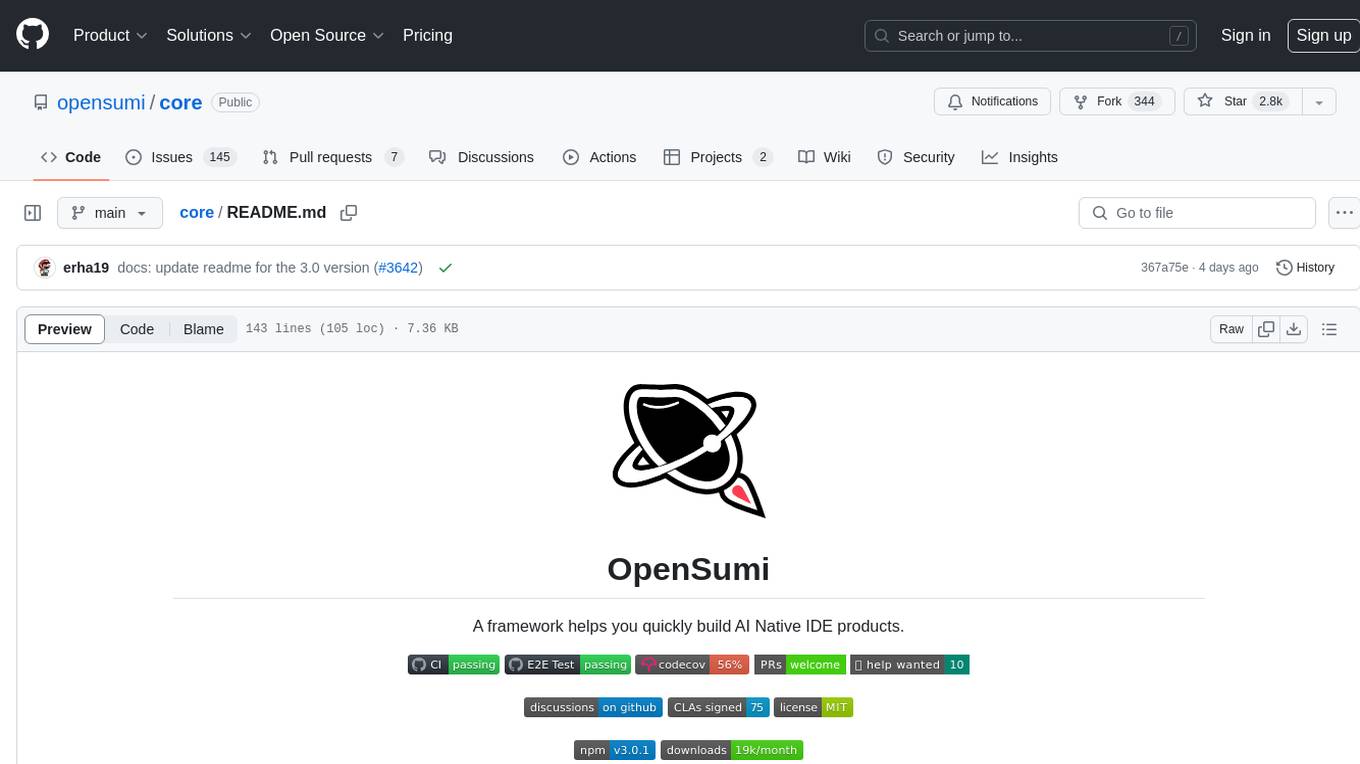

core

OpenSumi is a framework designed to help users quickly build AI Native IDE products. It provides a set of tools and templates for creating Cloud IDEs, Desktop IDEs based on Electron, CodeBlitz web IDE Framework, Lite Web IDE on the Browser, and Mini-App liked IDE. The framework also offers documentation for users to refer to and a detailed guide on contributing to the project. OpenSumi encourages contributions from the community and provides a platform for users to report bugs, contribute code, or improve documentation. The project is licensed under the MIT license and contains third-party code under other open source licenses.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.