eternal-ai

A Peer-to-Peer Autonomous Agent System

Stars: 88

Eternal AI is an open source AI protocol for fully onchain agents, enabling developers to create various types of onchain AI agents without middlemen. It operates on a decentralized infrastructure with state-of-the-art models and omnichain interoperability. The protocol architecture includes components like ai-kernel, decentralized-agents, decentralized-inference, decentralized-compute, agent-as-a-service, and agent-studio. Ongoing research projects include cuda-evm, nft-ai, and physical-ai. The system requires Node.js, npm, Docker Desktop, Go, and Ollama for installation. The 'eai' CLI tool allows users to create agents, fetch agent info, list agents, and chat with agents. Design principles focus on decentralization, trustlessness, production-grade quality, and unified agent interface. Featured integrations can be quickly implemented, and governance will be overseen by EAI holders in the future.

README:

Eternal AI is an open source AI protocol for fully onchain agents. Deployed onchain, these AI agents run exactly as programmed — all without a middleman or counterparty risk. They are permissionless, uncensored, trustless, and unstoppable.

Eternal AI agents operate on a powerful peer-to-peer global infrastructure with many unique properties:

- End-to-end decentralization: Inference, Compute, Storage, etc.

- State-of-the-art models: DeepSeek, Llama, FLUX, etc.

- Multichain support: Bitcoin, Ethereum, Solana, etc.

Run the following command to start the whole system on your local network.

sudo bash quickstart.sh

Navigate to ./agent-cli to install and use the eai CLI.

Run the command to install:

sh install.sh

Copy .env.example to .env and update the .env file:

cp .env.example .env

PRIVATE_KEY=

ETERNALAI_API_KEY=

For the PRIVATE_KEY, make sure your account has enough gas tokens on the blockchains where you intend to create agents.

For the ETERNALAI_API_KEY, you can get it here.
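Before running any eai command, it can help to confirm that both variables are actually filled in. The following is a minimal sketch, not part of the Eternal AI tooling: the helper names `parse_env` and `missing_keys` are hypothetical, and the parser assumes simple KEY=VALUE lines like the ones shown above.

```python
# Hypothetical pre-flight check for the .env file described above.
# Only the two key names come from the README; everything else is illustrative.
REQUIRED_KEYS = ("PRIVATE_KEY", "ETERNALAI_API_KEY")


def parse_env(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(text: str) -> list:
    """Return the required keys that are absent or left empty."""
    values = parse_env(text)
    return [key for key in REQUIRED_KEYS if not values.get(key)]


# The freshly copied .env.example leaves both values empty.
sample = "PRIVATE_KEY=\nETERNALAI_API_KEY=\n"
print(missing_keys(sample))
```

To check a real file, pass its contents instead of the sample string, for example `missing_keys(open(".env").read())`.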

eai agent create -p <system_prompt_file_path> -n <agent_name> -c <chain_name> -f <framework> -m <model_name>

Only the -p parameter is required; the others are optional.

Example:

eai agent create -p ../decentralized-agents/characters/donald_trump.txt -n trump-agent -c base -f eternalai -m DeepSeek-R1-Distill-Llama-70B

We are creating an agent who is a Donald Trump twin called trump-agent on the Base Chain. It uses the EternalAI framework and the DeepSeek-R1-Distill-Llama-70B model. The .txt file is the system prompt for your agent, which defines its initial behavior. You can edit this file to customize the agent’s personality.
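For scripted setups, the create command can be assembled programmatically. The sketch below only builds the argument list from the flags documented above (-p, -n, -c, -f, -m) and does not invoke eai itself. The function name `build_create_command` and its keyword names are hypothetical.

```python
import shlex


def build_create_command(prompt_path, name=None, chain=None,
                         framework=None, model=None):
    """Build the argv for `eai agent create`; only -p is required."""
    if not prompt_path:
        raise ValueError("-p <system_prompt_file_path> is required")
    argv = ["eai", "agent", "create", "-p", prompt_path]
    # Optional flags are appended only when a value was supplied.
    optional = (("-n", name), ("-c", chain), ("-f", framework), ("-m", model))
    for flag, value in optional:
        if value:
            argv += [flag, value]
    return argv


argv = build_create_command(
    "../decentralized-agents/characters/donald_trump.txt",
    name="trump-agent",
    chain="base",
    framework="eternalai",
    model="DeepSeek-R1-Distill-Llama-70B",
)
# Print a shell-safe rendering of the command for review before running it.
print(shlex.join(argv))
```

The resulting list could be handed to `subprocess.run(argv, check=True)` once the eai CLI is installed.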

eai agent ls

eai agent start -n <agent_name>

eai agent chat -n <agent_name>

eai agent stop -n <agent_name>

- Decentralize everything. Ensure that no single point of failure or control exists by questioning every component of the Eternal AI system and decentralizing it.

- Trustless. Use smart contracts at every step to trustlessly coordinate all parties in the system.

- Production grade. Code must be written with production-grade quality and designed for scale.

- Everything is an agent. Not just user-facing agents, but every component in the infrastructure, whether a swarm of agents, an AI model storage system, a GPU compute node, a cross-chain bridge, an infrastructure microservice, or an API, is implemented as an agent.

- Agents do one thing and do it well. Each agent should have a single, well-defined purpose and perform it well.

- Prompting as the unified agent interface. All agents have a unified, simplified I/O interface with prompting and response for both human-to-agent interactions and agent-to-agent interactions.

- Composable. Agents can work together to perform complex tasks via a chain of prompts.
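The last three principles (single purpose, prompting as the interface, composability) can be illustrated with a toy model. This is a sketch under stated assumptions, not the Eternal AI API: an agent is modeled as a plain function from prompt string to response string, and `chain`, `summarizer`, and `shouter` are hypothetical names.

```python
from functools import reduce
from typing import Callable

# Assumption: every agent exposes the same interface, prompt in, response out.
Agent = Callable[[str], str]


def chain(*agents: Agent) -> Agent:
    """Compose agents so each response becomes the next agent's prompt."""
    def run(prompt: str) -> str:
        return reduce(lambda text, agent: agent(text), agents, prompt)
    return run


def summarizer(prompt: str) -> str:
    """Toy single-purpose agent: keep only the first sentence."""
    return prompt.split(".")[0].strip() + "."


def shouter(prompt: str) -> str:
    """Toy single-purpose agent: upper-case the text."""
    return prompt.upper()


# Because chain() returns an Agent, pipelines can be nested in other pipelines.
pipeline = chain(summarizer, shouter)
print(pipeline("Agents are composable. Each does one thing."))
# prints "AGENTS ARE COMPOSABLE."
```

Since the composed pipeline has the same prompt-and-response signature as its parts, human-to-agent and agent-to-agent calls look identical, which is the point of a unified interface.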

Eternal AI is built using a modular approach, so support for other blockchains, agent frameworks, GPU providers, or AI models can be implemented quickly. Please reach out if you run into issues while working on an integration.

We are still building out the Eternal AI DAO.

Once the DAO is in place, EAI holders will oversee the governance and the treasury of the Eternal AI project with a clear mission: to build truly open AI.

Thank you for considering contributing to the source code. We welcome contributions from anyone and are grateful for even the most minor fixes.

If you'd like to contribute to Eternal AI, please fork, fix, commit, and send a pull request for the maintainers to review and merge into the main code base.

- GitHub Issues: bug reports, feature requests, issues, etc.

- GitHub Discussions: discuss designs, research, new ideas, thoughts, etc.

- X (Twitter): announcements about Eternal AI

Alternative AI tools for eternal-ai

Similar Open Source Tools

web-llm-chat

WebLLM Chat is a private AI chat interface that combines WebLLM with a user-friendly design, leveraging WebGPU to run large language models natively in your browser. It offers a browser-native AI experience with WebGPU acceleration, guaranteed privacy because all data processing happens locally, offline accessibility, a user-friendly interface with markdown support, and open-source customization. The project aims to democratize AI technology by making powerful tools accessible directly to end users, enhancing the chatting experience and broadening the scope for deployment of self-hosted and customizable language models.

llm-engine

Scale's LLM Engine is an open-source Python library, CLI, and Helm chart that provides everything you need to serve and fine-tune foundation models, whether you use Scale's hosted infrastructure or do it in your own cloud infrastructure using Kubernetes.

labs-ai-tools-for-devs

This repository provides AI tools for developers through Docker containers, enabling agentic workflows. It allows users to create complex workflows using Dockerized tools and Markdown, leveraging various LLM models. The core features include Dockerized tools, conversation loops, multi-model agents, project-first design, and trackable prompts stored in a git repo.

mahilo

Mahilo is a flexible framework for creating multi-agent systems that can interact with humans while sharing context internally. It allows developers to set up complex agent networks for various applications, from customer service to emergency response simulations. Agents can communicate with each other and with humans, making the system efficient by handling context from multiple agents and helping humans stay focused on specific problems. The system supports Realtime API for voice interactions, WebSocket-based communication, flexible communication patterns, session management, and easy agent definition.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

hashbrown

Hashbrown is a lightweight and efficient hashing library for Python, designed to provide easy-to-use cryptographic hashing functions for secure data storage and transmission. It supports a variety of hashing algorithms, including MD5, SHA-1, SHA-256, and SHA-512, allowing users to generate hash values for strings, files, and other data types. With Hashbrown, developers can quickly implement data integrity checks, password hashing, digital signatures, and other security features in their Python applications.

writer-framework

Writer Framework is an open-source framework for creating AI applications. It allows users to build user interfaces using a visual editor and write the backend code in Python. The framework is fast, flexible, and provides separation of concerns between UI and business logic. It is reactive and state-driven, highly customizable without requiring CSS, fast in event handling, developer-friendly with easy installation and quick start options, and contains full documentation for using its AI module and deployment options.

cloudflare-rag

This repository provides a fullstack example of building a Retrieval Augmented Generation (RAG) app with Cloudflare. It utilizes Cloudflare Workers, Pages, D1, KV, R2, AI Gateway, and Workers AI. The app features streaming interactions to the UI, hybrid RAG with Full-Text Search and Vector Search, switchable providers using AI Gateway, per-IP rate limiting with Cloudflare's KV, OCR within Cloudflare Worker, and Smart Placement for workload optimization. The development setup requires Node, pnpm, and wrangler CLI, along with setting up necessary primitives and API keys. Deployment involves setting up secrets and deploying the app to Cloudflare Pages. The project implements a Hybrid Search RAG approach combining Full Text Search against D1 and Hybrid Search with embeddings against Vectorize to enhance context for the LLM.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

InfiniStore

InfiniStore is an open-source high-performance KV store designed to support LLM inference clusters. It provides high-performance, low-latency KV cache transfer and reuse among inference nodes. In addition to inference clusters, it can be used as a standalone KV store for integration with LLM training or inference services. InfiniStore is currently integrated with vLLM via LMCache, and integration with SGLang and other inference engines is in progress.

vector-vein

VectorVein is a no-code AI workflow software inspired by LangChain and langflow, aiming to combine the powerful capabilities of large language models and enable users to achieve intelligent and automated daily workflows through simple drag-and-drop actions. Users can create powerful workflows without the need for programming, automating all tasks with ease. The software allows users to define inputs, outputs, and processing methods to create customized workflow processes for various tasks such as translation, mind mapping, summarizing web articles, and automatic categorization of customer reviews.

MARS5-TTS

MARS5 is a novel English speech model (TTS) developed by CAMB.AI, featuring a two-stage AR-NAR pipeline with a unique NAR component. The model can generate speech for various scenarios like sports commentary and anime with just 5 seconds of audio and a text snippet. It allows steering prosody using punctuation and capitalization in the transcript. Speaker identity is specified using an audio reference file, enabling 'deep clone' for improved quality. The model can be used via torch.hub or HuggingFace, supporting both shallow and deep cloning for inference. Checkpoints are provided for AR and NAR models, with hardware requirements of 750M+450M params on GPU. Contributions to improve model stability, performance, and reference audio selection are welcome.

langchainjs-quickstart-demo

Discover the journey of building a generative AI application using LangChain.js and Azure. This demo explores the development process from idea to production, using a RAG-based approach for a Q&A system based on YouTube video transcripts. The application lets users ask text-based questions about a YouTube video and uses the video's transcript to generate responses. The code comes in two versions: a local prototype using FAISS and Ollama, with the LLaMa3 model for completion and all-minilm-l6-v2 for embeddings, and an Azure cloud version using Azure AI Search, with the GPT-4 Turbo model for completion and text-embedding-3-large for embeddings. Either version can be run as an API using the Azure Functions runtime.

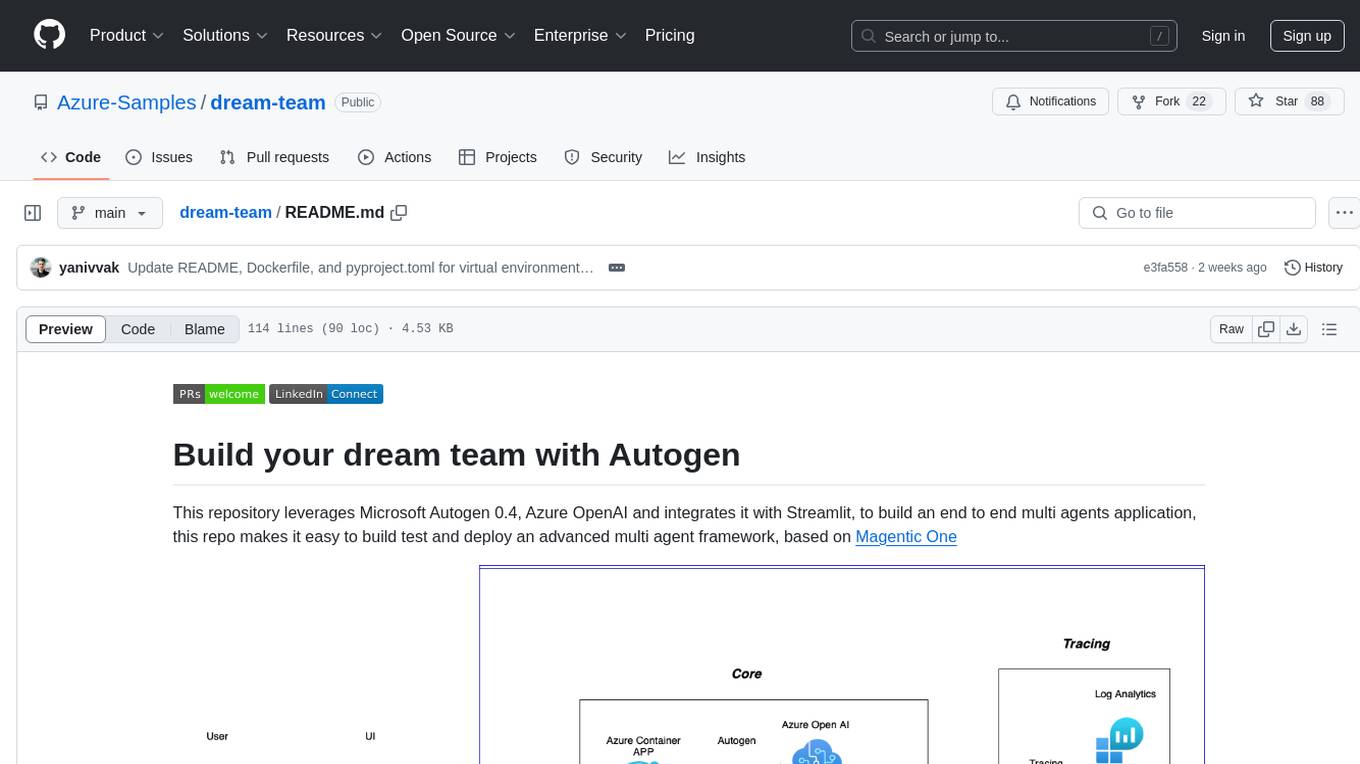

dream-team

Build your dream team with Autogen is a repository that leverages Microsoft Autogen 0.4, Azure OpenAI, and Streamlit to create an end-to-end multi-agent application. It provides an advanced multi-agent framework based on Magentic One, with features such as a friendly UI, single-line deployment, secure code execution, managed identities, and observability & debugging tools. Users can deploy Azure resources and the app with simple commands, work locally with virtual environments, install dependencies, update configurations, and run the application. The repository also offers resources for learning more about building applications with Autogen.

ezkl

EZKL is a library and command-line tool for doing inference for deep learning models and other computational graphs in a zk-snark (ZKML). It enables the following workflow:

1. Define a computational graph, for instance a neural network (but really any arbitrary set of operations), as you would normally in pytorch or tensorflow.

2. Export the final graph of operations as an .onnx file and some sample inputs to a .json file.

3. Point ezkl to the .onnx and .json files to generate a ZK-SNARK circuit with which you can prove statements such as:

- "I ran this publicly available neural network on some private data and it produced this output"

- "I ran my private neural network on some public data and it produced this output"

- "I correctly ran this publicly available neural network on some public data and it produced this output"

In the backend we use the collaboratively-developed Halo2 as a proof system. The generated proofs can then be verified with much less computational resources, including on-chain (with the Ethereum Virtual Machine), in a browser, or on a device.

For similar tasks

flowgen

FlowGen is a tool built for AutoGen, a great agent framework from Microsoft and a lot of contributors. It provides intuitive visual tools that streamline the construction and oversight of complex agent-based workflows, simplifying the process for creators and developers. Users can create Autoflows, chat with agents, and share flow templates. The tool is fully dockerized and supports deployment on Railway.app. Contributions to the project are welcome, and the platform uses semantic-release for versioning and releases.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

agent-shell

Agent-Shell is a native Emacs shell designed to interact with LLM agents powered by ACP (Agent Client Protocol). With Agent-Shell, users can chat with various ACP-driven agents like Gemini CLI, Claude Code, Auggie, Mistral Vibe, and more. The tool provides a seamless interface for communication and interaction with these agents within the Emacs environment.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

sagentic-af

Sagentic.ai Agent Framework is a tool for creating AI agents with a hot-reloading dev server. It allows users to spawn agents locally by calling a specific endpoint. The framework comes with detailed documentation and welcomes contributions, issues, and feature requests. It is MIT licensed and maintained by Ahyve Inc.

tinyllm

tinyllm is a lightweight framework designed for developing, debugging, and monitoring LLM and Agent powered applications at scale. It aims to simplify code while enabling users to create complex agents or LLM workflows in production. The core classes, Function and FunctionStream, standardize and control LLM, ToolStore, and relevant calls for scalable production use. It offers structured handling of function execution, including input/output validation, error handling, evaluation, and more, all while maintaining code readability. Users can create chains with prompts, LLM models, and evaluators in a single file without the need for extensive class definitions or spaghetti code. Additionally, tinyllm integrates with various libraries like Langfuse and provides tools for prompt engineering, observability, logging, and finite state machine design.

council

Council is an open-source platform designed for the rapid development and deployment of customized generative AI applications using teams of agents. It extends the LLM tool ecosystem by providing advanced control flow and scalable oversight for AI agents. Users can create sophisticated agents with predictable behavior by leveraging Council's powerful approach to control flow using Controllers, Filters, Evaluators, and Budgets. The framework allows for automated routing between agents, comparing, evaluating, and selecting the best results for a task. Council aims to facilitate packaging and deploying agents at scale on multiple platforms while enabling enterprise-grade monitoring and quality control.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.