docs-mcp-server

Grounded Docs MCP Server: Open-Source Alternative to Context7, Nia, and Ref.Tools

Stars: 1047

The docs-mcp-server repository contains the server-side code for the documentation management system. It provides functionalities for managing, storing, and retrieving documentation files. Users can upload, update, and delete documents through the server. The server also supports user authentication and authorization to ensure secure access to the documentation system. Additionally, the server includes APIs for integrating with other systems and tools, making it a versatile solution for managing documentation in various projects and organizations.

README:

Docs MCP Server solves the problem of AI hallucinations and outdated knowledge by providing a personal, always-current documentation index for your AI coding assistant. It fetches official docs from websites, GitHub, npm, PyPI, and local files, allowing your AI to query the exact version you are using.

The open-source alternative to Context7, Nia, and Ref.Tools.

- ✅ Up-to-Date Context: Fetches documentation directly from official sources on demand.

- 🎯 Version-Specific: Queries target the exact library versions in your project.

- 💡 Reduces Hallucinations: Grounds LLMs in real documentation.

- 🔒 Private & Local: Runs entirely on your machine; your code never leaves your network.

- 🧩 Broad Compatibility: Works with any MCP-compatible client (Claude, Cline, etc.).

- 📁 Multiple Sources: Index websites, GitHub repositories, local folders, and zip archives.

- 📄 Rich File Support: Processes HTML, Markdown, PDF, Word (.docx), Excel, PowerPoint, and source code.

1. Start the server (requires Node.js 22+):

npx @arabold/docs-mcp-server@latest2. Open the Web UI at http://localhost:6280 to add documentation.

3. Connect your AI client by adding this to your MCP settings (e.g., claude_desktop_config.json):

{

"mcpServers": {

"docs-mcp-server": {

"type": "sse",

"url": "http://localhost:6280/sse"

}

}

}See Connecting Clients for VS Code (Cline, Roo) and other setup options.

Alternative: Run with Docker

docker run --rm \

-v docs-mcp-data:/data \

-v docs-mcp-config:/config \

-p 6280:6280 \

ghcr.io/arabold/docs-mcp-server:latest \

--protocol http --host 0.0.0.0 --port 6280Using an embedding model is optional but dramatically improves search quality by enabling semantic vector search.

Example: Enable OpenAI Embeddings

OPENAI_API_KEY="sk-proj-..." npx @arabold/docs-mcp-server@latestSee Embedding Models for configuring Ollama, Gemini, Azure, and others.

- Installation: Detailed setup guides for Docker, Node.js (npx), and Embedded mode.

- Connecting Clients: How to connect Claude, VS Code (Cline/Roo), and other MCP clients.

- Basic Usage: Using the Web UI, CLI, and scraping local files.

- Configuration: Full reference for config files and environment variables.

- Embedding Models: Configure OpenAI, Ollama, Gemini, and other providers.

- Deployment Modes: Standalone vs. Distributed (Docker Compose).

- Authentication: Securing your server with OAuth2/OIDC.

- Telemetry: Privacy-first usage data collection.

- Architecture: Deep dive into the system design.

We welcome contributions! Please see CONTRIBUTING.md for development guidelines and setup instructions.

This project is licensed under the MIT License. See LICENSE for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for docs-mcp-server

Similar Open Source Tools

docs-mcp-server

The docs-mcp-server repository contains the server-side code for the documentation management system. It provides functionalities for managing, storing, and retrieving documentation files. Users can upload, update, and delete documents through the server. The server also supports user authentication and authorization to ensure secure access to the documentation system. Additionally, the server includes APIs for integrating with other systems and tools, making it a versatile solution for managing documentation in various projects and organizations.

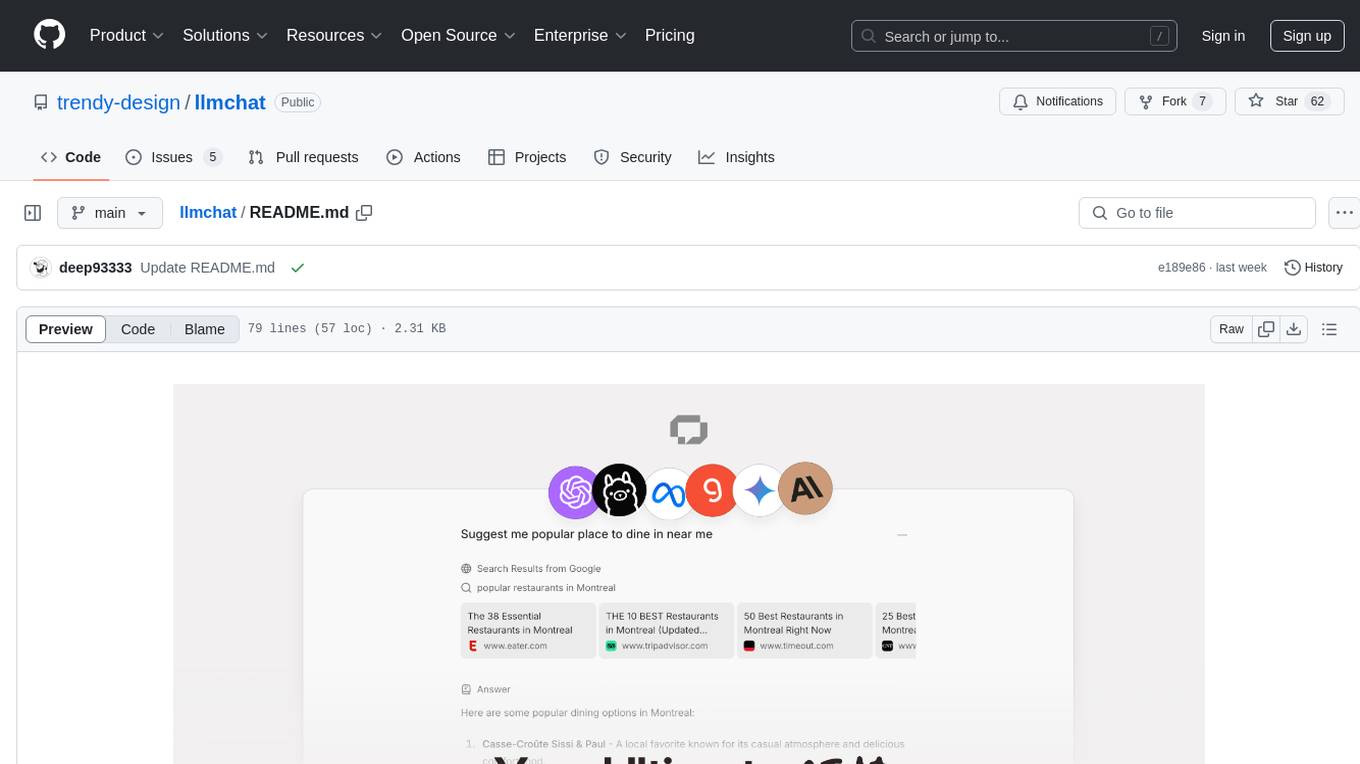

llmchat

LLMChat is an all-in-one AI chat interface that supports multiple language models, offers a plugin library for enhanced functionality, enables web search capabilities, allows customization of AI assistants, provides text-to-speech conversion, ensures secure local data storage, and facilitates data import/export. It also includes features like knowledge spaces, prompt library, personalization, and can be installed as a Progressive Web App (PWA). The tech stack includes Next.js, TypeScript, Pglite, LangChain, Zustand, React Query, Supabase, Tailwind CSS, Framer Motion, Shadcn, and Tiptap. The roadmap includes upcoming features like speech-to-text and knowledge spaces.

sandboxed.sh

sandboxed.sh is a self-hosted cloud orchestrator for AI coding agents that provides isolated Linux workspaces with Claude Code, OpenCode & Amp runtimes. It allows users to hand off entire development cycles, run multi-day operations unattended, and keep sensitive data local by analyzing data against scientific literature. The tool features dual runtime support, mission control for remote agent management, isolated workspaces, a git-backed library, MCP registry, and multi-platform support with a web dashboard and iOS app.

layra

LAYRA is the world's first visual-native AI automation engine that sees documents like a human, preserves layout and graphical elements, and executes arbitrarily complex workflows with full Python control. It empowers users to build next-generation intelligent systems with no limits or compromises. Built for Enterprise-Grade deployment, LAYRA features a modern frontend, high-performance backend, decoupled service architecture, visual-native multimodal document understanding, and a powerful workflow engine.

MCP-Nest

A NestJS module to effortlessly expose tools, resources, and prompts for AI using the Model Context Protocol (MCP). It allows defining tools, resources, and prompts in a familiar NestJS way, supporting multi-transport, tool validation, interactive tool calls, request context access, fine-grained authorization, resource serving, dynamic resources, prompt templates, guard-based authentication, dependency injection, server mutation, and instrumentation. It provides features for building ChatGPT widgets and MCP apps.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

transformerlab-app

Transformer Lab is an app that allows users to experiment with Large Language Models by providing features such as one-click download of popular models, finetuning across different hardware, RLHF and Preference Optimization, working with LLMs across different operating systems, chatting with models, using different inference engines, evaluating models, building datasets for training, calculating embeddings, providing a full REST API, running in the cloud, converting models across platforms, supporting plugins, embedded Monaco code editor, prompt editing, inference logs, all through a simple cross-platform GUI.

zotero-mcp

Zotero MCP is an open-source project that integrates AI capabilities with Zotero using the Model Context Protocol. It consists of a Zotero plugin and an MCP server, enabling AI assistants to search, retrieve, and cite references from Zotero library. The project features a unified architecture with an integrated MCP server, eliminating the need for a separate server process. It provides features like intelligent search, detailed reference information, filtering by tags and identifiers, aiding in academic tasks such as literature reviews and citation management.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

neuropilot

NeuroPilot is an open-source AI-powered education platform that transforms study materials into interactive learning resources. It provides tools like contextual chat, smart notes, flashcards, quizzes, and AI podcasts. Supported by various AI models and embedding providers, it offers features like WebSocket streaming, JSON or vector database support, file-based storage, and configurable multi-provider setup for LLMs and TTS engines. The technology stack includes Node.js, TypeScript, Vite, React, TailwindCSS, JSON database, multiple LLM providers, and Docker for deployment. Users can contribute to the project by integrating AI models, adding mobile app support, improving performance, enhancing accessibility features, and creating documentation and tutorials.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

sparka

Sparka AI is a multi-provider AI chat tool that allows users to access various AI models like Claude, GPT-5, Gemini, and Grok through a single interface. It offers features such as document analysis, image generation, code execution, and research tools without the need for multiple subscriptions. The tool is open-source, production-ready, and provides capabilities for collaboration, secure authentication, attachment support, AI-powered image generation, syntax highlighting, resumable streams, chat branching, chat sharing, deep research, code execution, document creation, and web analytics. Built with modern technologies for scalability and performance, Sparka AI integrates with Vercel AI SDK, tRPC, Drizzle ORM, PostgreSQL, Redis, and AI SDK Gateway.

OpenChat

OS Chat is a free, open-source AI personal assistant that combines 40+ language models with powerful automation capabilities. It allows users to deploy background agents, connect services like Gmail, Calendar, Notion, GitHub, and Slack, and get things done through natural conversation. With features like smart automation, service connectors, AI models, chat management, interface customization, and premium features, OS Chat offers a comprehensive solution for managing digital life and workflows. It prioritizes privacy by being open source and self-hostable, with encrypted API key storage.

opencode-manager

OpenCode Manager is a mobile-first web interface for managing and coding with OpenCode AI agents. It allows users to control and code from any device, including phones, tablets, and desktops. The tool provides features for repository and Git management, file management, chat and sessions, AI configuration, as well as mobile and PWA support. Users can clone and manage multiple git repos, work on multiple branches simultaneously, view changes, commits, and branches in a unified interface, create pull requests, navigate files with tree view and search, preview code with syntax highlighting, and perform various file operations. Additionally, the tool supports real-time streaming, slash commands, file mentions, plan/build modes, Mermaid diagrams, text-to-speech, speech-to-text, model selection, provider management, OAuth support, custom agents creation, and more. It is optimized for mobile devices, installable as a PWA, and offers push notifications for agent events.

talkcody

TalkCody is a free, open-source AI coding agent designed for developers who value speed, cost, control, and privacy. It offers true freedom to use any AI model without vendor lock-in, maximum speed through unique four-level parallelism, and complete privacy as everything runs locally without leaving the user's machine. With professional-grade features like multimodal input support, MCP server compatibility, and a marketplace for agents and skills, TalkCody aims to enhance development productivity and flexibility.

For similar tasks

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

aiohttp-security

aiohttp_security is a library that provides identity and authorization for aiohttp.web. It offers features for handling authorization via cookies and supports aiohttp-session. The library includes examples for basic usage and database authentication, along with demos in the demo directory. For development, the library requires installation of specific requirements listed in the requirements-dev.txt file. aiohttp_security is licensed under the Apache 2 license.

EvoMaster

EvoMaster is an open-source AI-driven tool that automatically generates system-level test cases for web/enterprise applications. It uses Evolutionary Algorithm and Dynamic Program Analysis to evolve test cases, maximizing code coverage and fault detection. It supports REST, GraphQL, and RPC APIs, with whitebox testing for JVM-compiled APIs. The tool generates JUnit tests in Java or Kotlin, focusing on fault detection, self-contained tests, SQL handling, and authentication. Known limitations include manual driver creation for whitebox testing and longer execution times for better results. EvoMaster has been funded by ERC and RCN grants.

clarifai-python-grpc

This is the official Clarifai gRPC Python client for interacting with their recognition API. Clarifai offers a platform for data scientists, developers, researchers, and enterprises to utilize artificial intelligence for image, video, and text analysis through computer vision and natural language processing. The client allows users to authenticate, predict concepts in images, and access various functionalities provided by the Clarifai API. It follows a versioning scheme that aligns with the backend API updates and includes specific instructions for installation and troubleshooting. Users can explore the Clarifai demo, sign up for an account, and refer to the documentation for detailed information.

GeminiChatUp

Gemini ChatUp is a chat application utilizing the Google GeminiPro API Key. It supports responsive layout and can store multiple sets of conversations with customizable parameters for each set. Users can log in with a test account or provide their own API Key to deploy the feature. The application also offers user authentication through Edge config in Vercel, allowing users to add usernames and passwords in JSON format. Local deployment is possible by installing dependencies, setting up environment variables, and running the application locally.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that allows users to ask natural language questions of any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. The application architecture involves uploading a PDF document to an S3 bucket, extracting metadata, converting text to vectors, and using a LangChain to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.

obot

Obot is an open source AI agent platform that allows users to build agents for various use cases such as copilots, assistants, and autonomous workflows. It offers integration with leading LLM providers, built-in RAG for data, easy integration with custom web services and APIs, and OAuth 2.0 authentication.

For similar jobs

DocsGPT

DocsGPT is an open-source documentation assistant powered by GPT models. It simplifies the process of searching for information in project documentation by allowing developers to ask questions and receive accurate answers. With DocsGPT, users can say goodbye to manual searches and quickly find the information they need. The tool aims to revolutionize project documentation experiences and offers features like live previews, Discord community, guides, and contribution opportunities. It consists of a Flask app, Chrome extension, similarity search index creation script, and a frontend built with Vite and React. Users can quickly get started with DocsGPT by following the provided setup instructions and can contribute to its development by following the guidelines in the CONTRIBUTING.md file. The project follows a Code of Conduct to ensure a harassment-free community environment for all participants. DocsGPT is licensed under MIT and is built with LangChain.

airflow-site

This repository contains the source code for the Apache Airflow website, including directories for archived documentation versions, landing pages, license templates, and the Sphinx theme. To work on the site locally, users need to install coreutils, Node.js, NPM, and HUGO, and run specific scripts provided in the repository. Contributors can refer to the contributor's guide for detailed instructions on how to contribute to the website.

lumentis

Lumentis is a tool that allows users to generate beautiful and comprehensive documentation from meeting transcripts and large documents with a single command. It reads transcripts, asks questions to understand themes and audience, generates an outline, and creates detailed pages with visual variety and styles. Users can switch models for different tasks, control the process, and deploy the generated docs to Vercel. The tool is designed to be open, clean, fast, and easy to use, with upcoming features including folders, PDFs, auto-transcription, website scraping, scientific papers handling, summarization, and continuous updates.

dify-docs

Dify Docs is a repository that houses the documentation website code and Markdown source files for docs.dify.ai. It contains assets, content, and data folders that are licensed under a CC-BY license.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

semantic-kernel-docs

The Microsoft Semantic Kernel Documentation GitHub repository contains technical product documentation for Semantic Kernel. It serves as the home of technical content for Microsoft products and services. Contributors can learn how to make contributions by following the Docs contributor guide. The project follows the Microsoft Open Source Code of Conduct.

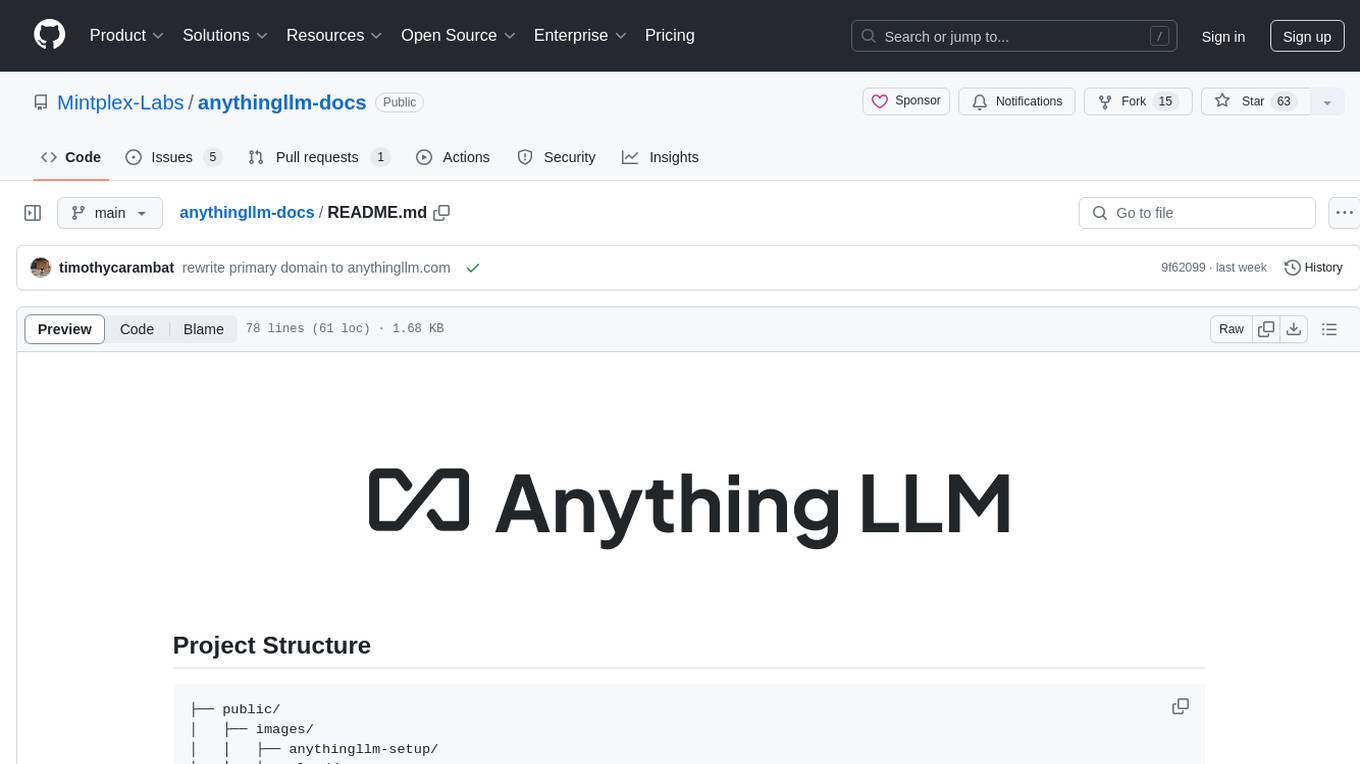

anythingllm-docs

anythingllm-docs is a documentation repository for the AnythingLLM project. It contains detailed guides, setup instructions, and information on features and legal aspects of the project. The repository structure is organized into public, pages, components, and configuration files. Users can contribute by creating issues and pull requests following specific guidelines. The project is licensed under the MIT License and has been migrated to NextJS with the help of @ShadowArcanist.

RepoAgent

RepoAgent is an LLM-powered framework designed for repository-level code documentation generation. It automates the process of detecting changes in Git repositories, analyzing code structure through AST, identifying inter-object relationships, replacing Markdown content, and executing multi-threaded operations. The tool aims to assist developers in understanding and maintaining codebases by providing comprehensive documentation, ultimately improving efficiency and saving time.