lumentis

AI powered one-click comprehensive docs from transcripts and text.

Stars: 1381

Lumentis is a tool that allows users to generate beautiful and comprehensive documentation from meeting transcripts and large documents with a single command. It reads transcripts, asks questions to understand themes and audience, generates an outline, and creates detailed pages with visual variety and styles. Users can switch models for different tasks, control the process, and deploy the generated docs to Vercel. The tool is designed to be open, clean, fast, and easy to use, with upcoming features including folders, PDFs, auto-transcription, website scraping, scientific papers handling, summarization, and continuous updates.

README:

A simple way to generate comprehensive, easy-to-skim docs from your meeting transcripts and large documents.

- Run

npx lumentisin an empty directory. That's really it. You can skip the rest of this README. (DON'T run lumentis in its own project directory after cloning the repo!) - Feed it a transcript, doc or notes when asked.

- Answer some questions about themes and audience.

- Pick what you like from the generated outline.

- Wait for your docs to be written up!

- Deploy your docs to Vercel by pushing your folder and following the guide.

Lumentis lets you swap models between stages. Here's some docs exactly as Lumentis generated them, no editing. I just hit Enter a few times.

- The Feynman Lectures on Physics - taken from the 5 hour Feynman Lectures, this is Sonnet doing the hard work for 72 cents, and Haiku writing it out for 38 cents.

- Designing Frictionless Interfaces for Google - Mustafa Kurtuldu gave a wonderful talk on design and UX I wish more people would watch. Now you can read it. (Do still watch it) but this is Haiku doing the whole thing for less than 8 (not eighty) cents!

- How the AI in Spiderman 2 works - from something that's been on my list for a long time. Opus took about $3.80 to do the whole thing.

- Sam Altman and Lex Friedman on GPT-5 - Sam and Lex had a conversation recently. Here's Opus doing the hard work for $2.3, and Sonnet doing the rest for $2.5. This is the expensive option.

- Self-Discover in DSPy with Chris Dossman - an interesting conversation between Chris Dossman and Weviate about DSPy and structured reasoning, one of the core concepts behind the framework. Eugene splurged something like $25 on this 😱 because he wanted to see how Lumentis would do at its best.

- Cost before run: Lumentis will dynamically tell you what each operation costs.

- Switch models: Use a smarter model to do the hard parts, and a cheaper model for long-form work. See the examples.

- Easy to change: Ctrl+C at any time and restart. Lumentis remembers your responses, and lets you change them.

- Everything in the open: want to know how it works? Check the

.lumentisfolder to see every message and response to the AI. - Super clean: Other than

.lumentiswith the prompts and state, you have a clean project to do anything with. Git/Vercel/Camera ready. - Super fast: (If you run with

bun. Can't vouch for npm.)

Lumentis reads your transcript and:

- Asks you some questions to understand the themes and audience. Also to surf the latent space or things.

- Generates an outline and asks you to select what you want to keep.

- Auto generates structure from the information and further refines it with your input, while self-healing things.

- Generates detailed pages with visual variety, formatting and styles.

- Folders

- PDFs

- Auto-transcription with a rubber ducky

- Scraping entire websites

- Scientific papers

- Recursive summarisation and expansion

- Continuously updating docs

git clone https://github.com/hrishioa/lumentis.git

cd lumentis

bun install

bun run runUsing bun because it's fast. You can also use npm or yarn if you prefer.

Try it out and let me know the URL so I can add it here! There's also some badly organized things in TODO.md that I need to get around to.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for lumentis

Similar Open Source Tools

lumentis

Lumentis is a tool that allows users to generate beautiful and comprehensive documentation from meeting transcripts and large documents with a single command. It reads transcripts, asks questions to understand themes and audience, generates an outline, and creates detailed pages with visual variety and styles. Users can switch models for different tasks, control the process, and deploy the generated docs to Vercel. The tool is designed to be open, clean, fast, and easy to use, with upcoming features including folders, PDFs, auto-transcription, website scraping, scientific papers handling, summarization, and continuous updates.

aitools_client

Seth's AI Tools is a Unity-based front-end that interfaces with various AI APIs to perform tasks such as generating Twine games, quizzes, posters, and more. The tool is a native Windows application that supports features like live update integration with image editors, text-to-image conversion, image processing, mask painting, and more. It allows users to connect to multiple servers for fast generation using GPUs and offers a neat workflow for evolving images in real-time. The tool respects user privacy by operating locally and includes built-in games and apps to test AI/SD capabilities. Additionally, it features an AI Guide for creating motivational posters and illustrated stories, as well as an Adventure mode with presets for generating web quizzes and Twine game projects.

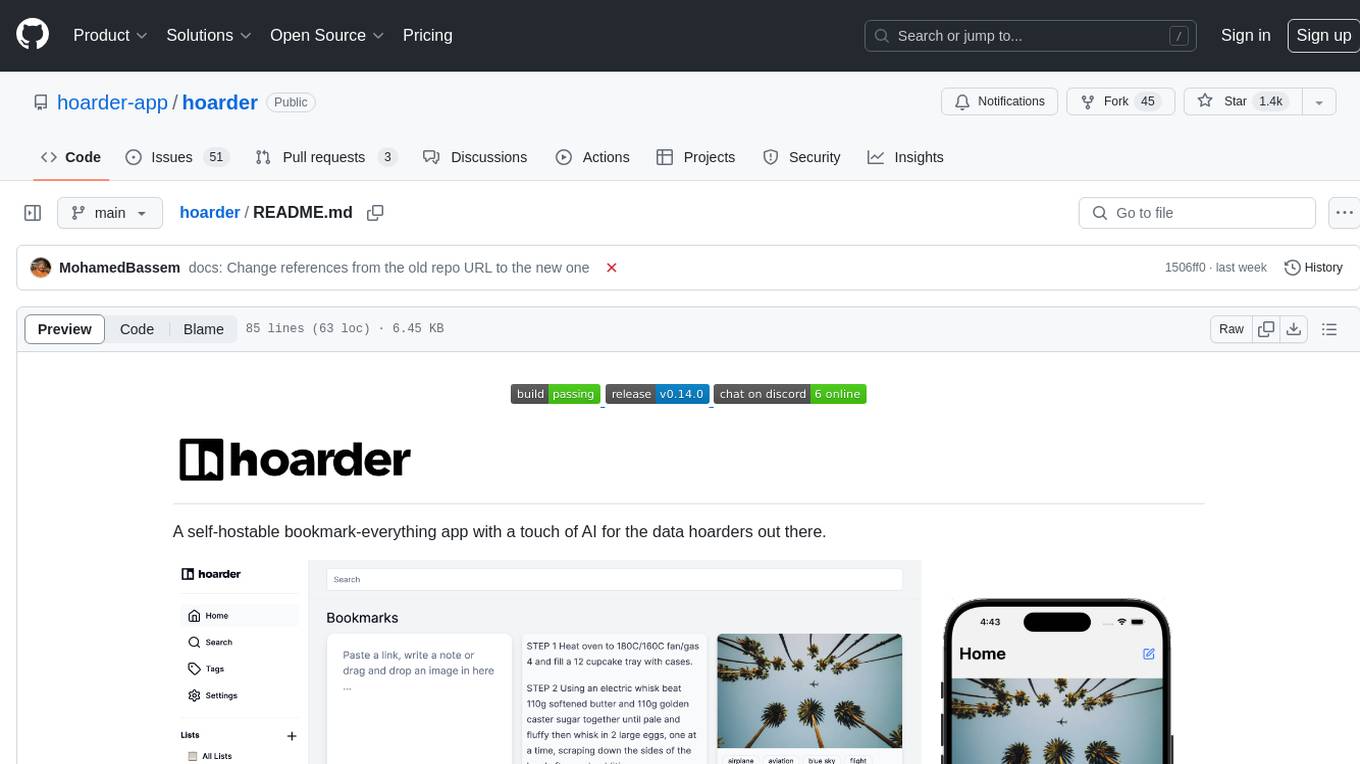

hoarder

A self-hostable bookmark-everything app with a touch of AI for data hoarders. Features include bookmarking links, taking notes, storing images, automatic fetching for link details, full-text search, AI-based automatic tagging, Chrome and Firefox plugins, iOS and Android apps, dark mode support, and self-hosting. Built to address the need for archiving and previewing links with automatic tagging. Developed by a systems engineer to stay connected with web development and cater to personal use cases.

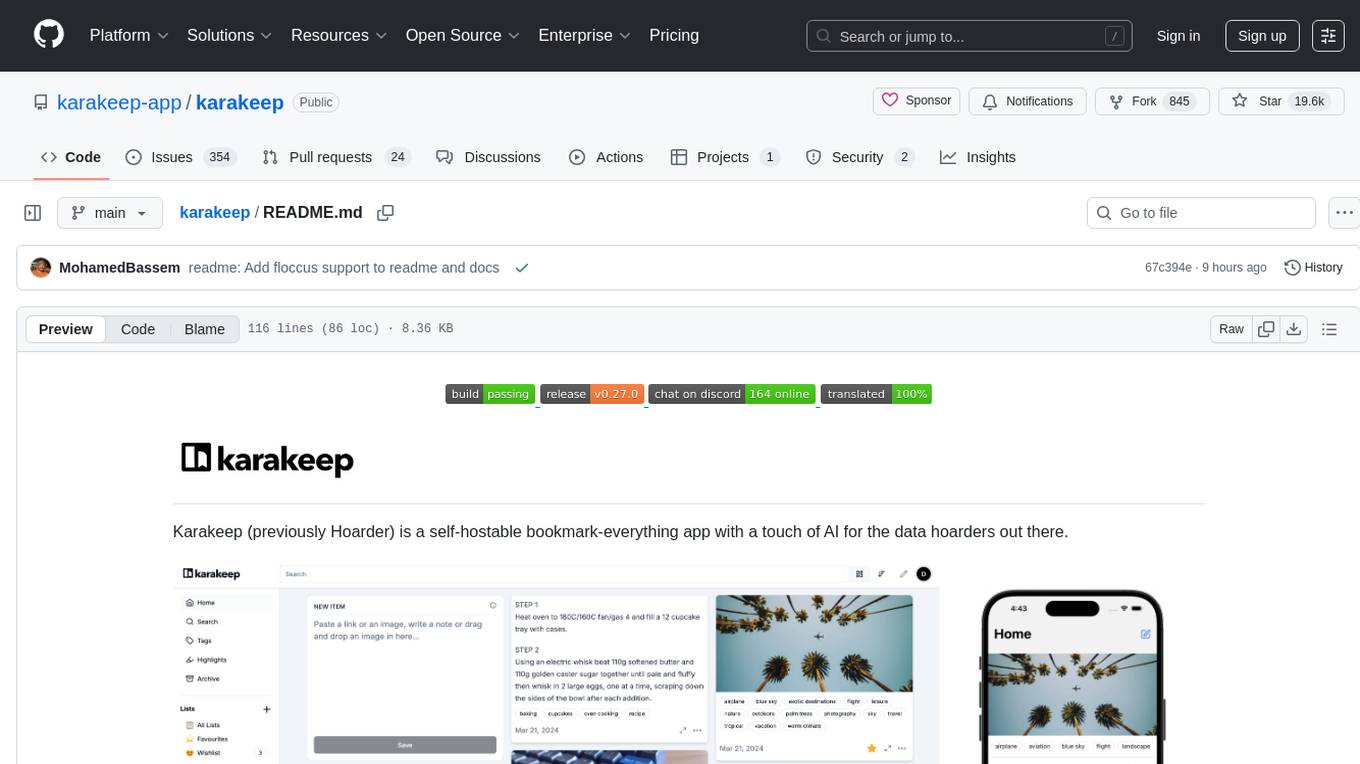

karakeep

Karakeep is a self-hostable bookmark-everything app with a touch of AI for data hoarders. It allows users to bookmark links, take notes, store images and pdfs, and offers features like automatic fetching, full-text search, AI-based tagging, OCR, rule-based engine, Chrome plugin, Firefox addon, iOS and Android apps, auto hoarding from RSS feeds, REST API, multi-language support, and more. The app is under heavy development and aims to provide a self-hosting first solution for managing bookmarks and content.

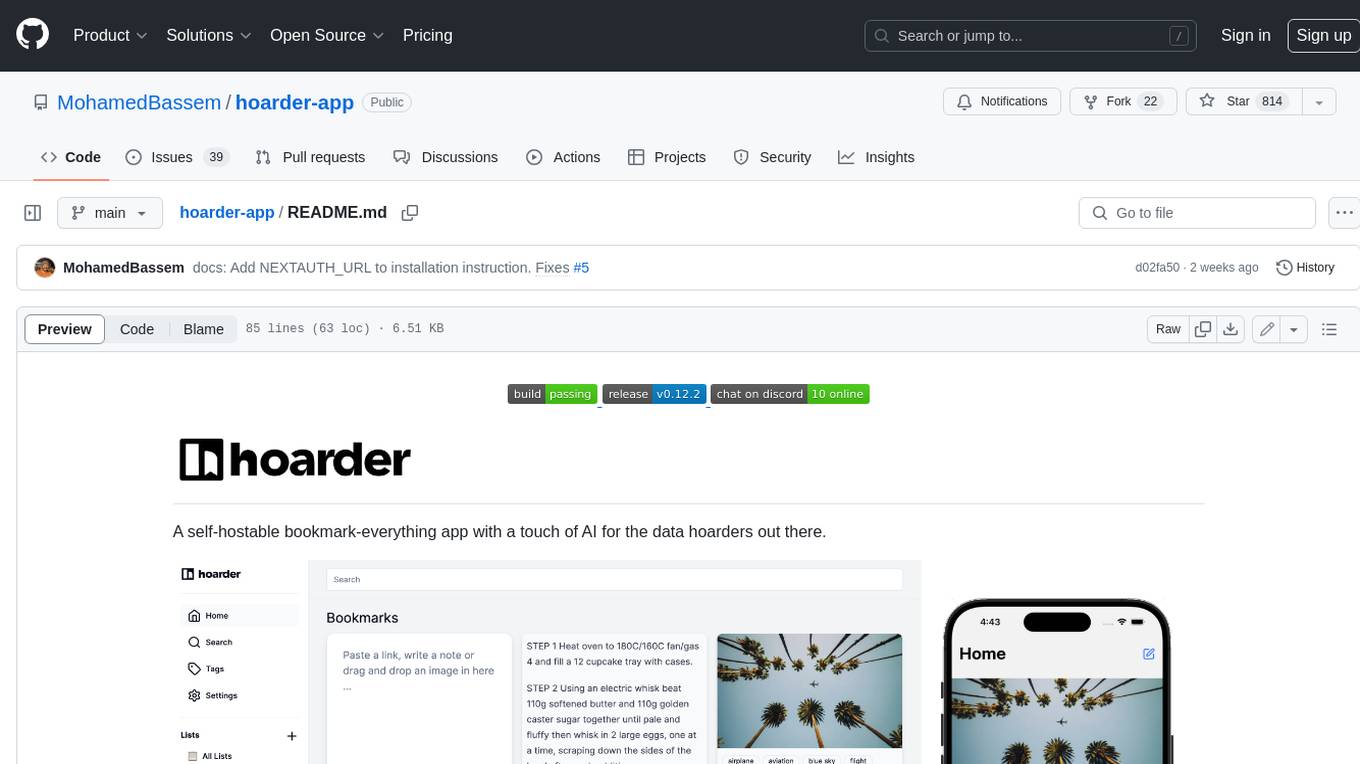

hoarder-app

Hoarder is a self-hostable bookmark manager with a focus on privacy and customization. It features automatic link previews, full-text search, AI-based tagging, and a variety of import and export options. Hoarder is designed to be easy to use and extensible, with a plugin system that allows users to add their own features and integrations.

EdgeChains

EdgeChains is an open-source chain-of-thought engineering framework tailored for Large Language Models (LLMs)- like OpenAI GPT, LLama2, Falcon, etc. - With a focus on enterprise-grade deployability and scalability. EdgeChains is specifically designed to **orchestrate** such applications. At EdgeChains, we take a unique approach to Generative AI - we think Generative AI is a deployment and configuration management challenge rather than a UI and library design pattern challenge. We build on top of a tech that has solved this problem in a different domain - Kubernetes Config Management - and bring that to Generative AI. Edgechains is built on top of jsonnet, originally built by Google based on their experience managing a vast amount of configuration code in the Borg infrastructure.

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts. Here are 5 jobs suitable for this tool, in lowercase letters: 1. content writer 2. chatbot assistant 3. language translator 4. creative writer 5. researcher

kobold_assistant

Kobold-Assistant is a fully offline voice assistant interface to KoboldAI's large language model API. It can work online with the KoboldAI horde and online speech-to-text and text-to-speech models. The assistant, called Jenny by default, uses the latest coqui 'jenny' text to speech model and openAI's whisper speech recognition. Users can customize the assistant name, speech-to-text model, text-to-speech model, and prompts through configuration. The tool requires system packages like GCC, portaudio development libraries, and ffmpeg, along with Python >=3.7, <3.11, and runs on Ubuntu/Debian systems. Users can interact with the assistant through commands like 'serve' and 'list-mics'.

Generative-AI-Pharmacist

Generative AI Pharmacist is a project showcasing the use of generative AI tools to create an animated avatar named Macy, who delivers medication counseling in a realistic and professional manner. The project utilizes tools like Midjourney for image generation, ChatGPT for text generation, ElevenLabs for text-to-speech conversion, and D-ID for creating a photorealistic talking avatar video. The demo video featuring Macy discussing commonly-prescribed medications demonstrates the potential of generative AI in healthcare communication.

llm_engineering

LLM Engineering is an 8-week course designed to help learners master AI and LLMs through a series of projects that gradually increase in complexity. The course covers setting up the environment, working with APIs, using Google Colab for GPU processing, and building an autonomous Agentic AI solution. Learners are encouraged to actively participate, run code cells, tweak code, and share their progress with the community. The emphasis is on practical, educational projects that teach valuable business skills.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could: * **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice. * Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control. * Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_. * Automate tasks on your computer * improve accessibility * ... and much more

FreeChat

FreeChat is a native LLM appliance for macOS that runs completely locally. Download it and ask your LLM a question without doing any configuration. A local/llama version of OpenAI's chat without login or tracking. You should be able to install from the Mac App Store and use it immediately.

maxheadbox

Max Headbox is an open-source voice-activated LLM Agent designed to run on a Raspberry Pi. It can be configured to execute a variety of tools and perform actions. The project requires specific hardware and software setups, and provides detailed instructions for installation, configuration, and usage. Users can create custom tools by making JavaScript modules and backend API handlers. The project acknowledges the use of various open-source projects and resources in its development.

whisper_dictation

Whisper Dictation is a fast, offline, privacy-focused tool for voice typing, AI voice chat, voice control, and translation. It allows hands-free operation, launching and controlling apps, and communicating with OpenAI ChatGPT or a local chat server. The tool also offers the option to speak answers out loud and draw pictures. It includes client and server versions, inspired by the Star Trek series, and is designed to keep data off the internet and confidential. The project is optimized for dictation and translation tasks, with voice control capabilities and AI image generation using stable-diffusion API.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes a HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

For similar tasks

lumentis

Lumentis is a tool that allows users to generate beautiful and comprehensive documentation from meeting transcripts and large documents with a single command. It reads transcripts, asks questions to understand themes and audience, generates an outline, and creates detailed pages with visual variety and styles. Users can switch models for different tasks, control the process, and deploy the generated docs to Vercel. The tool is designed to be open, clean, fast, and easy to use, with upcoming features including folders, PDFs, auto-transcription, website scraping, scientific papers handling, summarization, and continuous updates.

prompt-generator-comfyui

Custom AI prompt generator node for ComfyUI. With this node, you can use text generation models to generate prompts. Before using, text generation model has to be trained with prompt dataset.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.