prompt-generator-comfyui

Custom AI prompt generator node for ComfyUI

Stars: 87

Custom AI prompt generator node for ComfyUI. With this node, you can use text generation models to generate prompts. Before use, the text generation model has to be trained on a prompt dataset.

README:

Custom AI prompt generator node for ComfyUI. With this node, you can use text generation models to generate prompts. Before use, the text generation model has to be trained on a prompt dataset, or you can use one of the pretrained models.

- prompt-generator-comfyui

- Table Of Contents

- Setup

- Features

- Example Workflow

- Pretrained Prompt Models

- Variables

- Troubleshooting

- Contributing

- Example Outputs

- [x] Automatic installation is added for portable version.

- Clone the repository with the `git clone https://github.com/alpertunga-bile/prompt-generator-comfyui.git` command under the `custom_nodes` folder.
- Go to the `ComfyUI_windows_portable` folder and run the `run_nvidia_gpu.bat` file.
- Open the `hires.fixWithPromptGenerator.json` or `basicWorkflowWithPromptGenerator.json` workflow.
- Put your generator under the `models/prompt_generators` folder. You can create your prompt generator with this repository. You have to put the generator in as a folder. Do not just put in the `pytorch_model.bin` file, for example.
- Click the `Refresh` button in ComfyUI.

- Clone the repository with the `git clone https://github.com/alpertunga-bile/prompt-generator-comfyui.git` command under the `custom_nodes` folder.
- Run ComfyUI.
- Open the `hires.fixWithPromptGenerator.json` or `basicWorkflowWithPromptGenerator.json` workflow.
- Put your generator under the `models/prompt_generators` folder. You can create your prompt generator with this repository. You have to put the generator in as a folder. Do not just put in the `pytorch_model.bin` file, for example.
- Click the `Refresh` button in ComfyUI.

- Download the node with ComfyUI Manager.
- Restart ComfyUI.
- Open the `hires.fixWithPromptGenerator.json` or `basicWorkflowWithPromptGenerator.json` workflow.
- Put your generator under the `models/prompt_generators` folder. You can create your prompt generator with this repository. You have to put the generator in as a folder. Do not just put in the `pytorch_model.bin` file, for example.
- Click the `Refresh` button in ComfyUI.
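The "generator as a folder" rule from the setup steps can be checked with a short script. This is only a sketch: `list_generators` is a hypothetical helper, not part of the node, and it assumes that every sub-folder of `models/prompt_generators` is one generator while any loose file (such as a bare `pytorch_model.bin`) is a mistake.

```python
from pathlib import Path


def list_generators(generators_dir: Path) -> tuple[list[str], list[str]]:
    """Return (generator folder names, loose file names) found in generators_dir."""
    folders, loose_files = [], []
    for entry in sorted(generators_dir.iterdir()):
        if entry.is_dir():
            folders.append(entry.name)
        else:
            loose_files.append(entry.name)
    return folders, loose_files


if __name__ == "__main__":
    # Path taken from the setup steps; run this from the ComfyUI root folder.
    root = Path("models/prompt_generators")
    if root.is_dir():
        folders, loose_files = list_generators(root)
        print("generators:", folders)
        for name in loose_files:
            print(f"warning: {name} is a loose file; put it inside a generator folder")
```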

- Multiple output generation is added. You can choose from 5 outputs with the index value. You can check the generated prompts from the log file and terminal. The prompts are logged and printed in order.

- Randomness is added. See this section.

- Quantization is added with Quanto and Bitsandbytes packages. See this section.

- Lora adapter model loading is added with the Peft package. (The feature is not fully tested in this repository because of my low VRAM, but I am using the same implementation in Google Colab for training and inference and it is working there.)

- Optimizations are done with Optimum package.

- ONNX and transformers models are supported.

- Preprocessing outputs. See this section.

- Recursive generation is supported. See this section.

- Prints the generated text to the terminal and logs the node's state under the `generated_prompts` folder with the date as the filename.
- The Prompt Generator node may look different in the final version, but the workflow in ComfyUI is not going to change.

- You can find the models in this link
- To use a pretrained model, follow these steps:
  - Download the model and unzip it to the `models/prompt_generators` folder.
  - Click the `Refresh` button in ComfyUI.
  - Then select the generator with the node's `model_name` variable (if you can't see the generator, restart ComfyUI).

- Huggingface Dataset

- Process of data cleaning and gathering can be found here

- The model versions are used to differentiate models rather than showing which one is better.
- The v2 version is the latest trained model and the v4 and v5 models are experimental models.
- female_positive_generator_v2 | (Training In Process)
  - Base model
  - using distilgpt2 model
  - ~500 MB
- female_positive_generator_v3 | (Training In Process)
  - Base model
  - using bigscience/bloom-560m model
  - ~1.3 GB
- female_positive_generator_v4 | Experimental
  - Lora adapter model
  - using senseable/WestLake-7B-v2 model as the base model
  - Base model is ~14 GB

| Variable Names | Definitions |
|---|---|
| model_name | Folder name that contains the model |
| accelerate | Enables optimizations. Some models are not supported by BetterTransformer (check your model). If your model is not supported, switch this option to disable or convert your model to ONNX |
| quantize | Quantizes the model. The available quantization types depend on your OS and torch version. The `none` value disables quantization. Check this section for more information |
| token_healing | Enables the token healing algorithm, which is used for fixing unintended bias. Read more in this blog post. To use it, the transformers package version has to be 4.6 or newer |
| prompt | Input prompt for the generator |
| seed | Seed value for the model |
| lock | Locks the generation and selects from the last generated prompts with the index value |
| random_index | Random index value in [1, 5]. If it is enabled, the index value is not used |
| index | User-specified index value for selecting a prompt from the generated prompts. The random_index variable must be disabled |
| cfg | CFG is enabled by setting guidance_scale > 1. A higher guidance scale encourages the model to generate samples that are more closely linked to the input prompt, usually at the expense of poorer quality |
| min_new_tokens | The minimum number of tokens to generate, ignoring the number of tokens in the prompt |
| max_new_tokens | The maximum number of tokens to generate, ignoring the number of tokens in the prompt |
| do_sample | Whether or not to use sampling; greedy decoding is used otherwise |
| early_stopping | Controls the stopping condition for beam-based methods, like beam search |
| num_beams | Number of beams (search paths) kept during beam search. 1 means no beam search |
| num_beam_groups | Number of groups to divide num_beams into in order to ensure diversity among different groups of beams |
| diversity_penalty | This value is subtracted from a beam's score if it generates the same token as any beam from another group at a particular time. Note that diversity_penalty is only effective if group beam search is enabled |
| temperature | How sensitive the algorithm is to selecting low probability options |
| top_k | The number of highest probability vocabulary tokens to keep for top-k filtering |
| top_p | If set to a float < 1, only the smallest set of most probable tokens with probabilities that add up to top_p or higher are kept for generation |
| repetition_penalty | The parameter for repetition penalty. 1.0 means no penalty |
| no_repeat_ngram_size | The size of an n-gram that cannot occur more than once. (0=infinity) |
| remove_invalid_values | Whether to remove possible nan and inf outputs of the model to prevent the generation method from crashing. Note that using remove_invalid_values can slow down generation |
| self_recursive | See this section |
| recursive_level | See this section |
| preprocess_mode | See this section |

- Quantization is added with the Quanto and Bitsandbytes packages.
- The Quanto package requires `torch >= 2.4`, and the Bitsandbytes package works out of the box on Linux. So the node checks which package to use:
  - If the requirements of neither package are met, you cannot use the `quantize` variable and it has only the `none` value.
  - If the Quanto requirements are met, you can choose between the `none, int8, float8, int4` values.
  - If the Bitsandbytes requirements are met, you can choose between the `none, int8, int4` values.
- If your environment can use both the Quanto and Bitsandbytes packages, the node selects the Bitsandbytes package.
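The selection rules above can be sketched as a small decision function. This is a hypothetical illustration, not the node's actual code: `quantize_choices` and `parse_major_minor` are made-up names, Bitsandbytes availability is approximated by "the OS is Linux", Quanto availability by "torch is 2.4 or newer", and the check that the packages are actually installed is left out.

```python
import platform
import re


def parse_major_minor(version: str) -> tuple[int, int]:
    """Extract (major, minor) from a version string such as '2.4.1+cu121'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def quantize_choices(torch_version: str, system: str) -> tuple[str, list[str]]:
    """Return (backend name, allowed values of the quantize variable)."""
    quanto_ok = parse_major_minor(torch_version) >= (2, 4)  # assumption: torch >= 2.4
    bnb_ok = system == "Linux"  # assumption: Bitsandbytes is usable only on Linux

    # Bitsandbytes is preferred when both backends are usable.
    if bnb_ok:
        return "bitsandbytes", ["none", "int8", "int4"]
    if quanto_ok:
        return "quanto", ["none", "int8", "float8", "int4"]
    return "none", ["none"]


if __name__ == "__main__":
    # Example call; pass torch.__version__ instead of the literal in real use.
    print(quantize_choices("2.4.1", platform.system()))
```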

- For random generation:
  - Enable do_sample
- You can find this text generation strategy in the link above. The strategy is called multinomial sampling.
- Setting the do_sample variable to disable gives deterministic generation.
- For more randomness, you can:
  - Set num_beams to 1
  - Enable the random_index variable
  - Increase recursive_level
  - Enable self_recursive
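To make the difference between the two do_sample settings concrete, here is a toy, library-free sketch of how a single next token could be picked. It does not call the transformers package; `softmax` and `pick_token` are illustrative names, and the logits are made-up numbers. With do_sample disabled the highest-scoring token always wins (greedy decoding); with it enabled the token is drawn from the probability distribution (multinomial sampling), so the result depends on the seed.

```python
import math
import random


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_token(logits: list[float], do_sample: bool, rng: random.Random,
               temperature: float = 1.0) -> int:
    """Return the index of the chosen token."""
    if not do_sample:
        # Greedy decoding: deterministic, always the most likely token.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Multinomial sampling: draw one index weighted by its probability.
    probs = softmax(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [0.1, 2.0, 0.5]
    print("greedy :", pick_token(logits, False, random.Random(0)))
    print("sampled:", [pick_token(logits, True, random.Random(s)) for s in range(5)])
```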

- Enabling the lock variable skips the generation and lets you choose from the last generated prompts.
- You can choose with the index value or use random_index.
- If random_index is enabled, the index value is ignored.
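The interaction between index and random_index can be sketched as follows. `select_prompt` is a hypothetical helper, and the 1-based indexing is an assumption taken from the `[1, 5]` range given for random_index in the variables table.

```python
import random
from typing import Optional


def select_prompt(prompts: list[str], index: int, random_index: bool,
                  rng: Optional[random.Random] = None) -> str:
    """Pick one of the generated prompts."""
    if not prompts:
        raise ValueError("no generated prompts to choose from")
    if random_index:
        # The user-specified index is ignored when random_index is enabled.
        rng = rng or random.Random()
        return rng.choice(prompts)
    if not 1 <= index <= len(prompts):
        raise IndexError(f"index must be in [1, {len(prompts)}]")
    return prompts[index - 1]  # assumption: index values start at 1
```

With lock enabled, the same list of previously generated prompts would be passed in again instead of running the generator.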

- Let's say we give `a,` as the seed and the recursive level is 1. I am going to use the same outputs in this example to make the functionality easier to understand.
- With self recursive, let's say the generator's output is `b`. So the next seed is going to be `b` and the generator's output is `c`. The final output is `a, c`. It can be used for generating random outputs.
- Without self recursive, let's say the generator's output is `b`. So the next seed is going to be `a, b` and the generator's output is `c`. The final output is `a, b, c`. It can be used for more accurate prompts.
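The example above can be reproduced with a stub generator. This is one reading of the described behaviour, not the node's implementation: `recursive_generate` and `next_letter` are hypothetical names, the generator is assumed to return only the newly generated text, and the seed is written as `a` without the trailing comma.

```python
from typing import Callable


def recursive_generate(generate: Callable[[str], str], prompt: str,
                       recursive_level: int, self_recursive: bool) -> str:
    """Run the generator once, then feed results back in recursive_level times."""
    output = generate(prompt)
    for _ in range(recursive_level):
        if self_recursive:
            # Only the previous output becomes the next seed; older text is dropped.
            output = generate(output)
        else:
            # The prompt plus everything generated so far becomes the next seed.
            output = f"{output}, {generate(f'{prompt}, {output}')}"
    return f"{prompt}, {output}"


def next_letter(seed: str) -> str:
    """Stub generator: answers with the letter after the last one in the seed."""
    last = seed.split(", ")[-1]
    return chr(ord(last) + 1)


if __name__ == "__main__":
    print(recursive_generate(next_letter, "a", 1, self_recursive=True))   # a, c
    print(recursive_generate(next_letter, "a", 1, self_recursive=False))  # a, b, c
```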

- exact_keyword => `(masterpiece), ((masterpiece))` is not allowed. Checks the pure keyword without parentheses and weights. The algorithm adds the prompts from the beginning of the generated text, so add important prompts to the `prompt` variable.
- exact_prompt => `(masterpiece), ((masterpiece))` is allowed but `(masterpiece), (masterpiece)` is not. Checks for an exact match of the prompt.
- none => Everything is allowed, even repeated prompts.

# ---------------------------------------------------------------------- Original ---------------------------------------------------------------------- #

((masterpiece)), ((masterpiece:1.2)), (masterpiece), blahblah, blah, blah, ((blahblah)), (((((blah))))), ((same prompt)), same prompt, (masterpiece)

# ------------------------------------------------------------- Preprocess (Exact Keyword) ------------------------------------------------------------- #

((masterpiece)), blahblah, blah, ((same prompt))

# ------------------------------------------------------------- Preprocess (Exact Prompt) -------------------------------------------------------------- #

((masterpiece)), ((masterpiece:1.2)), (masterpiece), blahblah, blah, ((blahblah)), (((((blah))))), ((same prompt)), same prompt
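The three modes can be approximated with a first-occurrence-wins filter. This is a minimal sketch rather than the node's code: `preprocess` and `keyword_of` are hypothetical names, and splitting on commas is a simplification that would break prompts containing commas inside parentheses.

```python
import re


def keyword_of(prompt: str) -> str:
    """Strip surrounding parentheses and a trailing ':weight' from a prompt."""
    core = prompt.strip().strip("()")
    return re.sub(r":\s*[\d.]+$", "", core).strip()


def preprocess(text: str, mode: str) -> str:
    """Drop repeated prompts; earlier prompts win over later ones."""
    if mode not in ("exact_keyword", "exact_prompt", "none"):
        raise ValueError(f"unknown preprocess mode: {mode!r}")
    prompts = [p.strip() for p in text.split(",") if p.strip()]
    if mode == "none":
        return ", ".join(prompts)
    seen: set[str] = set()
    kept: list[str] = []
    for prompt in prompts:
        # exact_keyword compares the bare keyword, exact_prompt the full text.
        key = keyword_of(prompt) if mode == "exact_keyword" else prompt
        if key not in seen:
            seen.add(key)
            kept.append(prompt)
    return ", ".join(kept)


if __name__ == "__main__":
    original = ("((masterpiece)), ((masterpiece:1.2)), (masterpiece), blahblah, blah, "
                "blah, ((blahblah)), (((((blah))))), ((same prompt)), same prompt, "
                "(masterpiece)")
    print(preprocess(original, "exact_keyword"))
    print(preprocess(original, "exact_prompt"))
```

Run on the original text above, both modes give the same results as the two preprocessed examples.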

- If the solutions below do not fix your issue, please create an issue with the `bug` label.
- The node is based on the transformers and optimum packages, so most problems are likely to come from these packages. To overcome them, you can try updating these packages:
  - Activate the virtual environment if there is one.
  - Run the `pip install --upgrade transformers optimum optimum[onnxruntime-gpu]` command.
- For the portable version:
  - Go to the `ComfyUI_windows_portable` folder.
  - Open the command prompt in this folder.
  - Run the `.\python_embeded\python.exe -s -m pip install --upgrade transformers optimum optimum[onnxruntime-gpu]` command.
- Users have to make sure that the virtual environment is activated if there is one.
- Users have to make sure that they start ComfyUI from the `ComfyUI_windows_portable` folder, because the node checks whether the `python_embeded` folder exists and uses it to install the required packages.
- Sometimes the variables change with updates, which may break the workflow. But don't worry, you just have to delete the node in the workflow and add it again.
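The two upgrade commands above differ only in which Python interpreter runs pip. A small sketch of that choice, assuming the `python_embeded` folder sits directly under the folder ComfyUI is started from; `upgrade_command` is a hypothetical helper and not the node's installer.

```python
import sys
from pathlib import Path

PACKAGES = ["transformers", "optimum", "optimum[onnxruntime-gpu]"]


def upgrade_command(start_dir: Path) -> list[str]:
    """Build the pip upgrade command for the portable or the regular install."""
    embedded = start_dir / "python_embeded" / "python.exe"
    if embedded.exists():
        # Portable version: use the bundled interpreter, as in the command above.
        return [str(embedded), "-s", "-m", "pip", "install", "--upgrade", *PACKAGES]
    # Otherwise use the interpreter of the active (virtual) environment.
    return [sys.executable, "-m", "pip", "install", "--upgrade", *PACKAGES]


if __name__ == "__main__":
    # Prints the command only; pass it to subprocess.run to execute it.
    print(" ".join(upgrade_command(Path.cwd())))
```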

- Contributions are welcome. If you have an idea and want to implement it yourself, please follow these steps:
  - Create a fork
  - Open a pull request from the fork with a description explaining the new feature
- If you have an idea but don't know how to implement it, please create an issue with the `enhancement` label.
- [x] Contributing can be done in several ways. You can contribute to the code or to the README file.


Alternative AI tools for prompt-generator-comfyui

Similar Open Source Tools


nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

note-gen

Note-gen is a simple tool for generating notes automatically based on user input. It uses natural language processing techniques to analyze text and extract key information to create structured notes. The tool is designed to save time and effort for users who need to summarize large amounts of text or generate notes quickly. With note-gen, users can easily create organized and concise notes for study, research, or any other purpose.

mcp-use

MCP-Use is a Python library for analyzing and processing text data using Markov Chains. It provides functionalities for generating text based on input data, calculating transition probabilities, and simulating text sequences. The library is designed to be user-friendly and efficient, making it suitable for natural language processing tasks.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

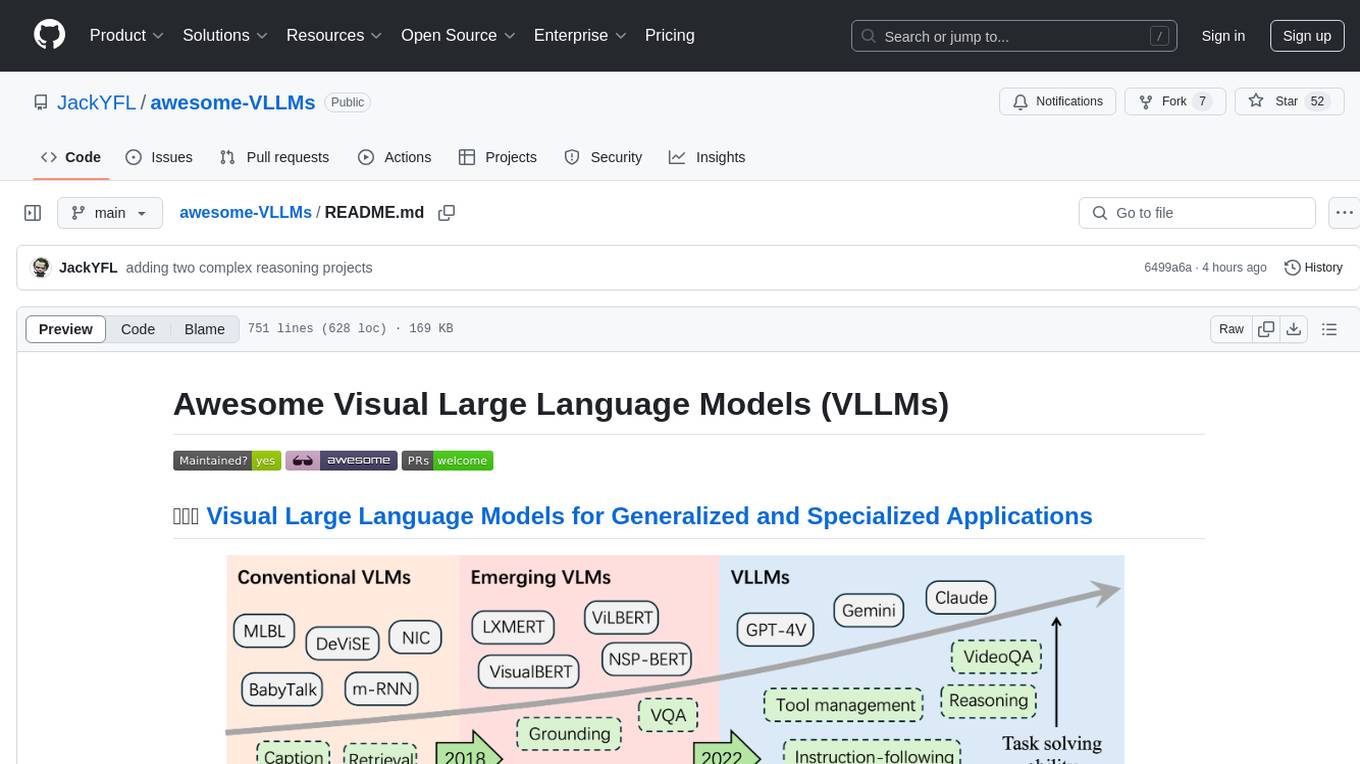

awesome-VLLMs

This repository contains a collection of pre-trained Very Large Language Models (VLLMs) that can be used for various natural language processing tasks. The models are fine-tuned on large text corpora and can be easily integrated into existing NLP pipelines for tasks such as text generation, sentiment analysis, and language translation. The repository also provides code examples and tutorials to help users get started with using these powerful language models in their projects.

PaddleOCR

PaddleOCR is an easy-to-use and scalable OCR toolkit based on PaddlePaddle. It provides a series of text detection and recognition models, supporting multiple languages and various scenarios. With PaddleOCR, users can perform accurate and efficient text extraction from images and videos, making it suitable for tasks such as document scanning, text recognition, and information extraction.

LocalLLMClient

LocalLLMClient is a Swift package designed to interact with local Large Language Models (LLMs) on Apple platforms. It supports GGUF, MLX models, and the FoundationModels framework, providing streaming API, multimodal capabilities, and tool calling functionalities. Users can easily integrate this tool to work with various models for text generation and processing. The package also includes advanced features for low-level API control and multimodal image processing. LocalLLMClient is experimental and subject to API changes, offering support for iOS, macOS, and Linux platforms.

orate

Orate is an AI toolkit designed for speech processing tasks. It allows users to generate realistic, human-like speech and transcribe audio using a unified API that integrates with popular AI providers such as OpenAI, ElevenLabs, and AssemblyAI. The toolkit can be easily installed using npm or other package managers. For more details, visit the website.

ai21-python

The AI21 Labs Python SDK is a comprehensive tool for interacting with the AI21 API. It provides functionalities for chat completions, conversational RAG, token counting, error handling, and support for various cloud providers like AWS, Azure, and Vertex. The SDK offers both synchronous and asynchronous usage, along with detailed examples and documentation. Users can quickly get started with the SDK to leverage AI21's powerful models for various natural language processing tasks.

turftopic

Turftopic is a Python library that provides tools for sentiment analysis and topic modeling of text data. It allows users to analyze large volumes of text data to extract insights on sentiment and topics. The library includes functions for preprocessing text data, performing sentiment analysis using machine learning models, and conducting topic modeling using algorithms such as Latent Dirichlet Allocation (LDA). Turftopic is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data analysts.

continue

Continue is an open-source autopilot for VS Code and JetBrains that allows you to code with any LLM. With Continue, you can ask coding questions, edit code in natural language, generate files from scratch, and more. Continue is easy to use and can help you save time and improve your coding skills.

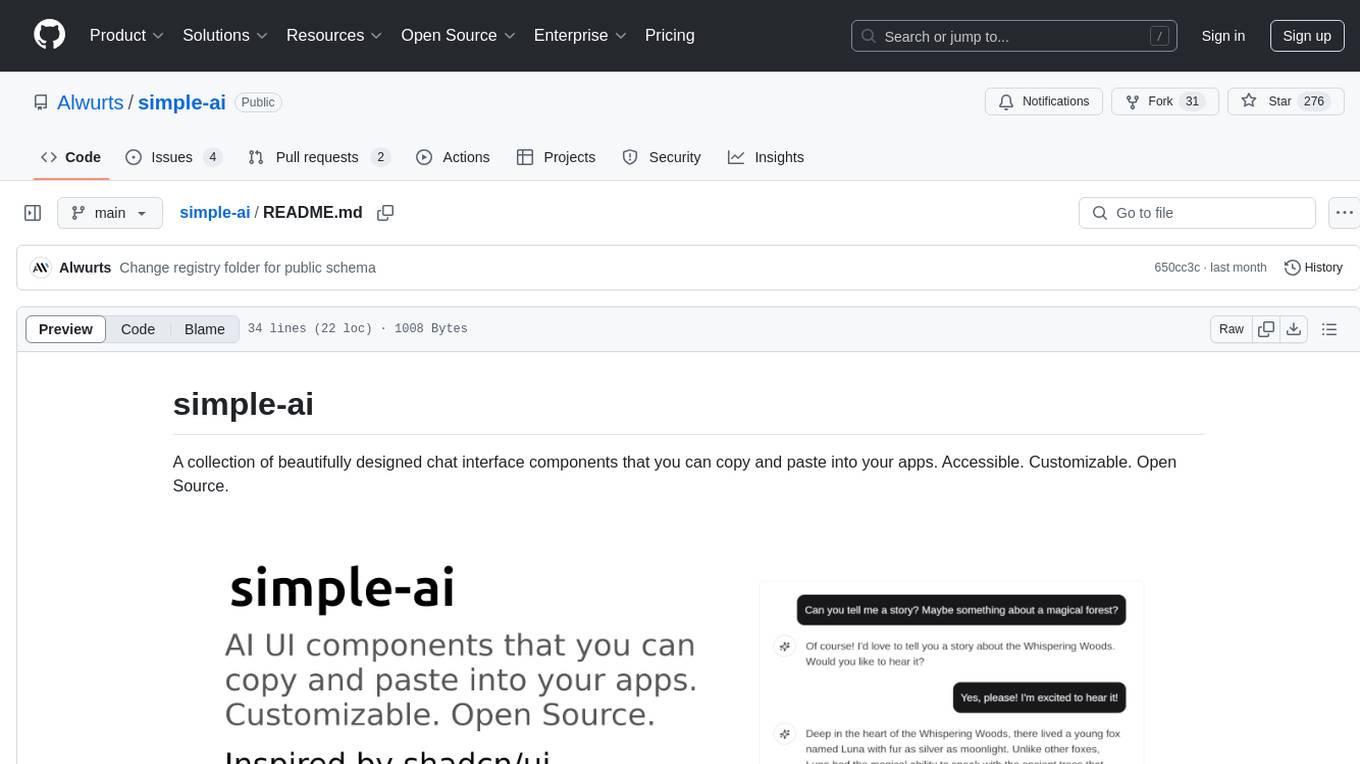

simple-ai

Simple AI is a lightweight Python library for implementing basic artificial intelligence algorithms. It provides easy-to-use functions and classes for tasks such as machine learning, natural language processing, and computer vision. With Simple AI, users can quickly prototype and deploy AI solutions without the complexity of larger frameworks.

MateCat

Matecat is an enterprise-level, web-based CAT tool designed to make post-editing and outsourcing easy and to provide a complete set of features to manage and monitor translation projects.

mdream

Mdream is a lightweight and user-friendly markdown editor designed for developers and writers. It provides a simple and intuitive interface for creating and editing markdown files with real-time preview. The tool offers syntax highlighting, markdown formatting options, and the ability to export files in various formats. Mdream aims to streamline the writing process and enhance productivity for individuals working with markdown documents.

cellm

Cellm is an Excel extension that allows users to leverage Large Language Models (LLMs) like ChatGPT within cell formulas. It enables users to extract AI responses to text ranges, making it useful for automating repetitive tasks that involve data processing and analysis. Cellm supports various models from Anthropic, Mistral, OpenAI, and Google, as well as locally hosted models via Llamafiles, Ollama, or vLLM. The tool is designed to simplify the integration of AI capabilities into Excel for tasks such as text classification, data cleaning, content summarization, entity extraction, and more.

For similar tasks


lumentis

Lumentis is a tool that allows users to generate beautiful and comprehensive documentation from meeting transcripts and large documents with a single command. It reads transcripts, asks questions to understand themes and audience, generates an outline, and creates detailed pages with visual variety and styles. Users can switch models for different tasks, control the process, and deploy the generated docs to Vercel. The tool is designed to be open, clean, fast, and easy to use, with upcoming features including folders, PDFs, auto-transcription, website scraping, scientific papers handling, summarization, and continuous updates.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

quivr

Quivr is a personal assistant powered by Generative AI, designed to be a second brain for users. It offers fast and efficient access to data, ensuring security and compatibility with various file formats. Quivr is open source and free to use, allowing users to share their brains publicly or keep them private. The marketplace feature enables users to share and utilize brains created by others, boosting productivity. Quivr's offline mode provides anytime, anywhere access to data. Key features include speed, security, OS compatibility, file compatibility, open source nature, public/private sharing options, a marketplace, and offline mode.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

wingman-ai

Wingman-AI is a free and open-source AI coding assistant that brings high-quality AI-assisted coding right to your computer. It offers features such as code completion, interactive chat, and support for multiple AI providers, including Ollama, Hugging Face, and OpenAI. Wingman-AI is designed to enhance your coding workflow by providing real-time assistance and suggestions, making it an ideal tool for developers of all levels.

morphic

Morphic is an AI-powered answer engine with a generative UI. It utilizes a stack of Next.js, Vercel AI SDK, OpenAI, Tavily AI, shadcn/ui, Radix UI, and Tailwind CSS. To get started, fork and clone the repo, install dependencies, fill out secrets in the .env.local file, and run the app locally using 'bun dev'. You can also deploy your own live version of Morphic with Vercel. Verified models that can be specified to writers include Groq, LLaMA3 8b, and LLaMA3 70b.

gpt-engineer

GPT-Engineer is a tool that allows you to specify a software in natural language, sit back and watch as an AI writes and executes the code, and ask the AI to implement improvements.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.