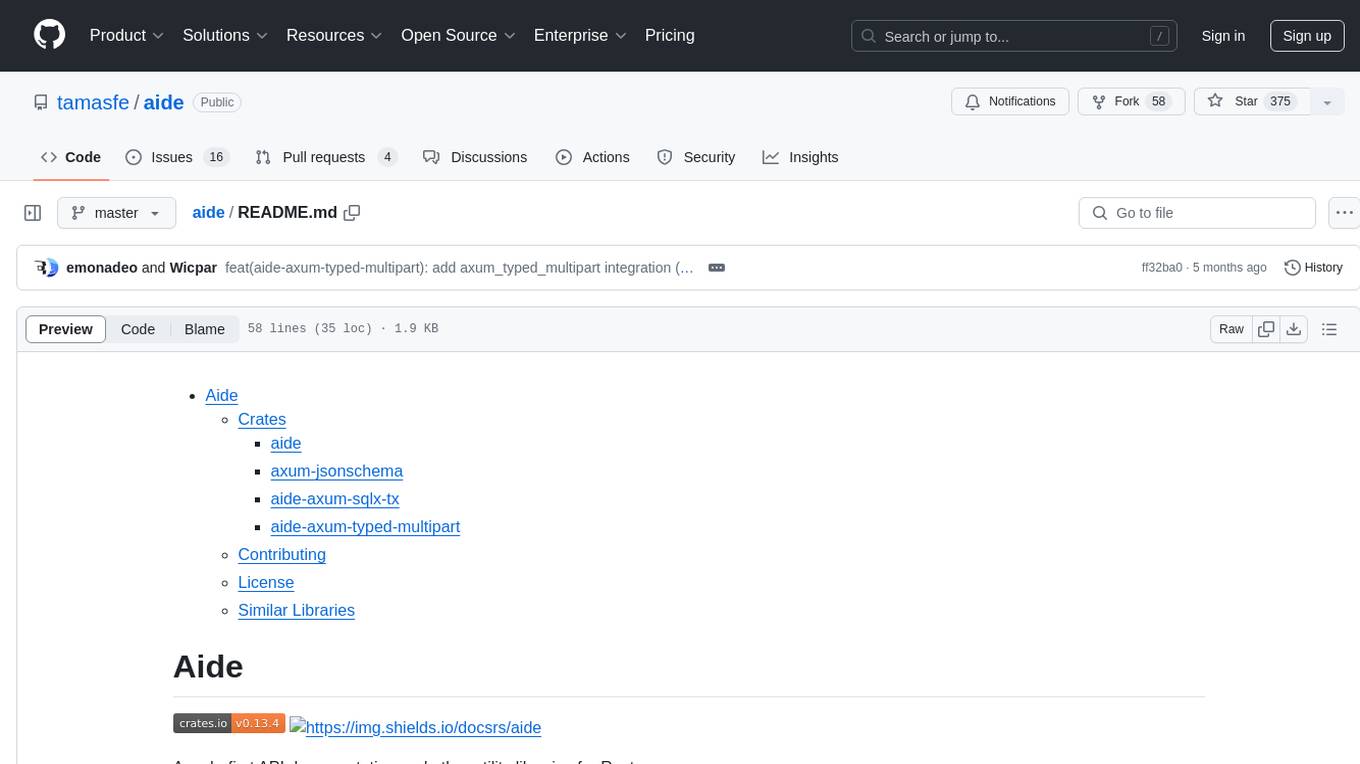

aide

An API documentation library

Stars: 433

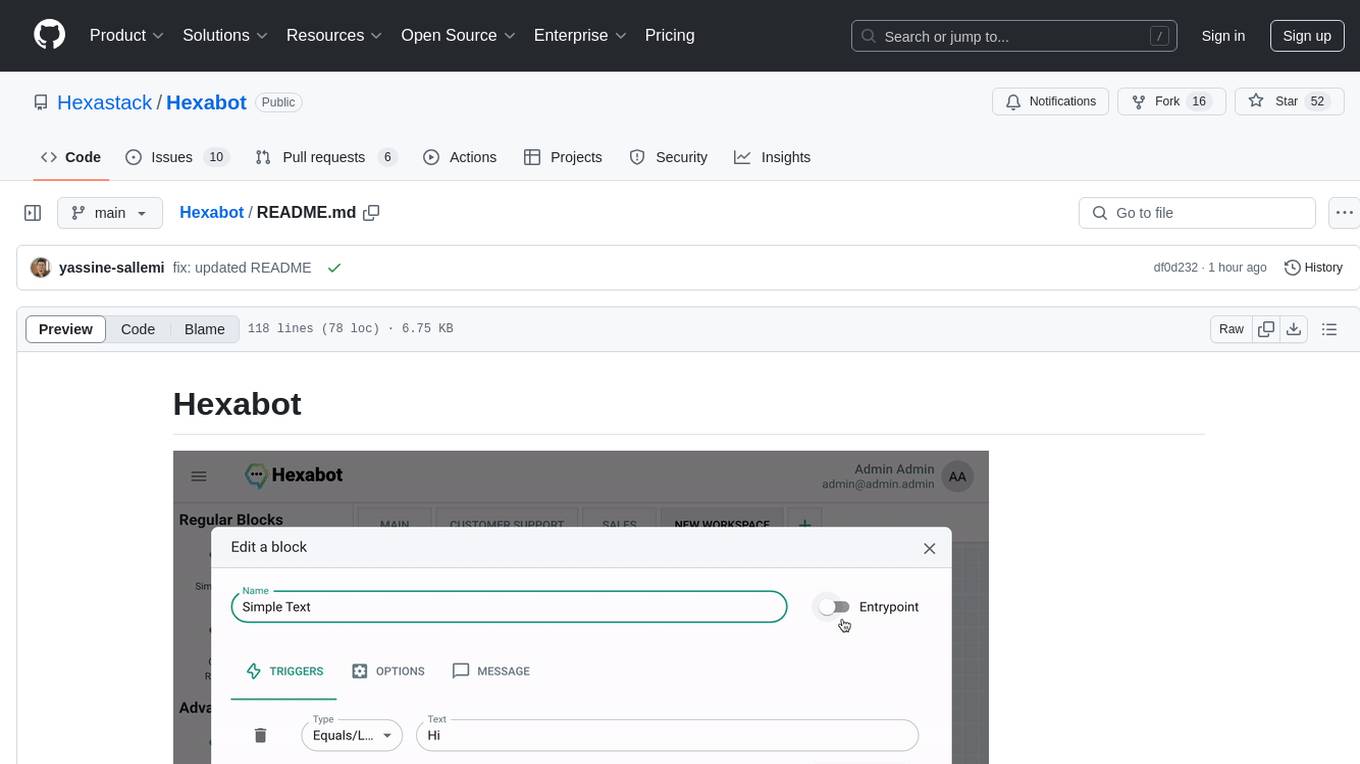

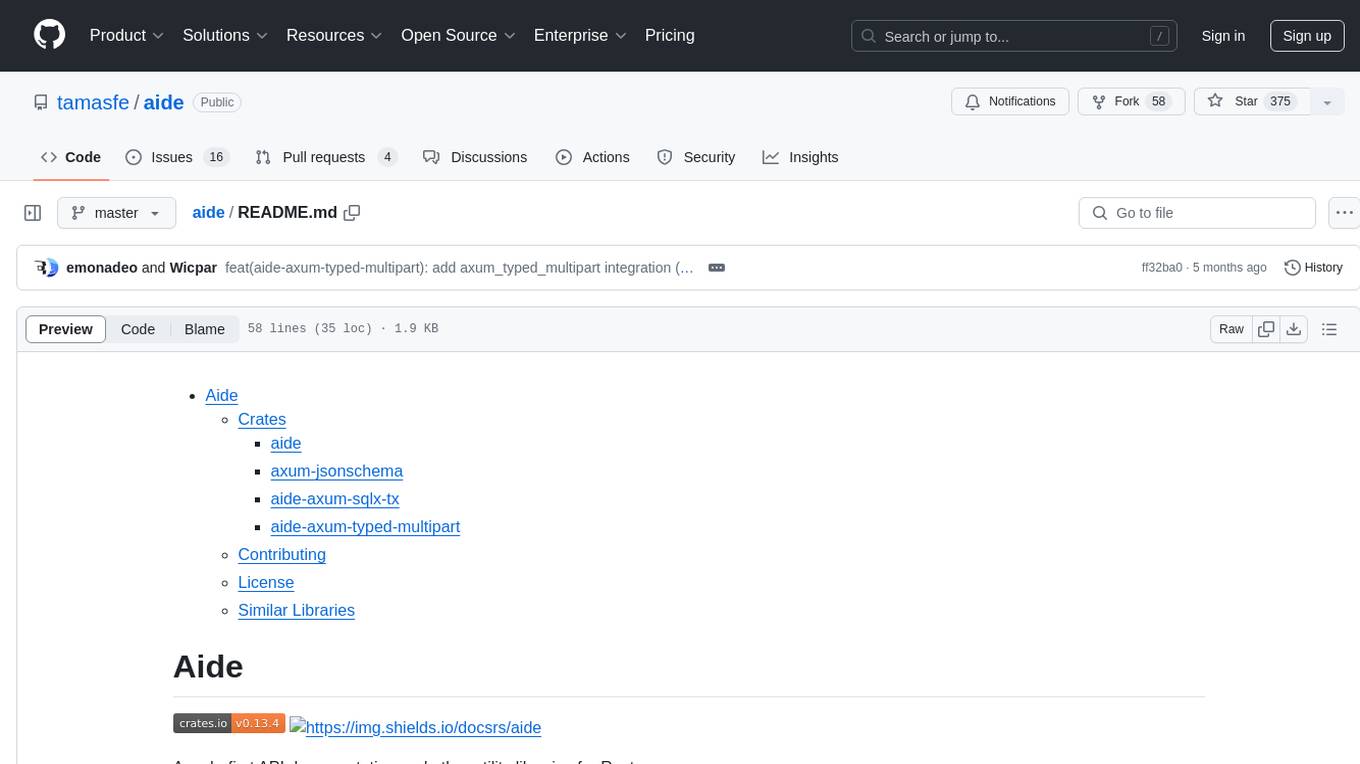

Aide is a code-first API documentation and utility library for Rust, along with other related utility crates for web-servers. It provides tools for creating API documentation and handling JSON request validation. The repository contains multiple crates that offer drop-in replacements for existing libraries, ensuring compatibility with Aide. Contributions are welcome, and the code is dual licensed under MIT and Apache-2.0. If Aide does not meet your requirements, you can explore similar libraries like paperclip, utoipa, and okapi.

README:

A code-first API documentation and other utility libraries for Rust.

This repository contains several crates related to web-servers and their documentation.

A code-first API documentation and utility library.

Read the docs.

A JSON request validation library for axum.

Read the docs.

[!IMPORTANT]

theaxum-sqlx-txfeature is deprecated and replaced by this crate.

Drop-in replacement for axum-sqlx-tx compatible with aide.

Drop-in replacement for axum-typed-multipart compatible with aide.

All contributions are welcome! Make sure to read CONTRIBUTING.md.

All code in this repository is dual licensed under MIT and Apache-2.0.

If Aide is not exactly what you are looking for, make sure to take a look at the alternatives:

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aide

Similar Open Source Tools

aide

Aide is a code-first API documentation and utility library for Rust, along with other related utility crates for web-servers. It provides tools for creating API documentation and handling JSON request validation. The repository contains multiple crates that offer drop-in replacements for existing libraries, ensuring compatibility with Aide. Contributions are welcome, and the code is dual licensed under MIT and Apache-2.0. If Aide does not meet your requirements, you can explore similar libraries like paperclip, utoipa, and okapi.

ollama4j

Ollama4j is a Java library that serves as a wrapper or binding for the Ollama server. It allows users to communicate with the Ollama server and manage models for various deployment scenarios. The library provides APIs for interacting with Ollama, generating fake data, testing UI interactions, translating messages, and building web UIs. Users can easily integrate Ollama4j into their Java projects to leverage the functionalities offered by the Ollama server.

hyper-mcp

hyper-mcp is a fast and secure MCP server that enables adding AI capabilities to applications through WebAssembly plugins. It supports writing plugins in various languages, distributing them via standard OCI registries, and running them in resource-constrained environments. The tool offers sandboxing with WASM for limiting access, cross-platform compatibility, and deployment flexibility. Security features include sandboxed plugins, memory-safe execution, secure plugin distribution, and fine-grained access control. Users can configure the tool for global or project-specific use, start the server with different transport options, and utilize available plugins for tasks like time calculations, QR code generation, hash generation, IP retrieval, and webpage fetching.

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), and upcoming support for web sites, Notion, and Airtable. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk utilizes a Vector Database (Qdrant), Openai's text-embedding-ada-002 for embeddings, and has a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

vibe

Vibe Design System is a collection of packages for React.js development, providing components, styles, and guidelines to streamline the development process and enhance user experience. It includes a Core component library, Icons library, Testing utilities, Codemods, and more. The system also features an MCP server for intelligent assistance with component APIs, usage examples, icons, and best practices. Vibe 2 is no longer actively maintained, with users encouraged to upgrade to Vibe 3 for the latest improvements and ongoing support.

ai21-python

The AI21 Labs Python SDK is a comprehensive tool for interacting with the AI21 API. It provides functionalities for chat completions, conversational RAG, token counting, error handling, and support for various cloud providers like AWS, Azure, and Vertex. The SDK offers both synchronous and asynchronous usage, along with detailed examples and documentation. Users can quickly get started with the SDK to leverage AI21's powerful models for various natural language processing tasks.

arcade-ai

Arcade AI is a developer-focused tooling and API platform designed to enhance the capabilities of LLM applications and agents. It simplifies the process of connecting agentic applications with user data and services, allowing developers to concentrate on building their applications. The platform offers prebuilt toolkits for interacting with various services, supports multiple authentication providers, and provides access to different language models. Users can also create custom toolkits and evaluate their tools using Arcade AI. Contributions are welcome, and self-hosting is possible with the provided documentation.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

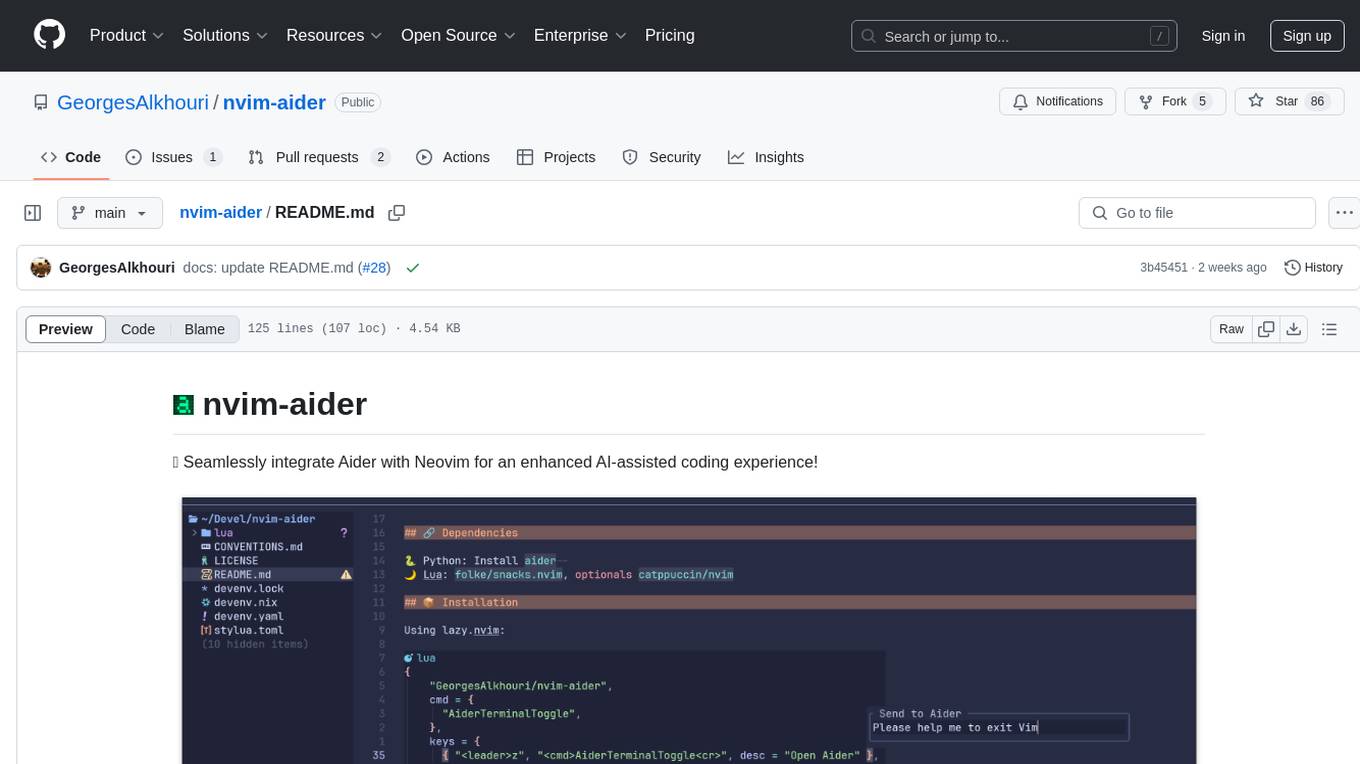

nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

jadx-mcp-server

JADX-MCP-SERVER is a standalone Python server that interacts with JADX-AI-MCP Plugin to analyze Android APKs using LLMs like Claude. It enables live communication with decompiled Android app context, uncovering vulnerabilities, parsing manifests, and facilitating reverse engineering effortlessly. The tool combines JADX-AI-MCP and JADX MCP SERVER to provide real-time reverse engineering support with LLMs, offering features like quick analysis, vulnerability detection, AI code modification, static analysis, and reverse engineering helpers. It supports various MCP tools for fetching class information, text, methods, fields, smali code, AndroidManifest.xml content, strings.xml file, resource files, and more. Tested on Claude Desktop, it aims to support other LLMs in the future, enhancing Android reverse engineering and APK modification tools connectivity for easier reverse engineering purely from vibes.

chatluna

Chatluna is a machine learning model plugin that provides chat services with large language models. It is highly extensible, supports multiple output formats, and offers features like custom conversation presets, rate limiting, and context awareness. Users can deploy Chatluna under Koishi without additional configuration. The plugin supports various models/platforms like OpenAI, Azure OpenAI, Google Gemini, and more. It also provides preset customization using YAML files and allows for easy forking and development within Koishi projects. However, the project lacks web UI, HTTP server, and project documentation, inviting contributions from the community.

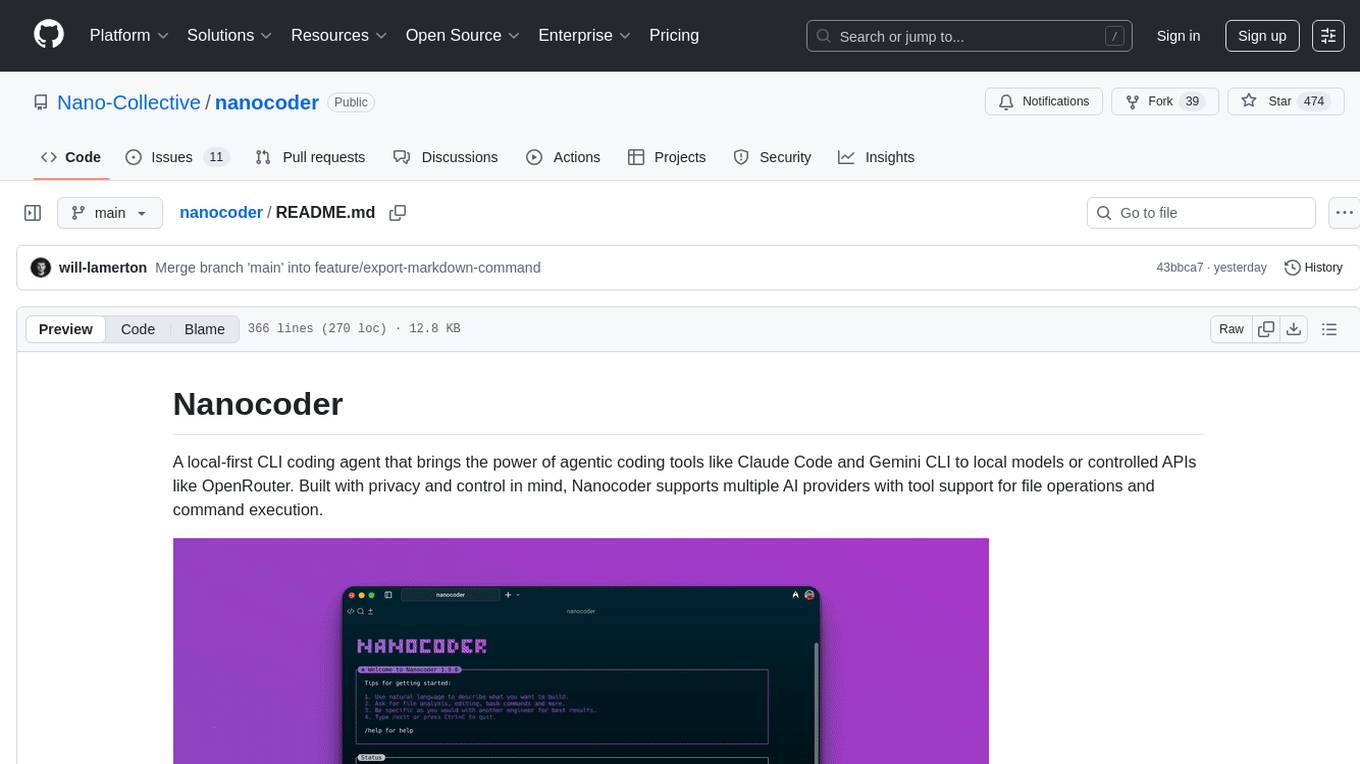

nanocoder

Nanocoder is a versatile code editor designed for beginners and experienced programmers alike. It provides a user-friendly interface with features such as syntax highlighting, code completion, and error checking. With Nanocoder, you can easily write and debug code in various programming languages, making it an ideal tool for learning, practicing, and developing software projects. Whether you are a student, hobbyist, or professional developer, Nanocoder offers a seamless coding experience to boost your productivity and creativity.

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.

BrowserGym

BrowserGym is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides benchmarks like MiniWoB, WebArena, VisualWebArena, WorkArena, AssistantBench, and WebLINX. Users can design new web benchmarks by inheriting the AbstractBrowserTask class. The tool allows users to install different packages for core functionalities, experiments, and specific benchmarks. It supports the development setup and offers boilerplate code for running agents on various tasks. BrowserGym is not a consumer product and should be used with caution.

Hexabot

Hexabot Community Edition is an open-source chatbot solution designed for flexibility and customization, offering powerful text-to-action capabilities. It allows users to create and manage AI-powered, multi-channel, and multilingual chatbots with ease. The platform features an analytics dashboard, multi-channel support, visual editor, plugin system, NLP/NLU management, multi-lingual support, CMS integration, user roles & permissions, contextual data, subscribers & labels, and inbox & handover functionalities. The directory structure includes frontend, API, widget, NLU, and docker components. Prerequisites for running Hexabot include Docker and Node.js. The installation process involves cloning the repository, setting up the environment, and running the application. Users can access the UI admin panel and live chat widget for interaction. Various commands are available for managing the Docker services. Detailed documentation and contribution guidelines are provided for users interested in contributing to the project.

nekro-agent

Nekro Agent is an AI chat plugin and proxy execution bot that is highly scalable, offers high freedom, and has minimal deployment requirements. It features context-aware chat for group/private chats, custom character settings, sandboxed execution environment, interactive image resource handling, customizable extension development interface, easy deployment with docker-compose, integration with Stable Diffusion for AI drawing capabilities, support for various file types interaction, hot configuration updates and command control, native multimodal understanding, visual application management control panel, CoT (Chain of Thought) support, self-triggered timers and holiday greetings, event notification understanding, and more. It allows for third-party extensions and AI-generated extensions, and includes features like automatic context trigger based on LLM, and a variety of basic commands for bot administrators.

For similar tasks

aide

Aide is a code-first API documentation and utility library for Rust, along with other related utility crates for web-servers. It provides tools for creating API documentation and handling JSON request validation. The repository contains multiple crates that offer drop-in replacements for existing libraries, ensuring compatibility with Aide. Contributions are welcome, and the code is dual licensed under MIT and Apache-2.0. If Aide does not meet your requirements, you can explore similar libraries like paperclip, utoipa, and okapi.

crawlchat

CrawlChat is an open sourced AI-powered platform that transforms technical documentation into intelligent chatbots. It allows users to connect documentation from various sources and deploy AI assistants that can answer questions across multiple channels. The platform includes services like front-facing React app, Express app, Discord bot, Slack app, and more. Users can self-host the platform using Dockerfiles and set up environment variables for easy deployment. Local development is quick and easy, allowing users to contribute by starting the necessary services and making improvements or adding features.

SpecForge

SpecForge is a powerful tool for generating API specifications from code. It helps developers to easily create and maintain accurate API documentation by extracting information directly from the codebase. With SpecForge, users can streamline the process of documenting APIs, ensuring consistency and reducing manual effort. The tool supports various programming languages and frameworks, making it versatile and adaptable to different development environments. By automating the generation of API specifications, SpecForge enhances collaboration between developers and stakeholders, improving overall project efficiency and quality.

LambsDanger

LAMBS Danger FSM is an open-source mod developed for Arma3, aimed at enhancing the AI behavior by integrating buildings into the tactical landscape, creating distinct AI states, and ensuring seamless compatibility with vanilla, ACE3, and modded assets. Originally created for the Norwegian gaming community, it is now available on Steam Workshop and GitHub for wider use. Users are allowed to customize and redistribute the mod according to their requirements. The project is licensed under the GNU General Public License (GPLv2) with additional amendments.

LiteRT

LiteRT is Google's open-source high-performance runtime for on-device AI, previously known as TensorFlow Lite. The repository is currently not intended for open-source development, but aims to evolve to allow direct building and contributions. LiteRT supports Python versions 3.9, 3.10, 3.11 on Linux and MacOS. It ensures compatibility with existing .tflite file extension and format, offering conversion tools and continued active development under the name LiteRT.

For similar jobs

google.aip.dev

API Improvement Proposals (AIPs) are design documents that provide high-level, concise documentation for API development at Google. The goal of AIPs is to serve as the source of truth for API-related documentation and to facilitate discussion and consensus among API teams. AIPs are similar to Python's enhancement proposals (PEPs) and are organized into different areas within Google to accommodate historical differences in customs, styles, and guidance.

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

speakeasy

Speakeasy is a tool that helps developers create production-quality SDKs, Terraform providers, documentation, and more from OpenAPI specifications. It supports a wide range of languages, including Go, Python, TypeScript, Java, and C#, and provides features such as automatic maintenance, type safety, and fault tolerance. Speakeasy also integrates with popular package managers like npm, PyPI, Maven, and Terraform Registry for easy distribution.

apicat

ApiCat is an API documentation management tool that is fully compatible with the OpenAPI specification. With ApiCat, you can freely and efficiently manage your APIs. It integrates the capabilities of LLM, which not only helps you automatically generate API documentation and data models but also creates corresponding test cases based on the API content. Using ApiCat, you can quickly accomplish anything outside of coding, allowing you to focus your energy on the code itself.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

OllamaKit

OllamaKit is a Swift library designed to simplify interactions with the Ollama API. It handles network communication and data processing, offering an efficient interface for Swift applications to communicate with the Ollama API. The library is optimized for use within Ollamac, a macOS app for interacting with Ollama models.

ollama4j

Ollama4j is a Java library that serves as a wrapper or binding for the Ollama server. It facilitates communication with the Ollama server and provides models for deployment. The tool requires Java 11 or higher and can be installed locally or via Docker. Users can integrate Ollama4j into Maven projects by adding the specified dependency. The tool offers API specifications and supports various development tasks such as building, running unit tests, and integration tests. Releases are automated through GitHub Actions CI workflow. Areas of improvement include adhering to Java naming conventions, updating deprecated code, implementing logging, using lombok, and enhancing request body creation. Contributions to the project are encouraged, whether reporting bugs, suggesting enhancements, or contributing code.