Best AI tools for Backend Engineer


20 - AI tool Sites

Lovable

Lovable is an AI-powered application that allows users to describe their software ideas in natural language and then automatically transforms them into fully functional applications with beautiful aesthetics. It enables users to build high-quality software without writing a single line of code, making software creation more accessible and faster than traditional coding methods. With features like live rendering, instant undo, beautiful design principles, and seamless GitHub integration, Lovable empowers product builders, developers, and designers to bring their ideas to life effortlessly.

Autobackend.dev

Autobackend.dev is a web development platform that provides tools and resources for building and managing backend systems. It offers a range of features such as database management, API integration, and server configuration. With Autobackend.dev, users can streamline the process of backend development and focus on creating innovative web applications.

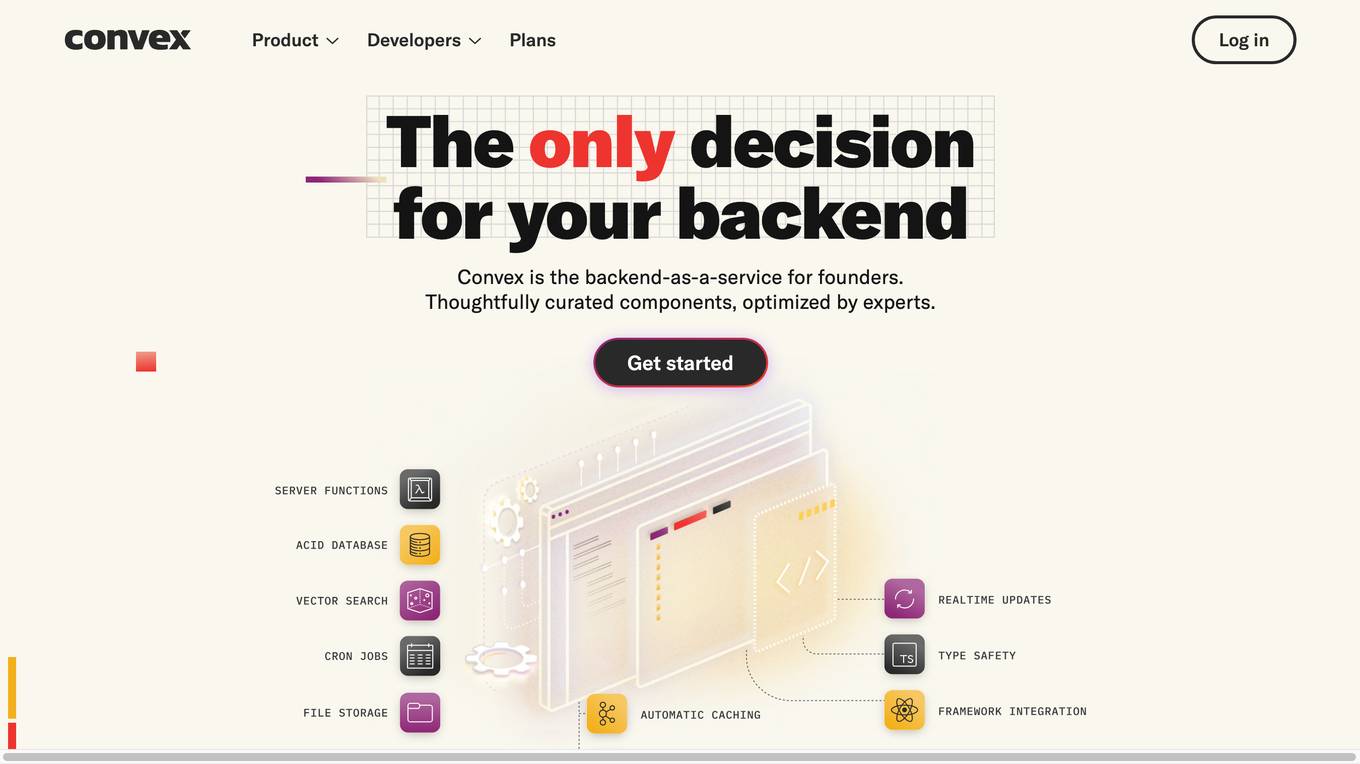

Convex

Convex is a fullstack TypeScript development platform that serves as an open-source backend for application builders. It offers a comprehensive set of APIs and tools to build, launch, and scale applications efficiently. With features like real-time collaboration, optimized transactions, and over 80 OAuth integrations, Convex simplifies backend operations and allows developers to focus on delivering value to customers. The platform enables developers to write backend logic in TypeScript, perform database operations with strong consistency, and integrate with various third-party services seamlessly. Convex is praised for its reliability, simplicity, and developer experience, making it a popular choice for modern software development projects.

BackX

BackX is an AI development platform that empowers developers to quickly ship backends across various use cases, environments, and scales. It offers unparalleled accuracy, flexibility, and efficiency by overcoming the limitations of traditional AI-assisted programming. With features like one-click production-grade code, context-aware consistent code output, versioned artifacts, instant deploy, and a suite of AI-powered dev tools, BackX revolutionizes the backend development process. Developers can effortlessly design and manage databases, generate CRUD operations, implement complex business logic, and deploy serverless applications with ease. The platform aims to streamline development processes, increase cost-effectiveness, and provide more accurate outputs than traditional methods.

Amplication

Amplication is an AI-powered platform for .NET and Node.js app development that bills itself as the world's fastest way to build backend services. It provides customizable, production-ready backend services without vendor lock-in. Users can define data models, extend and customize with plugins, generate boilerplate code, and modify the generated code freely. The platform supports role-based access control, microservices architecture, continuous Git sync, and automated deployment. Amplication is SOC 2 certified for data security and compliance.

Koxy AI

Koxy AI is an AI-powered serverless backend platform that allows users to build globally distributed, fast, secure, and scalable backends with no code required. It offers features such as live logs, smart error handling, and integration with over 80,000 AI models. Koxy AI is designed to help users focus on building the best service possible without spending time on security and latency concerns. It provides a JSON-based NoSQL database, real-time data synchronization, cloud functions, and a drag-and-drop builder for API flows.

CHAI AI

CHAI AI is a leading conversational AI platform that focuses on building AI solutions for quant traders. The platform has secured significant funding rounds to expand its computational capabilities and talent acquisition. CHAI AI offers a range of models and techniques, such as reinforcement learning with human feedback, model blending, and direct preference optimization, to enhance user engagement and retention. The platform aims to provide users with the ability to create their own ChatAIs and offers custom GPU orchestration for efficient inference. With a strong focus on user feedback and recognition, CHAI AI continues to innovate and improve its AI models to meet the demands of a growing user base.

Backend.AI

Backend.AI is an enterprise-scale cluster backend for AI frameworks that offers scalability, GPU virtualization, HPC optimization, and DGX-Ready software products. It provides a fast and efficient way to build, train, and serve AI models of any type and size, with flexible infrastructure options. Backend.AI aims to optimize backend resources, reduce costs, and simplify deployment for AI developers and researchers. The platform integrates with existing tools and offers fractional GPU usage and a pay-as-you-play model to maximize resource utilization.

Software Engineer Interview Questions Generator

The Software Engineer Interview Questions Generator is an AI tool that helps software engineers prepare for interviews by generating a wide range of technical questions based on various programming languages, frameworks, and technologies. Users can select specific topics of interest and customize the number of questions to generate. This tool leverages AI technology to provide a tailored and comprehensive interview preparation experience for software engineering candidates.

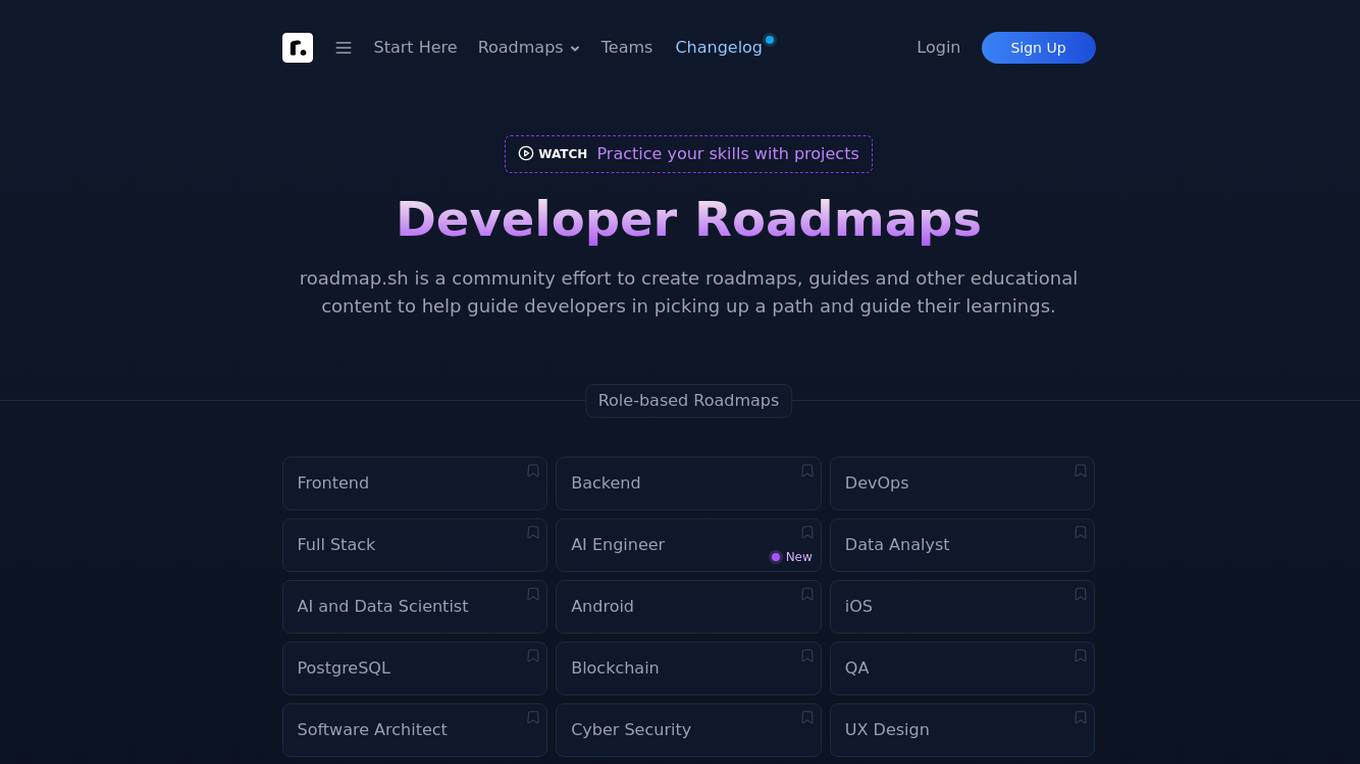

Developer Roadmaps

Developer Roadmaps (roadmap.sh) is a community-driven platform offering official roadmaps, guides, projects, best practices, questions, and videos to assist developers in skill development and career growth. It provides role-based and skill-based roadmaps covering various technologies and domains. The platform is actively maintained and continuously updated to enhance the learning experience for developers worldwide.

Works

Works is a platform that connects enterprises with the top 1% of remote tech talent. It uses advanced AI technology to ensure precision-matching of talent to project requirements, saving time and resources. Works offers transparent pricing with a flat 10% transaction fee and provides risk-free hiring with payment only when the work is completed to satisfaction.

BugFree.ai

BugFree.ai is an AI-powered platform for practicing system design and behavioral interviews, similar in spirit to LeetCode. It offers mock interviews, real-time feedback, and personalized study plans to help users prepare for technical interviews. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

Goptimise

Goptimise is a no-code AI-powered scalable backend builder that helps developers craft scalable, seamless, powerful, and intuitive backend solutions. It offers a solid foundation with robust and scalable infrastructure, including dedicated infrastructure, security, and scalability. Goptimise simplifies software rollouts with one-click deployment, automating the process and amplifying productivity. It also provides smart API suggestions, leveraging AI algorithms to offer intelligent recommendations for API design and accelerating development with automated recommendations tailored to each project. Goptimise's intuitive visual interface and effortless integration make it easy to use, and its customizable workspaces allow for dynamic data management and a personalized development experience.

YouTeam

YouTeam is an AI-powered platform that offers a transparent vetting process for hiring engineers. Leveraging deep learning technology, YouTeam provides customized vetting criteria tailored to each role's responsibilities and required skillset. The platform streamlines the hiring process by presenting engineering candidates aligned with the company's unique standards, allowing for a final interview to select the perfect match. With features like technical skill review assessments, coding assessments, and soft skills & culture fit questions, YouTeam ensures a data-rich candidate profile for informed decision-making.

Backmesh

Backmesh is an AI tool that serves as a proxy on edge CDN servers, enabling secure and direct access to LLM APIs without the need for a backend or SDK. It allows users to call LLM APIs from their apps, ensuring protection through JWT verification and rate limits. Backmesh also offers user analytics for LLM API calls, helping identify usage patterns and enhance user satisfaction within AI applications.
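As a rough illustration of the JWT verification step such a proxy performs before forwarding a request, here is a minimal sketch using only the Python standard library. It assumes HS256-signed tokens and a shared secret; the helper names `sign_hs256` and `verify_hs256` are hypothetical, and nothing here reflects Backmesh's actual implementation, which may use a different algorithm or key scheme.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(raw: bytes) -> str:
    # JWTs use URL-safe base64 without padding.
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(part: str) -> bytes:
    # Restore the padding that JWT encoding strips.
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Hypothetical helper: build an HS256 token (used here to create test input)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signing_input = f"{header}.{payload}".encode("ascii")
    sig = hmac.new(secret, signing_input, hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(sig)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the claims if the signature and expiry check out, else raise ValueError."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(sig_b64)
    except ValueError as exc:  # covers bad base64, bad JSON, wrong part count
        raise ValueError("malformed token") from exc

    # Pin the algorithm instead of trusting whatever the header claims.
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        raise ValueError("unsupported algorithm")
    if not isinstance(claims, dict):
        raise ValueError("malformed claims")

    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, signature):
        raise ValueError("bad signature")

    exp = claims.get("exp")
    current = time.time() if now is None else now
    if exp is not None and current >= exp:
        raise ValueError("token expired")
    return claims
```

A proxy would call `verify_hs256` on the bearer token of each incoming request and forward the call to the LLM API only when it returns claims rather than raising.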

BuildShip

BuildShip is a low-code visual backend builder that allows users to create powerful APIs in minutes. It is powered by AI and offers a variety of features such as pre-built nodes, multimodal flows, and integration with popular AI models. BuildShip is suitable for a wide range of users, from beginners to experienced developers. It is also a great tool for teams who want to collaborate on backend development projects.

Privado AI

Privado AI is a privacy engineering tool that bridges the gap between privacy compliance and software development. It automates personal data visibility and privacy governance, helping organizations to identify privacy risks, track data flows, and ensure compliance with regulations such as CPRA, MHMDA, FTC, and GDPR. The tool provides real-time visibility into how personal data is collected, used, shared, and stored by scanning the code of websites, user-facing applications, and backend systems. Privado offers features like Privacy Code Scanning, programmatic privacy governance, automated GDPR RoPA reports, risk identification without assessments, and developer-friendly privacy guidance.

Treblle

Treblle is an end-to-end APIOps platform that helps engineering and product teams build, ship, and understand their REST APIs in one place. It offers features such as API observability, API documentation, API governance, API security, and API analytics. With a focus on empowering API producers and consumers, Treblle provides actionable data in real time, customizable dashboards, and automated API development. The platform aims to improve API release times, enhance developer experience, and ensure API quality and security.

DocDriven

DocDriven is an AI-powered documentation-driven API development tool that provides a shared workspace for optimizing the API development process. It helps in designing APIs faster and more efficiently, collaborating on API changes in real-time, exploring all APIs in one workspace, generating AI code, maintaining API documentation, and much more. DocDriven aims to streamline communication and coordination among backend developers, frontend developers, UI designers, and product managers, ensuring high-quality API design and development.

Kie.ai

Kie.ai is an AI platform that offers access to DeepSeek R1 & V3 APIs for secure and scalable AI solutions. It provides advanced reasoning models for tasks in math, coding, and language, along with versatile natural language processing capabilities. With no local deployment required, developers can easily integrate the APIs into their projects for fast and efficient AI solutions. Kie.ai ensures data security by hosting the APIs on U.S.-based servers, offering affordable pricing plans and comprehensive documentation for seamless integration.

19 - Open Source Tools

aiomcache

aiomcache is a Python library that provides an asyncio (PEP 3156) interface to work with memcached. It allows users to interact with memcached servers asynchronously, making it suitable for high-performance applications that require non-blocking I/O operations. The library offers similar functionality to other memcache clients and includes features like setting and getting values, multi-get operations, and deleting keys. Version 0.8 introduces the `FlagClient` class, which enables users to register callbacks for setting or processing flags, providing additional flexibility and customization options for working with memcached servers.

aiolimiter

An efficient implementation of a rate limiter for asyncio using the leaky bucket algorithm, providing precise control over the rate at which a code section can be entered. It limits the number of entries within a specified time window, ensuring that a section of code is executed at most a given number of times in that period.
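To make the leaky bucket idea concrete, here is a minimal standard-library sketch of an asyncio limiter used as an async context manager. The class name `LeakyBucket` and its parameters are hypothetical and chosen for illustration; this is not aiolimiter's source code, only one way the technique can be written.

```python
import asyncio
import time


class LeakyBucket:
    """Hypothetical sketch: allow at most max_rate entries per time_period seconds."""

    def __init__(self, max_rate: float, time_period: float = 60.0) -> None:
        self.max_rate = max_rate
        self._leak_per_sec = max_rate / time_period  # capacity drained per second
        self._level = 0.0                            # how full the bucket is
        self._last = time.monotonic()

    def _leak(self) -> None:
        # Drain the bucket in proportion to the time elapsed since the last check.
        now = time.monotonic()
        self._level = max(0.0, self._level - (now - self._last) * self._leak_per_sec)
        self._last = now

    async def acquire(self) -> None:
        while True:
            self._leak()
            if self._level + 1 <= self.max_rate:
                self._level += 1  # each entry adds one unit to the bucket
                return
            # Sleep until enough has leaked out to fit one more unit, then re-check.
            overflow = self._level + 1 - self.max_rate
            await asyncio.sleep(overflow / self._leak_per_sec)

    async def __aenter__(self) -> None:
        await self.acquire()

    async def __aexit__(self, *exc_info) -> None:
        return None


async def demo() -> float:
    # Two entries per 0.2 s: the third and fourth entries each have to wait.
    limiter = LeakyBucket(max_rate=2, time_period=0.2)
    start = time.monotonic()
    for _ in range(4):
        async with limiter:
            pass  # the rate-limited section would go here
    return time.monotonic() - start


if __name__ == "__main__":
    print(f"four entries took {asyncio.run(demo()):.2f}s")
```

The bucket starts empty, so a burst of up to `max_rate` entries passes immediately; after that, entries are admitted only as fast as the bucket drains.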

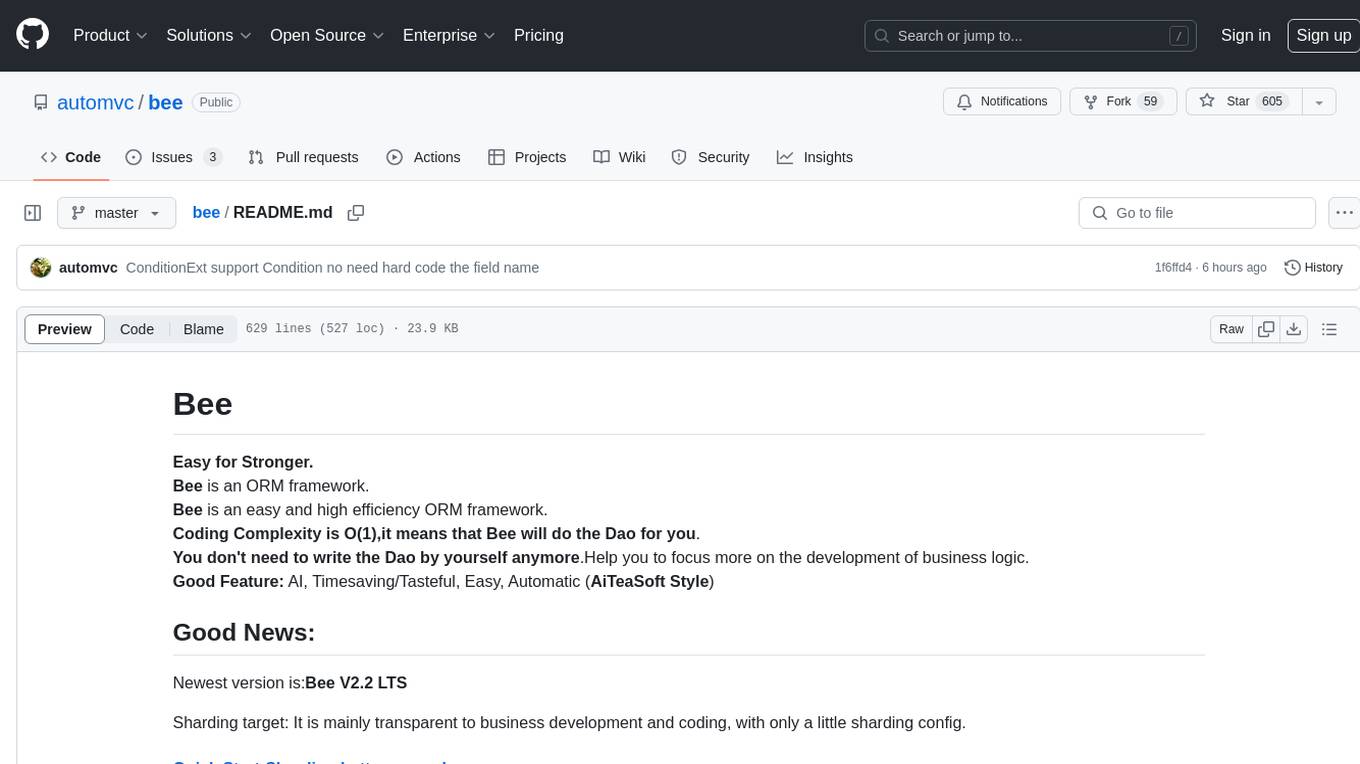

bee

Bee is an easy-to-use, high-efficiency ORM framework that simplifies database operations by providing a simple interface and eliminating the need to write separate DAO code. It supports features such as automatic filtering of properties, partial field queries, native statement pagination, JSON format results, sharding, and multiple database support. Bee also offers dynamic query conditions, transactions, complex queries, MongoDB ORM, cache management, and additional tools for generating distributed primary keys and reading Excel files. The newest versions introduce enhancements like placeholder precompilation, default date sharding, ElasticSearch ORM support, and improved query capabilities.

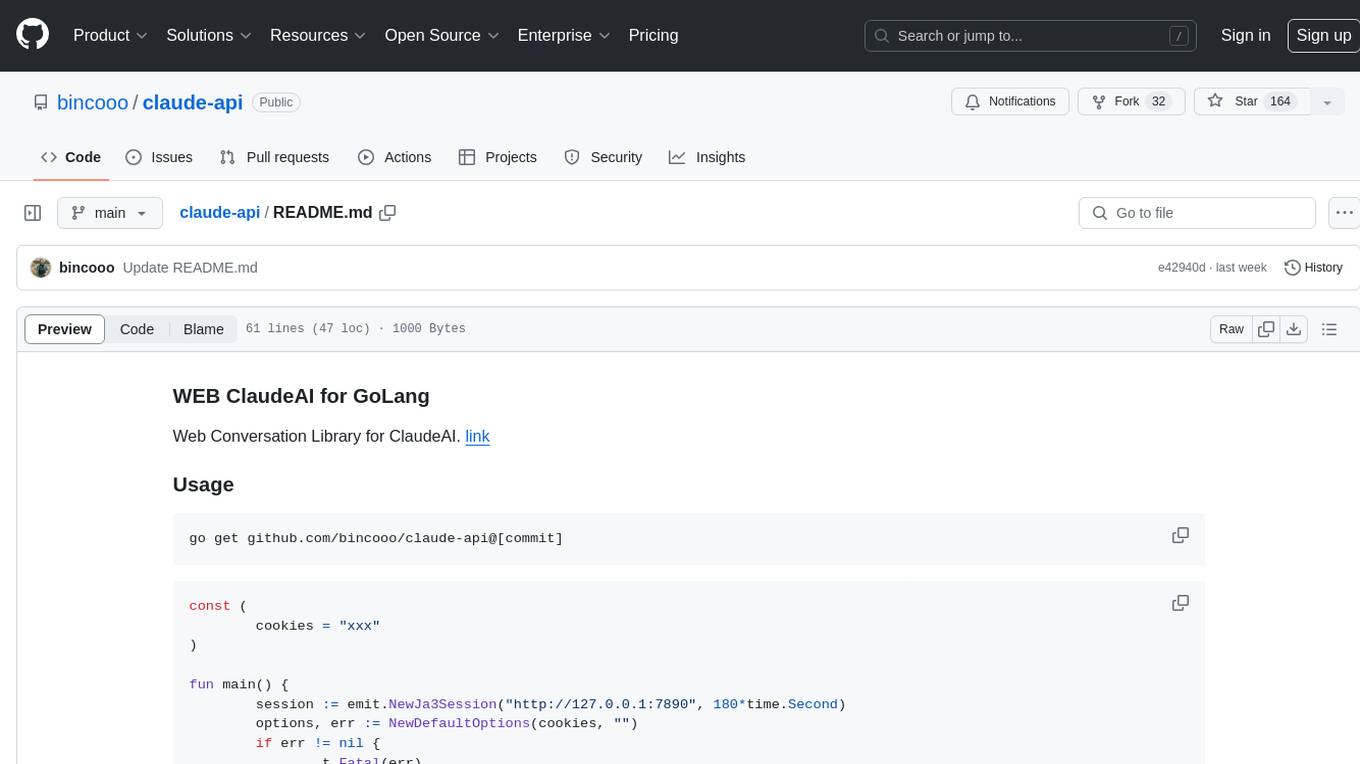

claude-api

claude-api is a web conversation library for ClaudeAI implemented in Go. Users can integrate it into their Go projects to enable chatbot capabilities and handle conversations with ClaudeAI. The library includes features for sending messages, receiving responses, and managing chat sessions, making it useful for developers looking to incorporate AI-powered chatbots into their applications.

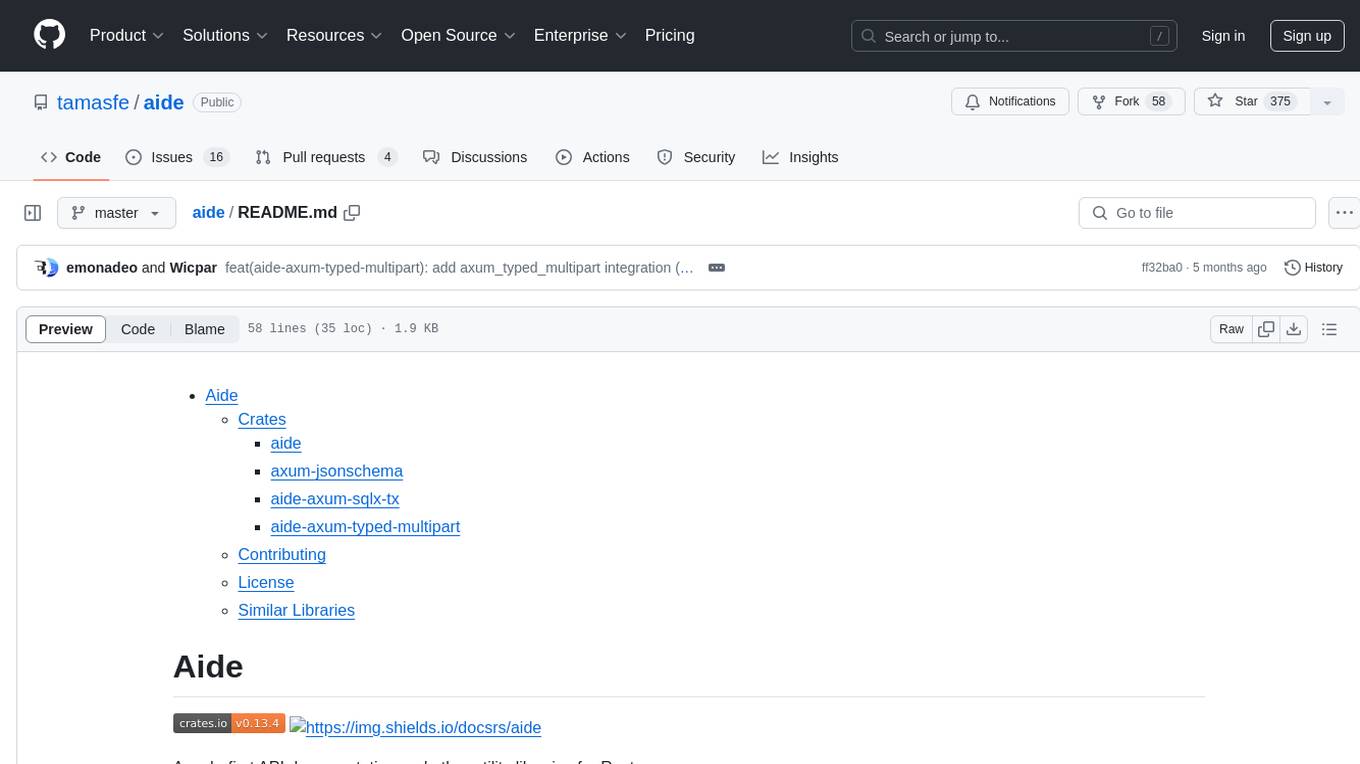

aide

Aide is a code-first API documentation and utility library for Rust, along with other related utility crates for web-servers. It provides tools for creating API documentation and handling JSON request validation. The repository contains multiple crates that offer drop-in replacements for existing libraries, ensuring compatibility with Aide. Contributions are welcome, and the code is dual licensed under MIT and Apache-2.0. If Aide does not meet your requirements, you can explore similar libraries like paperclip, utoipa, and okapi.

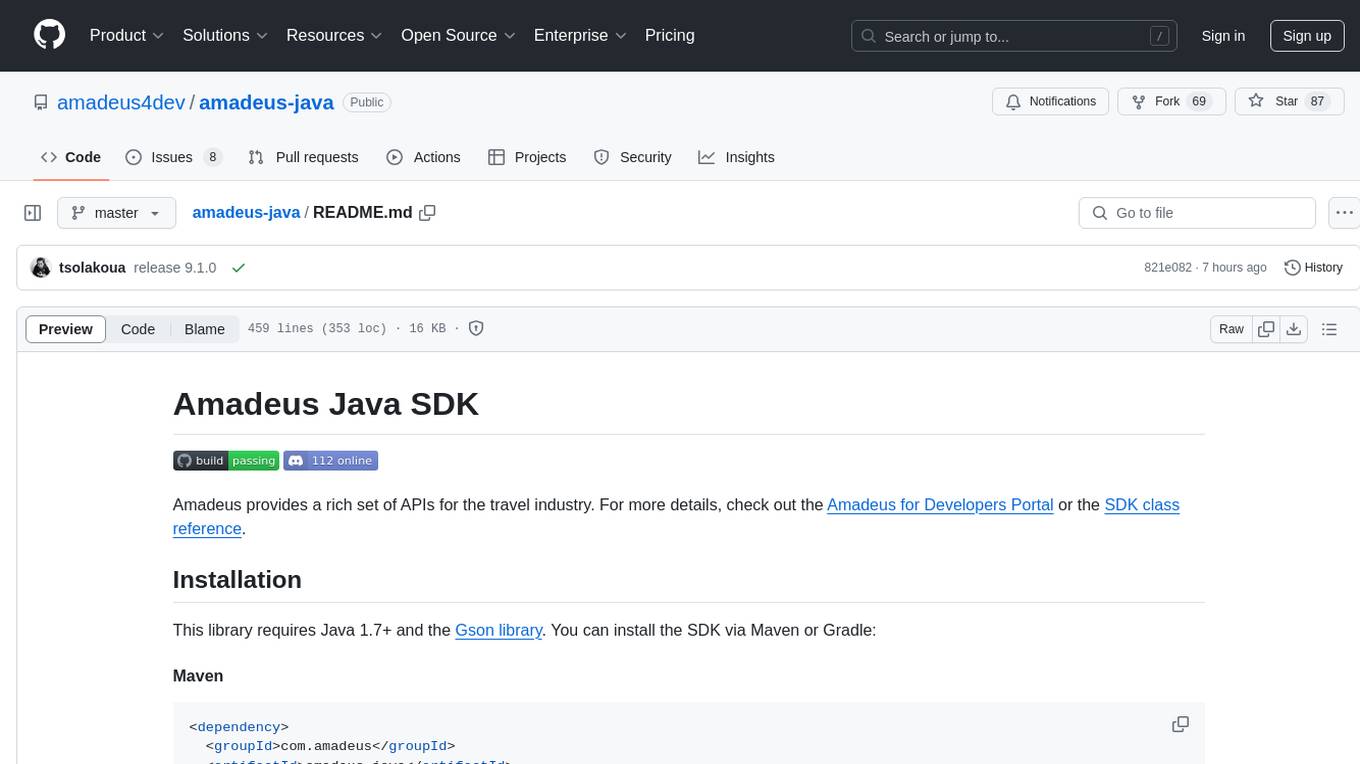

amadeus-java

Amadeus Java SDK provides a rich set of APIs for the travel industry, allowing developers to access various functionalities such as flight search, booking, airport information, and more. The SDK simplifies interaction with the Amadeus API by providing self-contained code examples and detailed documentation. Developers can easily make API calls, handle responses, and utilize features like pagination and logging. The SDK supports various endpoints for tasks like flight search, booking management, airport information retrieval, and travel analytics. It also offers functionalities for hotel search, booking, and sentiment analysis. Overall, the Amadeus Java SDK is a comprehensive tool for integrating Amadeus APIs into Java applications.

rig

Rig is a Rust library designed for building scalable, modular, and user-friendly applications powered by large language models (LLMs). It provides full support for LLM completion and embedding workflows, offers simple yet powerful abstractions for LLM providers like OpenAI and Cohere, as well as vector stores such as MongoDB and in-memory storage. With Rig, users can easily integrate LLMs into their applications with minimal boilerplate code.

celery-aio-pool

Celery AsyncIO Pool is a free software tool licensed under GNU Affero General Public License v3+. It provides an AsyncIO worker pool for Celery, enabling users to leverage the power of AsyncIO in their Celery applications. The tool allows for easy installation using Poetry, pip, or directly from GitHub. Users can configure Celery to use the AsyncIO pool provided by celery-aio-pool, or they can wait for the upcoming support for out-of-tree worker pools in Celery 5.3. The tool is actively maintained and welcomes contributions from the community.

Awesome-RoadMaps-and-Interviews

Awesome RoadMaps and Interviews is a comprehensive repository that aims to provide guidance for technical interviews and career development in the ITCS field. It covers a wide range of topics including interview strategies, technical knowledge, and practical insights gained from years of interviewing experience. The repository emphasizes the importance of combining theoretical knowledge with practical application, and encourages users to expand their interview preparation beyond just algorithms. It also offers resources for enhancing knowledge breadth, depth, and programming skills through curated roadmaps, mind maps, cheat sheets, and coding snippets. The content is structured to help individuals navigate various technical roles and technologies, fostering continuous learning and professional growth.

airtable

A simple Golang package to access the Airtable API. It provides functionalities to interact with Airtable such as initializing client, getting tables, listing records, adding records, updating records, deleting records, and bulk deleting records. The package is compatible with Go 1.13 and above.

aiohttp-remotes

aiohttp-remotes is a library containing a set of useful tools for the aiohttp.web server. It includes functionality for restricting incoming connections to allowed hosts only, protecting web applications with HTTP basic auth, ensuring web applications are protected by Cloudflare, processing HTTP headers in secure and relaxed modes, enforcing HTTPS only, and redirecting plain HTTP to HTTPS automatically. The library provides tools for managing various aspects of web server security and configuration.

trigger.dev

Trigger.dev is an open source platform and SDK for creating long-running background jobs. It provides a JavaScript and TypeScript SDK, no timeouts, retries, queues, schedules, observability, React hooks, a Realtime API, custom alerts, and elastic scaling, and it works with an existing tech stack. Users can create tasks in their codebase, deploy tasks using the SDK, manage tasks in different environments, and have full visibility of job runs. The platform offers a trace view of every task run for detailed monitoring. Getting started involves account creation, project setup, and onboarding instructions. Self-hosting and development guides are available for users interested in contributing or hosting Trigger.dev.

AppFlowy-Cloud

AppFlowy Cloud is a secure user authentication, file storage, and real-time WebSocket communication tool written in Rust. It is part of the AppFlowy ecosystem, providing an efficient and collaborative user experience. The tool offers deployment guides, development setup with Rust and Docker, debugging tips for components like PostgreSQL, Redis, Minio, and Portainer, and guidelines for contributing to the project.

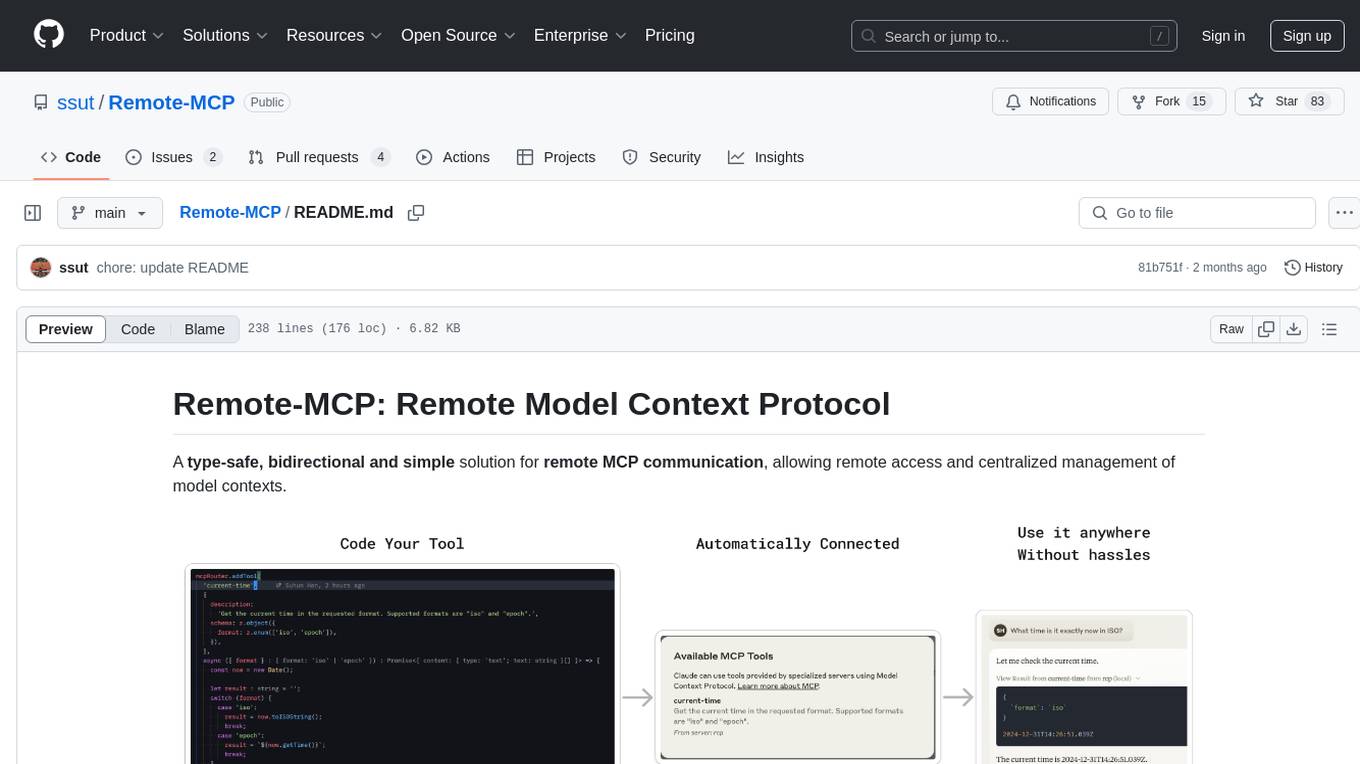

Remote-MCP

Remote-MCP is a type-safe, bidirectional, and simple solution for remote MCP communication, enabling remote access and centralized management of model contexts. It provides a bridge for immediate remote access to a remote MCP server from a local MCP client, without waiting for future official implementations. The repository contains client and server libraries for creating and connecting to remotely accessible MCP services. The core features include basic type-safe client/server communication, MCP command/tool/prompt support, custom headers, and ongoing work on crash-safe handling and event subscription system.

mcp-graphql

mcp-graphql is a Model Context Protocol server that enables Large Language Models (LLMs) to interact with GraphQL APIs. It provides schema introspection and query execution capabilities, allowing models to dynamically discover and use GraphQL APIs. The server offers tools for retrieving the GraphQL schema and executing queries against the endpoint. Mutations are disabled by default for security reasons. Users can install mcp-graphql via Smithery or manually to Claude Desktop. It is recommended to carefully consider enabling mutations in production environments to prevent unauthorized data modifications.
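The "mutations disabled by default" behavior can be pictured as a guard that inspects each GraphQL document before it is sent to the endpoint. The sketch below is a conservative heuristic written for illustration, with the hypothetical function name `contains_mutation`; it is an assumption about how such a guard could work, not mcp-graphql's actual code, and a production guard would use a real GraphQL parser.

```python
import re

# String literals are blanked first so that text inside them is never scanned.
_STRING = re.compile(r'"""[\s\S]*?"""|"(?:\\.|[^"\\\n])*"')
# GraphQL comments run from '#' to the end of the line.
_COMMENT = re.compile(r"#[^\n]*")
# Only braces and name tokens matter for this check.
_TOKEN = re.compile(r"[{}]|[A-Za-z_][A-Za-z0-9_]*")


def contains_mutation(document: str) -> bool:
    """Heuristic: True if the keyword 'mutation' appears outside any selection set.

    Errs on the side of rejecting: an operation or variable that is merely
    named 'mutation' is also flagged.
    """
    text = _STRING.sub('""', document)
    text = _COMMENT.sub("", text)
    depth = 0
    for token in _TOKEN.findall(text):
        if token == "{":
            depth += 1
        elif token == "}":
            depth -= 1
        elif depth == 0 and token == "mutation":
            return True
    return False


def guard(document: str, allow_mutations: bool = False) -> str:
    """Return the document unchanged, or raise if mutations are not allowed."""
    if not allow_mutations and contains_mutation(document):
        raise PermissionError("mutations are disabled")
    return document
```

With `allow_mutations=False`, `guard` passes read-only queries through and raises `PermissionError` for any document that contains a mutation operation, which mirrors the default described above.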

cs-self-learning

This repository serves as an archive for computer science learning notes, codes, and materials. It covers a wide range of topics including basic knowledge, AI, backend & big data, tools, and other related areas. The content is organized into sections and subsections for easy navigation and reference. Users can find learning resources, programming practices, and tutorials on various subjects such as languages, data structures & algorithms, AI, frameworks, databases, development tools, and more. The repository aims to support self-learning and skill development in the field of computer science.

apidash

API Dash is an open-source cross-platform API Client that allows users to easily create and customize API requests, visually inspect responses, and generate API integration code. It supports various HTTP methods, GraphQL requests, and multimedia API responses. Users can organize requests in collections, preview data in different formats, and generate code for multiple languages. The tool also offers dark mode support, data persistence, and various customization options.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

claude-code-settings

A repository collecting best practices for Claude Code settings and customization. It provides configuration files for customizing Claude Code's behavior and building an efficient development environment. The repository includes custom agents and skills for specific domains, interactive development workflow features, efficient development rules, and team workflow with Codex MCP. Users can leverage the provided configuration files and tools to enhance their development process and improve code quality.

20 - OpenAI GPTs

Principal Backend Engineer

Expert Backend Developer: Skilled in Python, Java, Node.js, Ruby, PHP for robust backend solutions.

Octorate Code Companion

I help developers understand and use APIs, referencing a YAML model.

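![[Sample] InterviewCat Backend Questions Screenshot](/screenshots_gpts/g-sC05Z23Zt.jpg)

[Sample] InterviewCat Backend Questions

Poses backend technical questions at random from a question bank (57+ questions). It scores each answer, gives evaluation points and areas for improvement, and finally judges whether you passed the interview. This is a sample version with a reduced number of questions.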

FAANG Interviewer

I simulate software engineering interviews using FAANG Technical Questions and provide feedback.

Serverless Architect Pro

Helping software engineers to architect domain-driven serverless systems on AWS

C# Expert

Hello, I'm your C# Backend Expert! Ready to solve all your C# and .NET Core queries.

ReScript

Write ReScript code. Trained with versions 10 & 11. Documentation: github.com/guillempuche/gpt-rescript

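Java Development Engineer

Proficient in all Java knowledge and frameworks, especially Spring Boot and Spring Cloud backend API development, including complete CRUD code covering entity classes and the full MySQL workflow. Solves all Java error issues, with the capabilities of a senior Java engineer.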

FastAPIHTMX

Assists with `fastapi-htmx` package queries, using specific documentation for accurate solutions.