chord-seq-ai-app

AI-powered chord progression suggester built with Next.js for composers and music enthusiasts.

Stars: 68

ChordSeqAI Web App is a user-friendly interface for composing chord progressions using deep learning models. The app allows users to interact with suggestions, customize signatures, specify durations, select models and styles, transpose, import, export, and utilize chord variants. It supports keyboard shortcuts, automatic local saving, and playback features. The app is designed for desktop use and offers features for both beginners and advanced users in music composition.

README:

- Introduction
- Getting Started
  - 2.1. Prerequisites
  - 2.2. Installation
  - 2.3. Running the App
- Support
- Usage
  - 4.1. Adding and Deleting Chords
  - 4.2. Suggestions
  - 4.3. Timeline Controls
  - 4.4. Specifying Duration
  - 4.5. Customizing Signature
  - 4.6. Playback
  - 4.7. Selecting Models and Styles
  - 4.8. Transpose, Import, Export
  - 4.9. Chord Variants
- FAQ
- Web Stack
- License

The ChordSeqAI Web App is a dynamic and user-friendly interface for interactions with deep learning models. This Next.js application enables users to compose beautiful chord progressions by suggesting the next chord.

This app originates from the ChordSeqAI graduate project; development continues in this new repository.

This section describes how to run the app locally. If you want to instead use a deployed version, visit chordseqai.com.

Before you begin, ensure you have the following installed:

- Git (used below to clone the repository)

- Node.js with npm (used below to install the packages and run the app)

To install the app:

- Open Command Prompt and navigate to the directory where you want the app to be downloaded.

- Clone the repository:

      git clone https://github.com/PetrIvan/chord-seq-ai-app.git

- Navigate to the project directory:

      cd chord-seq-ai-app

- Install NPM packages:

      npm install

To run the application locally:

- Start the development server:

      npm run dev

- Open http://localhost:3000 in your browser to view the app.

This app is currently supported only on desktop devices. A Chromium-based browser is recommended, as other browsers may not be stable.

Keyboard shortcuts, also sometimes called hotkeys, are available for most of the functions of the app. When you hover over an element of a component, it shows you what happens on click as well as the shortcut for it. The state of the app is automatically saved locally in the browser, so you will not lose progress unless you delete the site data.

The plus icon (shortcut A) above the timeline adds a new chord. It is initialized as an empty chord, denoted by ?. Clicking on a chord selects it and shows the suggestions; the selection can also be moved with the arrow keys.

The selected chord can be deleted with the delete icon (Del) located next to the plus icon. If you accidentally delete something, you can undo and redo changes with the arrow icons (Ctrl + Z, Ctrl + Y).
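As an illustration of how undo and redo over a chord sequence can work, here is a minimal snapshot-based sketch. The `History` class and its method names are hypothetical and are not taken from the app's source; the app may implement this differently.

```typescript
// Hypothetical sketch of snapshot-based undo/redo; not the app's actual code.
type Chord = string; // "?" denotes an empty chord, as in the timeline

class History<T> {
  private past: T[] = [];
  private future: T[] = [];
  private present: T;

  constructor(initial: T) {
    this.present = initial;
  }

  get current(): T {
    return this.present;
  }

  // Record a new state; any pending redo steps are discarded.
  commit(next: T): void {
    this.past.push(this.present);
    this.present = next;
    this.future = [];
  }

  // Step back one state (Ctrl + Z); does nothing if there is no earlier state.
  undo(): void {
    if (this.past.length === 0) return;
    this.future.push(this.present);
    this.present = this.past.pop() as T;
  }

  // Step forward one state (Ctrl + Y); does nothing if nothing was undone.
  redo(): void {
    if (this.future.length === 0) return;
    this.past.push(this.present);
    this.present = this.future.pop() as T;
  }
}

const timelineHistory = new History<Chord[]>(["C", "G"]);
timelineHistory.commit(["C", "G", "?"]); // add an empty chord
timelineHistory.commit(["C", "G"]);      // delete it by accident
timelineHistory.undo();                  // the empty chord is back
```

Storing whole snapshots keeps the logic simple, which is usually acceptable for short sequences such as chord progressions.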

Suggestions are located below the timeline. Clicking on any suggested chord replaces the selected chord with it. You can search the chords by their symbol or by their notes. If you cannot find the chord you are looking for, try enabling Include Variants.

The timeline controls are similar to those of video editors. Scroll the mouse wheel to zoom in or out; dragging with the mouse wheel pressed moves the view. Chords cannot be rearranged.

You can drag the right edge of the chord to make it span a different duration. It will snap to the ticks at the top and bottom of the timeline.
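The snapping behavior can be sketched as rounding the dragged duration to the nearest tick. The function name, the `ticksPerBeat` parameter, and the one-tick minimum duration are assumptions made for illustration, not details confirmed by the app.

```typescript
// Snap a dragged chord duration (in beats) to the nearest timeline tick.
// `ticksPerBeat` and the one-tick minimum are illustrative assumptions.
function snapDuration(rawBeats: number, ticksPerBeat: number): number {
  const snapped = Math.round(rawBeats * ticksPerBeat) / ticksPerBeat;
  const oneTick = 1 / ticksPerBeat;
  // Never let a chord collapse to zero length.
  return Math.max(oneTick, snapped);
}

const snappedDuration = snapDuration(1.37, 4); // nearest quarter-beat tick
```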

A 4/4 signature is the most common in Western music, but you may need a different one. Clicking on the signature displays a menu where you can change it.

Clicking on the play icon (Space) will start the playback. The blue playhead will start moving and the chords will start playing; clicking on the icon again will pause it. You can move the playhead by clicking or dragging your mouse on the ticks, but letting the playback finish will automatically move the playhead to the start.

A metronome can be turned on (M) and the tempo (in beats per minute) can be specified from the icons next to the middle play icon.
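Since the tempo is given in beats per minute, a duration in beats converts to seconds as beats × 60 / BPM. The sketch below uses that formula to compute when each chord would start during playback. The function names are hypothetical, and the app's actual scheduling (done with Tone.js) may differ.

```typescript
// Convert a duration in beats to seconds at a given tempo (beats per minute).
function beatsToSeconds(beats: number, bpm: number): number {
  if (bpm <= 0) throw new RangeError("bpm must be positive");
  return (beats * 60) / bpm;
}

// Start time in seconds of each chord, given the chord durations in beats.
function chordStartTimes(durationsInBeats: number[], bpm: number): number[] {
  const starts: number[] = [];
  let elapsedBeats = 0;
  for (const duration of durationsInBeats) {
    starts.push(beatsToSeconds(elapsedBeats, bpm));
    elapsedBeats += duration;
  }
  return starts;
}

// Three chords lasting 4, 4, and 2 beats at 120 BPM.
const exampleStarts = chordStartTimes([4, 4, 2], 120);
```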

The base Transformer S model may not be enough for you, for example if you also want to apply custom styles to the recommendations. Clicking on the top menu allows you to change the model.

Recurrent Network is the simplest, fastest model, but it may not have enough capacity to suit your needs. We recommend using this model only on slower devices.

Transformer models and their S, M, and L variants (standing for small, medium, and large) are a better option. A bigger model may produce better suggestions at the cost of slower inference.

Conditional Transformer models allow you to also choose the specific genre and decade of the chord progression you are composing. A new part will show next to the name of the model, where you can select the style you are going for. Multiple genres can be selected and custom weighting can be applied to put a higher emphasis on a specific style.
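One plausible way to turn custom genre weights into a model condition is to normalize them so that they sum to 1. This is only a sketch: the function name, the genre labels, and the normalization itself are assumptions, and the app may encode styles differently.

```typescript
// Normalize user-chosen style weights so that they sum to 1.
// This normalization is an assumption, not the app's documented behavior.
function normalizeWeights(weights: Record<string, number>): Record<string, number> {
  let total = 0;
  for (const style of Object.keys(weights)) {
    total += Math.max(0, weights[style]);
  }
  const normalized: Record<string, number> = {};
  if (total === 0) return normalized; // nothing selected
  for (const style of Object.keys(weights)) {
    const weight = Math.max(0, weights[style]);
    if (weight > 0) normalized[style] = weight / total;
  }
  return normalized;
}

// Hypothetical genre labels with a higher emphasis on the first one.
const styleMix = normalizeWeights({ rock: 3, jazz: 1 });
```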

The transposition is done from the left icon at the top right menu. Negative semitone values can be entered to transpose down.
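Transposition by semitones can be sketched as shifting the chord root around the 12 pitch classes. The example uses sharp-only note names, matching the app's notation. The function name and the simple root-plus-suffix parsing are assumptions; this sketch does not handle bass notes in slash chords.

```typescript
// Pitch classes spelled with sharps only.
const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// Transpose the root of a chord symbol by a number of semitones.
// Negative values transpose down. Unparseable symbols are returned unchanged.
function transposeChord(symbol: string, semitones: number): string {
  const match = /^([A-G]#?)(.*)$/.exec(symbol);
  if (match === null) return symbol; // e.g. the empty chord "?"
  const root = match[1];
  const suffix = match[2];
  const index = NOTE_NAMES.indexOf(root);
  if (index === -1) return symbol; // e.g. "E#", which is not in the table
  // The double modulo keeps the result in 0..11 for negative shifts too.
  const shifted = (((index + semitones) % 12) + 12) % 12;
  return NOTE_NAMES[shifted] + suffix;
}

const transposedUp = transposeChord("Am7", 3);
const transposedDown = transposeChord("C", -1);
```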

You can import and export the sequence you are composing in the .chseq format (recommended for saving), but you can also use MIDI files. If you somehow manage to break the app by importing an invalid file, you can clear the browser site data to fix the problem (in Google Chrome, go to Settings > Site Settings > View permissions and data stored across sites, find localhost, and delete it).

Chord variants are recommended for more advanced users. By opening the variant menu for the currently selected chord in the timeline (V), or for a suggestion via its button, you can specify which variant to use (usually alternative notations or inversions). Clicking on any alternative will change the visualization on the piano. When this menu is opened from the timeline, the newly selected variant can be applied either once (only to that chord) or to all (replacing all of the same chords with this variant). When it is opened from the suggestions, it can be used once (replacing the selected chord with this variant) or set as default (which makes it the preferred variant in the suggestions). You can close this menu with the close icon (or Esc).

While you might try to use variants to compose chord voicings, this is not recommended. Only use chord variants when you specifically want to use another symbol for that chord.

Scientific pitch notation, also known as American standard pitch notation, is used. Sharps are used instead of flats for note names to make the notation easier to read and understand.

For a list of all available features, check out the page Features in the wiki.

Q: How can ChordSeqAI be used for professional music production? Is attribution required for the chord progressions?

A: You can export the produced chord progressions as a MIDI file and use it in a different music production software (e.g. in DAWs). Everything you produce using this app is yours, therefore no attribution is needed.

Q: What information do the models use to produce suggestions?

A: The preceding chords without their variants are used. The duration of a chord is currently ignored, and consecutive identical chords are merged.

Q: Is there any limit to the number of chords per sequence that can be entered?

A: There can be a maximum of 255 chords (after removing duplicates and empty chords) due to the restrictions of the models.
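The preprocessing described in the answers above can be sketched as follows: drop empty chords, merge consecutive repeats, and check the 255-chord limit. The function and constant names are hypothetical, and raising an error when the limit is exceeded is an assumption; the app may handle that case differently.

```typescript
const EMPTY_CHORD = "?";
const MAX_CHORDS = 255; // limit stated in the FAQ

// Build the chord list a model would see: no empty chords and no consecutive
// repeats. Durations are not part of the input at all.
function prepareModelInput(chords: string[]): string[] {
  const merged: string[] = [];
  for (const chord of chords) {
    if (chord === EMPTY_CHORD) continue;
    if (merged.length > 0 && merged[merged.length - 1] === chord) continue;
    merged.push(chord);
  }
  if (merged.length > MAX_CHORDS) {
    // Assumption: how the app reacts to an over-long sequence is not documented here.
    throw new RangeError("Sequence exceeds " + MAX_CHORDS + " chords");
  }
  return merged;
}

const modelInput = prepareModelInput(["C", "C", "?", "G", "Am", "Am", "F"]);
```

Note that in this sketch an empty chord between two identical chords does not keep them apart, because empty chords are removed before repeats are merged.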

Q: Is the AI trained on my work?

A: Your data remains private and is not used for training our AI models. All processing occurs locally in your browser.

Q: What kind of user data does ChordSeqAI track?

A: ChordSeqAI employs Umami Analytics for basic usage statistics, focusing on privacy and anonymity. We collect minimal, anonymized data solely to enhance app functionality and user experience. No personal or detailed usage data is tracked.

This is a Next.js 14 app. Tailwind CSS is used for styling, Zustand serves as the state management library, ONNX Runtime runs the AI models, and Tone.js handles audio playback of the composed chord progressions.

Distributed under the MIT License. See LICENSE for more information.

Alternative AI tools for chord-seq-ai-app

Similar Open Source Tools

ultimate-rvc

Ultimate RVC is an extension of AiCoverGen, offering new features and improvements for generating audio content using RVC. It is designed for users looking to integrate singing functionality into AI assistants/chatbots/vtubers, create character voices for songs or books, and train voice models. The tool provides easy setup, voice conversion enhancements, TTS functionality, voice model training suite, caching system, UI improvements, and support for custom configurations. It is available for local and Google Colab use, with a PyPI package for easy access. The tool also offers CLI usage and customization through environment variables.

obsidian-companion

Companion is an Obsidian plugin that adds an AI-powered autocomplete feature to your note-taking and personal knowledge management platform. With Companion, you can write notes more quickly and easily by receiving suggestions for completing words, phrases, and even entire sentences based on the context of your writing. The autocomplete feature uses OpenAI's state-of-the-art GPT-3 and GPT-3.5, including ChatGPT, and locally hosted Ollama models, among others, to generate smart suggestions that are tailored to your specific writing style and preferences. Support for more models is planned, too.

lfai-landscape

LF AI & Data Landscape is a map to explore open source projects in the AI & Data domains, highlighting companies that are members of LF AI & Data. It showcases members of the Foundation and is modelled after the Cloud Native Computing Foundation landscape. The landscape includes current version, interactive version, new entries, logos, proper SVGs, corrections, external data, best practices badge, non-updated items, license, formats, installation, vulnerability reporting, and adjusting the landscape view.

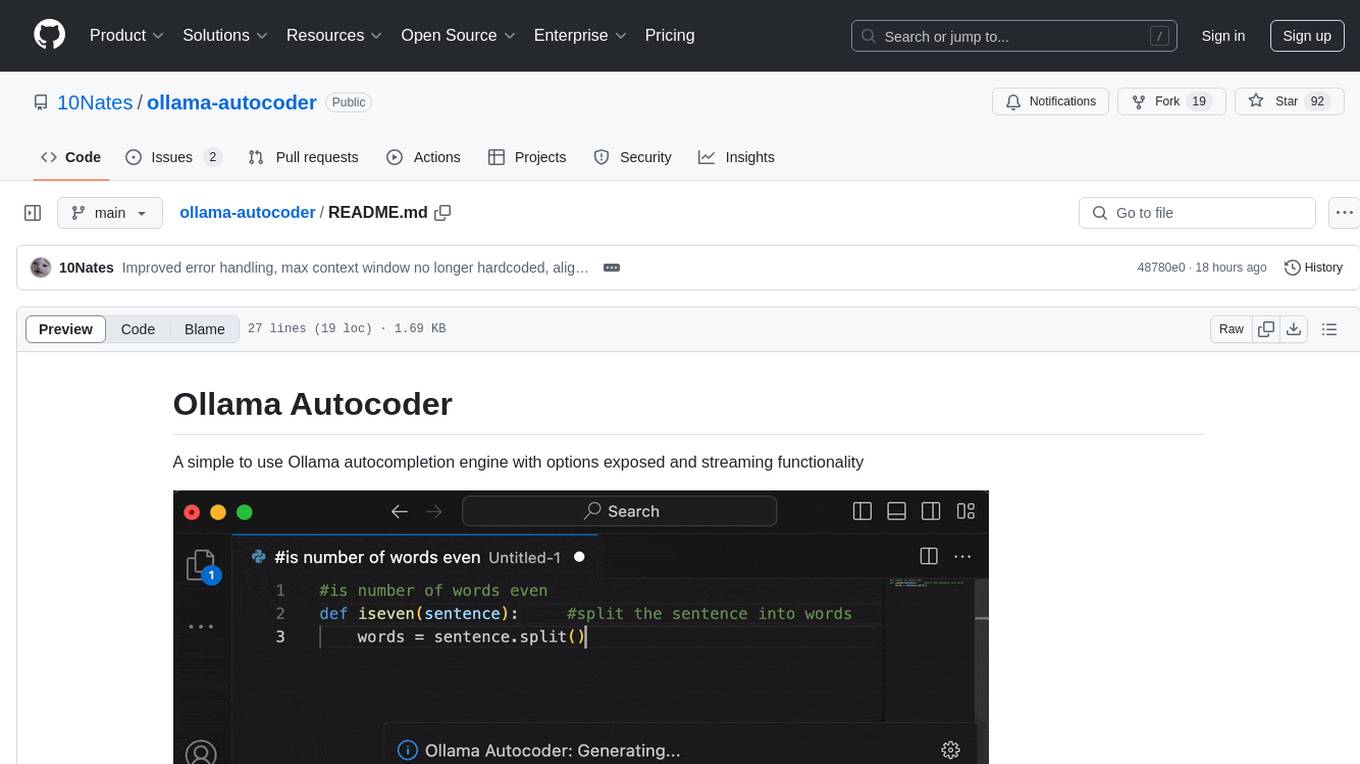

ollama-autocoder

Ollama Autocoder is a simple-to-use autocompletion engine that integrates with Ollama AI. It provides options for streaming functionality and requires specific settings for optimal performance. Users can easily generate text completions by pressing a key or using a command palette. The tool is designed to work with Ollama API and a specified model, offering real-time generation of text suggestions.

brokk

Brokk is a code assistant designed to understand code semantically, allowing LLMs to work effectively on large codebases. It offers features like agentic search, summarizing related classes, parsing stack traces, adding source for usages, and autonomously fixing errors. Users can interact with Brokk through different panels and commands, enabling them to manipulate context, ask questions, search codebase, run shell commands, and more. Brokk helps with tasks like debugging regressions, exploring codebase, AI-powered refactoring, and working with dependencies. It is particularly useful for making complex, multi-file edits with o1pro.

lumigator

Lumigator is an open-source platform developed by Mozilla.ai to help users select the most suitable language model for their specific needs. It supports the evaluation of summarization tasks using sequence-to-sequence models such as BART and BERT, as well as causal models like GPT and Mistral. The platform aims to make model selection transparent, efficient, and empowering by providing a framework for comparing LLMs using task-specific metrics to evaluate how well a model fits a project's needs. Lumigator is in the early stages of development and plans to expand support to additional machine learning tasks and use cases in the future.

chaiNNer

ChaiNNer is a node-based image processing GUI aimed at making chaining image processing tasks easy and customizable. It gives users a high level of control over their processing pipeline and allows them to perform complex tasks by connecting nodes together. ChaiNNer is cross-platform, supporting Windows, MacOS, and Linux. It features an intuitive drag-and-drop interface, making it easy to create and modify processing chains. Additionally, ChaiNNer offers a wide range of nodes for various image processing tasks, including upscaling, denoising, sharpening, and color correction. It also supports batch processing, allowing users to process multiple images or videos at once.

AppAgent

AppAgent is a novel LLM-based multimodal agent framework designed to operate smartphone applications. Our framework enables the agent to operate smartphone applications through a simplified action space, mimicking human-like interactions such as tapping and swiping. This novel approach bypasses the need for system back-end access, thereby broadening its applicability across diverse apps. Central to our agent's functionality is its innovative learning method. The agent learns to navigate and use new apps either through autonomous exploration or by observing human demonstrations. This process generates a knowledge base that the agent refers to for executing complex tasks across different applications.

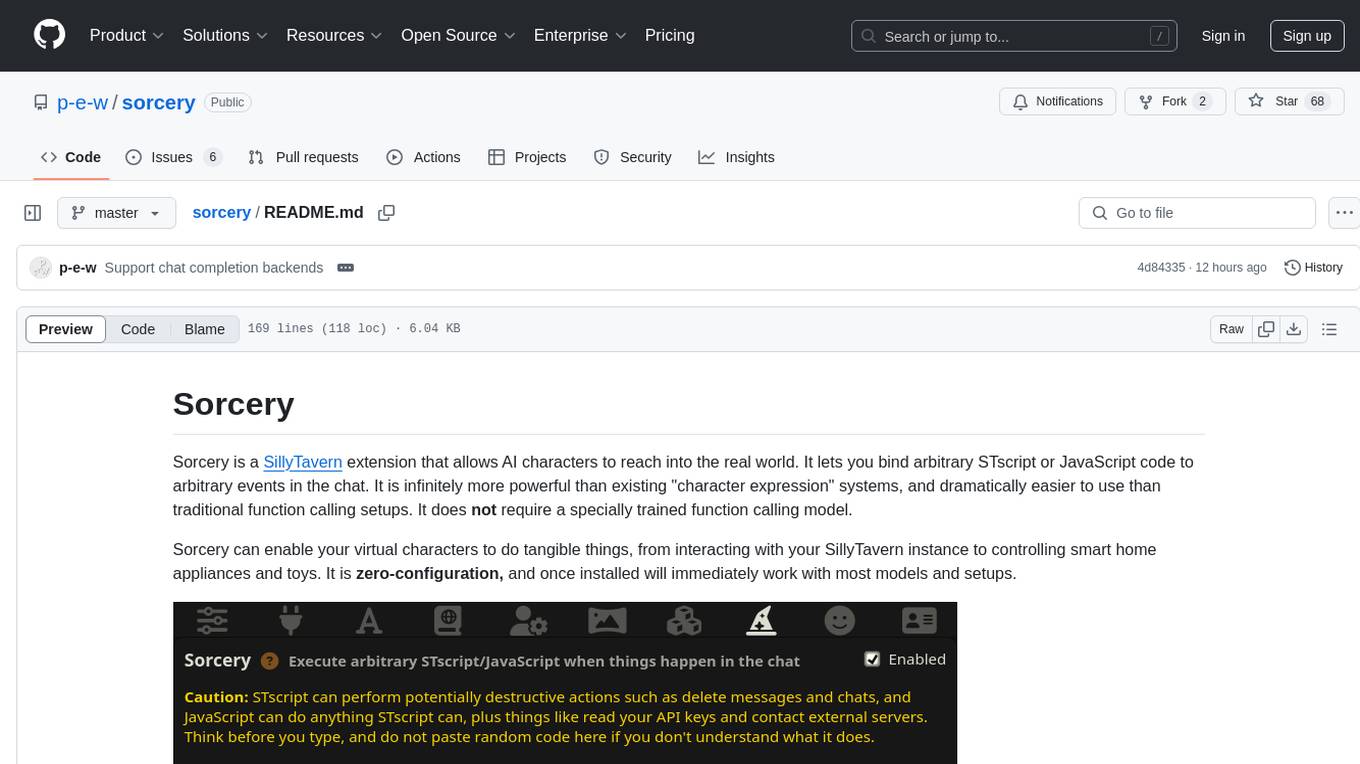

sorcery

Sorcery is a SillyTavern extension that allows AI characters to interact with the real world by executing user-defined scripts at specific events in the chat. It is easy to use and does not require a specially trained function calling model. Sorcery can be used to control smart home appliances, interact with virtual characters, and perform various tasks in the chat environment. It works by injecting instructions into the system prompt and intercepting markers to run associated scripts, providing a seamless user experience.

aitools_client

Seth's AI Tools is a Unity-based front-end that interfaces with various AI APIs to perform tasks such as generating Twine games, quizzes, posters, and more. The tool is a native Windows application that supports features like live update integration with image editors, text-to-image conversion, image processing, mask painting, and more. It allows users to connect to multiple servers for fast generation using GPUs and offers a neat workflow for evolving images in real-time. The tool respects user privacy by operating locally and includes built-in games and apps to test AI/SD capabilities. Additionally, it features an AI Guide for creating motivational posters and illustrated stories, as well as an Adventure mode with presets for generating web quizzes and Twine game projects.

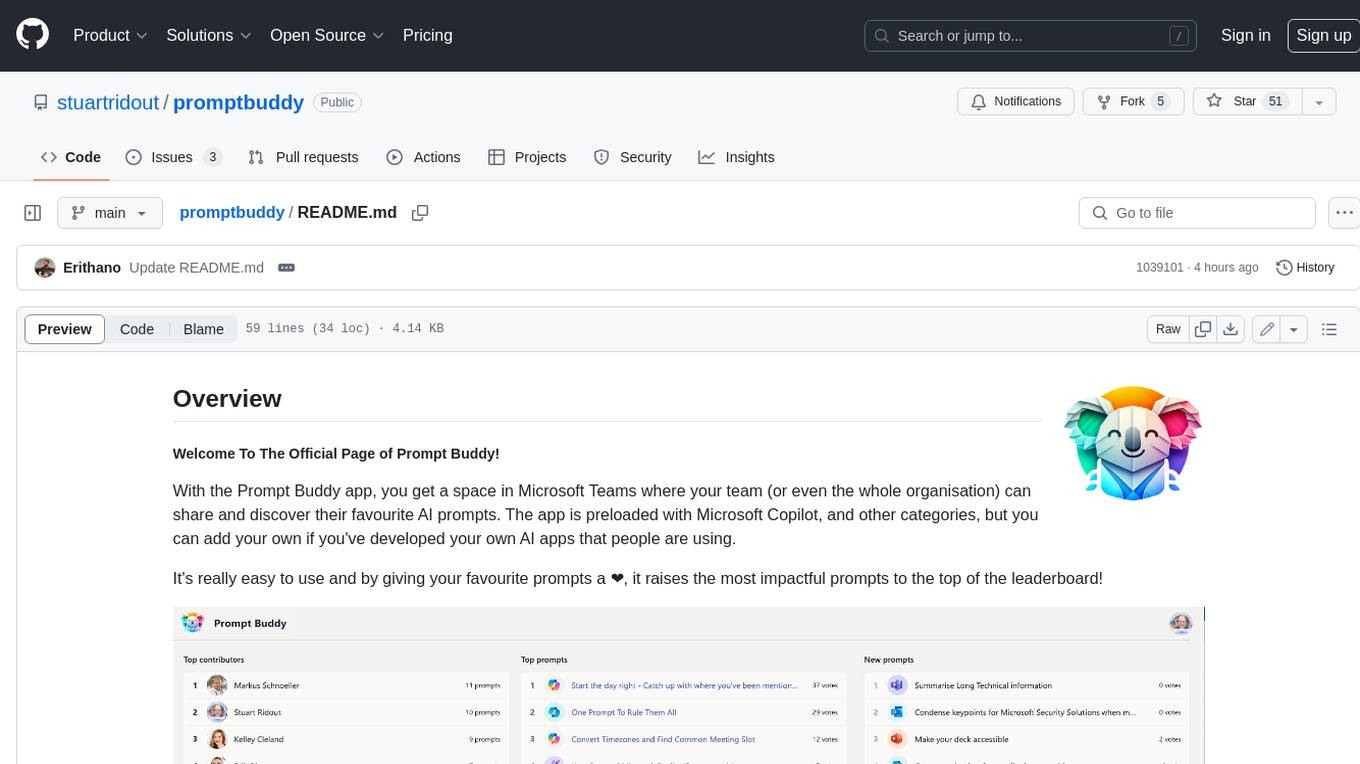

promptbuddy

Prompt Buddy is a Microsoft Teams app that provides a central location for teams to share and discover their favorite AI prompts. It comes preloaded with Microsoft Copilot and other categories, but users can also add their own custom prompts. The app is easy to use and allows users to upvote their favorite prompts, which raises them to the top of the leaderboard. Prompt Buddy also supports dark mode and offers a mobile layout for use on phones. It is built on the Power Platform and can be customized and extended by the installer.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

reverse-engineering-assistant

ReVA (Reverse Engineering Assistant) is a project aimed at building a disassembler agnostic AI assistant for reverse engineering tasks. It utilizes a tool-driven approach, providing small tools to the user to empower them in completing complex tasks. The assistant is designed to accept various inputs, guide the user in correcting mistakes, and provide additional context to encourage exploration. Users can ask questions, perform tasks like decompilation, class diagram generation, variable renaming, and more. ReVA supports different language models for online and local inference, with easy configuration options. The workflow involves opening the RE tool and program, then starting a chat session to interact with the assistant. Installation includes setting up the Python component, running the chat tool, and configuring the Ghidra extension for seamless integration. ReVA aims to enhance the reverse engineering process by breaking down actions into small parts, including the user's thoughts in the output, and providing support for monitoring and adjusting prompts.

LLocalSearch

LLocalSearch is a completely locally running search aggregator using LLM Agents. The user can ask a question and the system will use a chain of LLMs to find the answer. The user can see the progress of the agents and the final answer. No OpenAI or Google API keys are needed.

qlora-pipe

qlora-pipe is a pipeline parallel training script designed for efficiently training large language models that cannot fit on one GPU. It supports QLoRA, LoRA, and full fine-tuning, with efficient model loading and the ability to load any dataset that Axolotl can handle. The script allows for raw text training, resuming training from a checkpoint, logging metrics to Tensorboard, specifying a separate evaluation dataset, training on multiple datasets simultaneously, and supports various models like Llama, Mistral, Mixtral, Qwen-1.5, and Cohere (Command R). It handles pipeline- and data-parallelism using Deepspeed, enabling users to set the number of GPUs, pipeline stages, and gradient accumulation steps for optimal utilization.

For similar jobs

chord-seq-ai-app

ChordSeqAI Web App is a user-friendly interface for composing chord progressions using deep learning models. The app allows users to interact with suggestions, customize signatures, specify durations, select models and styles, transpose, import, export, and utilize chord variants. It supports keyboard shortcuts, automatic local saving, and playback features. The app is designed for desktop use and offers features for both beginners and advanced users in music composition.

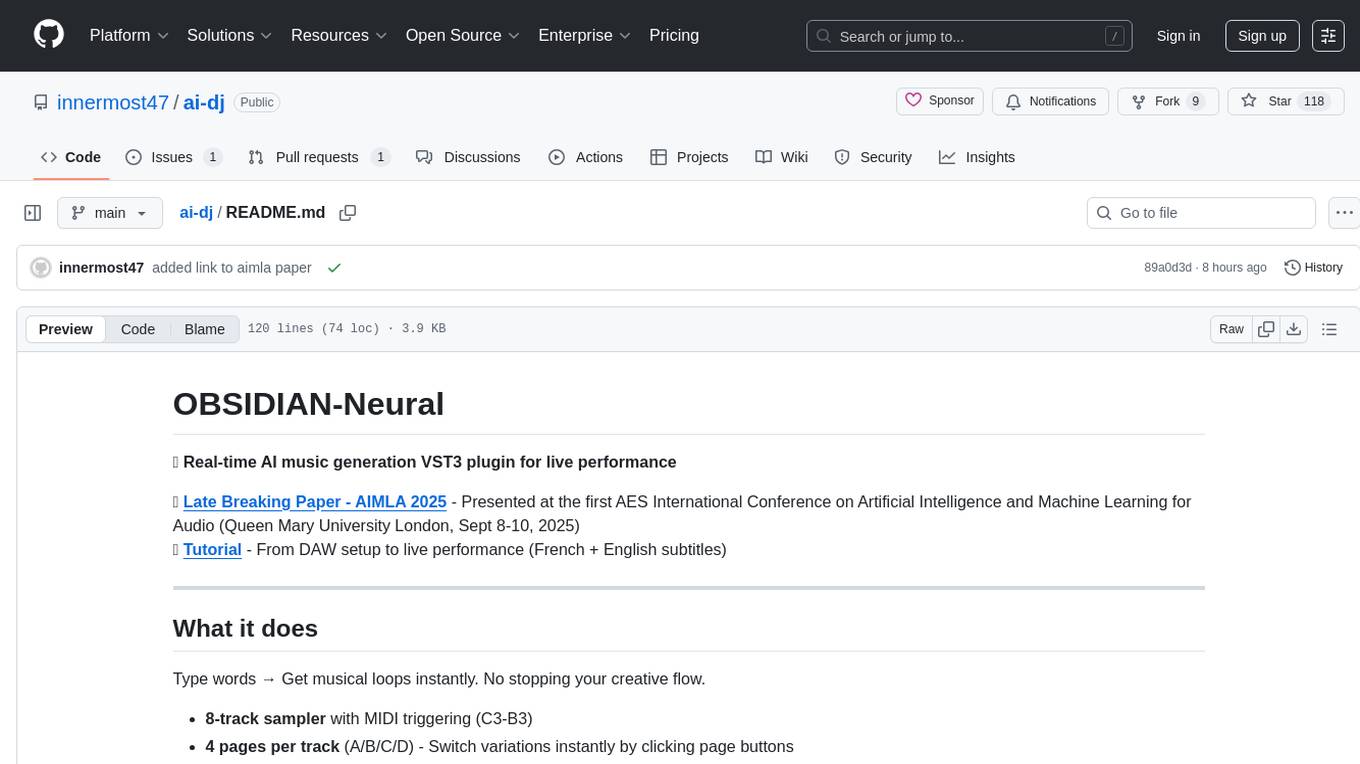

ai-dj

OBSIDIAN-Neural is a real-time AI music generation VST3 plugin designed for live performance. It allows users to type words and instantly receive musical loops, enhancing creative flow. The plugin features an 8-track sampler with MIDI triggering, 4 pages per track for easy variation switching, perfect DAW sync, real-time generation without pre-recorded samples, and stems separation for isolated drums, bass, and vocals. Users can generate music by typing specific keywords and trigger loops with MIDI while jamming. The tool offers different setups for server + GPU, local models for offline use, and a free API option with no setup required. OBSIDIAN-Neural is actively developed and has received over 110 GitHub stars, with ongoing updates and bug fixes. It is dual licensed under GNU Affero General Public License v3.0 and offers a commercial license option for interested parties.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

NSMusicS

NSMusicS is local music software that aims to support multiple platforms with AI capabilities and multimodal features. Its goal is to integrate various functions (such as artificial intelligence, streaming, music library management, and cross-platform support), making it similar to Navidrome but with more features. It aims to become a plugin-based application that covers almost all music functions.

RVC_CLI

**RVC_CLI: Retrieval-based Voice Conversion Command Line Interface**

This command-line interface (CLI) provides a comprehensive set of tools for voice conversion, enabling you to modify the pitch, timbre, and other characteristics of audio recordings. It leverages advanced machine learning models to achieve realistic and high-quality voice conversions.

**Key Features:**

* **Inference:** Convert the pitch and timbre of audio in real-time or process audio files in batch mode.
* **TTS Inference:** Synthesize speech from text using a variety of voices and apply voice conversion techniques.
* **Training:** Train custom voice conversion models to meet specific requirements.
* **Model Management:** Extract, blend, and analyze models to fine-tune and optimize performance.
* **Audio Analysis:** Inspect audio files to gain insights into their characteristics.
* **API:** Integrate the CLI's functionality into your own applications or workflows.

**Applications:**

The RVC_CLI finds applications in various domains, including:

* **Music Production:** Create unique vocal effects, harmonies, and backing vocals.
* **Voiceovers:** Generate voiceovers with different accents, emotions, and styles.
* **Audio Editing:** Enhance or modify audio recordings for podcasts, audiobooks, and other content.
* **Research and Development:** Explore and advance the field of voice conversion technology.

**For Jobs:**

* Audio Engineer
* Music Producer
* Voiceover Artist
* Audio Editor
* Machine Learning Engineer

**AI Keywords:**

* Voice Conversion
* Pitch Shifting
* Timbre Modification
* Machine Learning
* Audio Processing

**For Tasks:**

* Convert Pitch
* Change Timbre
* Synthesize Speech
* Train Model
* Analyze Audio

openvino-plugins-ai-audacity

OpenVINO™ AI Plugins for Audacity* are a set of AI-enabled effects, generators, and analyzers for Audacity®. These AI features run 100% locally on your PC -- no internet connection necessary! OpenVINO™ is used to run AI models on supported accelerators found on the user's system such as CPU, GPU, and NPU.

* **Music Separation**: Separate a mono or stereo track into individual stems -- Drums, Bass, Vocals, & Other Instruments.
* **Noise Suppression**: Removes background noise from an audio sample.
* **Music Generation & Continuation**: Uses MusicGen LLM to generate snippets of music, or to generate a continuation of an existing snippet of music.
* **Whisper Transcription**: Uses whisper.cpp to generate a label track containing the transcription or translation for a given selection of spoken audio or vocals.

WavCraft

WavCraft is an LLM-driven agent for audio content creation and editing. It uses an LLM to connect various audio expert models and DSP functions. With WavCraft, users can edit the content of given audio clip(s) conditioned on text input, create an audio clip from text input, get inspiration by prompting a script setting and letting the model do the scriptwriting and create the sound, and check whether an audio file was synthesized by WavCraft.

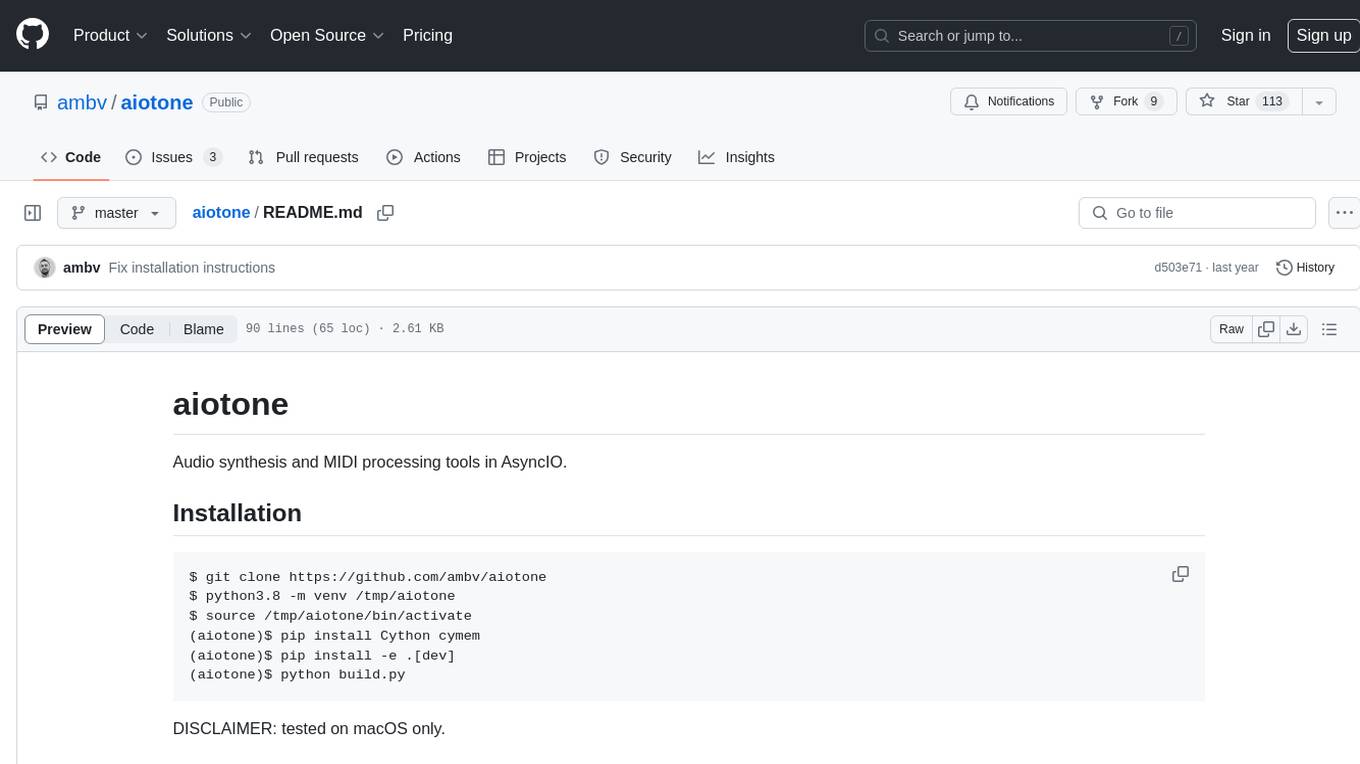

aiotone

Aiotone is a repository containing audio synthesis and MIDI processing tools in AsyncIO. It includes a work-in-progress polyphonic 4-operator FM synthesizer, tools for performing on Moog Mother 32 synthesizers, sequencing Novation Circuit and Novation Circuit Mono Station, and self-generating sequences for Moog Mother 32 synthesizers and Moog Subharmonicon. The tools are designed for real-time audio processing and MIDI control, with features like polyphony, modulation, and sequencing. The repository provides examples and tutorials for using the tools in music production and live performances.