generator

CTX: a tool that solves the context management gap when working with LLMs like ChatGPT or Claude. It helps developers organize and automatically collect information from their codebase into structured documents that can be easily shared with AI assistants.

Stars: 218

ctx is a tool designed to automatically generate organized context files from code files, GitHub repositories, Git commits, web pages, and plain text. It aims to efficiently provide necessary context to AI language models like ChatGPT and Claude, enabling users to streamline code refactoring, multiple iteration development, documentation generation, and seamless AI integration. With ctx, users can create structured markdown documents, save context files, and serve context through an MCP server for real-time assistance. The tool simplifies the process of sharing project information with AI assistants, making AI conversations smarter and easier.

README:

Create LLM-ready contexts in minutes

During development, your codebase constantly evolves. Files are added, modified, and removed. Each time you need to continue working with an LLM, you need to regenerate context to provide updated information about your current codebase state.

CTX is a context management tool that gives developers full control over what AI sees from their codebase. Instead of letting AI tools guess what's relevant, you define exactly what context to provide - making your AI-assisted development more predictable, secure, and efficient.

It helps developers organize contexts and automatically collect information from their codebase into structured documents that can be easily shared with LLM.

For example, a developer describes what context they need:

# context.yaml

documents:

- description: User Authentication System

outputPath: auth.md

sources:

- type: file

description: Authentication Controllers

sourcePaths:

- src/Auth

filePattern: "*.php"

- type: file

description: Authentication Models

sourcePaths:

- src/Models

filePattern: "*User*.php"

- description: Another Document

outputPath: another-document.md

sources:

- type: file

sourcePaths:

- src/SomeModuleThis configuration will gather all PHP files from the src/Auth directory and any PHP files containing "User" in

their name from the src/Models directory into a single context file .context/auth.md. This file can then be pasted

into a chat session or provided via the built-in MCP server.

Current AI coding tools automatically scan your entire codebase, which creates several issues:

- Security risk: Your sensitive files (env vars, tokens, private code) get uploaded to cloud services

- Context dilution: AI gets overwhelmed with irrelevant code, reducing output quality

- No control: You can't influence what the AI considers when generating responses

- Expensive: Premium tools charge based on how much they scan, not how much you actually need

You know your code better than any AI. CTX puts you in control:

- ✅ Define exactly what context to share - no more, no less

- ✅ Keep sensitive data local - works with local LLMs or carefully curated cloud contexts

- ✅ Generate reusable, shareable contexts - commit configurations to your repo

- ✅ Improve code architecture - designing for AI context windows naturally leads to better modular code

- ✅ Works with any LLM - Claude, ChatGPT, local models, or future tools

Download and install the tool using our installation script:

curl -sSL https://raw.githubusercontent.com/context-hub/generator/main/download-latest.sh | shThis installs the ctx command to your system (typically in /usr/local/bin).

Want more options? See the complete Installation Guide for alternative installation methods.

- Initialize your project:

cd your-project

ctx initThis generates a context.yaml file with a basic configuration and shows your project structure, helping you understand

what contexts might be useful.

Check the Command Reference for all available commands and options.

- Create your first context:

ctx generate- Use with your favorite AI:

- Copy the generated markdown files to your AI chat

- Or use the built-in MCP server with Claude Desktop

- Or process locally with open-source models

# Quick project overview for new developers

documents:

- description: "Project Architecture Overview"

outputPath: "docs/architecture.md"

sources:

- type: tree

sourcePaths: [ "src" ]

maxDepth: 2

- type: file

description: "Core interfaces and main classes"

sourcePaths: [ "src" ]

filePattern: "*Interface.php"# Context for developing a new feature

documents:

- description: "User Authentication System"

outputPath: "contexts/auth-context.md"

sources:

- type: file

sourcePaths: [ "src/Auth", "src/Models" ]

filePattern: "*.php"

- type: git_diff

description: "Recent auth changes"

commit: "last-week"# Generate API documentation

documents:

- description: "API Documentation"

outputPath: "docs/api.md"

sources:

- type: file

sourcePaths: [ "src/Controllers" ]

modifiers: [ "php-signature" ]

contains: [ "@Route", "@Api" ]- Define exactly which files, directories, or code patterns to include

- Filter by content, file patterns, date ranges, or size

- Apply modifiers to extract only relevant parts (e.g., function signatures)

- Local-first: Generate contexts locally, choose what to share

- No automatic uploads: Unlike tools that scan everything, you control what gets sent

- Works with local models: Use completely offline with Ollama, LM Studio, etc.

- Context configurations are part of your project

- Team members get the same contexts

- Evolve contexts as your codebase changes

- Include git diffs to show recent changes

- Fast: Generate contexts in seconds, not minutes of manual copying

- Flexible: Works with any AI tool or local model

- Shareable: Commit configurations, share with team

- Extensible: Plugin system for custom sources and modifiers

CTX follows a simple pipeline:

Configuration → Sources → Filters → Modifiers → Output

- Sources: Where to get content (files, GitHub, git diffs, URLs, etc.)

- Filters: What to include/exclude (patterns, content, dates, sizes)

- Modifiers: How to transform content (extract signatures, remove comments)

- Output: Structured markdown ready for AI consumption

For a more seamless experience, you can connect Context Generator directly to Claude AI using the MCP server.

# Auto-detect OS and generate configuration

ctx mcp:configThis command:

- 🔍 Auto-detects your OS (Windows, Linux, macOS, WSL)

- 🎯 Generates the right config for your environment

- 📋 Provides copy-paste ready JSON for Claude Desktop

- 🧭 Includes setup instructions and troubleshooting tips

Global Registry Mode (recommended for multiple projects):

{

"mcpServers": {

"ctx": {

"command": "ctx",

"args": [

"server"

]

}

}

}If you prefer manual setup, point the MCP client to the Context Generator server:

{

"mcpServers": {

"ctx": {

"command": "ctx",

"args": [

"server",

"-c",

"/path/to/project"

]

}

}

}Note: Read more about MCP Server for detailed setup instructions and troubleshooting.

Now you can ask Claude questions about your codebase without manually uploading context files!

Define project-specific commands that can be executed through the MCP interface:

tools:

- id: run-tests

description: "Run project tests with coverage"

type: run

commands:

- cmd: npm

args: [ "test", "--coverage" ]For complete documentation, including all available features and configuration options, please visit:

Join hundreds of developers using CTX for professional AI-assisted coding:

What you'll find in our Discord:

- 💡 Share and discover context configurations

- 🛠️ Get help with setup and advanced usage

- 🚀 Showcase your AI development workflows

- 🤝 Connect with like-minded developers

- 📢 First to know about new releases and features

This project is licensed under the MIT License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for generator

Similar Open Source Tools

generator

ctx is a tool designed to automatically generate organized context files from code files, GitHub repositories, Git commits, web pages, and plain text. It aims to efficiently provide necessary context to AI language models like ChatGPT and Claude, enabling users to streamline code refactoring, multiple iteration development, documentation generation, and seamless AI integration. With ctx, users can create structured markdown documents, save context files, and serve context through an MCP server for real-time assistance. The tool simplifies the process of sharing project information with AI assistants, making AI conversations smarter and easier.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

nanocoder

Nanocoder is a local-first CLI coding agent that supports multiple AI providers with tool support for file operations and command execution. It focuses on privacy and control, allowing users to code locally with AI tools. The tool is designed to bring the power of agentic coding tools to local models or controlled APIs like OpenRouter, promoting community-led development and inclusive collaboration in the AI coding space.

agentpress

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. It includes core components for managing threads, registering tools, processing responses, state management, and utilizing LLMs. The tool provides a modular architecture for handling messages, LLM API calls, response processing, tool execution, and results management. Users can easily set up the environment, create custom tools with OpenAPI or XML schema, and manage conversation threads with real-time interaction. AgentPress aims to be agnostic, simple, and flexible, allowing users to customize and extend functionalities as needed.

fast-mcp

Fast MCP is a Ruby gem that simplifies the integration of AI models with your Ruby applications. It provides a clean implementation of the Model Context Protocol, eliminating complex communication protocols, integration challenges, and compatibility issues. With Fast MCP, you can easily connect AI models to your servers, share data resources, choose from multiple transports, integrate with frameworks like Rails and Sinatra, and secure your AI-powered endpoints. The gem also offers real-time updates and authentication support, making AI integration a seamless experience for developers.

Groqqle

Groqqle 2.1 is a revolutionary, free AI web search and API that instantly returns ORIGINAL content derived from source articles, websites, videos, and even foreign language sources, for ANY target market of ANY reading comprehension level! It combines the power of large language models with advanced web and news search capabilities, offering a user-friendly web interface, a robust API, and now a powerful Groqqle_web_tool for seamless integration into your projects. Developers can instantly incorporate Groqqle into their applications, providing a powerful tool for content generation, research, and analysis across various domains and languages.

hayhooks

Hayhooks is a tool that simplifies the deployment and serving of Haystack pipelines as REST APIs. It allows users to wrap their pipelines with custom logic and expose them via HTTP endpoints, including OpenAI-compatible chat completion endpoints. With Hayhooks, users can easily convert their Haystack pipelines into API services with minimal boilerplate code.

docs-mcp-server

The docs-mcp-server repository contains the server-side code for the documentation management system. It provides functionalities for managing, storing, and retrieving documentation files. Users can upload, update, and delete documents through the server. The server also supports user authentication and authorization to ensure secure access to the documentation system. Additionally, the server includes APIs for integrating with other systems and tools, making it a versatile solution for managing documentation in various projects and organizations.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

g4f.dev

G4f.dev is the official documentation hub for GPT4Free, a free and convenient AI tool with endpoints that can be integrated directly into apps, scripts, and web browsers. The documentation provides clear overviews, quick examples, and deeper insights into the major features of GPT4Free, including text and image generation. Users can choose between Python and JavaScript for installation and setup, and can access various API endpoints, providers, models, and client options for different tasks.

CyberStrikeAI

CyberStrikeAI is an AI-native security testing platform built in Go that integrates 100+ security tools, an intelligent orchestration engine, role-based testing with predefined security roles, a skills system with specialized testing skills, and comprehensive lifecycle management capabilities. It enables end-to-end automation from conversational commands to vulnerability discovery, attack-chain analysis, knowledge retrieval, and result visualization, delivering an auditable, traceable, and collaborative testing environment for security teams. The platform features an AI decision engine with OpenAI-compatible models, native MCP implementation with various transports, prebuilt tool recipes, large-result pagination, attack-chain graph, password-protected web UI, knowledge base with vector search, vulnerability management, batch task management, role-based testing, and skills system.

uLoopMCP

uLoopMCP is a Unity integration tool designed to let AI drive your Unity project forward with minimal human intervention. It provides a 'self-hosted development loop' where an AI can compile, run tests, inspect logs, and fix issues using tools like compile, run-tests, get-logs, and clear-console. It also allows AI to operate the Unity Editor itself—creating objects, calling menu items, inspecting scenes, and refining UI layouts from screenshots via tools like execute-dynamic-code, execute-menu-item, and capture-window. The tool enables AI-driven development loops to run autonomously inside existing Unity projects.

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

effective_llm_alignment

This is a super customizable, concise, user-friendly, and efficient toolkit for training and aligning LLMs. It provides support for various methods such as SFT, Distillation, DPO, ORPO, CPO, SimPO, SMPO, Non-pair Reward Modeling, Special prompts basket format, Rejection Sampling, Scoring using RM, Effective FAISS Map-Reduce Deduplication, LLM scoring using RM, NER, CLIP, Classification, and STS. The toolkit offers key libraries like PyTorch, Transformers, TRL, Accelerate, FSDP, DeepSpeed, and tools for result logging with wandb or clearml. It allows mixing datasets, generation and logging in wandb/clearml, vLLM batched generation, and aligns models using the SMPO method.

code_puppy

Code Puppy is an AI-powered code generation agent designed to understand programming tasks, generate high-quality code, and explain its reasoning. It supports multi-language code generation, interactive CLI, and detailed code explanations. The tool requires Python 3.9+ and API keys for various models like GPT, Google's Gemini, Cerebras, and Claude. It also integrates with MCP servers for advanced features like code search and documentation lookups. Users can create custom JSON agents for specialized tasks and access a variety of tools for file management, code execution, and reasoning sharing.

For similar tasks

generator

ctx is a tool designed to automatically generate organized context files from code files, GitHub repositories, Git commits, web pages, and plain text. It aims to efficiently provide necessary context to AI language models like ChatGPT and Claude, enabling users to streamline code refactoring, multiple iteration development, documentation generation, and seamless AI integration. With ctx, users can create structured markdown documents, save context files, and serve context through an MCP server for real-time assistance. The tool simplifies the process of sharing project information with AI assistants, making AI conversations smarter and easier.

pr-agent

PR-Agent is a tool that helps to efficiently review and handle pull requests by providing AI feedbacks and suggestions. It supports various commands such as generating PR descriptions, providing code suggestions, answering questions about the PR, and updating the CHANGELOG.md file. PR-Agent can be used via CLI, GitHub Action, GitHub App, Docker, and supports multiple git providers and models. It emphasizes real-life practical usage, with each tool having a single GPT-4 call for quick and affordable responses. The PR Compression strategy enables effective handling of both short and long PRs, while the JSON prompting strategy allows for modular and customizable tools. PR-Agent Pro, the hosted version by CodiumAI, provides additional benefits such as full management, improved privacy, priority support, and extra features.

shell_gpt

ShellGPT is a command-line productivity tool powered by AI large language models (LLMs). This command-line tool offers streamlined generation of shell commands, code snippets, documentation, eliminating the need for external resources (like Google search). Supports Linux, macOS, Windows and compatible with all major Shells like PowerShell, CMD, Bash, Zsh, etc.

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

sirji

Sirji is an agentic AI framework for software development where various AI agents collaborate via a messaging protocol to solve software problems. It uses standard or user-generated recipes to list tasks and tips for problem-solving. Agents in Sirji are modular AI components that perform specific tasks based on custom pseudo code. The framework is currently implemented as a Visual Studio Code extension, providing an interactive chat interface for problem submission and feedback. Sirji sets up local or remote development environments by installing dependencies and executing generated code.

awesome-ai-devtools

Awesome AI-Powered Developer Tools is a curated list of AI-powered developer tools that leverage AI to assist developers in tasks such as code completion, refactoring, debugging, documentation, and more. The repository includes a wide range of tools, from IDEs and Git clients to assistants, agents, app generators, UI generators, snippet generators, documentation tools, code generation tools, agent platforms, OpenAI plugins, search tools, and testing tools. These tools are designed to enhance developer productivity and streamline various development tasks by integrating AI capabilities.

doc-comments-ai

doc-comments-ai is a tool designed to automatically generate code documentation using language models. It allows users to easily create documentation comment blocks for methods in various programming languages such as Python, Typescript, Javascript, Java, Rust, and more. The tool supports both OpenAI and local LLMs, ensuring data privacy and security. Users can generate documentation comments for methods in files, inline comments in method bodies, and choose from different models like GPT-3.5-Turbo, GPT-4, and Azure OpenAI. Additionally, the tool provides support for Treesitter integration and offers guidance on selecting the appropriate model for comprehensive documentation needs.

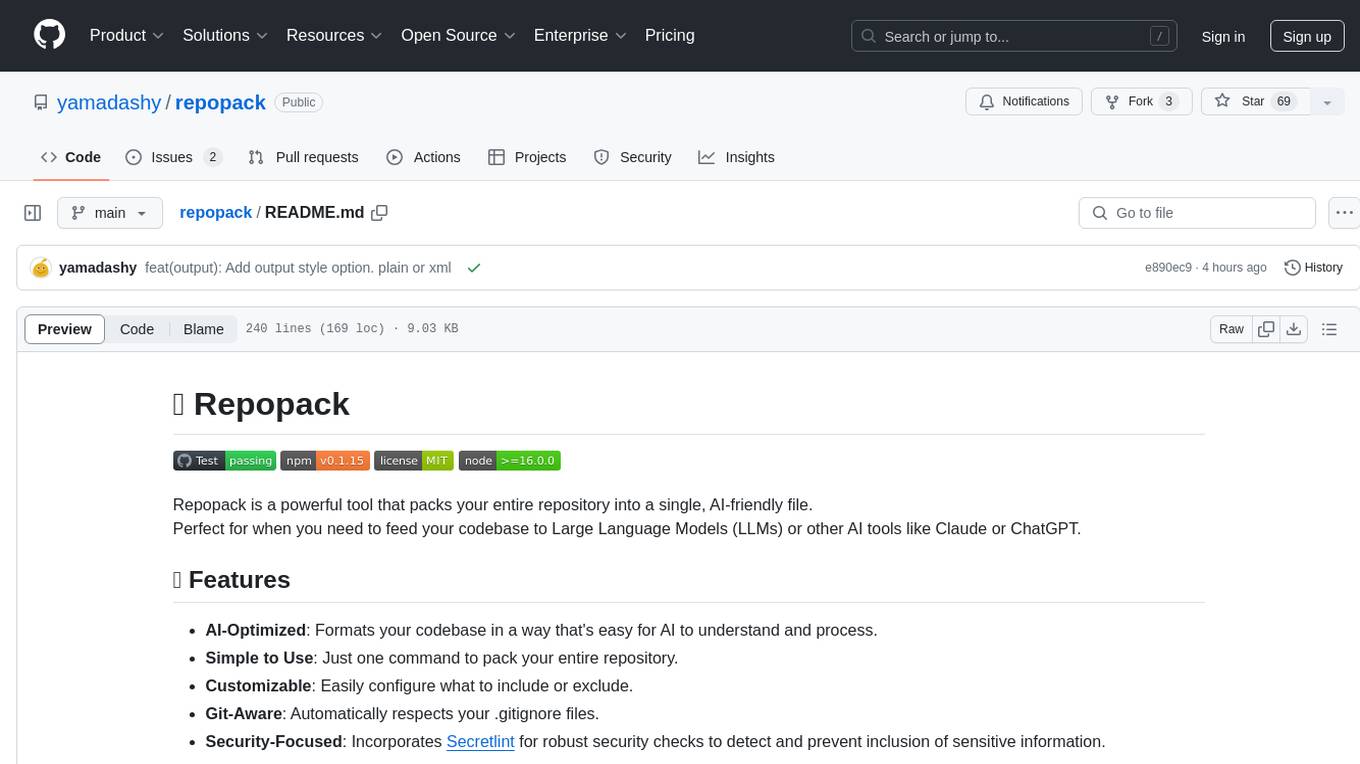

repopack

Repopack is a powerful tool that packs your entire repository into a single, AI-friendly file. It optimizes your codebase for AI comprehension, is simple to use with customizable options, and respects Gitignore files for security. The tool generates a packed file with clear separators and AI-oriented explanations, making it ideal for use with Generative AI tools like Claude or ChatGPT. Repopack offers command line options, configuration settings, and multiple methods for setting ignore patterns to exclude specific files or directories during the packing process. It includes features like comment removal for supported file types and a security check using Secretlint to detect sensitive information in files.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.