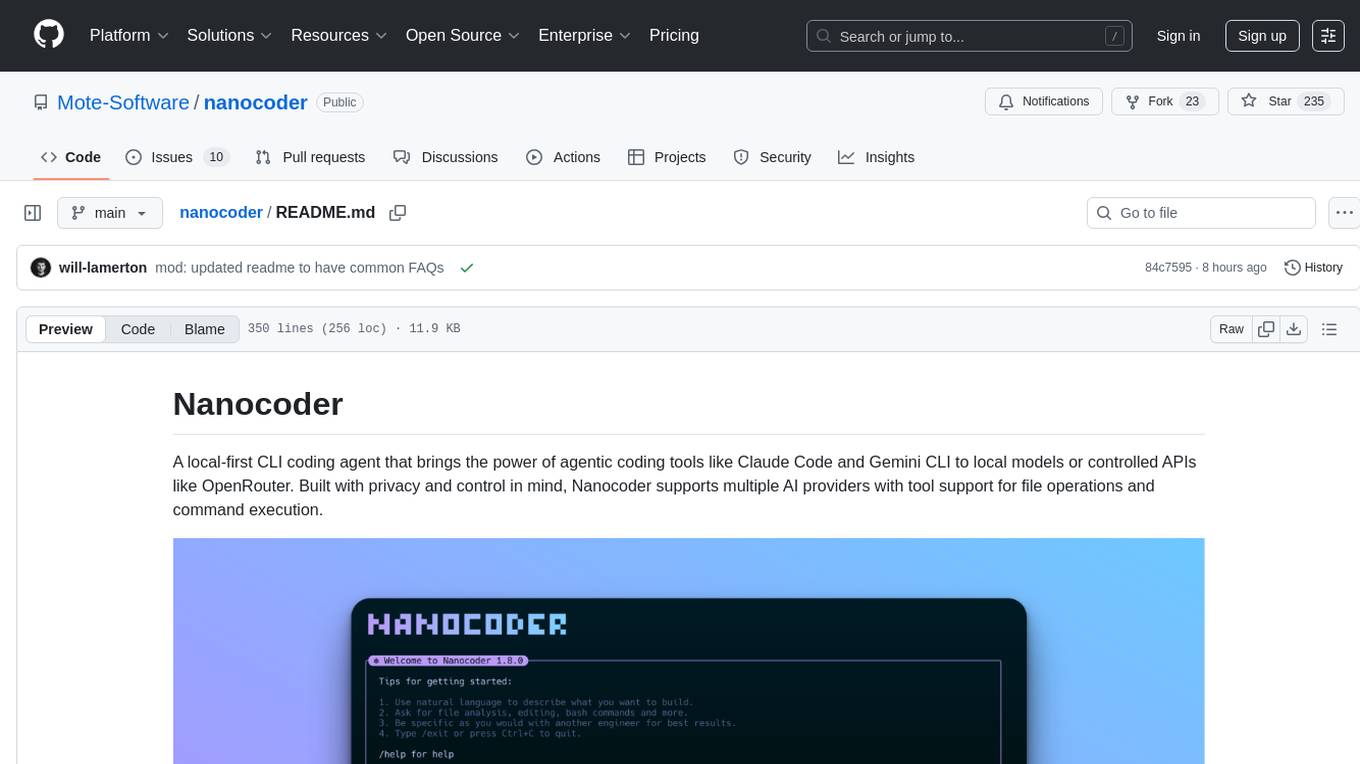

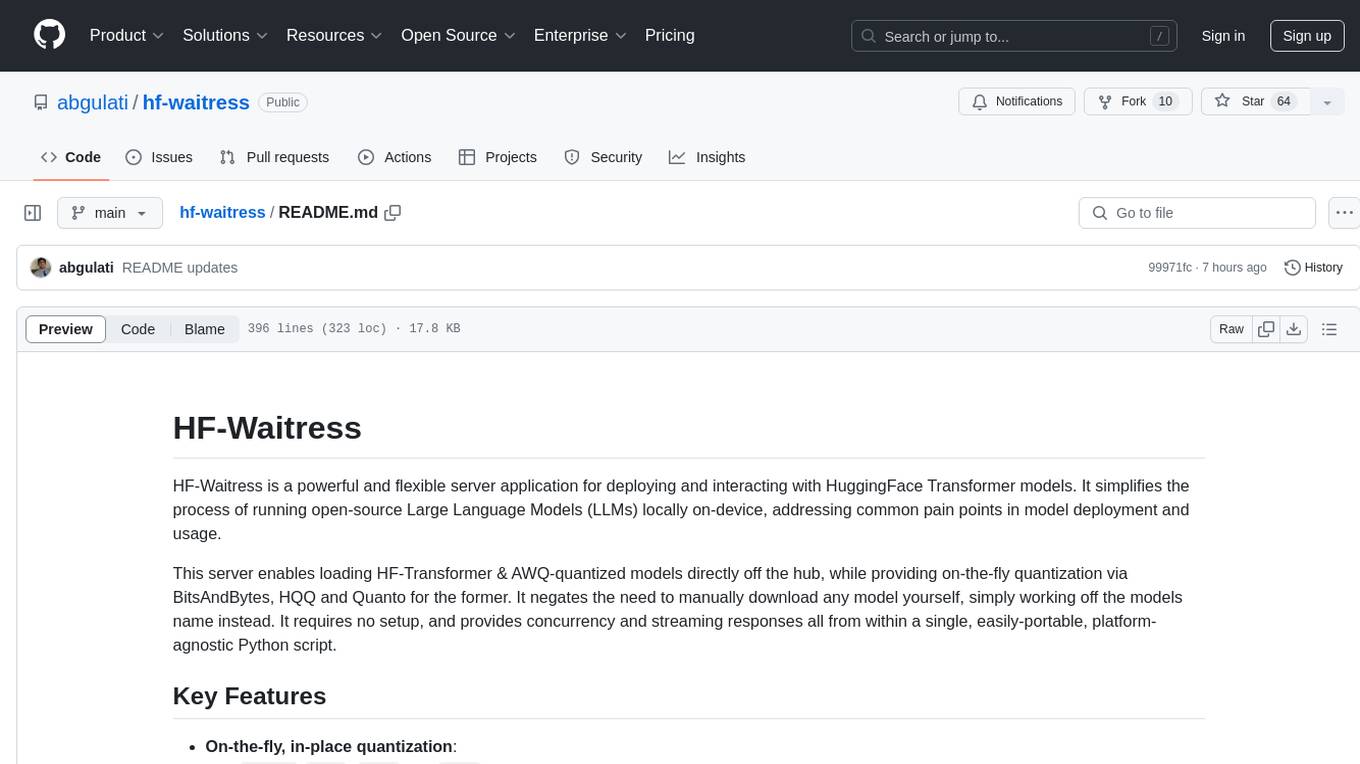

nanocoder

A beautiful local-first coding agent running in your terminal - built by the community for the community ⚒

Stars: 422

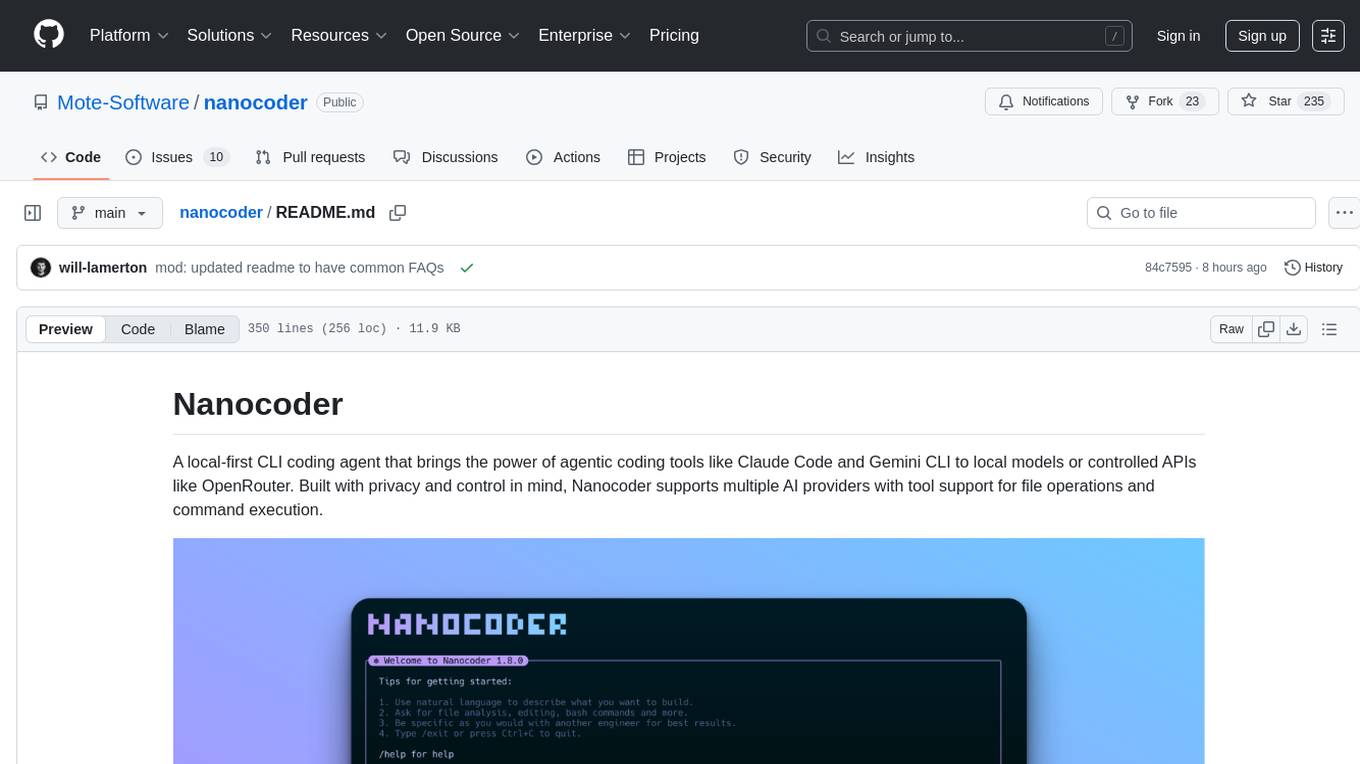

Nanocoder is a local-first CLI coding agent that supports multiple AI providers with tool support for file operations and command execution. It focuses on privacy and control, allowing users to code locally with AI tools. The tool is designed to bring the power of agentic coding tools to local models or controlled APIs like OpenRouter, promoting community-led development and inclusive collaboration in the AI coding space.

README:

A local-first CLI coding agent that brings the power of agentic coding tools like Claude Code and Gemini CLI to local models or controlled APIs like OpenRouter. Built with privacy and control in mind, Nanocoder supports multiple AI providers with tool support for file operations and command execution.

Nanocoder is a local-first CLI coding agent that brings the power of agentic coding tools like Claude Code and Gemini CLI to local models or controlled APIs like OpenRouter. Built with privacy and control in mind, Nanocoder supports any AI provider that has an OpenAI compatible end-point, tool and non-tool calling models.

This comes down to philosophy. OpenCode is a great tool, but it's owned and managed by a venture-backed company that restricts community and open-source involvement to the outskirts. With Nanocoder, the focus is on building a true community-led project where anyone can contribute openly and directly. We believe AI is too powerful to be in the hands of big corporations and everyone should have access to it.

We also strongly believe in the "local-first" approach, where your data, models, and processing stay on your machine whenever possible to ensure maximum privacy and user control. Beyond that, we're actively pushing to develop advancements and frameworks for small, local models to be effective at coding locally.

Not everyone will agree with this philosophy, and that's okay. We believe in fostering an inclusive community that's focused on open collaboration and privacy-first AI coding tools.

Firstly, we would love for you to be involved. You can get started contributing to Nanocoder in several ways; check out the Community section of this README.

Install globally and use anywhere:

`npm install -g @motesoftware/nanocoder`

Then run in any directory:

`nanocoder`

If you want to contribute or modify Nanocoder:

Prerequisites:

- Node.js 18+

- npm

Setup:

- Clone and install dependencies:

  `git clone [repo-url]`

  `cd nanocoder`

  `npm install`

- Build the project:

  `npm run build`

- Run locally:

  `npm run start`

Or build and run in one command:

`npm run dev`

Nanocoder supports any OpenAI-compatible API through a unified provider configuration. Create `agents.config.json` in your working directory (where you run `nanocoder`):

    {
      "nanocoder": {
        "providers": [
          {
            "name": "llama-cpp",
            "baseUrl": "http://localhost:8080/v1",
            "models": ["qwen3-coder:a3b", "deepseek-v3.1"]
          },
          {
            "name": "Ollama",
            "baseUrl": "http://localhost:11434/v1",
            "models": ["qwen2.5-coder:14b", "llama3.2"]
          },
          {
            "name": "OpenRouter",
            "baseUrl": "https://openrouter.ai/api/v1",
            "apiKey": "your-openrouter-api-key",
            "models": ["openai/gpt-4o-mini", "anthropic/claude-3-haiku"]
          },
          {
            "name": "LM Studio",
            "baseUrl": "http://localhost:1234/v1",
            "models": ["local-model"]
          }
        ]
      }
    }

Common Provider Examples:

- llama.cpp server: `"baseUrl": "http://localhost:8080/v1"`

- llama-swap: `"baseUrl": "http://localhost:9292/v1"`

- Ollama (Local):

  - First run: `ollama pull qwen2.5-coder:14b`

  - Use: `"baseUrl": "http://localhost:11434/v1"`

- OpenRouter (Cloud):

  - Use: `"baseUrl": "https://openrouter.ai/api/v1"`

  - Requires: `"apiKey": "your-api-key"`

- LM Studio: `"baseUrl": "http://localhost:1234/v1"`

- vLLM: `"baseUrl": "http://localhost:8000/v1"`

- LocalAI: `"baseUrl": "http://localhost:8080/v1"`

- OpenAI: `"baseUrl": "https://api.openai.com/v1"`

Provider Configuration:

- `name`: Display name used in the `/provider` command

- `baseUrl`: OpenAI-compatible API endpoint

- `apiKey`: API key (optional for local servers)

- `models`: Available model list for the `/model` command
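To make the role of each field concrete, here is a minimal sketch of how a provider entry could be turned into a chat request. It assumes the conventional OpenAI-compatible layout (a `POST` to `/chat/completions` under `baseUrl`, with the key sent as a `Bearer` token). The `Provider` type and `buildChatRequest` helper are hypothetical names for illustration and are not taken from Nanocoder's source.

```typescript
// Hypothetical type mirroring one "providers" entry in agents.config.json.
interface Provider {
  name: string;
  baseUrl: string;
  apiKey?: string; // optional for local servers
  models: string[];
}

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatRequest {
  url: string;
  headers: Record<string, string>;
  body: string;
}

// Build (but do not send) a chat completion request for an
// OpenAI-compatible server described by a provider entry.
function buildChatRequest(
  provider: Provider,
  model: string,
  messages: ChatMessage[]
): ChatRequest {
  if (!provider.models.includes(model)) {
    throw new Error(`Model "${model}" is not listed for provider "${provider.name}"`);
  }
  const headers: Record<string, string> = { "Content-Type": "application/json" };
  if (provider.apiKey) {
    headers["Authorization"] = `Bearer ${provider.apiKey}`;
  }
  // Strip trailing slashes so the joined URL has exactly one separator.
  const base = provider.baseUrl.replace(/\/+$/, "");
  return {
    url: `${base}/chat/completions`,
    headers,
    body: JSON.stringify({ model, messages }),
  };
}

const ollama: Provider = {
  name: "Ollama",
  baseUrl: "http://localhost:11434/v1",
  models: ["qwen2.5-coder:14b", "llama3.2"],
};
const request = buildChatRequest(ollama, "llama3.2", [
  { role: "user", content: "Hello" },
]);
console.log(request.url); // http://localhost:11434/v1/chat/completions
```

Because the local entry has no `apiKey`, no `Authorization` header is added, which matches the note that the key is optional for local servers.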

Nanocoder supports connecting to MCP servers to extend its capabilities with additional tools. Configure MCP servers in your agents.config.json:

    {
      "nanocoder": {
        "mcpServers": [
          {
            "name": "filesystem",
            "command": "npx",
            "args": [
              "@modelcontextprotocol/server-filesystem",
              "/path/to/allowed/directory"
            ]
          },
          {
            "name": "github",
            "command": "npx",
            "args": ["@modelcontextprotocol/server-github"],
            "env": {
              "GITHUB_TOKEN": "your-github-token"
            }
          },
          {
            "name": "custom-server",
            "command": "python",
            "args": ["path/to/server.py"],
            "env": {
              "API_KEY": "your-api-key"
            }
          }
        ]
      }
    }

When MCP servers are configured, Nanocoder will:

- Automatically connect to all configured servers on startup

- Make all server tools available to the AI model

- Show connected servers and their tools with the `/mcp` command
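As a rough illustration of what an `mcpServers` entry describes, the sketch below converts one entry into the pieces needed to launch a stdio server as a child process. The `McpServerConfig` and `LaunchSpec` types and the `toLaunchSpec` helper are hypothetical. How Nanocoder actually starts servers is not documented here, so treat the merge order (per-server `env` overriding inherited values) as an assumption.

```typescript
// Hypothetical type mirroring one "mcpServers" entry from the example config.
interface McpServerConfig {
  name: string;
  command: string;
  args?: string[];
  env?: Record<string, string>;
}

interface LaunchSpec {
  command: string;
  args: string[];
  env: Record<string, string | undefined>;
}

// Merge the server's own env over the parent environment so that
// per-server values such as GITHUB_TOKEN win over inherited ones.
function toLaunchSpec(
  server: McpServerConfig,
  parentEnv: Record<string, string | undefined>
): LaunchSpec {
  return {
    command: server.command,
    args: [...(server.args ?? [])],
    env: { ...parentEnv, ...(server.env ?? {}) },
  };
}

const githubSpec = toLaunchSpec(
  {
    name: "github",
    command: "npx",
    args: ["@modelcontextprotocol/server-github"],
    env: { GITHUB_TOKEN: "your-github-token" },
  },
  { PATH: "/usr/bin" }
);
console.log(githubSpec.command, githubSpec.args);
```

In Node, a spec like this could be passed to `child_process.spawn(spec.command, spec.args, { env: spec.env })`.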

Popular MCP servers:

- Filesystem: Enhanced file operations

- GitHub: Repository management

- Brave Search: Web search capabilities

- Memory: Persistent context storage

- View more MCP servers

Note: The `agents.config.json` file should be placed in the directory where you run Nanocoder, allowing for project-by-project configuration with different models or API keys per repository.

Nanocoder automatically saves your preferences to remember your choices across sessions. Preferences are stored in ~/.nanocoder-preferences.json in your home directory.

What gets saved automatically:

- Last provider used: The AI provider you last selected (by name from your configuration)

- Last model per provider: Your preferred model for each provider

- Session continuity: Automatically switches back to your preferred provider/model when restarting

How it works:

- When you switch providers with `/provider`, your choice is saved

- When you switch models with `/model`, the selection is saved for that specific provider

- Next time you start Nanocoder, it will use your last provider and model

- Each provider remembers its own preferred model independently
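The behaviour described above can be sketched as a small data structure with one remembered model per provider. The field names `lastProvider` and `lastModelByProvider` are hypothetical; the real layout of `~/.nanocoder-preferences.json` is not shown in this README and may differ.

```typescript
// Hypothetical shape; the actual preferences file may use other field names.
interface Preferences {
  lastProvider: string | undefined;
  lastModelByProvider: Record<string, string>;
}

// Record the provider chosen with /provider.
function selectProvider(prefs: Preferences, provider: string): Preferences {
  return { ...prefs, lastProvider: provider };
}

// Record the model chosen with /model, scoped to one provider.
function selectModel(prefs: Preferences, provider: string, model: string): Preferences {
  return {
    ...prefs,
    lastModelByProvider: { ...prefs.lastModelByProvider, [provider]: model },
  };
}

// Resolve what to start with: the last provider and that provider's own model.
function resolveStartup(
  prefs: Preferences
): { provider: string | undefined; model: string | undefined } {
  const provider = prefs.lastProvider;
  const model = provider === undefined ? undefined : prefs.lastModelByProvider[provider];
  return { provider, model };
}

let prefs: Preferences = { lastProvider: undefined, lastModelByProvider: {} };
prefs = selectProvider(prefs, "Ollama");
prefs = selectModel(prefs, "Ollama", "llama3.2");
prefs = selectProvider(prefs, "OpenRouter");
prefs = selectModel(prefs, "OpenRouter", "openai/gpt-4o-mini");
prefs = selectProvider(prefs, "Ollama");
console.log(resolveStartup(prefs));
```

Switching back to Ollama restores `llama3.2` rather than the OpenRouter model, which is the per-provider independence the list describes.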

Manual management:

- View current preferences: The file is human-readable JSON

- Reset preferences: Delete `~/.nanocoder-preferences.json` to start fresh

- No manual editing needed: Use the `/provider` and `/model` commands instead

- `/help` - Show available commands

- `/init` - Initialize project with intelligent analysis, create AGENTS.md and configuration files

- `/clear` - Clear chat history

- `/model` - Switch between available models

- `/provider` - Switch between configured AI providers

- `/mcp` - Show connected MCP servers and their tools

- `/debug` - Toggle logging levels (silent/normal/verbose)

- `/custom-commands` - List all custom commands

- `/exit` - Exit the application

- `/export` - Export current session to markdown file

- `/theme` - Select a theme for the Nanocoder CLI

- `/update` - Update Nanocoder to the latest version

- `!command` - Execute bash commands directly without leaving Nanocoder (output becomes context for the LLM)
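The list above implies three kinds of input: slash commands, `!` shell commands, and ordinary prompts. A minimal sketch of that classification might look like the following. `parseInput` and the `ParsedInput` type are hypothetical names, and Nanocoder's real parser may handle edge cases differently.

```typescript
// Hypothetical result type for one line of user input.
type ParsedInput =
  | { kind: "slash"; name: string; rest: string }
  | { kind: "shell"; command: string }
  | { kind: "prompt"; text: string };

// Classify a line as a slash command, a "!" shell command, or a plain prompt.
function parseInput(line: string): ParsedInput {
  const trimmed = line.trim();
  if (trimmed.startsWith("/") && trimmed.length > 1) {
    // The command name runs up to the first whitespace; the rest is its arguments.
    const space = trimmed.search(/\s/);
    const name = space === -1 ? trimmed.slice(1) : trimmed.slice(1, space);
    const rest = space === -1 ? "" : trimmed.slice(space + 1).trim();
    return { kind: "slash", name, rest };
  }
  if (trimmed.startsWith("!") && trimmed.length > 1) {
    return { kind: "shell", command: trimmed.slice(1).trim() };
  }
  return { kind: "prompt", text: trimmed };
}

console.log(parseInput("/model"));
console.log(parseInput("!git status"));
console.log(parseInput("Explain this function"));
```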

Nanocoder supports custom commands defined as markdown files in the .nanocoder/commands directory. Like agents.config.json, this directory is created per codebase, allowing you to create reusable prompts with parameters and organize them by category specific to each project.

Example custom command (.nanocoder/commands/test.md):

    ---
    description: 'Generate comprehensive unit tests for the specified component'
    aliases: ['testing', 'spec']
    parameters:
      - name: 'component'
        description: 'The component or function to test'
        required: true
    ---

    Generate comprehensive unit tests for {{component}}. Include:

    - Happy path scenarios
    - Edge cases and error handling
    - Mock dependencies where appropriate
    - Clear test descriptions

Usage: `/test component="UserService"`

Features:

- YAML frontmatter for metadata (description, aliases, parameters)

- Template variable substitution with `{{parameter}}` syntax

- Namespace support through directories (e.g., `/refactor:dry`)

- Autocomplete integration for command discovery

- Parameter validation and prompting
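Template substitution and parameter validation can be sketched as follows. This example assumes arguments are written as `key="value"` pairs, as in the usage line above. `parseArgs`, `renderTemplate`, and `CommandParameter` are hypothetical names for illustration and do not come from Nanocoder's code.

```typescript
// Hypothetical parameter description, mirroring the YAML frontmatter fields.
interface CommandParameter {
  name: string;
  required?: boolean;
}

// Parse arguments written as key="value" pairs, e.g. component="UserService".
function parseArgs(input: string): Map<string, string> {
  const args = new Map<string, string>();
  const pattern = /(\w+)="([^"]*)"/g;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(input)) !== null) {
    const [, key, value] = match;
    if (key !== undefined && value !== undefined) {
      args.set(key, value);
    }
  }
  return args;
}

// Check required parameters, then replace each {{name}} placeholder.
function renderTemplate(
  template: string,
  params: CommandParameter[],
  args: Map<string, string>
): string {
  for (const p of params) {
    if (p.required && !args.has(p.name)) {
      throw new Error(`Missing required parameter: ${p.name}`);
    }
  }
  // Placeholders without a supplied value are left untouched.
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (whole: string, name: string) => {
    const value = args.get(name);
    return value === undefined ? whole : value;
  });
}

const template = "Generate comprehensive unit tests for {{component}}.";
const parsed = parseArgs('component="UserService"');
console.log(renderTemplate(template, [{ name: "component", required: true }], parsed));
// Generate comprehensive unit tests for UserService.
```

This sketch throws when a required parameter is missing. The feature list mentions prompting instead, which an interactive CLI could do at the same point.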

Pre-installed Commands:

- `/test` - Generate comprehensive unit tests for components

- `/review` - Perform thorough code reviews with suggestions

- `/refactor:dry` - Apply DRY (Don't Repeat Yourself) principle

- `/refactor:solid` - Apply SOLID design principles
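The namespaced names above suggest a simple mapping from a file's path under `.nanocoder/commands` to its slash command, with directories becoming `:`-separated prefixes. A sketch of that mapping, using the hypothetical helper `commandNameFromPath`, under the assumption that only the `.md` extension is stripped:

```typescript
// Map a path relative to .nanocoder/commands to a slash command name.
// Directory separators become ":" so refactor/dry.md maps to /refactor:dry.
function commandNameFromPath(relativePath: string): string {
  const withoutExt = relativePath.replace(/\.md$/i, "");
  const segments = withoutExt.split(/[\\/]+/).filter((s) => s.length > 0);
  return "/" + segments.join(":");
}

console.log(commandNameFromPath("test.md")); // /test
console.log(commandNameFromPath("refactor/dry.md")); // /refactor:dry
```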

- Universal OpenAI compatibility: Works with any OpenAI-compatible API

- Local providers: Ollama, LM Studio, vLLM, LocalAI, llama.cpp

- Cloud providers: OpenRouter, OpenAI, and other hosted services

- Smart fallback: Automatically switches to available providers if one fails

- Per-provider preferences: Remembers your preferred model for each provider

- Dynamic configuration: Add any provider with just a name and endpoint

- Built-in tools: File operations, bash command execution

- MCP (Model Context Protocol) servers: Extend capabilities with any MCP-compatible tool

- Dynamic tool loading: Tools are loaded on-demand from configured MCP servers

- Tool approval: Optional confirmation before executing potentially destructive operations

- Markdown-based commands: Define reusable prompts in `.nanocoder/commands/`

- Template variables: Use `{{parameter}}` syntax for dynamic content

- Namespace organization: Organize commands in folders (e.g., `refactor/dry.md`)

- Autocomplete support: Tab completion for command discovery

- Rich metadata: YAML frontmatter for descriptions, aliases, and parameters

- Smart autocomplete: Tab completion for commands with real-time suggestions

- Prompt history: Access and reuse previous prompts with `/history`

- Configurable logging: Silent, normal, or verbose output levels

- Colorized output: Syntax highlighting and structured display

- Session persistence: Maintains context and preferences across sessions

- Real-time indicators: Shows token usage, timing, and processing status

- First-time directory security disclaimer: Prompts on first run and stores a per-project trust decision to prevent accidental exposure of local code or secrets.

- TypeScript-first: Full type safety and IntelliSense support

- Extensible architecture: Plugin-style system for adding new capabilities

- Project-specific config: Different settings per project via `agents.config.json`

- Debug tools: Built-in debugging commands and verbose logging

- Error resilience: Graceful handling of provider failures and network issues
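The "Smart fallback" item in the list above can be illustrated with a small selection routine: try the preferred provider first, then the remaining providers in configured order. This is a sketch under assumptions, not Nanocoder's implementation. `pickProvider` is a hypothetical name, and the availability check is passed in as a synchronous predicate for simplicity, whereas a real check would likely be an asynchronous network call.

```typescript
interface NamedProvider {
  name: string;
}

// Try the preferred provider first, then the rest in configured order,
// returning the first one the caller-supplied check reports as available.
function pickProvider<T extends NamedProvider>(
  providers: T[],
  preferred: string | undefined,
  isAvailable: (p: T) => boolean
): T | undefined {
  const ordered = [
    ...providers.filter((p) => p.name === preferred),
    ...providers.filter((p) => p.name !== preferred),
  ];
  return ordered.find((p) => isAvailable(p));
}

const configured = [{ name: "llama-cpp" }, { name: "Ollama" }, { name: "OpenRouter" }];
// Pretend only OpenRouter is reachable right now.
const chosen = pickProvider(configured, "Ollama", (p) => p.name === "OpenRouter");
console.log(chosen?.name); // OpenRouter
```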

We're a small community-led team building Nanocoder and would love your help! Whether you're interested in contributing code, documentation, or just being part of our community, there are several ways to get involved.

If you want to contribute to the code:

- Read our detailed CONTRIBUTING.md guide for information on development setup, coding standards, and how to submit your changes.

If you want to be part of our community or help with other aspects like design or marketing:

- Join our Discord server to connect with other users, ask questions, share ideas, and get help.

- Head to our GitHub issues or discussions to open and join current conversations with others in the community.

What does Nanocoder need help with?

Nanocoder could benefit from help across the board, such as:

- Adding support for new AI providers

- Improving tool functionality

- Enhancing the user experience

- Writing documentation

- Reporting bugs or suggesting features

- Marketing and getting the word out

- Design and building more great software

All contributions and community participation are welcome!


Alternative AI tools for nanocoder

Similar Open Source Tools


aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

code_puppy

Code Puppy is an AI-powered code generation agent designed to understand programming tasks, generate high-quality code, and explain its reasoning. It supports multi-language code generation, interactive CLI, and detailed code explanations. The tool requires Python 3.9+ and API keys for various models like GPT, Google's Gemini, Cerebras, and Claude. It also integrates with MCP servers for advanced features like code search and documentation lookups. Users can create custom JSON agents for specialized tasks and access a variety of tools for file management, code execution, and reasoning sharing.

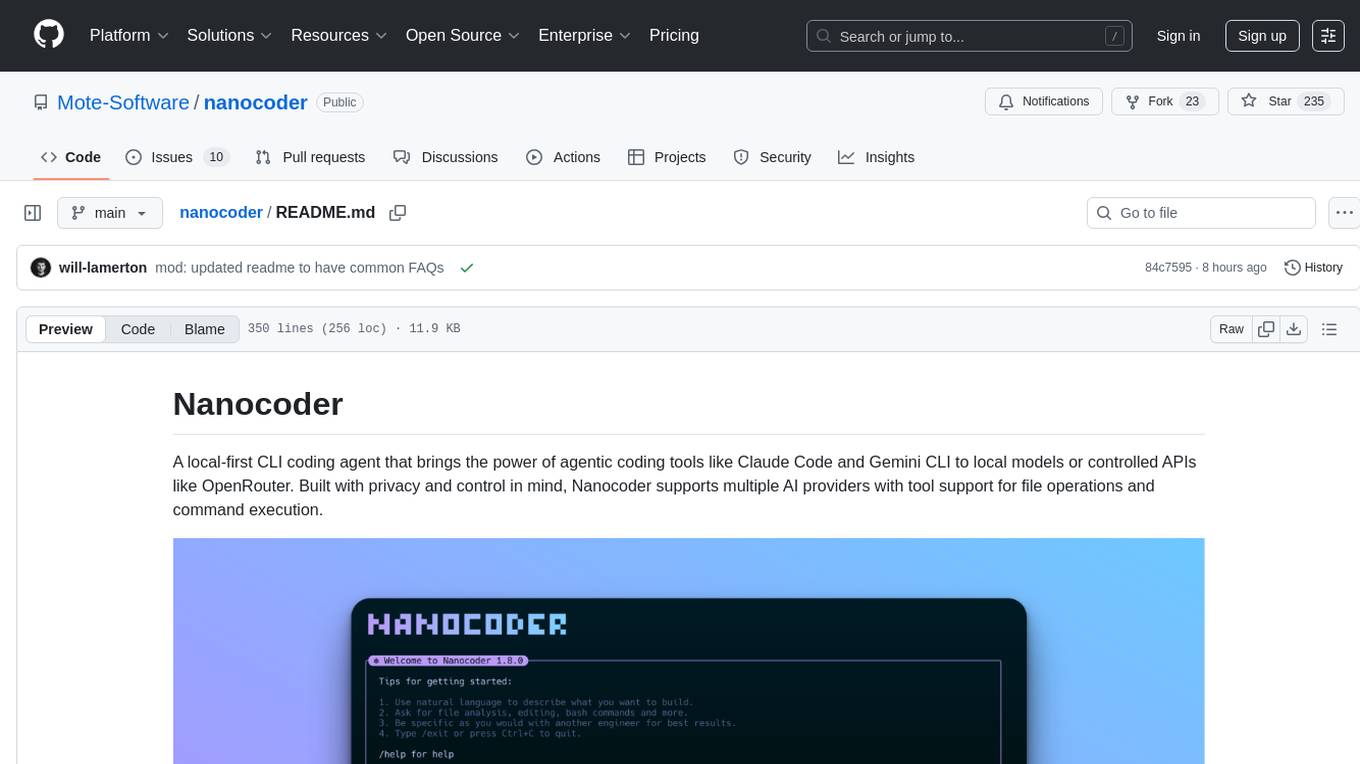

memento-mcp

Memento MCP is a scalable, high-performance knowledge graph memory system designed for LLMs. It offers semantic retrieval, contextual recall, and temporal awareness to any LLM client supporting the model context protocol. The system is built on core concepts like entities and relations, utilizing Neo4j as its storage backend for unified graph and vector search capabilities. With advanced features such as semantic search, temporal awareness, confidence decay, and rich metadata support, Memento MCP provides a robust solution for managing knowledge graphs efficiently and effectively.

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

supergateway

Supergateway is a tool that allows running MCP stdio-based servers over SSE (Server-Sent Events) with one command. It is useful for remote access, debugging, or connecting to SSE-based clients when your MCP server only speaks stdio. The tool supports running in SSE to Stdio mode as well, where it connects to a remote SSE server and exposes a local stdio interface for downstream clients. Supergateway can be used with ngrok to share local MCP servers with remote clients and can also be run in a Docker containerized deployment. It is designed with modularity in mind, ensuring compatibility and ease of use for AI tools exchanging data.

mcp-omnisearch

mcp-omnisearch is a Model Context Protocol (MCP) server that acts as a unified gateway to multiple search providers and AI tools. It integrates Tavily, Perplexity, Kagi, Jina AI, Brave, Exa AI, and Firecrawl to offer a wide range of search, AI response, content processing, and enhancement features through a single interface. The server provides powerful search capabilities, AI response generation, content extraction, summarization, web scraping, structured data extraction, and more. It is designed to work flexibly with the API keys available, enabling users to activate only the providers they have keys for and easily add more as needed.

LightRAG

LightRAG is a repository hosting the code for LightRAG, a system that supports seamless integration of custom knowledge graphs, Oracle Database 23ai, Neo4J for storage, and multiple file types. It includes features like entity deletion, batch insert, incremental insert, and graph visualization. LightRAG provides an API server implementation for RESTful API access to RAG operations, allowing users to interact with it through HTTP requests. The repository also includes evaluation scripts, code for reproducing results, and a comprehensive code structure.

open-edison

OpenEdison is a secure MCP control panel that connects AI to data/software with additional security controls to reduce data exfiltration risks. It helps address the lethal trifecta problem by providing visibility, monitoring potential threats, and alerting on data interactions. The tool offers features like data leak monitoring, controlled execution, easy configuration, visibility into agent interactions, a simple API, and Docker support. It integrates with LangGraph, LangChain, and plain Python agents for observability and policy enforcement. OpenEdison helps gain observability, control, and policy enforcement for AI interactions with systems of records, existing company software, and data to reduce risks of AI-caused data leakage.

agentpress

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. It includes core components for managing threads, registering tools, processing responses, state management, and utilizing LLMs. The tool provides a modular architecture for handling messages, LLM API calls, response processing, tool execution, and results management. Users can easily set up the environment, create custom tools with OpenAPI or XML schema, and manage conversation threads with real-time interaction. AgentPress aims to be agnostic, simple, and flexible, allowing users to customize and extend functionalities as needed.

FlashLearn

FlashLearn is a tool that provides a simple interface and orchestration for incorporating Agent LLMs into workflows and ETL pipelines. It allows data transformations, classifications, summarizations, rewriting, and custom multi-step tasks using LLMs. Each step and task has a compact JSON definition, making pipelines easy to understand and maintain. FlashLearn supports LiteLLM, Ollama, OpenAI, DeepSeek, and other OpenAI-compatible clients.

hf-waitress

HF-Waitress is a powerful server application for deploying and interacting with HuggingFace Transformer models. It simplifies running open-source Large Language Models (LLMs) locally on-device, providing on-the-fly quantization via BitsAndBytes, HQQ, and Quanto. It requires no manual model downloads, offers concurrency, streaming responses, and supports various hardware and platforms. The server uses a `config.json` file for easy configuration management and provides detailed error handling and logging.

ck

ck (seek) is a semantic grep tool that finds code by meaning, not just keywords. It replaces traditional grep by understanding the user's search intent. It allows users to search for code based on concepts like 'error handling' and retrieves relevant code even if the exact keywords are not present. ck offers semantic search, drop-in grep compatibility, hybrid search combining keyword precision with semantic understanding, agent-friendly output in JSONL format, smart file filtering, and various advanced features. It supports multiple search modes, relevance scoring, top-K results, and smart exclusions. Users can index projects for semantic search, choose embedding models, and search specific files or directories. The tool is designed to improve code search efficiency and accuracy for developers and AI agents.

pipelex

Pipelex is an open-source devtool designed to transform how users build repeatable AI workflows. It acts as a Docker or SQL for AI operations, allowing users to create modular 'pipes' using different LLMs for structured outputs. These pipes can be connected sequentially, in parallel, or conditionally to build complex knowledge transformations from reusable components. With Pipelex, users can share and scale proven methods instantly, saving time and effort in AI workflow development.

genaiscript

GenAIScript is a scripting environment designed to facilitate file ingestion, prompt development, and structured data extraction. Users can define metadata and model configurations, specify data sources, and define tasks to extract specific information. The tool provides a convenient way to analyze files and extract desired content in a structured format. It offers a user-friendly interface for working with data and automating data extraction processes, making it suitable for various data processing tasks.

quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

For similar tasks


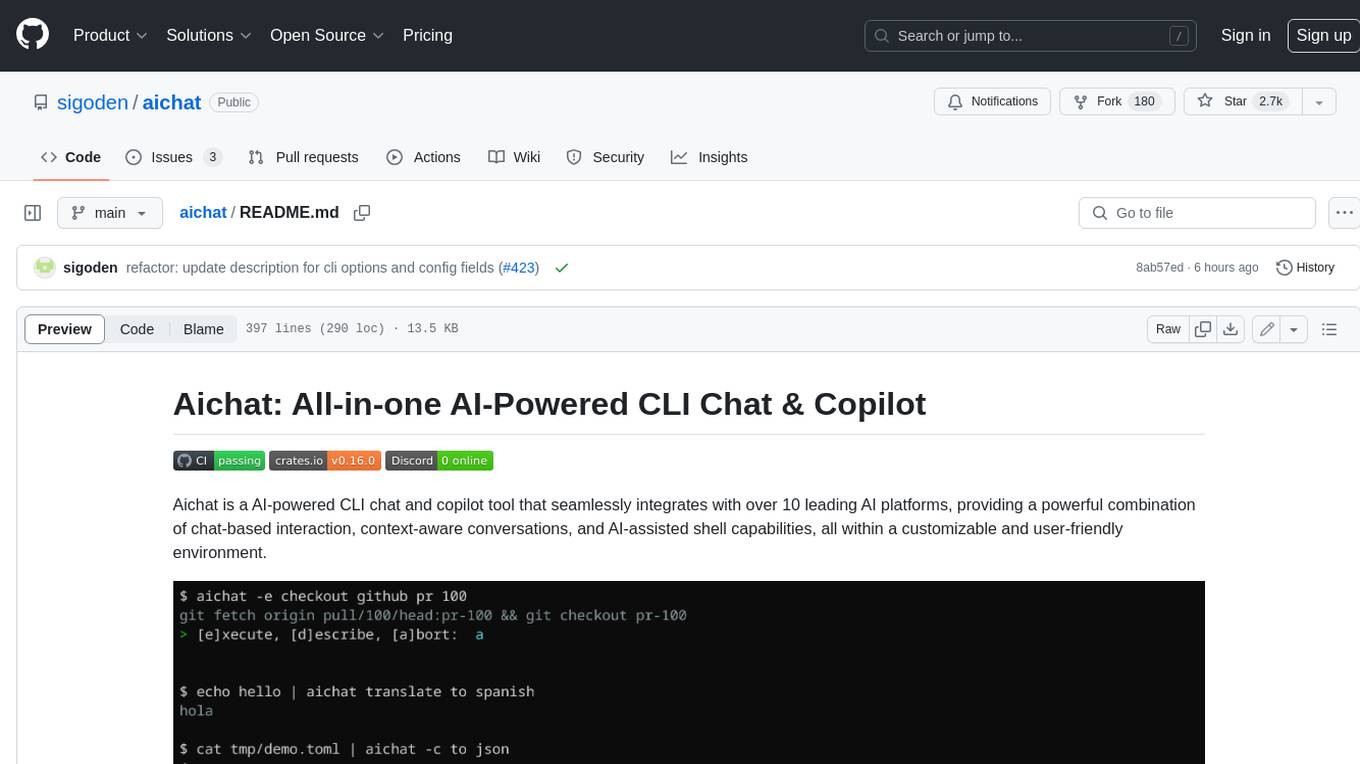

aichat

Aichat is an AI-powered CLI chat and copilot tool that seamlessly integrates with over 10 leading AI platforms, providing a powerful combination of chat-based interaction, context-aware conversations, and AI-assisted shell capabilities, all within a customizable and user-friendly environment.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could:

* **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice.
* Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control.
* Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_.
* Automate tasks on your computer
* Improve accessibility
* ... and much more

letmedoit

LetMeDoIt AI is a virtual assistant designed to revolutionize the way you work. It goes beyond being a mere chatbot by offering a unique and powerful capability - the ability to execute commands and perform computing tasks on your behalf. With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, and Microsoft AutoGen, local LLMs, all in one place, to enhance your productivity.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

AIRAVAT

AIRAVAT is a multifunctional Android Remote Access Tool (RAT) with a GUI-based Web Panel that does not require port forwarding. It allows users to access various features on the victim's device, such as reading files, downloading media, retrieving system information, managing applications, SMS, call logs, contacts, notifications, keylogging, admin permissions, phishing, audio recording, music playback, device control (vibration, torch light, wallpaper), executing shell commands, clipboard text retrieval, URL launching, and background operation. The tool requires a Firebase account and tools like ApkEasy Tool or ApkTool M for building. Users can set up Firebase, host the web panel, modify Instagram.apk for RAT functionality, and connect the victim's device to the web panel. The tool is intended for educational purposes only, and users are solely responsible for its use.

chatflow

Chatflow is a tool that provides a chat interface for users to interact with systems using natural language. The engine understands user intent and executes commands for tasks, allowing easy navigation of complex websites/products. This approach enhances user experience, reduces training costs, and boosts productivity.

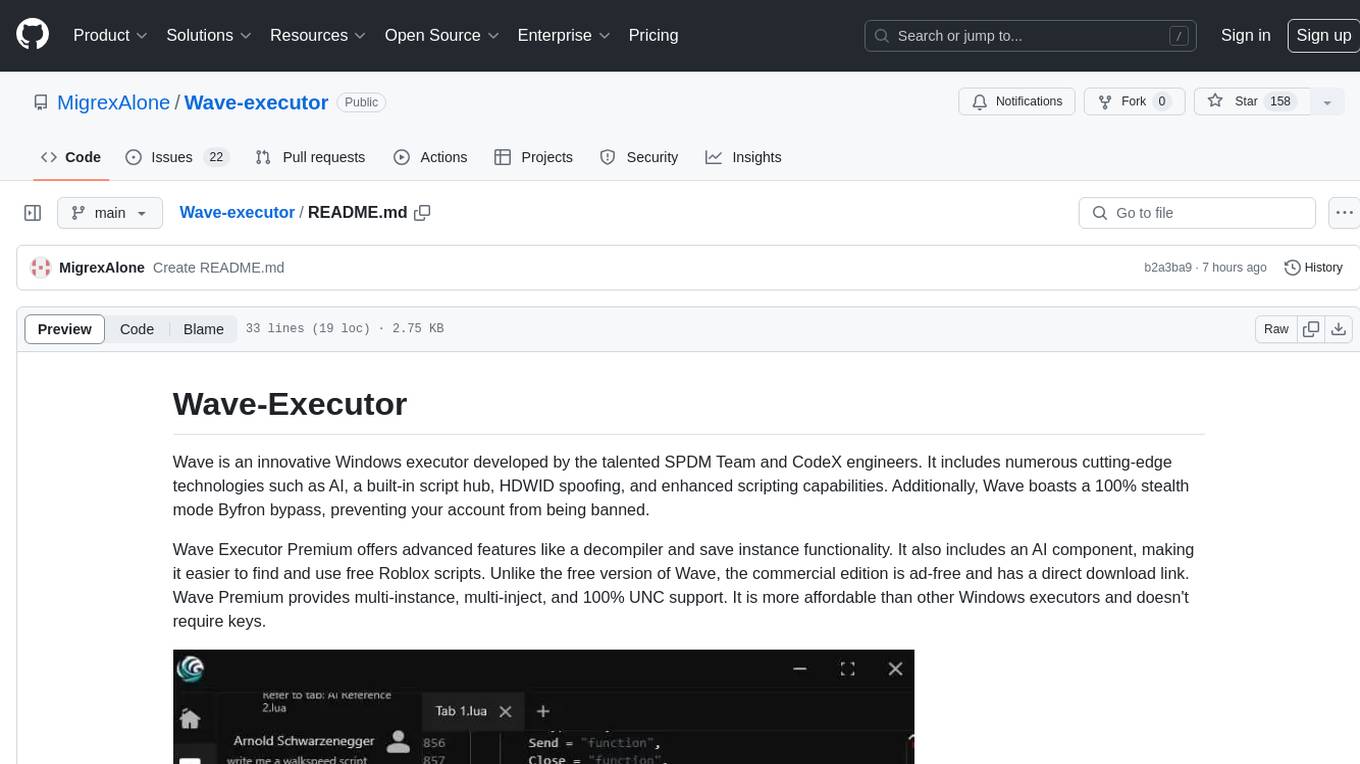

Wave-executor

Wave Executor is an innovative Windows executor developed by SPDM Team and CodeX engineers, featuring cutting-edge technologies like AI, built-in script hub, HDWID spoofing, and enhanced scripting capabilities. It offers a 100% stealth mode Byfron bypass, advanced features like decompiler and save instance functionality, and a commercial edition with ad-free experience and direct download link. Wave Premium provides multi-instance, multi-inject, and 100% UNC support, making it a cost-effective option for executing scripts in popular Roblox games.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per user and per organization basis
* Block or redact requests containing PIIs
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.