mcp-documentation-server

MCP Documentation Server - Bridge the AI Knowledge Gap. ✨ Features: Document management • Gemini integration • AI-powered semantic search • File uploads • Smart chunking • Multilingual support • Zero-setup 🎯 Perfect for: New frameworks • API docs • Internal guides

Stars: 205

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

README:

A TypeScript-based Model Context Protocol (MCP) server that provides local-first document management and semantic search using embeddings. The server exposes a collection of MCP tools and is optimized for performance with on-disk persistence, an in-memory index, and caching.

NEW! Enhanced with Google Gemini AI for advanced document analysis and contextual understanding. Ask complex questions and get intelligent summaries, explanations, and insights from your documents. To get an API key, go to Google AI Studio.

- Intelligent Document Analysis: Gemini AI understands context, relationships, and concepts

- Natural Language Queries: Ask full questions instead of listing keywords

- Smart Summarization: Get comprehensive overviews and explanations

- Contextual Insights: Understand how different parts of your documents relate

- File Mapping Cache: Avoid re-uploading the same files to Gemini for efficiency

- AI-Powered Search 🤖: Advanced document analysis with Gemini AI for contextual understanding and intelligent insights

- Traditional Semantic Search: Chunk-based search using embeddings plus in-memory keyword index

- Context Window Retrieval: Gather surrounding chunks for richer LLM answers

- O(1) document lookup and keyword index through DocumentIndex for instant retrieval

- LRU EmbeddingCache to avoid recomputing embeddings and speed up repeated queries

- Parallel chunking and batch processing to accelerate ingestion of large documents

- Streaming file reader to process large files without high memory usage

- Intelligent file handling: copy-based storage with automatic backup preservation

- Complete deletion: removes both JSON files and associated original files

- Local-only storage: no external database required. All data resides in ~/.mcp-documentation-server/
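The O(1) document lookup and keyword index mentioned in the feature list can be pictured as two hash maps: one from document id to document, one from keyword to the ids that contain it. The sketch below is only an illustration under that assumption. The names SimpleDocumentIndex, StoredDoc, and tokenize are hypothetical and are not taken from the server's source.

```typescript
// Hypothetical sketch: not the server's actual DocumentIndex implementation.
interface StoredDoc {
  id: string;
  title: string;
  content: string;
}

// Lowercase the text and split on anything that is not an ASCII letter or digit.
// (A multilingual index would need a Unicode-aware tokenizer instead.)
function tokenize(text: string): string[] {
  return text.toLowerCase().split(/[^a-z0-9]+/).filter((w) => w.length > 0);
}

class SimpleDocumentIndex {
  private docs = new Map<string, StoredDoc>();       // id -> document
  private keywords = new Map<string, Set<string>>(); // keyword -> document ids

  add(doc: StoredDoc): void {
    this.docs.set(doc.id, doc);
    for (const word of tokenize(`${doc.title} ${doc.content}`)) {
      let ids = this.keywords.get(word);
      if (!ids) {
        ids = new Set<string>();
        this.keywords.set(word, ids);
      }
      ids.add(doc.id);
    }
  }

  // Average-case constant-time lookup by id.
  get(id: string): StoredDoc | undefined {
    return this.docs.get(id);
  }

  // Average-case constant-time lookup of the id set for one keyword.
  findByKeyword(word: string): string[] {
    return Array.from(this.keywords.get(word.toLowerCase()) ?? []);
  }
}
```

Both lookups are single hash-map reads, so their cost does not grow with the number of stored documents. A real index would also have to handle deletion and persistence.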

Example configuration for an MCP client (e.g., Claude Desktop). GEMINI_API_KEY is optional and enables AI-powered search:

{
  "mcpServers": {
    "documentation": {
      "command": "npx",
      "args": [
        "-y",
        "@andrea9293/mcp-documentation-server"
      ],
      "env": {
        "GEMINI_API_KEY": "your-api-key-here",
        "MCP_EMBEDDING_MODEL": "Xenova/all-MiniLM-L6-v2"
      }
    }
  }
}

- Add documents using the add_document tool or by placing .txt, .md, or .pdf files into the uploads folder and calling process_uploads.

- Search documents with search_documents to get ranked chunk hits.

- Use get_context_window to fetch neighboring chunks and provide LLMs with richer context.
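To make the idea of a context window concrete, here is a minimal sketch of selecting the chunks around a hit. The function name contextWindow is hypothetical, and clamping at the document boundaries is an assumption; the parameters mirror the chunk_index, before, and after arguments used in this README's get_context_window example.

```typescript
// Hypothetical helper: illustrates the windowing idea, not the server's code.
function contextWindow<T>(
  chunks: T[],
  chunkIndex: number,
  before: number,
  after: number
): T[] {
  if (chunkIndex < 0 || chunkIndex >= chunks.length) {
    throw new RangeError(`chunk_index ${chunkIndex} is out of range`);
  }
  // Clamp the window to the start and end of the document (assumed behavior).
  const start = Math.max(0, chunkIndex - before);
  const end = Math.min(chunks.length, chunkIndex + after + 1); // slice end is exclusive
  return chunks.slice(start, end);
}
```

With ten chunks, a call with chunkIndex 5, before 2, and after 2 returns chunks 3 through 7. Near the edges the window shrinks rather than failing.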

The server exposes several tools (validated with Zod schemas) for document lifecycle and search:

- add_document: Add a document (title, content, metadata)

- list_documents: List stored documents and metadata

- get_document: Retrieve a full document by id

- delete_document: Remove a document, its chunks, and associated original files

- process_uploads: Convert files in uploads folder into documents (chunking + embeddings + backup preservation)

- get_uploads_path: Returns the absolute uploads folder path

- list_uploads_files: Lists files in uploads folder

- search_documents_with_ai: 🤖 AI-powered search using Gemini for advanced document analysis (requires GEMINI_API_KEY)

- search_documents: Semantic search within a document (returns chunk hits and LLM hint)

- get_context_window: Return a window of chunks around a target chunk index

Configure behavior via environment variables. Important options:

- MCP_EMBEDDING_MODEL: embedding model name (default: Xenova/all-MiniLM-L6-v2). Changing the model requires re-adding documents.

- GEMINI_API_KEY: Google Gemini API key for AI-powered search features (optional, enables search_documents_with_ai).

- MCP_INDEXING_ENABLED: enable/disable the DocumentIndex (true/false). Default: true.

- MCP_CACHE_SIZE: LRU embedding cache size (integer). Default: 1000.

- MCP_PARALLEL_ENABLED: enable parallel chunking (true/false). Default: true.

- MCP_MAX_WORKERS: number of parallel workers for chunking/indexing. Default: 4.

- MCP_STREAMING_ENABLED: enable streaming reads for large files. Default: true.

- MCP_STREAM_CHUNK_SIZE: streaming buffer size in bytes. Default: 65536 (64 KB).

- MCP_STREAM_FILE_SIZE_LIMIT: threshold (bytes) to switch to streaming path. Default: 10485760 (10 MB).
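As a rough illustration of how these variables and their documented defaults fit together, the sketch below reads them from a plain key/value object. The ServerConfig shape and the loadConfig, parseBool, and parsePositiveInt helpers are hypothetical names chosen for this example; how the server actually parses its environment is not shown in this README.

```typescript
// Hypothetical sketch: variable names and defaults come from the list above,
// everything else (types, helper names, fallback rules) is an assumption.
interface ServerConfig {
  embeddingModel: string;
  geminiApiKey?: string;
  indexingEnabled: boolean;
  cacheSize: number;
  parallelEnabled: boolean;
  maxWorkers: number;
  streamingEnabled: boolean;
  streamChunkSize: number;
  streamFileSizeLimit: number;
}

type Env = Record<string, string | undefined>;

// Treat unset or empty values as "use the default"; only "true" enables a flag.
function parseBool(value: string | undefined, fallback: boolean): boolean {
  if (value === undefined || value.trim() === "") return fallback;
  return value.trim().toLowerCase() === "true";
}

// Fall back to the default when the value is missing, non-numeric, or not positive.
function parsePositiveInt(value: string | undefined, fallback: number): number {
  const parsed = Number.parseInt(value ?? "", 10);
  return Number.isFinite(parsed) && parsed > 0 ? parsed : fallback;
}

function loadConfig(env: Env): ServerConfig {
  return {
    embeddingModel: env.MCP_EMBEDDING_MODEL || "Xenova/all-MiniLM-L6-v2",
    geminiApiKey: env.GEMINI_API_KEY || undefined,
    indexingEnabled: parseBool(env.MCP_INDEXING_ENABLED, true),
    cacheSize: parsePositiveInt(env.MCP_CACHE_SIZE, 1000),
    parallelEnabled: parseBool(env.MCP_PARALLEL_ENABLED, true),
    maxWorkers: parsePositiveInt(env.MCP_MAX_WORKERS, 4),
    streamingEnabled: parseBool(env.MCP_STREAMING_ENABLED, true),
    streamChunkSize: parsePositiveInt(env.MCP_STREAM_CHUNK_SIZE, 65536),
    streamFileSizeLimit: parsePositiveInt(env.MCP_STREAM_FILE_SIZE_LIMIT, 10485760),
  };
}
```

In Node.js the argument would typically be process.env. Passing the environment in as a parameter keeps the function easy to test.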

Example .env (defaults applied when variables are not set):

MCP_INDEXING_ENABLED=true # Enable O(1) indexing (default: true)
GEMINI_API_KEY=your-api-key-here # Google Gemini API key (optional)
MCP_CACHE_SIZE=1000 # LRU cache size (default: 1000)
MCP_PARALLEL_ENABLED=true # Enable parallel processing (default: true)
MCP_MAX_WORKERS=4 # Parallel worker count (default: 4)
MCP_STREAMING_ENABLED=true # Enable streaming (default: true)
MCP_STREAM_CHUNK_SIZE=65536 # Stream chunk size (default: 64KB)
MCP_STREAM_FILE_SIZE_LIMIT=10485760 # Streaming threshold (default: 10MB)

Default storage layout (data directory):

~/.mcp-documentation-server/
├── data/ # Document JSON files
└── uploads/ # Drop files (.txt, .md, .pdf) to import

Add a document via MCP tool:

{
  "tool": "add_document",
  "arguments": {
    "title": "Python Basics",
    "content": "Python is a high-level programming language...",
    "metadata": {
      "category": "programming",
      "tags": ["python", "tutorial"]
    }
  }
}

Search a document:

{
  "tool": "search_documents",
  "arguments": {
    "document_id": "doc-123",
    "query": "variable assignment",
    "limit": 5
  }
}

Advanced Analysis (requires GEMINI_API_KEY):

{
  "tool": "search_documents_with_ai",
  "arguments": {
    "document_id": "doc-123",
    "query": "explain the main concepts and their relationships"
  }
}

Complex Questions:

{
  "tool": "search_documents_with_ai",
  "arguments": {
    "document_id": "doc-123",
    "query": "what are the key architectural patterns and how do they work together?"
  }
}

Summarization Requests:

{
  "tool": "search_documents_with_ai",
  "arguments": {
    "document_id": "doc-123",
    "query": "summarize the core principles and provide examples"
  }
}

Fetch context window:

{
  "tool": "get_context_window",
  "arguments": {
    "document_id": "doc-123",
    "chunk_index": 5,
    "before": 2,
    "after": 2
  }
}

- Complex Questions: "How do these concepts relate to each other?"

- Summarization: "Give me an overview of the main principles"

- Analysis: "What are the key patterns and their trade-offs?"

- Explanation: "Explain this topic as if I were new to it"

- Comparison: "Compare these different approaches"

- Smart Caching: File mapping prevents re-uploading the same content

- Efficient Processing: Only relevant sections are analyzed by Gemini

- Contextual Results: More accurate and comprehensive answers

- Natural Interaction: Ask questions in plain English

- Embedding models are downloaded on first use; some models require several hundred MB of downloads.

- The DocumentIndex persists an index file and can be rebuilt if necessary.

- The EmbeddingCache can be warmed by calling process_uploads, issuing curated queries, or using a preload API when available.
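For readers unfamiliar with LRU caching, the following is a minimal sketch of the general technique an embedding cache of this kind relies on: keep the most recently used entries and evict the oldest once a size limit (here analogous to MCP_CACHE_SIZE) is reached. The LruCache class is a hypothetical illustration, not the server's EmbeddingCache.

```typescript
// Hypothetical sketch of a least-recently-used cache built on Map,
// which iterates keys in insertion order.
class LruCache<K, V> {
  private entries = new Map<K, V>();
  private readonly capacity: number;

  constructor(capacity: number) {
    if (capacity < 1) throw new RangeError("capacity must be at least 1");
    this.capacity = capacity;
  }

  get(key: K): V | undefined {
    if (!this.entries.has(key)) return undefined;
    const value = this.entries.get(key) as V;
    // Re-insert so this key becomes the most recently used.
    this.entries.delete(key);
    this.entries.set(key, value);
    return value;
  }

  set(key: K, value: V): void {
    if (this.entries.has(key)) {
      this.entries.delete(key);
    } else if (this.entries.size >= this.capacity) {
      // The first key in iteration order is the least recently used.
      const oldest = this.entries.keys().next().value as K;
      this.entries.delete(oldest);
    }
    this.entries.set(key, value);
  }

  get size(): number {
    return this.entries.size;
  }
}
```

In an embedding cache the key would plausibly be the text (or a hash of it) and the value its vector, so a repeated query skips the model call entirely.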

Set the embedding model via the MCP_EMBEDDING_MODEL environment variable:

- Xenova/all-MiniLM-L6-v2 (default): Fast, good quality (384 dimensions)

- Xenova/paraphrase-multilingual-mpnet-base-v2 (recommended): Best quality, multilingual (768 dimensions)

The system automatically manages the correct embedding dimension for each model. Embedding providers expose their dimension via getDimensions().
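The dimension matters because vectors from different models cannot be compared: a 384-dimension embedding and a 768-dimension one have no meaningful similarity, which is one reason changing the model requires re-adding documents. The sketch below shows a dimension check followed by cosine similarity, a common scoring function for embedding search. Only getDimensions() is named in this README; the HasDimensions interface, assertCompatible, and cosineSimilarity are hypothetical, and the server's actual scoring function is not documented here.

```typescript
// Hypothetical sketch: only getDimensions() comes from the README.
interface HasDimensions {
  getDimensions(): number;
}

// Reject stored vectors that were produced by a model with a different dimension.
function assertCompatible(provider: HasDimensions, stored: number[]): void {
  const expected = provider.getDimensions();
  if (stored.length !== expected) {
    throw new Error(
      `stored embedding has ${stored.length} dimensions, current model expects ${expected}`
    );
  }
}

// Cosine similarity: dot product divided by the product of the vector norms.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error(`dimension mismatch: ${a.length} vs ${b.length}`);
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}
```

Checking the dimension before scoring turns a silent mismatch after a model change into an explicit error.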

git clone https://github.com/andrea9293/mcp-documentation-server.git
cd mcp-documentation-server
npm run dev
npm run build
npm run inspect

- Fork the repository

- Create a feature branch: git checkout -b feature/name

- Follow Conventional Commits for messages

- Open a pull request

MIT - see LICENSE file

Built with FastMCP and TypeScript 🚀


Similar Open Source Tools

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

mcp-omnisearch

mcp-omnisearch is a Model Context Protocol (MCP) server that acts as a unified gateway to multiple search providers and AI tools. It integrates Tavily, Perplexity, Kagi, Jina AI, Brave, Exa AI, and Firecrawl to offer a wide range of search, AI response, content processing, and enhancement features through a single interface. The server provides powerful search capabilities, AI response generation, content extraction, summarization, web scraping, structured data extraction, and more. It is designed to work flexibly with the API keys available, enabling users to activate only the providers they have keys for and easily add more as needed.

nanocoder

Nanocoder is a versatile code editor designed for beginners and experienced programmers alike. It provides a user-friendly interface with features such as syntax highlighting, code completion, and error checking. With Nanocoder, you can easily write and debug code in various programming languages, making it an ideal tool for learning, practicing, and developing software projects. Whether you are a student, hobbyist, or professional developer, Nanocoder offers a seamless coding experience to boost your productivity and creativity.

ai-counsel

AI Counsel is a true deliberative consensus MCP server where AI models engage in actual debate, refine positions across multiple rounds, and converge with voting and confidence levels. It features two modes (quick and conference), mixed adapters (CLI tools and HTTP services), auto-convergence, structured voting, semantic grouping, model-controlled stopping, evidence-based deliberation, local model support, data privacy, context injection, semantic search, fault tolerance, and full transcripts. Users can run local and cloud models to deliberate on various questions, ground decisions in reality by querying code and files, and query past decisions for analysis. The tool is designed for critical technical decisions requiring multi-model deliberation and consensus building.

code_puppy

Code Puppy is an AI-powered code generation agent designed to understand programming tasks, generate high-quality code, and explain its reasoning. It supports multi-language code generation, interactive CLI, and detailed code explanations. The tool requires Python 3.9+ and API keys for various models like GPT, Google's Gemini, Cerebras, and Claude. It also integrates with MCP servers for advanced features like code search and documentation lookups. Users can create custom JSON agents for specialized tasks and access a variety of tools for file management, code execution, and reasoning sharing.

open-edison

OpenEdison is a secure MCP control panel that connects AI to data/software with additional security controls to reduce data exfiltration risks. It helps address the lethal trifecta problem by providing visibility, monitoring potential threats, and alerting on data interactions. The tool offers features like data leak monitoring, controlled execution, easy configuration, visibility into agent interactions, a simple API, and Docker support. It integrates with LangGraph, LangChain, and plain Python agents for observability and policy enforcement. OpenEdison helps gain observability, control, and policy enforcement for AI interactions with systems of records, existing company software, and data to reduce risks of AI-caused data leakage.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

oxylabs-mcp

The Oxylabs MCP Server acts as a bridge between AI models and the web, providing clean, structured data from any site. It enables scraping of URLs, rendering JavaScript-heavy pages, content extraction for AI use, bypassing anti-scraping measures, and accessing geo-restricted web data from 195+ countries. The implementation utilizes the Model Context Protocol (MCP) to facilitate secure interactions between AI assistants and web content. Key features include scraping content from any site, automatic data cleaning and conversion, bypassing blocks and geo-restrictions, flexible setup with cross-platform support, and built-in error handling and request management.

sonarqube-mcp-server

The SonarQube MCP Server is a Model Context Protocol (MCP) server that enables seamless integration with SonarQube Server or Cloud for code quality and security. It supports the analysis of code snippets directly within the agent context. The server provides various tools for analyzing code, managing issues, accessing metrics, and interacting with SonarQube projects. It also supports advanced features like dependency risk analysis, enterprise portfolio management, and system health checks. The server can be configured for different transport modes, proxy settings, and custom certificates. Telemetry data collection can be disabled if needed.

receipt-ocr

An efficient OCR engine for receipt image processing, providing a comprehensive solution for Optical Character Recognition (OCR) on receipt images. The repository includes a dedicated Tesseract OCR module and a general receipt processing package using LLMs. Users can extract structured data from receipts, configure environment variables for multiple LLM providers, process receipts using CLI or programmatically in Python, and run the OCR engine as a Docker web service. The project also offers direct OCR capabilities using Tesseract and provides troubleshooting tips, contribution guidelines, and license information under the MIT license.

ck

ck (seek) is a semantic grep tool that finds code by meaning, not just keywords. It replaces traditional grep by understanding the user's search intent. It allows users to search for code based on concepts like 'error handling' and retrieves relevant code even if the exact keywords are not present. ck offers semantic search, drop-in grep compatibility, hybrid search combining keyword precision with semantic understanding, agent-friendly output in JSONL format, smart file filtering, and various advanced features. It supports multiple search modes, relevance scoring, top-K results, and smart exclusions. Users can index projects for semantic search, choose embedding models, and search specific files or directories. The tool is designed to improve code search efficiency and accuracy for developers and AI agents.

quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

firecrawl-mcp-server

Firecrawl MCP Server is a Model Context Protocol (MCP) server implementation that integrates with Firecrawl for web scraping capabilities. It offers features such as web scraping, crawling, and discovery, search and content extraction, deep research and batch scraping, automatic retries and rate limiting, cloud and self-hosted support, and SSE support. The server can be configured to run with various tools like Cursor, Windsurf, SSE Local Mode, Smithery, and VS Code. It supports environment variables for cloud API and optional configurations for retry settings and credit usage monitoring. The server includes tools for scraping, batch scraping, mapping, searching, crawling, and extracting structured data from web pages. It provides detailed logging and error handling functionalities for robust performance.

connectonion

ConnectOnion is a simple, elegant open-source framework for production-ready AI agents. It provides a platform for creating and using AI agents with a focus on simplicity and efficiency. The framework allows users to easily add tools, debug agents, make them production-ready, and enable multi-agent capabilities. ConnectOnion offers a simple API, is production-ready with battle-tested models, and is open-source under the MIT license. It features a plugin system for adding reflection and reasoning capabilities, interactive debugging for easy troubleshooting, and no boilerplate code for seamless scaling from prototypes to production systems.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

flo-ai

Flo AI is a Python framework that enables users to build production-ready AI agents and teams with minimal code. It allows users to compose complex AI architectures using pre-built components while maintaining the flexibility to create custom components. The framework supports composable, production-ready, YAML-first, and flexible AI systems. Users can easily create AI agents and teams, manage teams of AI agents working together, and utilize built-in support for Retrieval-Augmented Generation (RAG) and compatibility with Langchain tools. Flo AI also provides tools for output parsing and formatting, tool logging, data collection, and JSON output collection. It is MIT Licensed and offers detailed documentation, tutorials, and examples for AI engineers and teams to accelerate development, maintainability, scalability, and testability of AI systems.

For similar tasks

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

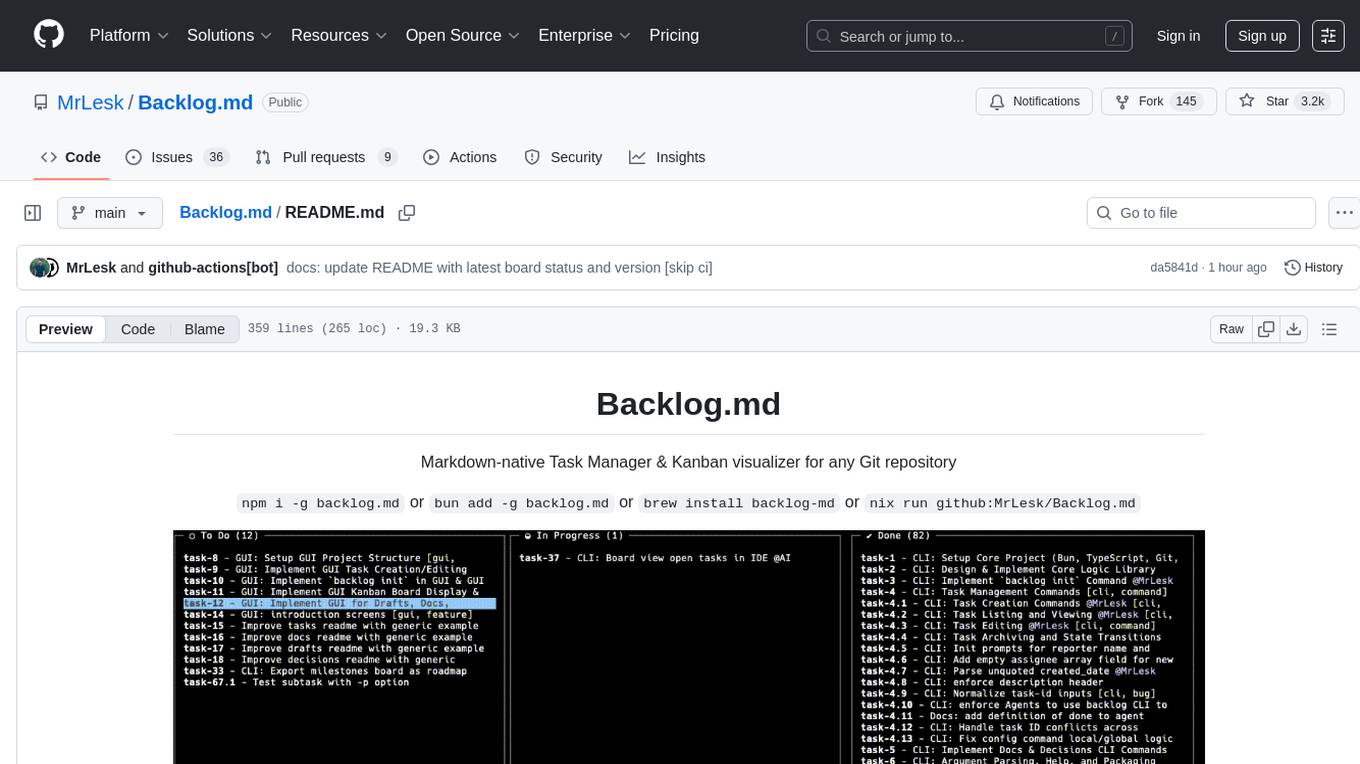

Backlog.md

Backlog.md is a Markdown-native Task Manager & Kanban visualizer for any Git repository. It turns any folder with a Git repo into a self-contained project board powered by plain Markdown files and a zero-config CLI. Features include managing tasks as plain .md files, private & offline usage, instant terminal Kanban visualization, board export, modern web interface, AI-ready CLI, rich query commands, cross-platform support, and MIT-licensed open-source. Users can create tasks, view board, assign tasks to AI, manage documentation, make decisions, and configure settings easily.

coco-app

Coco AI is a unified search platform that connects enterprise applications and data into a single, powerful search interface. The COCO App allows users to search and interact with their enterprise data across platforms. It also offers a Gen-AI Chat for Teams tailored to team's unique knowledge and internal resources, enhancing collaboration by making information instantly accessible and providing AI-driven insights based on enterprise's specific data.

For similar jobs

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

quivr

Quivr is a personal assistant powered by Generative AI, designed to be a second brain for users. It offers fast and efficient access to data, ensuring security and compatibility with various file formats. Quivr is open source and free to use, allowing users to share their brains publicly or keep them private. The marketplace feature enables users to share and utilize brains created by others, boosting productivity. Quivr's offline mode provides anytime, anywhere access to data. Key features include speed, security, OS compatibility, file compatibility, open source nature, public/private sharing options, a marketplace, and offline mode.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

UFO

UFO is a UI-focused dual-agent framework to fulfill user requests on Windows OS by seamlessly navigating and operating within individual or spanning multiple applications.

timefold-solver

Timefold Solver is an optimization engine evolved from OptaPlanner. Developed by the original OptaPlanner team, our aim is to free the world of wasteful planning.

MateCat

Matecat is an enterprise-level, web-based CAT tool designed to make post-editing and outsourcing easy and to provide a complete set of features to manage and monitor translation projects.

crewAI

crewAI is a cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It provides a flexible and structured approach to AI collaboration, enabling users to define agents with specific roles, goals, and tools, and assign them tasks within a customizable process. crewAI supports integration with various LLMs, including OpenAI, and offers features such as autonomous task delegation, flexible task management, and output parsing. It is open-source and welcomes contributions, with a focus on improving the library based on usage data collected through anonymous telemetry.