code2prompt

Code2Prompt is a powerful command-line tool that simplifies the process of providing context to Large Language Models (LLMs) by generating a comprehensive Markdown file containing the content of your codebase. ⭐ If you find Code2Prompt useful, consider giving us a star on GitHub! It helps us reach more developers and improve the tool. ⭐

Stars: 734

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

README:

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks.

- Why Code2Prompt?

- Features

- Installation

- Getting Started

- Quick Start

- Usage

- Options

- Examples

- Templating System

- Integration with LLM CLI

- GitHub Actions Integration

- Configuration File

- Troubleshooting

- Contributing

- License

Code2Prompt is a powerful, open-source command-line tool that bridges the gap between your codebase and Large Language Models (LLMs). By converting your entire project into a comprehensive, AI-friendly prompt, Code2Prompt enables you to leverage the full potential of AI for code analysis, documentation, and improvement tasks.

- Holistic Codebase Representation: Generate a well-structured Markdown prompt that captures your entire project's essence, making it easier for LLMs to understand the context.

- Intelligent Source Tree Generation: Create a clear, hierarchical view of your codebase structure, allowing for better navigation and understanding of the project.

- Customizable Prompt Templates: Tailor your output using Jinja2 templates to suit specific AI tasks, enhancing the relevance of generated prompts.

- Smart Token Management: Count and optimize tokens to ensure compatibility with various LLM token limits, preventing errors during processing.

- Gitignore Integration: Respect your project's .gitignore rules for accurate representation, ensuring that irrelevant files are excluded from processing.

- Flexible File Handling: Filter and exclude files using powerful glob patterns, giving you control over which files are included in the prompt generation.

-

Custom Syntax Highlighting: Pair custom file extensions with specific syntax highlighting using the

--syntax-mapoption. For example, you can specify that.incfiles should be treated asbashscripts. - Clipboard Ready: Instantly copy generated prompts to your clipboard for quick AI interactions, streamlining your workflow.

- Multiple Output Options: Save to file or display in the console, providing flexibility in how you want to use the generated prompts.

- Enhanced Code Readability: Add line numbers to source code blocks for precise referencing, making it easier to discuss specific parts of the code.

- Include file: Support of template import, allowing for modular template design.

- Input variables: Support of Input Variables in templates, enabling dynamic prompt generation based on user input.

- Contextual Understanding: Provide LLMs with a comprehensive view of your project for more accurate suggestions and analysis.

- Consistency Boost: Maintain coding style and conventions across your entire project, improving code quality.

- Efficient Refactoring: Enable better interdependency analysis and smarter refactoring recommendations, saving time and effort.

- Improved Documentation: Generate contextually relevant documentation that truly reflects your codebase, enhancing maintainability.

- Pattern Recognition: Help LLMs learn and apply your project-specific patterns and idioms, improving the quality of AI interactions.

Transform the way you interact with AI for software development. With Code2Prompt, harness the full power of your codebase in every AI conversation.

Ready to elevate your AI-assisted development? Let's dive in! 🏊♂️

Choose one of the following methods to install Code2Prompt:

pip install code2promptUsing pipx (recommended)

pipx install code2promptTo get started with Code2Prompt, follow these steps:

- Install Code2Prompt: Use one of the installation methods mentioned above.

-

Prepare Your Codebase: Ensure your project is organized and that you have a

.gitignorefile if necessary. - Run Code2Prompt: Use the command line to generate prompts from your codebase.

For example, to generate a prompt from a single Python file, run:

code2prompt --path /path/to/your/script.py-

Generate a prompt from a single Python file:

code2prompt --path /path/to/your/script.py

-

Process an entire project directory and save the output:

code2prompt --path /path/to/your/project --output project_summary.md

-

Generate a prompt for multiple files, excluding tests:

code2prompt --path /path/to/src --path /path/to/lib --exclude "*/tests/*" --output codebase_summary.md

The basic syntax for Code2Prompt is:

code2prompt --path /path/to/your/code [OPTIONS]For multiple paths:

code2prompt --path /path/to/dir1 --path /path/to/file2.py [OPTIONS]To pair custom file extensions with specific syntax highlighting, use the --syntax-map option. This allows you to specify mappings in the format extension:syntax. For example:

code2prompt --path /path/to/your/code --syntax-map "inc:bash,customext:python,ext2:javascript"

This command will treat .inc files as bash scripts, .customext files as python, and .ext2 files as javascript.

You can also use multiple --syntax-map arguments or separate mappings with commas:

code2prompt --path /path/to/your/script.py --syntax-map "inc:bash"

code2prompt --path /path/to/your/project --syntax-map "inc:bash,txt:markdown" --output project_summary.md

code2prompt --path /path/to/src --path /path/to/lib --syntax-map "inc:bash,customext:python" --output codebase_summary.md

| Option | Short | Description |

|---|---|---|

--path |

-p |

Path(s) to the directory or file to process (required, multiple allowed) |

--output |

-o |

Name of the output Markdown file |

--gitignore |

-g |

Path to the .gitignore file |

--filter |

-f |

Comma-separated filter patterns to include files (e.g., ".py,.js") |

--exclude |

-e |

Comma-separated patterns to exclude files (e.g., ".txt,.md") |

--case-sensitive |

Perform case-sensitive pattern matching | |

--suppress-comments |

-s |

Strip comments from the code files |

--line-number |

-ln |

Add line numbers to source code blocks |

--no-codeblock |

Disable wrapping code inside markdown code blocks | |

--template |

-t |

Path to a Jinja2 template file for custom prompt generation |

--tokens |

Display the token count of the generated prompt | |

--encoding |

Specify the tokenizer encoding to use (default: "cl100k_base") | |

--create-templates |

Create a templates directory with example templates | |

--version |

-v |

Show the version and exit |

--log-level |

Set the logging level (e.g., DEBUG, INFO, WARNING, ERROR, CRITICAL) | |

--interactive |

-i |

Activate interactive mode for file selection |

--syntax-map |

Pair custom file extensions with specific syntax highlighting (e.g., "inc:bash,customext:python,ext2:javascript") |

The --filter and --exclude options allow you to specify patterns for files or directories that should be included in or excluded from processing, respectively.

--filter "PATTERN1,PATTERN2,..."

--exclude "PATTERN1,PATTERN2,..."

or

-f "PATTERN1,PATTERN2,..."

-e "PATTERN1,PATTERN2,..."

- Both options accept a comma-separated list of patterns.

- Patterns can include wildcards (

*) and directory indicators (**). - Case-sensitive by default (use

--case-sensitiveflag to change this behavior). -

--excludepatterns take precedence over--filterpatterns.

-

Include only Python files:

--filter "**.py" -

Exclude all Markdown files:

--exclude "**.md" -

Include specific file types in the src directory:

--filter "src/**.{js,ts}" -

Exclude multiple file types and a specific directory:

--exclude "**.log,**.tmp,**/node_modules/**" -

Include all files except those in 'test' directories:

--filter "**" --exclude "**/test/**" -

Complex filtering (include JavaScript files, exclude minified and test files):

--filter "**.js" --exclude "**.min.js,**test**.js" -

Include specific files across all directories:

--filter "**/config.json,**/README.md" -

Exclude temporary files and directories:

--exclude "**/.cache/**,**/tmp/**,**.tmp" -

Include source files but exclude build output:

--filter "src/**/*.{js,ts}" --exclude "**/dist/**,**/build/**" -

Exclude version control and IDE-specific files:

--exclude "**/.git/**,**/.vscode/**,**/.idea/**"

- Always use double quotes around patterns to prevent shell interpretation of special characters.

- Patterns are matched against the full path of each file, relative to the project root.

- The

**wildcard matches any number of directories. - Single

*matches any characters within a single directory or filename. - Use commas to separate multiple patterns within the same option.

- Combine

--filterand--excludefor fine-grained control over which files are processed.

- Start with broader patterns and refine as needed.

- Test your patterns on a small subset of your project first.

- Use the

--case-sensitiveflag if you need to distinguish between similarly named files with different cases. - When working with complex projects, consider using a configuration file to manage your filter and exclude patterns.

By using the --filter and --exclude options effectively and safely (with proper quoting), you can precisely control which files are processed in your project, ensuring both accuracy and security in your command execution.

-

Generate documentation for a Python library:

code2prompt --path /path/to/library --output library_docs.md --suppress-comments --line-number --filter "*.py" -

Prepare a codebase summary for a code review, focusing on JavaScript and TypeScript files:

code2prompt --path /path/to/project --filter "*.js,*.ts" --exclude "node_modules/*,dist/*" --template code_review.j2 --output code_review.md

-

Create input for an AI model to suggest improvements, focusing on a specific directory:

code2prompt --path /path/to/src/components --suppress-comments --tokens --encoding cl100k_base --output ai_input.md

-

Analyze comment density across a multi-language project:

code2prompt --path /path/to/project --template comment_density.j2 --output comment_analysis.md --filter "*.py,*.js,*.java" -

Generate a prompt for a specific set of files, adding line numbers:

code2prompt --path /path/to/important_file1.py --path /path/to/important_file2.js --line-number --output critical_files.md

Code2Prompt supports custom output formatting using Jinja2 templates. To use a custom template:

code2prompt --path /path/to/code --template /path/to/your/template.j2Use the --create-templates command to generate example templates:

code2prompt --create-templatesThis creates a templates directory with sample Jinja2 templates, including:

- default.j2: A general-purpose template

- analyze-code.j2: For detailed code analysis

- code-review.j2: For thorough code reviews

- create-readme.j2: To assist in generating README files

- improve-this-prompt.j2: For refining AI prompts

For full template documentation, see Documentation Templating.

Code2Prompt can be integrated with Simon Willison's llm CLI tool for enhanced code analysis or qllm, or for the Rust lovers hiramu-cli.

pip install code2prompt llm-

Generate a code summary and analyze it with an LLM:

code2prompt --path /path/to/your/project | llm "Analyze this codebase and provide insights on its structure and potential improvements"

-

Process a specific file and get refactoring suggestions:

code2prompt --path /path/to/your/script.py | llm "Suggest refactoring improvements for this code"

For more advanced use cases, refer to the Integration with LLM CLI section in the full documentation.

You can integrate Code2Prompt into your GitHub Actions workflow. Here's an example:

name: Code Analysis

on: [push]

jobs:

analyze-code:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v2

- name: Set up Python

uses: actions/setup-python@v2

with:

python-version: '3.x'

- name: Install dependencies

run: |

pip install code2prompt llm

- name: Analyze codebase

run: |

code2prompt --path . | llm "Perform a comprehensive analysis of this codebase. Identify areas for improvement, potential bugs, and suggest optimizations." > analysis.md

- name: Upload analysis

uses: actions/upload-artifact@v2

with:

name: code-analysis

path: analysis.mdTokens are the basic units of text that language models process. They can be words, parts of words, or even punctuation marks. Different tokenizer encodings split text into tokens in various ways. Code2Prompt supports multiple token types through its --encoding option, with "cl100k_base" as the default. This encoding, used by models like GPT-3.5 and GPT-4, is adept at handling code and technical content. Other common encodings include "p50k_base" (used by earlier GPT-3 models) and "r50k_base" (used by models like CodeX).

To count tokens in your generated prompt, use the --tokens flag:

code2prompt --path /your/project --tokensFor a specific encoding:

code2prompt --path /your/project --tokens --encoding p50k_baseUnderstanding token counts is crucial when working with AI models that have token limits, ensuring your prompts fit within the model's context window.

Code2Prompt now includes a powerful feature for estimating token prices across various AI providers and models. Use the --price option in conjunction with --tokens to display a comprehensive breakdown of estimated costs. This feature calculates prices based on both input and output tokens, with input tokens determined by your codebase and a default of 1000 output tokens (customizable via --output-tokens). You can specify a particular provider or model, or view prices across all available options. This functionality helps developers make informed decisions about AI model usage and cost management. For example:

code2prompt --path /your/project --tokens --price --provider openai --model gpt-4This command will analyze your project, count the tokens, and provide a detailed price estimation for OpenAI's GPT-4 model.

code2prompt now offers a powerful feature to analyze codebases and provide a summary of file extensions. Use the --analyze option along with the -p (path) option to get an overview of your project's file composition. For example:

code2prompt --analyze -p code2prompt

Result:

.j2: 6 files

.json: 1 file

.py: 33 files

.pyc: 56 files

Comma-separated list of extensions:

.j2,.json,.py,.pyc

This command will analyze the 'code2prompt' directory and display a summary of all file extensions found, including their counts. You can choose between two output formats:

- Flat format (default): Lists all unique extensions alphabetically with their file counts.

- Tree-like format: Displays extensions in a directory tree structure with counts at each level.

To use the tree-like format, add the --format tree option:

code2prompt --analyze -p code2prompt --format tree

Result:

└── code2prompt

├── utils

│ ├── .py

│ └── __pycache__

│ └── .pyc

├── .py

├── core

│ ├── .py

│ └── __pycache__

│ └── .pyc

├── comment_stripper

│ ├── .py

│ └── __pycache__

│ └── .pyc

├── __pycache__

│ └─ .pyc

├── templates

│ └── .j2

└── data

└── .json

Comma-separated list of extensions:

.j2,.json,.py,.pyc

The analysis also generates a comma-separated list of file extensions, which can be easily copied and used with the --filter option for more targeted code processing.

code2prompt offers a powerful feature for dynamic variable extraction from templates, allowing for interactive and customizable prompt generation. Using the syntax {{input:variable_name}}, you can easily define variables that will prompt users for input during execution.

This is particularly useful for creating flexible templates for various purposes, such as generating AI prompts for Chrome extensions. Here's an example:

# AI Prompt Generator for Chrome Extension

Generate a prompt for an AI to create a Chrome extension with the following specifications:

Extension Name: {{input:extension_name}}

Main Functionality: {{input:main_functionality}}

Target Audience: {{input:target_audience}}

## Prompt:

You are an experienced Chrome extension developer. Create a detailed plan for a Chrome extension named "{{input:extension_name}}" that {{input:main_functionality}}. This extension is designed for {{input:target_audience}}.

Your response should include:

1. A brief description of the extension's purpose and functionality

2. Key features (at least 3)

3. User interface design considerations

4. Potential challenges in development and how to overcome them

5. Security and privacy considerations

6. A basic code structure for the main components (manifest.json, background script, content script, etc.)

Ensure that your plan is detailed, technically sound, and tailored to the needs of {{input:target_audience}}.

Start from this codebase:

----

## The codebase:

<codebase>When you run code2prompt with this template, it will automatically detect the {{input:variable_name}} patterns and prompt the user to provide values for each variable (extension_name, main_functionality, and target_audience). This allows for flexible and interactive prompt generation, making it easy to create customized AI prompts for various Chrome extension ideas.

For example, if a user inputs:

- Extension Name: "ProductivityBoost"

- Main Functionality: "tracks time spent on different websites and provides productivity insights"

- Target Audience: "professionals working from home"

The tool will generate a tailored prompt for an AI to create a detailed plan for this specific Chrome extension. This feature is particularly useful for developers, product managers, or anyone looking to quickly generate customized AI prompts for various projects or ideas.

The code2prompt project now supports a powerful "include file" feature, enhancing template modularity and reusability.

This feature allows you to seamlessly incorporate external file content into your main template using the {% include %} directive. For example, in the main analyze-code.j2 template, you can break down complex sections into smaller, manageable files:

# Elite Code Analyzer and Improvement Strategist 2.0

{% include 'sections/role_and_goal.j2' %}

{% include 'sections/core_competencies.j2' %}

## Task Breakdown

1. Initial Assessment

{% include 'tasks/initial_assessment.j2' %}

2. Multi-Dimensional Analysis (Utilize Tree of Thought)

{% include 'tasks/multi_dimensional_analysis.j2' %}

// ... other sections ...This approach allows you to organize your template structure more efficiently, improving maintainability and allowing for easy updates to specific sections without modifying the entire template. The include feature supports both relative and absolute paths, making it flexible for various project structures. By leveraging this feature, you can significantly reduce code duplication, improve template management, and create a more modular and scalable structure for your code2prompt templates.

The interactive mode allows users to select files for processing in a user-friendly manner. This feature is particularly useful when dealing with large codebases or when you want to selectively include files without manually specifying each path.

To activate interactive mode, use the --interactive or -i option when running the code2prompt command. Here's an example:

code2prompt --path /path/to/your/project --interactive- File Selection: Navigate through the directory structure and select files using keyboard controls.

- Visual Feedback: The interface provides visual cues to help you understand which files are selected or ignored.

- Arrow Keys: Navigate through the list of files.

- Spacebar: Toggle the selection of a file.

- Enter: Confirm your selection and proceed with the command.

- Esc: Exit the interactive mode without making any changes.

This mode enhances the usability of Code2Prompt, making it easier to manage file selections in complex projects.

Code2Prompt supports a .code2promptrc configuration file in JSON format for setting default options. Place this file in your project or home directory.

Example .code2promptrc:

{

"suppress_comments": true,

"line_number": true,

"encoding": "cl100k_base",

"filter": "*.py,*.js",

"exclude": "tests/*,docs/*"

}-

Issue: Code2Prompt is not recognizing my .gitignore file. Solution: Run Code2Prompt from the project root, or specify the .gitignore path with

--gitignore. -

Issue: The generated output is too large for my AI model. Solution: Use

--tokensto check the count, and refine--filteror--excludeoptions. -

Issue: Some files are not being processed. Solution: Check for binary files or exclusion patterns. Use

--case-sensitiveif needed.

- [X] Interactive filtering

- [X] Include system in template to promote re-usability of sub templates.

- [X] Support of input variables

- [ ] Tokens count for Anthropic Models and other models such as LLama3 or Mistral

- [X] Cost Estimations for main LLM providers based on token count

- [ ] Integration with qllm (Quantalogic LLM)

- [ ] Embedding of file summary in SQL-Lite

- [ ] Intelligence selection of file based on an LLM

- [ ] Git power tools (Git diff integration / PR Assisted Review)

Contributions to Code2Prompt are welcome! Please read our Contributing Guide for details on our code of conduct and the process for submitting pull requests.

Code2Prompt is released under the MIT License. See the LICENSE file for details.

⭐ If you find Code2Prompt useful, please give us a star on GitHub! It helps us reach more developers and improve the tool. ⭐

Made with ❤️ by Raphaël MANSUY. Founder of Quantalogic. Creator of qllm.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for code2prompt

Similar Open Source Tools

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

patchwork

PatchWork is an open-source framework designed for automating development tasks using large language models. It enables users to automate workflows such as PR reviews, bug fixing, security patching, and more through a self-hosted CLI agent and preferred LLMs. The framework consists of reusable atomic actions called Steps, customizable LLM prompts known as Prompt Templates, and LLM-assisted automations called Patchflows. Users can run Patchflows locally in their CLI/IDE or as part of CI/CD pipelines. PatchWork offers predefined patchflows like AutoFix, PRReview, GenerateREADME, DependencyUpgrade, and ResolveIssue, with the flexibility to create custom patchflows. Prompt templates are used to pass queries to LLMs and can be customized. Contributions to new patchflows, steps, and the core framework are encouraged, with chat assistants available to aid in the process. The roadmap includes expanding the patchflow library, introducing a debugger and validation module, supporting large-scale code embeddings, parallelization, fine-tuned models, and an open-source GUI. PatchWork is licensed under AGPL-3.0 terms, while custom patchflows and steps can be shared using the Apache-2.0 licensed patchwork template repository.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

cursor-tools

cursor-tools is a CLI tool designed to enhance AI agents with advanced skills, such as web search, repository context, documentation generation, GitHub integration, Xcode tools, and browser automation. It provides features like Perplexity for web search, Gemini 2.0 for codebase context, and Stagehand for browser operations. The tool requires API keys for Perplexity AI and Google Gemini, and supports global installation for system-wide access. It offers various commands for different tasks and integrates with Cursor Composer for AI agent usage.

sec-code-bench

SecCodeBench is a benchmark suite for evaluating the security of AI-generated code, specifically designed for modern Agentic Coding Tools. It addresses challenges in existing security benchmarks by ensuring test case quality, employing precise evaluation methods, and covering Agentic Coding Tools. The suite includes 98 test cases across 5 programming languages, focusing on functionality-first evaluation and dynamic execution-based validation. It offers a highly extensible testing framework for end-to-end automated evaluation of agentic coding tools, generating comprehensive reports and logs for analysis and improvement.

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, enables deployment of conversational service robots in minutes, integrates diverse knowledge bases, offers flexible configuration options, and features an attractive user interface.

llama_index

LlamaIndex is a data framework for building LLM applications. It provides tools for ingesting, structuring, and querying data, as well as integrating with LLMs and other tools. LlamaIndex is designed to be easy to use for both beginner and advanced users, and it provides a comprehensive set of features for building LLM applications.

LLMBox

LLMBox is a comprehensive library designed for implementing Large Language Models (LLMs) with a focus on a unified training pipeline and comprehensive model evaluation. It serves as a one-stop solution for training and utilizing LLMs, offering flexibility and efficiency in both training and utilization stages. The library supports diverse training strategies, comprehensive datasets, tokenizer vocabulary merging, data construction strategies, parameter efficient fine-tuning, and efficient training methods. For utilization, LLMBox provides comprehensive evaluation on various datasets, in-context learning strategies, chain-of-thought evaluation, evaluation methods, prefix caching for faster inference, support for specific LLM models like vLLM and Flash Attention, and quantization options. The tool is suitable for researchers and developers working with LLMs for natural language processing tasks.

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

datadreamer

DataDreamer is an advanced toolkit designed to facilitate the development of edge AI models by enabling synthetic data generation, knowledge extraction from pre-trained models, and creation of efficient and potent models. It eliminates the need for extensive datasets by generating synthetic datasets, leverages latent knowledge from pre-trained models, and focuses on creating compact models suitable for integration into any device and performance for specialized tasks. The toolkit offers features like prompt generation, image generation, dataset annotation, and tools for training small-scale neural networks for edge deployment. It provides hardware requirements, usage instructions, available models, and limitations to consider while using the library.

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

For similar tasks

Awesome-LLM4EDA

LLM4EDA is a repository dedicated to showcasing the emerging progress in utilizing Large Language Models for Electronic Design Automation. The repository includes resources, papers, and tools that leverage LLMs to solve problems in EDA. It covers a wide range of applications such as knowledge acquisition, code generation, code analysis, verification, and large circuit models. The goal is to provide a comprehensive understanding of how LLMs can revolutionize the EDA industry by offering innovative solutions and new interaction paradigms.

DeGPT

DeGPT is a tool designed to optimize decompiler output using Large Language Models (LLM). It requires manual installation of specific packages and setting up API key for OpenAI. The tool provides functionality to perform optimization on decompiler output by running specific scripts.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

SinkFinder

SinkFinder + LLM is a closed-source semi-automatic vulnerability discovery tool that performs static code analysis on jar/war/zip files. It enhances the capability of LLM large models to verify path reachability and assess the trustworthiness score of the path based on the contextual code environment. Users can customize class and jar exclusions, depth of recursive search, and other parameters through command-line arguments. The tool generates rule.json configuration file after each run and requires configuration of the DASHSCOPE_API_KEY for LLM capabilities. The tool provides detailed logs on high-risk paths, LLM results, and other findings. Rules.json file contains sink rules for various vulnerability types with severity levels and corresponding sink methods.

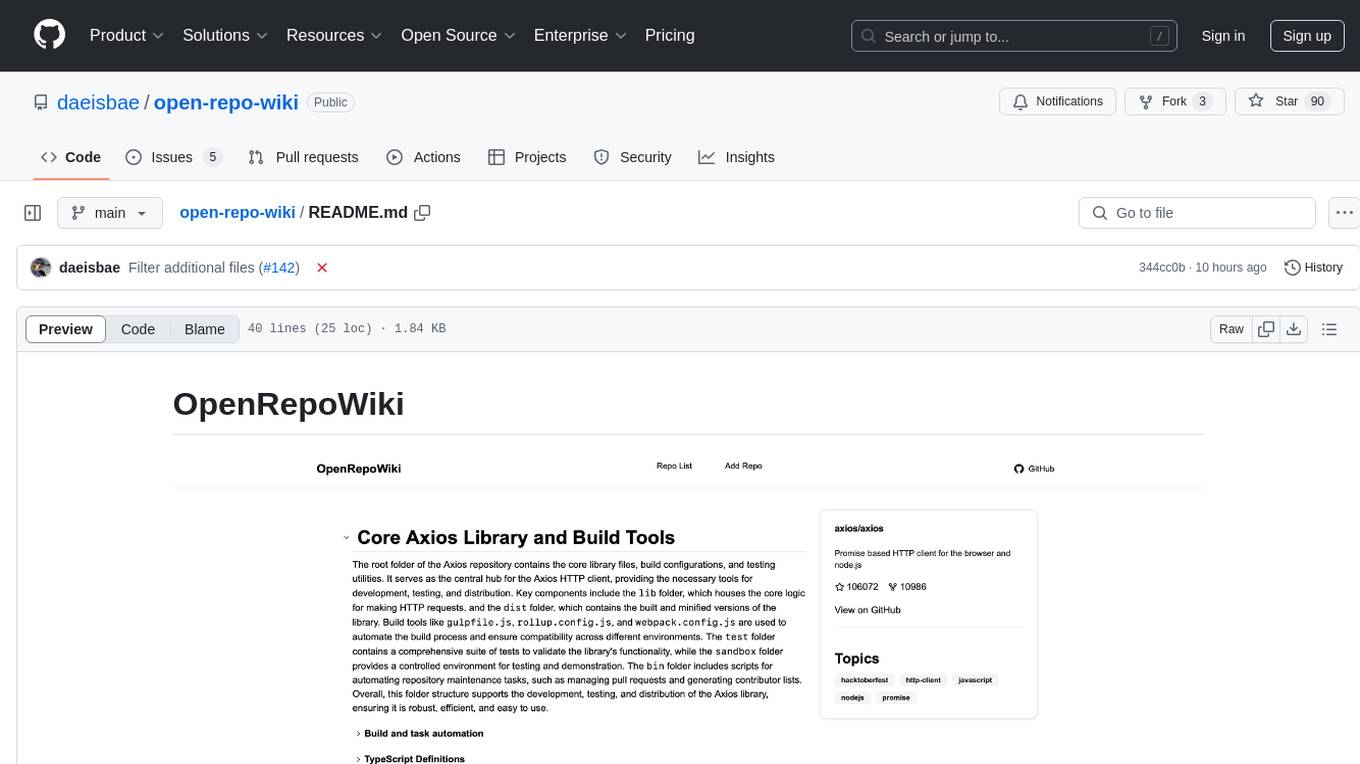

open-repo-wiki

OpenRepoWiki is a tool designed to automatically generate a comprehensive wiki page for any GitHub repository. It simplifies the process of understanding the purpose, functionality, and core components of a repository by analyzing its code structure, identifying key files and functions, and providing explanations. The tool aims to assist individuals who want to learn how to build various projects by providing a summarized overview of the repository's contents. OpenRepoWiki requires certain dependencies such as Google AI Studio or Deepseek API Key, PostgreSQL for storing repository information, Github API Key for accessing repository data, and Amazon S3 for optional usage. Users can configure the tool by setting up environment variables, installing dependencies, building the server, and running the application. It is recommended to consider the token usage and opt for cost-effective options when utilizing the tool.

CodebaseToPrompt

CodebaseToPrompt is a simple tool that converts a local directory into a structured prompt for Large Language Models (LLMs). It allows users to select specific files for code review, analysis, or documentation by exploring and filtering through the file tree in a browser-based interface. The tool generates a formatted output that can be directly used with AI tools, provides token count estimates, and supports local storage for saving selections. Users can easily copy the selected files in the desired format for further use.

air

air is an R formatter and language server written in Rust. It is currently in alpha stage, so users should expect breaking changes in both the API and formatting results. The tool draws inspiration from various sources like roslyn, swift, rust-analyzer, prettier, biome, and ruff. It provides formatters and language servers, influenced by design decisions from these tools. Users can install air using standalone installers for macOS, Linux, and Windows, which automatically add air to the PATH. Developers can also install the dev version of the air CLI and VS Code extension for further customization and development.

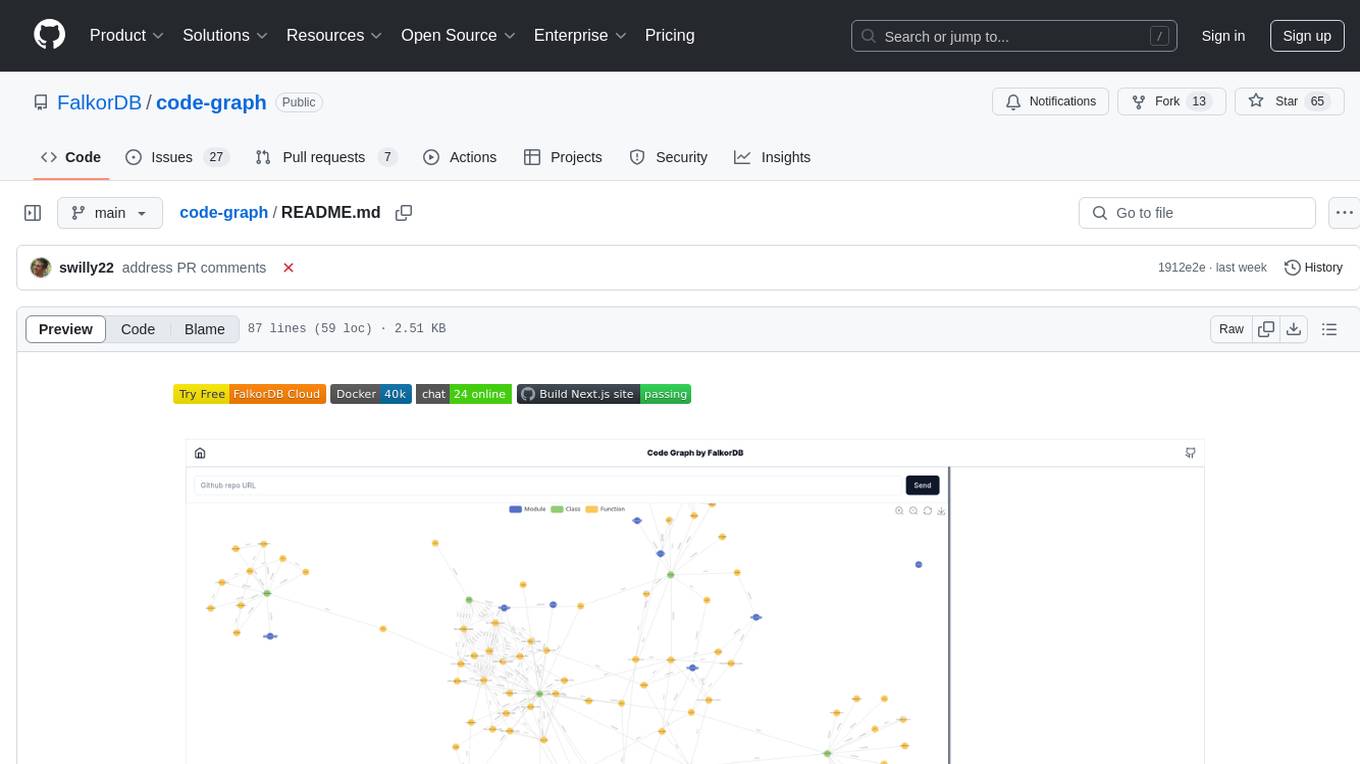

code-graph

Code-graph is a tool composed of FalkorDB Graph DB, Code-Graph-Backend, and Code-Graph-Frontend. It allows users to store and query graphs, manage backend logic, and interact with the website. Users can run the components locally by setting up environment variables and installing dependencies. The tool supports analyzing C & Python source files with plans to add support for more languages in the future. It provides a local repository analysis feature and a live demo accessible through a web browser.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.