Awesome-LLM4EDA

Stars: 63

LLM4EDA is a repository dedicated to showcasing the emerging progress in utilizing Large Language Models for Electronic Design Automation. The repository includes resources, papers, and tools that leverage LLMs to solve problems in EDA. It covers a wide range of applications such as knowledge acquisition, code generation, code analysis, verification, and large circuit models. The goal is to provide a comprehensive understanding of how LLMs can revolutionize the EDA industry by offering innovative solutions and new interaction paradigms.

README:

- We maintain a list of resources that use Large Language Models (LLMs) to solve problems in Electronic Design Automation (EDA).

- LLM4EDA Paper Link

- Also see our maintained list, Awesome Artificial Intelligence for Electronic Design Automation

- Maintained by members of SJTU-Thinklab: Ruizhe Zhong, Xingbo Du

- Users can interact with LLMs for knowledge acquisition and Q&A. An easy-to-use assistant chatbot offers a new interaction paradigm for EDA software.

- ChipNeMo: Domain-Adapted LLMs for Chip Design

- New Interaction Paradigm for Complex EDA Software Leveraging GPT

- From English to PCSEL: LLM helps design and optimize photonic crystal surface emitting lasers

- RapidGPT: Your Ultimate HDL Pair-Designer

- EDA Corpus: A Large Language Model Dataset for Enhanced Interaction with OpenROAD

- Given a natural-language specification and requirements, LLMs generate RTL code and EDA control scripts.

- How to evaluate the quality of generated code remains an open research question, covering syntax correctness, functional equivalence, PPA (power, performance, area), and security.

- ChatEDA: A Large Language Model Powered Autonomous Agent for EDA

- ChipNeMo: Domain-Adapted LLMs for Chip Design

- ChipGPT: How far are we from natural language hardware design

- CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis

- An Empirical Evaluation of Using Large Language Models for Automated Unit Test Generation

- RTLLM: An Open-Source Benchmark for Design RTL Generation with Large Language Model

- GPT4AIGChip: Towards Next-Generation AI Accelerator Design Automation via Large Language Models

- AutoChip: Automating HDL Generation Using LLM Feedback

- Chip-Chat: Challenges and Opportunities in Conversational Hardware Design

- VeriGen: A Large Language Model for Verilog Code Generation

- Generating Secure Hardware using ChatGPT Resistant to CWEs

- The Power of Large Language Models for Wireless Communication System Development: A Case Study on FPGA Platform

- A Deep Learning Framework for Verilog Autocompletion Towards Design and Verification Automation

- RTLCoder: Outperforming GPT-3.5 in Design RTL Generation with Our Open-Source Dataset and Lightweight Solution

- VerilogEval: Evaluating Large Language Models for Verilog Code Generation

- Benchmarking Large Language Models for Automated Verilog RTL Code Generation

- SpecLLM: Exploring Generation and Review of VLSI Design Specification with Large Language Model

- Zero-Shot RTL Code Generation with Attention Sink Augmented Large Language Models

- Make Every Move Count: LLM-based High-Quality RTL Code Generation Using MCTS

- From English to ASIC Hardware Implementation with Large Language Model

- EDA Corpus: A Large Language Model Dataset for Enhanced Interaction with OpenROAD

- CreativEval: Evaluating Creativity of LLM-Based Hardware Code Generation

- AnalogCoder: Analog Circuit Design via Training-Free Code Generation

- Data is all you need: Finetuning LLMs for Chip Design via an Automated design-data augmentation framework

- SynthAI: A Multi Agent Generative AI Framework for Automated Modular HLS Design Generation

- Evaluating LLMs for Hardware Design and Test
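
Functional equivalence, one of the evaluation criteria named above for generated code, can be illustrated with a minimal sketch. It assumes plain Python functions as stand-ins for a golden model and for LLM-generated RTL. The names `reference_adder`, `candidate_adder`, `buggy_adder`, and `find_mismatch`, and the 4-bit width, are hypothetical and not taken from any listed paper. Real flows would typically simulate the HDL against a testbench or use a formal equivalence checker instead.

```python
from itertools import product
from typing import Callable, Optional, Tuple

Adder = Callable[[int, int], int]

WIDTH = 4  # hypothetical operand width, small enough for exhaustive checking
MASK = (1 << WIDTH) - 1


def reference_adder(a: int, b: int) -> int:
    """Golden model: WIDTH-bit wrap-around addition."""
    return (a + b) & MASK


def candidate_adder(a: int, b: int) -> int:
    """Stand-in for generated logic: a ripple-carry adder built bit by bit."""
    result, carry = 0, 0
    for i in range(WIDTH):
        x, y = (a >> i) & 1, (b >> i) & 1
        s = x ^ y ^ carry                     # sum bit uses the incoming carry
        carry = (x & y) | (carry & (x ^ y))   # carry out to the next bit
        result |= s << i
    return result


def buggy_adder(a: int, b: int) -> int:
    """Stand-in for a faulty generation: the carry chain is missing."""
    return (a ^ b) & MASK


def find_mismatch(ref: Adder, dut: Adder) -> Optional[Tuple[int, int]]:
    """Return the first input pair where dut disagrees with ref, or None."""
    for a, b in product(range(1 << WIDTH), repeat=2):
        if ref(a, b) != dut(a, b):
            return (a, b)
    return None


if __name__ == "__main__":
    print(find_mismatch(reference_adder, candidate_adder))  # no counterexample expected
    print(find_mismatch(reference_adder, buggy_adder))      # a counterexample pair
```

Exhaustive comparison only scales to very small input spaces, which is one reason the evaluation question stays open for realistic designs.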

- We also survey the wide application of LLMs in code analysis, such as bug detection and fixing, code summarization, and security checking.

- LLMs have also demonstrated strong ability in verification, e.g., assertion-based verification.

- ChipNeMo: Domain-Adapted LLMs for Chip Design

- LLM4SecHW: Leveraging Domain-Specific Large Language Model for Hardware Debugging

- Unlocking Hardware Security Assurance: The Potential of LLMs

- RTLFixer: Automatically Fixing RTL Syntax Errors with Large Language Models

- LLM-assisted Generation of Hardware Assertions

- Using LLMs to Facilitate Formal Verification of RTL

- DIVAS: An LLM-based End-to-End Framework for SoC Security Analysis and Policy-based Protection

- Fixing Hardware Security Bugs with Large Language Models (On Hardware Security Bug Code Fixes By Prompting Large Language Models)

- LLM for SoC Security: A Paradigm Shift

- The Power of Large Language Models for Wireless Communication System Development: A Case Study on FPGA Platform

- A Deep Learning Framework for Verilog Autocompletion Towards Design and Verification Automation

- SpecLLM: Exploring Generation and Review of VLSI Design Specification with Large Language Model

- AssertLLM: Generating and Evaluating Hardware Verification Assertions from Design Specifications via Multi-LLMs

- Self-HWDebug: Automation of LLM Self-Instructing for Hardware Security Verification

- Data is all you need: Finetuning LLMs for Chip Design via an Automated design-data augmentation framework

- LLMs for Hardware Security: Boon or Bane?

- Large circuit models rely on multimodal circuit representation learning, which aims to provide a comprehensive understanding of a design by harmonizing and extracting insights from varied data sources such as functional specifications, RTL designs, circuit netlists, and physical layouts.

- The Dawn of AI-Native EDA: Promises and Challenges of Large Circuit Models

LLM4EDA: Emerging Progress in Large Language Models for Electronic Design Automation

If you find this repo useful, please cite our paper.

@article{zhong2023llm4eda,
  title={LLM4EDA: Emerging Progress in Large Language Models for Electronic Design Automation},
  author={Zhong, Ruizhe and Du, Xingbo and Kai, Shixiong and Tang, Zhentao and Xu, Siyuan and Zhen, Hui-Ling and Hao, Jianye and Xu, Qiang and Yuan, Mingxuan and Yan, Junchi},
  journal={arXiv preprint arXiv:2401.12224},
  year={2023}
}

Alternative AI tools for Awesome-LLM4EDA

Similar Open Source Tools

awesome-mlops

Awesome MLOps is a curated list of tools related to Machine Learning Operations, covering areas such as AutoML, CI/CD for Machine Learning, Data Cataloging, Data Enrichment, Data Exploration, Data Management, Data Processing, Data Validation, Data Visualization, Drift Detection, Feature Engineering, Feature Store, Hyperparameter Tuning, Knowledge Sharing, Machine Learning Platforms, Model Fairness and Privacy, Model Interpretability, Model Lifecycle, Model Serving, Model Testing & Validation, Optimization Tools, Simplification Tools, Visual Analysis and Debugging, and Workflow Tools. The repository provides a comprehensive collection of tools and resources for individuals and teams working in the field of MLOps.

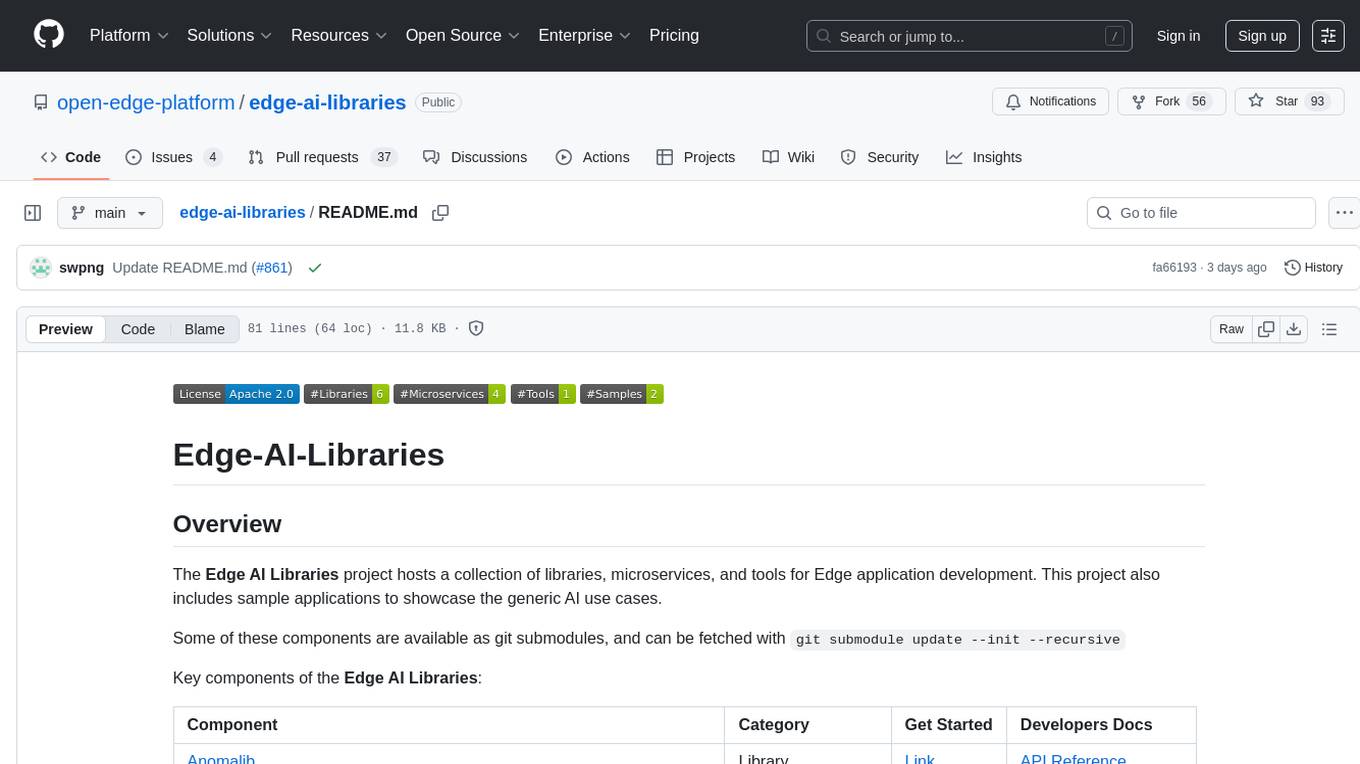

edge-ai-libraries

The Edge AI Libraries project is a collection of libraries, microservices, and tools for Edge application development. It includes sample applications showcasing generic AI use cases. Key components include Anomalib, Dataset Management Framework, Deep Learning Streamer, ECAT EnableKit, EtherCAT Masterstack, FLANN, OpenVINO toolkit, Audio Analyzer, ORB Extractor, PCL, PLCopen Servo, Real-time Data Agent, RTmotion, Audio Intelligence, Deep Learning Streamer Pipeline Server, Document Ingestion, Model Registry, Multimodal Embedding Serving, Time Series Analytics, Vector Retriever, Visual-Data Preparation, VLM Inference Serving, Intel Geti, Intel SceneScape, Visual Pipeline and Platform Evaluation Tool, Chat Question and Answer, Document Summarization, PLCopen Benchmark, PLCopen Databus, Video Search and Summarization, Isolation Forest Classifier, Random Forest Microservices. Visit sub-directories for instructions and guides.

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

LAMBDA

LAMBDA is a code-free multi-agent data analysis system that utilizes large models to address data analysis challenges in complex data-driven applications. It allows users to perform complex data analysis tasks through human language instruction, seamlessly generate and debug code using two key agent roles, integrate external models and algorithms, and automatically generate reports. The system has demonstrated strong performance on various machine learning datasets, enhancing data science practice by integrating human and artificial intelligence.

akeru

Akeru.ai is an open-source AI platform leveraging the power of decentralization. It offers transparent, safe, and highly available AI capabilities. The platform aims to give developers access to open-source and transparent AI resources through its decentralized nature hosted on an edge network. Akeru API introduces features like retrieval, function calling, conversation management, custom instructions, data input optimization, user privacy, testing and iteration, and comprehensive documentation. It is ideal for creating AI agents and enhancing web and mobile applications with advanced AI capabilities. The platform runs on a Bittensor Subnet design that aims to democratize AI technology and promote an equitable AI future. Akeru.ai embraces decentralization challenges to ensure a decentralized and equitable AI ecosystem with security features like watermarking and network pings. The API architecture integrates with technologies like Bun, Redis, and Elysia for a robust, scalable solution.

learn-modern-ai-python

This repository is part of the Certified Agentic & Robotic AI Engineer program, covering the first quarter of the course work. It focuses on Modern AI Python Programming, emphasizing static typing for robust and scalable AI development. The course includes modules on Python fundamentals, object-oriented programming, advanced Python concepts, AI-assisted Python programming, web application basics with Python, and the future of Python in AI. Upon completion, students will be able to write proficient Modern Python code, apply OOP principles, implement asynchronous programming, utilize AI-powered tools, develop basic web applications, and understand the future directions of Python in AI.

ianvs

Ianvs is a distributed synergy AI benchmarking project incubated in KubeEdge SIG AI. It aims to test the performance of distributed synergy AI solutions following recognized standards, providing end-to-end benchmark toolkits, test environment management tools, test case control tools, and benchmark presentation tools. It also collaborates with other organizations to establish comprehensive benchmarks and related applications. The architecture includes critical components like Test Environment Manager, Test Case Controller, Generation Assistant, Simulation Controller, and Story Manager. Ianvs documentation covers quick start, guides, dataset descriptions, algorithms, user interfaces, stories, and roadmap.

tunix

Tunix is a JAX-based library designed for post-training Large Language Models. It provides efficient support for supervised fine-tuning, reinforcement learning, and knowledge distillation. Tunix leverages JAX for accelerated computation and integrates seamlessly with the Flax NNX modeling framework. The library is modular, efficient, and designed for distributed training on accelerators like TPUs. Currently in early development, Tunix aims to expand its capabilities, usability, and performance.

opensourceAI

This repository is a collection of various open source AI projects and topics, each focusing on specific areas such as language models, security, and deepfake technology. It includes projects like privateGPT for building a private version of the GPT language model, AutoGPT for automating training GPT models, and DeepFaceLab for deepfake creation. Explore these repositories to find projects that interest you.

llmariner

LLMariner is an extensible open source platform built on Kubernetes to simplify the management of generative AI workloads. It enables efficient handling of training and inference data within clusters, with OpenAI-compatible APIs for seamless integration with a wide range of AI-driven applications.

psychic

Finic is an open source python-based integration platform designed to simplify integration workflows for both business users and developers. It offers a drag-and-drop UI, a dedicated Python environment for each workflow, and generative AI features to streamline transformation tasks. With a focus on decoupling integration from product code, Finic aims to provide faster and more flexible integrations by supporting custom connectors. The tool is open source and allows deployment to users' own cloud environments with minimal legal friction.

LLM-PLSE-paper

LLM-PLSE-paper is a repository focused on the applications of Large Language Models (LLMs) in Programming Language and Software Engineering (PL/SE) domains. It covers a wide range of topics including bug detection, specification inference and verification, code generation, fuzzing and testing, code model and reasoning, code understanding, IDE technologies, prompting for reasoning tasks, and agent/tool usage and planning. The repository provides a comprehensive collection of research papers, benchmarks, empirical studies, and frameworks related to the capabilities of LLMs in various PL/SE tasks.

openvino

OpenVINO™ is an open-source toolkit for optimizing and deploying AI inference. It provides a common API to deliver inference solutions on various platforms, including CPU, GPU, NPU, and heterogeneous devices. OpenVINO™ supports pre-trained models from Open Model Zoo and popular frameworks like TensorFlow, PyTorch, and ONNX. Key components of OpenVINO™ include the OpenVINO™ Runtime, plugins for different hardware devices, frontends for reading models from native framework formats, and the OpenVINO Model Converter (OVC) for adjusting models for optimal execution on target devices.

geoai

geoai is a Python package designed for utilizing Artificial Intelligence (AI) in the context of geospatial data. It allows users to visualize various types of geospatial data such as vector, raster, and LiDAR data. Additionally, the package offers functionalities for segmenting remote sensing imagery using the Segment Anything Model and classifying remote sensing imagery with deep learning models. With a focus on geospatial AI applications, geoai provides a versatile tool for processing and analyzing spatial data with the power of AI.

For similar tasks

DeGPT

DeGPT is a tool designed to optimize decompiler output using Large Language Models (LLM). It requires manual installation of specific packages and setting up API key for OpenAI. The tool provides functionality to perform optimization on decompiler output by running specific scripts.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

SinkFinder

SinkFinder + LLM is a closed-source semi-automatic vulnerability discovery tool that performs static code analysis on jar/war/zip files. It enhances the capability of LLM large models to verify path reachability and assess the trustworthiness score of the path based on the contextual code environment. Users can customize class and jar exclusions, depth of recursive search, and other parameters through command-line arguments. The tool generates rule.json configuration file after each run and requires configuration of the DASHSCOPE_API_KEY for LLM capabilities. The tool provides detailed logs on high-risk paths, LLM results, and other findings. Rules.json file contains sink rules for various vulnerability types with severity levels and corresponding sink methods.

open-repo-wiki

OpenRepoWiki is a tool designed to automatically generate a comprehensive wiki page for any GitHub repository. It simplifies the process of understanding the purpose, functionality, and core components of a repository by analyzing its code structure, identifying key files and functions, and providing explanations. The tool aims to assist individuals who want to learn how to build various projects by providing a summarized overview of the repository's contents. OpenRepoWiki requires certain dependencies such as Google AI Studio or Deepseek API Key, PostgreSQL for storing repository information, Github API Key for accessing repository data, and Amazon S3 for optional usage. Users can configure the tool by setting up environment variables, installing dependencies, building the server, and running the application. It is recommended to consider the token usage and opt for cost-effective options when utilizing the tool.

CodebaseToPrompt

CodebaseToPrompt is a simple tool that converts a local directory into a structured prompt for Large Language Models (LLMs). It allows users to select specific files for code review, analysis, or documentation by exploring and filtering through the file tree in a browser-based interface. The tool generates a formatted output that can be directly used with AI tools, provides token count estimates, and supports local storage for saving selections. Users can easily copy the selected files in the desired format for further use.

air

air is an R formatter and language server written in Rust. It is currently in alpha stage, so users should expect breaking changes in both the API and formatting results. The tool draws inspiration from various sources like roslyn, swift, rust-analyzer, prettier, biome, and ruff. It provides formatters and language servers, influenced by design decisions from these tools. Users can install air using standalone installers for macOS, Linux, and Windows, which automatically add air to the PATH. Developers can also install the dev version of the air CLI and VS Code extension for further customization and development.

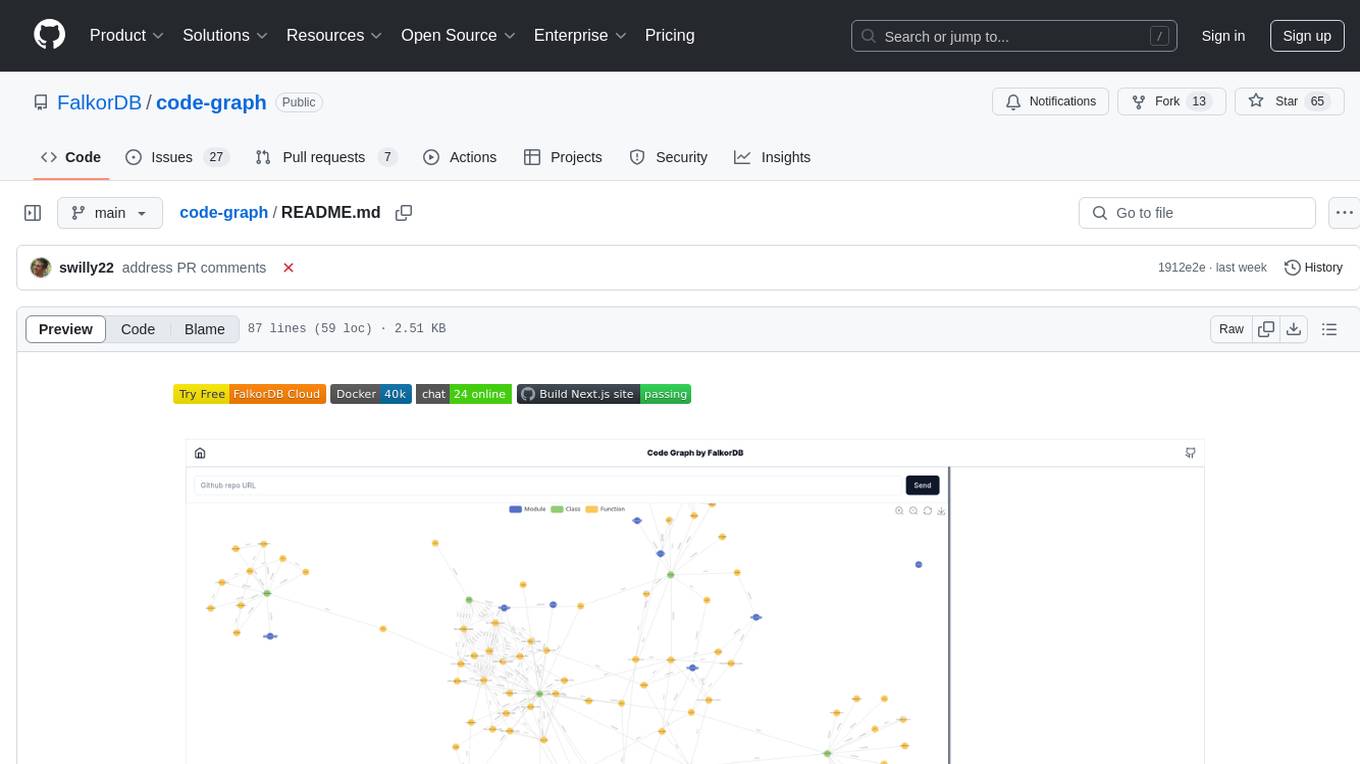

code-graph

Code-graph is a tool composed of FalkorDB Graph DB, Code-Graph-Backend, and Code-Graph-Frontend. It allows users to store and query graphs, manage backend logic, and interact with the website. Users can run the components locally by setting up environment variables and installing dependencies. The tool supports analyzing C & Python source files with plans to add support for more languages in the future. It provides a local repository analysis feature and a live demo accessible through a web browser.

For similar jobs

ztachip

ztachip is a RISCV accelerator designed for vision and AI edge applications, offering up to 20-50x acceleration compared to non-accelerated RISCV implementations. It features an innovative tensor processor hardware to accelerate various vision tasks and TensorFlow AI models. ztachip introduces a new tensor programming paradigm for massive processing/data parallelism. The repository includes technical documentation, code structure, build procedures, and reference design examples for running vision/AI applications on FPGA devices. Users can build ztachip as a standalone executable or a micropython port, and run various AI/vision applications like image classification, object detection, edge detection, motion detection, and multi-tasking on supported hardware.

aigverse

aigverse is a Python infrastructure framework that bridges the gap between logic synthesis and AI/ML applications. It allows efficient representation and manipulation of logic circuits, making it easier to integrate logic synthesis and optimization tasks into machine learning pipelines. Built upon EPFL Logic Synthesis Libraries, particularly mockturtle, aigverse provides a high-level Python interface to state-of-the-art algorithms for And-Inverter Graph (AIG) manipulation and logic synthesis, widely used in formal verification, hardware design, and optimization tasks.