ztachip

Open-source software/hardware platform to build edge AI solutions deployed on FPGA or custom ASIC hardware.

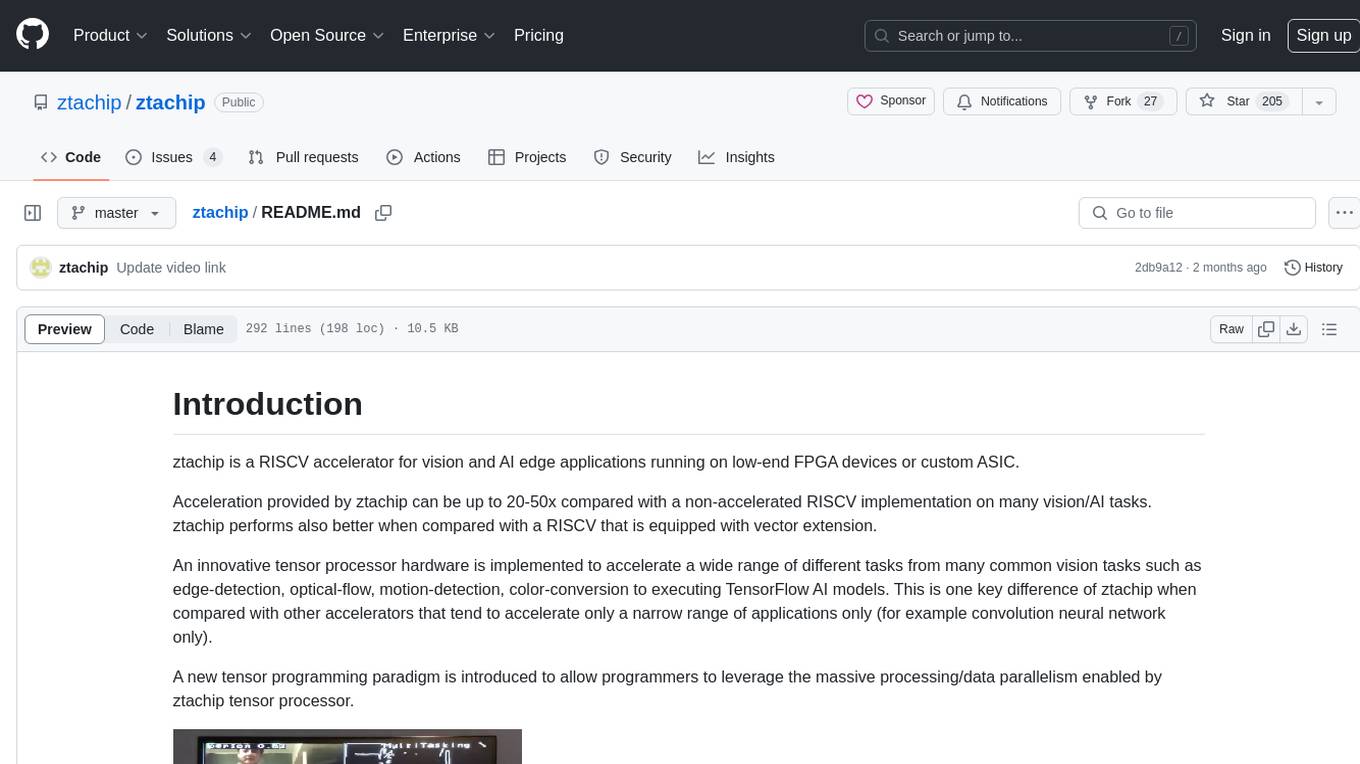

ztachip is a RISCV accelerator designed for vision and AI edge applications, offering up to 20-50x acceleration compared to non-accelerated RISCV implementations. It features an innovative tensor processor hardware to accelerate various vision tasks and TensorFlow AI models. ztachip introduces a new tensor programming paradigm for massive processing/data parallelism. The repository includes technical documentation, code structure, build procedures, and reference design examples for running vision/AI applications on FPGA devices. Users can build ztachip as a standalone executable or a micropython port, and run various AI/vision applications like image classification, object detection, edge detection, motion detection, and multi-tasking on supported hardware.

README:

Ztachip is a Multicore, Data-Aware, Embedded RISC-V AI Accelerator for Edge Inferencing running on low-end FPGA devices or custom ASIC.

Acceleration provided by ztachip can be up to 20-50x compared with a non-accelerated RISCV implementation on many vision/AI tasks. ztachip also performs better than a RISCV that is equipped with the vector extension.

An innovative tensor processor is implemented in hardware to accelerate a wide range of tasks, from common vision tasks such as edge detection, optical flow, motion detection, and color conversion to executing TensorFlow AI models. This is one key difference between ztachip and other accelerators, which tend to accelerate only a narrow range of applications (for example, convolutional neural networks only).

A new tensor programming paradigm is introduced to allow programmers to leverage the massive processing/data parallelism enabled by the ztachip tensor processor.

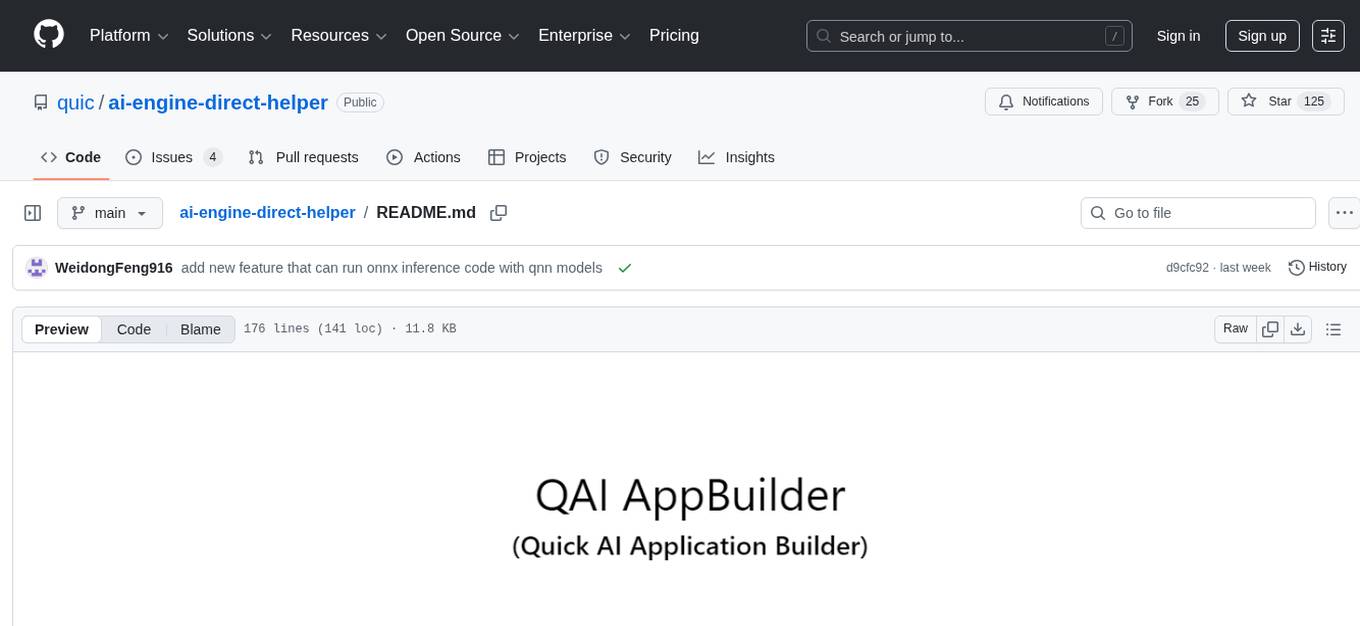

Ztachip consists of the following functional units, tied via an AXI bus to a VexRiscv CPU, a DRAM and other peripherals:

- The Mcore, a Scheduling Processor

- A Dataplane, to stream the next data and instruction to the Tensor Engine

- A Scratch-Pad Memory to temporarily hold data

- A Stream Processor to manage data IO

- A Tensor Engine with 28 Pcores that can be configured to act as a systolic array for in-memory compute. Each Pcore contains a Scalar and a Vector ALU, with 16 threads of execution on private memory.
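To get a feel for the parallelism these numbers imply, the sketch below multiplies out the 28 Pcores and 16 threads from the list above and splits a workload evenly across the resulting hardware threads. The function name `split_work` and the even-split policy are illustrative assumptions; the real scheduling is handled by the Mcore and the ztachip compiler and may work differently.

```python
# Illustrative only: the two figures come from the list above; the
# even-split policy is an assumption, not ztachip's actual scheduler.
NUM_PCORES = 28          # Pcores in the Tensor Engine
THREADS_PER_PCORE = 16   # threads of execution per Pcore
TOTAL_THREADS = NUM_PCORES * THREADS_PER_PCORE


def split_work(num_items):
    """Return the number of items each hardware thread gets under an even split.

    The first (num_items % TOTAL_THREADS) threads receive one extra item.
    """
    base, extra = divmod(num_items, TOTAL_THREADS)
    return [base + 1 if i < extra else base for i in range(TOTAL_THREADS)]


if __name__ == "__main__":
    # Hypothetical workload: one item per pixel of a 640x480 frame.
    shares = split_work(640 * 480)
    print(TOTAL_THREADS, max(shares), min(shares))
```

The point of the sketch is only that work is divided across Pcores and threads; how data is actually laid out in each Pcore's private memory is described in the programmer's guides under Documentation.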

The software provided consists of:

- Ztachip DSL C-like compiler

- AI vision libraries

- Application examples

- Micropython port and examples

.

├── Documentation Overview on HW/SW and programmer's guide for ztachip, pcore, visionai and tensor

├── HW Hardware

│ ├── examples Reference Design: Integration of Vexriscv, Ztachip, DDR3, VGA, Camera, LEDs & Buttons

│ ├── platform Memory IP dependencies for different FPGA synthesis (e.g. Xilinx, Altera) or ASIC

│ ├── simulation RTL Simulation

│ └── src RTL of Ztachip's top design, Scalar/Vector ALU, Dataplane, Pcore, SoC integration etc

├── LICENSE.md

├── micropython Micropython Support

│ ├── examples edge_detection, image_classification, motion_detect, object_detect, point_of_interest etc

│ ├── micropython micropython

│ └── ztachip_port ztachip micropython port

├── README.md

├── SW Software

│ ├── apps AI kernel libraries of canny edge detector, harris corner, neural nets, optical flow etc

│ ├── base C runtime zero, Ztachip application libraries and other utilities

│ ├── compiler Ztachip C-like DSL compiler that generates instructions for the tensor processor

│ ├── fs File for data inference to be downloaded together with the build image

│ ├── linker.ld linker script for Ztachip

│ ├── makefile Main project makefile

│ ├── makefile.kernels Kernel makefile

│ ├── makefile.sim Makefile to test Kernels

│ ├── sim C source to test kernels

│ └── src SW Main (visionai and unit test entry points), SoC drivers and Zta's micropython API

│ This is a good place to learn how to use ztachip's prebuilt vision and AI stack.

└── tools openocd and vexriscv interface descriptions

In HW/platform, a generic implementation is also provided for the simulation environment. Any FPGA/ASIC can be supported with an appropriate implementation of this wrapper layer. Choose the sub-folder that corresponds to your FPGA target.

Also, SW/apps contains many prebuilt acceleration functions that give programmers a fast path to leverage ztachip acceleration. This folder is also a good place to learn how to program your own custom acceleration functions.

The build procedure produces 2 separate images.

One image is a standalone executable in which user applications access ztachip through a native [C/C++ library interface](https://github.com/ztachip/ztachip/raw/master/Documentation/visionai_programmer_guide.pdf).

The second image is a micropython port of ztachip. With this image, applications access ztachip through a Python programming interface.

sudo apt-get install autoconf automake autotools-dev curl python3 libmpc-dev libmpfr-dev libgmp-dev gawk build-essential bison flex texinfo gperf libtool patchutils bc zlib1g-dev libexpat-dev python3-pip

pip3 install numpy

The build below takes a long time.

export PATH=/opt/riscv/bin:$PATH

git clone https://github.com/riscv/riscv-gnu-toolchain

cd riscv-gnu-toolchain

./configure --prefix=/opt/riscv --with-arch=rv32im --with-abi=ilp32

sudo make

git clone https://github.com/ztachip/ztachip.git

export PATH=/opt/riscv/bin:$PATH

cd ztachip

cd SW/compiler

make clean all

cd ../fs

python3 bin2c.py

cd ..

make clean all -f makefile.kernels

make clean all
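The `python3 bin2c.py` step above runs in `SW/fs`, the folder whose files are downloaded together with the build image. The repository's script is not reproduced here; the sketch below only illustrates the general bin-to-C technique, in which raw bytes are rendered as a C array that can be compiled into an image. The function name `bytes_to_c_array` and the output layout are assumptions made for illustration.

```python
def bytes_to_c_array(name, data, per_line=12):
    """Render raw bytes as a C unsigned char array definition.

    Hypothetical helper: the real bin2c.py may use a different layout.
    """
    if not data:
        raise ValueError("data must not be empty")
    lines = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        lines.append("    " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


if __name__ == "__main__":
    print(bytes_to_c_array("example_blob", bytes(range(16))))
```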

You must complete the standalone image build procedure above even if your target is the micropython image. Below is the procedure to build the micropython image after the standalone image build has completed.

git clone https://github.com/micropython/micropython.git

cd micropython/ports

cp -avr <ztachip installation folder>/micropython/ztachip_port .

cd ztachip_port

export PATH=/opt/riscv/bin:$PATH

export ZTACHIP=<ztachip installation folder>

make clean

make
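Both builds depend on the RISC-V toolchain being on `PATH`, and the micropython build also depends on the `ZTACHIP` variable. A small preflight check can catch a missing setting before a long build starts. This is a minimal sketch; the helper name `check_build_env` is hypothetical, and it assumes the toolchain built earlier provides `riscv32-unknown-elf-gcc` alongside the `riscv32-unknown-elf-gdb` used later in this document.

```python
import os
import shutil


def check_build_env(env=None, which=shutil.which):
    """Return a list of problems found; an empty list means the checks passed."""
    env = os.environ if env is None else env
    problems = []
    # The toolchain configured with --prefix=/opt/riscv installs under /opt/riscv/bin.
    if which("riscv32-unknown-elf-gcc") is None:
        problems.append("riscv32-unknown-elf-gcc not found on PATH")
    ztachip = env.get("ZTACHIP")
    if not ztachip:
        problems.append("ZTACHIP is not set")
    elif not os.path.isdir(ztachip):
        problems.append(f"ZTACHIP does not point to a directory: {ztachip}")
    return problems


if __name__ == "__main__":
    for problem in check_build_env():
        print("warning:", problem)
```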

- Download Xilinx Vivado Webpack free edition.

- Create the project file, build the FPGA image and program it to flash as described in the FPGA build procedure.

The following demos are demonstrated on the ArtyA7-100T FPGA development board.

- Image classification with TensorFlow's MobileNet

- Object detection with TensorFlow's SSD-MobileNet

- Edge detection using the Canny algorithm

- Point-of-interest detection using the Harris corner algorithm

- Motion detection

- Multi-tasking with object detection, edge detection, Harris corner and motion detection running at the same time

To run the demo, press button0 to switch between different AI/vision applications.
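The button-driven switching can be pictured as a simple cycle over the demos. The sketch below models that behavior in Python; the class name `DemoSelector`, the ordering of the demos, and the wrap-around after the last one are assumptions for illustration, and the actual logic lives in the standalone application.

```python
# Demo names taken from the list above; their order here is an assumption.
DEMOS = [
    "image classification",
    "object detection",
    "edge detection",
    "point-of-interest",
    "motion detection",
    "multi-tasking",
]


class DemoSelector:
    """Cycle through demos, advancing by one on each button0 press."""

    def __init__(self, demos=DEMOS):
        self._demos = list(demos)
        self._index = 0

    @property
    def current(self):
        return self._demos[self._index]

    def on_button0(self):
        # Wrap around to the first demo after the last one (assumed behavior).
        self._index = (self._index + 1) % len(self._demos)
        return self.current


if __name__ == "__main__":
    selector = DemoSelector()
    print(selector.current)
    print(selector.on_button0())
```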

The reference design example requires the hardware components below...

Attach the VGA and camera modules to the Arty-A7 board according to the picture below.

Connect the camera module to the Arty board according to the picture below.

If you are running ztachip's micropython image, then you need to connect to the serial port. Arty-A7 provides serial port connectivity via USB. Serial port flow control must be disabled.

sudo minicom -w -D /dev/ttyUSB1

Note: After connecting to the serial port for the first time, reset the board again (press the button next to the USB port and wait for the LED to turn green), since the USB serial must be the first device to connect to USB before ztachip.

In this example, we will load the program using the GDB debugger and JTAG.

sudo apt-get install libtool automake libusb-1.0-0-dev texinfo libusb-dev libyaml-dev pkg-config

git clone https://github.com/SpinalHDL/openocd_riscv

cd openocd_riscv

./bootstrap

./configure --enable-ftdi --enable-dummy

make

cp <ztachip installation folder>/tools/openocd/soc_init.cfg .

cp <ztachip installation folder>/tools/openocd/usb_connect.cfg .

cp <ztachip installation folder>/tools/openocd/xilinx-xc7.cfg .

cp <ztachip installation folder>/tools/openocd/jtagspi.cfg .

cp <ztachip installation folder>/tools/openocd/cpu0.yaml .
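The five `cp` commands above can also be done in one step with a check that nothing is missing. This is a minimal sketch; the helper name `copy_openocd_configs` is hypothetical, and the file names are the ones listed above.

```python
import shutil
from pathlib import Path

# Files copied by the cp commands above.
OPENOCD_FILES = [
    "soc_init.cfg",
    "usb_connect.cfg",
    "xilinx-xc7.cfg",
    "jtagspi.cfg",
    "cpu0.yaml",
]


def copy_openocd_configs(ztachip_dir, dest_dir):
    """Copy the OpenOCD config files from <ztachip>/tools/openocd into dest_dir."""
    src = Path(ztachip_dir) / "tools" / "openocd"
    missing = [name for name in OPENOCD_FILES if not (src / name).is_file()]
    if missing:
        raise FileNotFoundError(f"missing in {src}: {', '.join(missing)}")
    dest = Path(dest_dir)
    return [Path(shutil.copy(src / name, dest / name)) for name in OPENOCD_FILES]
```

Call it with the ztachip installation folder and the `openocd_riscv` folder, for example `copy_openocd_configs("/path/to/ztachip", ".")`, where the path is a placeholder for your own checkout.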

Make sure the green LED below the reset button (near the USB connector) is on. This indicates that the FPGA has been loaded correctly. Then launch OpenOCD to provide JTAG connectivity for the GDB debugger.

cd <openocd_riscv installation folder>

sudo src/openocd -f usb_connect.cfg -c 'set MURAX_CPU0_YAML cpu0.yaml' -f soc_init.cfg

Open another terminal, then issue commands below to upload the standalone image

export PATH=/opt/riscv/bin:$PATH

cd <ztachip installation folder>/SW/src

riscv32-unknown-elf-gdb ../build/ztachip.elf

Open another terminal, then issue commands below to upload the micropython image.

export PATH=/opt/riscv/bin:$PATH

cd <Micropython installation folder>/ports/ztachip_port

riscv32-unknown-elf-gdb ./build/firmware.elf

From the GDB debugger prompt, issue the commands below. This step takes some time since some AI models are also transferred.

set pagination off

target remote localhost:3333

set remotetimeout 60

set arch riscv:rv32

monitor reset halt

load

After successfully loading the program, issue the command below at the GDB prompt.

continue
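Typing the GDB commands above by hand on every upload gets tedious, so they can be collected into a command file and passed to GDB with its `-x` option. The sketch below renders that file's contents; the function name `render_gdb_script` and the file name `load.gdb` are hypothetical, and the commands are exactly the ones listed above.

```python
# GDB commands from the steps above, in the same order.
GDB_LOAD_COMMANDS = [
    "set pagination off",
    "target remote localhost:3333",
    "set remotetimeout 60",
    "set arch riscv:rv32",
    "monitor reset halt",
    "load",
    "continue",
]


def render_gdb_script(commands=GDB_LOAD_COMMANDS):
    """Return the text of a GDB command file, one command per line."""
    return "\n".join(commands) + "\n"


if __name__ == "__main__":
    with open("load.gdb", "w", encoding="ascii") as script:
        script.write(render_gdb_script())
```

With the file written, the standalone image could then be uploaded with `riscv32-unknown-elf-gdb -x load.gdb ../build/ztachip.elf`, provided OpenOCD is already running in the other terminal.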

If you are running the standalone image, press button0 to switch between different AI/vision applications. The sample application running is implemented in vision_ai.cpp

If you are running the micropython image, Micropython allows you to enter Python code in paste mode at the serial port.

To use paste mode, hit Ctrl+E, paste one of the examples to the serial port, then hit Ctrl+D to execute the Python code.

Hit any button to return to the Micropython prompt.
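When driving the serial port from a script instead of a terminal, the paste-mode keystrokes map to single control bytes: Ctrl+E is `0x05` and Ctrl+D is `0x04`. The sketch below wraps a Python source string accordingly; the function name `frame_for_paste_mode` is hypothetical, and actually writing the bytes to the serial device is left out.

```python
CTRL_E = b"\x05"  # enters paste mode at the Micropython prompt
CTRL_D = b"\x04"  # ends paste mode and executes the pasted code


def frame_for_paste_mode(source):
    """Wrap Python source text in the control bytes used by paste mode."""
    return CTRL_E + source.encode("utf-8") + CTRL_D


if __name__ == "__main__":
    payload = frame_for_paste_mode("print('hello from ztachip')\n")
    print(payload)
```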

Click here for procedure on how to port ztachip and its applications to other FPGA/ASIC and SOC.

First build the example test program for simulation. The example test program is under SW/apps/test and SW/sim.

export PATH=/opt/riscv/bin:$PATH

cd ztachip

cd SW/compiler

make clean all

cd ..

make clean all -f makefile.kernels

make clean all -f makefile.sim

Copy the generated image /SW/build/ztachip_sim.hex to the folder where you run your simulator.

This image will be loaded to the simulated memory.

Then compile all the RTL code below for simulation:

HW/src

HW/platform/simulation

HW/simulation

HW/riscv/sim

The top component of your simulation is HW/simulation/main.vhd

Provide clock to main:clk

main:led_out should blink every time a test passes.
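If the simulator can export `main:led_out` as a sequence of sampled values, the number of passed tests can be estimated by counting blinks. This is a minimal sketch that assumes the signal idles low and that each passed test produces one low-to-high transition; the function name `count_blinks` and the sample format are hypothetical.

```python
def count_blinks(samples):
    """Count 0 -> 1 transitions in a sequence of sampled led_out values."""
    blinks = 0
    previous = 0  # assumed idle level before the first sample
    for value in samples:
        level = 1 if value else 0
        if level == 1 and previous == 0:
            blinks += 1
        previous = level
    return blinks


if __name__ == "__main__":
    # Hypothetical trace with three pulses.
    print(count_blinks([0, 1, 1, 0, 0, 1, 0, 1]))
```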

This project is free to use. You can open an issue or a discussion on GitHub. For business consulting and support, please contact us.

Follow ztachip on TwitterFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ztachip

Similar Open Source Tools

ztachip

ztachip is a RISCV accelerator designed for vision and AI edge applications, offering up to 20-50x acceleration compared to non-accelerated RISCV implementations. It features an innovative tensor processor hardware to accelerate various vision tasks and TensorFlow AI models. ztachip introduces a new tensor programming paradigm for massive processing/data parallelism. The repository includes technical documentation, code structure, build procedures, and reference design examples for running vision/AI applications on FPGA devices. Users can build ztachip as a standalone executable or a micropython port, and run various AI/vision applications like image classification, object detection, edge detection, motion detection, and multi-tasking on supported hardware.

frame-codebase

The Frame Firmware & RTL Codebase is a comprehensive repository containing code for the Frame hardware system architecture. It includes sections for nRF52 Application, nRF52 Bootloader, and FPGA RTL. The nRF52 handles system operation, Lua scripting, Bluetooth networking, AI tasks, and power management, while the FPGA accelerates graphics and camera processing. The repository provides instructions for firmware development, debugging in VSCode, and FPGA development using tools like ARM GCC Toolchain, nRF Command Line Tools, Yosys, Project Oxide, and nextpnr. Users can build and flash projects for nRF52840 DK, modify FPGA RTL, and access pre-built accelerators bundled in the repo.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

ai-engine-direct-helper

A simple way to build AI applications based on Qualcomm® AI Runtime SDK. QAI AppBuilder simplifies the deployment of QNN models by encapsulating model execution APIs into simplified interfaces for loading models onto NPU/HTP and performing inference. It supports both C++ and Python projects, Windows and Linux platforms, Genie (Large Language Model), LLM on CPU & NPU, multimodal LLM, float & native input/output data, multi-graph, LoRA, multiple models, inputs & outputs. It makes app development easier, model testing faster, and provides plenty of sample code.

cortex

Nitro is a high-efficiency C++ inference engine for edge computing, powering Jan. It is lightweight and embeddable, ideal for product integration. The binary of nitro after zipped is only ~3mb in size with none to minimal dependencies (if you use a GPU need CUDA for example) make it desirable for any edge/server deployment.

InfiniStore

InfiniStore is an open-source high-performance KV store designed to support LLM Inference clusters. It provides high-performance and low-latency KV cache transfer and reuse among inference nodes. In addition to inference clusters, it can be used as a standalone KV store for integration with LLM training or inference services. InfiniStore is currently integrated with vLLM via LMCache and is in progress for integration with SGLang and other inference engines.

xlang

XLang™ is a cutting-edge language designed for AI and IoT applications, offering exceptional dynamic and high-performance capabilities. It excels in distributed computing and seamless integration with popular languages like C++, Python, and JavaScript. Notably efficient, running 3 to 5 times faster than Python in AI and deep learning contexts. Features optimized tensor computing architecture for constructing neural networks through tensor expressions. Automates tensor data flow graph generation and compilation for specific targets, enhancing GPU performance by 6 to 10 times in CUDA environments.

batteries-included

Batteries Included is an all-in-one platform for building and running modern applications, simplifying cloud infrastructure complexity. It offers production-ready capabilities through an intuitive interface, focusing on automation, security, and enterprise-grade features. The platform includes databases like PostgreSQL and Redis, AI/ML capabilities with Jupyter notebooks, web services deployment, security features like SSL/TLS management, and monitoring tools like Grafana dashboards. Batteries Included is designed to streamline infrastructure setup and management, allowing users to concentrate on application development without dealing with complex configurations.

eShopSupport

eShopSupport is a sample .NET application showcasing common use cases and development practices for building AI solutions in .NET, specifically Generative AI. It demonstrates a customer support application for an e-commerce website using a services-based architecture with .NET Aspire. The application includes support for text classification, sentiment analysis, text summarization, synthetic data generation, and chat bot interactions. It also showcases development practices such as developing solutions locally, evaluating AI responses, leveraging Python projects, and deploying applications to the Cloud.

blinkid-react-native

BlinkID SDK wrapper for React Native provides best-in-class ID scanning software for cross-platform apps built with React Native. It offers complete guidance on installing and linking BlinkID library with iOS and Android apps. The SDK requires a valid license key for scanning, with offline data extraction. It supports React Native v0.71.2 and includes installation and linking instructions for iOS and Android. The repository also contains a script to create a sample React Native project and dependencies. Video tutorials demonstrate using documentVerificationOverlay and CombinedRecognizer for scanning various document types.

OmniSteward

OmniSteward is an AI-powered steward system based on large language models that can interact with users through voice or text to help control smart home devices and computer programs. It supports multi-turn dialogue, tool calling for complex tasks, multiple LLM models, voice recognition, smart home control, computer program management, online information retrieval, command line operations, and file management. The system is highly extensible, allowing users to customize and share their own tools.

sbnb

Sbnb Linux is a minimalist Linux distribution designed for bare-metal servers, offering fast tunnels for remote connections. It supports confidential computing and is ideal for environments from home labs to distributed data centers. The OS runs in memory, is immutable, and features a predictable update cadence. Users can deploy popular AI tools on bare metal using Sbnb Linux in an automated way. The system is resilient to power outages and supports flexible environments with Docker containers. Sbnb Linux is built with Buildroot, ensuring easy maintenance and updates.

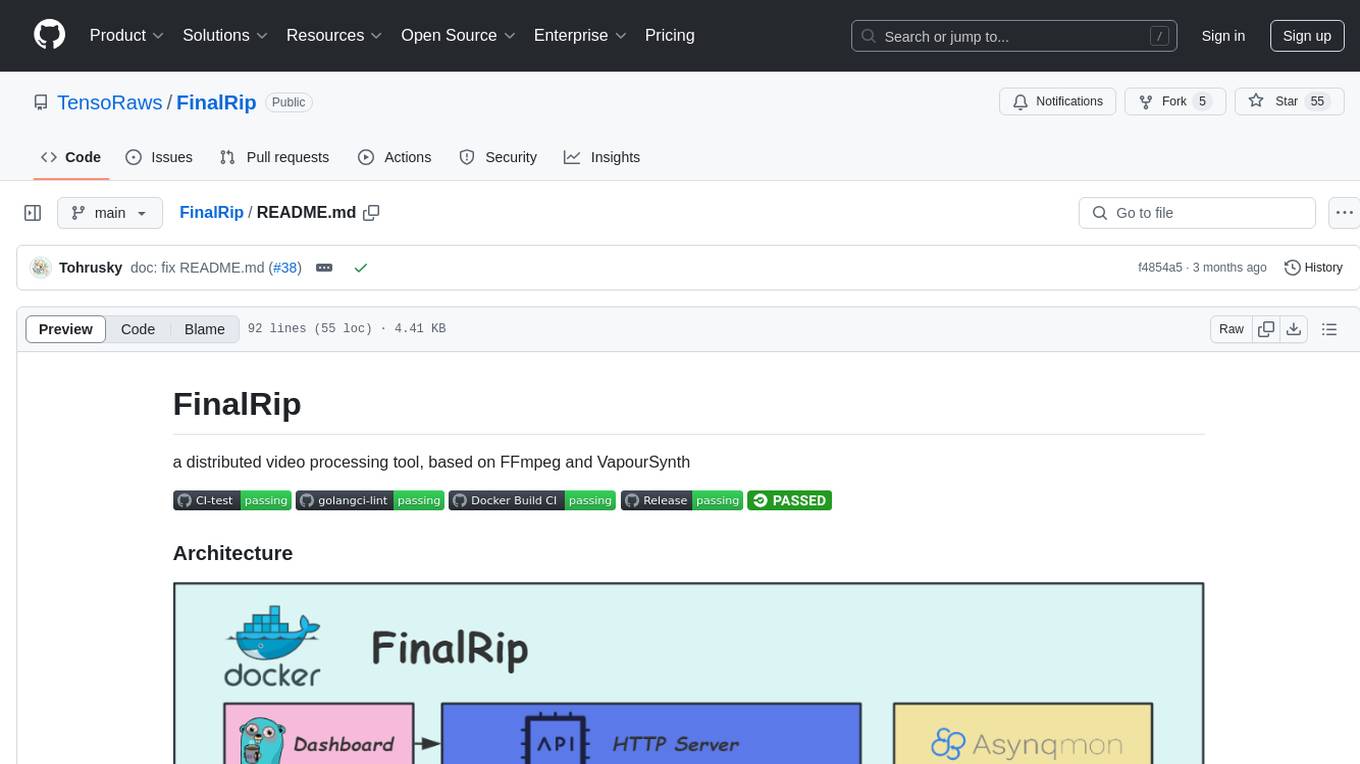

FinalRip

FinalRip is a distributed video processing tool based on FFmpeg and VapourSynth. It cuts the original video into multiple clips, processes each clip in parallel, and merges them into the final video. Users can deploy the system in a distributed way, configure settings via environment variables or remote config files, and develop/test scripts in the vs-playground environment. It supports Nvidia GPU, AMD GPU with ROCm support, and provides a dashboard for selecting compatible scripts to process videos.

anthrax-ai

AnthraxAI is a Vulkan-based game engine that allows users to create and develop 3D games. The engine provides features such as scene selection, camera movement, object manipulation, debugging tools, audio playback, and real-time shader code updates. Users can build and configure the project using CMake and compile shaders using the glslc compiler. The engine supports building on both Linux and Windows platforms, with specific dependencies for each. Visual Studio Code integration is available for building and debugging the project, with instructions provided in the readme for setting up the workspace and required extensions.

pyrfuniverse

pyrfuniverse is a python package used to interact with RFUniverse simulation environment. It is developed with reference to ML-Agents and produce new features. The package allows users to work with RFUniverse for simulation purposes, providing tools and functionalities to interact with the environment and create new features.

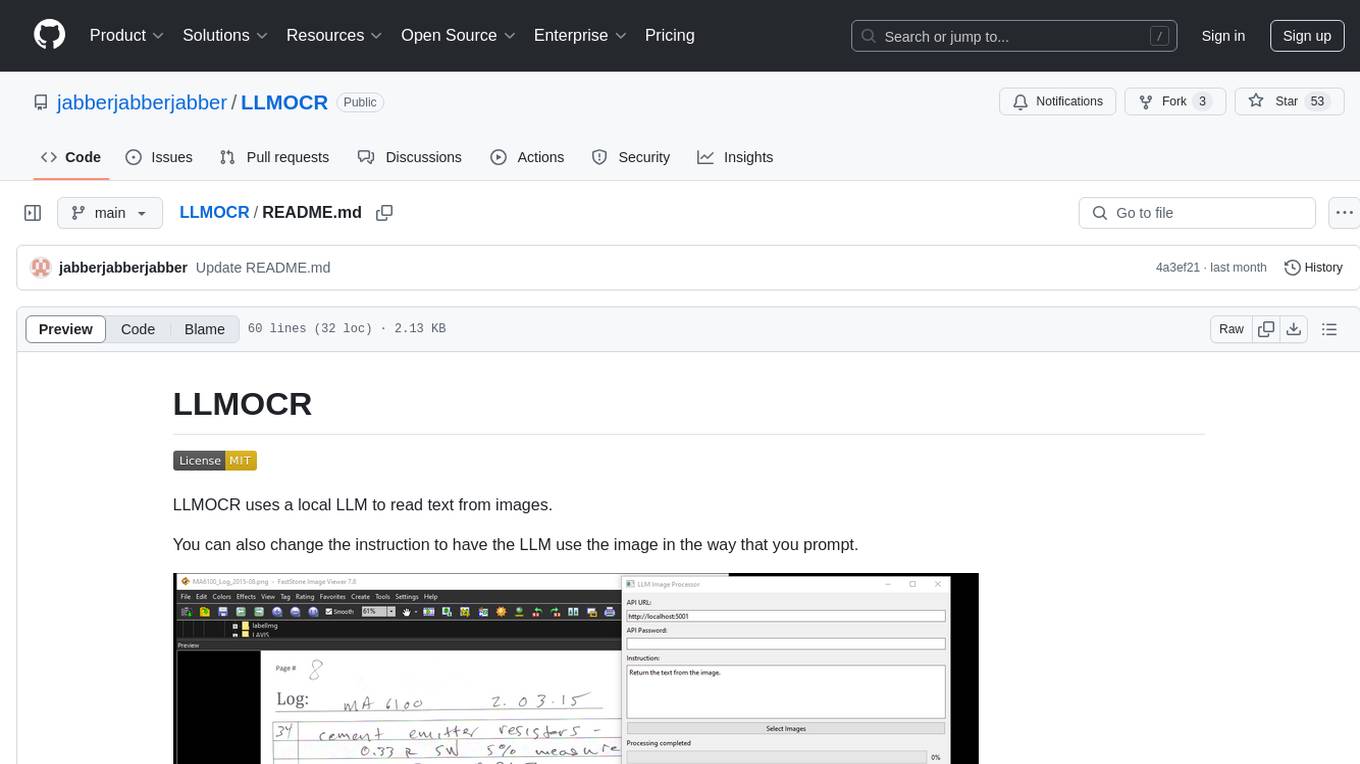

LLMOCR

LLMOCR is a tool that utilizes a local Large Language Model (LLM) to extract text from images. It offers a user-friendly GUI and supports GPU acceleration for faster inference. The tool is cross-platform, compatible with Windows, macOS ARM, and Linux. Users can prompt the LLM to process images in a customized way. The processing is done locally on the user's machine, ensuring data privacy and security. LLMOCR requires Python 3.8 or higher and KoboldCPP for installation and operation.

For similar tasks

AIOsense

AIOsense is an all-in-one sensor that is modular, affordable, and easy to solder. It is designed to be an alternative to commercially available sensors and focuses on upgradeability. AIOsense is cheaper and better than most commercial sensors and supports a variety of sensors and modules, including: - (RGB)-LED - Barometer - Breath VOC equivalent - Buzzer / Beeper - CO² equivalent - Humidity sensor - Light / Illumination sensor - PIR motion sensor - Temperature sensor - mmWave / Radar sensor Upcoming features include full voice assistant support, microphone, and speaker. All supported sensors & modules are listed in the documentation. AIOsense has a low power consumption, with an idle power consumption of 0.45W / 0.09A on a fully equipped board. Without a mmWave sensor, the idle power consumption is around 0.11W / 0.02A. To get started with AIOsense, you can refer to the documentation. If you have any questions, you can open an issue.

viseron

Viseron is a self-hosted, local-only NVR and AI computer vision software that provides features such as object detection, motion detection, and face recognition. It allows users to monitor their home, office, or any other place they want to keep an eye on. Getting started with Viseron is easy by spinning up a Docker container and editing the configuration file using the built-in web interface. The software's functionality is enabled by components, which can be explored using the Component Explorer. Contributors are welcome to help with implementing open feature requests, improving documentation, and answering questions in issues or discussions. Users can also sponsor Viseron or make a one-time donation.

ztachip

ztachip is a RISCV accelerator designed for vision and AI edge applications, offering up to 20-50x acceleration compared to non-accelerated RISCV implementations. It features an innovative tensor processor hardware to accelerate various vision tasks and TensorFlow AI models. ztachip introduces a new tensor programming paradigm for massive processing/data parallelism. The repository includes technical documentation, code structure, build procedures, and reference design examples for running vision/AI applications on FPGA devices. Users can build ztachip as a standalone executable or a micropython port, and run various AI/vision applications like image classification, object detection, edge detection, motion detection, and multi-tasking on supported hardware.

awesome-pi-agent

Awesome Pi Agent is a versatile and powerful tool for building intelligent agents on Raspberry Pi. It provides a framework for developing AI-powered applications that can interact with the physical world through sensors and actuators. With a focus on simplicity and extensibility, this tool enables users to create a wide range of smart devices, from home automation systems to robotics projects. The agent can be easily customized and integrated with various AI algorithms and libraries, making it suitable for both beginners and advanced users interested in exploring the intersection of AI and IoT technologies.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.