ai-exploits

A collection of real world AI/ML exploits for responsibly disclosed vulnerabilities

Stars: 1303

AI Exploits is a repository that showcases practical attacks against AI/Machine Learning infrastructure, aiming to raise awareness about vulnerabilities in the AI/ML ecosystem. It contains exploits and scanning templates for responsibly disclosed vulnerabilities affecting machine learning tools, including Metasploit modules, Nuclei templates, and CSRF templates. Users can use the provided Docker image to easily run the modules and templates. The repository also provides guidelines for using Metasploit modules, Nuclei templates, and CSRF templates to exploit vulnerabilities in machine learning tools.

README:

The AI world has a security problem, and it's not just in the inputs given to LLMs such as ChatGPT. Based on research done by Protect AI and independent security experts on the Huntr Bug Bounty Platform, there are far more impactful and practical attacks against the tools, libraries, and frameworks used to build, train, and deploy machine learning models. Many of these attacks lead to complete system takeovers and/or loss of sensitive data, models, or credentials, most often without the need for authentication.

With the release of this repository, Protect AI hopes to show the Information Security community what practical attacks against AI/Machine Learning infrastructure look like in the real world and to raise awareness of the number of vulnerable components that currently exist in the AI/ML ecosystem. More vulnerabilities can be found here:

- November Vulnerability Report

- December Vulnerability Report

- January Vulnerability Report

- February Vulnerability Report

- March Vulnerability Report

- April Vulnerability Report

- May Vulnerability Report

This repository, ai-exploits, is a collection of exploits and scanning templates for responsibly disclosed vulnerabilities affecting machine learning tools.

Each vulnerable tool has a number of subfolders containing three types of utilities: Metasploit modules, Nuclei templates and CSRF templates. Metasploit modules are for security professionals looking to exploit the vulnerabilities and Nuclei templates are for scanning a large number of remote servers to determine if they're vulnerable.

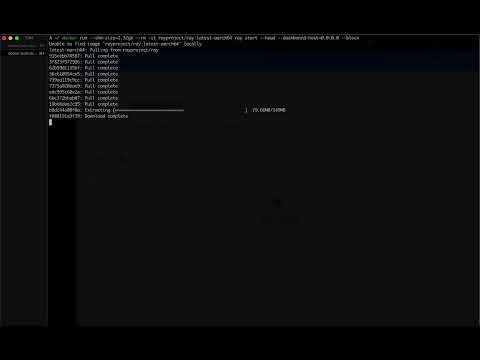

Video demonstrating running one of the Metasploit modules against Ray:

The easiest way to use the modules and scanning templates is to build and run the Docker image provided by the Dockerfile in this repository. The Docker image will have Metasploit and Nuclei already installed along with all the necessary configuration.

- Build the image:

git clone https://github.com/protectai/ai-exploits && cd ai-exploits

docker build -t protectai/ai-exploits .

- Run the Docker image:

docker run -it --rm protectai/ai-exploits /bin/bash

The latter command will drop you into a bash session in the container with msfconsole and nuclei ready to go.

Start the Metasploit console (the new modules will be available under the exploits/protectai category), load a module, set the options, and run the exploit.

msfconsole

msf6 > use exploit/protectai/ray_job_rce

msf6 exploit(protectai/ray_job_rce) > set RHOSTS <target IP>

msf6 exploit(protectai/ray_job_rce) > run

To use the modules with a Metasploit installation outside the Docker container, create a folder ~/.msf4/modules/exploits/protectai and copy the exploit modules into it.

mkdir -p ~/.msf4/modules/exploits/protectai

cp ai-exploits/ray/msfmodules/* ~/.msf4/modules/exploits/protectai
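The mkdir and cp steps above can also be scripted. The following is a minimal sketch, not part of the repository: the function name `install_modules` is hypothetical, and the paths are the ones from the commands above, assuming the repository was cloned into the current directory.

```python
import shutil
from pathlib import Path


def install_modules(source_dir: Path, dest_dir: Path) -> list:
    """Copy every regular file in source_dir into dest_dir, creating dest_dir if needed."""
    dest_dir.mkdir(parents=True, exist_ok=True)  # same effect as `mkdir -p`
    copied = []
    for item in sorted(source_dir.iterdir()):
        if item.is_file():  # subdirectories are skipped, as with a plain `cp *`
            copied.append(Path(shutil.copy2(item, dest_dir / item.name)))
    return copied


if __name__ == "__main__":
    # Paths taken from the commands above; adjust to where the repository was cloned.
    src = Path("ai-exploits/ray/msfmodules")
    dst = Path.home() / ".msf4" / "modules" / "exploits" / "protectai"
    for path in install_modules(src, dst):
        print(f"installed {path}")
```

Running the script once per tool folder (for example ray, mlflow) would place all modules under the same protectai category.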

msfconsole

msf6 > use exploit/protectai/<exploit_name.py>

Nuclei is a vulnerability scanning engine which can be used to scan large numbers of servers for known vulnerabilities in web applications and networks.

Navigate to a Nuclei templates folder, such as ai-exploits/mlflow/nuclei-templates. In the Docker container the templates are stored in the /root/nuclei-templates folder. Then point nuclei at the template file and the target server.

cd ai-exploits/mlflow/nuclei-templates

nuclei -t mlflow-lfi.yaml -u http://<target>:<port>
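The command above scans a single target. For a larger set of servers, nuclei can read targets from a file through its `-l` option (one URL per line). The sketch below shows one way to prepare that file and the matching command line; the helper names `write_targets` and `build_nuclei_command` and the example addresses are hypothetical, and it assumes nuclei is on the PATH and that every listed host is one you are authorized to test.

```python
import subprocess
from pathlib import Path


def write_targets(targets, path: Path) -> Path:
    """Write one target per line, dropping blanks and duplicates while keeping order."""
    unique = list(dict.fromkeys(t.strip() for t in targets if t.strip()))
    path.write_text("\n".join(unique) + "\n")
    return path


def build_nuclei_command(template: str, targets_file: str) -> list:
    """Return the argv for scanning every target in targets_file with one template."""
    # -t selects the template, -l reads the target list from a file.
    return ["nuclei", "-t", template, "-l", targets_file]


if __name__ == "__main__":
    # Hypothetical lab addresses, used only for illustration.
    targets_path = write_targets(
        ["http://10.0.0.5:5000", "http://10.0.0.6:5000"], Path("targets.txt")
    )
    cmd = build_nuclei_command("mlflow-lfi.yaml", str(targets_path))
    subprocess.run(cmd, check=True)
```

Keeping the command construction separate from the `subprocess.run` call makes it easy to print or review the exact command before anything is scanned.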

Cross-Site Request Forgery (CSRF) vulnerabilities enable attackers to stand up a web server hosting a malicious HTML page that will execute a request to the target server on behalf of the victim. This is a common attack vector for exploiting vulnerabilities in web applications, including web applications which are only exposed on the localhost interface and not to the broader network. Below is a simple demo example of how to use a CSRF template to exploit a vulnerability in a web application.

Start a web server in the csrf-templates folder. Python allows one to stand up a simple web server in any directory. Navigate to the template folder and start the server.

cd ai-exploits/ray/csrf-templates

python3 -m http.server 9999

Now visit the web server address you just stood up (http://127.0.0.1:9999) and hit F12 to open the developer tools, then click the Network tab. Click the link to ray-cmd-injection-csrf.html. You should see that the browser sent a request to the vulnerable server on your behalf.
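The static file server started with `python3 -m http.server 9999` can also be launched from a script using the same standard-library module. This is a minimal sketch under stated assumptions: `start_static_server` is a hypothetical helper name, and unlike the command-line form, which listens on all interfaces by default, it binds to 127.0.0.1 only.

```python
import functools
import http.server
import threading


def start_static_server(directory: str, port: int = 9999, host: str = "127.0.0.1"):
    """Serve the files in `directory` on host:port from a background thread."""
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    server = http.server.ThreadingHTTPServer((host, port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    # Folder name taken from the text above; adjust to your checkout location.
    srv = start_static_server("ai-exploits/ray/csrf-templates")
    print(f"Serving on http://{srv.server_address[0]}:{srv.server_address[1]}")
    try:
        threading.Event().wait()  # keep the main thread alive until Ctrl+C
    except KeyboardInterrupt:
        srv.shutdown()
        srv.server_close()
```

Passing `port=0` lets the operating system pick a free port, which is then available as `srv.server_address[1]`.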

We welcome contributions to this repository. Please read our Contribution Guidelines for more information on how to contribute.

This project is licensed under the Apache 2.0 License.

Alternative AI tools for ai-exploits

Similar Open Source Tools

cluster-toolkit

Cluster Toolkit is an open-source software by Google Cloud for deploying AI/ML and HPC environments on Google Cloud. It allows easy deployment following best practices, with high customization and extensibility. The toolkit includes tutorials, examples, and documentation for various modules designed for AI/ML and HPC use cases.

vertex-ai-creative-studio

GenMedia Creative Studio is an application showcasing the capabilities of Google Cloud Vertex AI generative AI creative APIs. It includes features like Gemini for prompt rewriting and multimodal evaluation of generated images. The app is built with Mesop, a Python-based UI framework, enabling rapid development of web and internal apps. The Experimental folder contains stand-alone applications and upcoming features demonstrating cutting-edge generative AI capabilities, such as image generation, prompting techniques, and audio/video tools.

vespa

Vespa is a platform that performs operations such as selecting a subset of data in a large corpus, evaluating machine-learned models over the selected data, organizing and aggregating it, and returning it, typically in less than 100 milliseconds, all while the data corpus is continuously changing. It has been in development for many years and is used on a number of large internet services and apps which serve hundreds of thousands of queries from Vespa per second.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

raggenie

RAGGENIE is a low-code RAG builder tool designed to simplify the creation of conversational AI applications. It offers out-of-the-box plugins for connecting to various data sources and building conversational AI on top of them, including integration with pre-built agents for actions. The tool is open-source under the MIT license, with a current focus on making it easy to build RAG applications and future plans for maintenance, monitoring, and transitioning applications from pilots to production.

FlowTest

FlowTestAI is the world’s first GenAI powered OpenSource Integrated Development Environment (IDE) designed for crafting, visualizing, and managing API-first workflows. It operates as a desktop app, interacting with the local file system, ensuring privacy and enabling collaboration via version control systems. The platform offers platform-specific binaries for macOS, with versions for Windows and Linux in development. It also features a CLI for running API workflows from the command line interface, facilitating automation and CI/CD processes.

genai-for-marketing

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

modus

Modus is an open-source, serverless framework for building APIs powered by WebAssembly. It simplifies integrating AI models, data, and business logic with sandboxed execution. Modus extracts metadata, compiles code with optimizations, caches compiled modules, prepares invocation plans, generates API schema, and activates endpoints. Users query the endpoint, and Modus loads compiled code into a sandboxed environment, runs code securely, queries data and AI models, and responds via API. It provides a production-ready scalable endpoint for AI-enabled apps, optimized for sub-second response times. Modus supports programming languages like AssemblyScript and Go, and can be hosted on Hypermode or any platform. Developed by Hypermode as an open-source project, Modus welcomes external contributions.

shipstation

ShipStation is an AI-based website and agents generation platform that optimizes landing page websites and generic connect-anything-to-anything services. It enables seamless communication between service providers and integration partners, offering features like user authentication, project management, code editing, payment integration, and real-time progress tracking. The project architecture includes server-side (Node.js) and client-side (React with Vite) components. Prerequisites include Node.js, npm or yarn, Anthropic API key, Supabase account, Tavily API key, and Razorpay account. Setup instructions involve cloning the repository, setting up Supabase, configuring environment variables, and starting the backend and frontend servers. Users can access the application through the browser, sign up or log in, create landing pages or portfolios, and get websites stored in an S3 bucket. Deployment to Heroku involves building the client project, committing changes, and pushing to the main branch. Contributions to the project are encouraged, and the license encourages doing good.

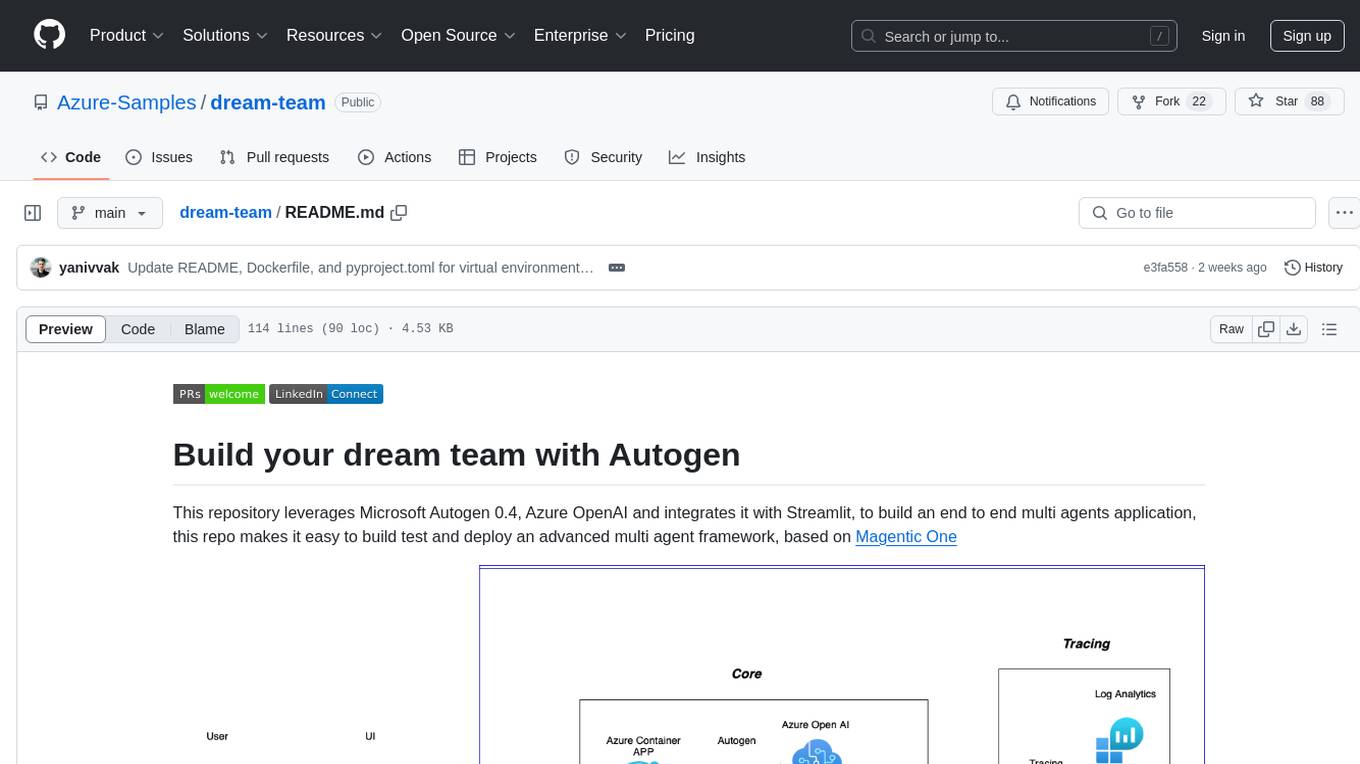

dream-team

Build your dream team with Autogen is a repository that leverages Microsoft Autogen 0.4, Azure OpenAI, and Streamlit to create an end-to-end multi-agent application. It provides an advanced multi-agent framework based on Magentic One, with features such as a friendly UI, single-line deployment, secure code execution, managed identities, and observability & debugging tools. Users can deploy Azure resources and the app with simple commands, work locally with virtual environments, install dependencies, update configurations, and run the application. The repository also offers resources for learning more about building applications with Autogen.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

trinityX

TrinityX is an open-source HPC, AI, and cloud platform designed to provide all services required in a modern system, with full customization options. It includes default services like Luna node provisioner, OpenLDAP, SLURM or OpenPBS, Prometheus, Grafana, OpenOndemand, and more. TrinityX also sets up NFS-shared directories, OpenHPC applications, environment modules, HA, and more. Users can install TrinityX on Enterprise Linux, configure network interfaces, set up passwordless authentication, and customize the installation using Ansible playbooks. The platform supports HA, OpenHPC integration, and provides detailed documentation for users to contribute to the project.

conversational-agent-langchain

This repository contains a Rest-Backend for a Conversational Agent that allows embedding documents, semantic search, QA based on documents, and document processing with Large Language Models. It uses Aleph Alpha and OpenAI Large Language Models to generate responses to user queries, includes a vector database, and provides a REST API built with FastAPI. The project also features semantic search, secret management for API keys, installation instructions, and development guidelines for both backend and frontend components.

For similar tasks

hackingBuddyGPT

hackingBuddyGPT is a framework for testing LLM-based agents for security testing. It aims to create common ground truth by creating common security testbeds and benchmarks, evaluating multiple LLMs and techniques against those, and publishing prototypes and findings as open-source/open-access reports. The initial focus is on evaluating the efficiency of LLMs for Linux privilege escalation attacks, but the framework is being expanded to evaluate the use of LLMs for web penetration-testing and web API testing. hackingBuddyGPT is released as open-source to level the playing field for blue teams against APTs that have access to more sophisticated resources.

PentestGPT

PentestGPT provides advanced AI and integrated tools to help security teams conduct comprehensive penetration tests effortlessly. Scan, exploit, and analyze web applications, networks, and cloud environments with ease and precision, without needing expert skills. The tool utilizes Supabase for data storage and management, and Vercel for hosting the frontend. It offers a local quickstart guide for running the tool locally and a hosted quickstart guide for deploying it in the cloud. PentestGPT aims to simplify the penetration testing process for security professionals and enthusiasts alike.

nebula

Nebula is an advanced, AI-powered penetration testing tool designed for cybersecurity professionals, ethical hackers, and developers. It integrates state-of-the-art AI models into the command-line interface, automating vulnerability assessments and enhancing security workflows with real-time insights and automated note-taking. Nebula revolutionizes penetration testing by providing AI-driven insights, enhanced tool integration, AI-assisted note-taking, and manual note-taking features. It also supports any tool that can be invoked from the CLI, making it a versatile and powerful tool for cybersecurity tasks.

awsome_kali_MCPServers

awsome-kali-MCPServers is a repository containing Model Context Protocol (MCP) servers tailored for Kali Linux environments. It aims to optimize reverse engineering, security testing, and automation tasks by incorporating powerful tools and flexible features. The collection includes network analysis tools, support for binary understanding, and automation scripts to streamline repetitive tasks. The repository is continuously evolving with new features and integrations based on the FastMCP framework, such as network scanning, symbol analysis, binary analysis, string extraction, network traffic analysis, and sandbox support using Docker containers.

commands

Production-ready slash commands for Claude Code that accelerate development through intelligent automation and multi-agent orchestration. Contains 52 commands organized into workflows and tools categories. Workflows orchestrate complex tasks with multiple agents, while tools provide focused functionality for specific development tasks. Commands can be used with prefixes for organization or flattened for convenience. Best practices include using workflows for complex tasks and tools for specific scopes, chaining commands strategically, and providing detailed context for effective usage.

For similar jobs

ail-framework

AIL framework is a modular framework to analyze potential information leaks from unstructured data sources like pastes from Pastebin or similar services or unstructured data streams. AIL framework is flexible and can be extended to support other functionalities to mine or process sensitive information (e.g. data leak prevention).

NGCBot

NGCBot is a WeChat bot based on the HOOK mechanism, supporting scheduled push of security news from FreeBuf, Xianzhi, Anquanke, and Qianxin Attack and Defense Community, KFC copywriting, filing query, phone number attribution query, WHOIS information query, constellation query, weather query, fishing calendar, Weibei threat intelligence query, beautiful videos, beautiful pictures, and help menu. It supports point functions, automatic pulling of people, ad detection, automatic mass sending, Ai replies, rich customization, and easy for beginners to use. The project is open-source and periodically maintained, with additional features such as Ai (Gpt, Xinghuo, Qianfan), keyword invitation to groups, automatic mass sending, and group welcome messages.

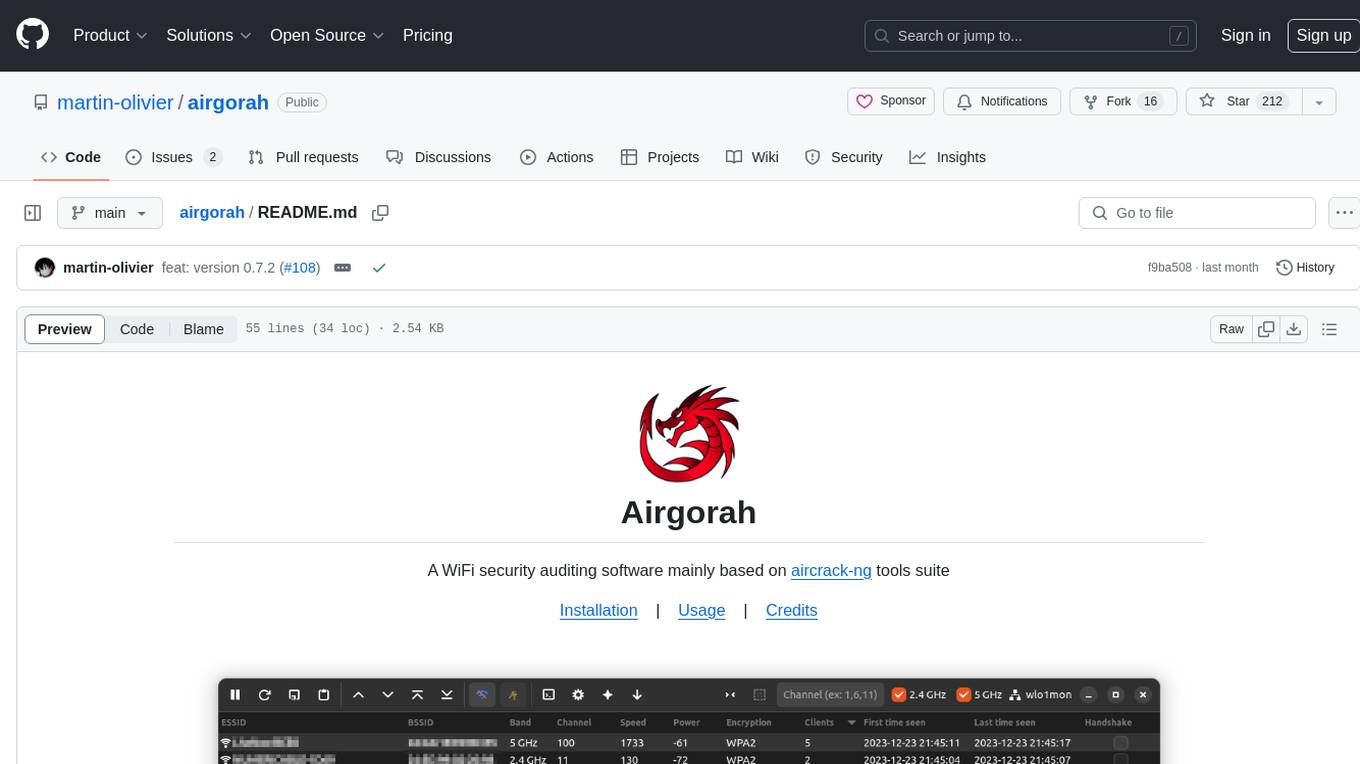

airgorah

Airgorah is a WiFi security auditing software written in Rust that utilizes the aircrack-ng tools suite. It allows users to capture WiFi traffic, discover connected clients, perform deauthentication attacks, capture handshakes, and crack access point passwords. The software is designed for testing and discovering flaws in networks owned by the user, and requires root privileges to run on Linux systems with a wireless network card supporting monitor mode and packet injection. Airgorah is not responsible for any illegal activities conducted with the software.

agentic_security

Agentic Security is an open-source vulnerability scanner designed for safety scanning, offering customizable rule sets and agent-based attacks. It provides comprehensive fuzzing for any LLMs, LLM API integration, and stress testing with a wide range of fuzzing and attack techniques. The tool is not a foolproof solution but aims to enhance security measures against potential threats. It offers installation via pip and supports quick start commands for easy setup. Users can utilize the tool for LLM integration, adding custom datasets, running CI checks, extending dataset collections, and dynamic datasets with mutations. The tool also includes a probe endpoint for integration testing. The roadmap includes expanding dataset variety, introducing new attack vectors, developing an attacker LLM, and integrating OWASP Top 10 classification.

pwnagotchi

Pwnagotchi is an AI tool leveraging bettercap to learn from WiFi environments and maximize crackable WPA key material. It uses LSTM with MLP feature extractor for A2C agent, learning over epochs to improve performance in various WiFi environments. Units can cooperate using a custom parasite protocol. Visit https://www.pwnagotchi.ai for documentation and community links.

DAILA

DAILA is a unified interface for AI systems in decompilers, supporting various decompilers and AI systems. It allows users to utilize local and remote LLMs, like ChatGPT and Claude, and local models such as VarBERT. DAILA can be used as a decompiler plugin with GUI or as a scripting library. It also provides a Docker container for offline installations and supports tasks like summarizing functions and renaming variables in decompilation.

jadx-ai-mcp

JADX-AI-MCP is a plugin for the JADX decompiler that integrates with Model Context Protocol (MCP) to provide live reverse engineering support with LLMs like Claude. It allows for quick analysis, vulnerability detection, and AI code modification, all in real time. The tool combines JADX-AI-MCP and JADX MCP SERVER to analyze Android APKs effortlessly. It offers various prompts for code understanding, vulnerability detection, reverse engineering helpers, static analysis, AI code modification, and documentation. The tool is part of the Zin MCP Suite and aims to connect all android reverse engineering and APK modification tools with a single MCP server for easy reverse engineering of APK files.