enterprise-azureai

Unleash the power of Azure AI to your application developers in a secure & manageable way with Azure API Management and Azure Developer CLI.

Stars: 75

This repository sets up Azure OpenAI Service as a central capability behind Azure API Management. It provides guidance and tools for organizations implementing Azure OpenAI in a production environment, with an emphasis on cost control, secure access, and usage monitoring. It includes infrastructure-as-code templates, CI/CD pipelines, secure access management, usage monitoring, load balancing, streaming requests, and end-to-end samples such as the ChatApp and Azure Dashboards.

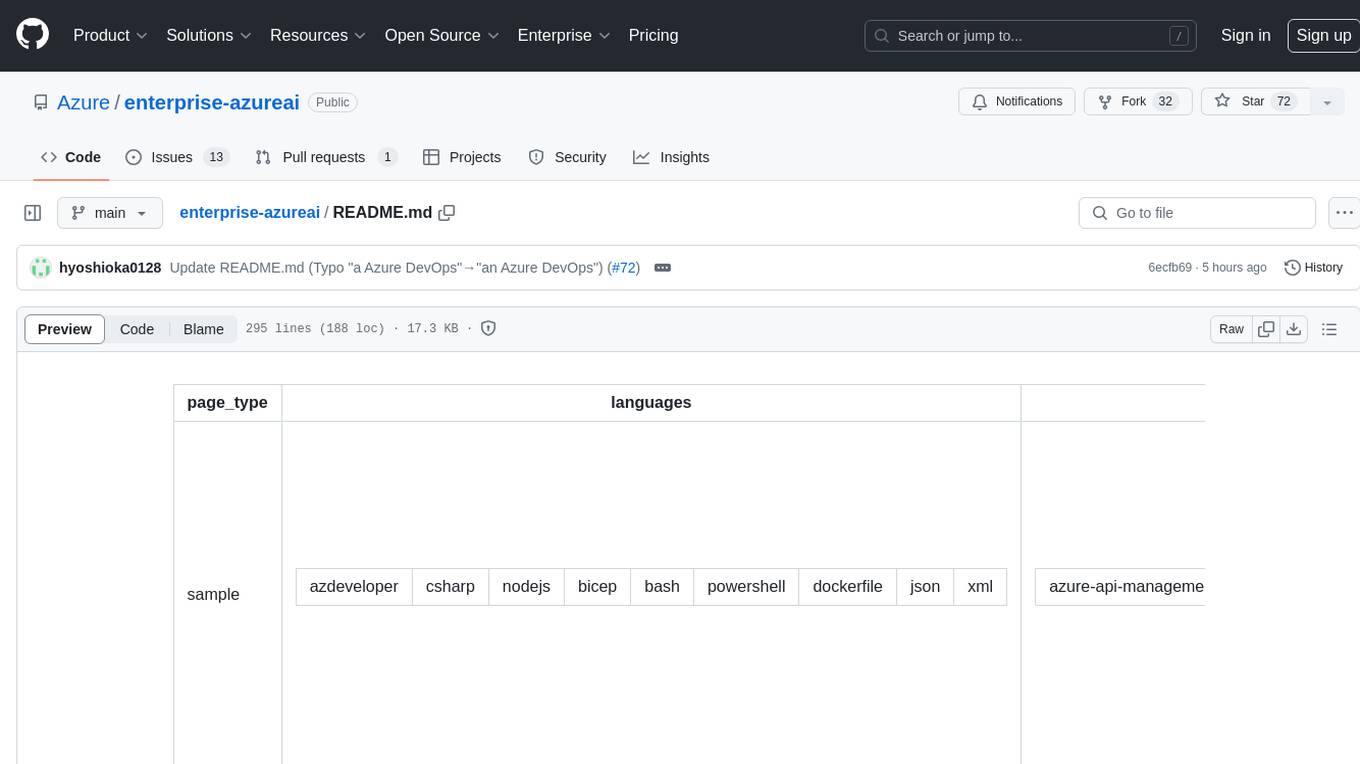

README:

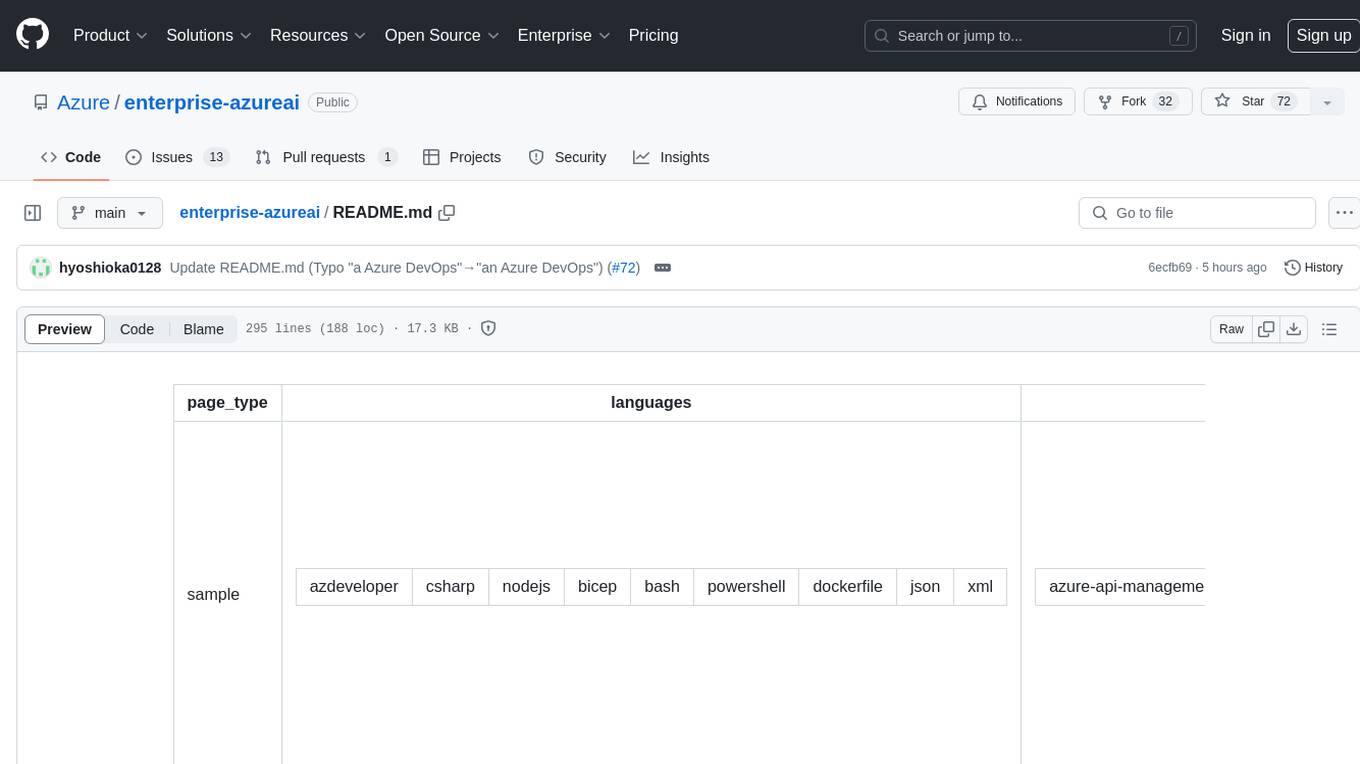

page_type: sample

languages:

- azdeveloper

- csharp

- nodejs

- bicep

- bash

- powershell

- dockerfile

- json

- xml

products:

- azure-api-management

- azure-app-configuration

- azure-cache-redis

- azure-container-apps

- azure-container-registry

- azure-dns

- azure-log-analytics

- azure-monitor

- azure-policy

- azure-private-link

- dotnet

- azure-app-service

- azure-key-vault

- azure-cosmos-db

- azure-openai

urlFragment: enterprise-azureai

name: Azure OpenAI Service as a central capability with Azure API Management

description: Unleash the power of Azure OpenAI in your company in a secure and manageable way with Azure API Management and Azure Developer CLI

Unleash the power of Azure OpenAI in your company in a secure & manageable way with Azure API Management and Azure Developer CLI (azd).

This repository provides guidance and tools for organizations looking to implement Azure OpenAI in a production environment with an emphasis on cost control, secure access, and usage monitoring. The aim is to enable organizations to effectively manage expenses while ensuring that the consuming application or team is accountable for the costs incurred.

[!NOTE]

This repository uses an AI Proxy to load-balance and log the traffic between Azure API Management and Azure OpenAI Service. In May 2024, Microsoft announced new features in Azure API Management Policies for integrating with Azure OpenAI Service that overlap with the AI Proxy. We recommend using the new features in Azure API Management Policies for new deployments, but if you need to implement customizations or additional features in the proxy, the AI Proxy is still very relevant. For azd implementation guidance on the new features in Azure API Management Policies, see here.

- Infrastructure-as-code: Bicep templates for provisioning and deploying the resources.

- CI/CD pipeline: GitHub Actions and Azure DevOps Pipelines for continuous deployment of the resources to Azure.

- Secure Access Management: Best practices and configurations for managing secure access to Azure OpenAI Services.

- Usage Monitoring & Cost Control: Solutions for tracking the usage of Azure OpenAI Services to facilitate accurate cost allocation and team charge-back.

- Load balancing: Use and load-balance the capacity of Azure OpenAI across regions or provisioned throughput units (PTU).

- Streaming requests: Support for streaming requests to Azure OpenAI, for all features (e.g. additional logging and charge-back)

- End-to-end sample: Including Sample ChatApp, Azure Dashboards, content filters and policies
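The streaming item in the list above implies that logging and charge-back must work on responses that arrive in pieces. As a rough illustration only (this is not the repository's proxy code, which is a .NET 8.0 application), the sketch below reassembles the text of a streamed chat completion from server-sent event lines so it could be logged once the stream ends. The function name `collect_streamed_text` is hypothetical; the `data:` prefix, the `[DONE]` sentinel, and the `choices[].delta.content` fields follow the streaming format used by the chat completions API.

```python
import json
from typing import Iterable


def collect_streamed_text(lines: Iterable[str]) -> str:
    """Join the content deltas of a streamed chat completion into one string."""
    parts = []
    for line in lines:
        line = line.strip()
        # Server-sent events carry their payload on lines starting with "data:".
        if not line.startswith("data:"):
            continue
        payload = line[len("data:"):].strip()
        # The stream ends with a literal [DONE] marker instead of JSON.
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            # Some chunks carry only a role or an empty delta.
            content = (choice.get("delta") or {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)


if __name__ == "__main__":
    sample = [
        'data: {"choices":[{"delta":{"role":"assistant"}}]}',
        '',
        'data: {"choices":[{"delta":{"content":"Hel"}}]}',
        'data: {"choices":[{"delta":{"content":"lo"}}]}',
        'data: [DONE]',
    ]
    print(collect_streamed_text(sample))
```

A real proxy would forward each chunk to the caller as it arrives and keep only a copy for logging; counting tokens from the collected text would additionally need a tokenizer, which is left out here.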

Read more: Architecture in detail

- Infrastructure-as-code (IaC) Bicep files under the `infra` folder that demonstrate how to provision resources and set up resource tagging for azd.

- A dev container configuration file under the `.devcontainer` directory that installs infrastructure tooling by default. This can be readily used to create cloud-hosted developer environments such as GitHub Codespaces or a local environment via a VSCode DevContainer.

- Continuous deployment workflows for CI providers such as GitHub Actions under the `.github` directory, and Azure Pipelines under the `.azdo` directory, that work for most use cases.

- The .NET 8.0 chargeback proxy application under the `src` folder.

- The NodeJS Sample ChatApp application under the `src` folder.

- Azure Developer CLI

- Azure CLI

- .NET 8.0 SDK

- Docker Desktop

- Node.js v18.17 or higher

- jq required on Mac and Linux

azd init -t Azure/enterprise-azureai

If you have already cloned this repository to your local machine, or are running from a Dev Container or GitHub Codespaces, you can run the following command from the root folder.

azd init

It will prompt you to provide a name that will later be used in the names of the deployed resources. If you're not logged in to Azure, it will also prompt you to log in first.

azd auth login

This repository uses environment variables to configure the deployment and to enable optional features. You can set these variables with the azd env set command. Learn more about all optional features here.

azd env set USE_REDIS_CACHE_APIM '<true-or-false>'

azd env set SECONDARY_OPENAI_LOCATION '<your-secondary-openai-location>'

In the azd template, we automatically set an environment variable for your current IP address. During deployment, this allows traffic from your local machine to the Azure Container Registry for deploying the containerized application.

[!NOTE]

To determine your IPv4 address, the service icanhazip.com is used. To set the IPv4 address directly (without the service), edit the MY_IP_ADDRESS field in the `.azure/<name>/.env` file. This file is created after azd init. Without a properly configured IP address, azd up will fail.
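Because a malformed address makes azd up fail, it can help to check the value before writing it to MY_IP_ADDRESS. The following is a minimal sketch using only Python's standard library; the helper name `validate_my_ip` is hypothetical and not part of the template. It trims surrounding whitespace, since a value copied from a lookup service may carry a trailing newline.

```python
import ipaddress


def validate_my_ip(value: str) -> str:
    """Return the IPv4 address in normalized form, or raise ValueError."""
    # IPv4Address rejects IPv6 literals, host names, and malformed input.
    # AddressValueError is a subclass of ValueError.
    return str(ipaddress.IPv4Address(value.strip()))


if __name__ == "__main__":
    # 198.51.100.7 is a documentation address used as a placeholder.
    print(validate_my_ip(" 198.51.100.7\n"))
```

The returned string is the value to place in the MY_IP_ADDRESS field.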

azd up

It will prompt you to log in, pick a subscription, and provide a location (like "eastus"). We've added an extra conditional parameter to deploy the Sample ChatApp for demo purposes.

Read more: Sample ChatApp

Then it will provision the resources in your account and deploy the latest code.

[!NOTE]

Because Azure OpenAI isn't available in all regions, you might get an error when you deploy the resources. You can find more information about the availability of Azure OpenAI here.

For more details on the deployed services, see additional details below.

[!NOTE]

Sometimes the DNS zones for the private endpoints are not created correctly or in time. If you get an error during deployment, try deploying the resources again.

You can enable Azure Redis Cache to improve the performance of Azure API Management. To enable this feature, set the USE_REDIS_CACHE_APIM environment variable to true.

azd env set USE_REDIS_CACHE_APIM 'true'

[!NOTE]

Deployment of Azure Redis Cache can take up to 30 minutes.

You can enable a secondary Azure OpenAI location to improve the availability of Azure OpenAI. To enable this feature, set the SECONDARY_OPENAI_LOCATION environment variable to the location of your choice.

azd env set SECONDARY_OPENAI_LOCATION '<your-secondary-openai-location>'

This project includes a GitHub workflow and an Azure DevOps Pipeline for deploying the resources to Azure on every push to main. That workflow requires several Azure-related authentication secrets to be stored as GitHub Actions secrets. To set that up, run:

azd pipeline config

You can configure azd to provision and deploy resources to your deployment environments using standard commands such as azd up or azd provision. When platform.type is set to devcenter, all azd remote environment state and provisioning uses dev center components. azd uses one of the infrastructure templates defined in your dev center catalog for resource provisioning. In this configuration, the infra folder in your local templates isn’t used.

azd config set platform.type devcenter

The Sample ChatApp is a simple NodeJS application that uses the API Management endpoints, exposing Azure OpenAI Service, to test the deployment and see how the Azure OpenAI Service works. In the ChatApp you can configure which API Management Subscription you want to use and with which deployment model, creating an end-to-end experience.

The deployed resources include a Log Analytics workspace with an Application Insights based dashboard to measure metrics like server response time and failed requests. We also included some custom visuals in the dashboard to visualize the token usage per consumer of the Azure OpenAI Service.
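To make the idea of token usage per consumer concrete, here is a small sketch of the kind of aggregation such a visual rests on. It is an illustration under assumptions, not the query the dashboard runs: the record fields (`consumer`, `prompt_tokens`, `completion_tokens`) and the function name `tokens_per_consumer` are hypothetical stand-ins for whatever the proxy actually logs.

```python
from collections import defaultdict
from typing import Dict, Iterable


def tokens_per_consumer(records: Iterable[dict]) -> Dict[str, Dict[str, int]]:
    """Sum prompt, completion, and total tokens for each consumer."""
    totals = defaultdict(
        lambda: {"prompt_tokens": 0, "completion_tokens": 0, "total_tokens": 0}
    )
    for record in records:
        bucket = totals[record["consumer"]]
        bucket["prompt_tokens"] += record["prompt_tokens"]
        bucket["completion_tokens"] += record["completion_tokens"]
        bucket["total_tokens"] += (
            record["prompt_tokens"] + record["completion_tokens"]
        )
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical log records; the consumer names are placeholders.
    log = [
        {"consumer": "Marketing", "prompt_tokens": 120, "completion_tokens": 80},
        {"consumer": "Finance", "prompt_tokens": 50, "completion_tokens": 25},
        {"consumer": "Marketing", "prompt_tokens": 30, "completion_tokens": 20},
    ]
    for consumer, usage in tokens_per_consumer(log).items():
        print(consumer, usage)
```

Multiplying these totals by a per-token price would give a charge-back figure per team; in the deployed solution the dashboard presents the usage figures for you.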

To open that dashboard, run this command once you've deployed:

azd monitor --overview

To clean up all the resources you've created and purge the soft-deletes, simply run:

azd down --purge --force

The resource group and all the resources will be deleted and you'll be prompted if you want the soft-deletes to be purged.

A tests.http file with relevant tests is included so you can check whether your deployment was successful. You need the two subscription keys for Marketing and Finance, created in API Management, to test the API. You can find more information about how to create subscription keys here.
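If you prefer to script such a check, the sketch below only assembles the pieces of a chat completion request (URL, headers, JSON body) without sending it. Several details are assumptions to verify against tests.http: that the gateway exposes the standard Azure OpenAI path `/openai/deployments/{deployment}/chat/completions`, and that the subscription key travels in an `api-key` header. The function name, gateway host, deployment name, and API version shown are placeholders.

```python
import json
from typing import Dict, Tuple


def build_chat_request(
    gateway_url: str,
    deployment: str,
    api_version: str,
    subscription_key: str,
    user_message: str,
) -> Tuple[str, Dict[str, str], str]:
    """Return (url, headers, body) for a single-message chat completion call."""
    # Assumption: the gateway mirrors the Azure OpenAI REST path layout.
    url = (
        f"{gateway_url.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    # Assumption: the subscription key is passed in the "api-key" header.
    headers = {"Content-Type": "application/json", "api-key": subscription_key}
    body = json.dumps({"messages": [{"role": "user", "content": user_message}]})
    return url, headers, body


if __name__ == "__main__":
    url, headers, body = build_chat_request(
        "https://example-apim.azure-api.net/",  # placeholder gateway host
        "my-deployment",                        # placeholder deployment name
        "2024-02-01",                           # placeholder API version
        "<marketing-subscription-key>",
        "Hello",
    )
    print(url)
    print(body)
```

Running the same request once with the Marketing key and once with the Finance key gives you two consumers whose usage should then appear separately in the dashboard.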

After forking this repo, you can use this GitHub Action to enable CI/CD for your fork. Just adjust the README in your fork to point to your own GitHub repo.

| GitHub Action | Status |
|---|---|
| azd Deploy | |

The following section examines different concepts that help tie in application and infrastructure.

This repository illustrates how to integrate Azure OpenAI as a central capability within an organization using Azure API Management and Azure Container Apps. Azure OpenAI offers AI models for generating text, images, etc., trained on extensive data. Azure API Management facilitates secure and managed exposure of APIs to the external environment. Azure Container Apps allows running containerized applications in Azure without infrastructure management. The repository includes a .NET 8.0 proxy application to allocate Azure OpenAI Service costs to the consuming application, aiding in cost control. The proxy supports load balancing and horizontal scaling of Azure OpenAI instances. A chargeback report in the Azure Dashboard visualizes Azure OpenAI Service costs, making it a centralized capability within the organization.
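The load balancing mentioned above can be pictured as priority-based failover: try the preferred backend first (for example a provisioned-throughput deployment) and fall back to another instance when the first one is throttled. The sketch below illustrates that general technique only; it is not the repository's .NET proxy, and `Backend`, `ThrottledError`, and `send_with_failover` are hypothetical names. The caller supplies the actual send function and the current time.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Backend:
    """One Azure OpenAI instance; lower priority values are tried first."""
    name: str
    priority: int
    cooldown_until: float = 0.0  # time before which the backend is skipped


class ThrottledError(Exception):
    """Raised by the send function when a backend answers with HTTP 429."""

    def __init__(self, retry_after: float):
        super().__init__(f"throttled, retry after {retry_after}s")
        self.retry_after = retry_after


def send_with_failover(
    backends: Iterable[Backend],
    send: Callable[[Backend], str],
    now: float,
) -> str:
    """Send via the highest-priority backend that is not cooling down."""
    for backend in sorted(backends, key=lambda b: b.priority):
        if backend.cooldown_until > now:
            continue  # still throttled from an earlier attempt
        try:
            return send(backend)
        except ThrottledError as err:
            # Remember the throttle so later calls skip this backend for a while.
            backend.cooldown_until = now + err.retry_after
    raise RuntimeError("all backends are throttled")


if __name__ == "__main__":
    pool = [Backend("ptu-primary", 1), Backend("paygo-secondary", 2)]

    def fake_send(backend: Backend) -> str:
        if backend.name == "ptu-primary":
            raise ThrottledError(retry_after=10)
        return f"handled by {backend.name}"

    print(send_with_failover(pool, fake_send, now=100.0))
```

Keeping the cooldown on the backend object means a throttled instance is skipped without another round trip until its retry window has passed, after which it regains its priority.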

We've used the Azure Developer CLI Bicep Starter template to create this repository. With azd you can create a new repository with a fully functional CI/CD pipeline in minutes. You can find more information about azd here.

One of the key points of azd templates is that we can implement best practices together with our solution when it comes to security, network isolation, monitoring, etc. Users are free to define their own best practices for their dev teams and organization, so that all deployments follow the same standards.

The best practices we've followed for this architecture are: Azure Integration Service Landingzone Accelerator and for Azure OpenAI we've used the blog post Azure OpenAI Landing Zone reference architecture. For the chargeback proxy we've used the setup from the Azure Container Apps Landingzone Accelerator.

When it comes to security, the accelerators above include recommendations for securing your Azure API Management instance. Examples are placing Front Door or Application Gateway in front of it (see this repository), providing Layer 7 protection and WAF capabilities, and implementing OAuth authentication on the API Management instance. For how to implement OAuth authentication on API Management, see here.

We're also using Azure Monitor Private Link Scope. This allows us to define the boundaries of our monitoring network, and only allow traffic from within that network to our Log Analytics workspace. This is a great way to secure your monitoring network.

To provide an end-to-end experience and enable users to demo from a GUI, we've included a Sample ChatApp. This is a simple NodeJS application based on the Azure Chat Solution Accelerator. It uses Azure Cosmos DB to store the chat messages and Azure Key Vault to store the secrets used in the application.

Azure API Management is a fully managed service that enables customers to publish, secure, transform, maintain, and monitor APIs. It is a great way to expose your APIs to the outside world in a secure and manageable way.

Azure OpenAI is a service that provides AI models that are trained on a large amount of data. You can use these models to generate text, images, and more.

Managed identities allow you to secure communication between services without having to manage any credentials.

Azure Virtual Network allows you to create a private network in Azure. You can use this to secure communication between services.

Azure Private DNS Zone allows you to create a private DNS zone in Azure. You can use this to resolve hostnames in your private network.

Application Insights allows you to monitor your application. You can use this to monitor the performance of your application.

Log Analytics allows you to collect and analyze telemetry data from your application. You can use this to monitor the performance of your application.

Azure Monitor Private Link Scope allows you to define the boundaries of your monitoring network, and only allow traffic from within that network to your Log Analytics workspace. This is a great way to secure your monitoring network.

Azure Private Endpoint allows you to connect privately to a service powered by Azure Private Link. Private Endpoint uses a private IP address from your VNet, effectively bringing the service into your VNet.

Azure Container Apps allows you to run containerized applications in Azure without having to manage any infrastructure.

Azure Container Registry allows you to store and manage container images and artifacts in a private registry for all types of container deployments.

Azure Redis Cache allows you to use a secure open source Redis cache.

Azure Container Environment allows you to run containerized applications in Azure without having to manage any infrastructure.

Azure Cosmos DB allows you to use a fully managed NoSQL database for modern app development.

Azure Key Vault allows you to safeguard cryptographic keys and other secrets used by cloud apps and services.

Alternative AI tools for enterprise-azureai

Similar Open Source Tools

vertex-ai-creative-studio

GenMedia Creative Studio is an application showcasing the capabilities of Google Cloud Vertex AI generative AI creative APIs. It includes features like Gemini for prompt rewriting and multimodal evaluation of generated images. The app is built with Mesop, a Python-based UI framework, enabling rapid development of web and internal apps. The Experimental folder contains stand-alone applications and upcoming features demonstrating cutting-edge generative AI capabilities, such as image generation, prompting techniques, and audio/video tools.

cluster-toolkit

Cluster Toolkit is an open-source software by Google Cloud for deploying AI/ML and HPC environments on Google Cloud. It allows easy deployment following best practices, with high customization and extensibility. The toolkit includes tutorials, examples, and documentation for various modules designed for AI/ML and HPC use cases.

coral-cloud

Coral Cloud Resorts is a sample hospitality application that showcases Data Cloud, Agents, and Prompts. It provides highly personalized guest experiences through smart automation, content generation, and summarization. The app requires licenses for Data Cloud, Agents, Prompt Builder, and Einstein for Sales. Users can activate features, deploy metadata, assign permission sets, import sample data, and troubleshoot common issues. Additionally, the repository offers integration with modern web development tools like Prettier, ESLint, and pre-commit hooks for code formatting and linting.

genai-factory

GenAI Factory is a collection of end-to-end blueprints to deploy generative AI infrastructures in Google Cloud Platform (GCP), following security best practices. It embraces Infrastructure as Code (IaC) best practices, implements infrastructure in Terraform, and follows the least-privilege principle. The tool is compatible with Cloud Foundation Fabric FAST project-factory and application templates, allowing users to deploy various AI applications and systems on GCP.

chat-with-your-data-solution-accelerator

Chat with your data using OpenAI and AI Search. This solution accelerator uses an Azure OpenAI GPT model and an Azure AI Search index generated from your data, which is integrated into a web application to provide a natural language interface, including speech-to-text functionality, for search queries. Users can drag and drop files, point to storage, and take care of technical setup to transform documents. There is a web app that users can create in their own subscription with security and authentication.

ragapp

RAGapp is a tool designed for easy deployment of Agentic RAG in any enterprise. It allows users to configure and deploy RAG in their own cloud infrastructure using Docker. The tool is built using LlamaIndex and supports hosted AI models from OpenAI or Gemini, as well as local models using Ollama. RAGapp provides endpoints for Admin UI, Chat UI, and API, with the option to specify the model and Ollama host. The tool does not come with an authentication layer, requiring users to secure the '/admin' path in their cloud environment. Deployment can be done using Docker Compose with customizable model and Ollama host settings, or in Kubernetes for cloud infrastructure deployment. Development setup involves using Poetry for installation and building frontends.

genai-for-marketing

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that allows users to ask natural language questions of any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. The application architecture involves uploading a PDF document to an S3 bucket, extracting metadata, converting text to vectors, and using LangChain to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.

shipstation

ShipStation is an AI-based website and agents generation platform that optimizes landing page websites and generic connect-anything-to-anything services. It enables seamless communication between service providers and integration partners, offering features like user authentication, project management, code editing, payment integration, and real-time progress tracking. The project architecture includes server-side (Node.js) and client-side (React with Vite) components. Prerequisites include Node.js, npm or yarn, Anthropic API key, Supabase account, Tavily API key, and Razorpay account. Setup instructions involve cloning the repository, setting up Supabase, configuring environment variables, and starting the backend and frontend servers. Users can access the application through the browser, sign up or log in, create landing pages or portfolios, and get websites stored in an S3 bucket. Deployment to Heroku involves building the client project, committing changes, and pushing to the main branch. Contributions to the project are encouraged, and the license encourages doing good.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

bytechef

ByteChef is an open-source, low-code, extendable API integration and workflow automation platform. It provides an intuitive UI Workflow Editor, event-driven & scheduled workflows, multiple flow controls, built-in code editor supporting Java, JavaScript, Python, and Ruby, rich component ecosystem, extendable with custom connectors, AI-ready with built-in AI components, developer-ready to expose workflows as APIs, version control friendly, self-hosted, scalable, and resilient. It allows users to build and visualize workflows, automate tasks across SaaS apps, internal APIs, and databases, and handle millions of workflows with high availability and fault tolerance.

AppFlowy-Cloud

AppFlowy Cloud is a secure user authentication, file storage, and real-time WebSocket communication tool written in Rust. It is part of the AppFlowy ecosystem, providing an efficient and collaborative user experience. The tool offers deployment guides, development setup with Rust and Docker, debugging tips for components like PostgreSQL, Redis, Minio, and Portainer, and guidelines for contributing to the project.

aws-bedrock-with-rag-and-react

This solution provides a low-code ReactJS application to prototype and vet business use cases for GenAI using Retrieval Augmented Generation (RAG). It includes a backend Flask application that uses LangChain to provide PDF data as embeddings to a text-gen model via Amazon Bedrock and a vector database with FAISS or Kendra Index. The solution utilizes Amazon Bedrock as the only cost-generating AWS service.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

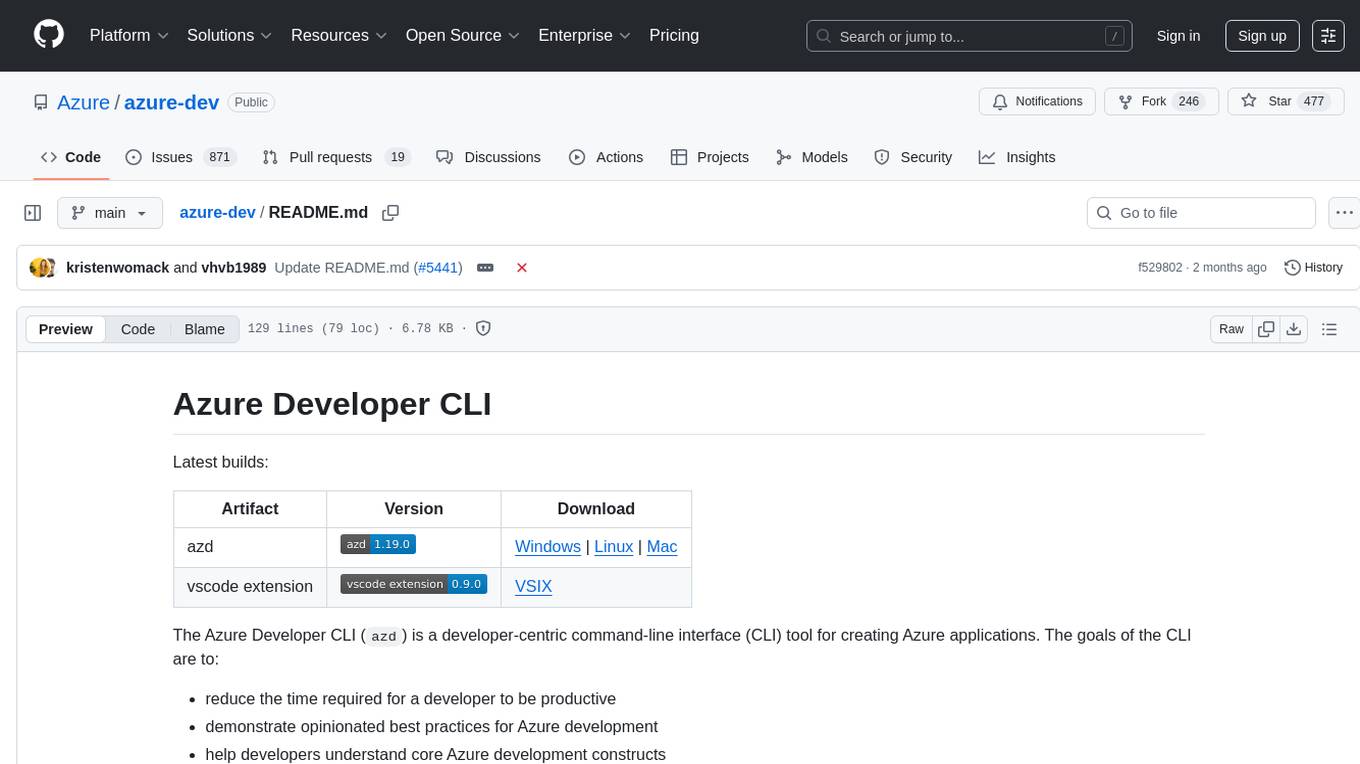

azure-dev

The Azure Developer CLI (`azd`) is a developer-centric command-line interface (CLI) tool for creating Azure applications. It aims to reduce the time required for a developer to be productive, demonstrate best practices for Azure development, and help developers understand core Azure development constructs. The CLI requires code repositories to adhere to specific conventions. It supports shell completion for `bash`, `zsh`, `fish`, and `powershell`. The software may collect information about users and their use of the software for service improvement. Telemetry collection is on by default but can be opted out by setting the environment variable `AZURE_DEV_COLLECT_TELEMETRY` to `no`. Contributions are welcome, and contributors need to agree to a Contributor License Agreement (CLA). The project has adopted the Microsoft Open Source Code of Conduct. The tool is licensed under Azure Developer CLI Templates Trust Notice.

For similar tasks

azure-ai-foundry-baseline

This repository serves as a reference implementation for running a chat application and an AI orchestration layer using Azure AI Foundry Agent service and OpenAI foundation models. It covers common generative AI chat application characteristics such as creating agents, querying data stores, chat memory database, orchestration logic, and calling language models. The implementation also includes production requirements like network isolation, Azure AI Foundry Agent Service dependencies, availability zone reliability, and limiting egress network traffic with Azure Firewall.

aws-reference-architecture-pulumi

The Pinecone AWS Reference Architecture with Pulumi is a distributed system designed for vector-database-enabled semantic search over Postgres records. It serves as a starting point for specific use cases or as a learning resource. The architecture is permissively licensed and supported by Pinecone's open-source team, facilitating the setup of high-scale use cases for Pinecone's scalable vector database.

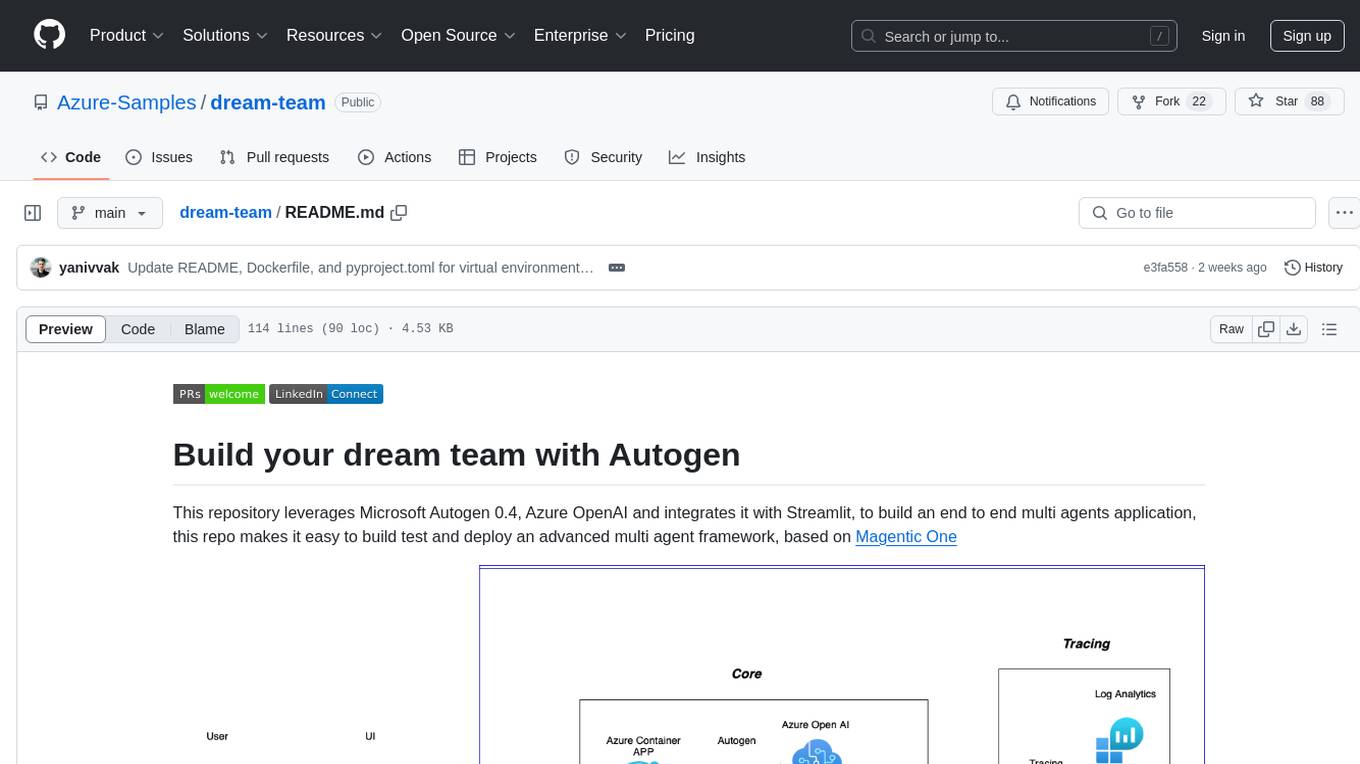

dream-team

Build your dream team with Autogen is a repository that leverages Microsoft Autogen 0.4, Azure OpenAI, and Streamlit to create an end-to-end multi-agent application. It provides an advanced multi-agent framework based on Magentic One, with features such as a friendly UI, single-line deployment, secure code execution, managed identities, and observability & debugging tools. Users can deploy Azure resources and the app with simple commands, work locally with virtual environments, install dependencies, update configurations, and run the application. The repository also offers resources for learning more about building applications with Autogen.

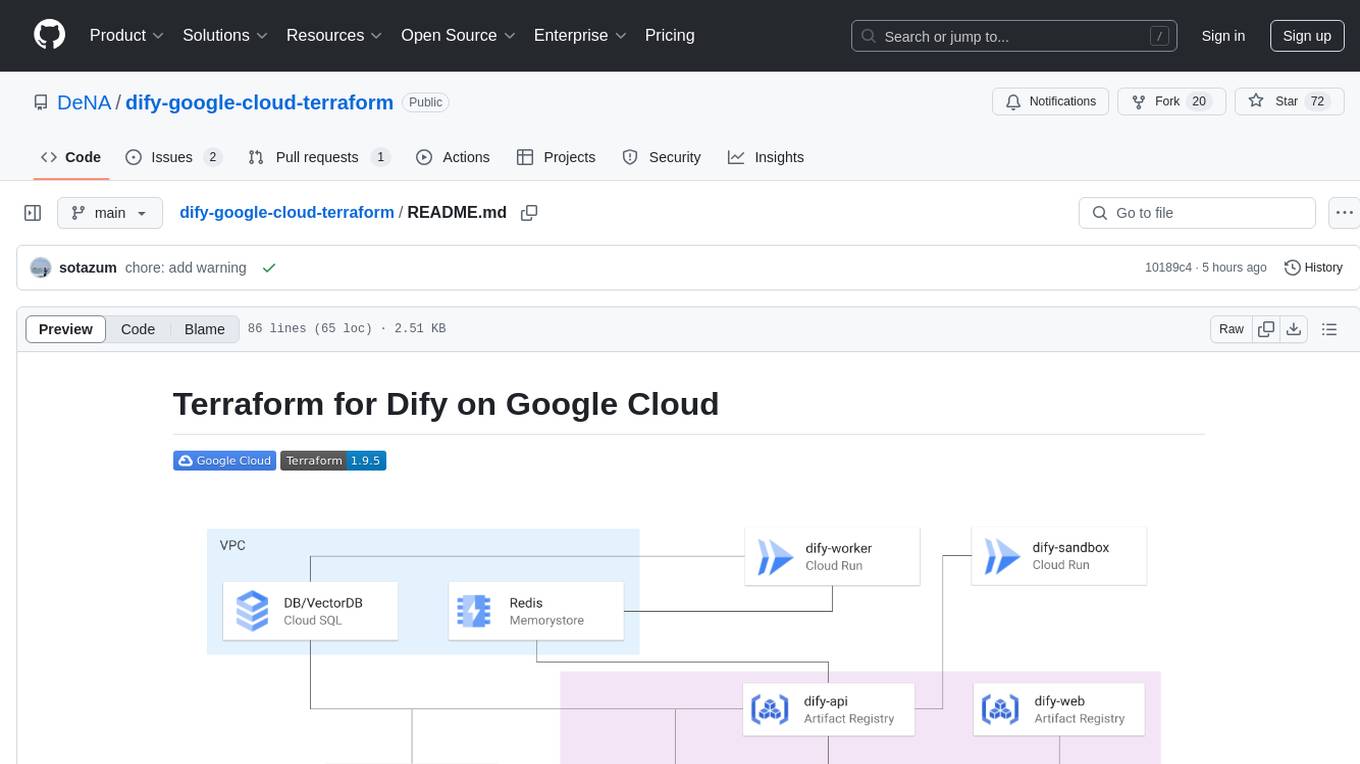

dify-google-cloud-terraform

This repository provides Terraform configurations to automatically set up Google Cloud resources and deploy Dify in a highly available configuration. It includes features such as serverless hosting, auto-scaling, and data persistence. Users need a Google Cloud account, Terraform, and gcloud CLI installed to use this tool. The configuration involves setting environment-specific values and creating a GCS bucket for managing Terraform state. The tool allows users to initialize Terraform, create Artifact Registry repository, build and push container images, plan and apply Terraform changes, and cleanup resources when needed.

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

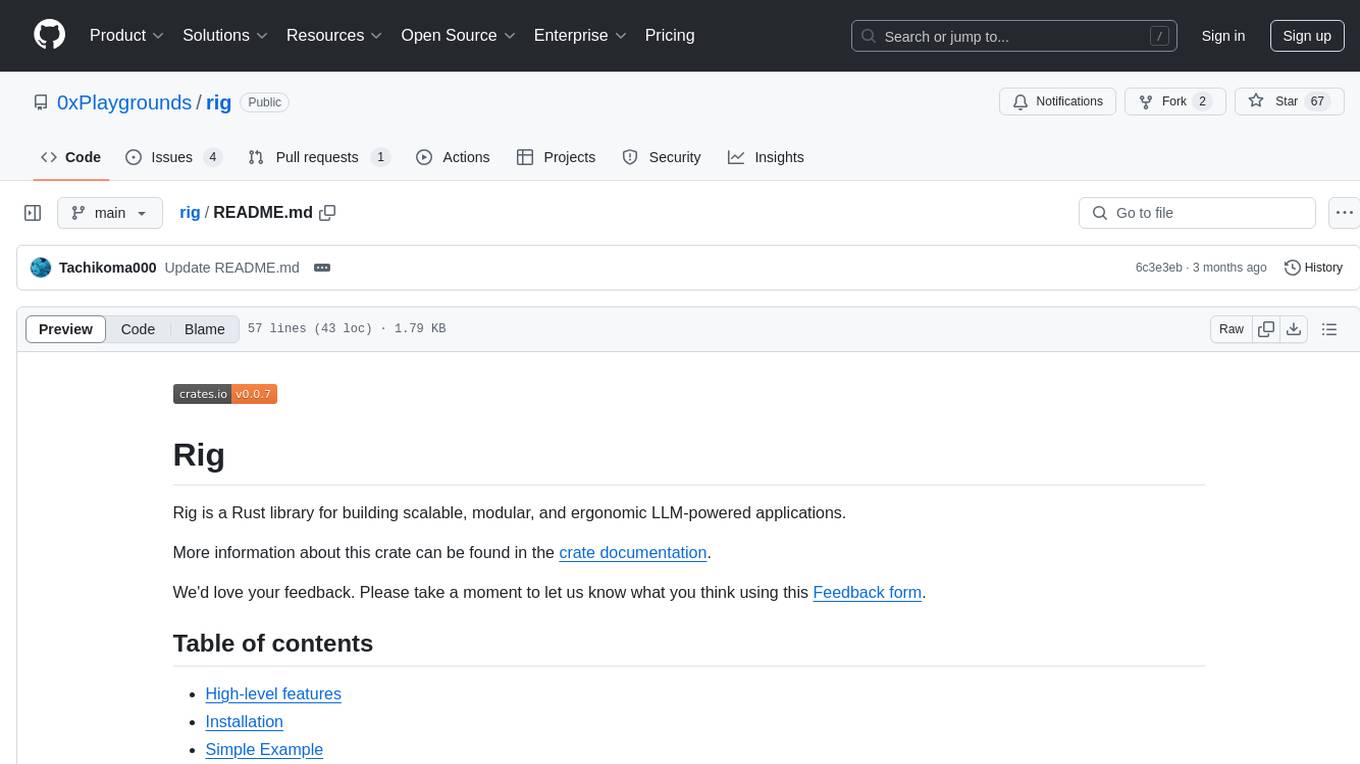

rig

Rig is a Rust library designed for building scalable, modular, and user-friendly applications powered by large language models (LLMs). It provides full support for LLM completion and embedding workflows, offers simple yet powerful abstractions for LLM providers like OpenAI and Cohere, as well as vector stores such as MongoDB and in-memory storage. With Rig, users can easily integrate LLMs into their applications with minimal boilerplate code.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.